45 results for "Active camera" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

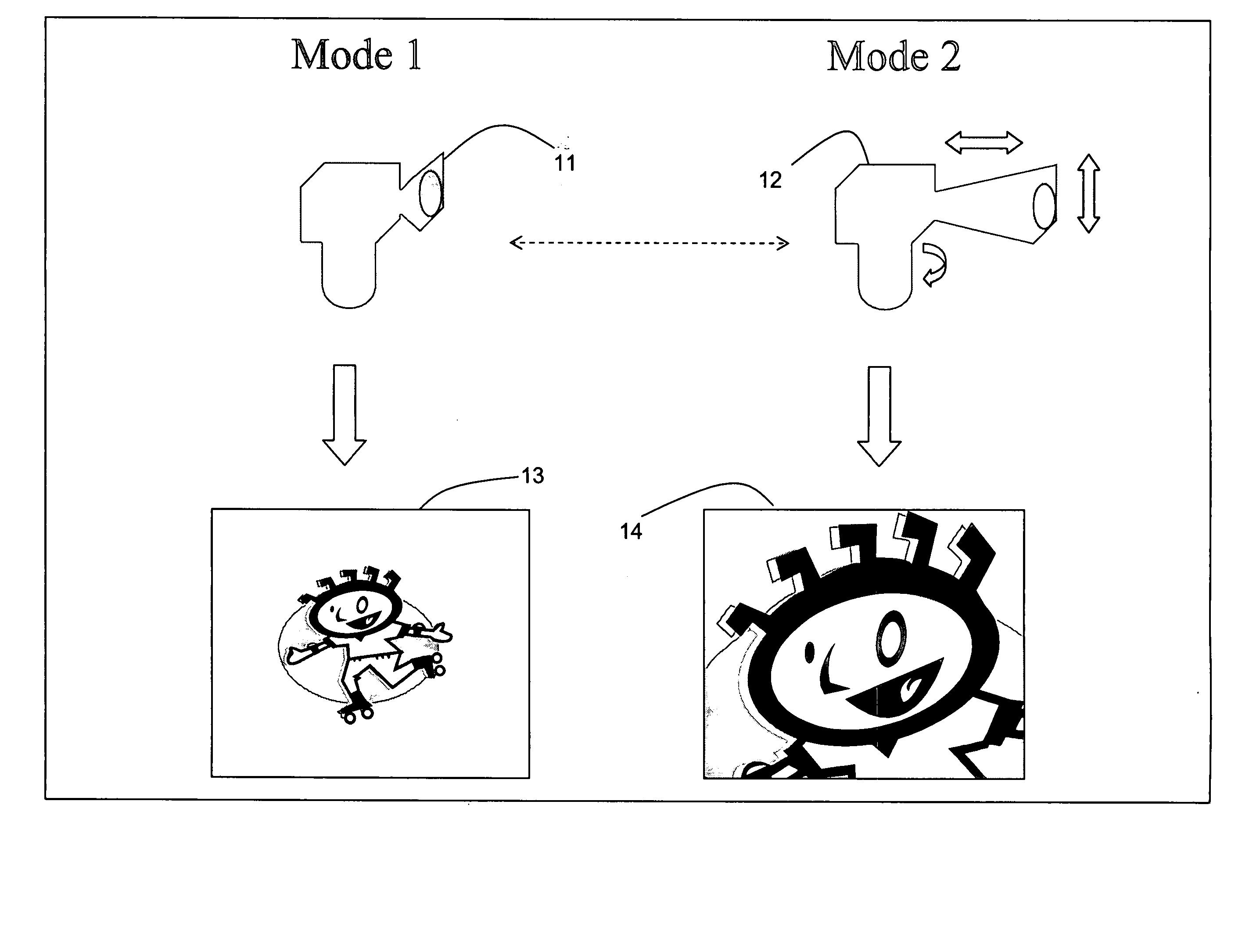

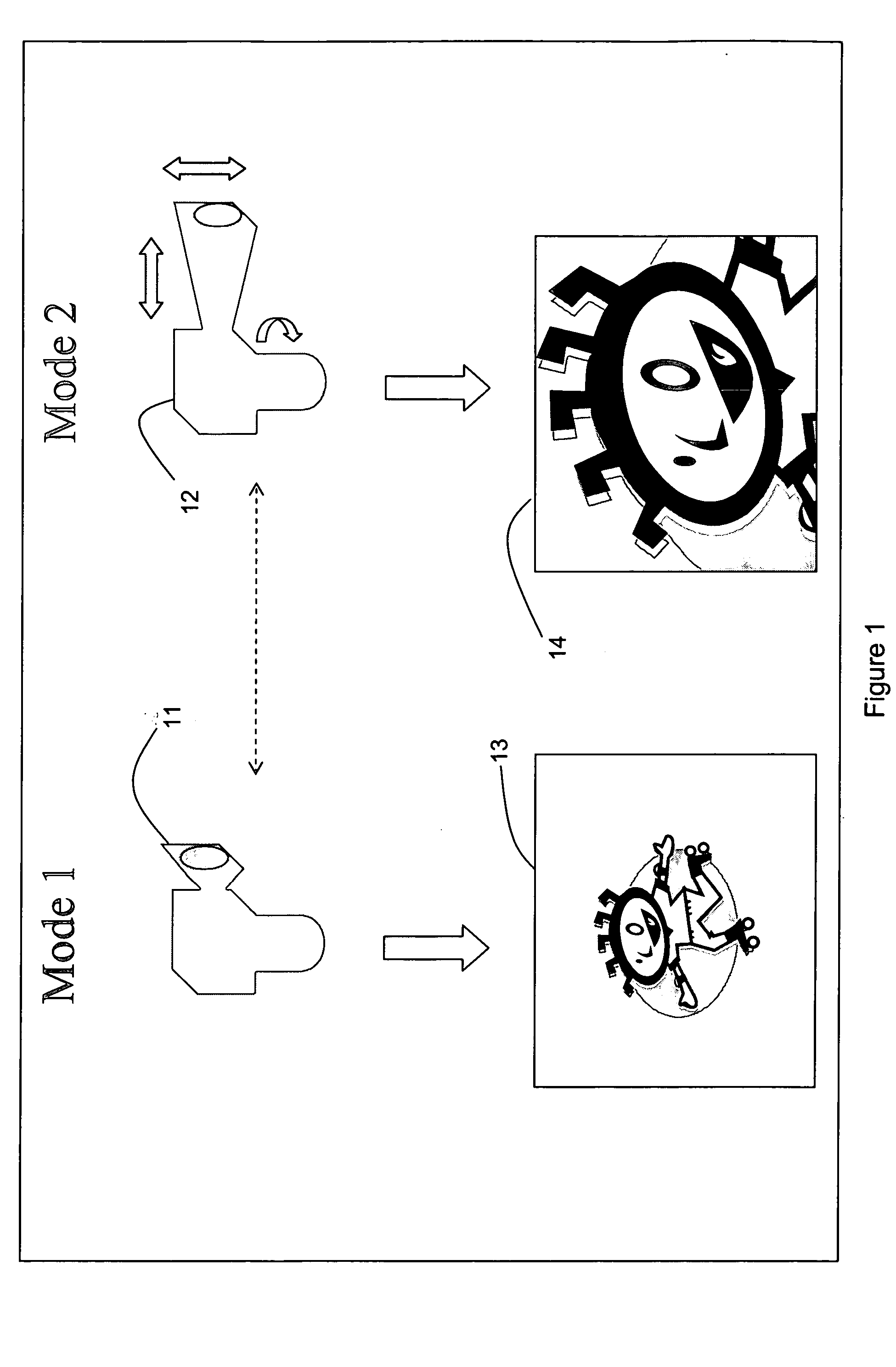

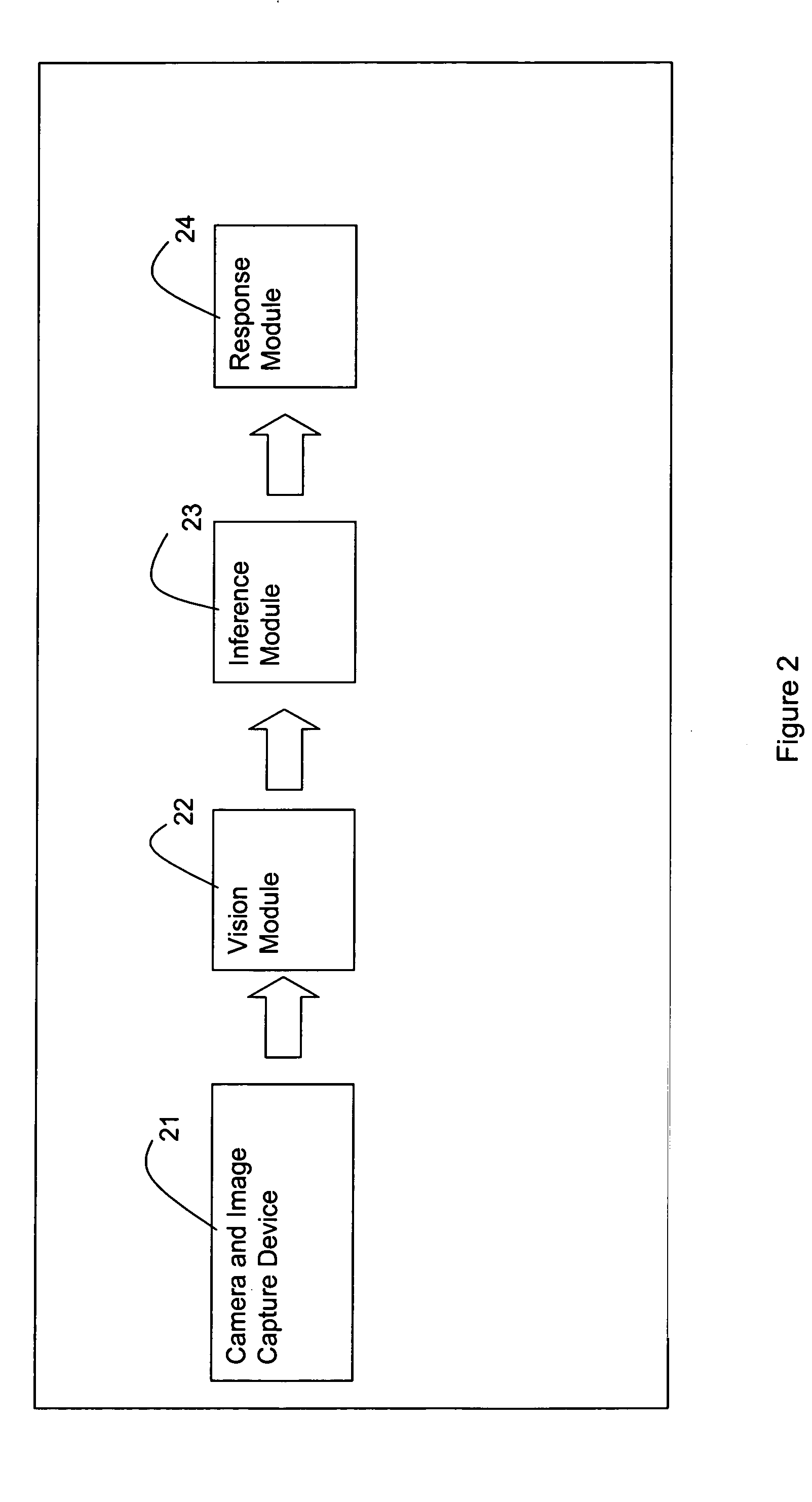

Active camera video-based surveillance systems and methods

Inactive | US20050104958A1 | Quality improvement; Television system details; Character and pattern recognition; Active camera; Imaging data

A video surveillance system comprises a sensing unit capable of being operated in a first mode and second mode and a computer system coupled to the sensing unit. The computer system is adapted to receive and process image data from the sensing unit, to detect and track targets, and to determine whether the sensing unit operates in the first mode or in the second mode based on the detection and tracking of targets.

Owner:OBJECTVIDEO

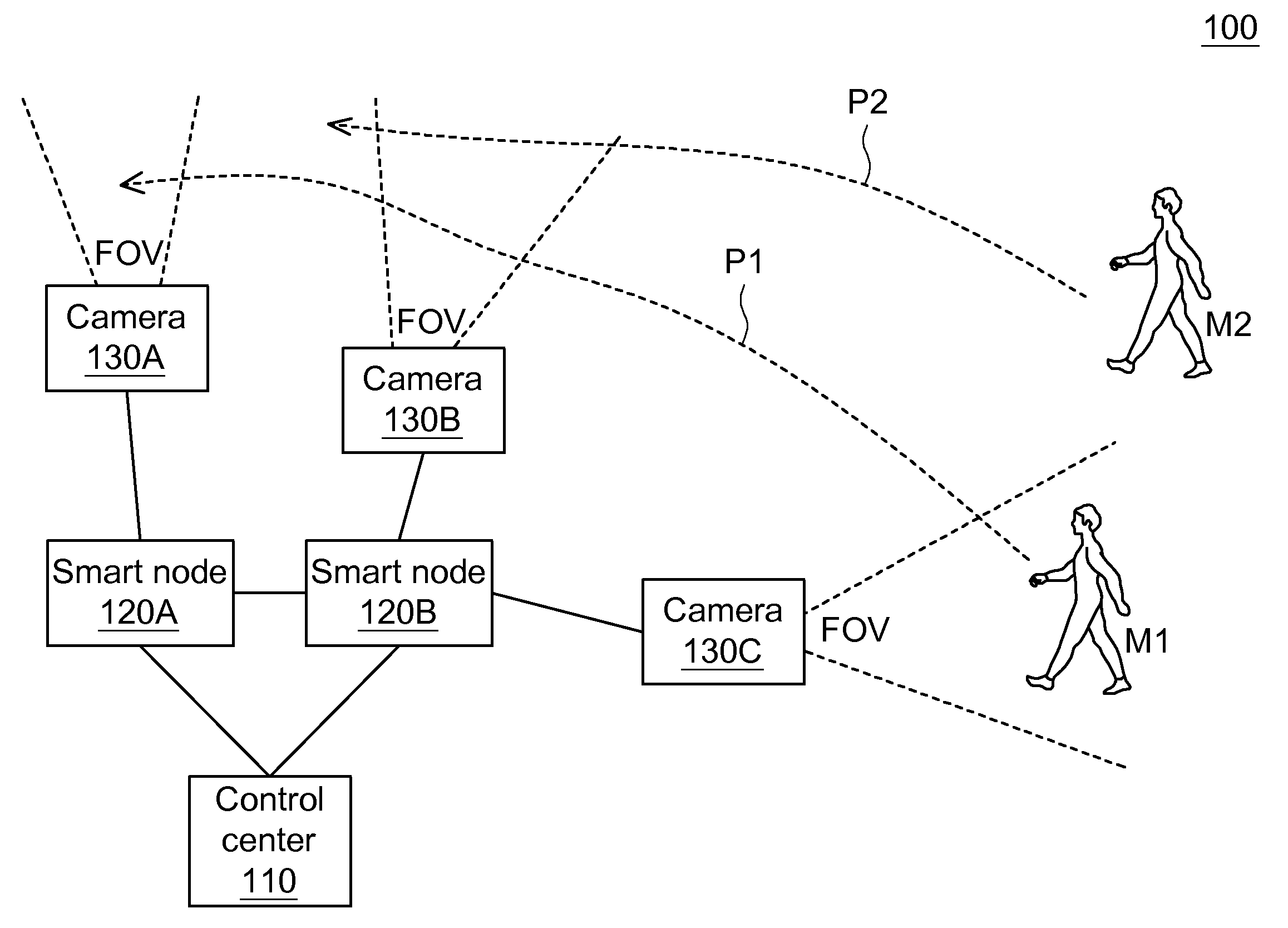

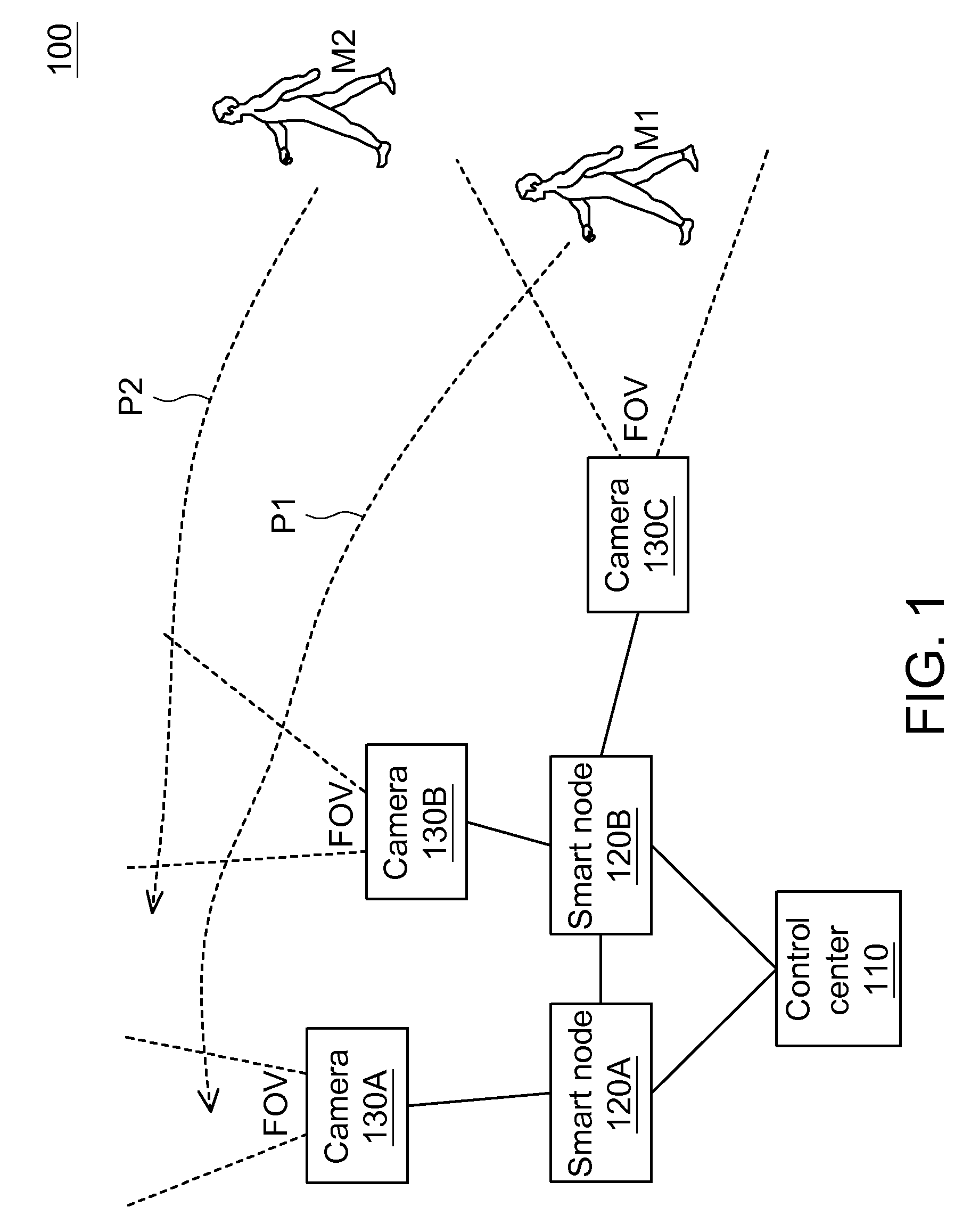

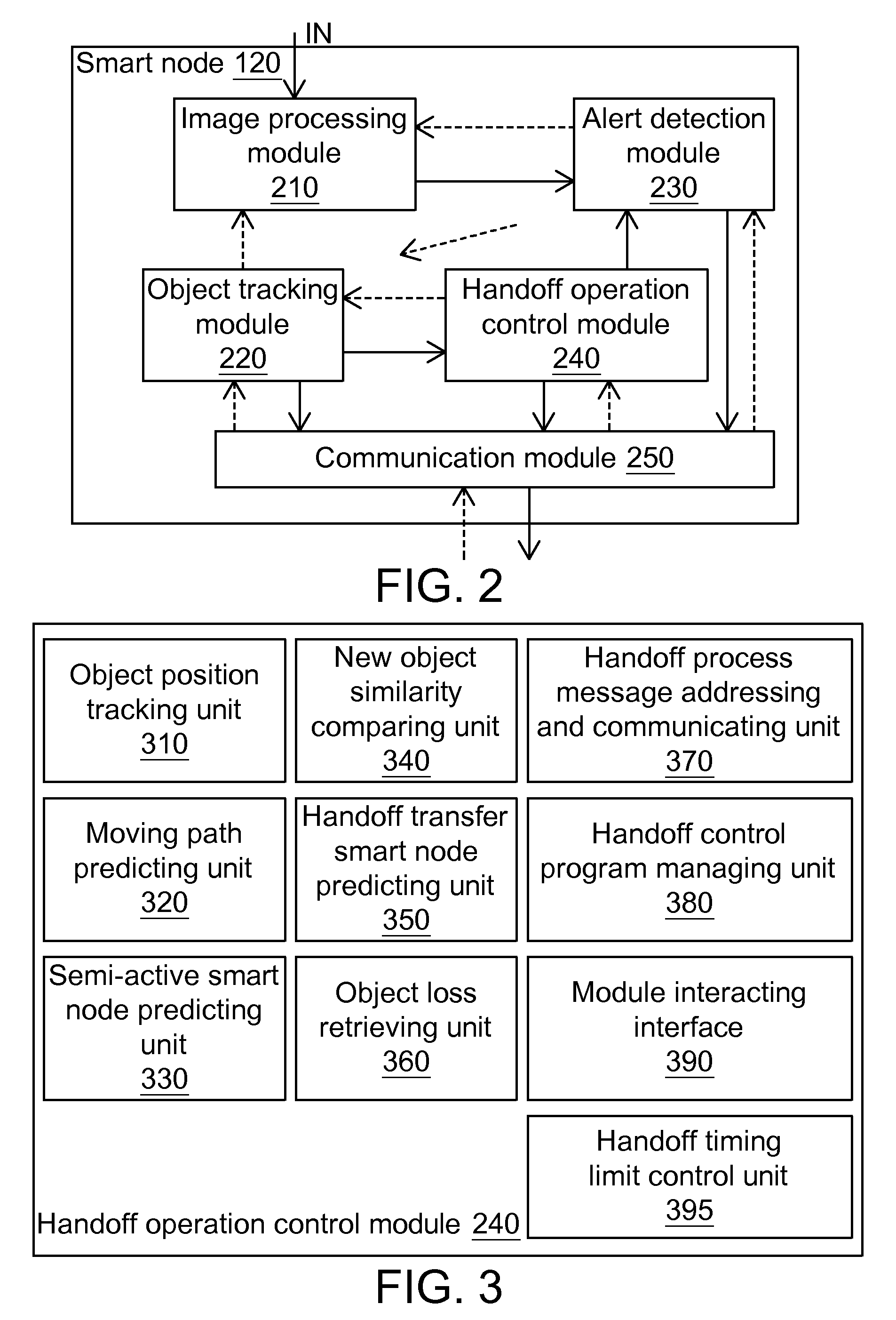

Object tracking system, method and smart node using active camera handoff

If an active smart node detects that an object has moved from the center region of its field of view (FOV) into a boundary region, the active smart node predicts a possible path of the object. When the object leaves the FOV, the active smart node uses the possible path and the spatial relationship between the cameras to predict in which other smart node's FOV the object will appear. The active smart node then notifies that smart node to become a semi-active smart node, which determines the image-characteristic similarity between the tracked object and a new object and, if a condition is satisfied, returns the result to the active smart node. The active smart node combines the returned similarity, the time at which the semi-active smart node discovered the object, and the distance between the active and semi-active smart nodes to calculate the likelihood that the new object is the tracked object.

Owner:IND TECH RES INST
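The final handoff step, weighing the returned similarity against discovery time and inter-node distance, can be sketched as follows. This is a minimal illustration only: the abstract gives no formula, so the weighted score, the weights, the threshold, the assumed constant object speed and the names `Report`, `handoff_score` and `choose_handoff` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Report:
    node_id: str
    similarity: float      # image-characteristic similarity in [0, 1]
    discovery_time: float  # seconds from leaving the active FOV to being discovered
    distance: float        # metres between the active and semi-active node


def handoff_score(r: Report, speed: float, w_sim: float = 0.6, w_time: float = 0.4) -> float:
    """Hypothetical score: similar appearance and a plausible travel time both raise it."""
    expected = r.distance / speed  # travel time expected at the assumed speed
    # 1.0 when the object appears exactly on time, decaying toward 0 as the gap grows.
    timing = 1.0 / (1.0 + abs(r.discovery_time - expected) / max(expected, 1e-9))
    return w_sim * r.similarity + w_time * timing


def choose_handoff(reports: List[Report], speed: float, threshold: float = 0.5) -> Optional[str]:
    """Return the node to hand the object to, or None if no report is convincing."""
    if not reports:
        return None
    best = max(reports, key=lambda r: handoff_score(r, speed))
    return best.node_id if handoff_score(best, speed) >= threshold else None
```

Two reports with equal similarity are separated by timing: the node whose discovery time matches distance divided by speed wins.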

Systems and Methods for High Dynamic Range Imaging Using Array Cameras

Active | US20160057332A1 | Television system details; Signal generator with multiple pick-up device; High-dynamic-range imaging; Active camera

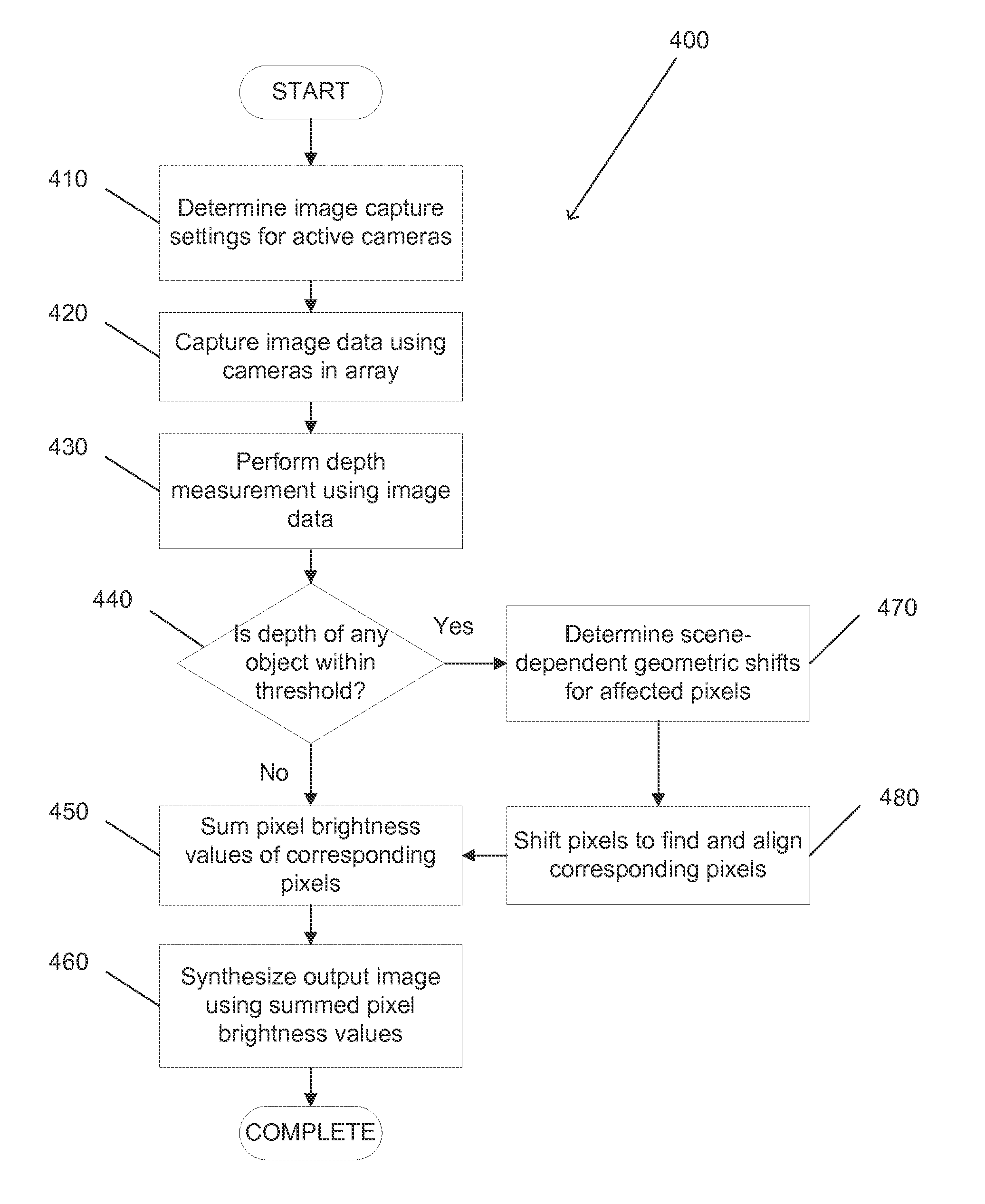

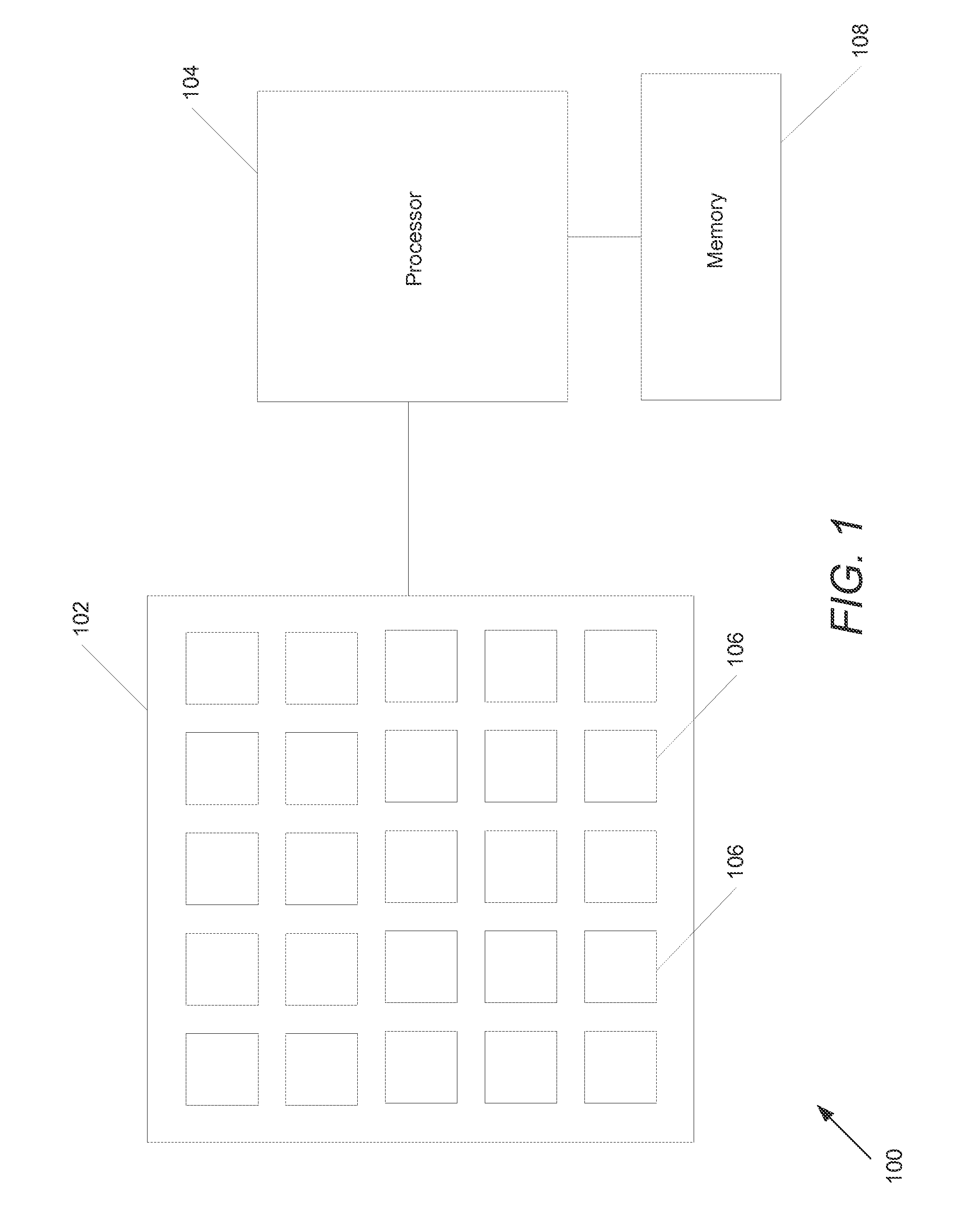

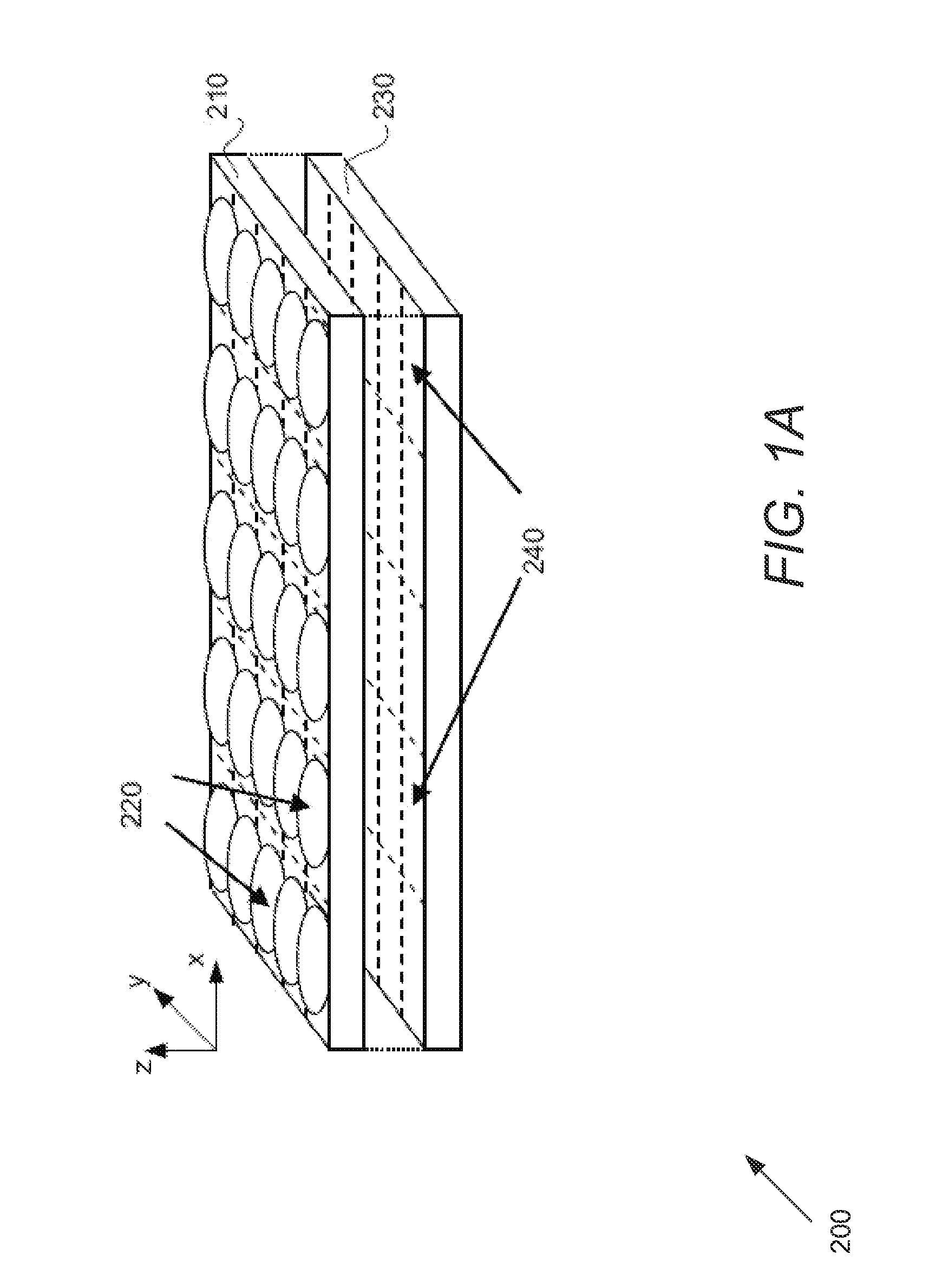

Systems and methods for high dynamic range imaging using array cameras in accordance with embodiments of the invention are disclosed. In one embodiment of the invention, a method of generating a high dynamic range image using an array camera includes defining at least two subsets of active cameras, determining image capture settings for each subset of active cameras, where the image capture settings include at least two exposure settings, configuring the active cameras using the determined image capture settings for each subset, capturing image data using the active cameras, synthesizing an image for each of the at least two subsets of active cameras using the captured image data, and generating a high dynamic range image using the synthesized images.

Owner:FOTONATION LTD
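The last step, combining the differently exposed synthesized images into one high dynamic range image, can be illustrated with a standard weighted-average radiance merge. This is a sketch under stated assumptions, not the patented method: it assumes each subset's synthesized image is already registered to a common viewpoint, is 8-bit grayscale, and comes from a sensor with a linear response; `merge_hdr` and the triangle ("hat") weighting are illustrative choices.

```python
from typing import List

Image = List[List[int]]  # rows of 8-bit grayscale pixel values


def hat_weight(z: int) -> int:
    """Triangle weight: trust mid-range pixels, distrust near-black or saturated ones."""
    return min(z, 255 - z)


def merge_hdr(images: List[Image], exposure_times: List[float]) -> List[List[float]]:
    """Merge same-size exposures into a relative radiance map (linear response assumed)."""
    height, width = len(images[0]), len(images[0][0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            num = den = 0.0
            for img, t in zip(images, exposure_times):
                z = img[y][x]
                w = hat_weight(z)
                num += w * z / t  # z / t estimates radiance from this exposure
                den += w
            if den > 0:
                out[y][x] = num / den
            else:
                # Every sample is fully black or saturated: fall back to a plain average.
                out[y][x] = sum(img[y][x] / t for img, t in zip(images, exposure_times)) / len(images)
    return out
```

A pixel reading 100 at exposure 1 and 200 at exposure 2 maps to the same radiance (100) from both samples, so the merge returns 100 regardless of the weights.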

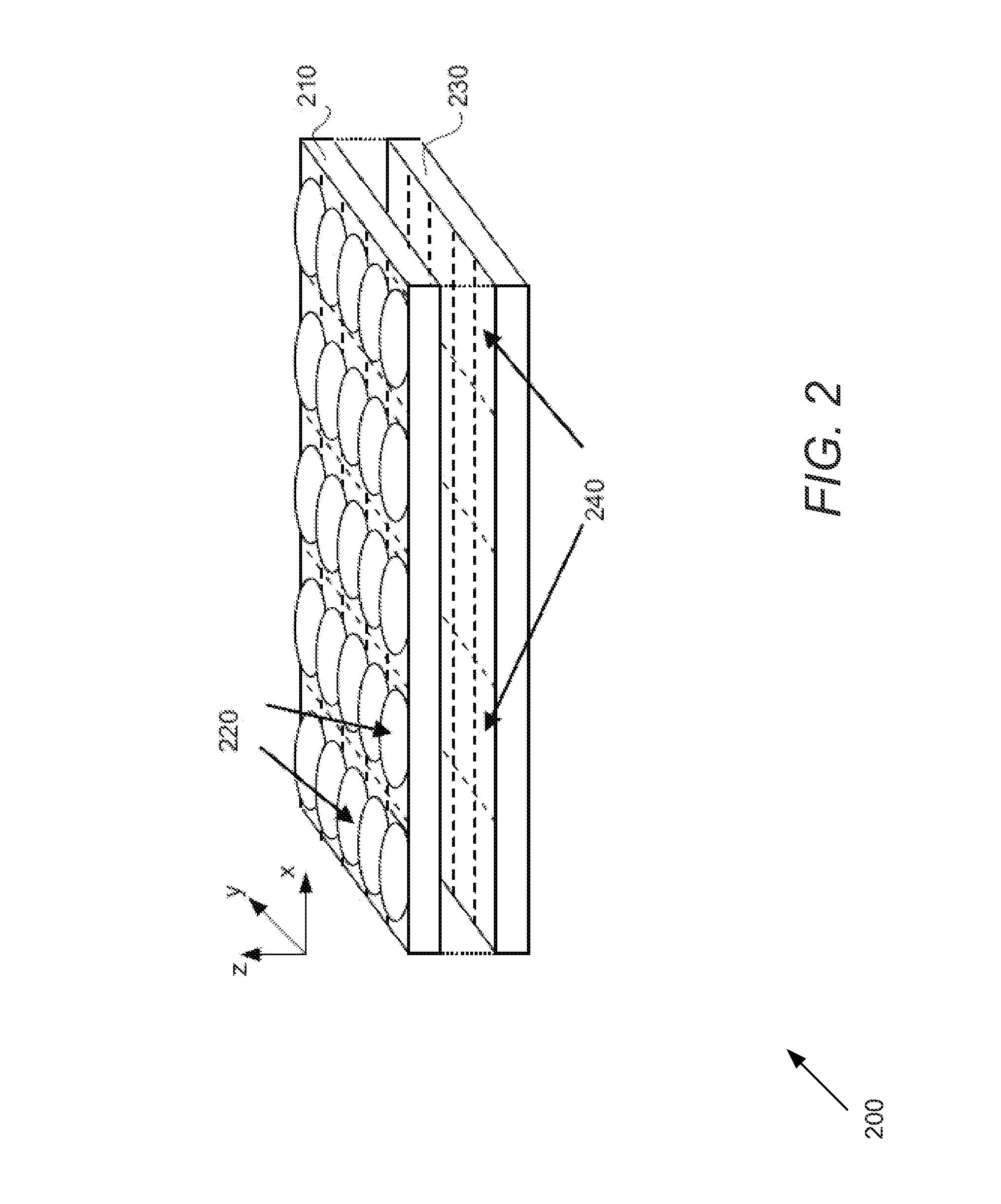

Systems and Methods for Measuring Scene Information While Capturing Images Using Array Cameras

Systems and methods for measuring scene information while capturing images using array cameras in accordance with embodiments of the invention are disclosed. In one embodiment, a method of measuring scene information while capturing an image using an array camera includes defining at least two subsets of active cameras, configuring the active cameras using image capture settings, capturing image data using the active cameras, synthesizing at least one image using image data captured by a first subset of active cameras, measuring scene information using image data captured by a second subset of active cameras, and determining whether the image capture settings satisfy at least one predetermined criterion for at least one image capture parameter using the measured scene information, where new image capture settings are determined and utilized to configure the active cameras upon a determination that the image capture settings do not satisfy the at least one predetermined criterion.

Owner:FOTONATION LTD
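The check-and-reconfigure loop driven by the second (metering) subset resembles a conventional auto-exposure step. The sketch below is hypothetical: the criteria (mean brightness within 10% of a mid-grey target and at most 2% clipped pixels), the linear-response exposure update and the name `check_and_update_exposure` are assumptions chosen for illustration, not values from the patent.

```python
from typing import List, Tuple


def check_and_update_exposure(metering_pixels: List[int], exposure: float,
                              target: float = 118.0, tolerance: float = 0.1,
                              clip_level: int = 250, max_clipped: float = 0.02) -> Tuple[bool, float]:
    """Return (settings_ok, exposure_to_use) from 8-bit pixels of the metering subset."""
    mean = sum(metering_pixels) / len(metering_pixels)
    clipped = sum(p >= clip_level for p in metering_pixels) / len(metering_pixels)
    ok = abs(mean - target) <= tolerance * target and clipped <= max_clipped
    if ok:
        return True, exposure
    # Assume brightness scales linearly with exposure time and rescale toward the target.
    return False, exposure * target / max(mean, 1.0)
```

An under-exposed scene averaging half the target brightness yields a doubled exposure for the next capture, while the imaging subset keeps synthesizing images from the current settings.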

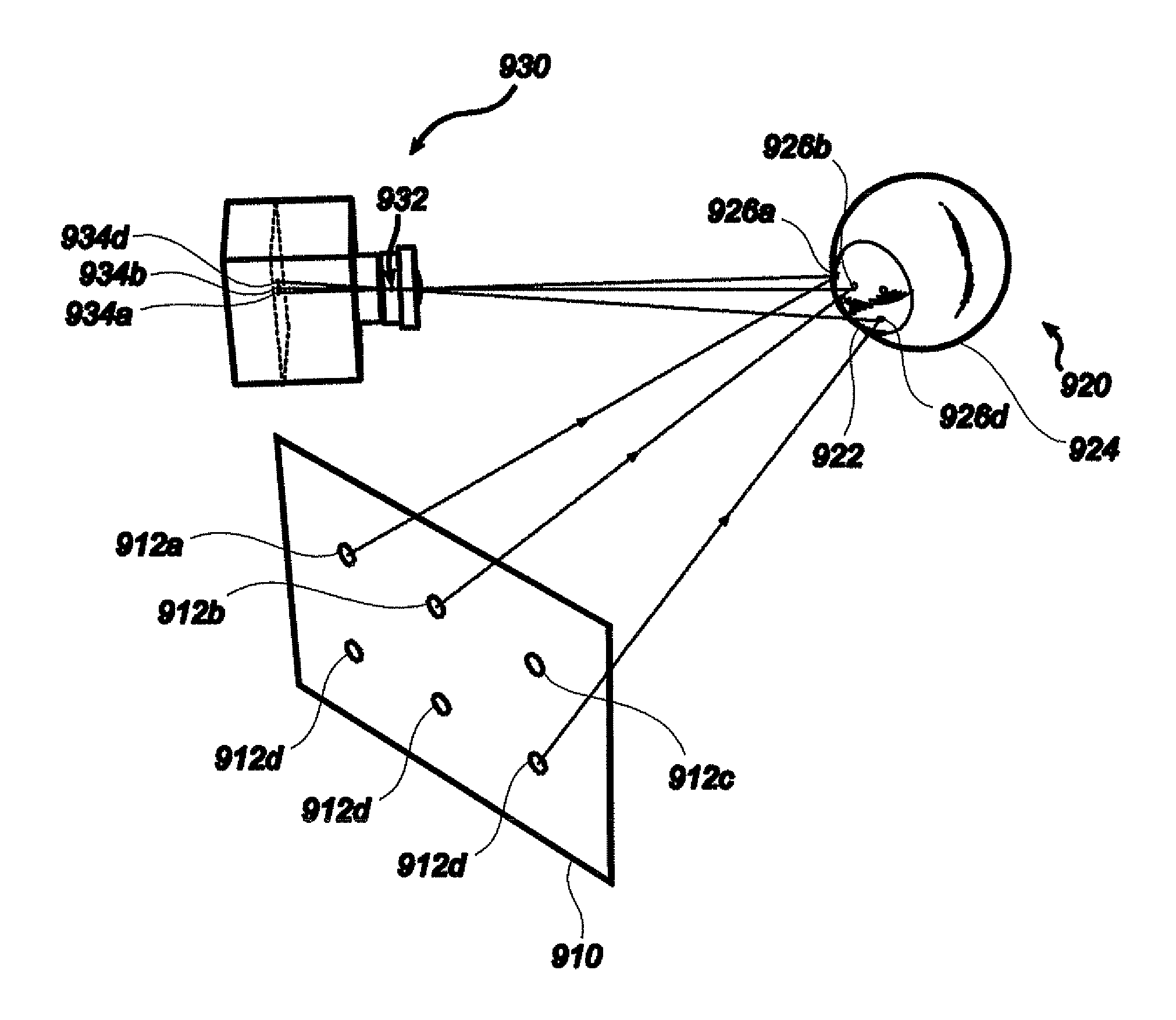

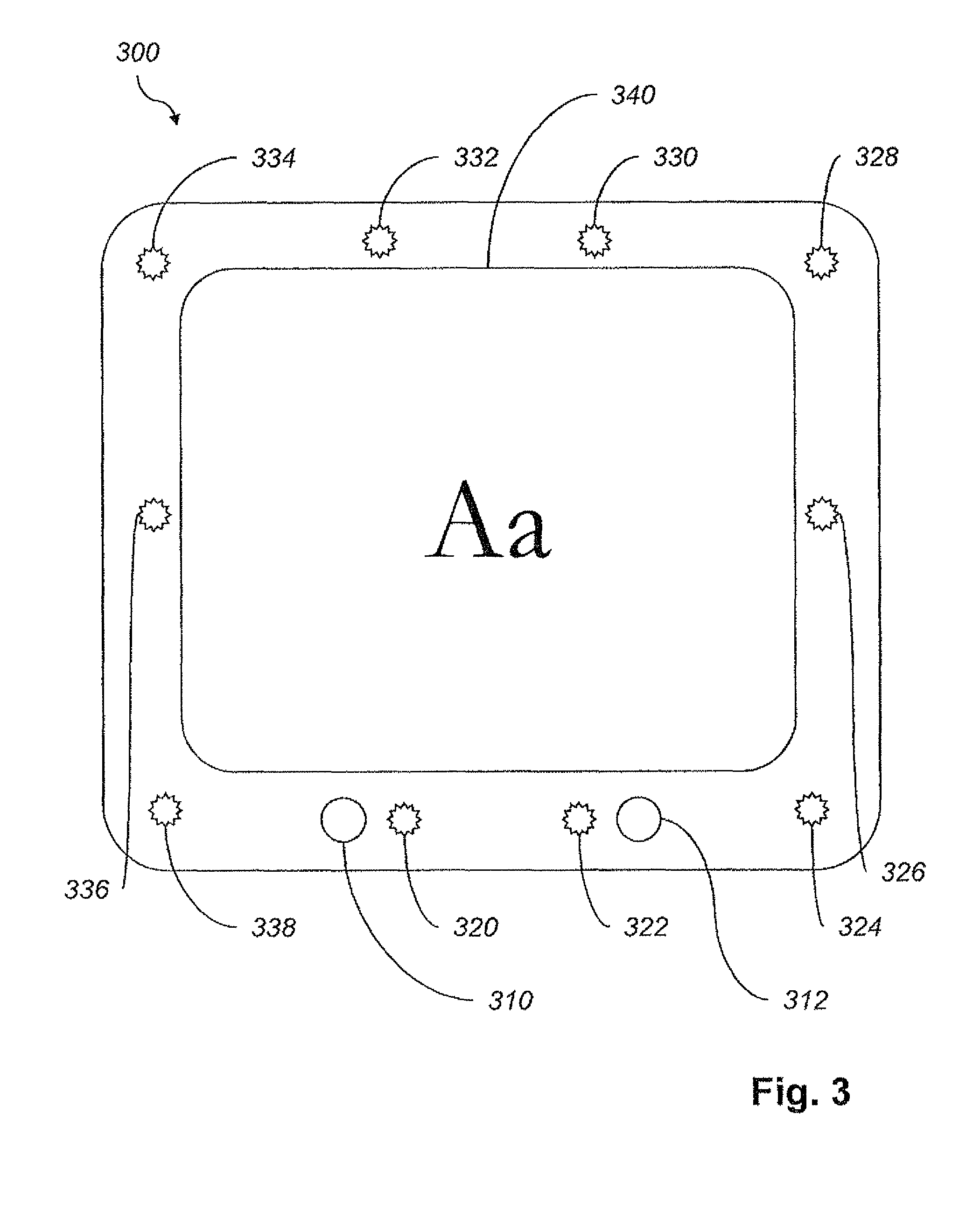

Adaptive camera and illuminator eyetracker

Active | US20100328444A1 | Minimize time; Increase probability; Printers; Projectors; Imaging quality; Active camera

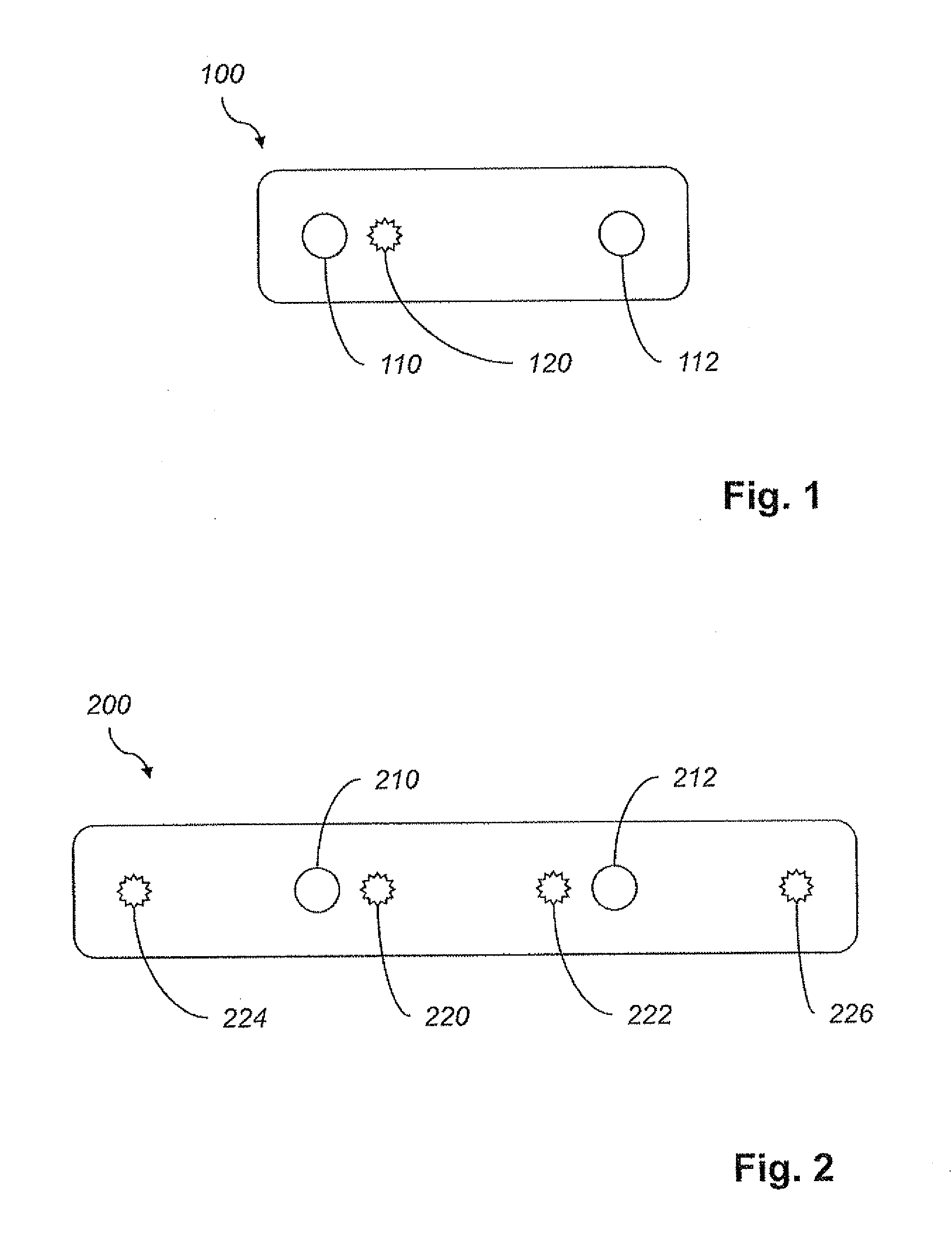

An eye tracker includes at least one illuminator for illuminating an eye, at least two cameras for imaging the eye, and a controller. The reference illuminator(s) and cameras are arranged so that at least one camera is coaxial with a reference illuminator and at least one camera is non-coaxial with a reference illuminator. The controller is adapted to select one of the cameras as the active camera so as to maximize an image quality metric and avoid obscuring objects. The eye tracker is also operable in a dual-camera mode to improve accuracy. A method and a computer program product are provided for selecting a combination of an active reference illuminator from a number of reference illuminators and an active camera from a plurality of cameras.

Owner:TOBII TECH AB
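Selecting an active illuminator and camera combination can be framed as a constrained arg-max over all pairs. A minimal sketch, assuming a per-pair image quality score and an "obscured" flag (set, for example, when an object blocks the view of the eye) are already available; `select_pair` and the data layout are hypothetical, and how the patent computes its quality metric is not specified here.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]  # (illuminator id, camera id)


def select_pair(illuminators: List[str], cameras: List[str],
                quality: Dict[Pair, float], obscured: Dict[Pair, bool]) -> Optional[Pair]:
    """Return the unobscured (illuminator, camera) pair with the highest quality score."""
    candidates = [p for p in product(illuminators, cameras) if not obscured.get(p, False)]
    if not candidates:
        return None
    return max(candidates, key=lambda p: quality.get(p, float("-inf")))
```

When the best-scoring pair is flagged as obscured, the controller falls back to the next-best unobscured pair rather than to a fixed default.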

Systems and methods for reducing motion blur in images or video in ultra low light with array cameras

Owner:FOTONATION LTD

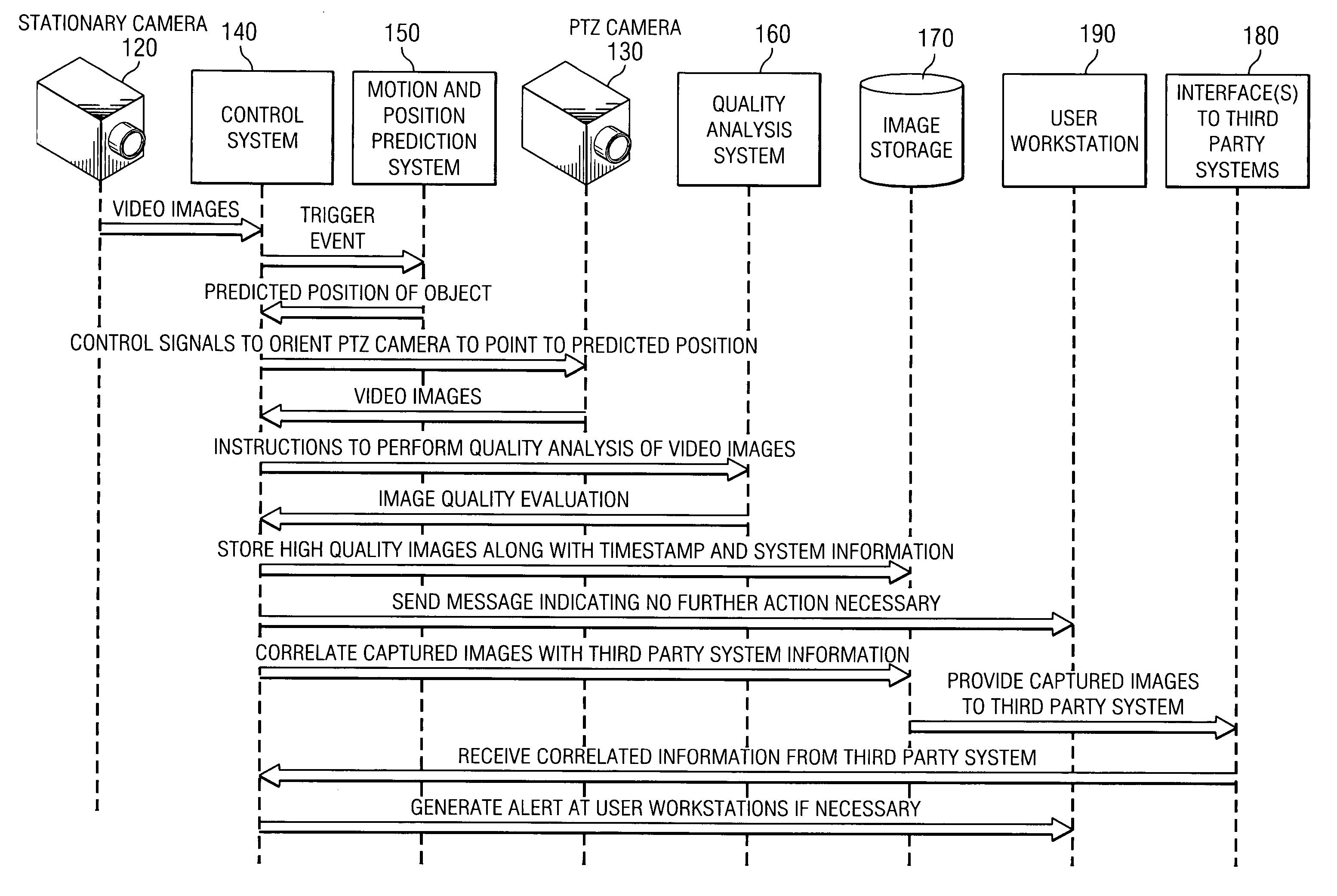

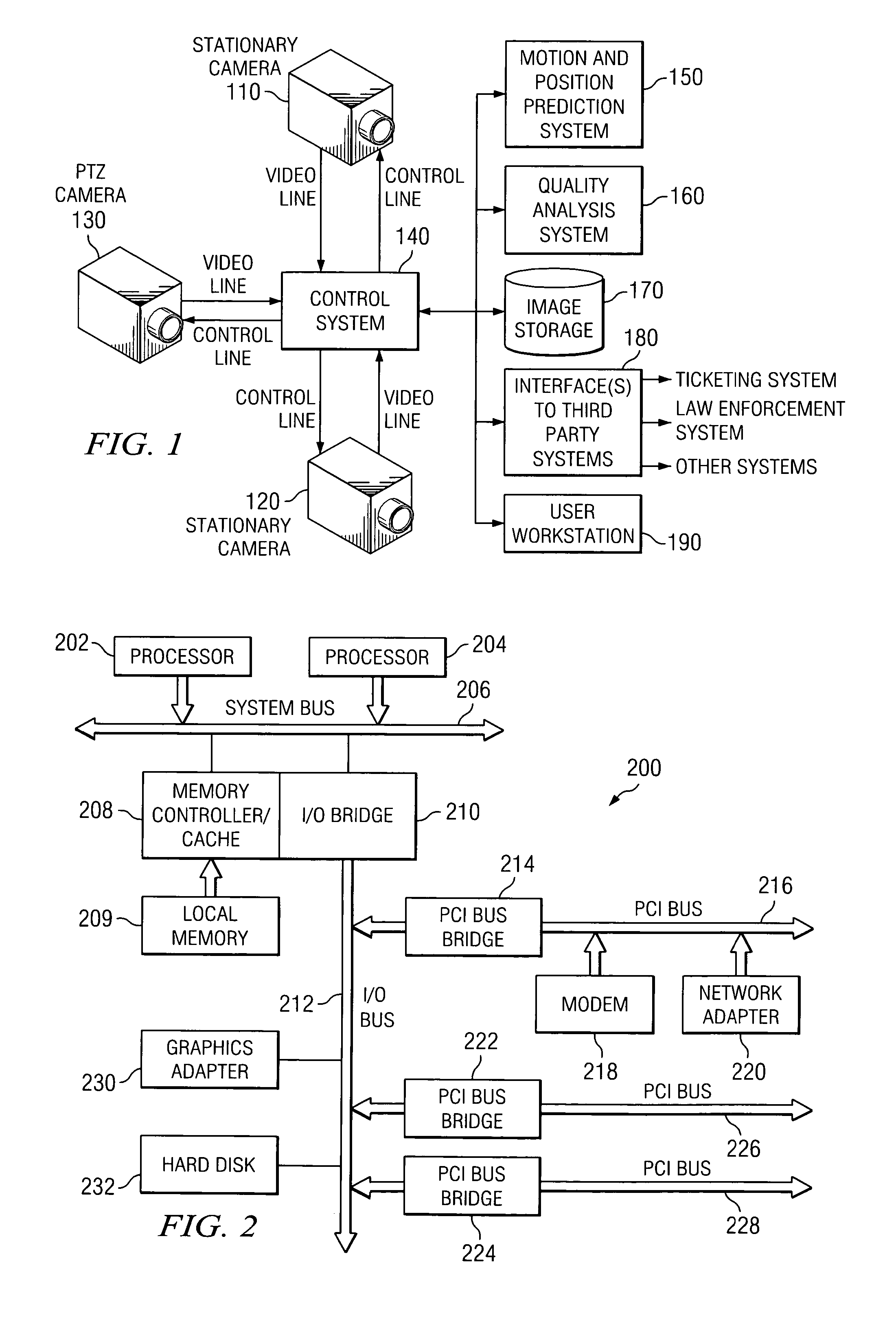

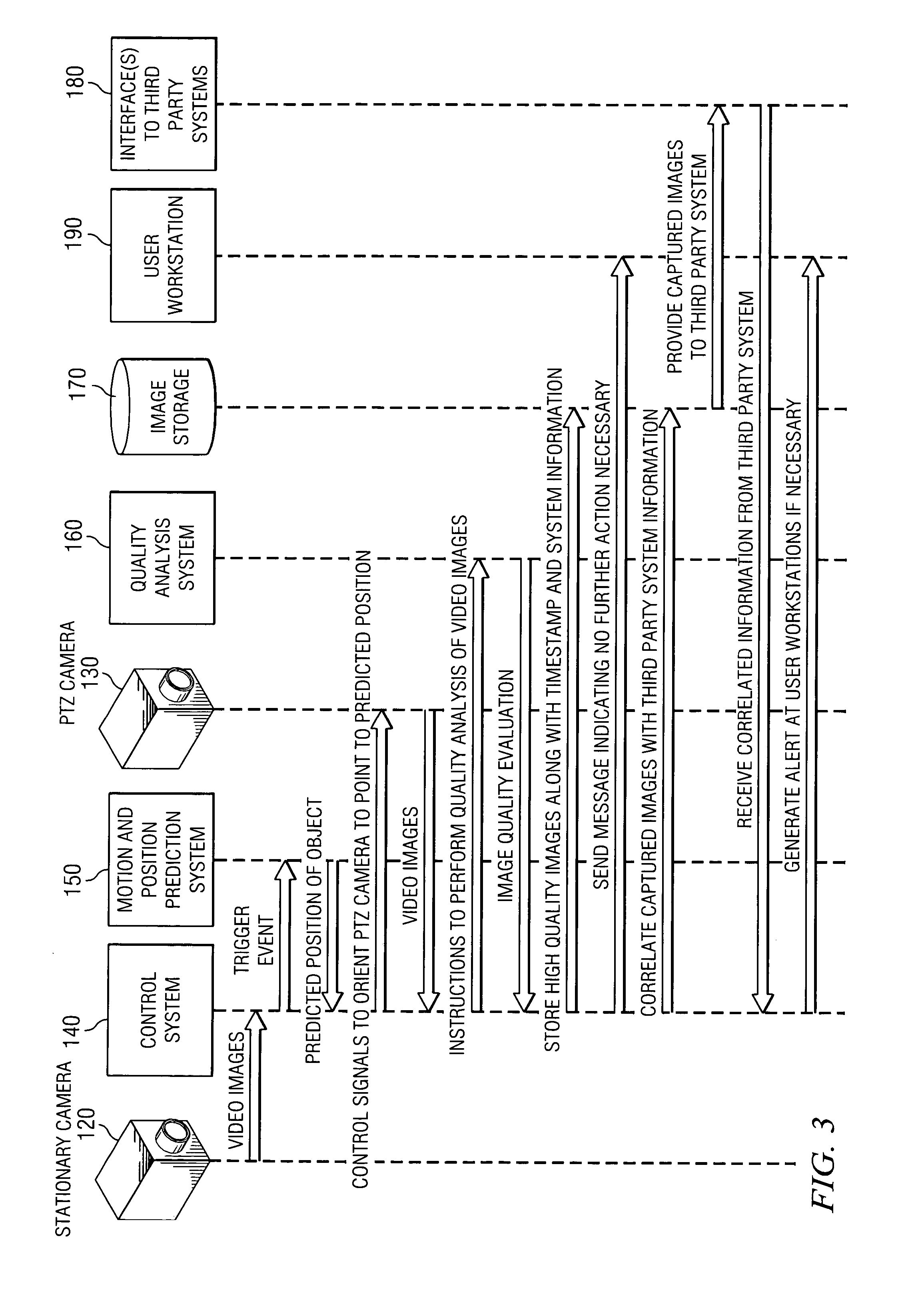

System and method for assuring high resolution imaging of distinctive characteristics of a moving object

Inactive | US20050244033A1 | Less likelihood; Solve the real problem; Character and pattern recognition; Color television details; High resolution imaging; Pan tilt zoom

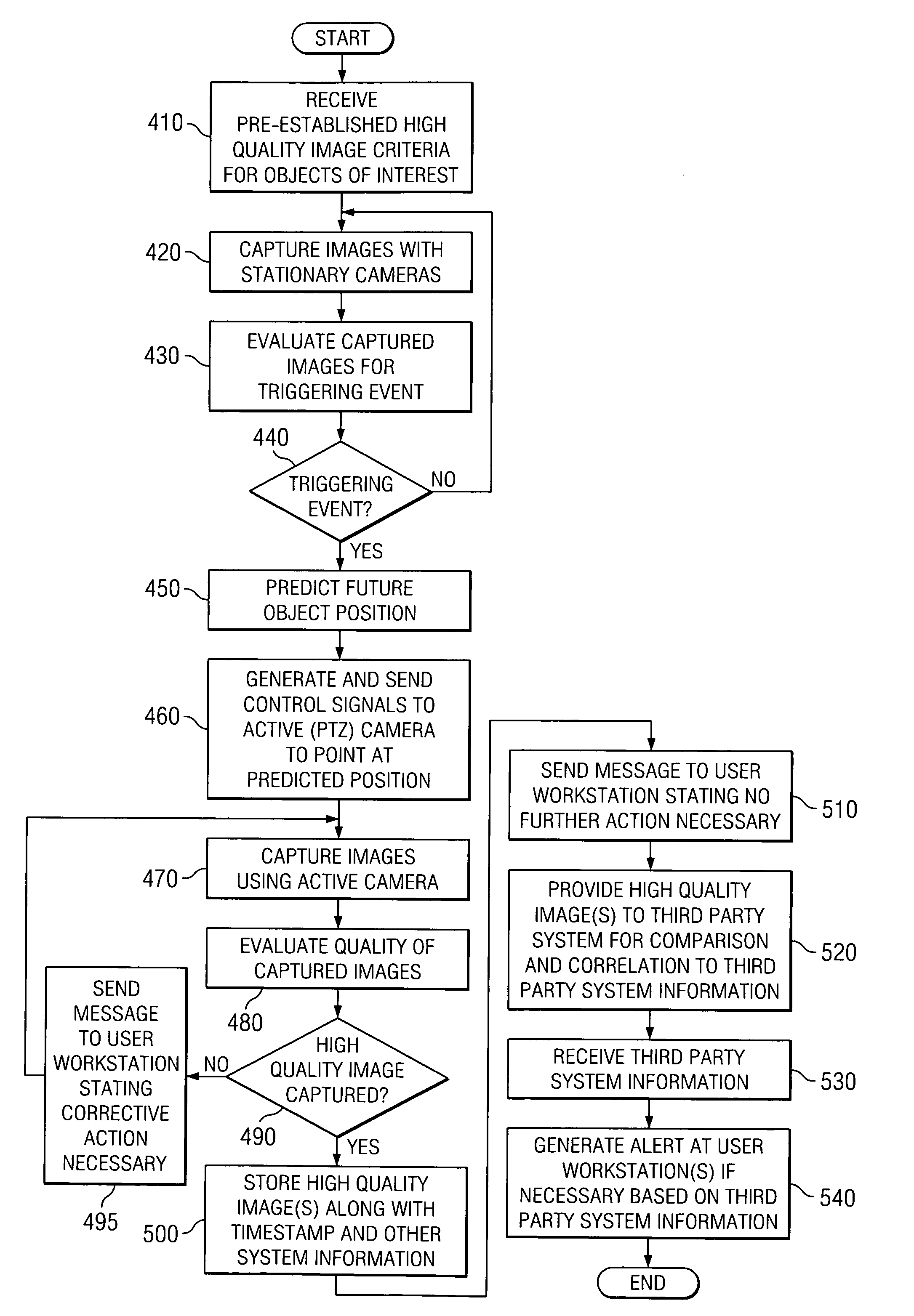

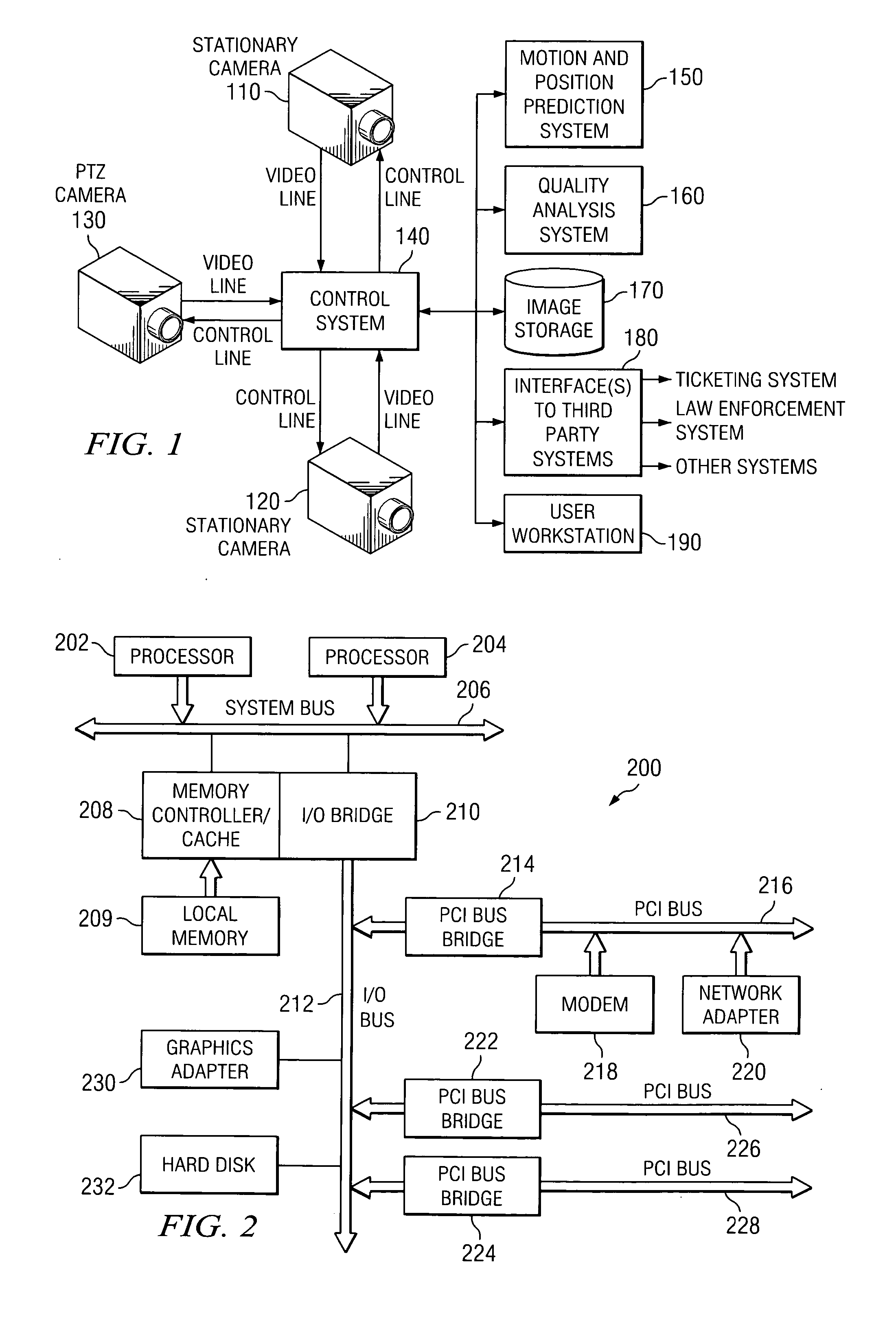

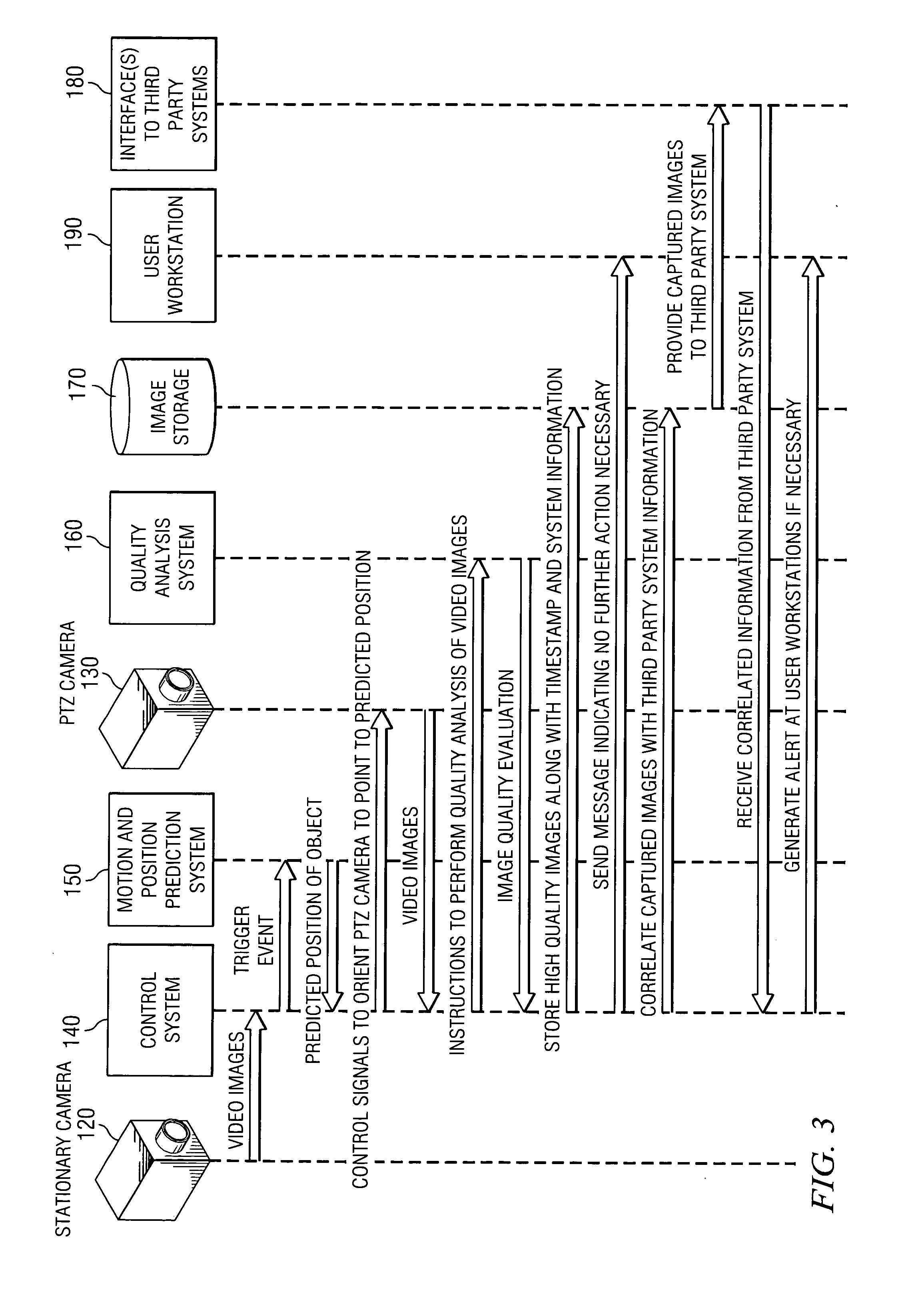

A system and method are provided for assuring a high-resolution image of an object, such as a person's face, passing through a targeted space. Both stationary cameras and active (pan-tilt-zoom) cameras are used. At least one stationary camera acts as a trigger: when a person passes through a predefined target area of that camera, the system is triggered for object imaging and tracking. Upon a triggering event, the system predicts the motion and position of the person. Based on this predicted position, an active camera capable of imaging the predicted position is selected and may be controlled to focus its image capture area on the predicted position of the person. After active camera control and image capture, the system evaluates the quality of the captured face images, reports the result to security agents, and interacts with the user.

Owner:IBM CORP
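The predict-then-select step can be sketched with a constant-velocity motion model and a nearest-capable-camera rule. Both are assumptions for illustration; the abstract does not say which motion model or selection criterion the system uses, and `PTZCamera`, `max_range`, `predict`, `select_camera` and the 2-D floor-plan coordinates are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class PTZCamera:
    name: str
    x: float
    y: float
    max_range: float  # metres within which a face can still be imaged at high resolution (assumed)


def predict(position: Point, velocity: Point, latency: float) -> Point:
    """Constant-velocity prediction of the person's position `latency` seconds ahead."""
    return (position[0] + velocity[0] * latency, position[1] + velocity[1] * latency)


def select_camera(cameras: List[PTZCamera], target: Point) -> Optional[Tuple[PTZCamera, float]]:
    """Pick the nearest camera that can reach `target`; return it with the pan angle in degrees."""
    capable = [c for c in cameras if math.dist((c.x, c.y), target) <= c.max_range]
    if not capable:
        return None
    cam = min(capable, key=lambda c: math.dist((c.x, c.y), target))
    pan = math.degrees(math.atan2(target[1] - cam.y, target[0] - cam.x))
    return cam, pan
```

The latency passed to `predict` would cover the time the selected camera needs to pan and zoom, so that it points at where the person will be rather than where the trigger saw them.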

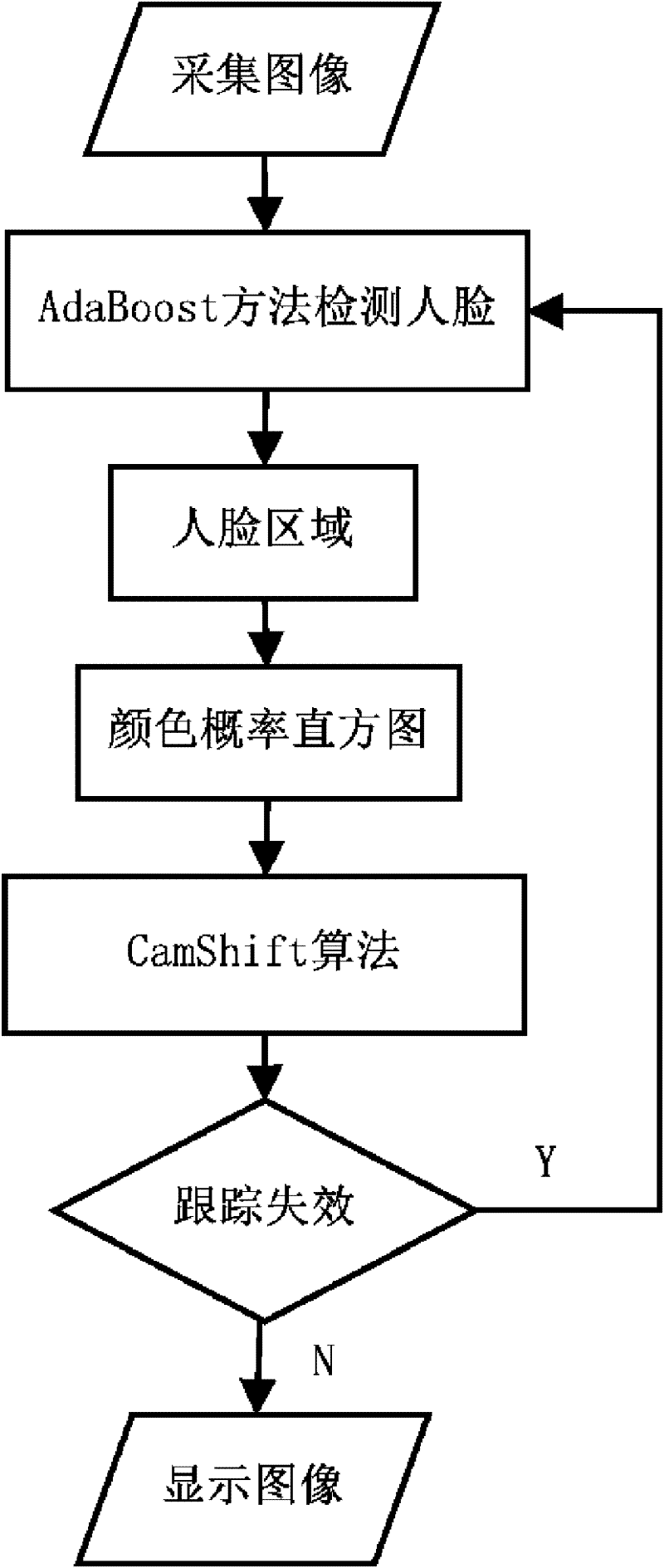

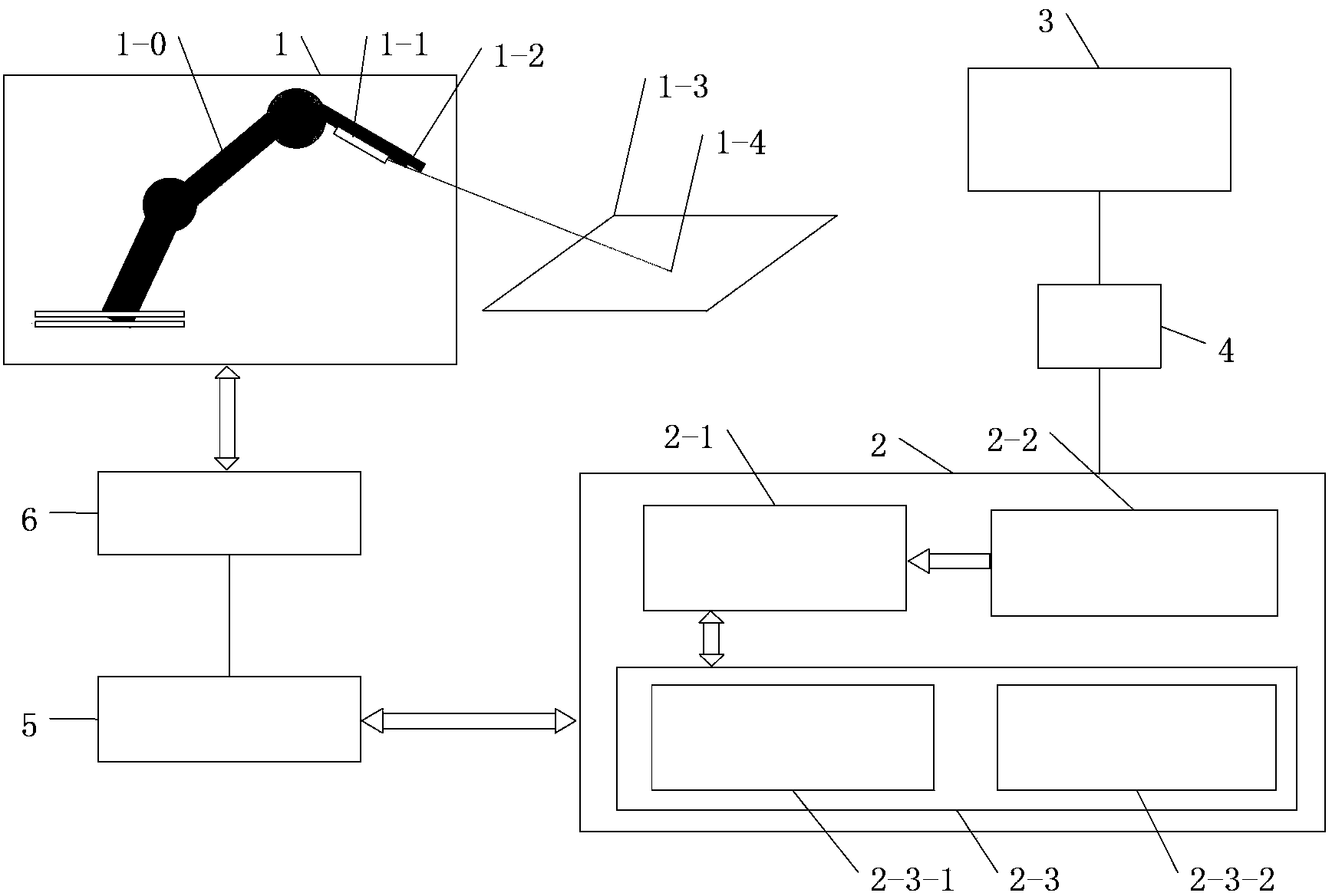

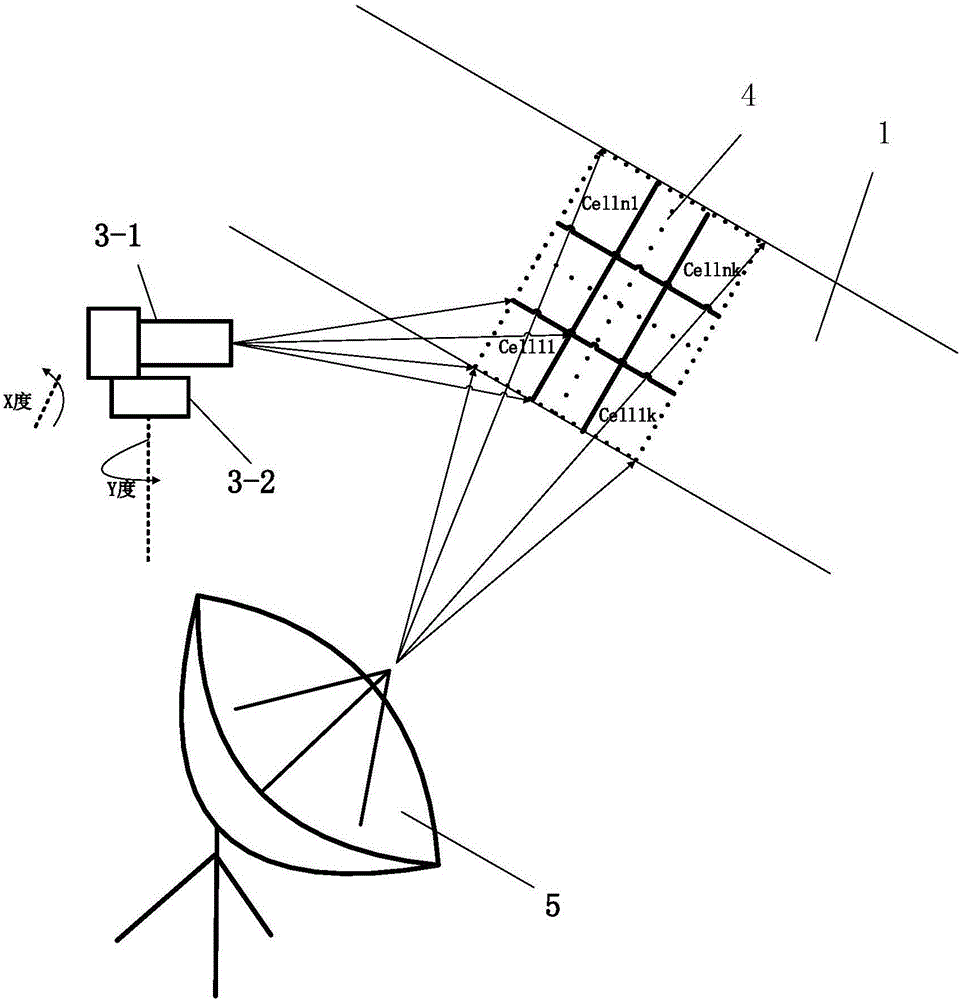

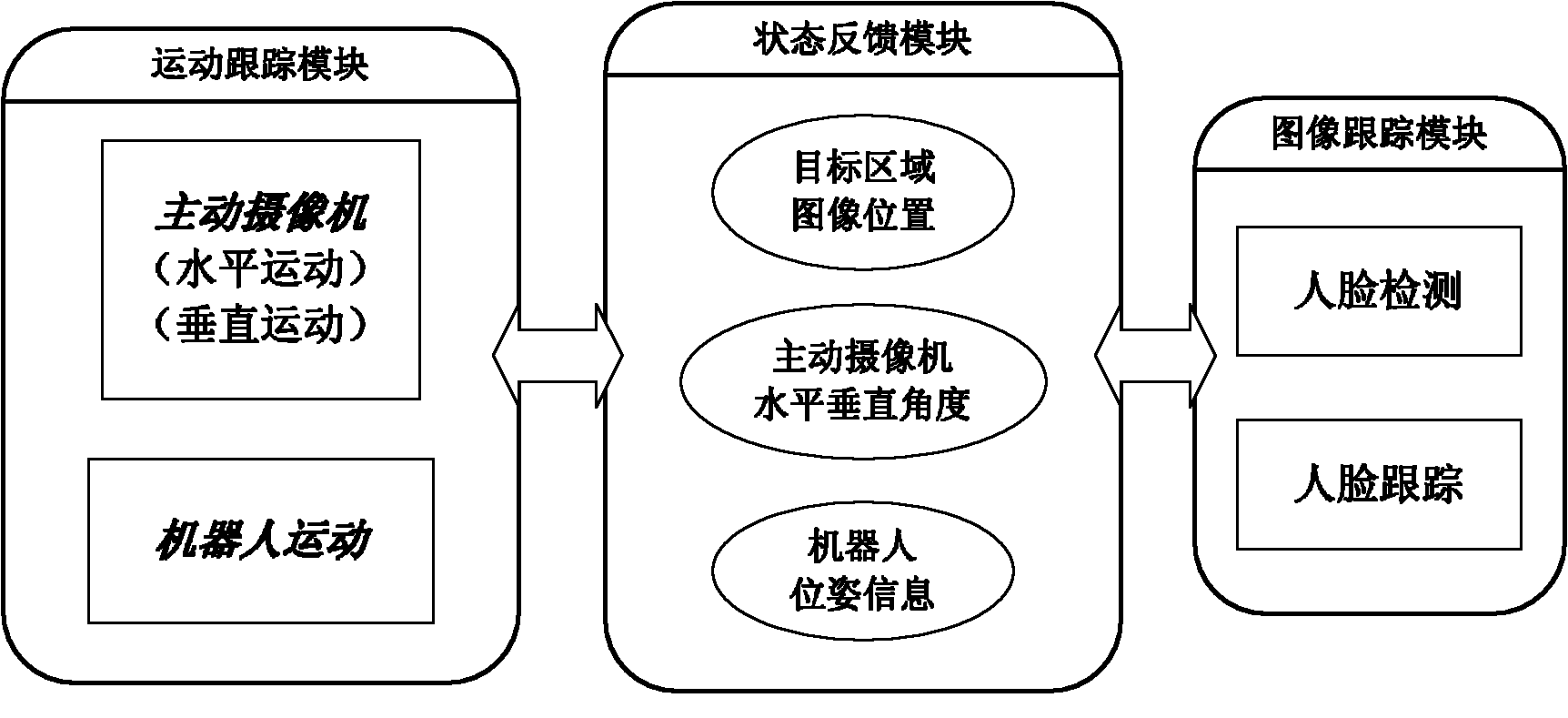

Active vision human face tracking method and tracking system of robot

Active | CN102411368A | There will be no loss of target; Overcome limitations; Character and pattern recognition; Position/course control in two dimensions; Face detection; Pattern perception

The invention discloses an active vision face tracking method and tracking system for a robot. The tracking method comprises the following steps: (1) the mobile robot acquires an image of its environment and detects a human face target through an active camera; and (2) after the face target is detected, the robot tracks it and keeps it in the center of the image through a combination of active camera motion and robot movement. The tracking system comprises the active camera, an image tracking module, a movement tracking module, a hierarchical buffer module and a state feedback module. The invention achieves automatic face detection and tracking by the robot, overcomes the limitation of the camera's narrow field of view and, by combining image tracking with movement tracking, establishes a perception-movement loop for the mobile robot based on active vision. This extends the face tracking range to a full 360 degrees in all directions. A two-layer buffer region ensures tracking continuity, so that the face target is always kept in the center of the image.

Owner:PEKING UNIV
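The division of labour between the active camera and the robot body, with a two-layer buffer around the image center, might look like the following single control step. This is one hypothetical reading of the abstract: the buffer widths, the proportional gain, the pan limit, the name `track_step` and the rule "turn the body when the face leaves the outer buffer or the camera reaches its limit" are all assumptions.

```python
from typing import Tuple


def track_step(face_x: float, image_width: float, pan: float,
               inner: float = 0.1, outer: float = 0.3,
               gain: float = 10.0, pan_limit: float = 60.0) -> Tuple[float, float]:
    """One horizontal control step; returns (new camera pan, robot body turn), both in degrees."""
    # Normalised error in [-0.5, 0.5]; positive means the face is right of the image center.
    error = face_x / image_width - 0.5
    if abs(error) <= inner:
        return pan, 0.0  # inner buffer: face is close enough to center, do nothing
    # Middle layer: pan the camera proportionally, clamped to its mechanical range.
    pan = max(-pan_limit, min(pan_limit, pan + gain * error))
    # Outer layer: face far off-center or camera at its limit, so rotate the body
    # by the current pan angle and re-center the camera on the body axis.
    if abs(error) > outer or abs(pan) >= pan_limit:
        return 0.0, pan
    return pan, 0.0
```

Because body rotation is unbounded while camera pan is limited, handing large corrections to the body is what lets the tracking range extend to 360 degrees.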

System and method for assuring high resolution imaging of distinctive characteristics of a moving object

Inactive | US7542588B2 | Less likelihood; Solve the real problem; Character and pattern recognition; Color television details; High resolution imaging; Pan tilt zoom

Owner:INT BUSINESS MASCH CORP

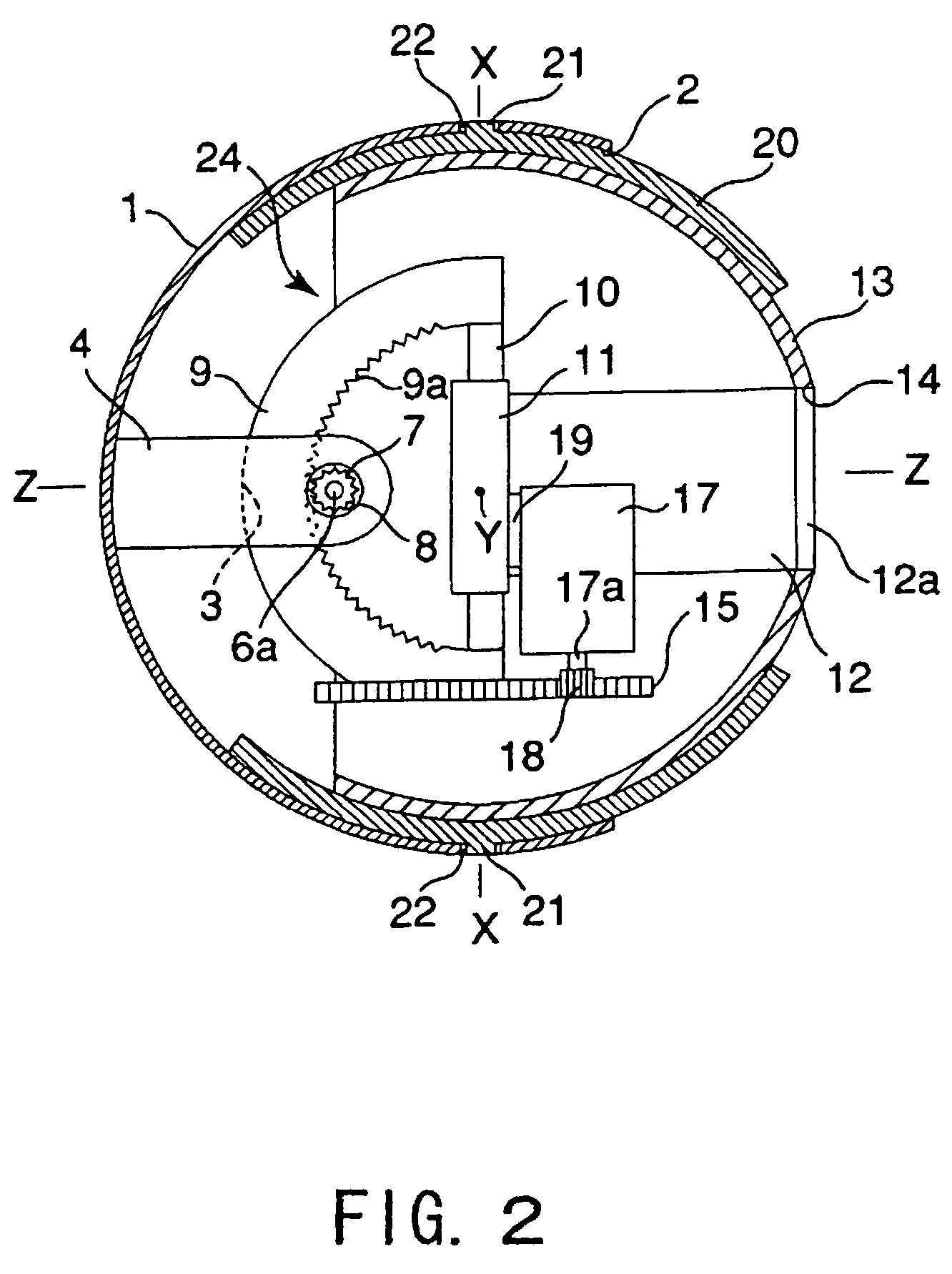

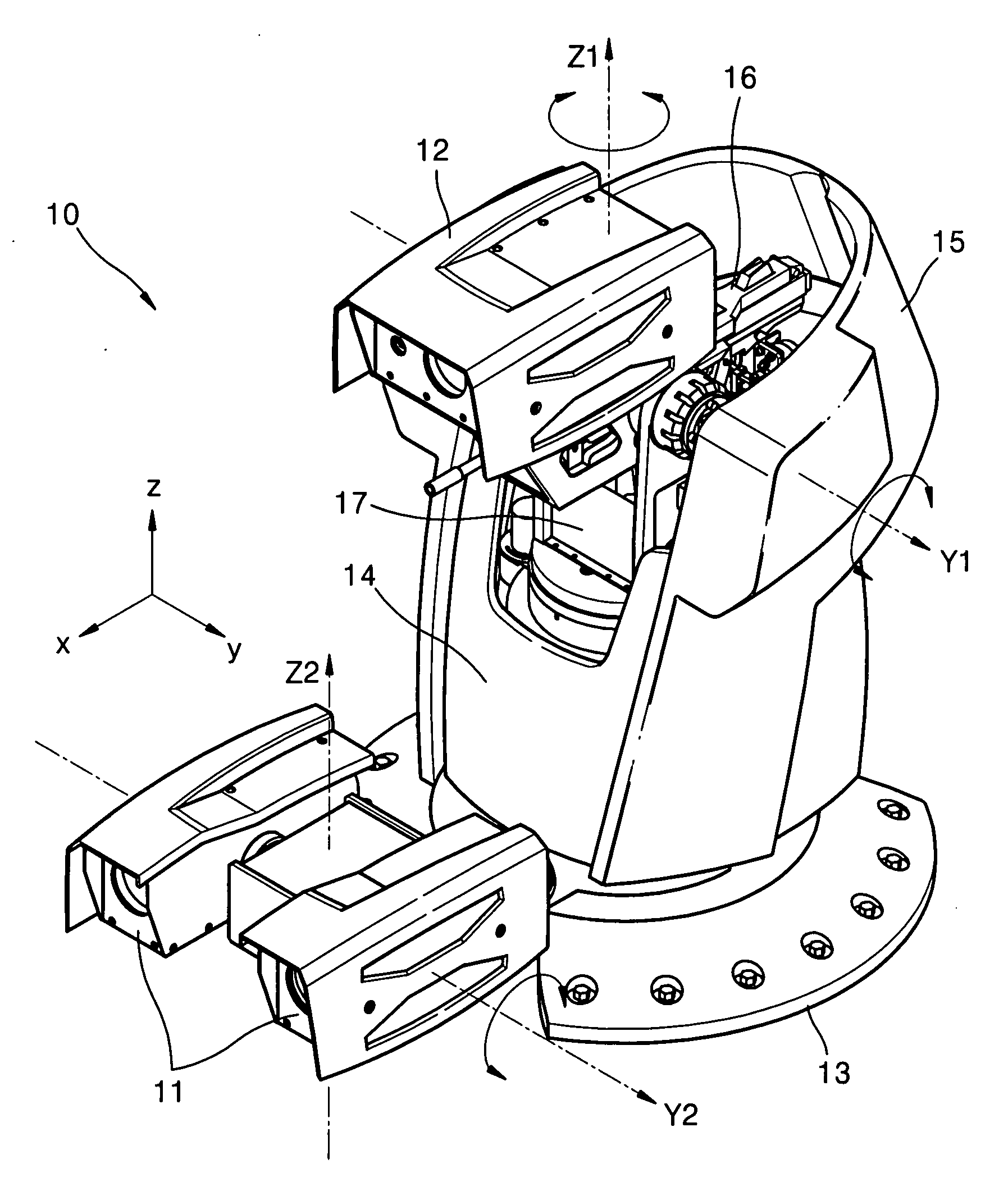

Active camera apparatus and robot apparatus

An outer body shaped as a spherical shell has an opening. A camera located inside the outer body receives images from outside the outer body through the opening. A camera support unit located inside the outer body supports the camera so that it can rotate about a first axis and a second axis that intersect each other at the center of the outer body. A first camera actuator inside the outer body rotates the camera about the first axis, and a second camera actuator inside the outer body rotates the camera about the second axis.

Owner:KK TOSHIBA

Adaptive camera and illuminator eyetracker

Active | US8339446B2 | Increase probability; Power dissipation; Printers; Projectors; Imaging quality; Active camera

Owner:TOBII TECH AB

Active camera apparatus and robot apparatus

Owner:KK TOSHIBA

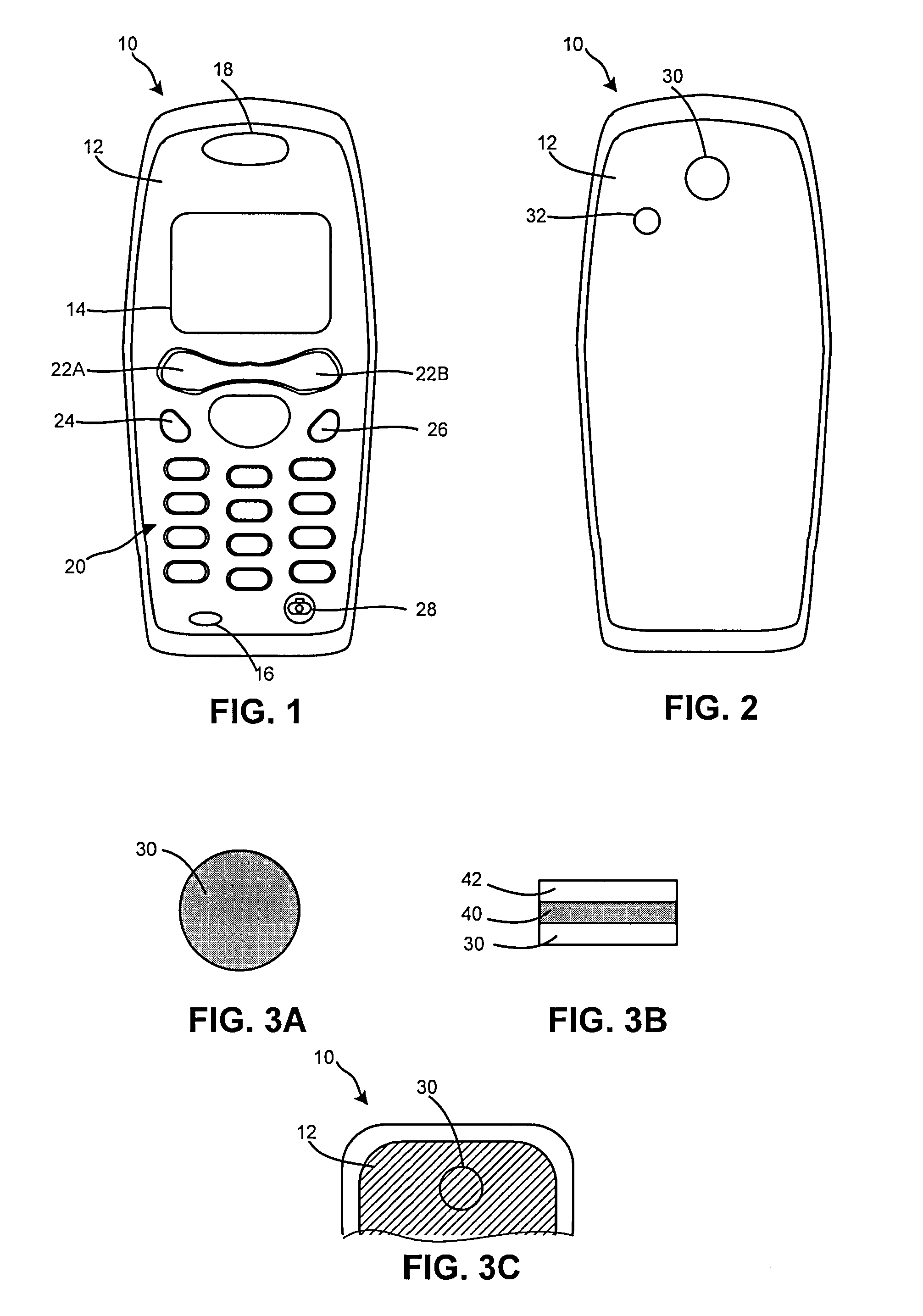

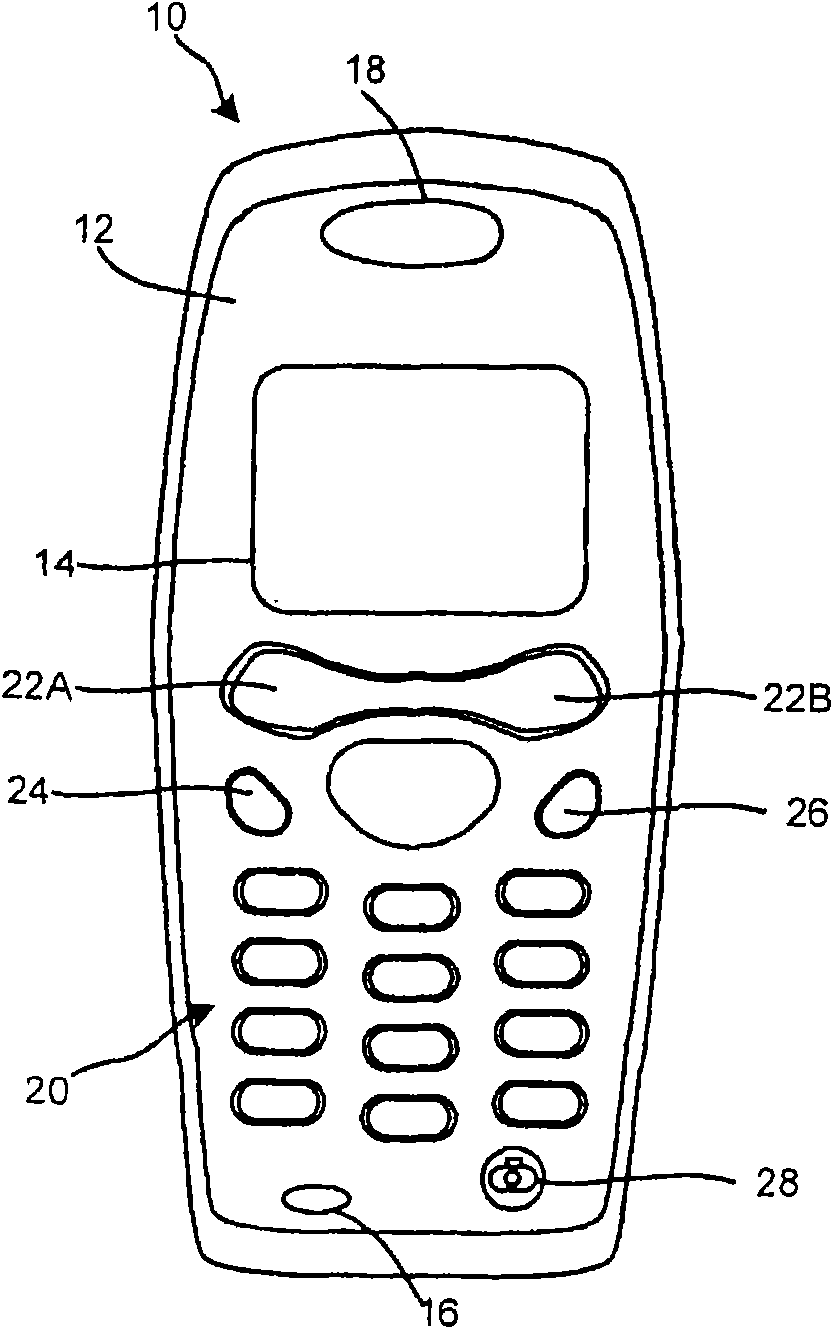

Thin active camera cover for an electronic device

Inactive | US20080304819A1 | Sacrificing size; Sacrificing convenience; Television system details; Substation equipment; Camera lens; Active camera

An electronic device includes camera circuitry for carrying out a camera-related operation, the camera circuitry including a camera lens that is at least partially covered by a visual indicator element. The visual indicator element is substantially opaque when the camera circuitry is in a first state (e.g., an "off" state) and substantially transparent when the camera circuitry is in a second state (e.g., an "on" state). Optionally, an audible and/or visual indication may also be output to the user when the camera circuitry is activated.

Owner:SONY ERICSSON MOBILE COMM AB

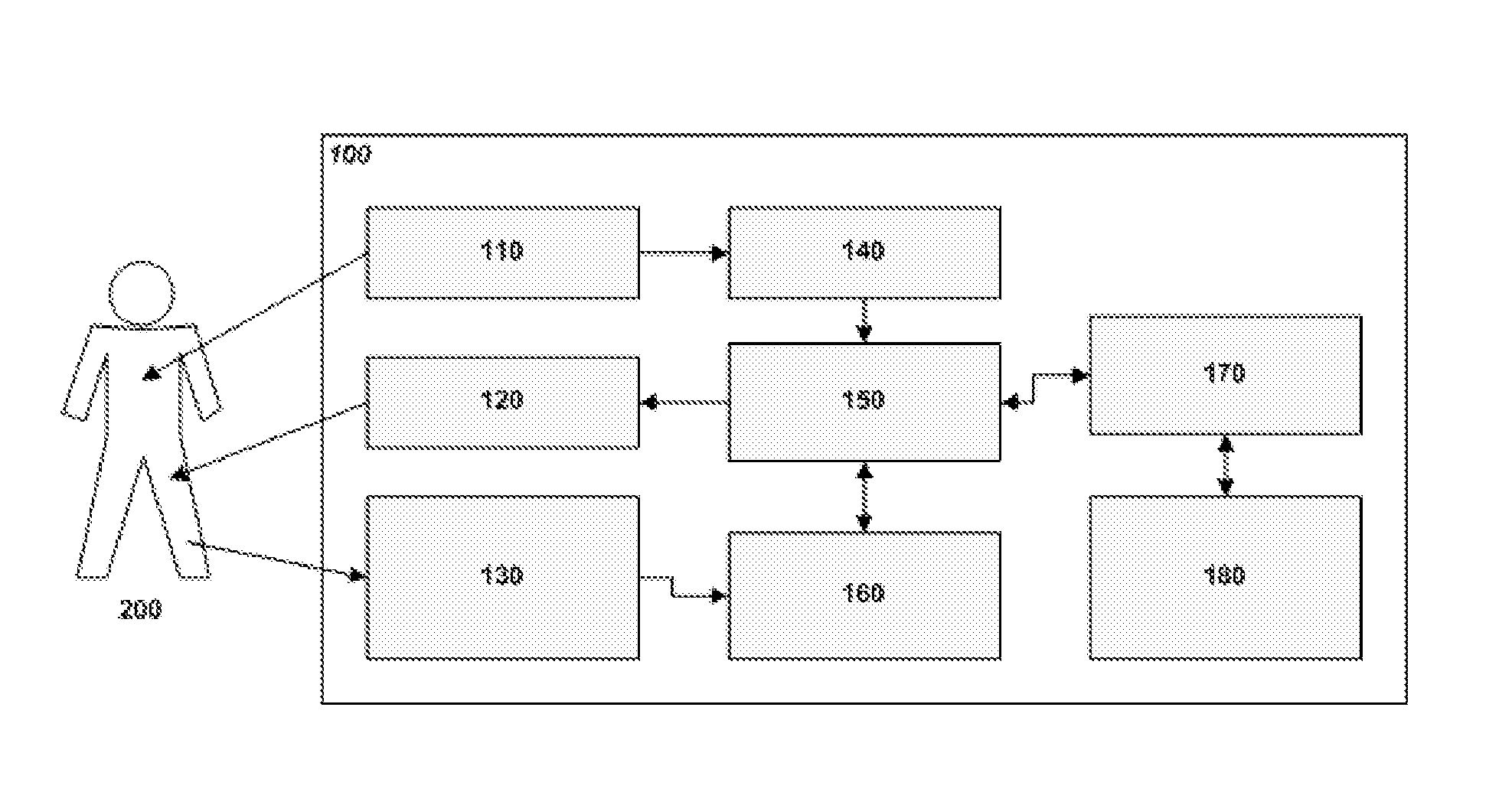

Functional optical coherent imaging

Active | US20110169978A1 | Improve spatial resolution; Good statistical confidence; Television system details; Diagnostics using light; Statistical analysis; Body area

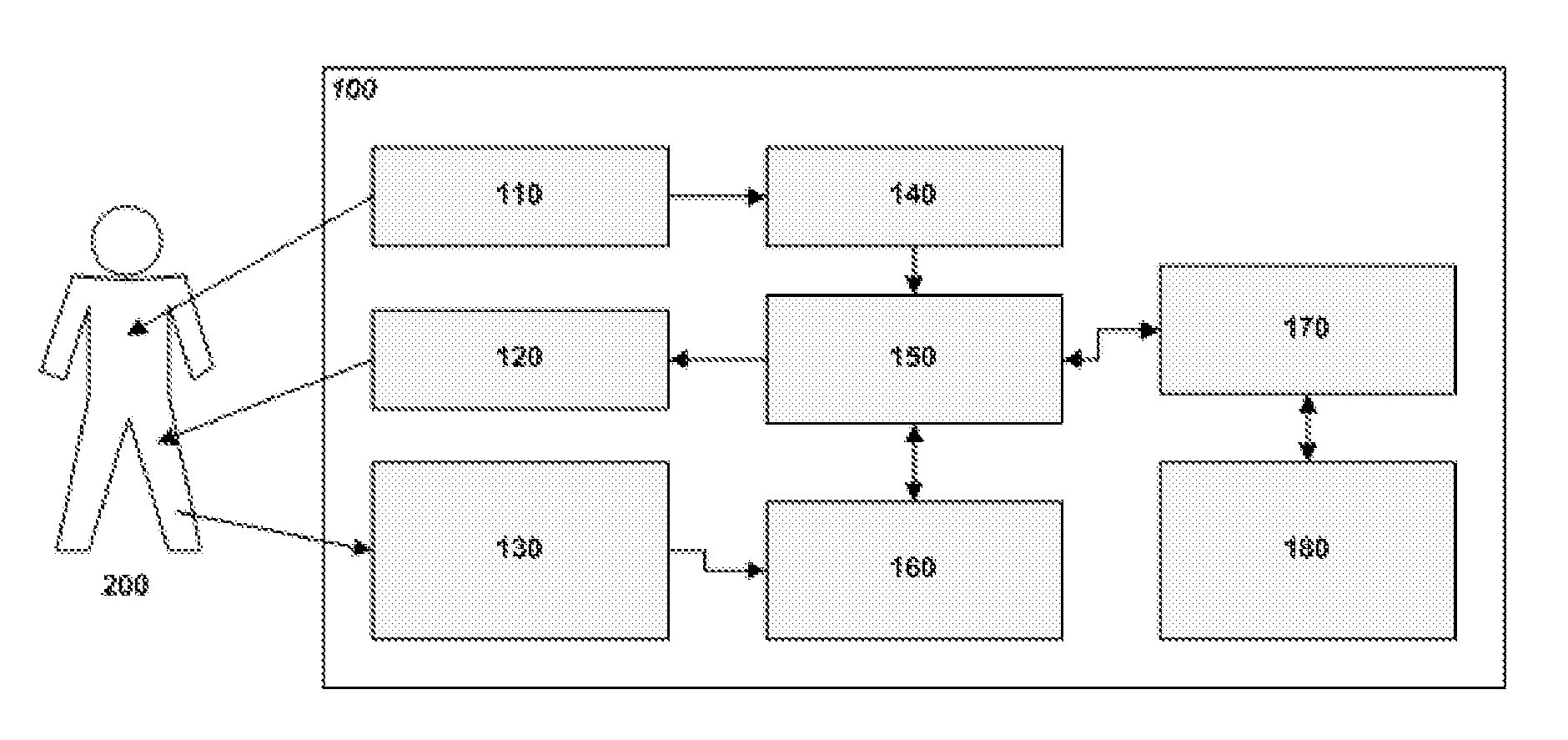

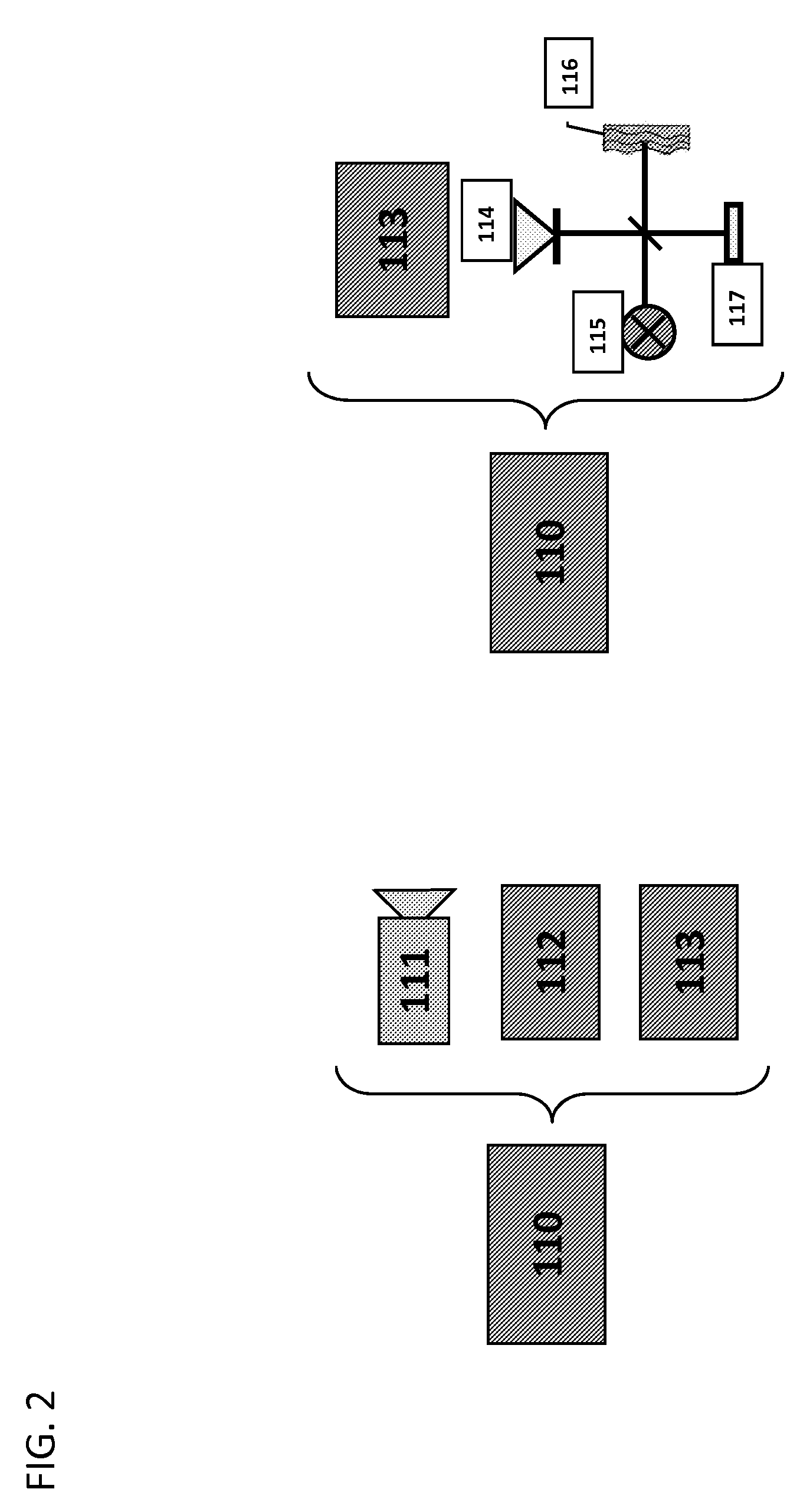

The present invention relates to a functional optical coherent imaging (fOCI) platform comprising: at least one active camera unit (ACU) comprising a coherent and/or partially coherent light source and means for spectral filtering and imaging a selected body area of interest; an image processing unit (IPU) for pre-processing data received from an ACU; at least one stimulation unit (STU) transmitting a stimulation to a subject; at least one body function reference measurement unit (BFMU); a central clock and processing unit (CCU), interconnected with the ACU, the IPU and the STU, for collecting, in a synchronized manner, pre-processed data from the IPU, stimuli from the STU and body function reference data from the BFMU; a post-processing unit (statistical analysis unit, SAU); and an operator interface (HOD). The invention further relates to a process for acquiring stimulus-activated subject data, comprising the steps of: aligning a body function unit on a subject and monitoring pre-selected body functions; selecting a stimulus or stimuli; imaging a body area of interest; applying one stimulus or a series of stimuli to the subject; imaging the body area of interest synchronously with said stimuli and the pre-selected body functions; and transferring the synchronized image, stimulus and body function data to the statistical analysis unit (SAU) and performing calculations to generate results pertaining to body functions.

Owner:ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE (EPFL)

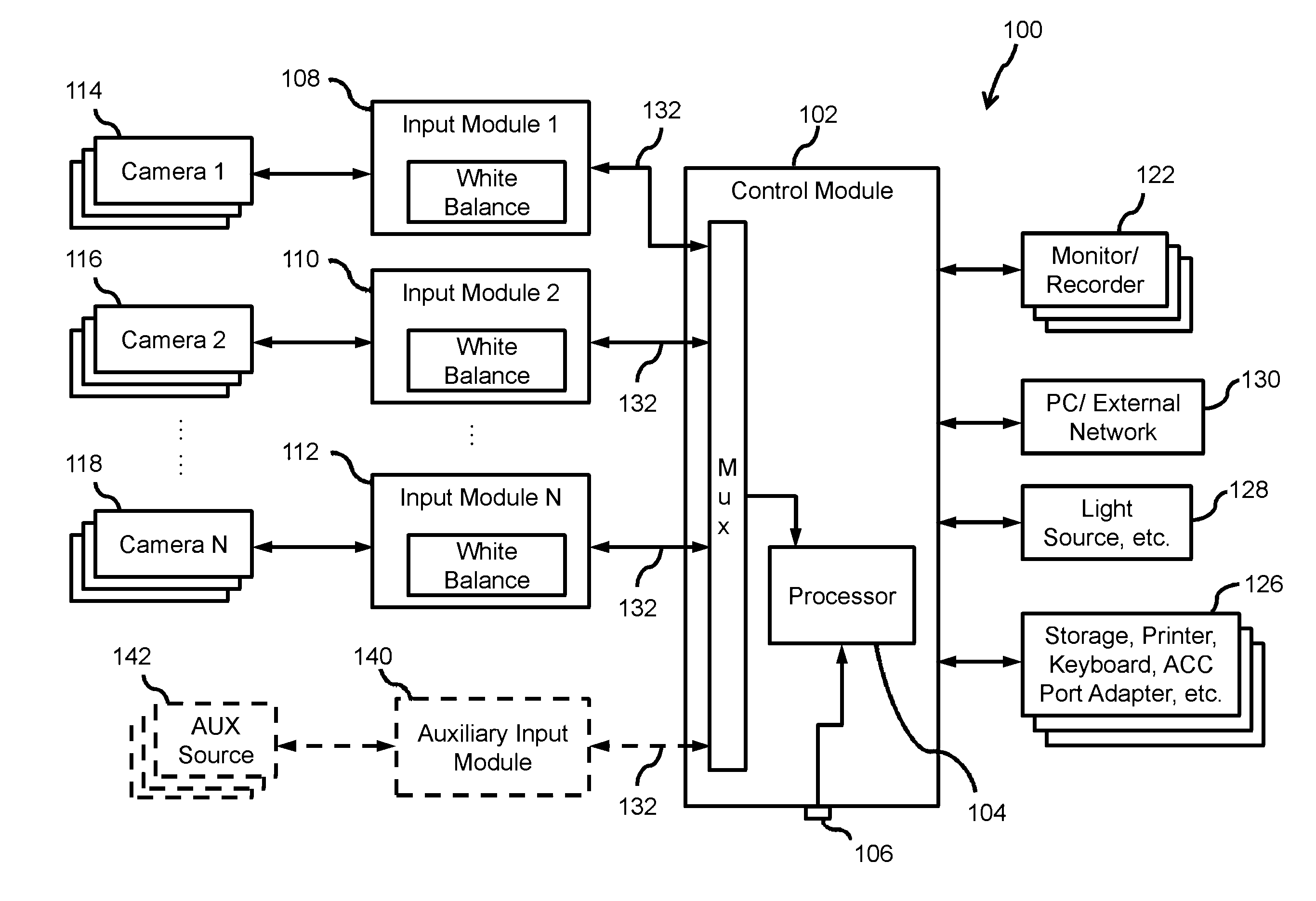

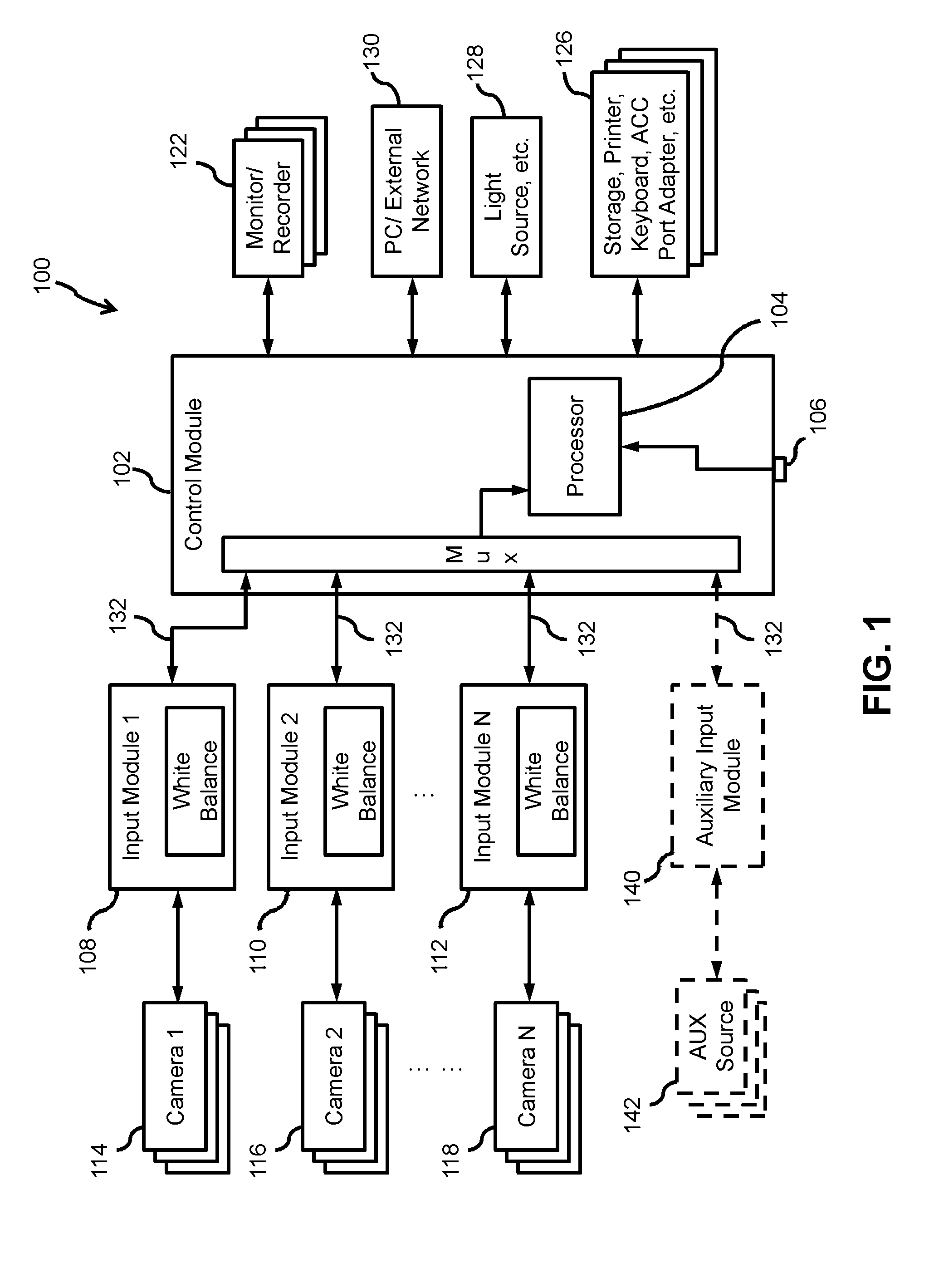

Video Imaging System With Multiple Camera White Balance Capability

Active | US20140184765A1 | Efficient white balance calibration; Efficient and reliable white balance correction; Color signal processing circuits; Endoscopes; Computer module; Active camera

A system for capturing image data including a control module having a control switch and a processor, the processor being adapted for white balance control and the control switch being adapted to initiate white balance control, at least one input module connected to the control module, the input module providing white balance calibration, and at least one camera coupled to the input module. One or more of the at least one input module are configured as active input modules, each active input module having an active camera generating an image signal. The control module detects the one or more active input modules and controls one of the one or more active input modules, selected based on user feedback, to calibrate white balance of the image signal of the active camera coupled to the selected input module and to transmit the calibrated image signal to the control module for display.

Owner:KARL STORZ IMAGING INC

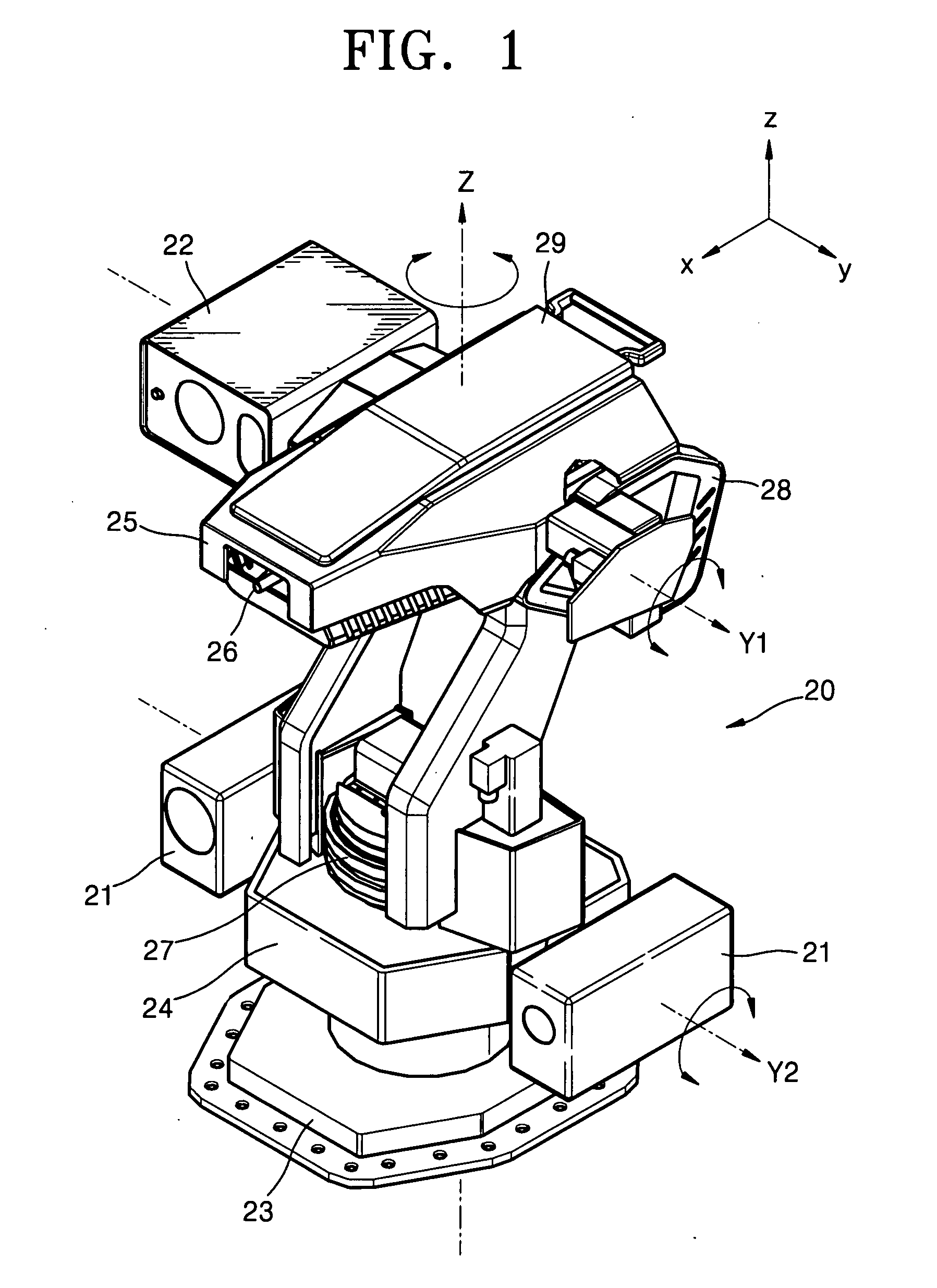

Sentry robot

A monitoring and sentry robot that can perform both wide-area and narrow-area monitoring at short and long ranges and can automatically shoot at a target. The sentry robot includes a base, a main body pivotably installed on the base, a master camera that rotates with the main body, and an active camera mounted on the main body that can rotate left-right and up-down relative to the main body.

Owner:SAMSUNG TECHWIN CO LTD

Industrial robot three-dimensional real-time and high-precision positioning device and method

Active | CN104298244A | High positioning accuracy; Simple and fast operation; Position/course control in three dimensions; Wide field; Multi camera

The invention discloses a device and method for real-time, high-precision three-dimensional positioning of an industrial robot. The device comprises an industrial robot system, an industrial computer and a camera unit. The industrial computer is connected to the industrial robot controller through a first gigabit Ethernet link, and thereby to the industrial robot system; it is connected to the camera unit through a second gigabit Ethernet link. The camera unit is an active camera unit or a multi-camera unit. The method includes the following steps: the position of the camera unit is set according to the positions of a feature point and the actual target point; a mapping between three-dimensional coordinates in robot space and two-dimensional coordinates in camera space is established; the position of the target point in three-dimensional robot space is obtained, and the industrial robot controller sends a command that moves the robot to the target point and positions it there; finally, a check confirms that the robot is positioned on the actual target point. The device and method have the advantages of requiring no calibration, high precision, a wide field of view and high real-time performance.

Owner:Nanjing Heman Robot Automation Co., Ltd. (南京赫曼机器人自动化有限公司)

Object tracking system, method and smart node using active camera handoff

Owner:IND TECH RES INST

Thin active camera cover for an electronic device

An electronic device (10) includes camera circuitry (82) for carrying out a camera-related operation, the camera circuitry including a camera lens (30) that is at least partially covered by a visual indicator element. The visual indicator element (40) is substantially opaque when the camera circuitry is in a first state (e.g., an 'off' state) and substantially transparent when the camera circuitry is in a second state (e.g., an 'on' state). Optionally, an audible and/or visual indication may also be output to the user when the camera circuitry is activated.

Owner:SONY ERICSSON MOBILE COMM AB

Active camera target positioning method based on deep reinforcement learning

Active | CN106373160A | Universal; Versatility; Image analysis; Neural architectures; Pattern recognition; Decision networks

The invention provides a method for positioning a target by actively adjusting a camera in image acquisition applications, and belongs to the fields of pattern recognition and active camera positioning. The method comprises the following steps: a deep neural network for evaluating the camera positioning result is trained; multiple target positioning trials are carried out, and during each trial a deep neural network that fits the reinforcement learning value function is trained and used to determine the quality of seven camera operations: turn up, turn down, turn left, turn right, zoom in, zoom out and no change; a decision network then selects the camera operation according to the image currently acquired by the camera. By using deep reinforcement learning, the method improves image acquisition quality and adapts to different target positioning tasks. It is an autonomous learning positioning method with very little human involvement, in which the active camera learns autonomously to position targets.

Owner:TSINGHUA UNIV
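The seven-action space and the value-based decision rule can be sketched in a few lines. The patent fits the value function with a deep network; the sketch below shows only the surrounding reinforcement learning logic, namely epsilon-greedy action choice and the standard one-step Q-learning target that such a network is commonly regressed toward. Using the evaluation network's score as the reward, the `gamma` and `epsilon` values, and the names `ACTIONS`, `choose_action` and `q_target` are assumptions.

```python
import random
from typing import Sequence

# The seven camera operations listed in the abstract.
ACTIONS = ["turn_up", "turn_down", "turn_left", "turn_right", "zoom_in", "zoom_out", "no_change"]


def choose_action(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy: explore with probability epsilon, otherwise take the best-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    return max(range(len(ACTIONS)), key=lambda a: q_values[a])


def q_target(reward: float, next_q_values: Sequence[float],
             gamma: float = 0.9, done: bool = False) -> float:
    """One-step Q-learning target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    return reward if done else reward + gamma * max(next_q_values)
```

During training `epsilon` is typically decayed so the camera explores early and exploits the learned values later; at deployment it can be set to 0.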

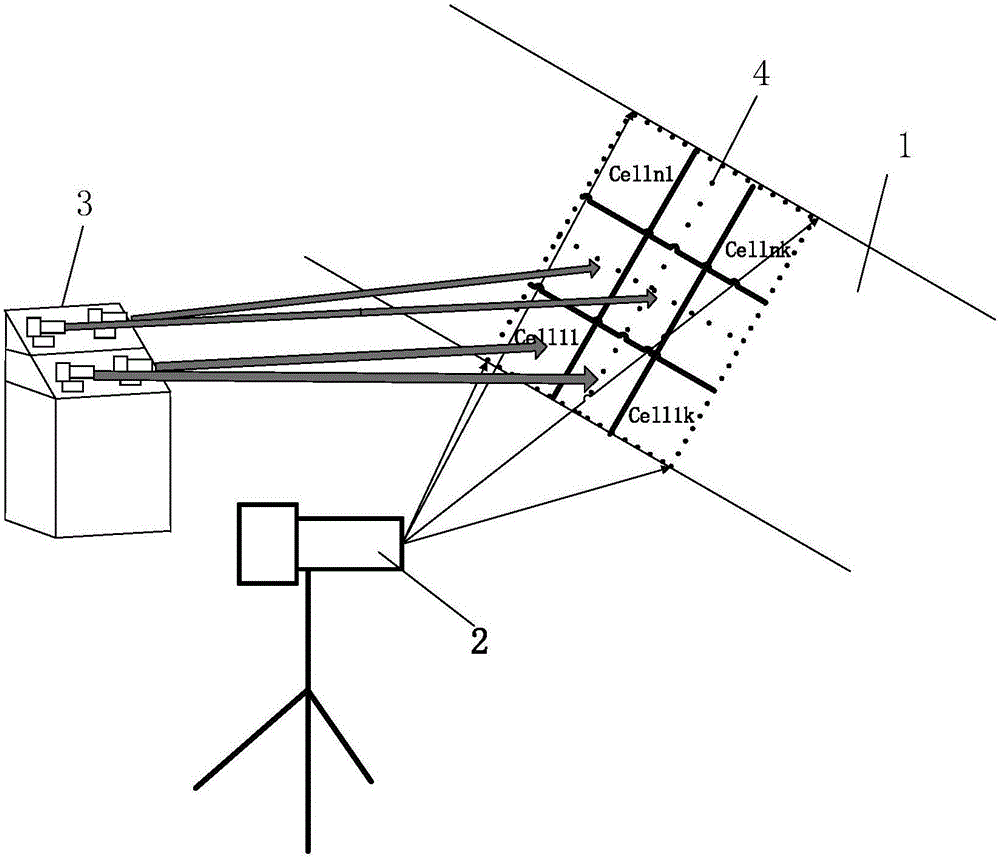

Complex water area target tracking method based on AIS (automatic identification system) and active cameras

The present invention discloses a method for tracking targets in complex water areas based on an AIS (automatic identification system) and active cameras. The method comprises the following steps: (1) the observation area is divided into n cells, and each camera of an active camera group is calibrated separately for each cell to obtain the cameras' intrinsic and extrinsic parameters; (2) the AIS is used to calibrate the cells in advance and the calibration information is stored; after a target object enters a cell, the cell in which it is located is determined from the position information returned by the AIS; (3) the active camera group, which contains m active cameras, is moved rapidly toward the cell where the target is located; and (4) a clear video image of the target object is captured, and the target object is tracked using a tracking algorithm. The method provides a new approach to image capture: by dividing the area into cells and combining the AIS with the cells, it determines the position and heading of the target object.

Owner:WUHAN UNIV OF TECH
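Steps (1) to (3) amount to a lookup from an AIS position report to a pre-calibrated cell, and from the cell to stored camera settings. A minimal sketch, assuming a rectangular observation area divided into a regular rows × cols grid in latitude and longitude; the grid layout, `cell_index`, `PRESETS`, `aim_group` and the preset values are hypothetical, and a real calibration would store per-camera intrinsic and extrinsic parameters rather than bare pan/tilt/zoom triples.

```python
from typing import Dict, Optional, Tuple

PTZ = Tuple[float, float, float]  # (pan degrees, tilt degrees, zoom factor)


def cell_index(lat: float, lon: float, south: float, west: float,
               north: float, east: float, rows: int, cols: int) -> Optional[int]:
    """Map an AIS-reported position to a cell number in a rows x cols grid, or None if outside."""
    if not (south <= lat <= north and west <= lon <= east):
        return None
    r = min(int((lat - south) / (north - south) * rows), rows - 1)  # clamp the north edge
    c = min(int((lon - west) / (east - west) * cols), cols - 1)     # clamp the east edge
    return r * cols + c


# Hypothetical presets recorded during calibration: cell -> {camera id: PTZ setting}.
PRESETS: Dict[int, Dict[str, PTZ]] = {
    0: {"cam1": (-20.0, -5.0, 2.0), "cam2": (-15.0, -4.0, 2.5)},
    3: {"cam1": (25.0, -3.0, 4.0), "cam2": (30.0, -2.0, 4.5)},
}


def aim_group(cell: Optional[int]) -> Dict[str, PTZ]:
    """Return the PTZ settings that point every camera in the group at `cell`."""
    return PRESETS.get(cell, {}) if cell is not None else {}
```

Because the presets are computed ahead of time, moving the whole camera group reduces to a table lookup once the AIS report arrives, which is what makes the rapid repositioning in step (3) feasible.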

Functional optical coherent imaging

Active | US9066686B2 | Improve spatial resolution; Good statistical confidence; Television system details; Diagnostics using light; Main processing unit; Active camera

Owner:ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE (EPFL)

System and method for locating a target with a network of cameras

The present invention relates to a system, a method and an intelligent camera for tracking at least one target (X) with at least one intelligent camera (S) comprising data processing means (S1) implementing at least one target tracking algorithm (AS, AD, AF), image acquisition means (S2) and communication means (S21). It is characterized in that, after at least one target (X) is detected in the covered region (Z) by at least one detection algorithm (AD) at an initial instant, each subsequent instant (t) involves an iteration of at least one step of tracking the target (X) with at least one camera, termed the active camera, using at least one variational filtering algorithm (AF) based on a variational filter that estimates (551) the position of the target (X) as a continuous mixture of Gaussians.

Owner:UNIVERSITY OF TECHNOLOGY OF TROYES

Systems and methods for interleaving multiple active camera frames

Active | US20180262740A1 | Acquiring/recognising eyes; Electromagnetic wave reradiation; Active camera; Throughput

A system for three-dimensional imaging includes a plurality of three-dimensional imaging cameras. A first camera and a second camera may have integration times that are interleaved with the readout time of the other camera to limit interference while increasing framerate and throughput.

Owner:MICROSOFT TECH LICENSING LLC
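The interleaving idea can be checked with a small timeline model in which camera B integrates while camera A reads out, and vice versa. A sketch under stated assumptions: two cameras, a fixed integration and readout time per frame, and the premise that interference arises only when integration windows overlap; `interleaved_schedule`, `integrations_overlap`, `combined_fps` and the example timings are hypothetical.

```python
from typing import List, Tuple

Window = Tuple[str, float, float]  # (camera, integration start, integration end)


def interleaved_schedule(integration: float, readout: float, frames: int) -> List[Window]:
    """Integration windows for cameras A and B, each integrating during the other's readout."""
    if integration > readout:
        raise ValueError("integration must fit inside the other camera's readout")
    period = integration + readout  # one frame per camera per period
    windows: List[Window] = []
    for k in range(frames):
        a_start = k * period
        windows.append(("A", a_start, a_start + integration))
        b_start = a_start + integration  # B starts integrating as A begins reading out
        windows.append(("B", b_start, b_start + integration))
    return windows


def integrations_overlap(windows: List[Window]) -> bool:
    """True if any two integration windows overlap (touching endpoints are allowed)."""
    ordered = sorted(windows, key=lambda w: w[1])
    return any(nxt[1] < cur[2] for cur, nxt in zip(ordered, ordered[1:]))


def combined_fps(integration_ms: float, readout_ms: float) -> float:
    """Total frames per second delivered by both cameras together."""
    return 2 * 1000.0 / (integration_ms + readout_ms)
```

With 5 ms integration and 5 ms readout, each camera alone delivers 100 frames per second, and the interleaved pair delivers 200 without any two exposures overlapping.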

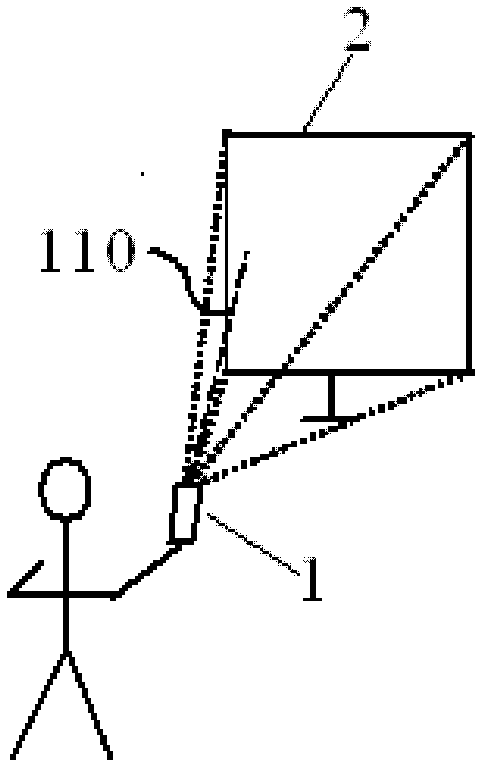

Intelligent remote controlled television system and remote control method thereof

Inactive | CN102984563A | Easy to operate; Improve interactivity; Selective content distribution; Television system; Remote control

The present invention relates to an intelligent remote-controlled television system and a remote control method for it. The television system comprises a remote control device and a television; the remote control device includes a remote control communication module, and the television includes a television communication module, a screen display module and an application module. The remote control device further includes a main control module, an active camera, a storage module, and a video processing and analysis module; the television further includes a cursor-mode interface support module. The video processing and analysis module detects and analyzes the position of the television screen in the image captured by the active camera, and the position and orientation of the remote control device relative to the television are mapped to a cursor position on the television screen. The cursor-mode interface support module in the television receives this cursor position information and executes the corresponding actions, so that the television can be controlled in cursor mode using a single (monocular) camera.

Owner:FUJITSU SEMICON SHANGHAI

Three-eye depth obtaining camera with adjustable optical axes

Inactive | CN107147891A | High measurement accuracy; Increase or decrease feature points; Stereoscopic systems; Optical axis; Angular degrees

The invention discloses a trinocular depth acquisition camera with adjustable optical axes. Two independent camera modules are arranged on the left and right sides, and an active depth camera is arranged on top. The left and right modules are driven to rotate synchronously, and their angle is output automatically. The left and right cameras are mated through a threaded shaft hole, and a further code disc indicates the angle between the two optical axes. The invention uses non-parallel optical axes when calculating depth information; because the optical axes are adjustable, the viewing angle used for depth acquisition becomes a value that can be adjusted to suit the actual situation. By adding an active depth camera, the binocular ranging information is combined with the active depth camera's ranging information, improving the quality of the final depth data.

Owner:ZHEJIANG UNIV
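With non-parallel (verged) optical axes, depth no longer follows the familiar parallel-stereo formula Z = f·b/d, but it can still be recovered by intersecting the two viewing rays. A minimal sketch, assuming two pinhole cameras in the same horizontal plane, placed symmetrically at x = ±b/2 and each rotated inward by the same known angle (as read from the code disc); lens distortion, vertical disparity and fusion with the active depth camera are left out, and `verged_depth` is a hypothetical name.

```python
import math


def verged_depth(u_left: float, u_right: float, focal_px: float,
                 baseline: float, verge_angle: float) -> float:
    """Depth (in baseline units) of a point seen by cameras at x = -b/2 and x = +b/2.

    Each optical axis is rotated inward by `verge_angle` radians; u_left and u_right are
    horizontal image coordinates in pixels, positive to the right of the principal point.
    """
    phi_l = verge_angle + math.atan(u_left / focal_px)    # left ray angle from the z-axis
    phi_r = -verge_angle + math.atan(u_right / focal_px)  # right ray angle from the z-axis
    # Rays: x = -b/2 + z*tan(phi_l) and x = +b/2 + z*tan(phi_r); solve for their intersection.
    denom = math.tan(phi_l) - math.tan(phi_r)
    if denom <= 0:
        raise ValueError("rays do not converge in front of the cameras")
    return baseline / denom
```

With `verge_angle = 0` the expression reduces to z = f·b/(u_left − u_right), the parallel-axis case, which is a quick sanity check on the geometry.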

Power transmission line icing image collection device

Inactive | CN106043724A | Reduced load weight for icing exploration; Improve battery life; Aircraft components; Visibility; Icing conditions

An embodiment of the invention discloses a power transmission line icing image collection device comprising a fixing component and an image collection camera. The fixing component comprises a fixing box that houses the image collection camera, and a support; the support comprises a base platform and supporting seats arranged symmetrically at both ends of the base platform and perpendicular to it. The base platform has a threaded hole for docking with an unmanned aerial vehicle, and the supporting seats are fixedly connected to the fixing box. The image collection camera comprises an active camera set and a standby camera set. The device can inspect the icing condition of a power transmission line in all weather, including low-visibility environments; through its choice of equipment, it effectively reduces the inspection payload of the unmanned aerial vehicle and improves the vehicle's endurance.

Owner:YUNNAN POWER GRID CO LTD ELECTRIC POWER RES INST

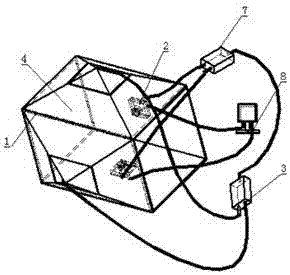

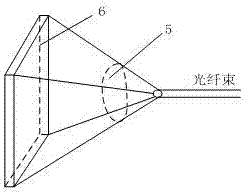

Active camera system for measuring flying state of projectile and measuring method thereof

Inactive | CN107339919A | Negligible impact; Unlimited field of view; Ammunition testing; Time schedule; Active camera

The invention discloses an active camera system for measuring the flight state of a projectile, and a corresponding measuring method. The camera system consists of a laser, a high-speed CCD (charge-coupled device) camera, an outer structural frame, an FPGA (field-programmable gate array) timing controller and a host computer. The light emitted by the laser is split into two parts that serve as background light sources. The FPGA timing controller controls both the laser light sources and the high-speed CCD camera: when the laser fires, the CCD camera starts shooting and keeps exposing for a set exposure time, during which the laser light sources flash multiple times, so that the target's positions at different times are recorded in the same photo. After shooting, the CCD camera transmits the photo to the host computer over a network cable. The system meets the camera's field-of-view requirements as fully as possible and obtains a clear target image while reducing the energy required from the laser light sources.

Owner:XIAN TECHNOLOGICAL UNIV
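Because the laser flashes at known instants within a single exposure, each image of the projectile in the photo is a position sample with a known timestamp, and velocity follows from a straight-line fit. A sketch, assuming the flash times are known from the FPGA schedule, positions have already been extracted from the photo and converted to metres, and the projectile's velocity is roughly constant over the exposure; `fit_velocity` is a hypothetical helper applied to one axis at a time.

```python
from typing import Sequence


def fit_velocity(times: Sequence[float], positions: Sequence[float]) -> float:
    """Least-squares slope of position versus time, i.e. the mean velocity along one axis."""
    n = len(times)
    if n < 2 or n != len(positions):
        raise ValueError("need at least two flashes with matching positions")
    t_mean = sum(times) / n
    p_mean = sum(positions) / n
    num = sum((t - t_mean) * (p - p_mean) for t, p in zip(times, positions))
    den = sum((t - t_mean) ** 2 for t in times)
    if den == 0:
        raise ValueError("flash times must not all be equal")
    return num / den
```

Fitting the horizontal and vertical axes separately gives both velocity components, from which the flight angle follows via `atan2`.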

Cell-based active intelligent sensing device and method

Inactive | CN106846284A | Easy to shoot; The process is convenient and fast; Image enhancement; Image analysis; Imaging processing; Active perception

The invention provides a cell-based active intelligent sensing device comprising a detector, an active camera unit and an image processing unit. The detector detects whether a target object is present in the area to be monitored; the area the detector can cover is called the cell area and is divided into n × k cell units. The active camera unit includes a controller and a plurality of active cameras; each active camera is mounted on a rotating pan-tilt head that adjusts its rotation angle, and the capture range of each active camera equals one cell unit. The controller adjusts the pan-tilt head of the corresponding active camera according to the detector's signal, and the image processing unit combines the images captured by the active cameras. The device can actively sense a target object: the detector's coverage area is divided into sub-areas, and when a target object is present, the calibrated active cameras capture clear images of those sub-areas, making it easy for the active cameras to capture one or more cell units.

Owner:WUHAN UNIV OF TECH

Active vision human face tracking method and tracking system of robot

Active | CN102411368B | Overcome limitations; Guaranteed full scale; Character and pattern recognition; Position/course control in two dimensions; Face detection; Pattern perception

Owner:PEKING UNIV

© 2025 PatSnap. All rights reserved.