417 results about "Visual Objects" patented technology

Visual Objects is an object-oriented computer programming language that is used to create computer programs that operate primarily under Windows. Although it can be used as a general-purpose programming tool, it is almost exclusively used to create database programs.

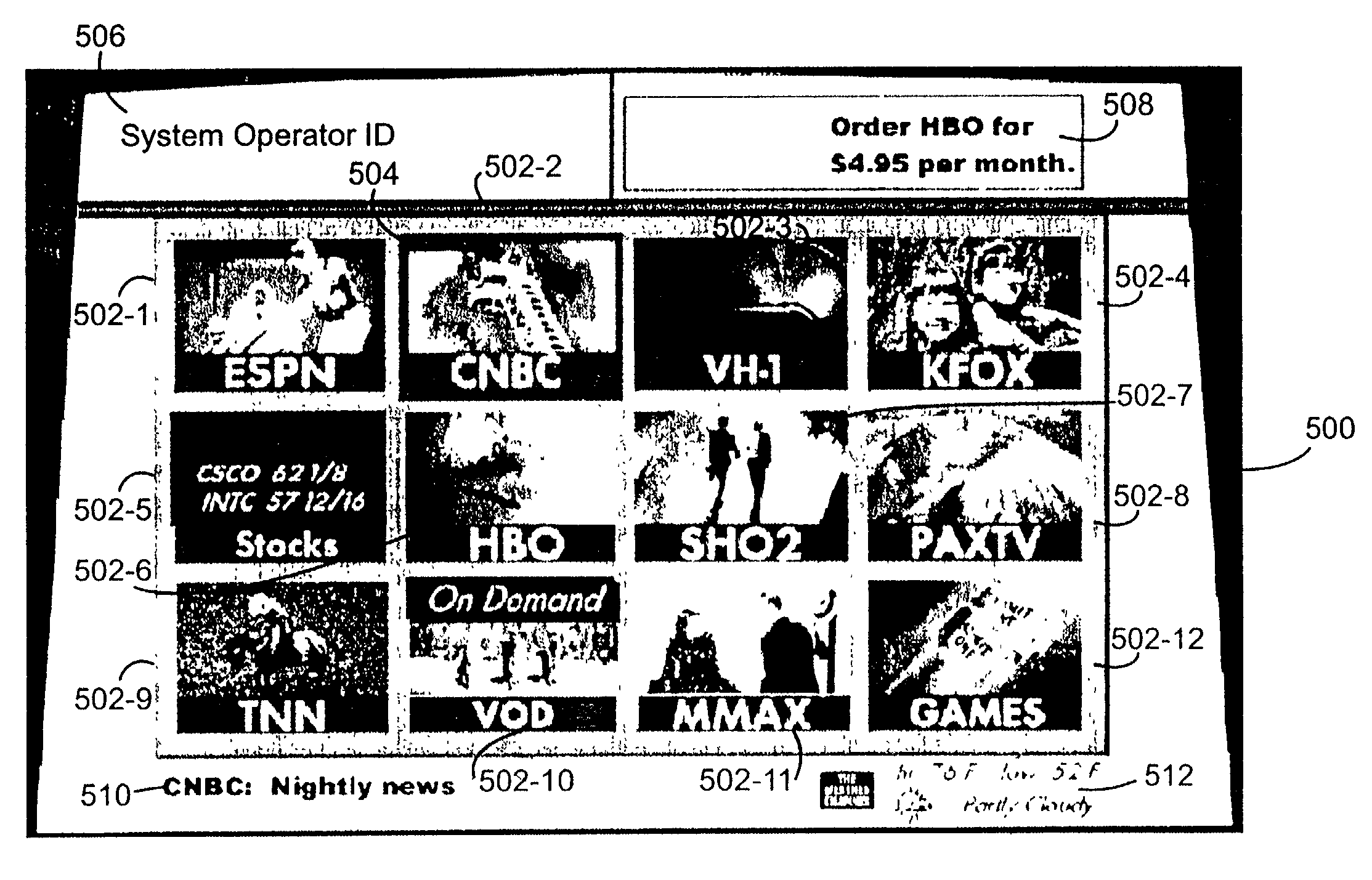

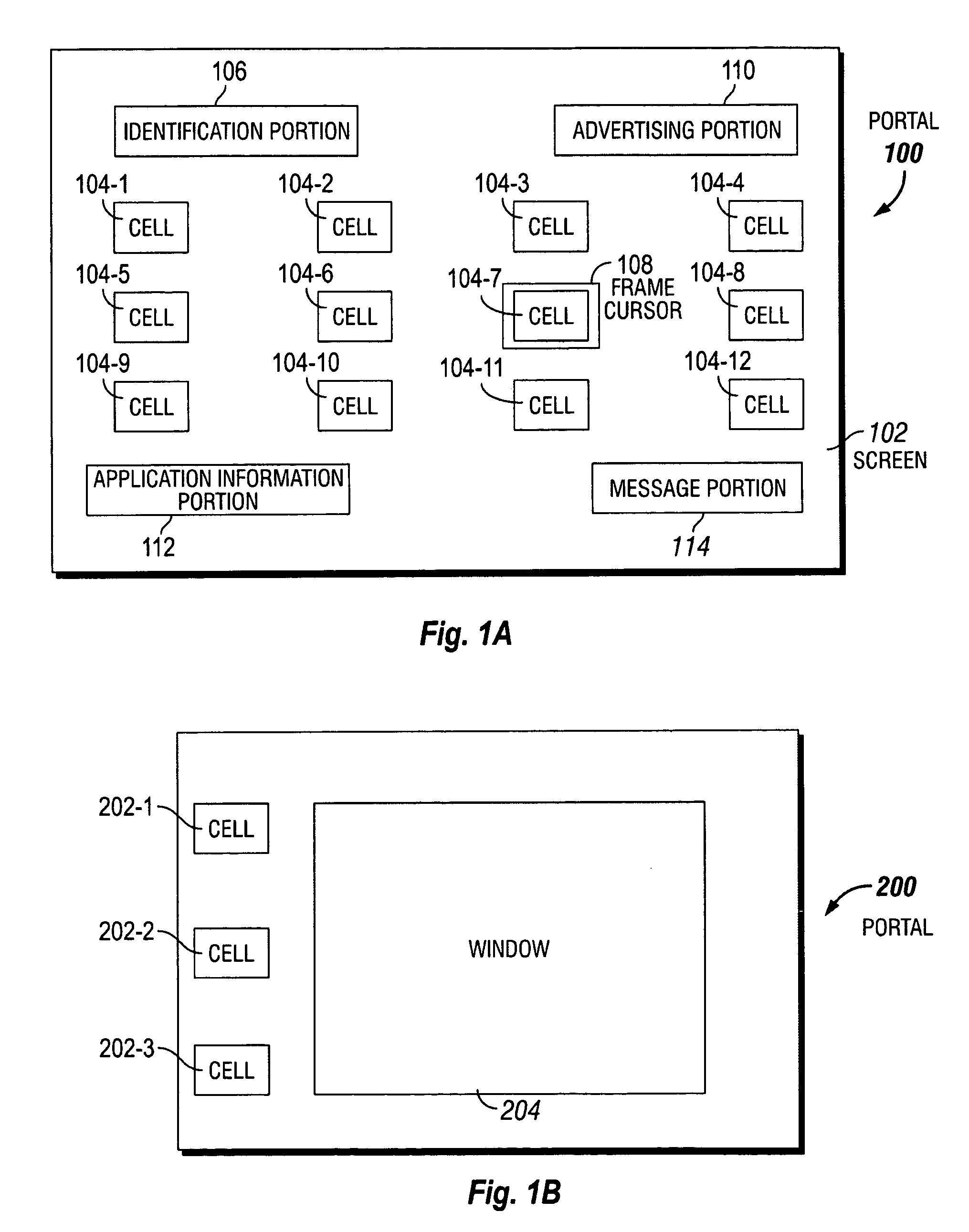

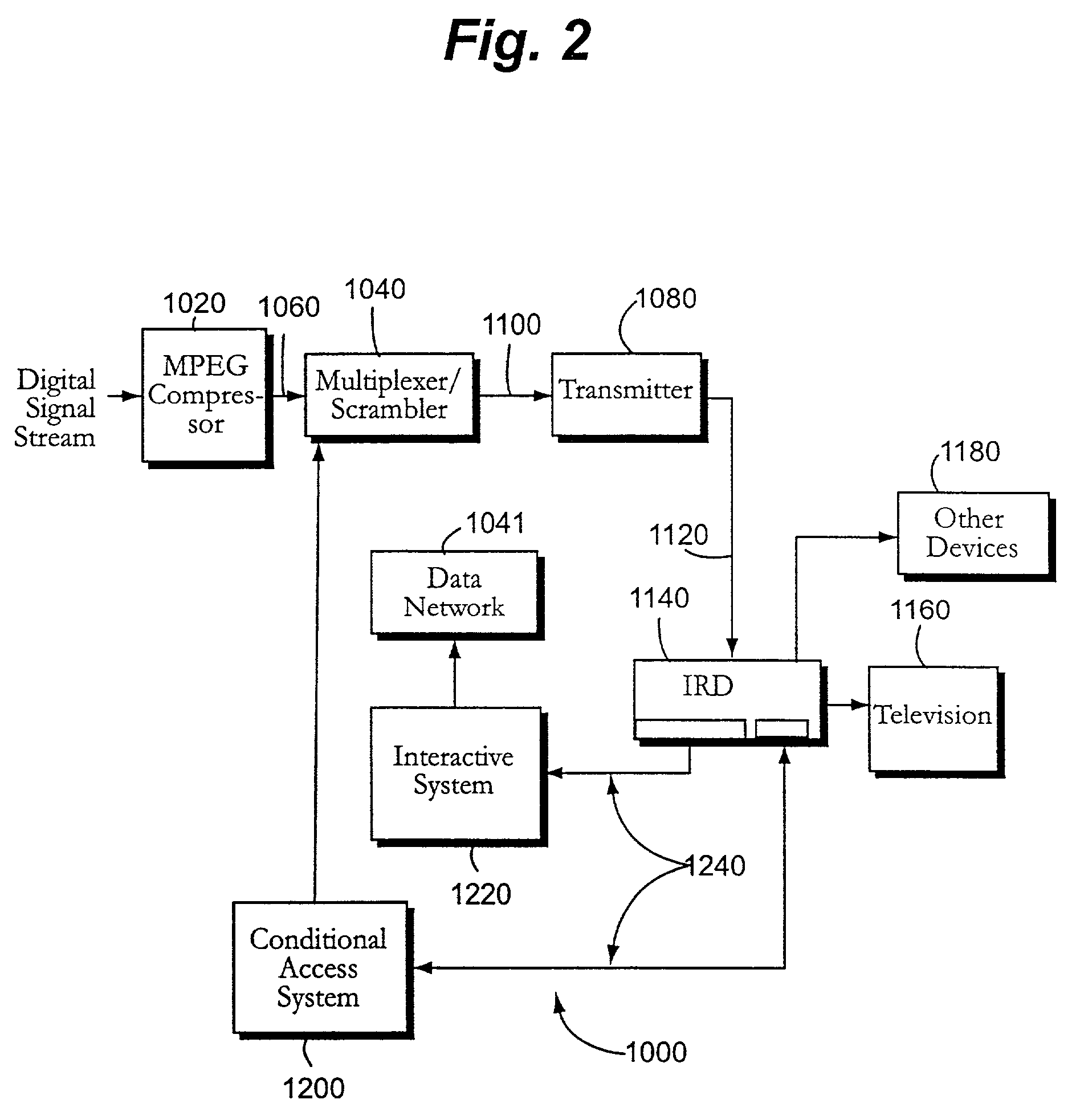

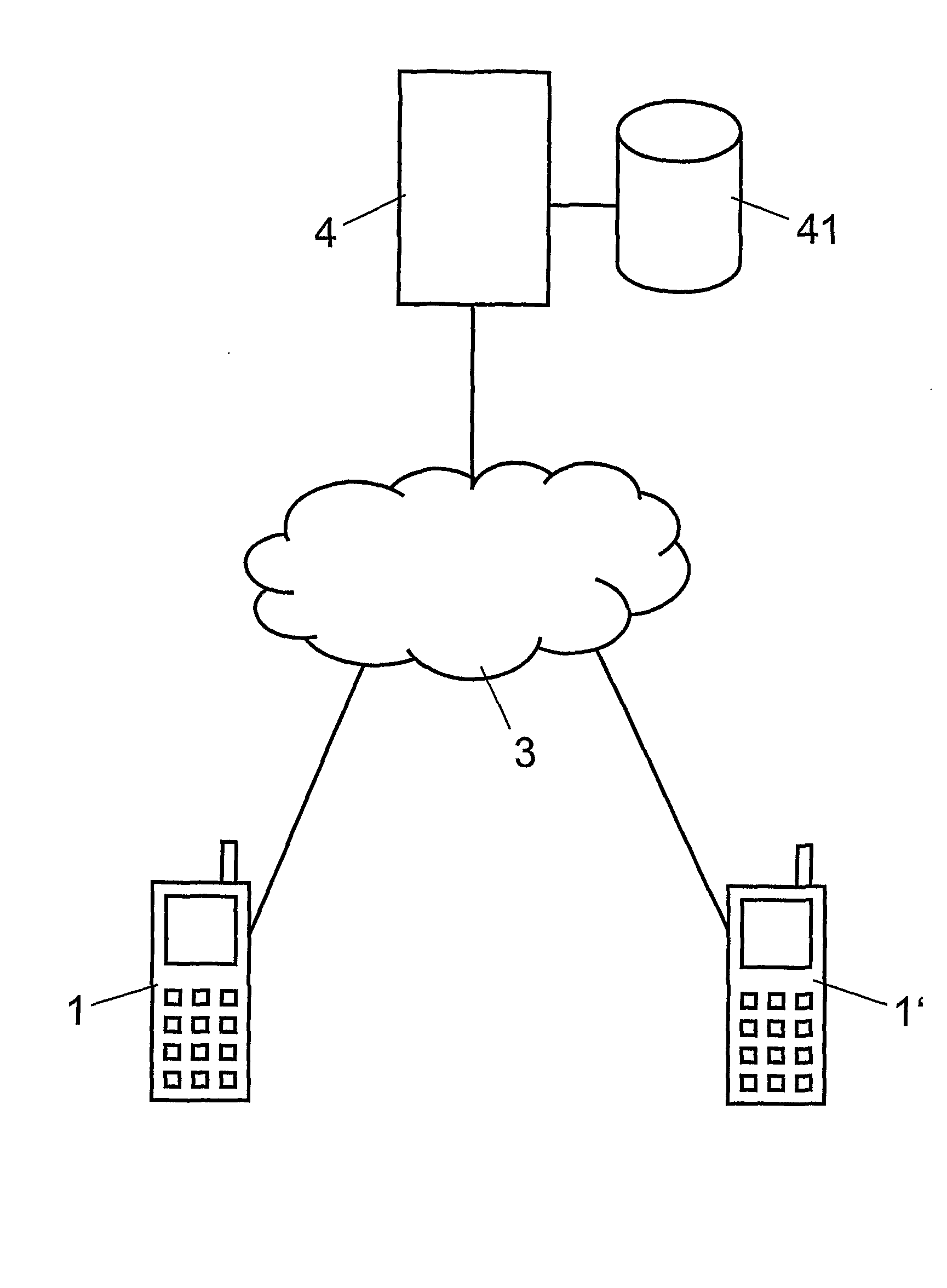

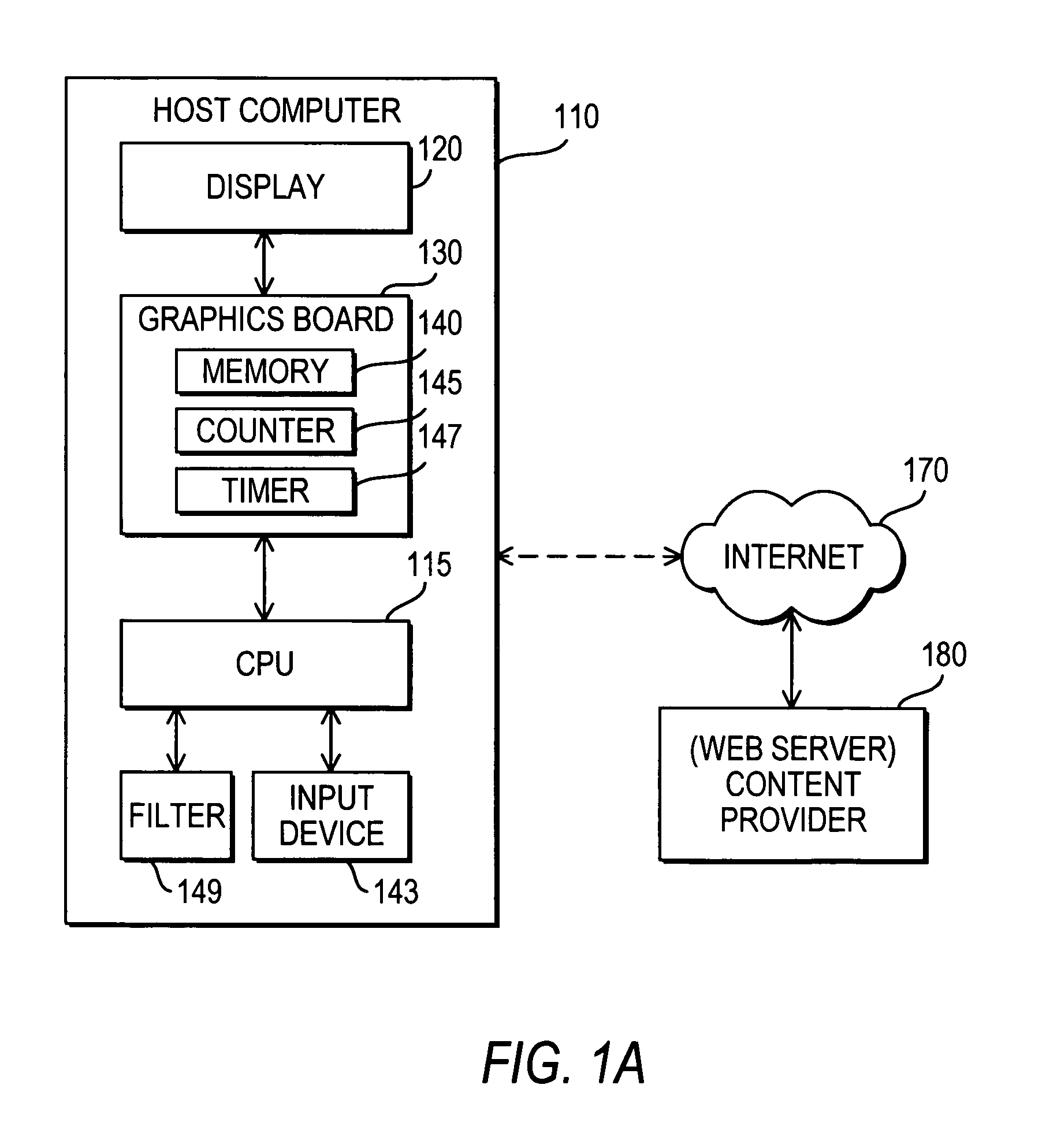

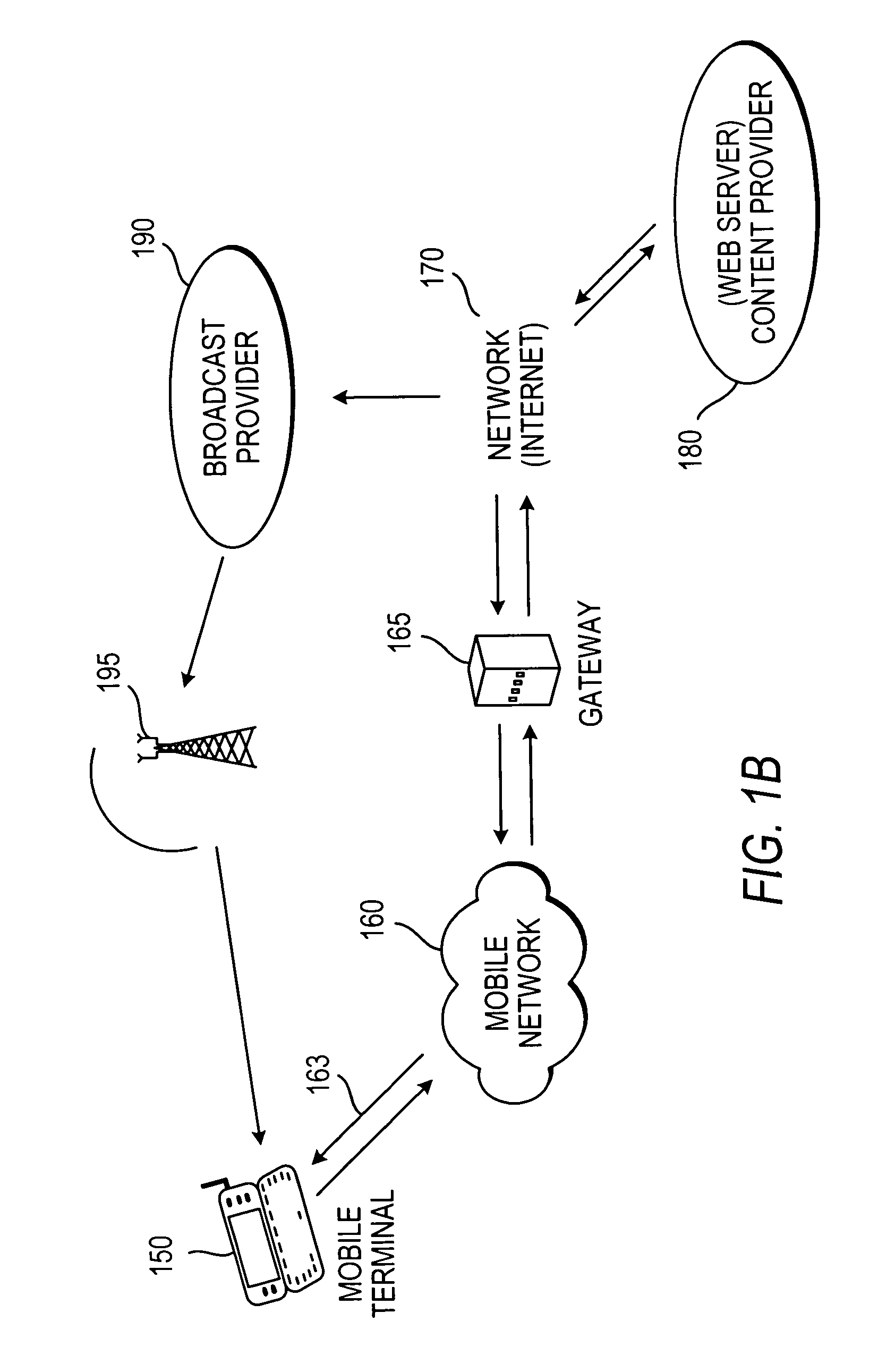

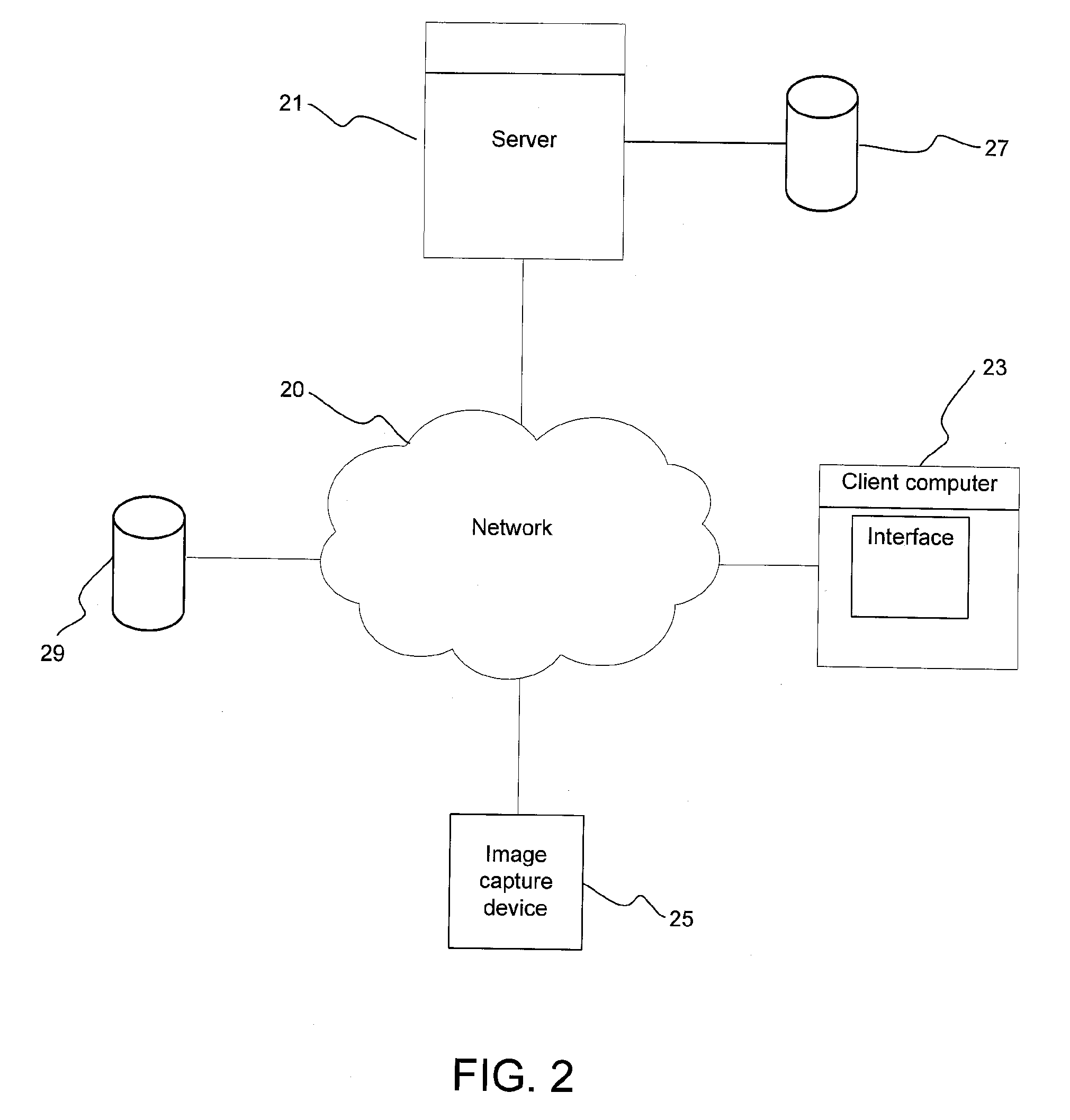

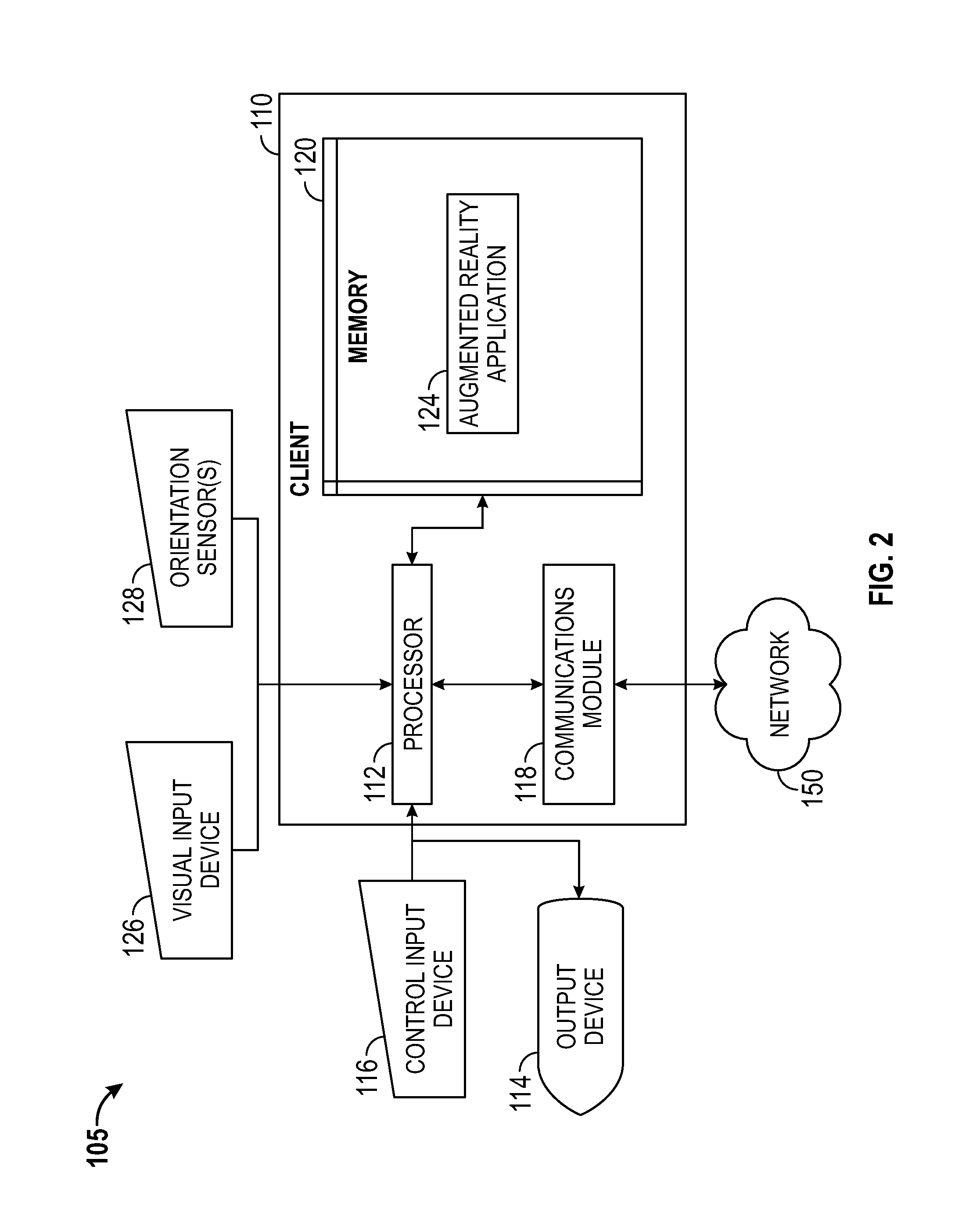

Portal for a communications system

Status: Inactive | Patent No.: US7174512B2 | Tags: Television system details; Recording carrier details; Communications system; User input

A portal for a communications system that includes a remote terminal connected via a communications network to a broadcast center. The portal includes a display connected to the remote terminal for displaying an arrangement of cells, each cell including a visual object and an underlying application. A user input device enables user inputs to select one of the cells. The portal provides simple and intuitive access to the wide variety of services currently offered, and to be offered in the future, in communications systems.

Owner:INTERDIGITAL MADISON PATENT HLDG

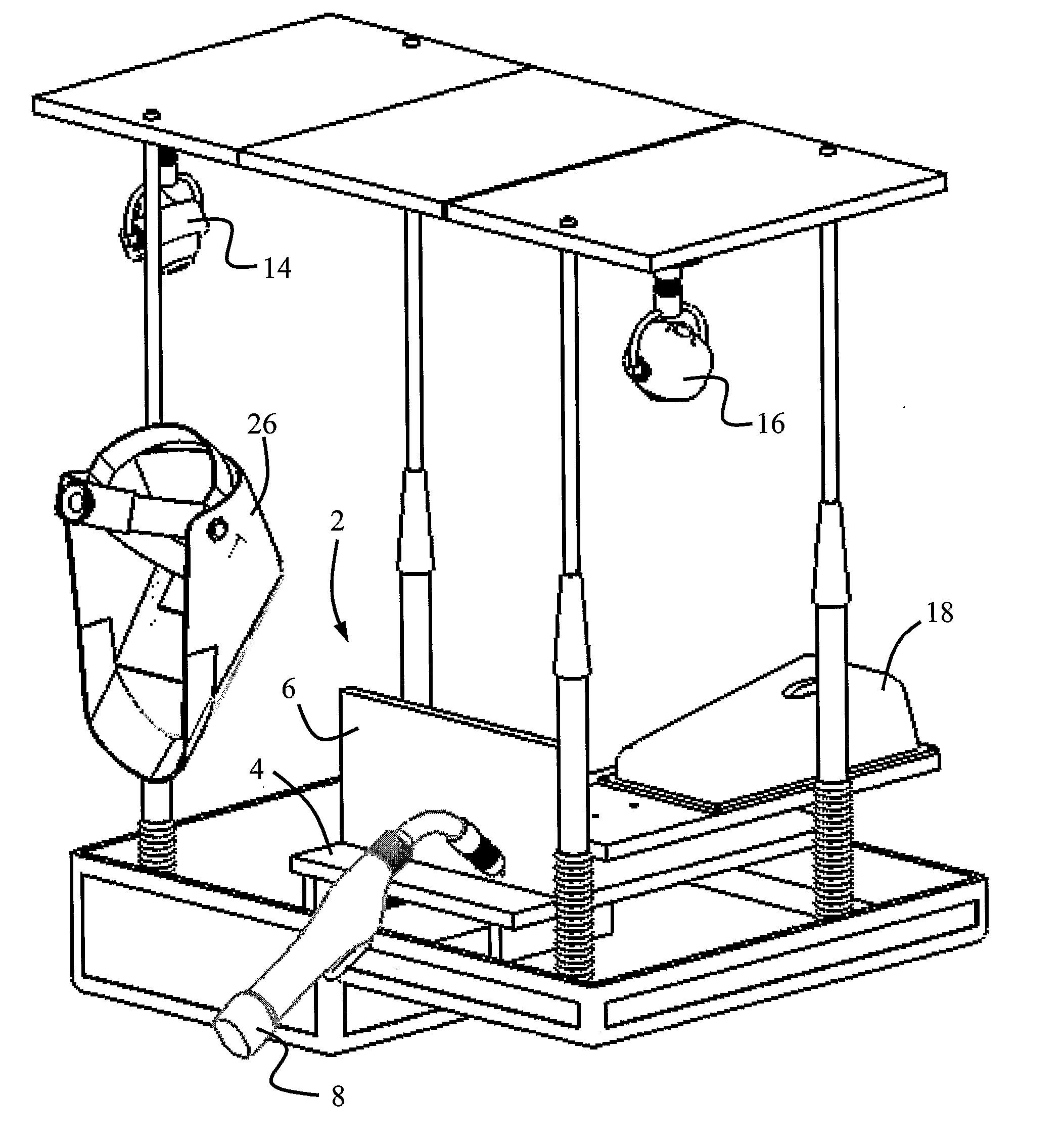

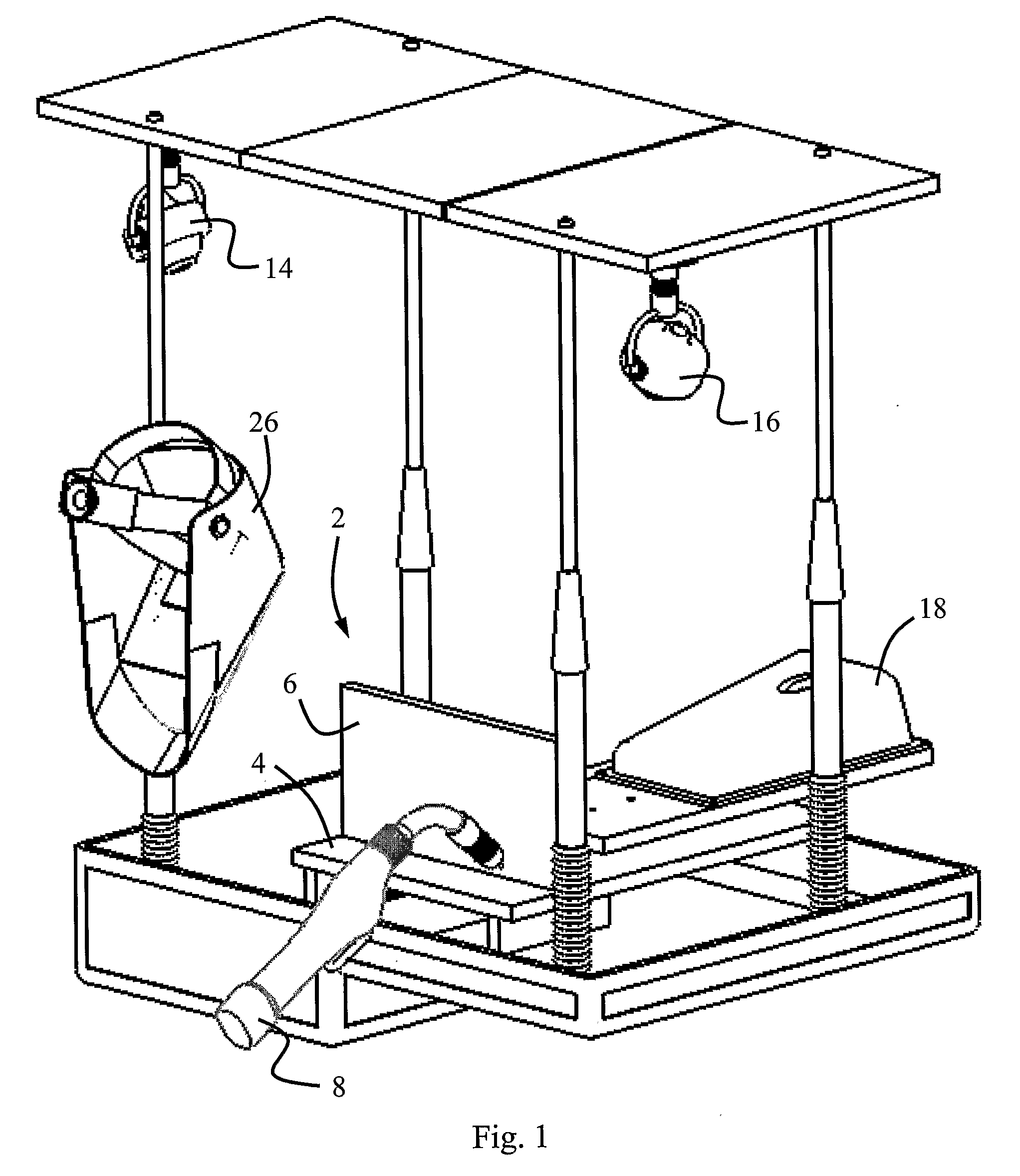

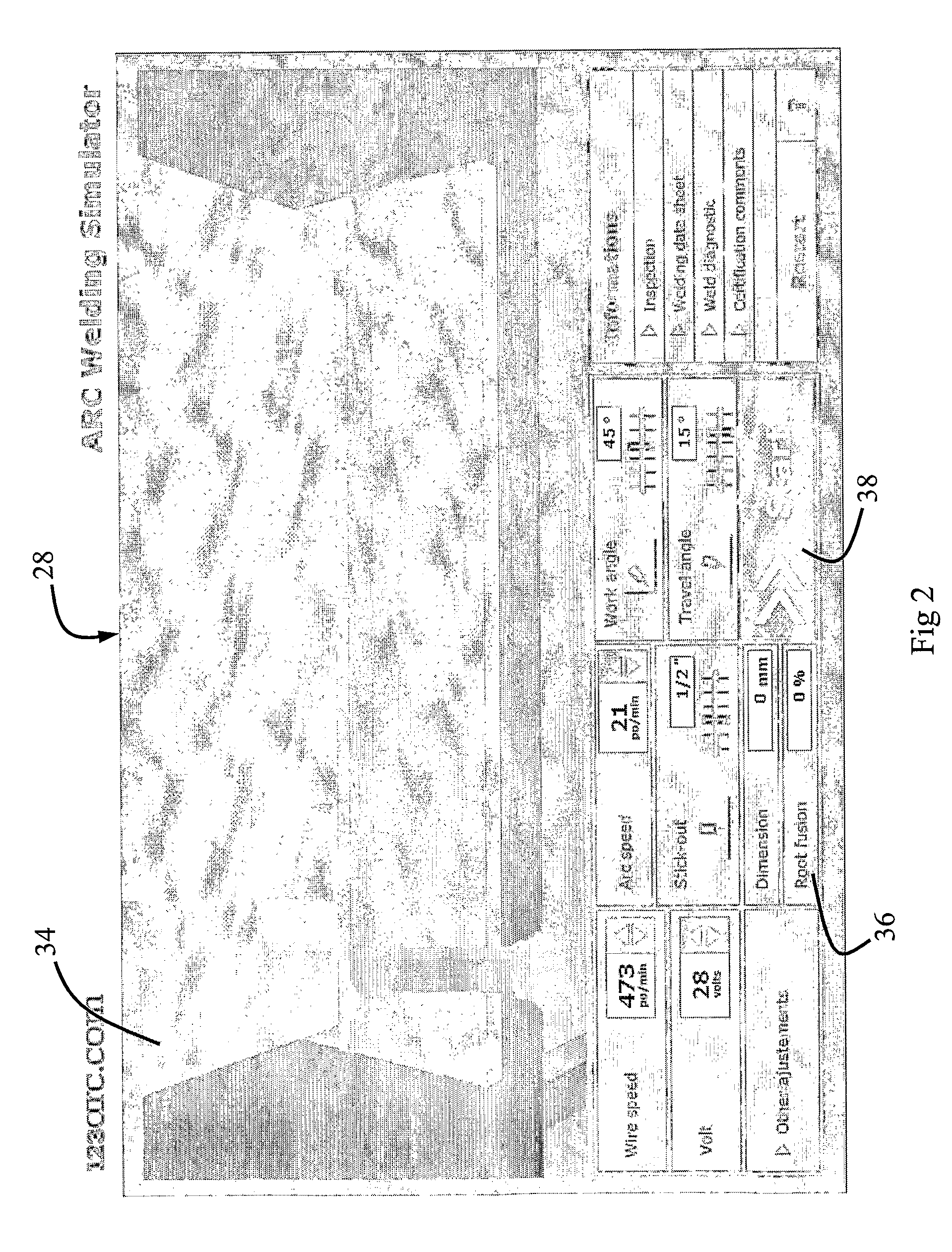

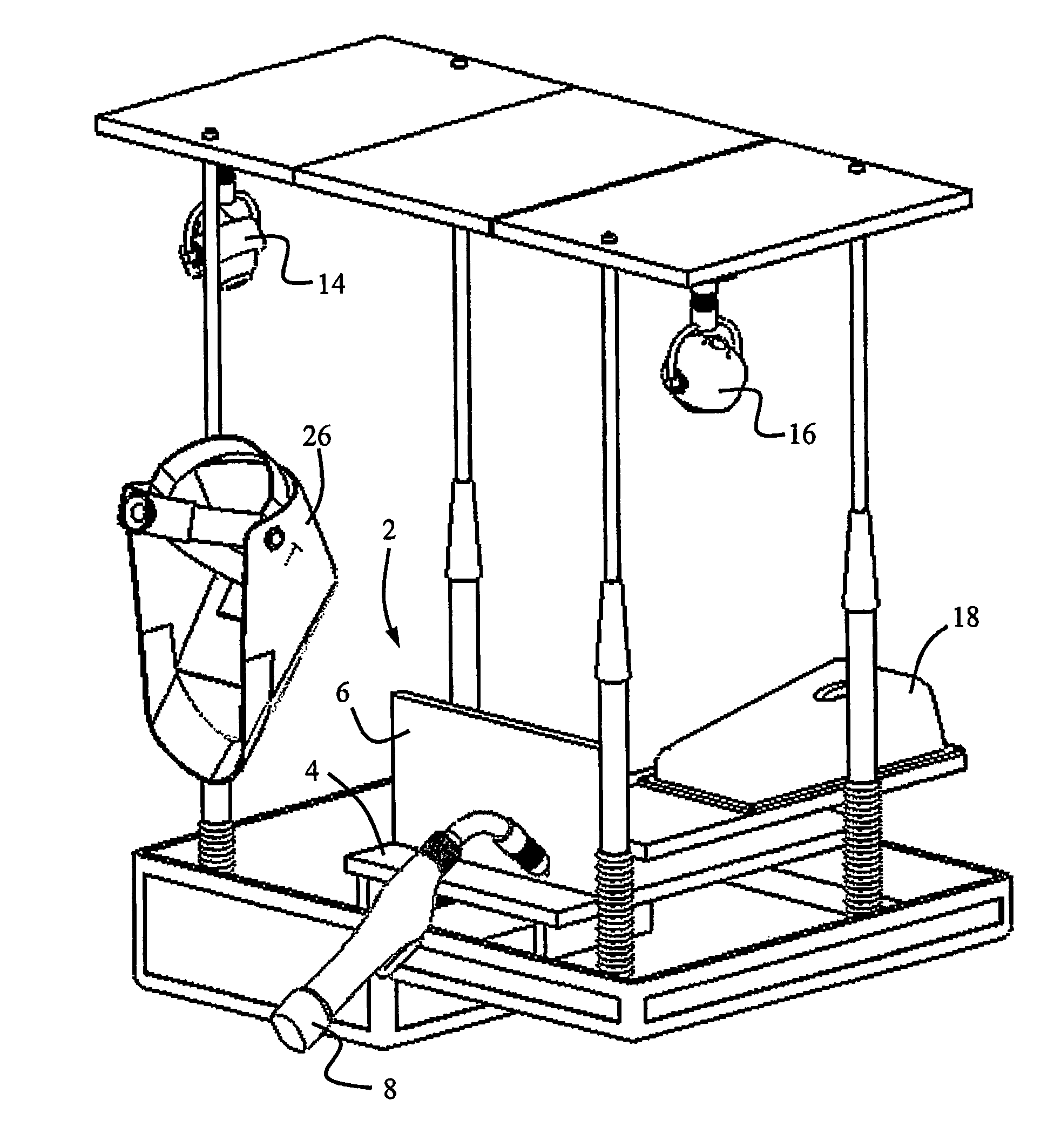

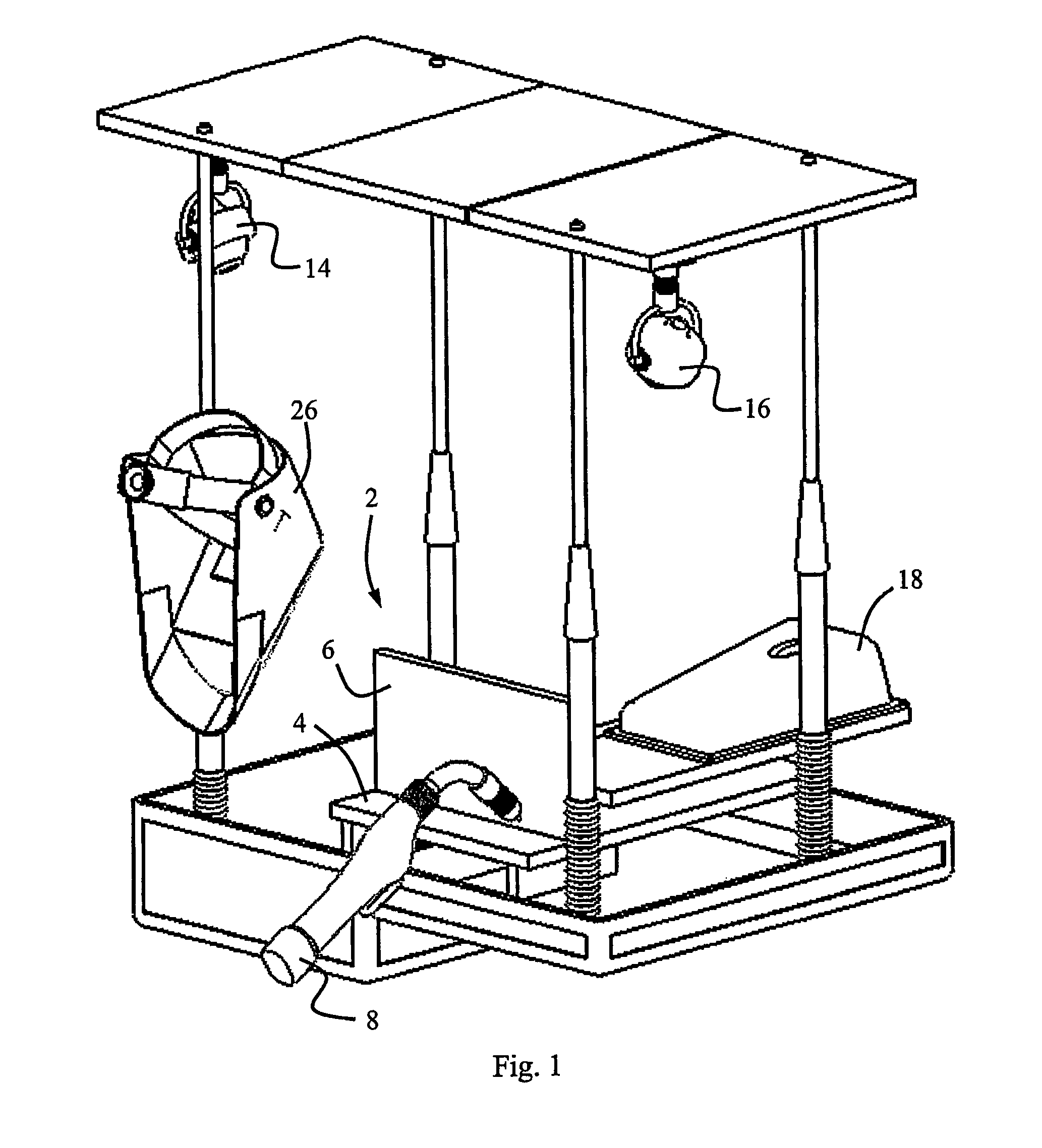

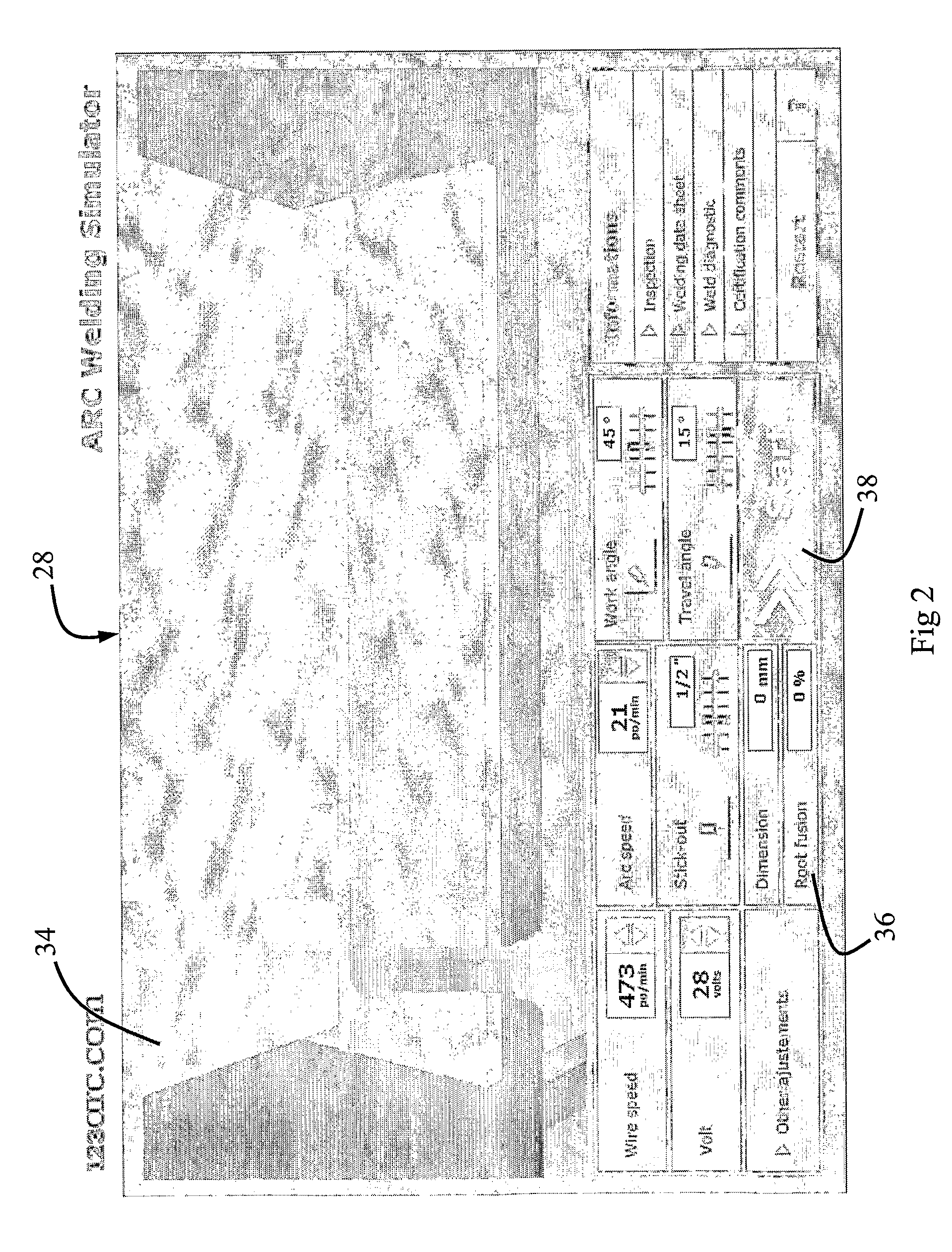

Body Motion Training and Qualification System and Method

Status: Inactive | Patent No.: US20080038702A1 | Tags: Defective reproduction; Cosmonautic condition simulations; Electric discharge heating; Simulation; Display device

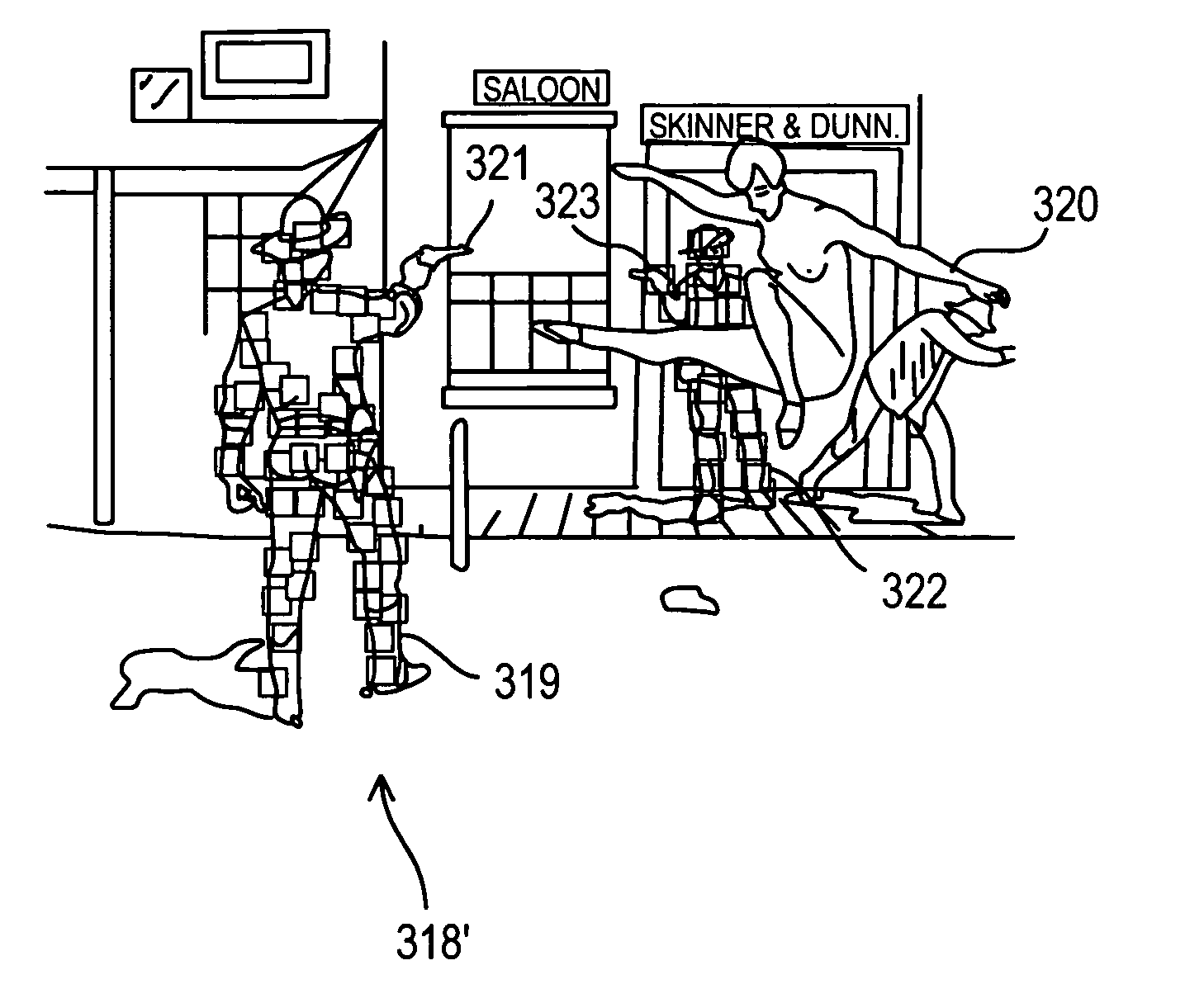

The system allows training and qualification of a user performing a skill-related training exercise involving body motion in a workspace. A training environment is selected through a computer apparatus, and variables, parameters and controls of the training environment and the training exercise are adjusted. Use of an input device by the user is monitored. The 3D angles and spatial coordinates of reference points related to the input device are monitored through a detection device. A simulated 3D dynamic environment reflecting effects caused by actions performed by the user on objects is computed in real time as a function of the training environment selected. Images of the simulated 3D dynamic environment in real time are generated on a display device viewable by the user as a function of a computed organ of vision-object relation. Data indicative of the actions performed by the user and the effects of the actions are recorded and user qualification is set as a function of the recorded data.

Owner:123 CERTIFICATION

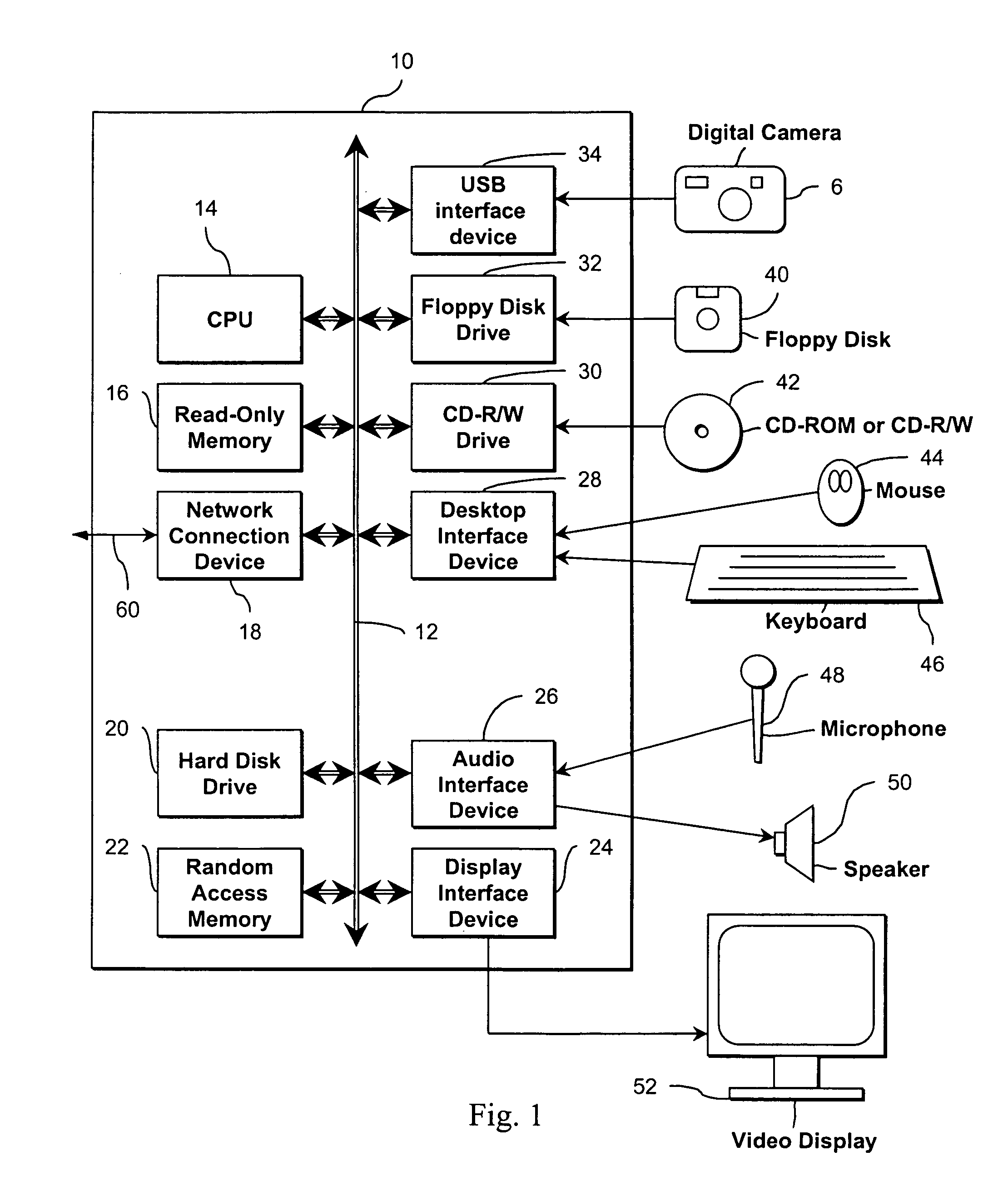

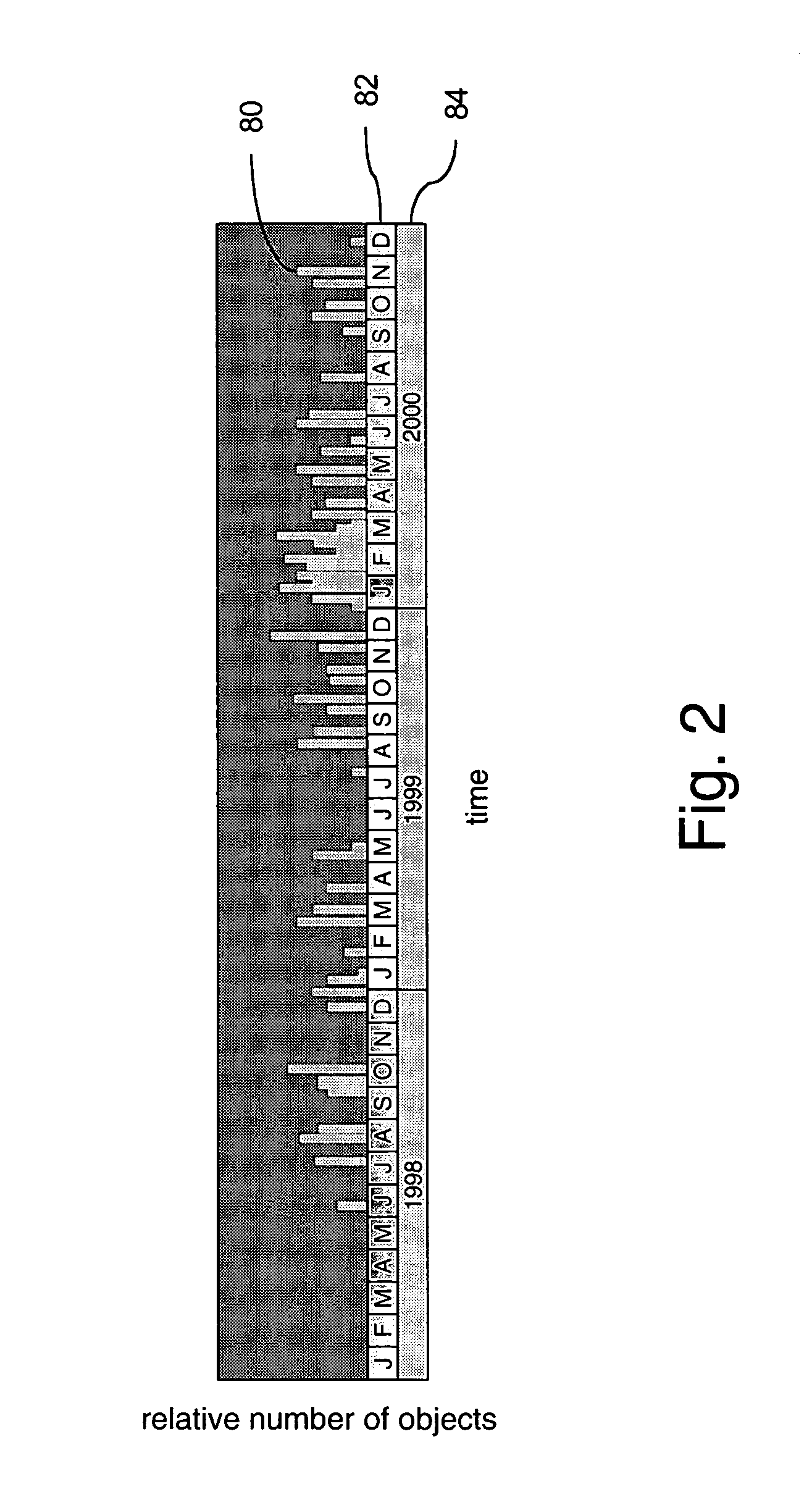

Using digital objects organized according to a histogram timeline

Status: Inactive | Patent No.: US6996782B2 | Tags: Improve representation; Enhanced interaction; Still image data browsing/visualisation; Metadata still image retrieval; Thumbnail; Visual Objects

A method for organizing visual digital objects and for selecting one or more of such visual digital objects for viewing includes developing a histogram timeline which identifies a number of visual digital objects organized according to predetermined time periods and providing thumbnail representations thereof; selecting a portion of the histogram timeline for viewing such thumbnail representations of visual digital objects corresponding to such selected portion; and determining if one or more of the viewed such thumbnail representations is of interest and then viewing the corresponding digital visual object(s).

Owner:NOKIA TECHNOLOGIES OY
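The histogram-timeline idea in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the monthly bucket size, the function names `build_histogram` and `select_range`, and the sample file names are all assumptions made for illustration.

```python
from collections import Counter
from datetime import date


def build_histogram(dates):
    """Count objects per (year, month) bucket; monthly buckets are an assumption."""
    return Counter((d.year, d.month) for d in dates)


def select_range(items, start, end):
    """Return the (name, date) items whose (year, month) lies in [start, end]."""
    return [(name, d) for name, d in items if start <= (d.year, d.month) <= end]


# Hypothetical sample collection of visual digital objects.
photos = [
    ("a.jpg", date(2003, 1, 5)),
    ("b.jpg", date(2003, 1, 20)),
    ("c.jpg", date(2003, 3, 2)),
    ("d.jpg", date(2004, 7, 9)),
]

histogram = build_histogram(d for _, d in photos)
selected = select_range(photos, (2003, 1), (2003, 3))
```

Here `histogram` holds the bar heights of the timeline, and `selected` holds the objects whose thumbnails would be shown for the chosen portion.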

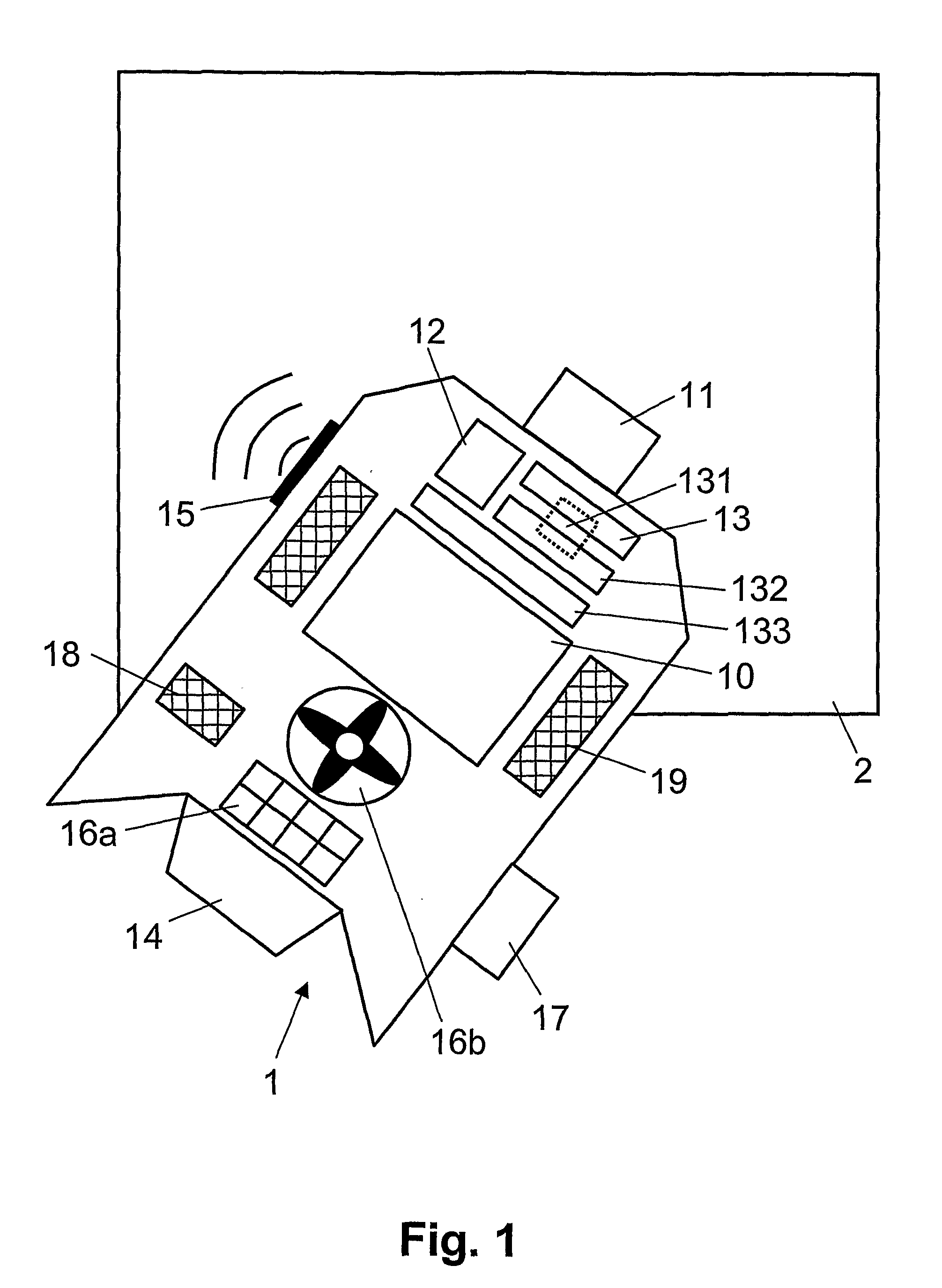

Body motion training and qualification system and method

Status: Inactive | Patent No.: US8512043B2 | Tags: Defective reproduction; Electric discharge heating; Gymnastic exercising; Workspace; Simulation

The system allows training and qualification of a user performing a skill-related training exercise involving body motion in a workspace. A training environment is selected through a computer apparatus, and variables, parameters and controls of the training environment and the training exercise are adjusted. Use of an input device by the user is monitored. The 3D angles and spatial coordinates of reference points related to the input device are monitored through a detection device. A simulated 3D dynamic environment reflecting effects caused by actions performed by the user on objects is computed in real time as a function of the training environment selected. Images of the simulated 3D dynamic environment in real time are generated on a display device viewable by the user as a function of a computed organ of vision-object relation. Data indicative of the actions performed by the user and the effects of the actions are recorded and user qualification is set as a function of the recorded data.

Owner:123 CERTIFICATION

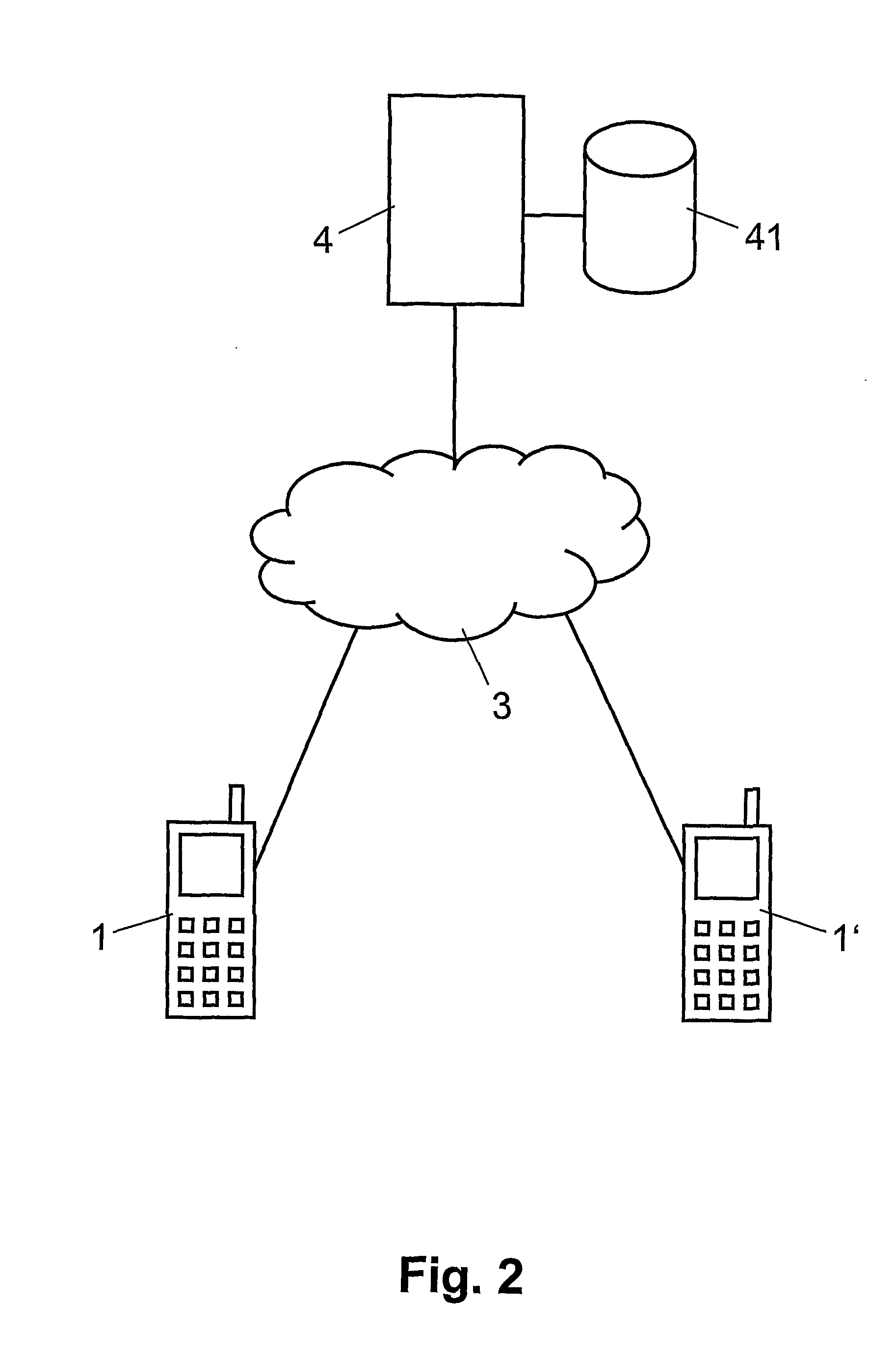

Method Of Executing An Application In A Mobile Device

Status: Inactive | Patent No.: US20080194323A1 | Tags: Video games; Special data processing applications; Position dependent; Visual Objects

For executing an application (13) in a mobile device (1) comprising a camera (14), a visual background (2) is captured through the camera (14). A selected application (13) is associated with the visual background (2). The selected application (13) is executed in the mobile device (1). Determined are orientation parameters indicative of an orientation of the mobile device (1) relative to the visual background (2). Based on the orientation parameters, application-specific output signals are generated in the mobile device (1). Particularly, the captured visual background (2) is displayed on the mobile device (1) overlaid with visual objects based on the orientation parameters. Displaying the captured visual background with overlaid visual objects, selected and / or positioned dependent on the relative orientation of the mobile device (1), makes possible interactive augmented reality applications, e.g. interactive augmented reality games, controlled by the orientation of the mobile device (1) relative to the visual background (2).

Owner:ETH ZURICH

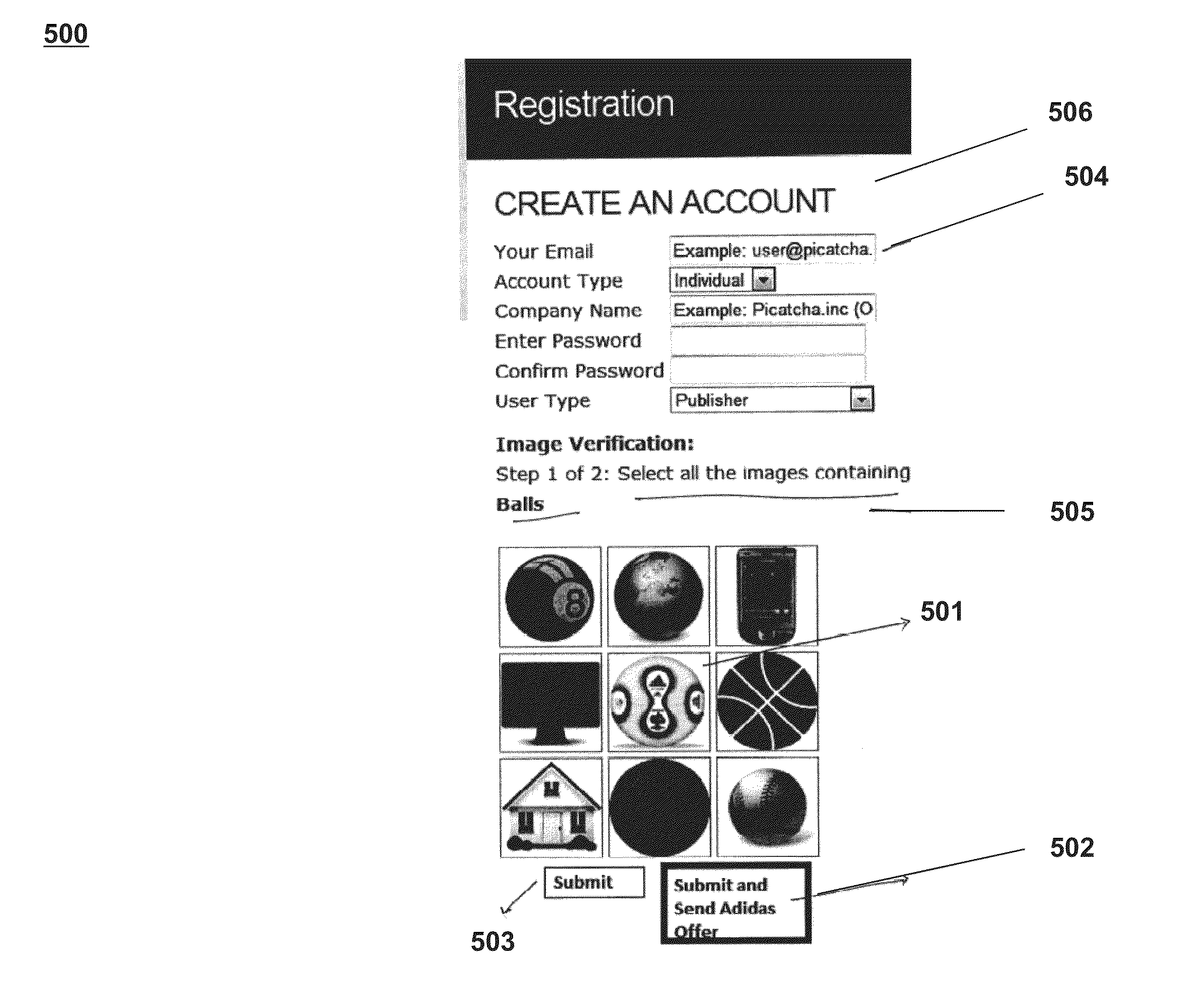

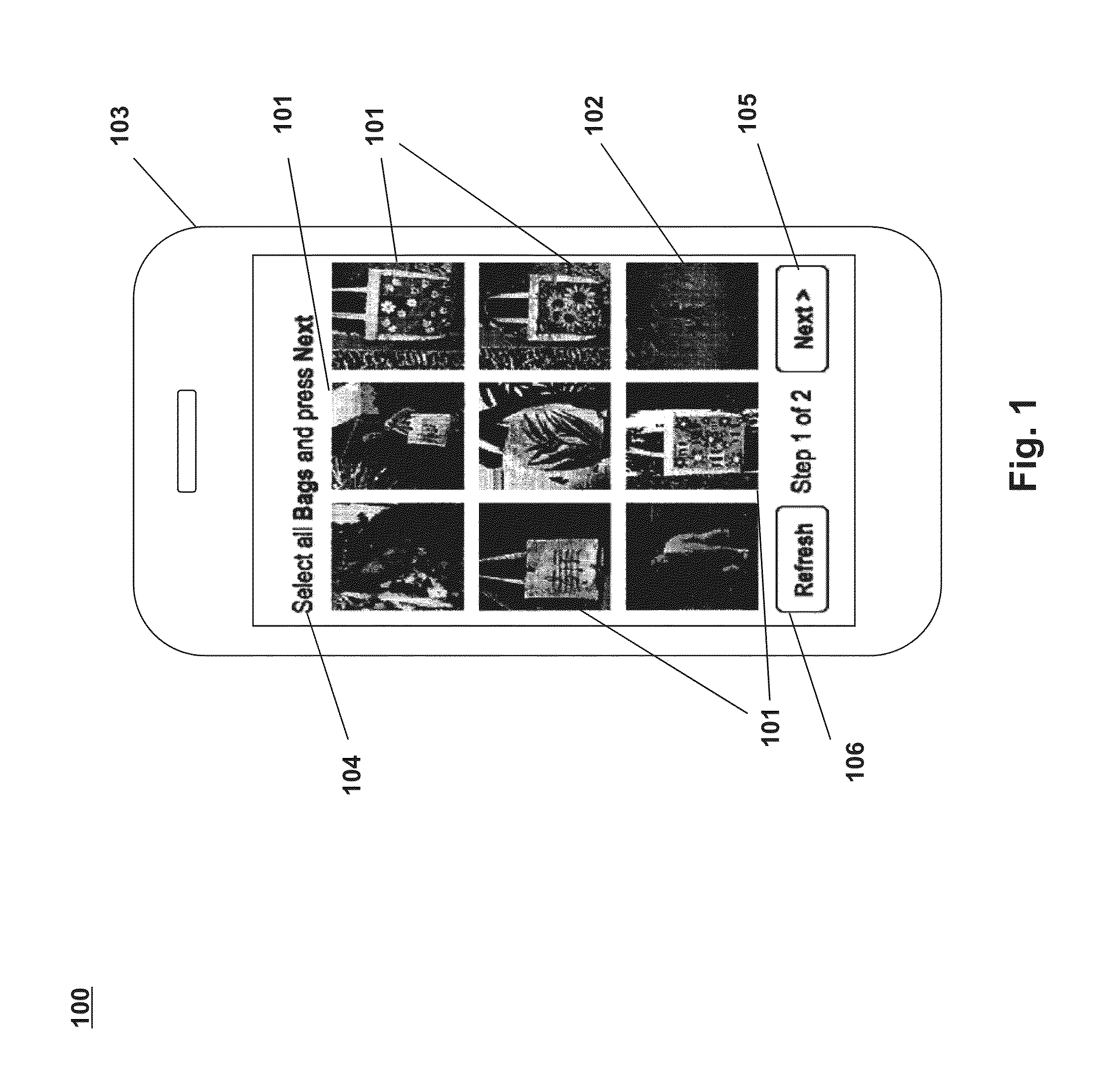

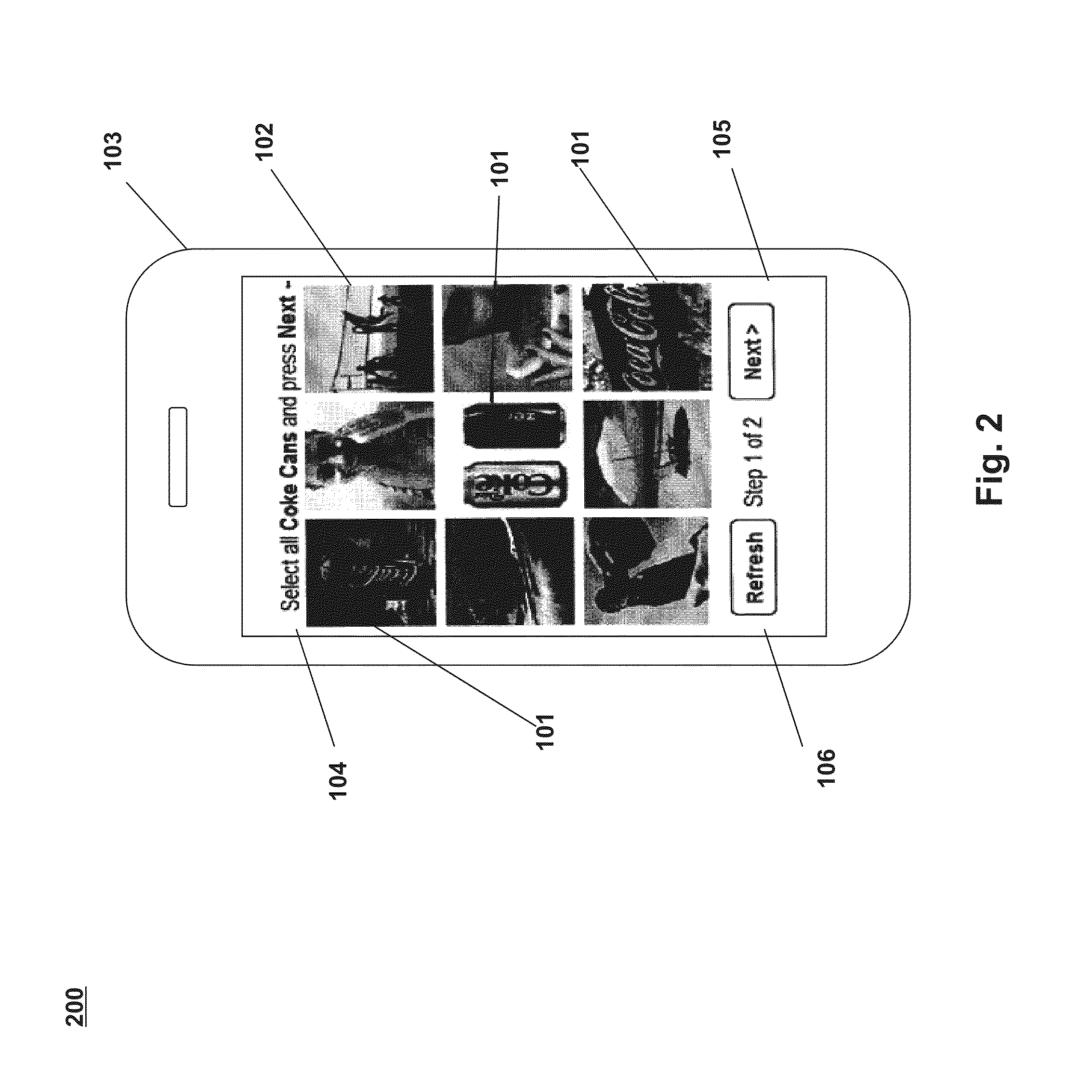

Captcha authentication processes and systems using visual object identification

Status: Inactive | Patent No.: US20130145441A1 | Tags: Accurate identification; Digital data processing details; Multiple digital computer combinations; User verification; Engineering

Systems and processes for performing user verification using an image-based CAPTCHA are disclosed. The verification process can include receiving a request from a user to access restricted content. In response to the request, a plurality of images may be presented to the user. A challenge question or command that identifies one or more of the displayed plurality of images may also be presented to the user. A selection of one or more of the plurality of images may then be received from the user. The user's selection may be reviewed to determine the accuracy of the selection with respect to the challenge question or command. If the user correctly identifies a threshold number of images, then the user may be authenticated and allowed to access the restricted content. However, if the user does not correctly identify the threshold number of images, then the user may be denied access to the restricted content.

Owner:PICATCHA
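The threshold check described in the abstract above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `verify_selection`, the image identifiers, and the labels are hypothetical. The abstract does not say how wrong picks are handled, so this sketch simply ignores them.

```python
def verify_selection(selected_ids, correct_ids, threshold):
    """Return True if at least `threshold` of the selected images are correct.

    Assumption: incorrect picks are ignored; a real system would likely
    penalize them as well, otherwise selecting every image would pass.
    """
    correct_hits = len(set(selected_ids) & set(correct_ids))
    return correct_hits >= threshold


# Hypothetical challenge: "select all images showing a cat".
images = {"img1": "cat", "img2": "dog", "img3": "cat", "img4": "car"}
correct = {image_id for image_id, label in images.items() if label == "cat"}

granted = verify_selection({"img1", "img3"}, correct, threshold=2)
denied = verify_selection({"img1", "img4"}, correct, threshold=2)
```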

Automatic large scale video object recognition

Status: Active | Patent No.: US8254699B1 | Tags: Quick fix; Television system details; Color signal processing circuits; Nonlinear dimensionality reduction; Feature vector

An object recognition system performs a number of rounds of dimensionality reduction and consistency learning on visual content items such as videos and still images, resulting in a set of feature vectors that accurately predict the presence of a visual object represented by a given object name within a visual content item. The feature vectors are stored in association with the object name which they represent and with an indication of the number of rounds of dimensionality reduction and consistency learning that produced them. The feature vectors and the indication can be used for various purposes, such as quickly determining a visual content item containing a visual representation of a given object name.

Owner:GOOGLE LLC

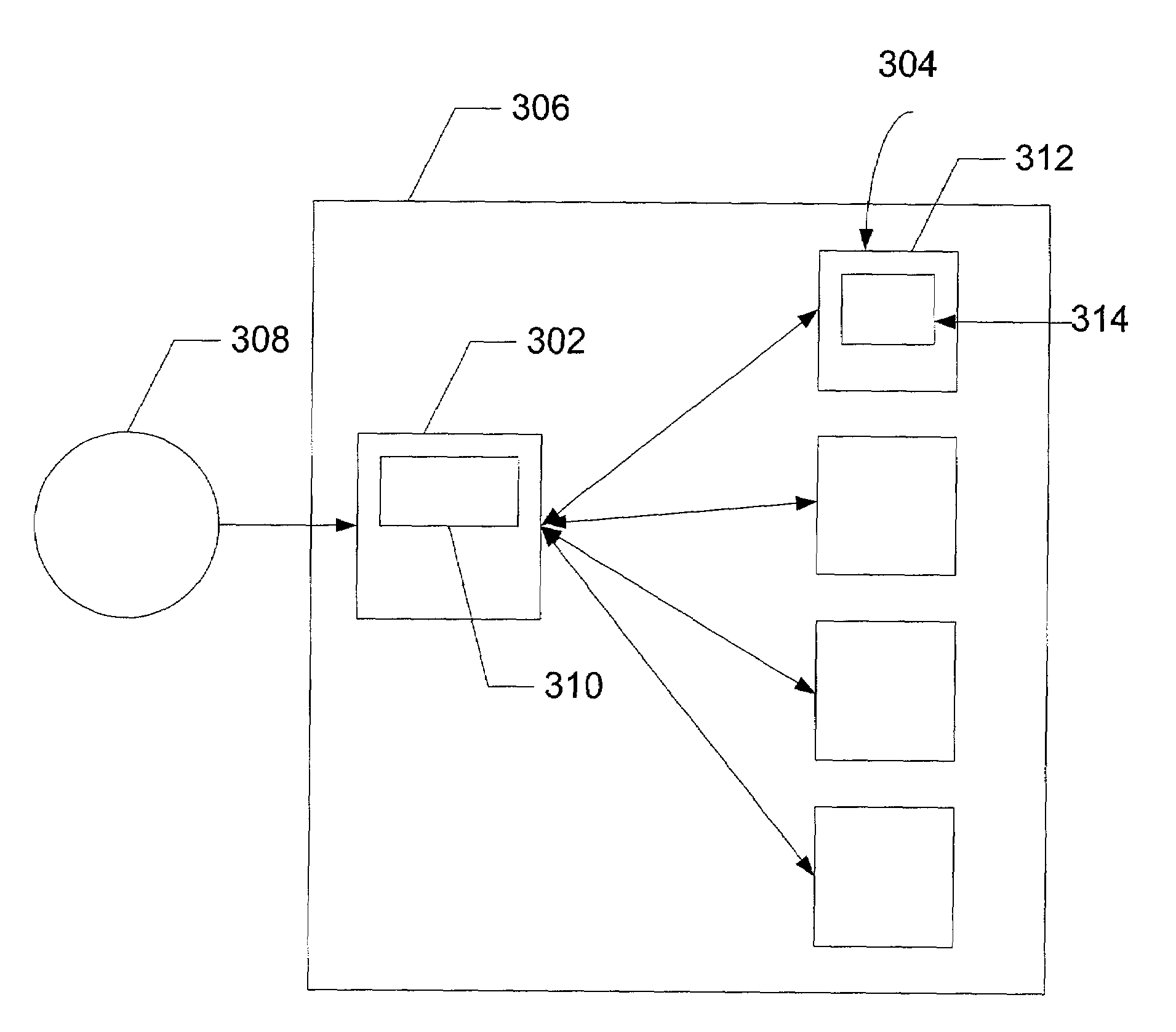

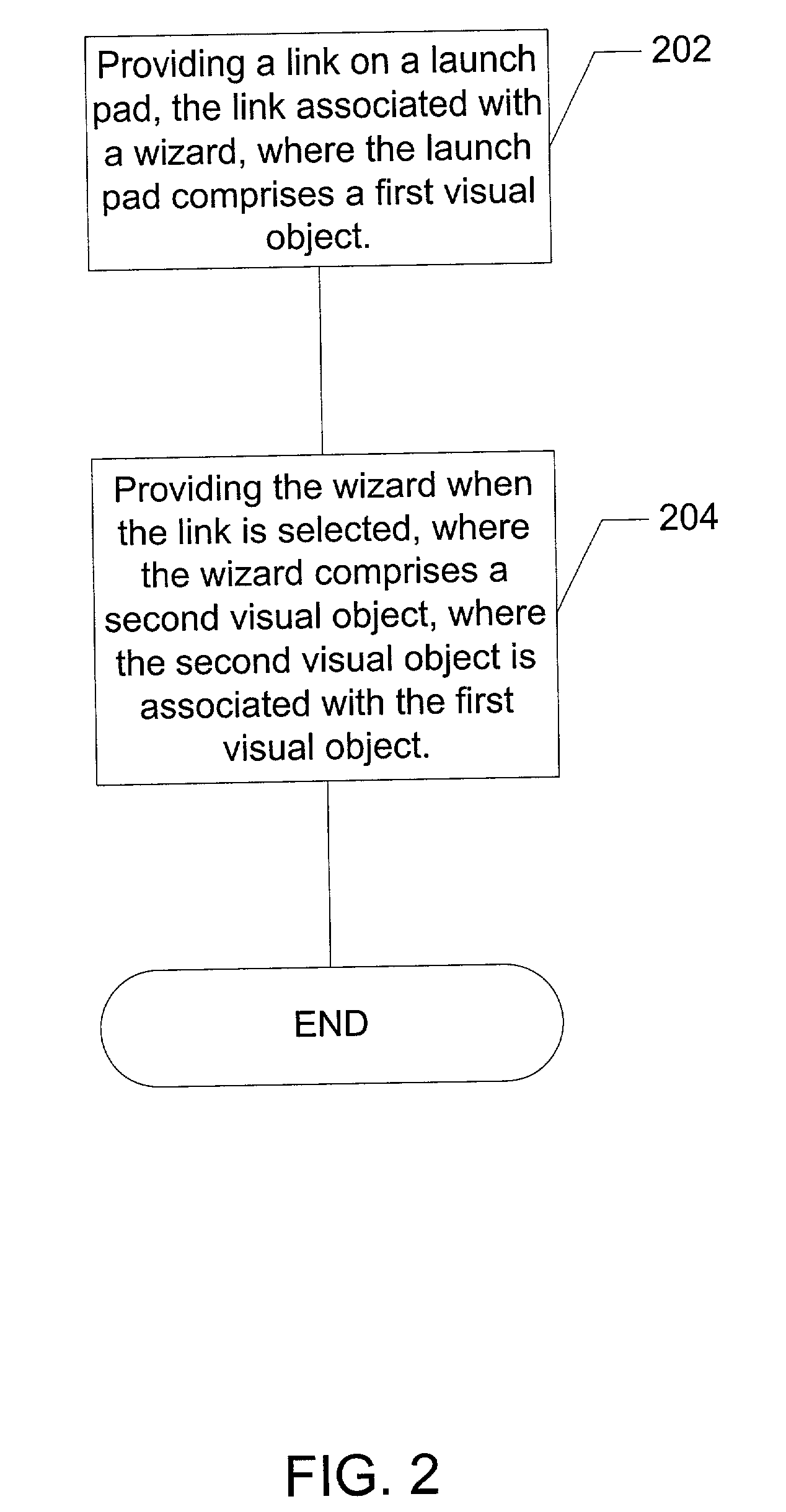

Use of conceptual diagrams to support relationships between launchpads and its wizards

Status: Inactive | Patent No.: US6968505B2 | Tags: More information; Digital computer details; Cathode-ray tube indicators; Computerized system; Visual Objects

The present invention provides launch pads on the display of a computer system as interactive interfaces between a user and wizards. The present invention includes: (a) providing a link on a launch pad, where the link is associated with a wizard, where the launch pad comprises a first visual object, where the first visual object in the launch pad relates to the task performed by the wizard; and (b) providing the wizard when the link is selected, where the wizard comprises a second visual object, where the second visual object is associated with the first visual object. In the preferred embodiment, the second visual object in the wizard provides more information concerning the task than the first visual object. In this manner, a user is provided a visual connection between the information provided on the launch pad and the information provided on the wizard concerning the task performed by the wizard.

Owner:IBM CORP

Statistical metering and filtering of content via pixel-based metadata

Status: Inactive | Patent No.: US7805680B2 | Tags: Advertisements; Selective content distribution; Visual field loss; Computer graphics (images)

An apparatus for using metadata to more precisely process displayed content may include a processor executing stored instructions, which causes the apparatus to at least receive an image data frame including pixels having a visual object(s), wherein bits in the image data frame include pixel data for a single pixel. The pixel data includes content and metadata fields. The metadata field includes a value. The metadata value indicates that the single pixel is part of a visual object in a category. The execution of the stored instructions may also cause the apparatus to identify pixels including a visual object by their metadata fields. The pixels include a visual object identifiable within the image data frame such that certain operations are performed on the pixels forming an individual visual object separate from pixels forming remaining visual objects in the visual field. Corresponding methods and computer program products are also provided.

Owner:NOKIA TECH OY
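The per-pixel content and metadata fields described in the abstract above can be pictured with a small Python sketch. The bit layout is purely an assumption for illustration (8 bits of category metadata above 24 bits of RGB content), as are the names `pack_pixel`, `category_of`, `pixels_in_category`, and the `ADVERT` category value.

```python
def pack_pixel(r, g, b, category):
    """Pack RGB content and a category tag into one 32-bit value (assumed layout)."""
    return (category << 24) | (r << 16) | (g << 8) | b


def category_of(pixel):
    """Read the metadata field back out of a packed pixel."""
    return (pixel >> 24) & 0xFF


def pixels_in_category(frame, category):
    """Return the indices of all pixels tagged as part of the given category."""
    return [i for i, p in enumerate(frame) if category_of(p) == category]


ADVERT = 1  # hypothetical category value
frame = [
    pack_pixel(255, 0, 0, ADVERT),
    pack_pixel(0, 255, 0, 0),
    pack_pixel(0, 0, 255, ADVERT),
]
advert_pixels = pixels_in_category(frame, ADVERT)
```

Once the tagged pixels are identified, an operation such as filtering or counting can be applied to that visual object alone, leaving the rest of the frame untouched.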

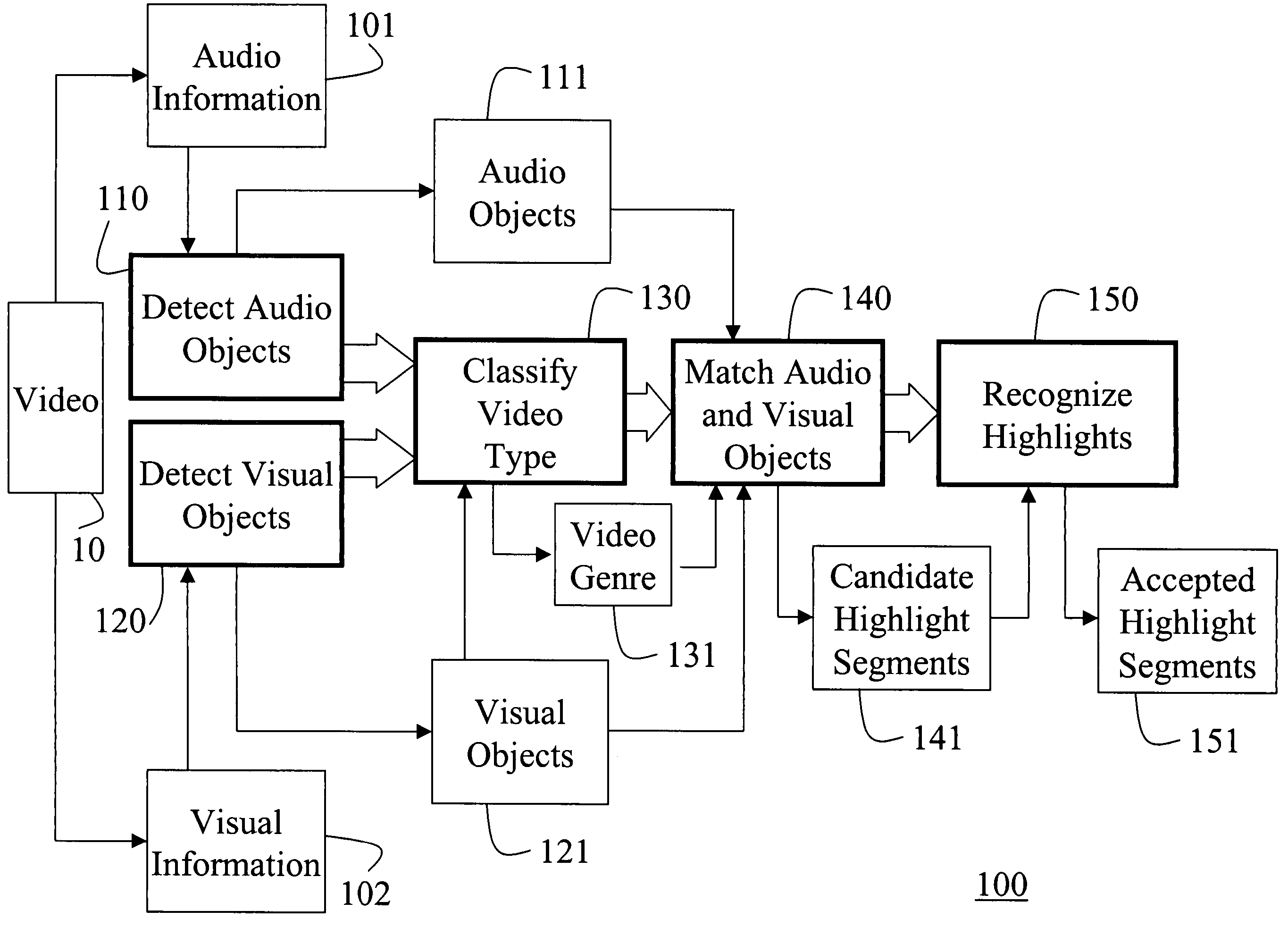

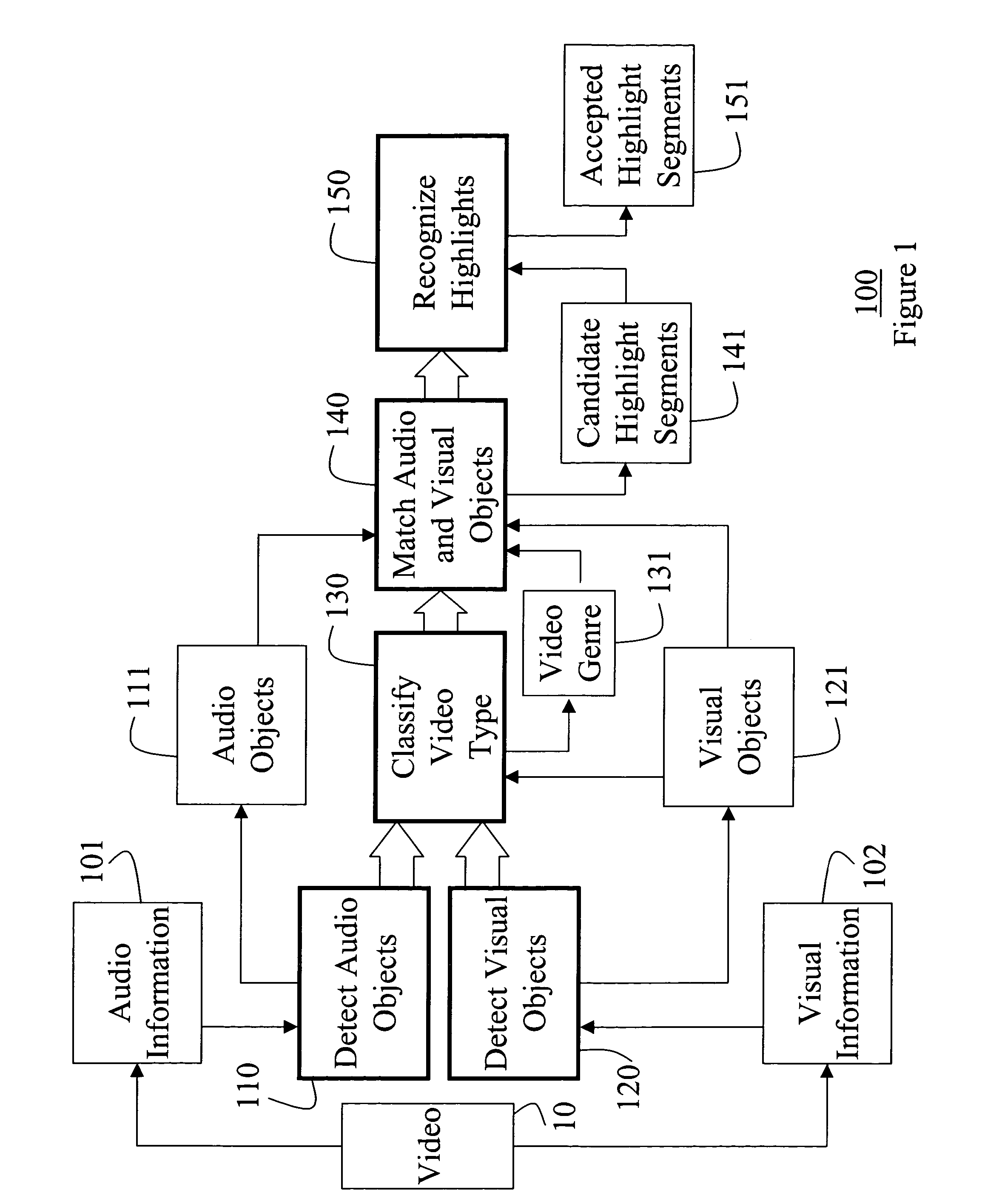

Identifying video highlights using audio-visual objects

Status: Inactive | Patent No.: US20060059120A1 | Tags: Digital data information retrieval; Character and pattern recognition; Visual Objects; Visual perception

A method identifies highlight segments in a video including a sequence of frames. Audio objects are detected to identify frames associated with audio events in the video, and visual objects are detected to identify frames associated with visual events. Selected visual objects are matched with an associated audio object to form an audio-visual object only if the selected visual object matches the associated audio object, the audio-visual object identifying a candidate highlight segment. The candidate highlight segments are further refined, using low level features, to eliminate false highlight segments.

Owner:MITSUBISHI ELECTRIC RES LAB INC

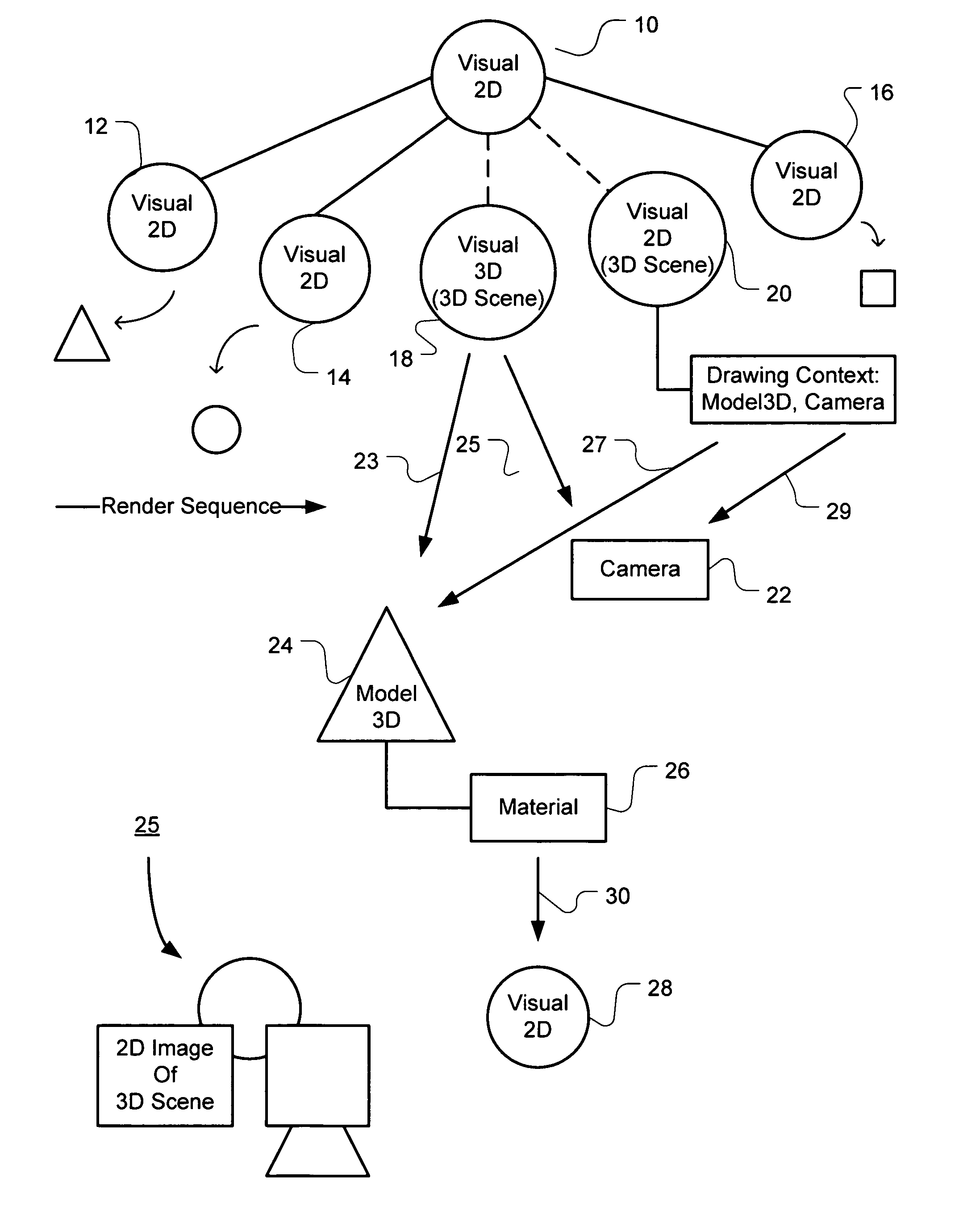

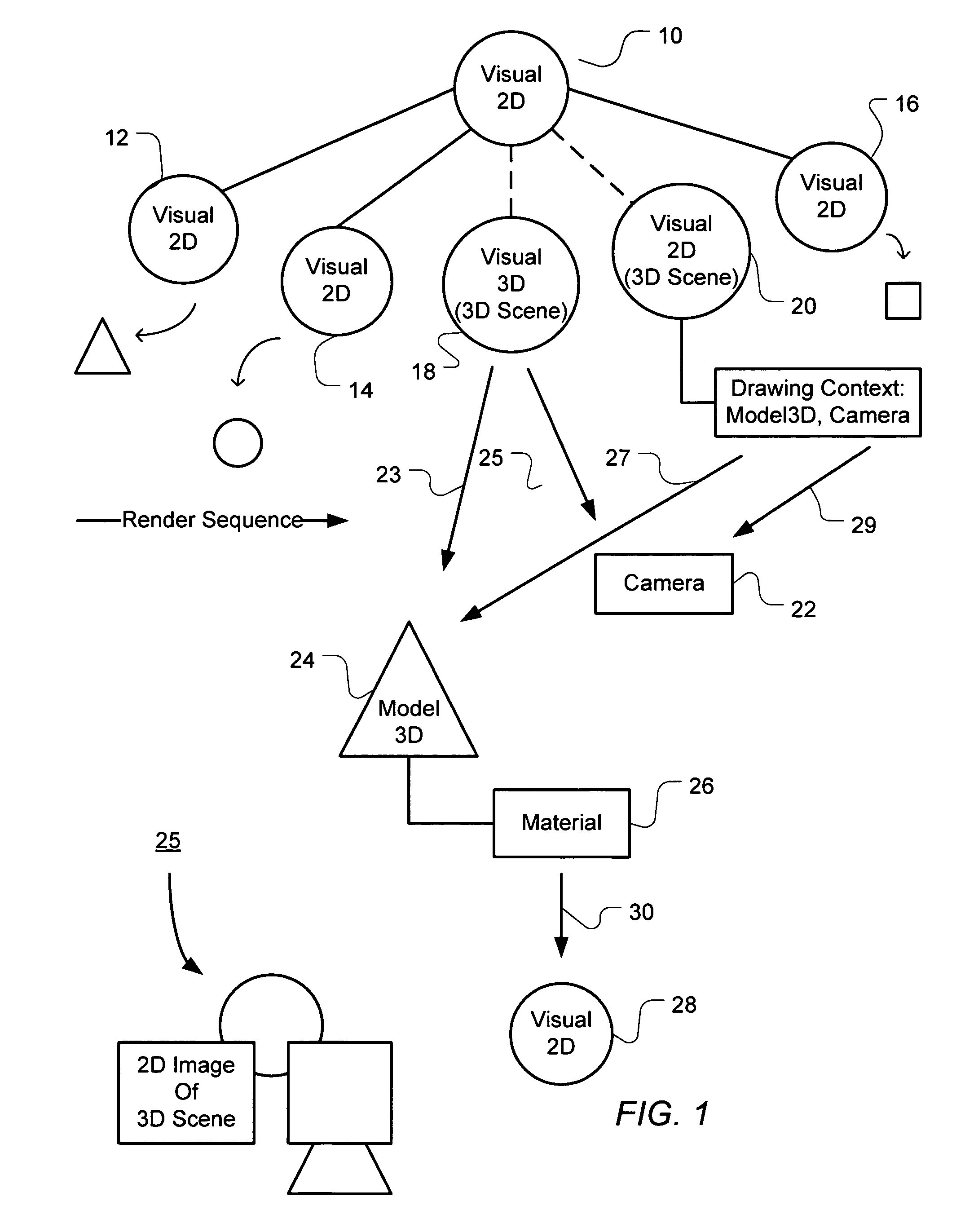

Integration of three dimensional scene hierarchy into two dimensional compositing system

A hierarchy of 2D visual objects and 3D scene objects are integrated for seamless processing to render 2D images including a 2D view of a 3D scene on a 2D computer display. The processing of the 3D model objects and 2D visual objects in the visual hierarchy is integrated so that the processing is readily handed off between 3D and 2D operations. Further the number of transitions between processing visual 2D objects and 3D model objects when creating a display image has no architectural limit. A data structure integrates computer program objects for creating 3D images and 2D images in a visual tree object hierarchy having visual 2D objects or 3D scene objects pointing to 3D model objects. The data structure comprises an object tree hierarchy, one or more visual 2D objects, and one or more 3D reference or scene objects pointing to 3D model objects. The visual 2D objects define operations drawing a 2D image. The 3D reference or scene objects define references pointing to objects with operations that together draw a two-dimensional view of a three-dimensional scene made up of one or more 3D models. The 3D reference or scene objects point to 3D model objects and a camera object. The camera object defines a two-dimensional view of the 3D scene. The 3D model objects draw the 3D models and define mesh information used in drawing contours of a model and material information used in drawing surface texture of a model. The material information for the surface texture of a model may be defined by a visual 2D object, a 3D reference or scene object or a tree hierarchy of visual 2D objects and / or 3D reference scene objects.

Owner:MICROSOFT TECH LICENSING LLC

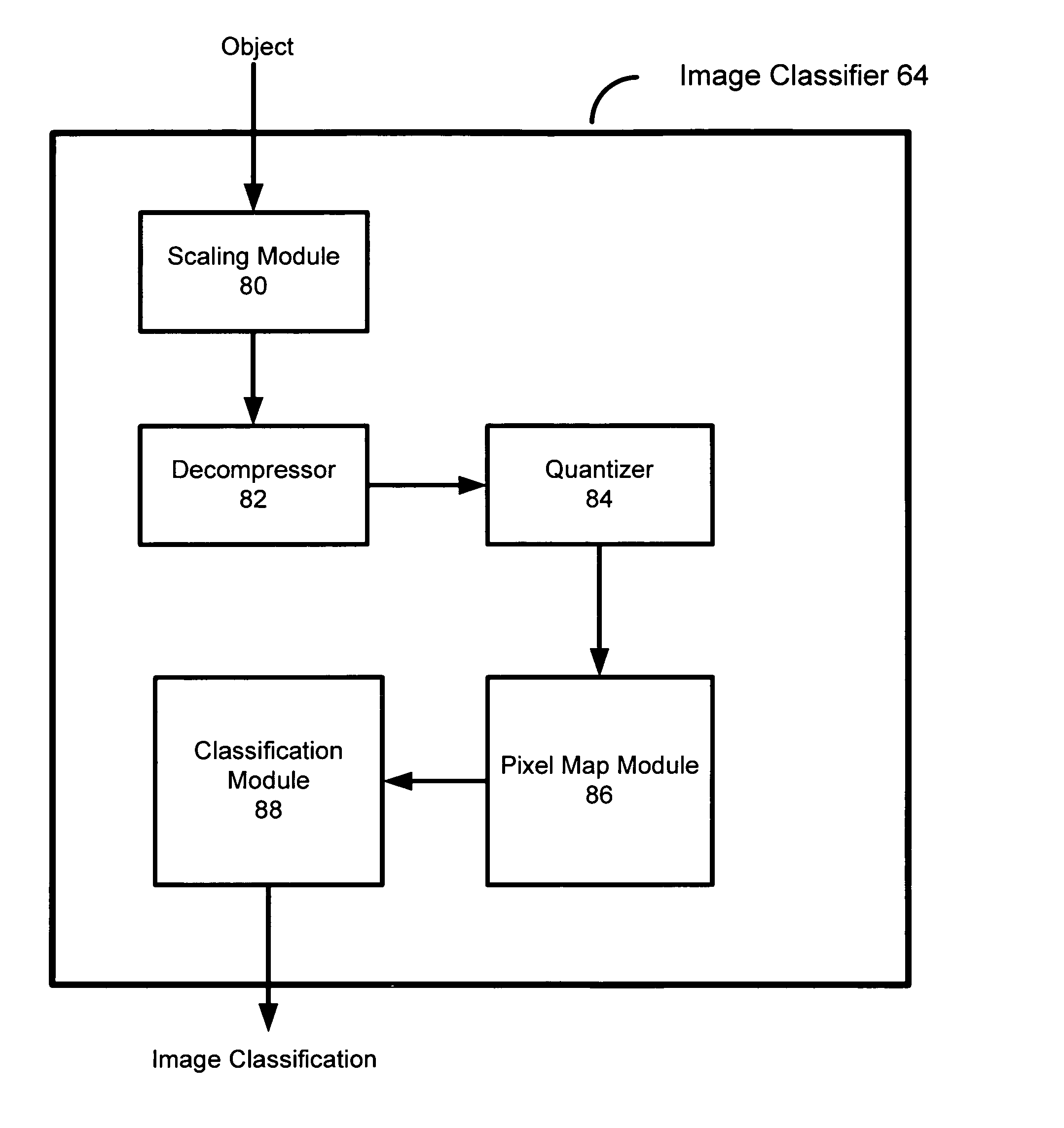

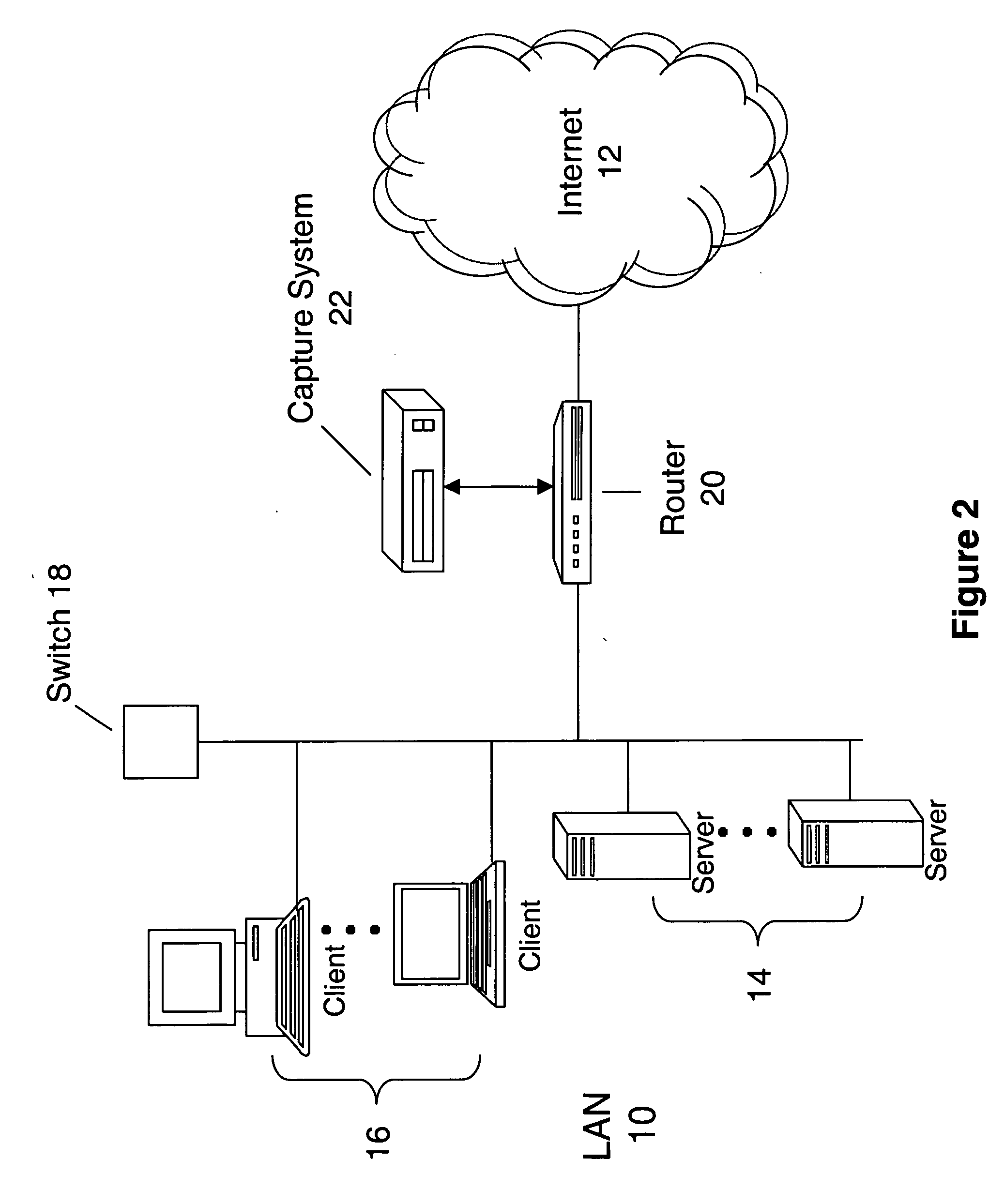

Identifying image type in a capture system

Status: Inactive | Patent No.: US20070116366A1 | Tags: Character and pattern recognition; Platform integrity maintenance; Pattern recognition; Visual Objects

Visual objects can be classified according to image type. In one embodiment, the present invention includes capturing a visual object, and decompressing the visual object to a colorspace representation exposing each pixel. The contribution of each pixel to a plurality of image types can then be determined. Then, the contributions can be combined, and the image type of the visual object can be determined based on the contributions.

Owner:MCAFEE LLC
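The idea of combining per-pixel contributions into an image-type decision, as described in the abstract above, can be sketched as follows. The two image types (`color_photo`, `grayscale_document`) and the scoring rule based on channel spread are assumptions chosen for illustration; the abstract does not specify either.

```python
IMAGE_TYPES = ("color_photo", "grayscale_document")  # hypothetical types


def pixel_contributions(r, g, b):
    """Score one RGB pixel against each image type (assumed rule: channel spread)."""
    colorfulness = (max(r, g, b) - min(r, g, b)) / 255
    return {"color_photo": colorfulness, "grayscale_document": 1.0 - colorfulness}


def classify(pixels):
    """Sum the per-pixel contributions and return the highest-scoring type."""
    totals = {image_type: 0.0 for image_type in IMAGE_TYPES}
    for r, g, b in pixels:
        for image_type, score in pixel_contributions(r, g, b).items():
            totals[image_type] += score
    return max(totals, key=totals.get)


scan = [(250, 250, 250), (10, 10, 10), (128, 128, 128)]
photo = [(200, 30, 30), (20, 180, 40), (10, 20, 240)]
scan_type = classify(scan)
photo_type = classify(photo)
```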

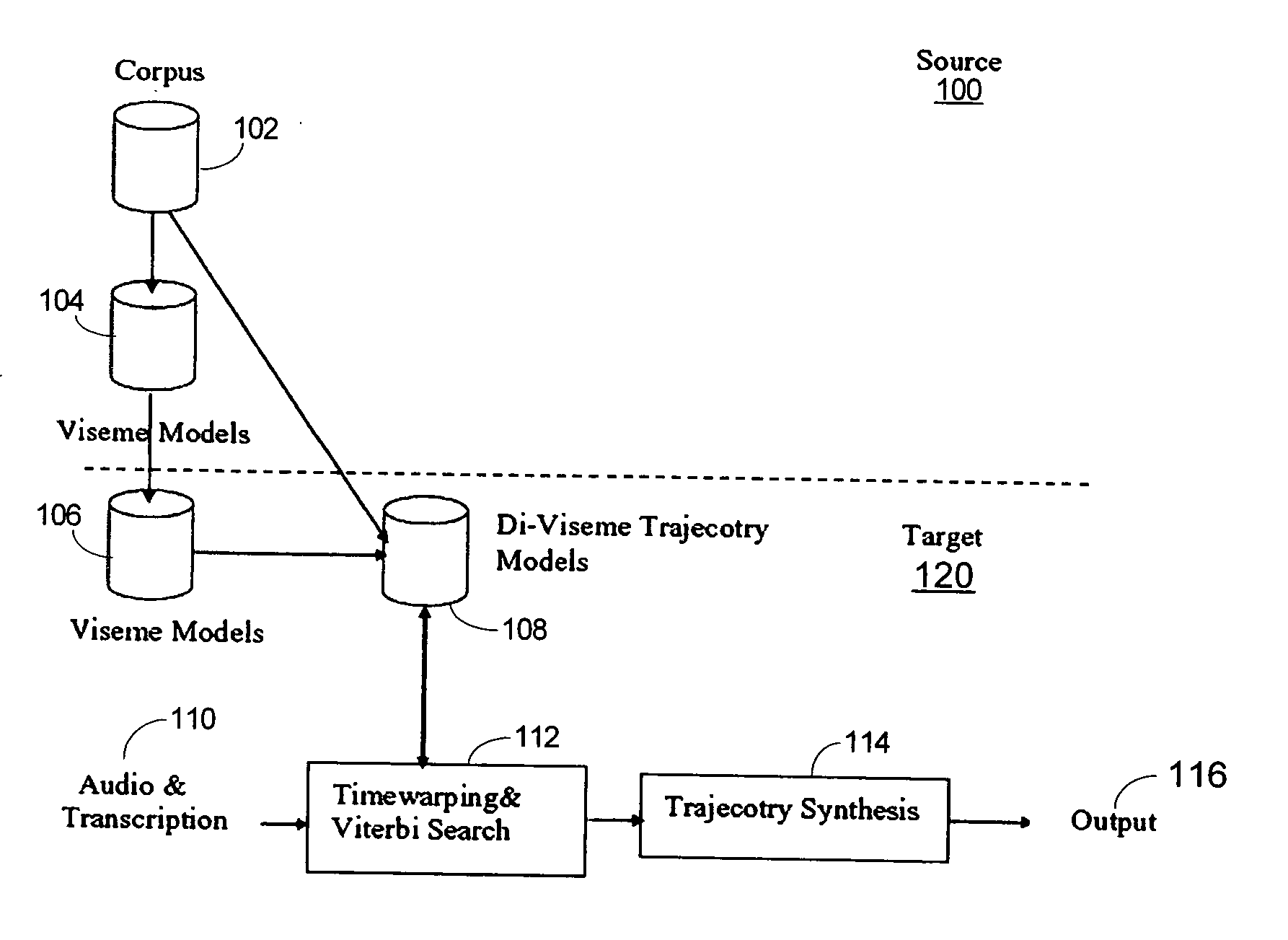

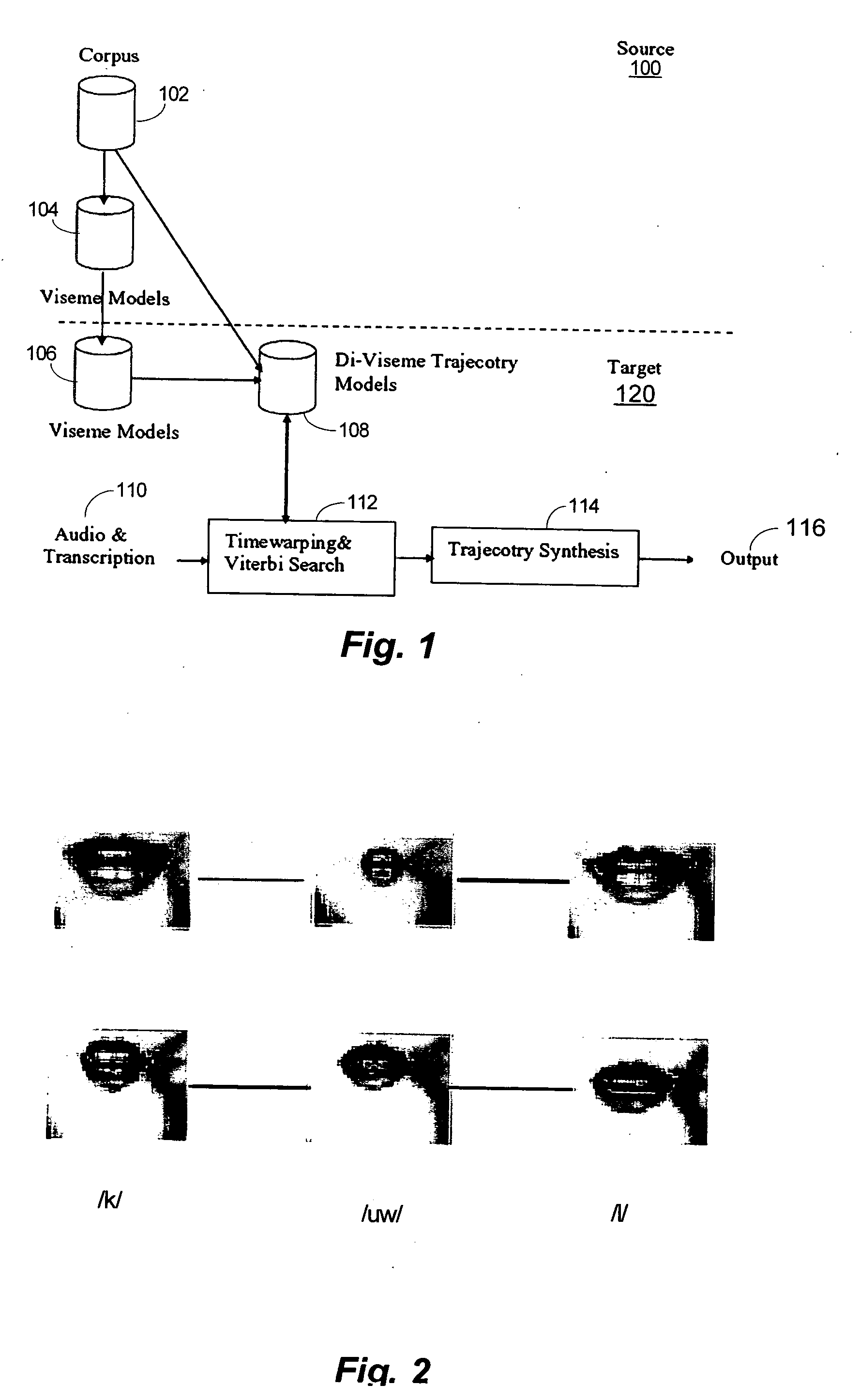

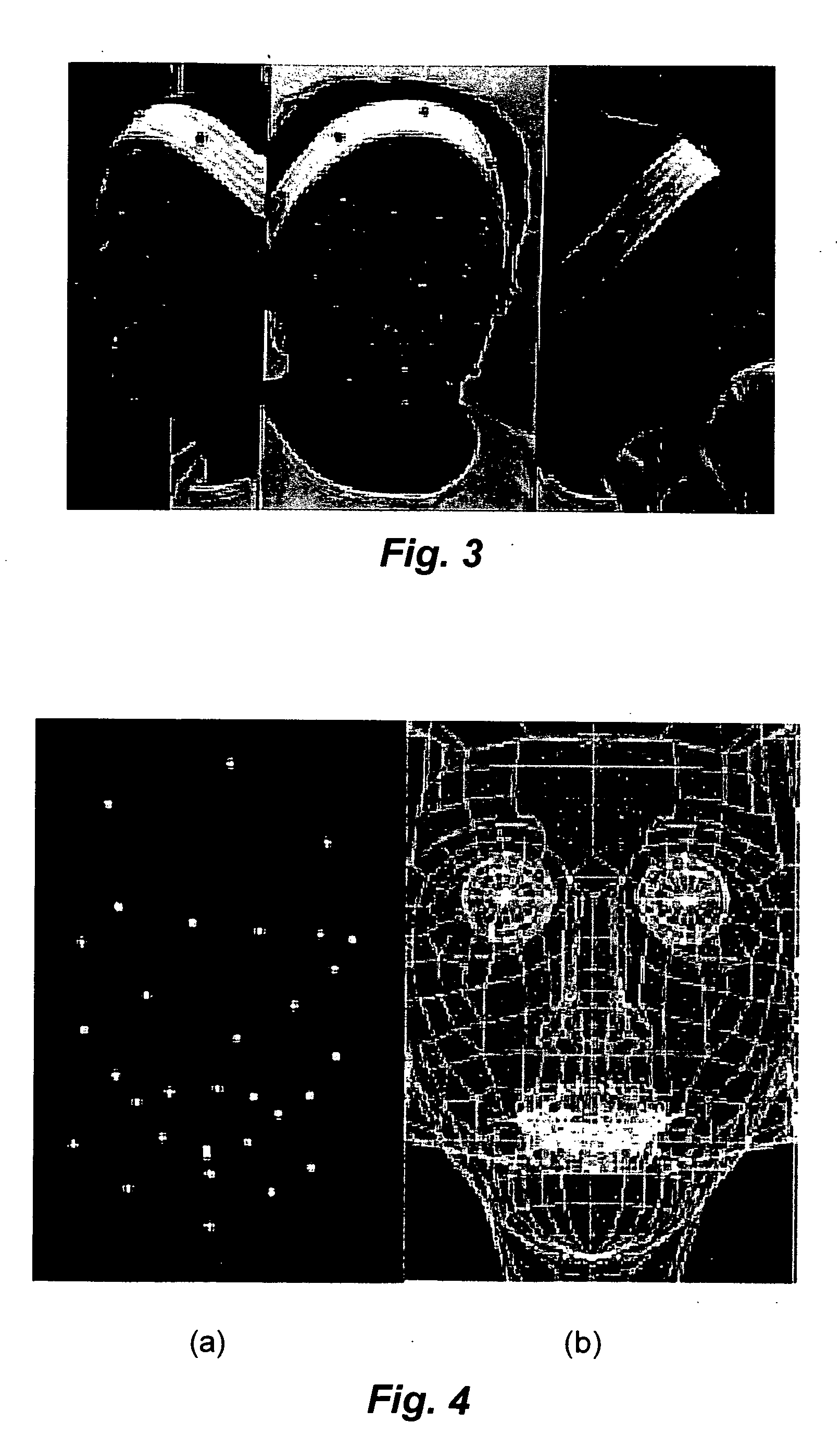

Methods and systems for synthesis of accurate visible speech via transformation of motion capture data

The disclosure describes methods for synthesis of accurate visible speech using transformations of motion-capture data. Methods are provided for synthesis of visible speech in a three-dimensional face. A sequence of visemes, each associated with one or more phonemes, is mapped onto a three-dimensional target face and concatenated. The sequence may include divisemes corresponding to pairwise sequences of phonemes, wherein the diviseme is comprised of motion trajectories of a set of facial points. The sequence may also include multi-units corresponding to words and sequences of words. Various techniques involving mapping and concatenation are also addressed.

Owner:UNIV OF COLORADO THE REGENTS OF

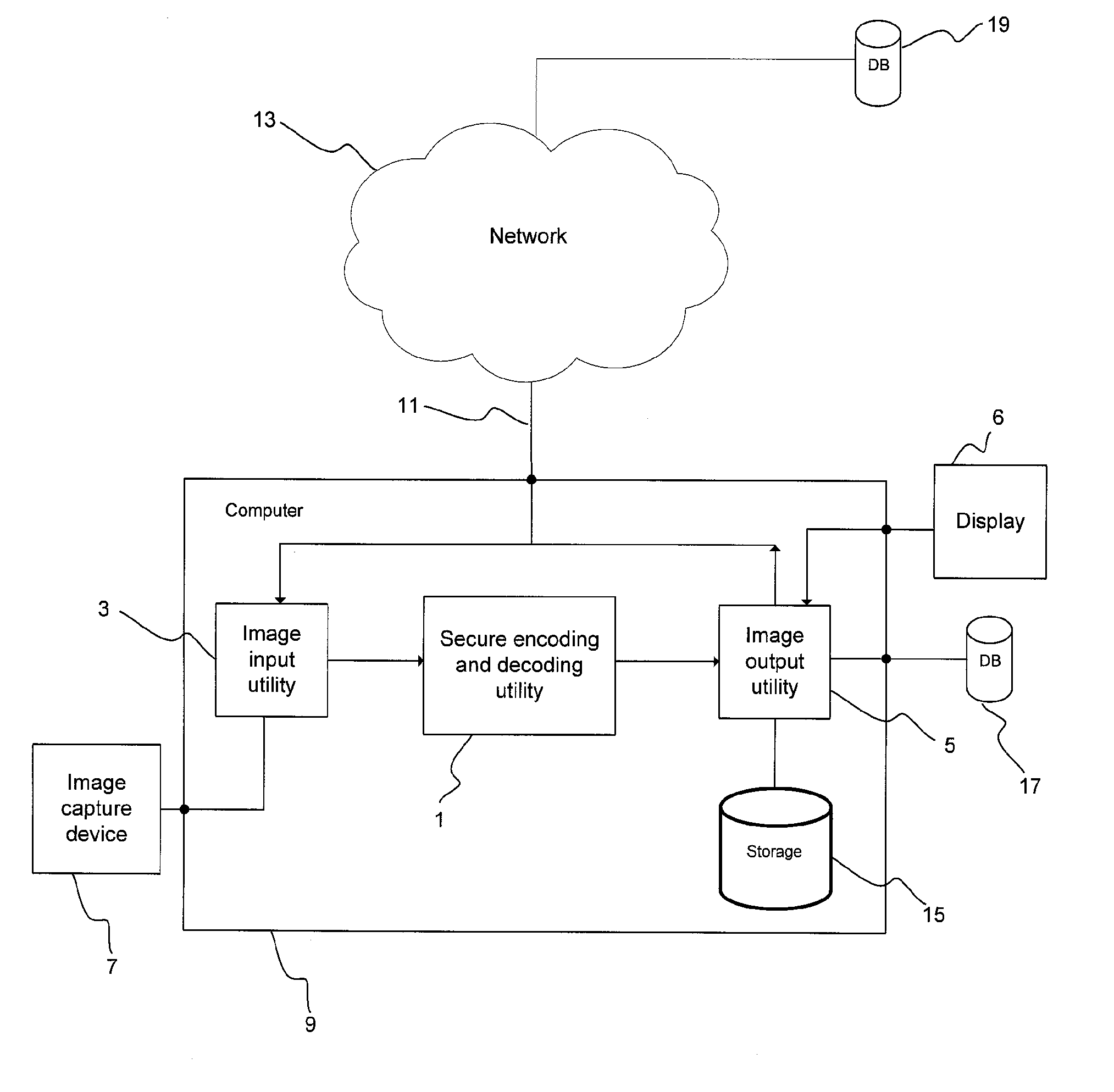

Method and system for secure coding of arbitrarily shaped visual objects

Status: Inactive | Patent No.: US20110158470A1 | Tags: Easy to compress; Good encryption; Character and pattern recognition; Image coding; Computer graphics (images); Visual Objects

The present invention relates to a method and system for secure coding of arbitrarily shaped visual objects. More specifically, a system and method are provided for encoding an image, characterized by the steps of selecting one or more objects in the image from the background of the image, separating the one or more objects from the background, and compressing and encrypting, or facilitating the compression and encryption, by one or more computer processors, each of the one or more objects using a single coding scheme. The coding scheme also is operable to decrypt and decode each of the objects.

Owner:THE GOVERNING COUNCIL OF THE UNIV OF TORONTO

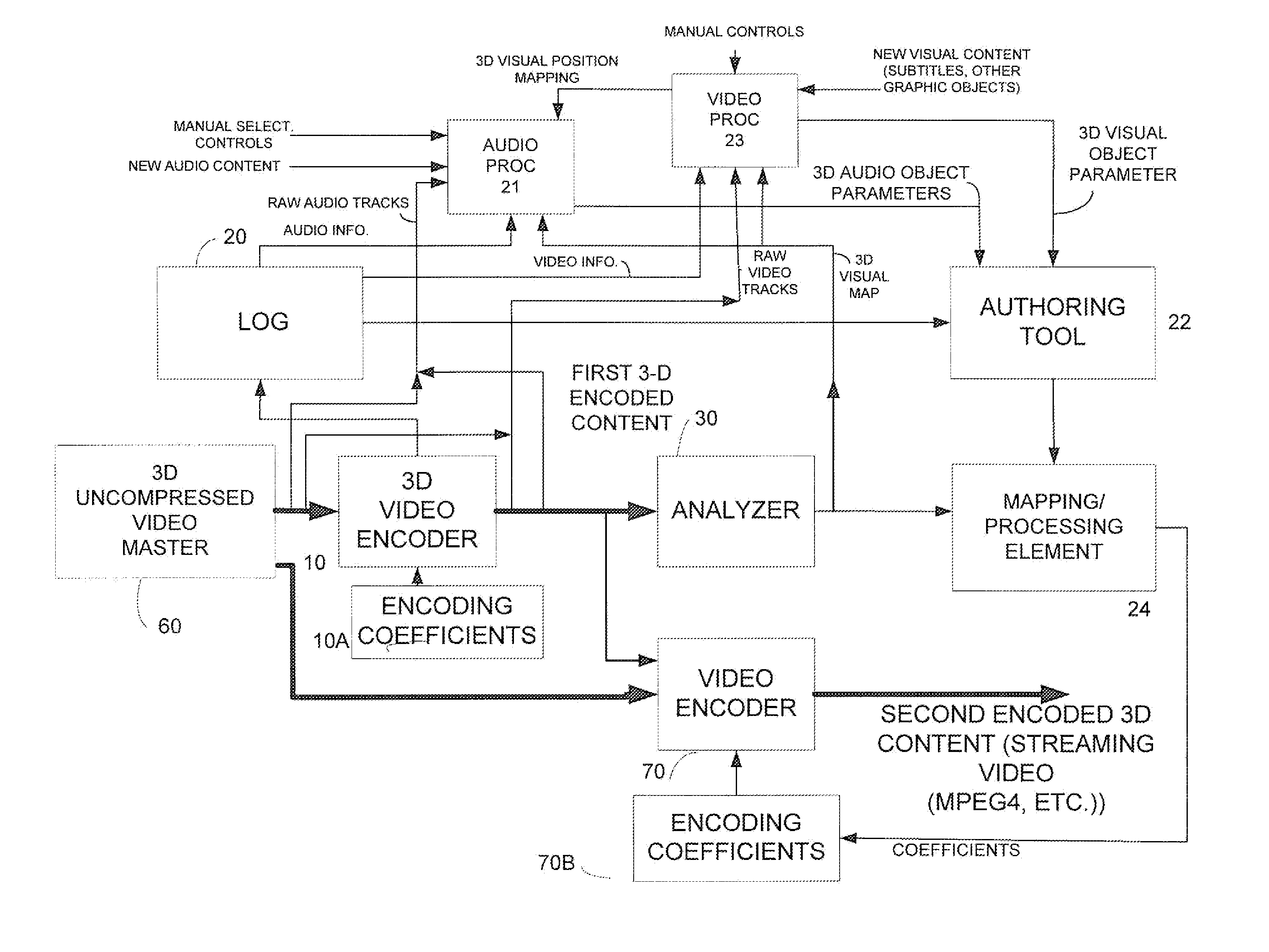

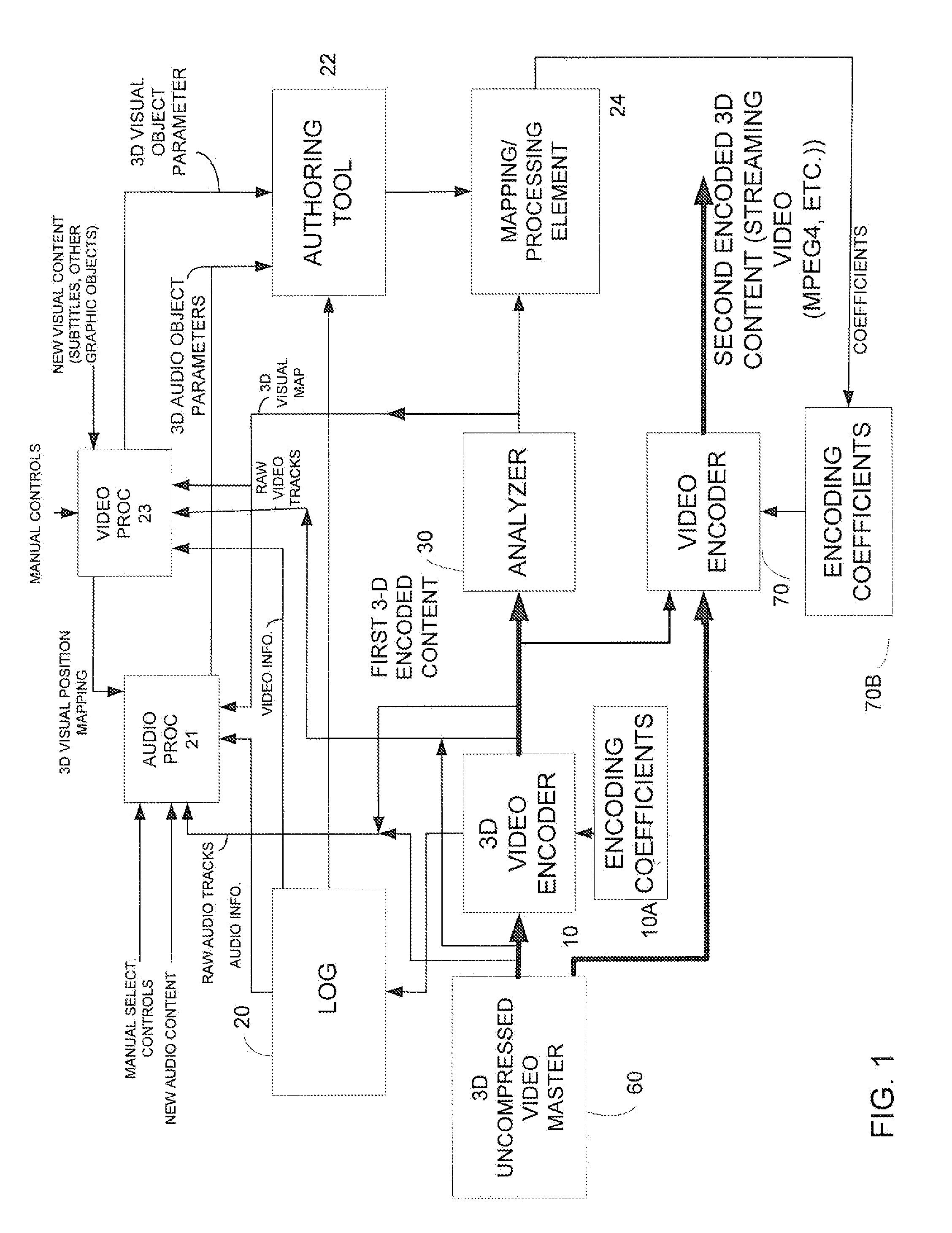

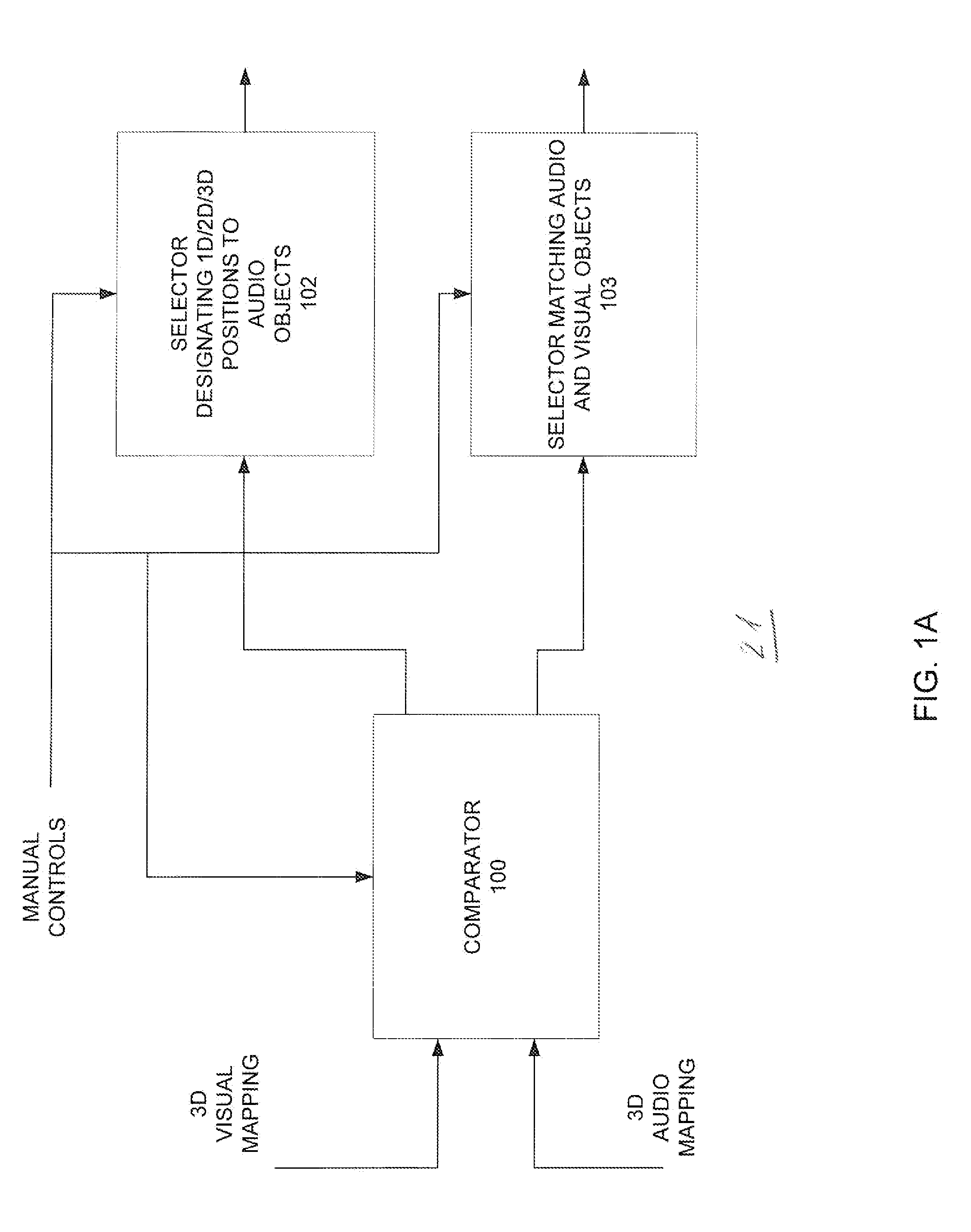

Method and Apparatus for Generating 3D Audio Positioning Using Dynamically Optimized Audio 3D Space Perception Cues

Status: Active | Patent No.: US20120062700A1 | Tags: Electronic editing digitised analogue information signals; Color television signals processing; Video encoding; Pattern perception

An apparatus generating audio cues for content indicative of the position of audio objects within the content, comprising: an audio processor receiving raw audio tracks for said content and information indicative of the positions of at least some of said audio tracks within frames of said content, said audio processor generating corresponding audio parameters; an authoring tool receiving said audio parameters and generating encoding coefficients, said audio parameters including audio cue of the position of audio objects corresponding to said tracks in at least one spatial dimension; and a first audio/video encoder receiving an input and encoding said input into an audio visual content having visual objects and audio objects, said audio objects being disposed at location corresponding to said one spatial position, said encoder using said encoding coefficients for said encoding.

Owner:WARNER BROS ENTERTAINMENT INC

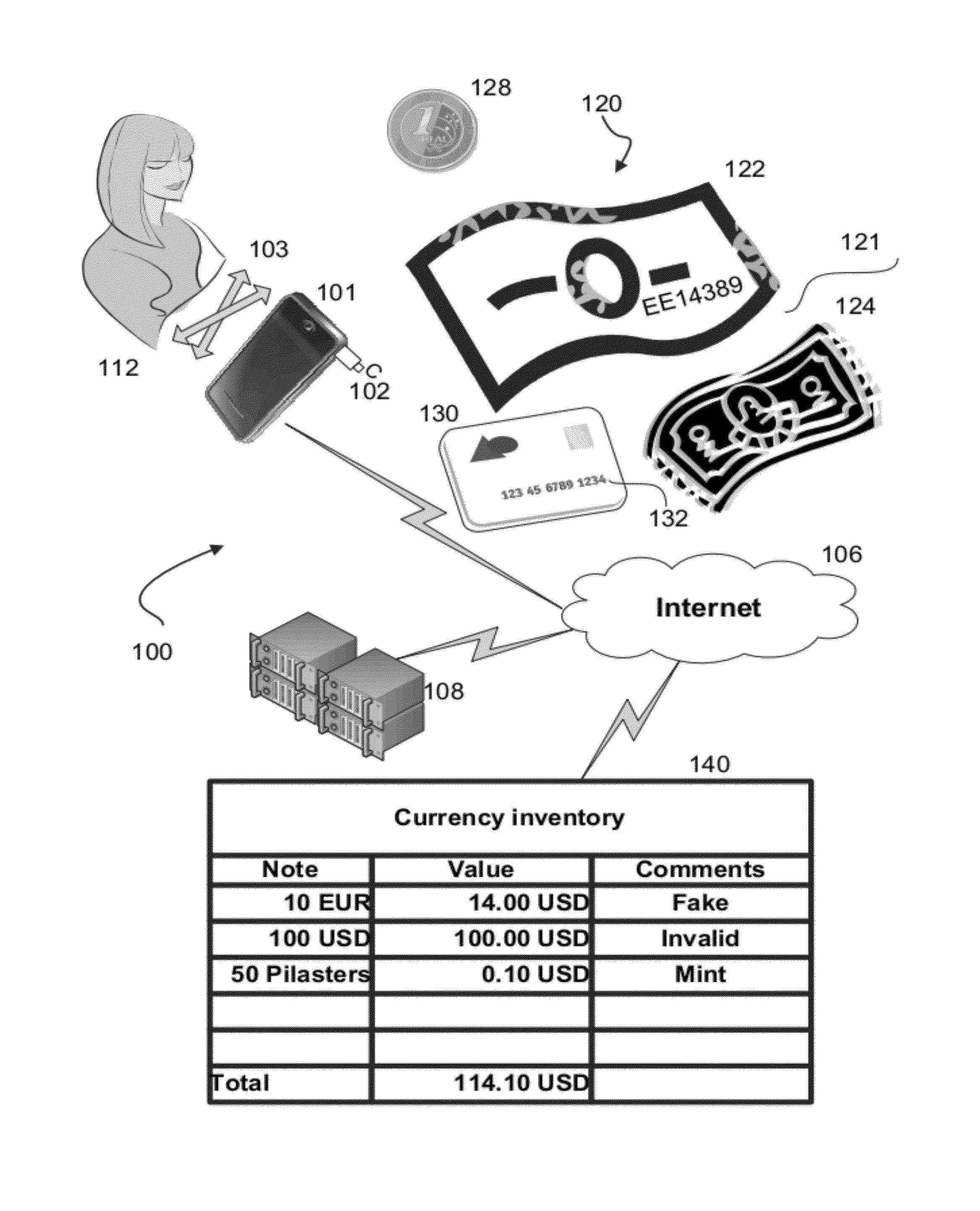

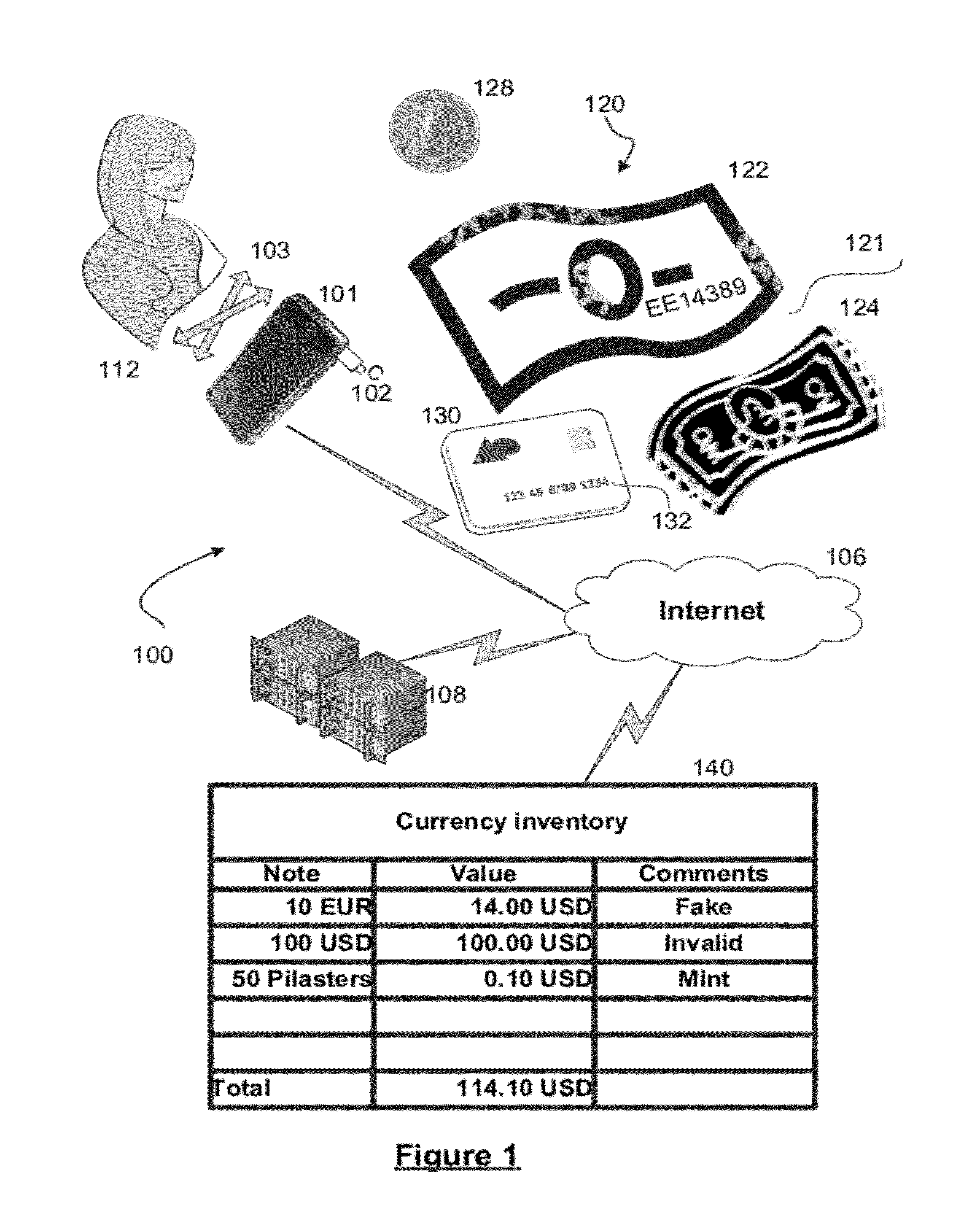

System and process for automatically analyzing currency objects

A method, system, and computer program product for analyzing images of visual objects, such as currency and payment cards, captured on a mobile device in determining their authenticity, and total amount of value. The system may be used in verifying the authenticity of hard currency, to count the total amount of the currency captured in one or more images, and to convert the currency using real time monetary exchange rates. The mobile device may also be used to verify the identity of a credit card user utilizing images of the card holder's face and signature, name on the card, card number, card signature, and card security code. Computerized content analysis of the captured images on the mobile devices as compared to images stored on a database facilitates a merchant processing a customer's payment and a financial institute accepting a cash deposit.

Owner:ATSMON ALON +1

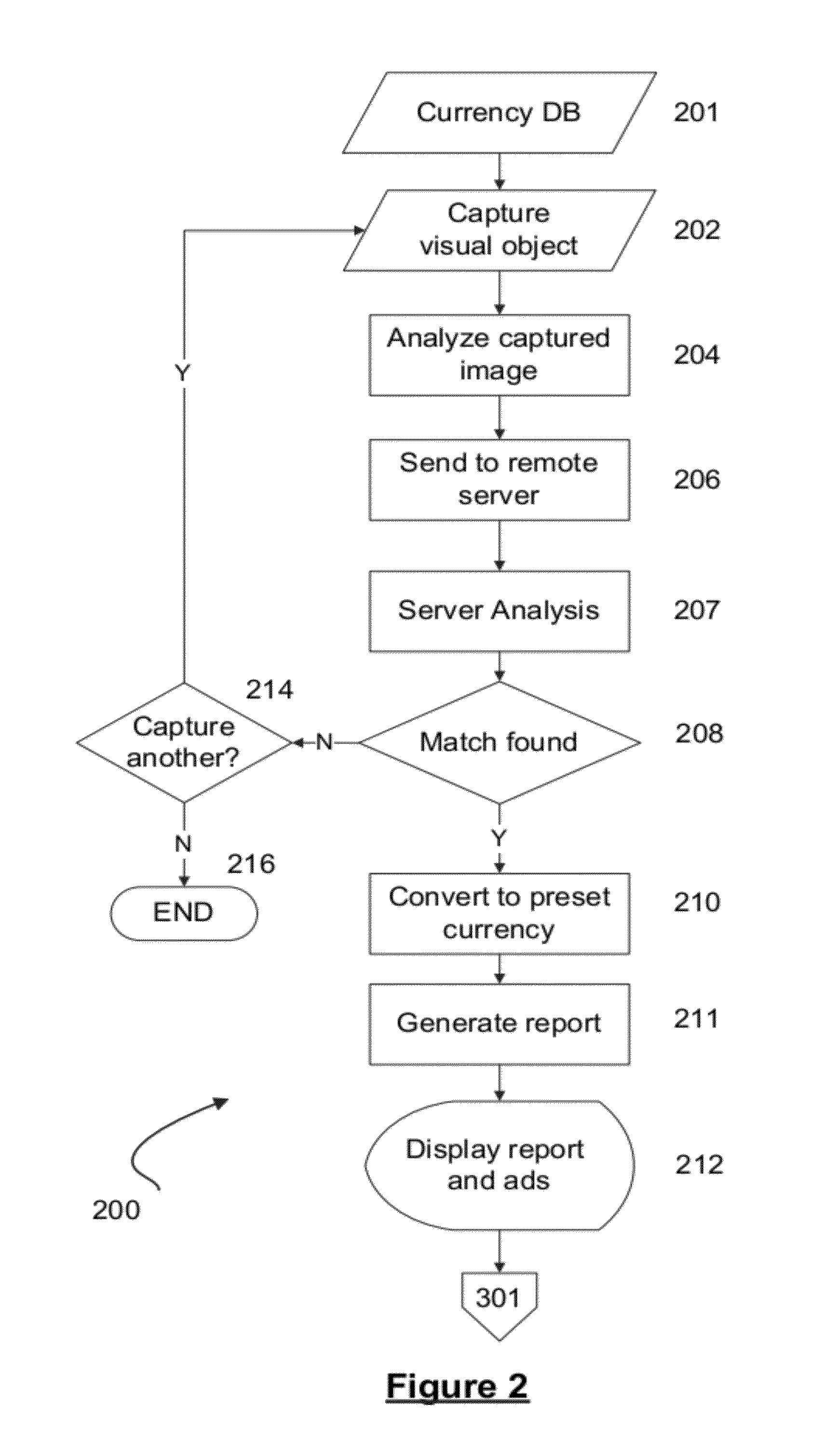

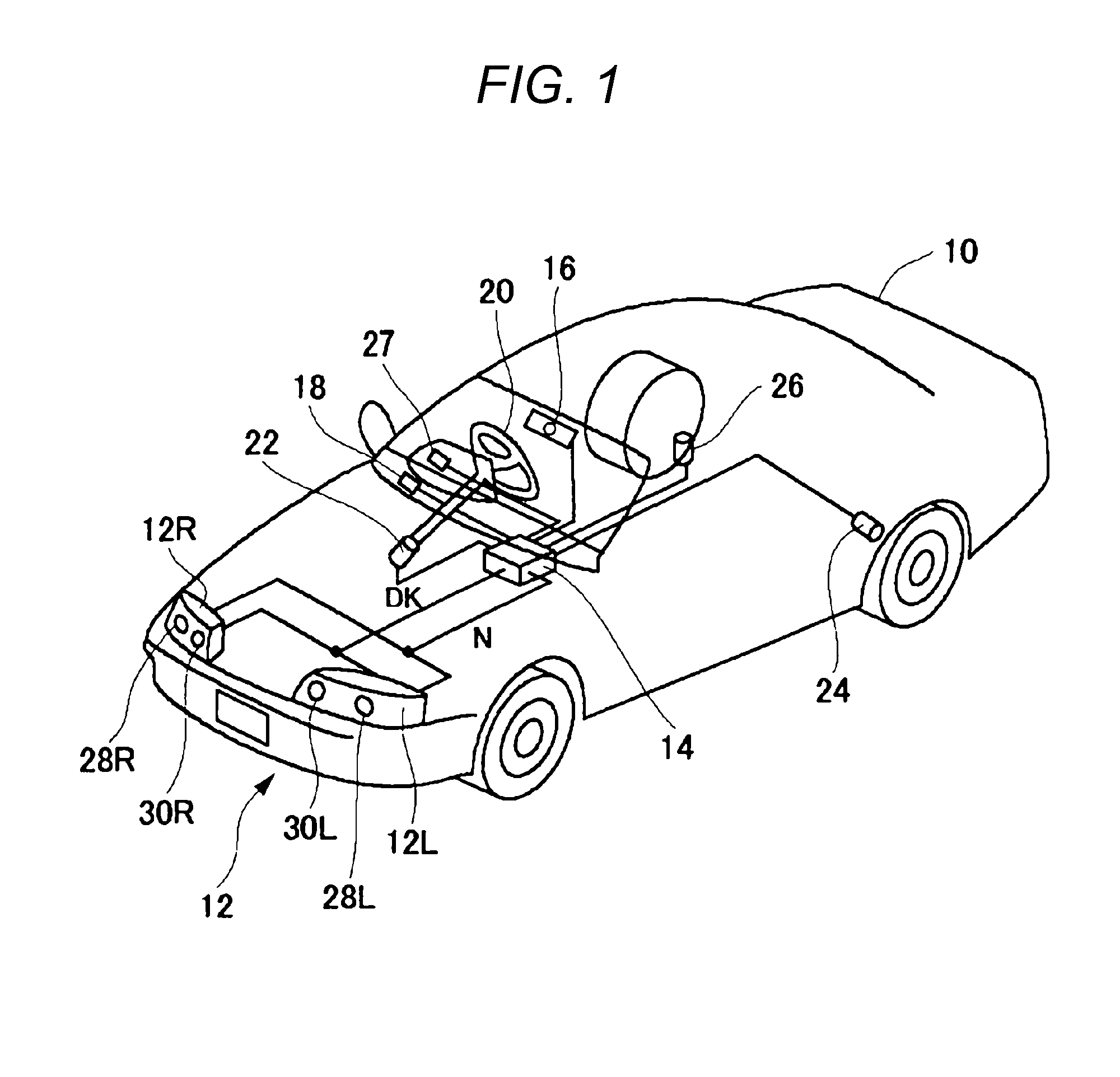

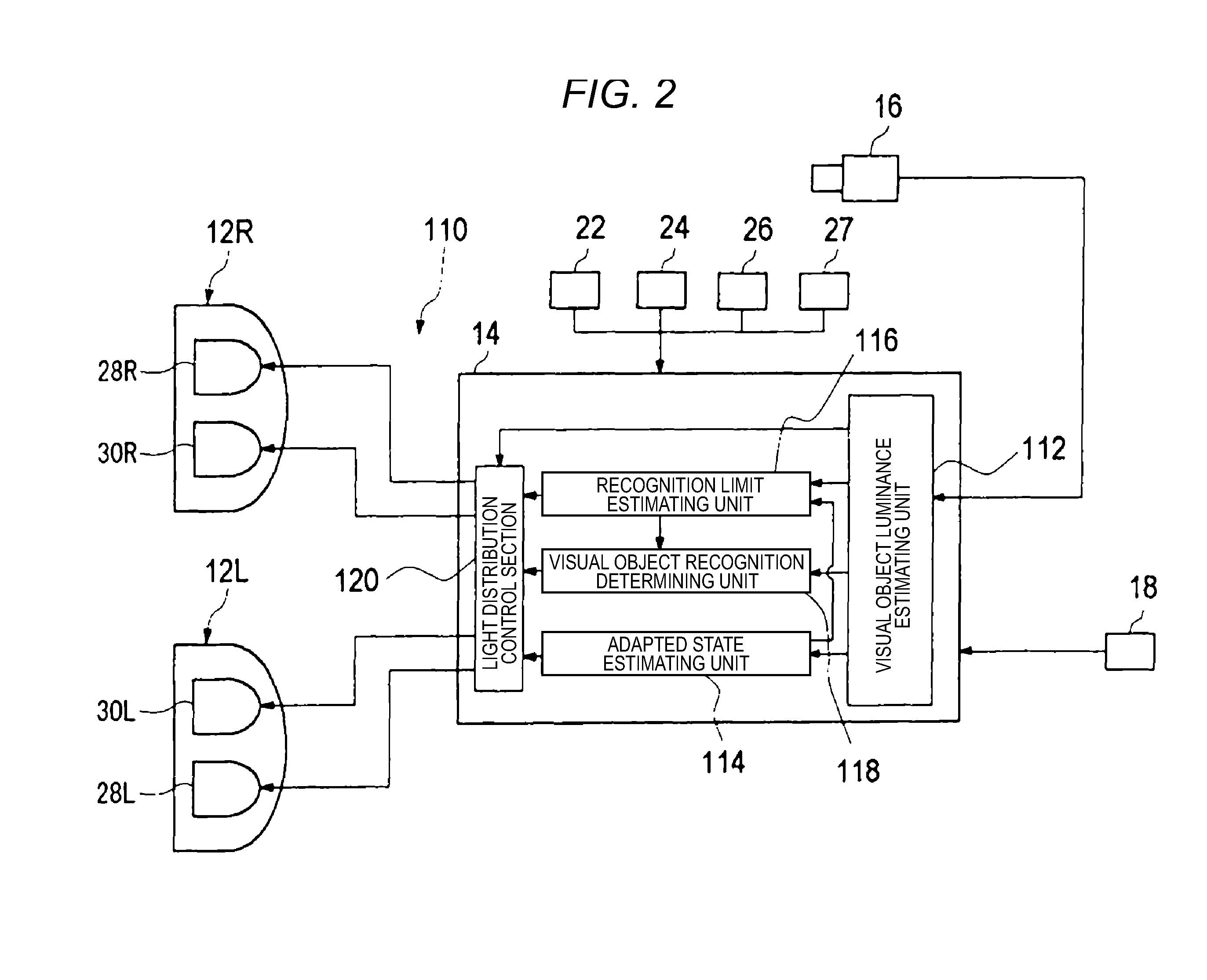

Headlamp control device and vehicle headlamp having headlamp control device

Status: Inactive | Patent No.: US20100052550A1 | Tags: Improve performance; Non-electric lighting; Vehicle headlamps; Pattern recognition; Distribution control

A headlamp control device is provided. The device includes a visual object luminance unit, an adapted state unit, a recognition limit unit, a visual object recognition unit, and a light distribution control unit. The visual object luminance unit estimates a visual object luminance based on information about a vehicle forward captured image. The adapted state unit estimates an adaptation luminance based on the information about the vehicle forward captured image. The recognition limit unit estimates a recognition limit luminance, in the visual object range, based on at least the adaptation luminance. The visual object recognition unit determines whether the visual object luminance is less than the recognition limit luminance. The light distribution control unit controls a light distribution of a headlamp unit such that the visual object luminance is included in a range of the recognition limit luminance, if the visual object luminance is less than the recognition limit luminance.

Owner:KOITO MFG CO LTD

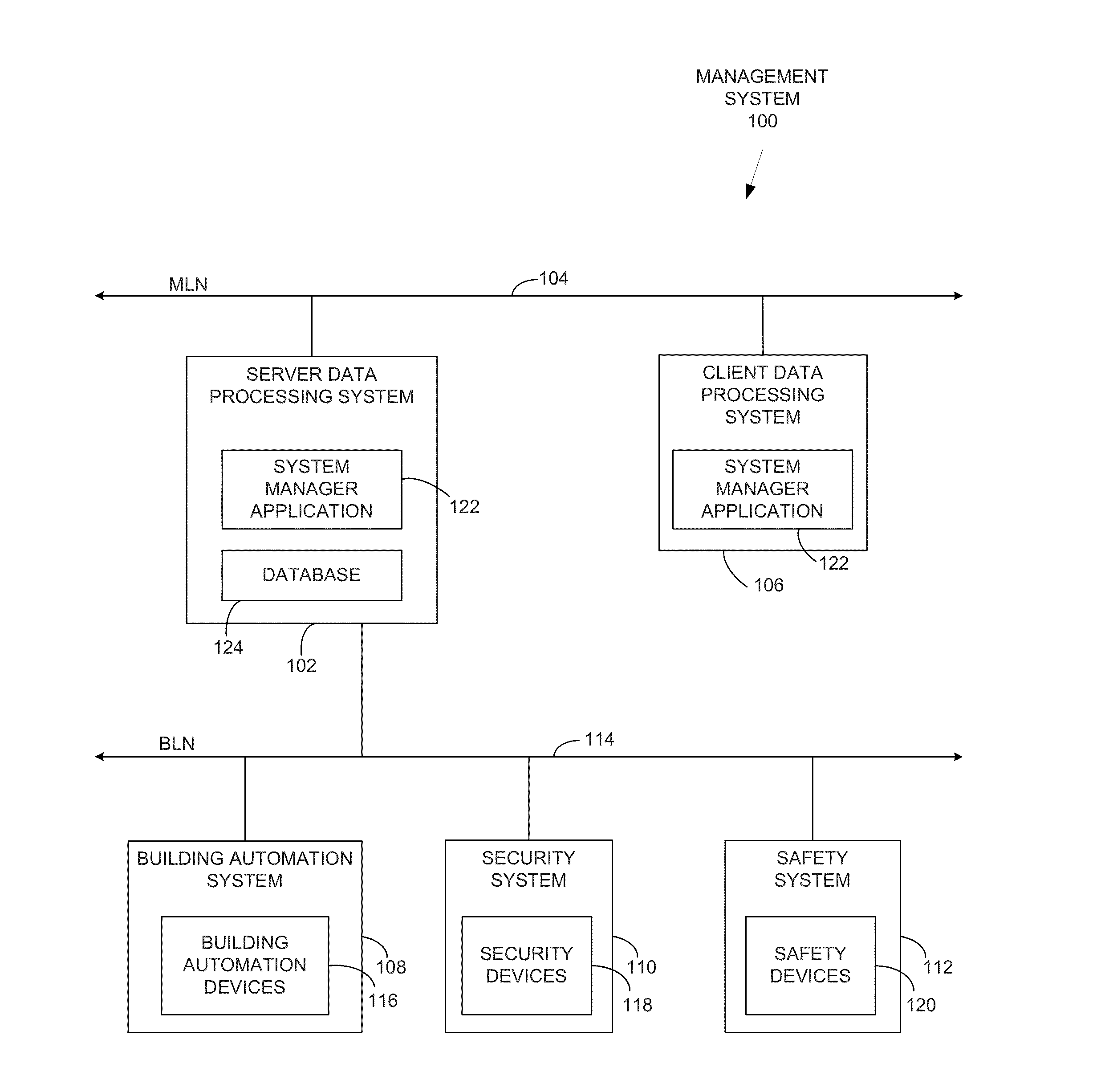

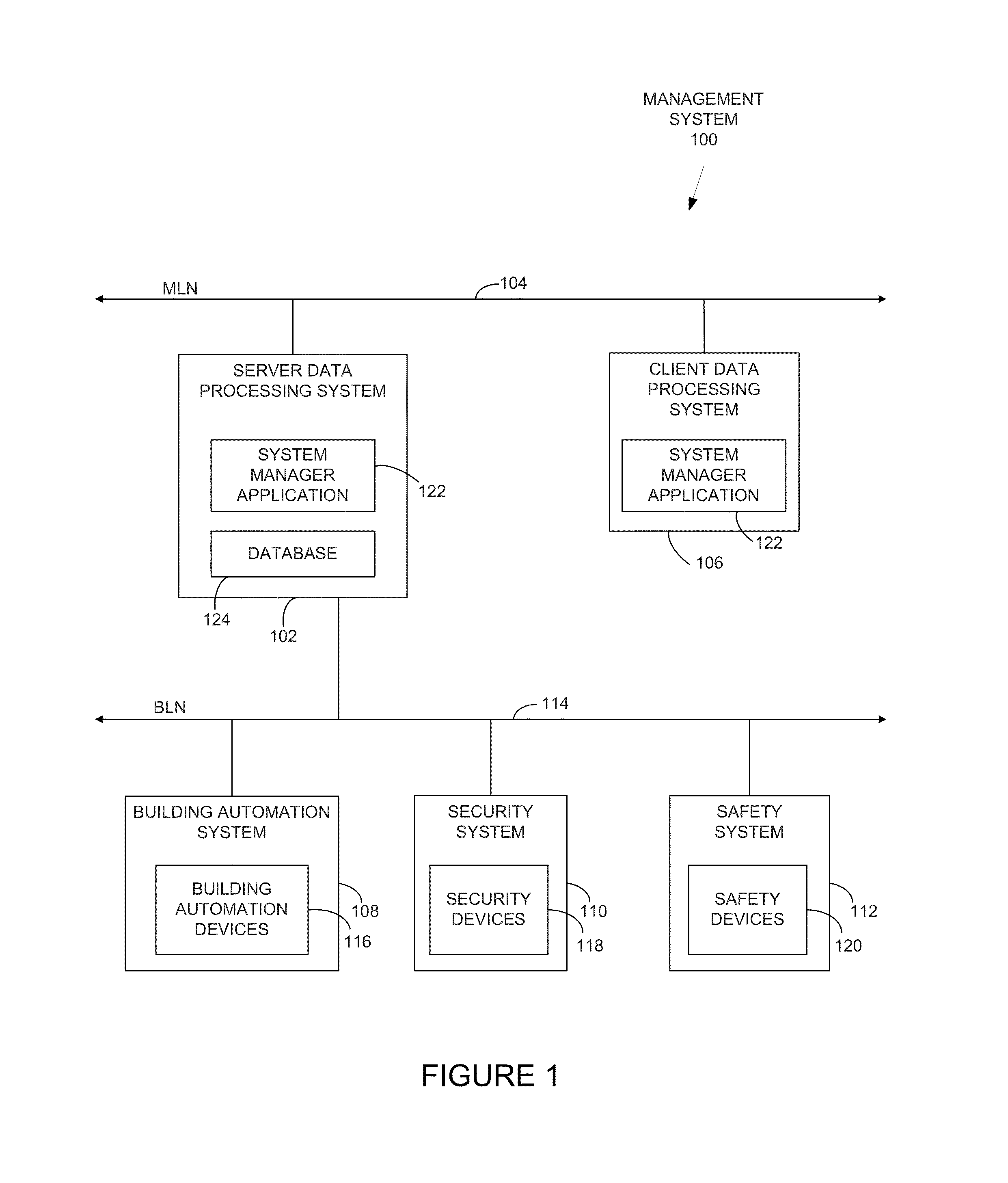

Navigation and filtering with layers and depths for building automation graphics

Management systems, methods, and mediums. A method includes identifying a set of layers and a zoom factor based on a depth of a graphic to be displayed. The set of layers comprise symbols for objects corresponding to devices in a building managed by the management system. The method includes identifying a number of visible objects from the objects of the symbols in the identified set of layers based on the zoom factor. The method includes identifying a state of a device represented by a visual object in the number of visible objects from the management system communicably coupled with the devices. Additionally, the method includes generating a display for the depth. The display includes the identified set of layers, a symbol for each of the number of visible objects in the identified set of layers, and a graphical indication of the state of the device.

Owner:SIEMENS SWITZERLAND

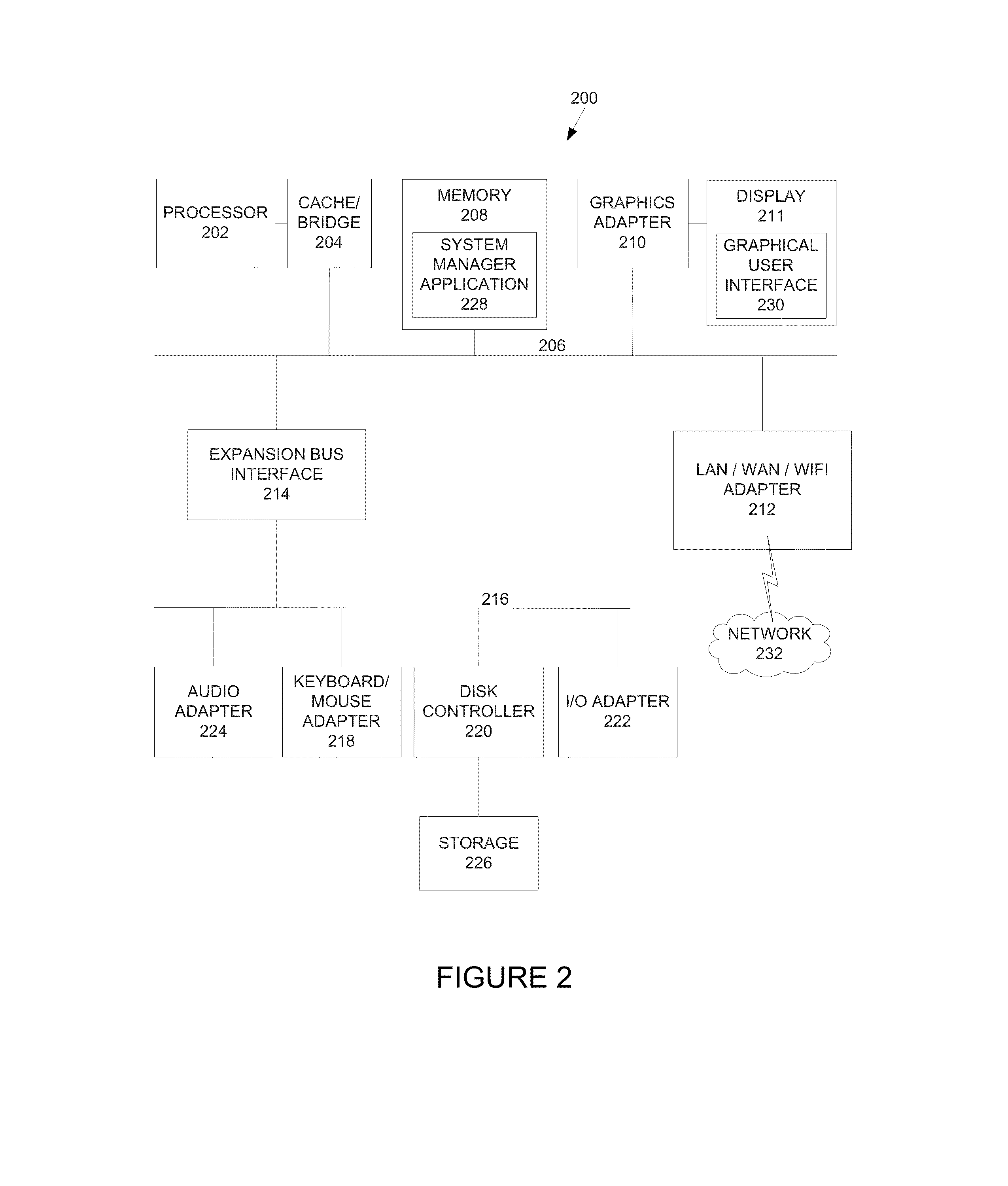

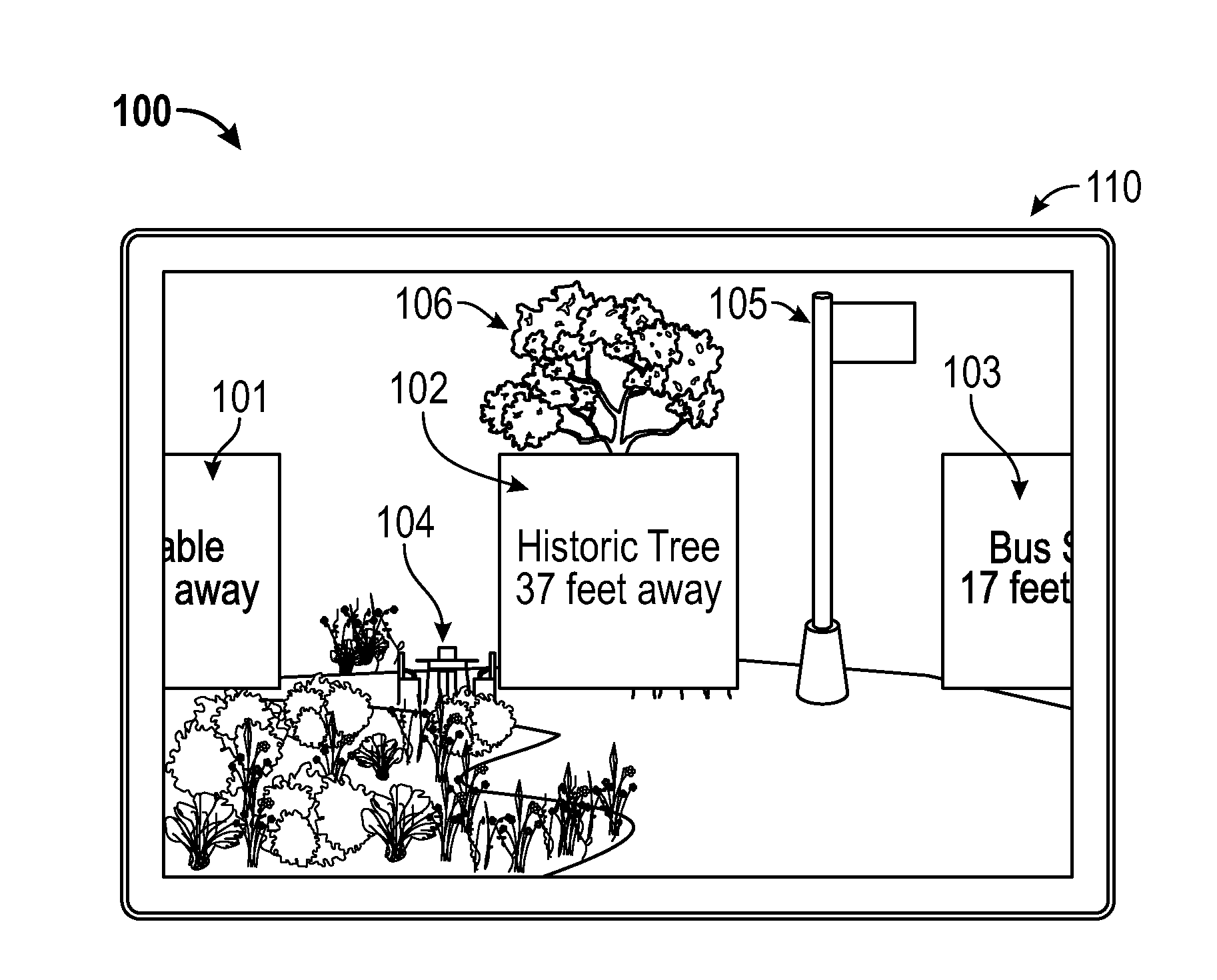

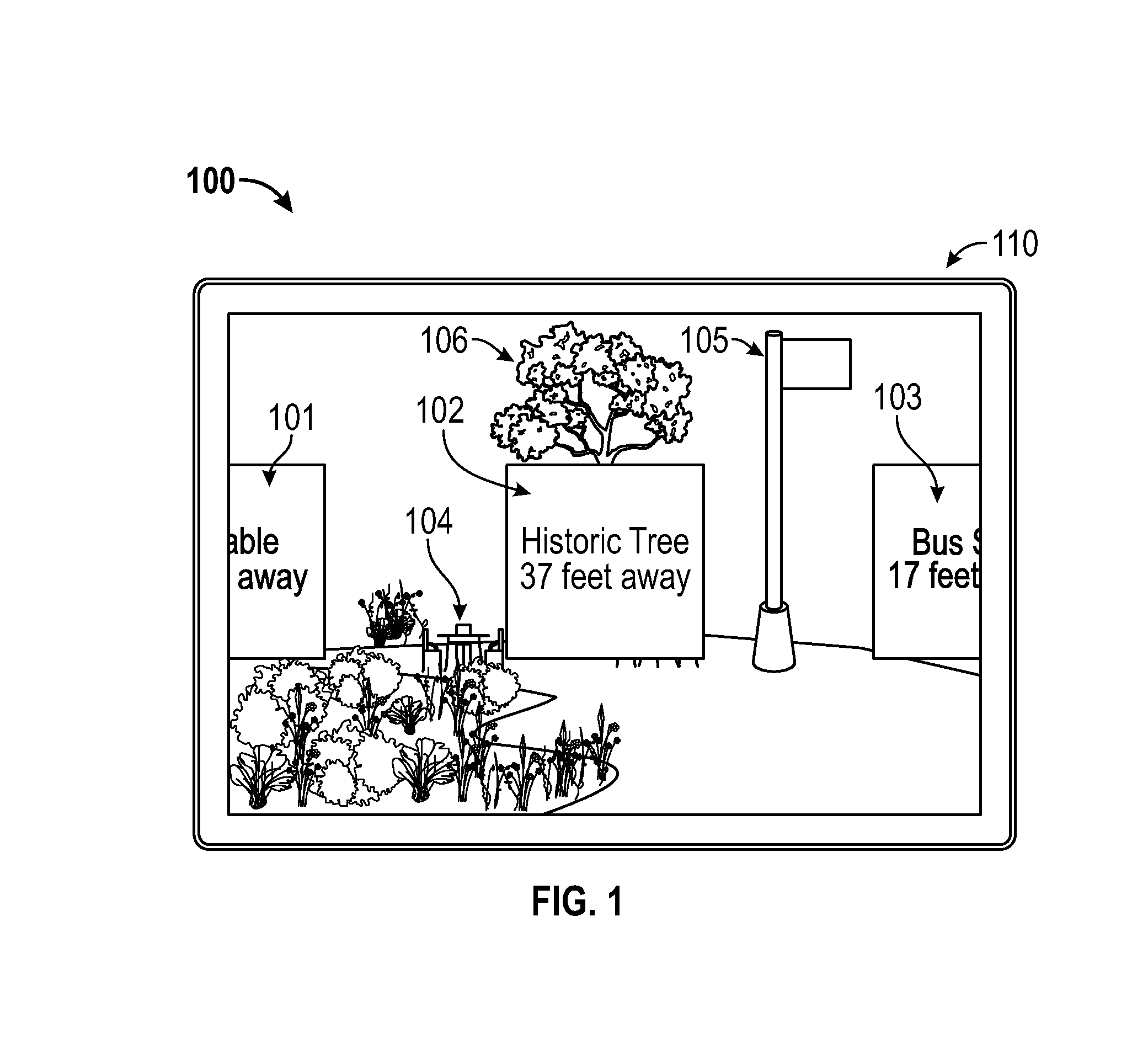

Augmented reality interface

Status: Inactive | Patent No.: US20140002443A1 | Tags: Great realism; Improve the display effect; Cathode-ray tube indicators; 3D-image rendering; Computer graphics (images); Visual Objects

Methods for augmenting a view of a physical environment with computer-generated sensory input are provided. In one aspect, a method includes receiving visual data providing for display an image of a physical three-dimensional environment and orientation data indicating an orientation of a device within the physical environment, and generating a simulated three-dimensional environment. The method also includes providing the image of the physical environment for display within the simulated environment, and providing at least one computer-generated visual object within the simulated environment for overlaying on the displayed image of the physical environment. The computer-generated visual object is displayed using perspective projection. Systems and machine-readable storage media are also provided.

Owner:BLACKBOARD INC

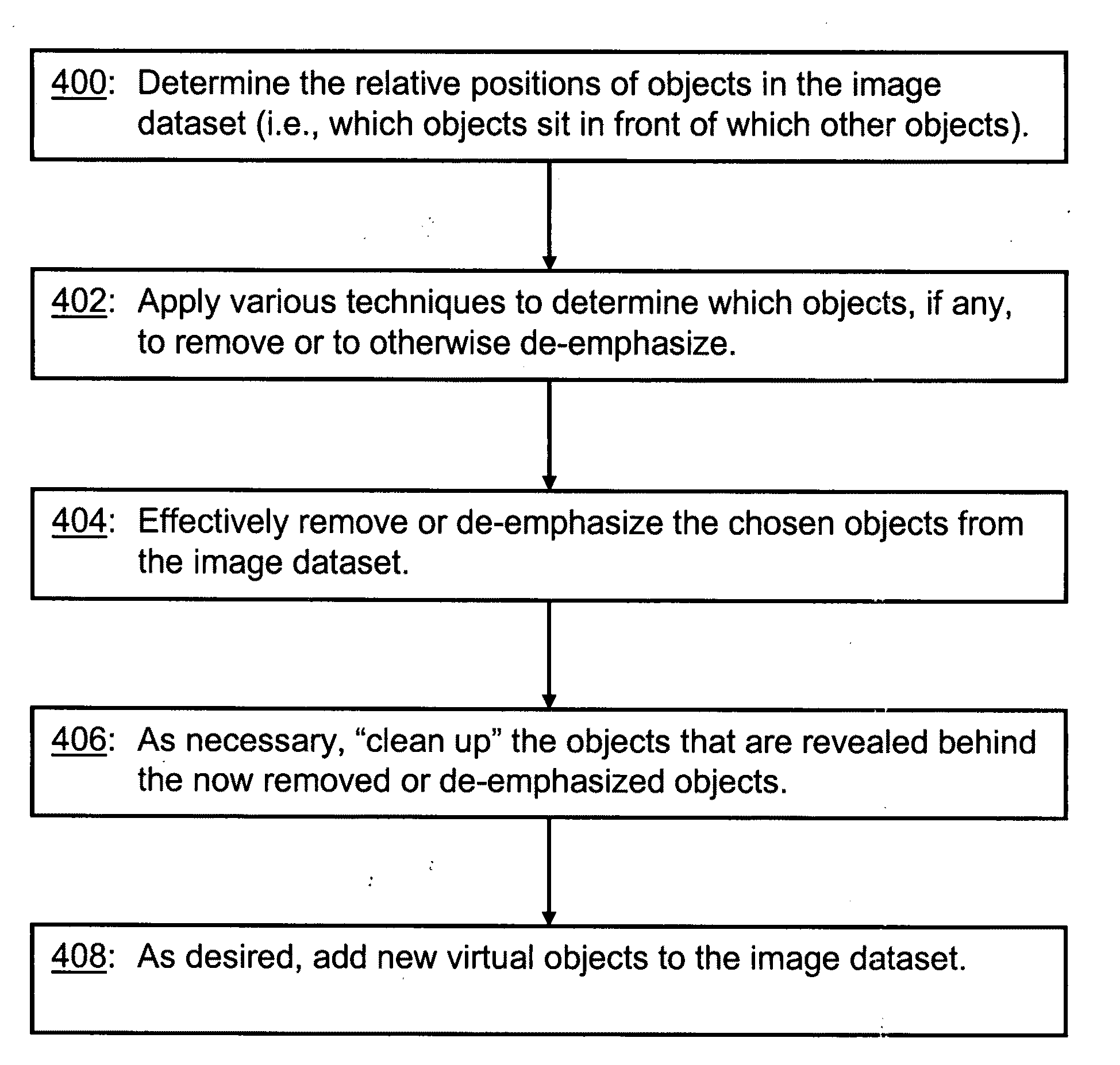

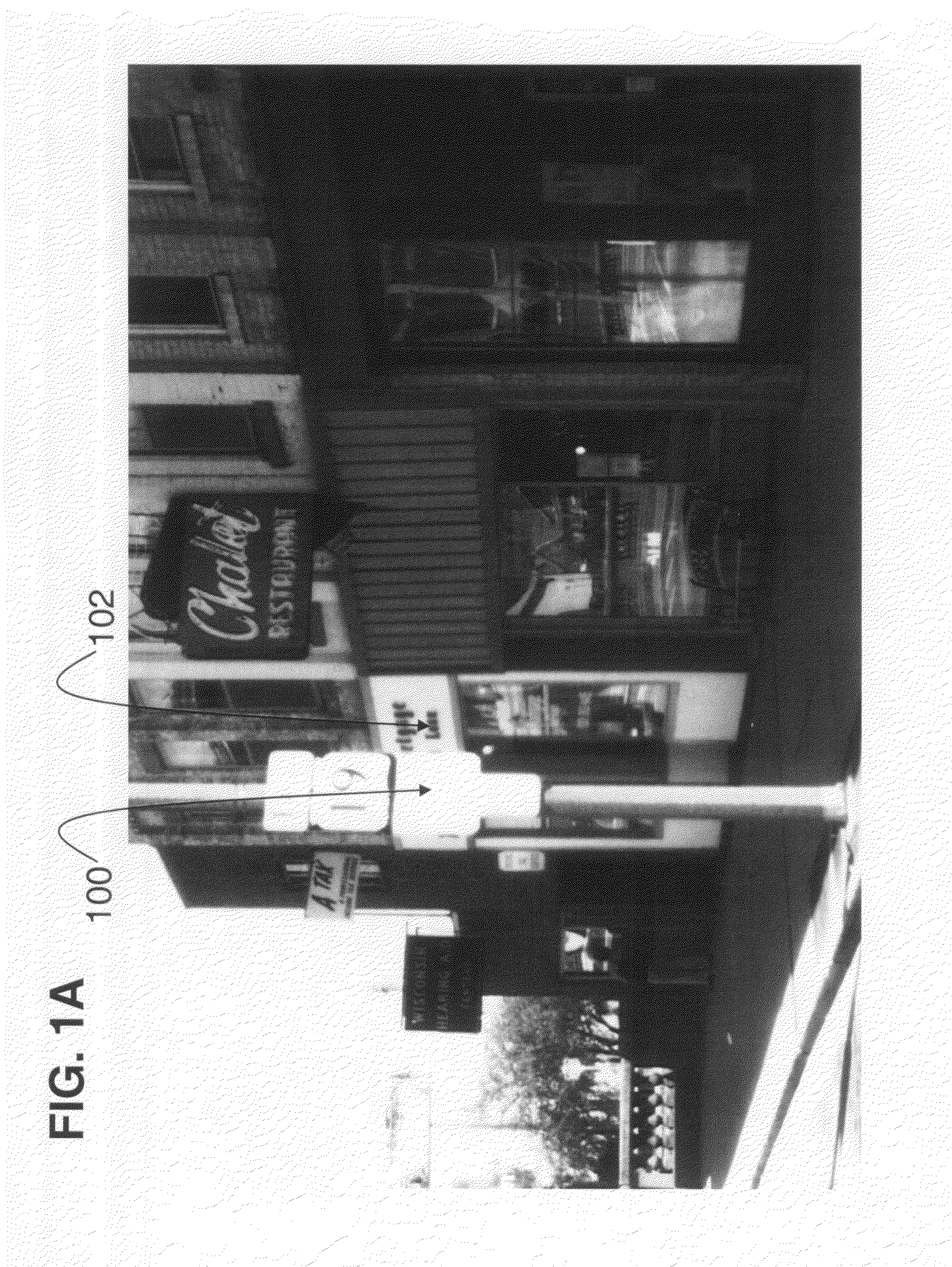

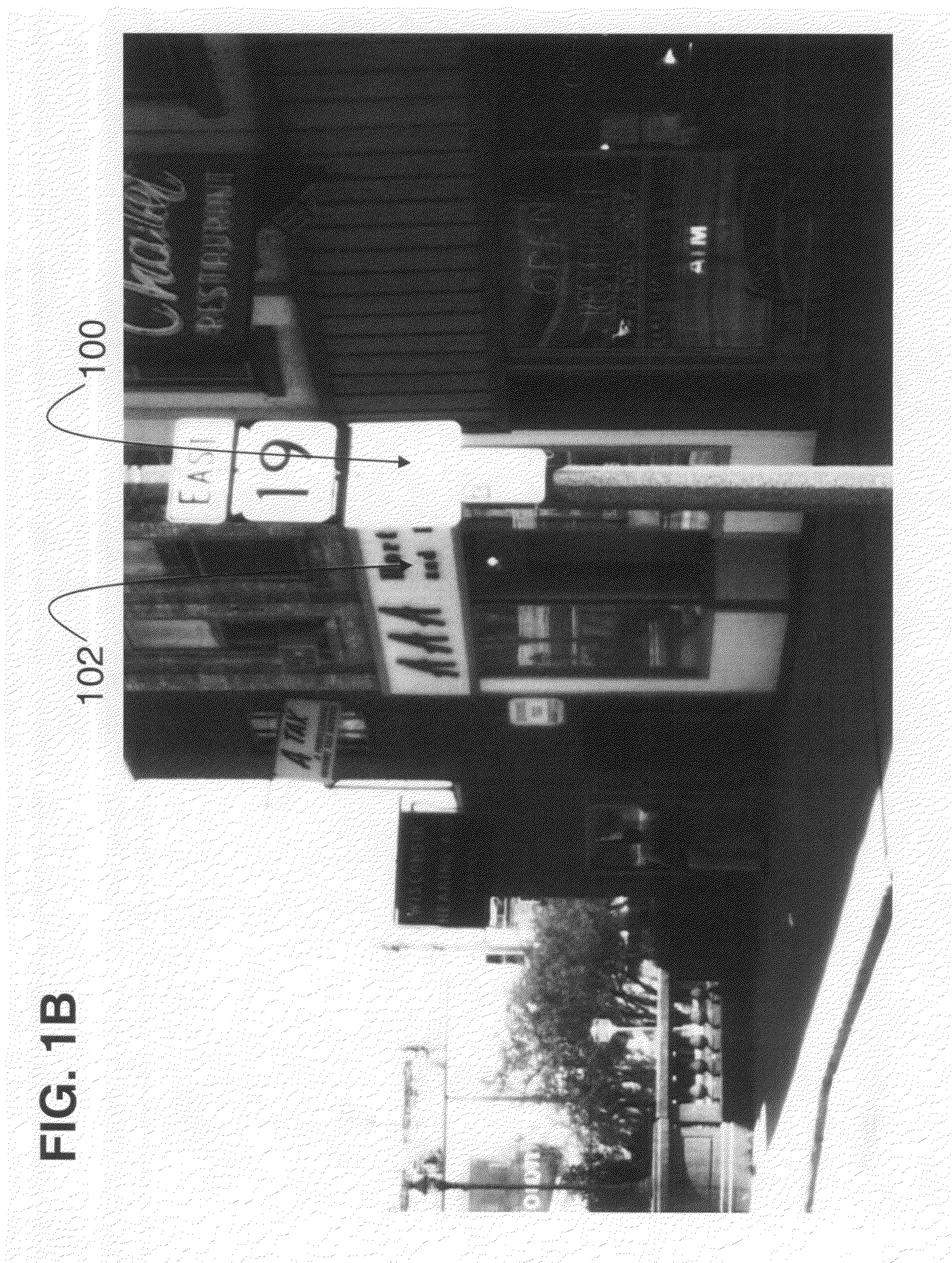

System and method for revealing occluded objects in an image dataset

Disclosed are a system and method for identifying objects in an image dataset that occlude other objects and for transforming the image dataset to reveal the occluded objects. In some cases, occluding objects are identified by processing the image dataset to determine the relative positions of visual objects. Occluded objects are then revealed by removing the occluding objects from the image dataset or by otherwise de-emphasizing the occluding objects so that the occluded objects are seen behind them. A visual object may be removed simply because it occludes another object, because of privacy concerns, or because it is transient. When an object is removed or de-emphasized, the objects that were behind it may need to be “cleaned up” so that they show up well. To do this, information from multiple images can be processed using interpolation techniques. The image dataset can be further transformed by adding objects to the images.

Owner:HERE GLOBAL BV

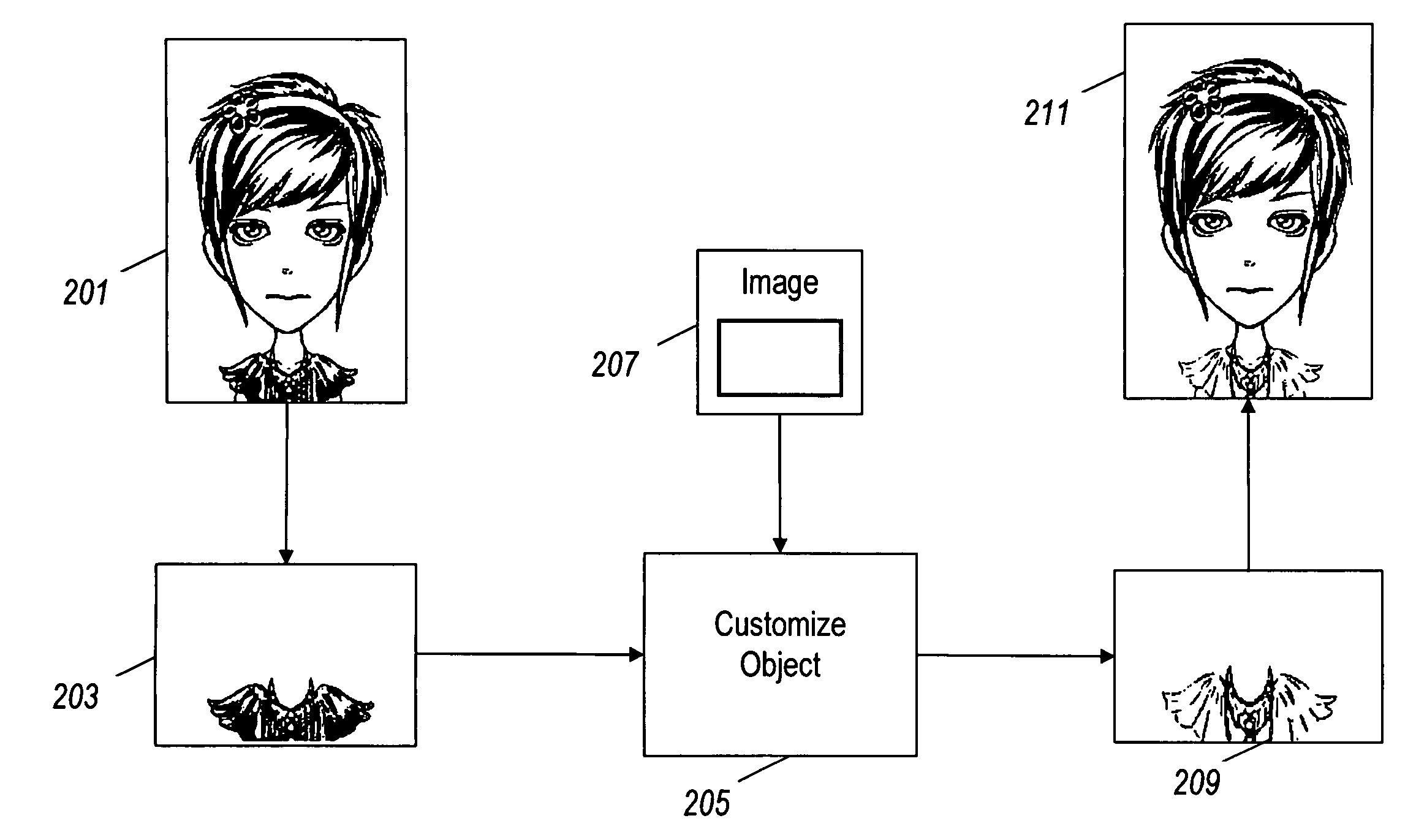

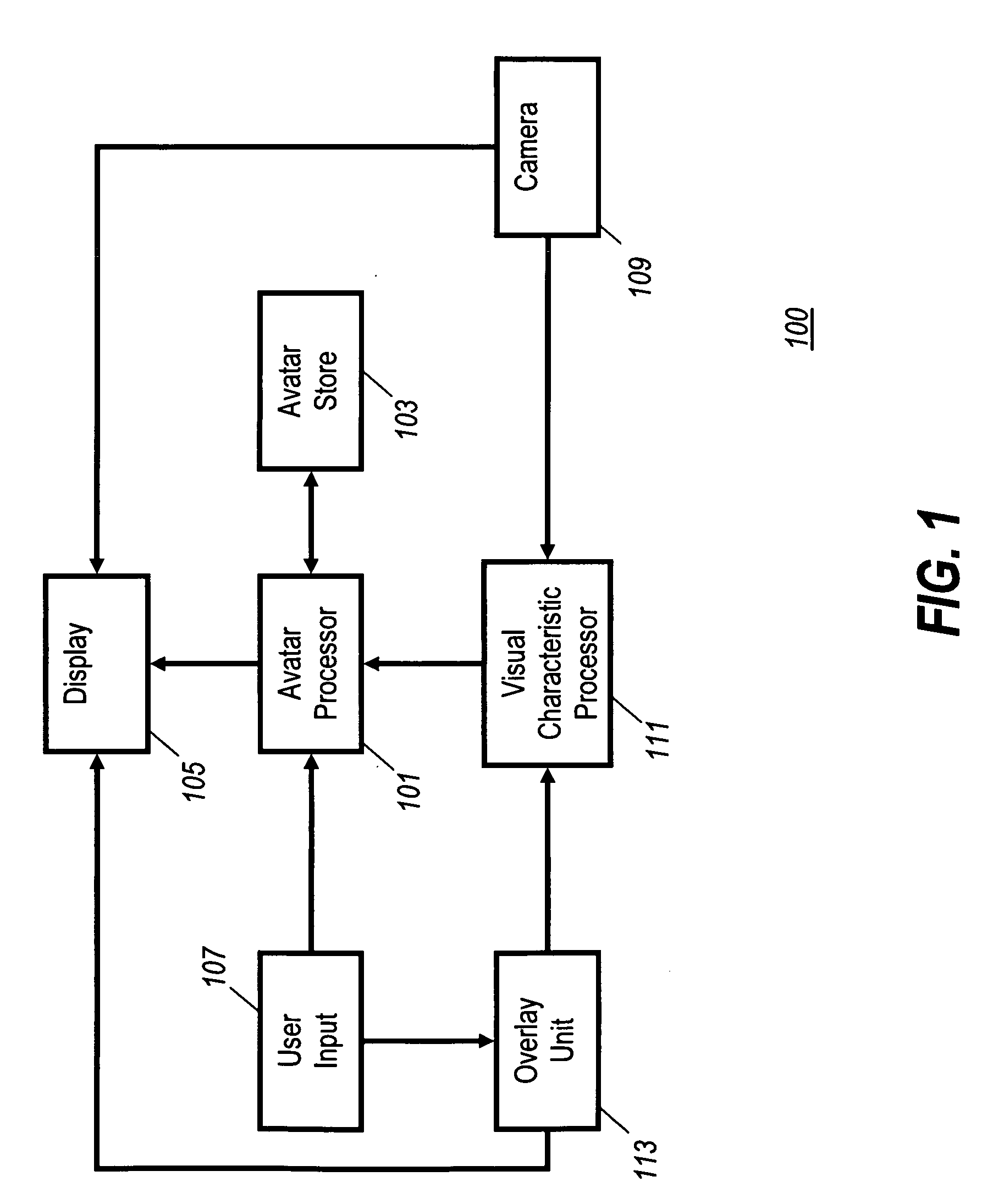

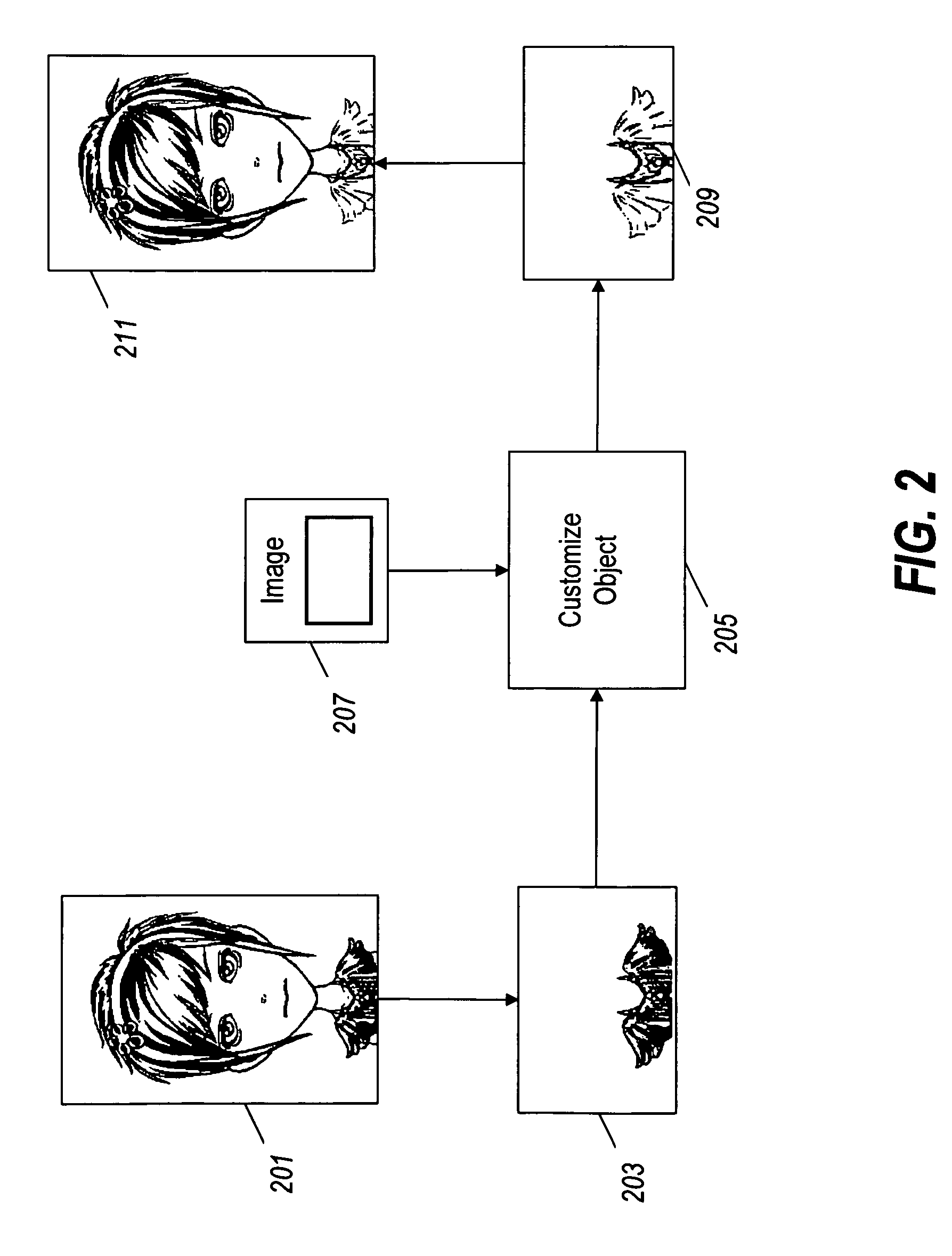

Avatar for a portable device

Status: Inactive | Patent No.: US20090251484A1 | Tags: Improved and facilitated modification; Improve personalization; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Visual Objects; Data memory

A portable device comprises a data storage for storing avatar data defining a user avatar. The user avatar is formed by a plurality of visual objects. The portable device further comprises a camera for capturing an image. A visual characteristic processor is arranged to determine a first visual characteristic from the image and an avatar processor is arranged to set an object visual characteristic of an object of the plurality of visual objects in response to the first visual characteristic. The invention may allow improved customization of user avatars. For example, a color of an element of a user avatar may be adapted to a color of a real-life object simply by a user taking a picture thereof.

Owner:MOTOROLA MOBILITY LLC

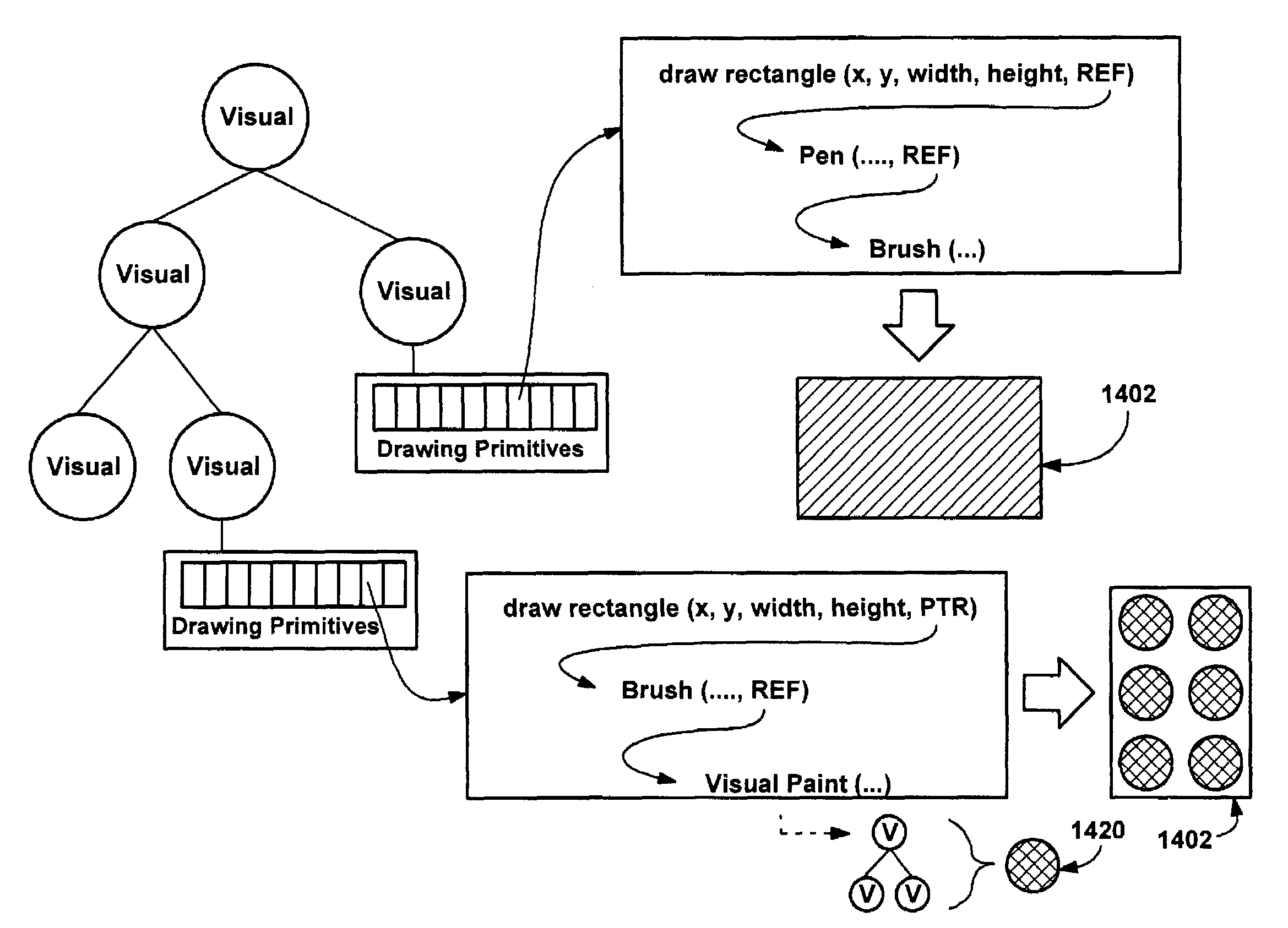

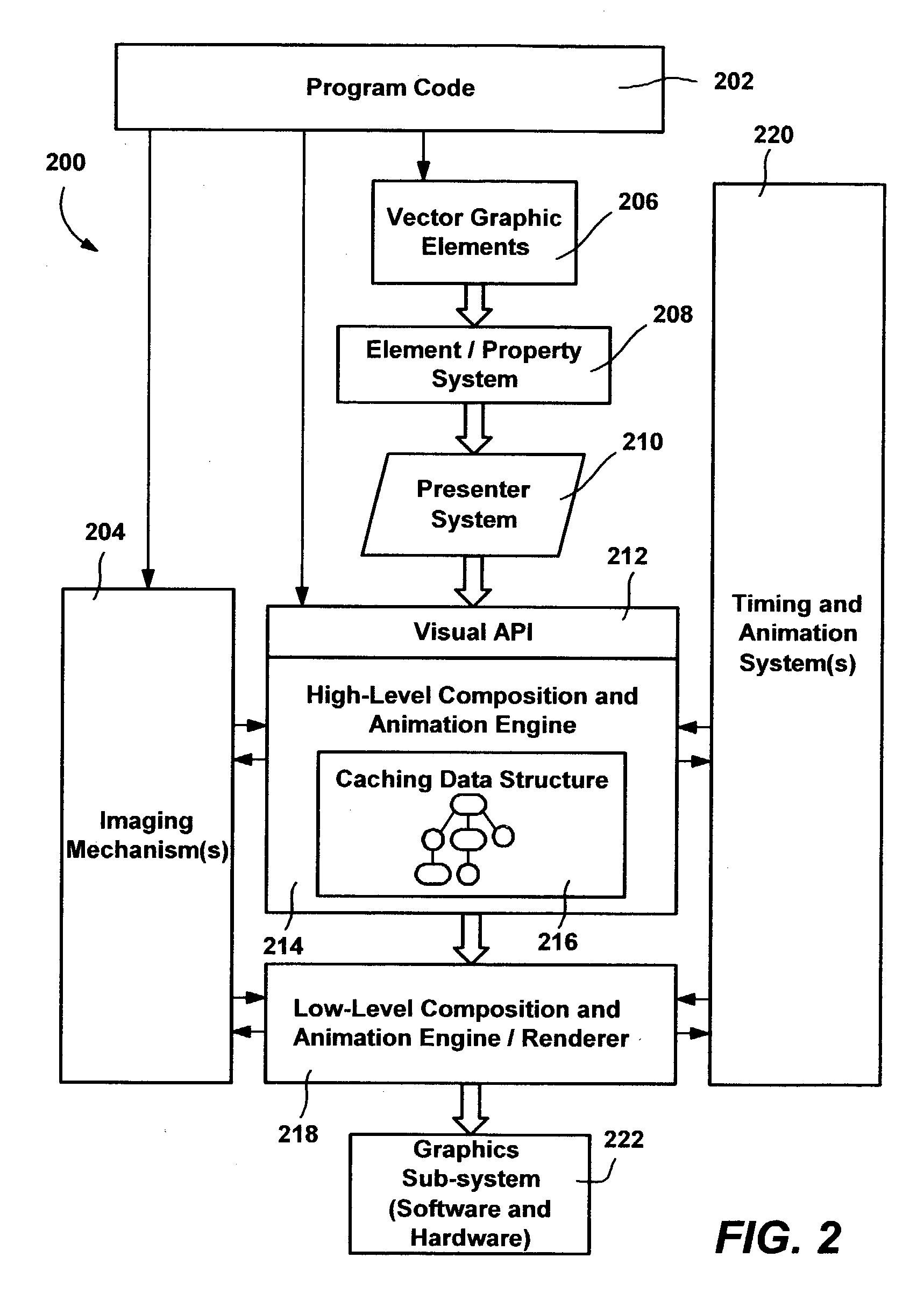

Visual and scene graph interfaces

A method and system implemented in an application programming interface (API) and an object model allows program code developers to interface in a consistent manner with a scene graph data structure to output graphics. Via the interfaces, program code writes drawing primitives such as geometry data, image data, animation data and other data to visuals that represent a drawing surface, including validation visual objects, drawing visual objects and surface visual objects. The code can also specify transform, clipping and opacity properties on visuals, and add child visuals to other visuals to build up a hierarchical scene graph. A visual manager traverses the scene graph to provide rich graphics data to lower-level graphics components.

Owner:MICROSOFT TECH LICENSING LLC

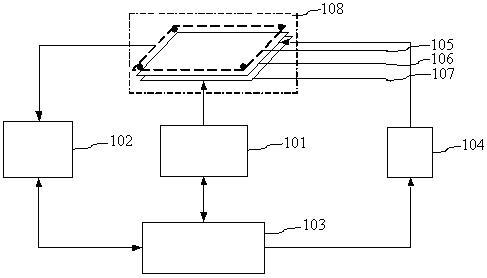

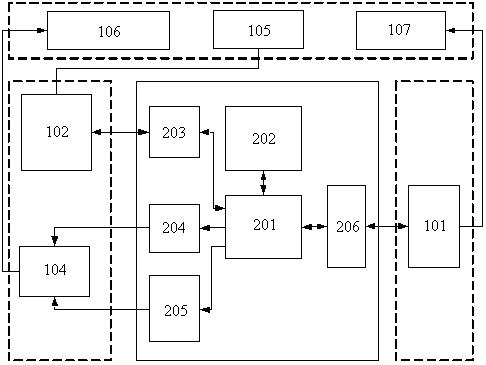

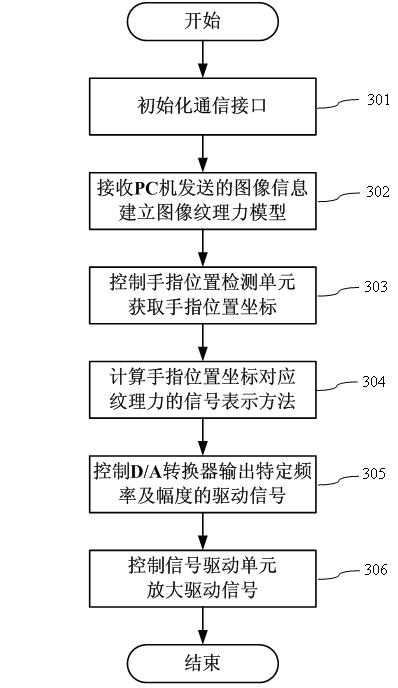

Touch representation device based on electrostatic force

Status: Inactive | Patent No.: CN102662477A | Tags: Realize the function of tactile reproduction; Low cost; Input/output for user-computer interaction; Graph reading; Visual Objects; Personal computer

The invention relates to a touch representation device based on electrostatic force, belonging to a touch representation device. The touch representation device comprises an electrostatic force touch representation interactive screen, a finger trace tracking unit, an electrostatic force touch representation controller, a signal driving unit and a PC (Personal Computer). The finger trace tracking unit detects a finger position, and maps a touch force representation method of a visual object at the position; an electric signal parameter for representing a touch force is generated according to the corresponding relation between an electric signal and the touch force; a driving signal is output; the driving signal is amplified; and the amplified driving signal acts on the electrostatic force touch representation interactive screen to cause skin deformation of a finger so as to sense an absorption force and a repelling force, thereby realizing touch representation. The touch representation device has the advantages of low cost, high flexibility and strong applicability, and can realize the touch representation function on a small mobile terminal.

Owner:孙晓颖

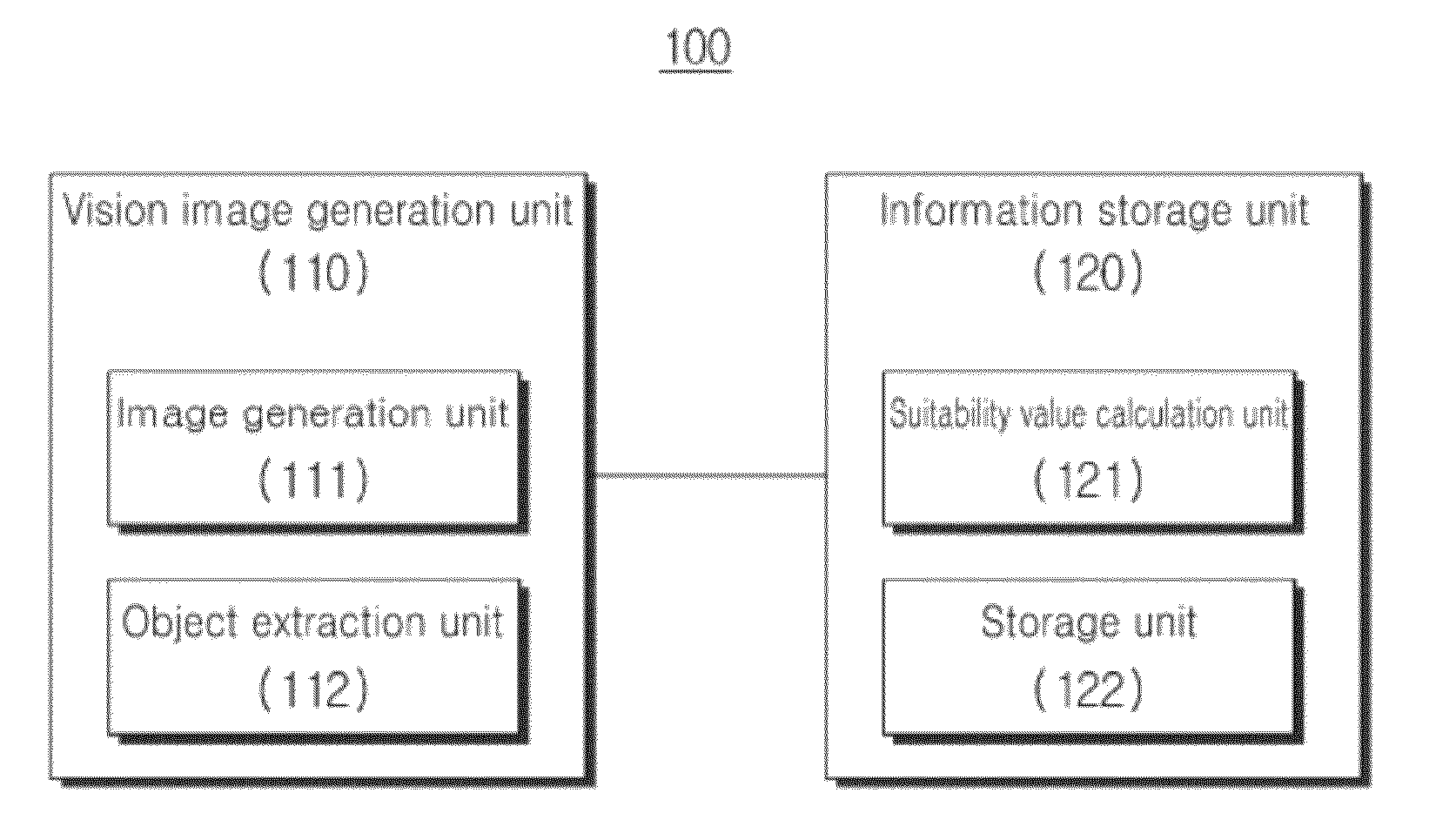

Vision image information storage system and method thereof, and recording medium having recorded program for implementing method

Status: Inactive | Patent No.: US20120087580A1 | Tags: Eliminate inconvenience; Improve system speed; Back rests; Acoustic signal devices; Viewpoints; Visual Objects

The present invention relates to a system for generating a vision image from the viewpoint of an agent in an augmented reality environment, a method thereof, and a recording medium in which a program for implementing the method is recorded. The invention provides a vision image information storage system comprising: a vision image generator which extracts visual objects from an augmented reality environment based on a predetermined agent, and generates a vision image from the viewpoint of the agent; and an information storage unit which evaluates the objects included in the generated vision image based on a predetermined purpose, and stores information on the evaluated objects.

Owner:INTELLECTUAL DISCOVERY CO LTD

Electronic automatically adjusting bidet with visual object recognition software

An electronic bidet system that uses one or more internal cameras to capture one or more images or video of a user as he or she sits on the bidet prior to use. The images or video are analyzed using object recognition technology to identify and segment / bound the types, sizes, shapes, and positions of the lower body orifices, and conditions (e.g. hemorrhoids), in the user's genital and rectal areas. Based on these analyzed images or video, the system automatically adjusts the bidet settings for the specific conditions (e.g. hemorrhoids) and types of orifices, locations of orifices, sizes of orifices, shapes of orifices, gender, body type, and weight of the user.

Owner:SMART HYGIENE INC

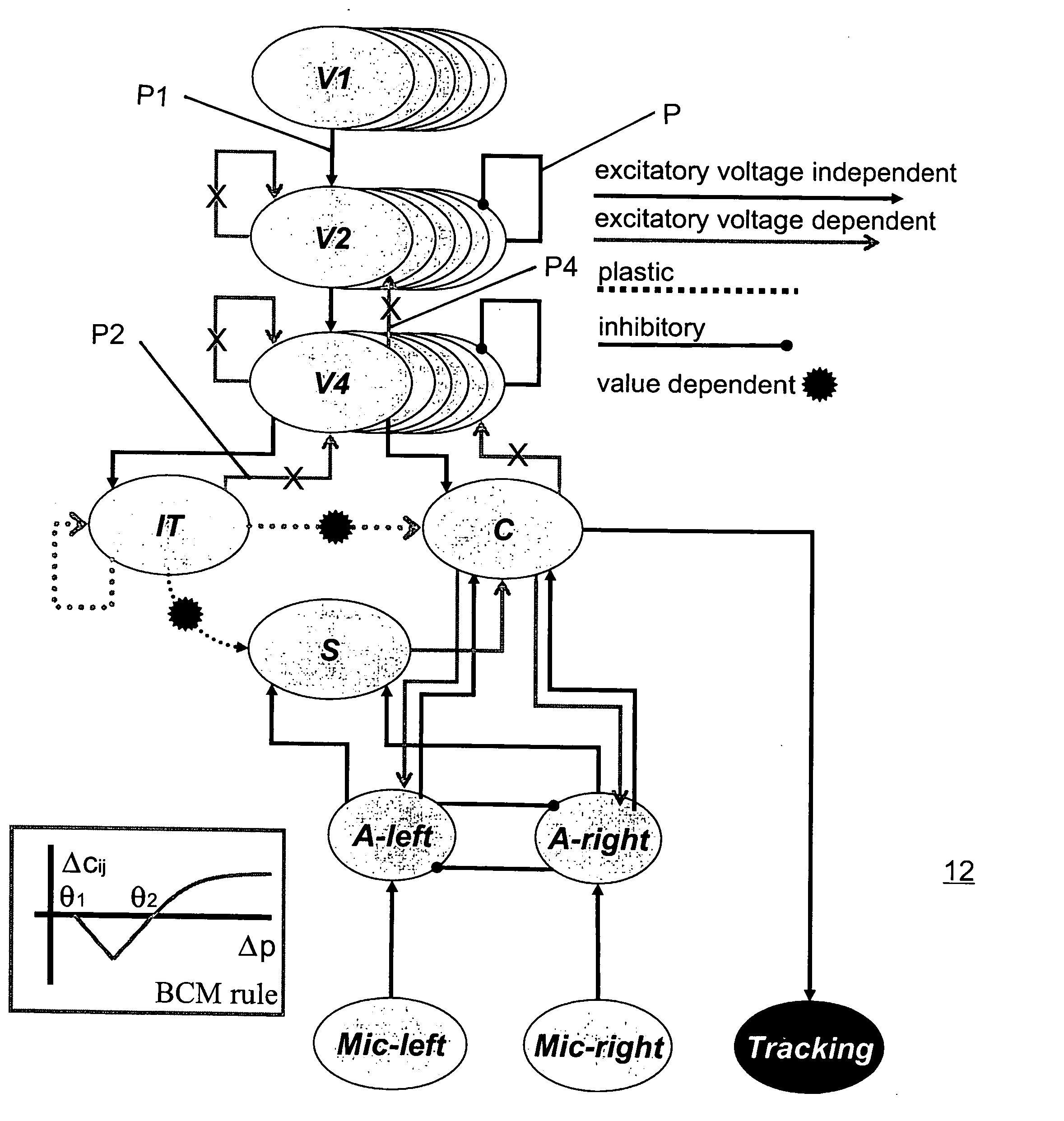

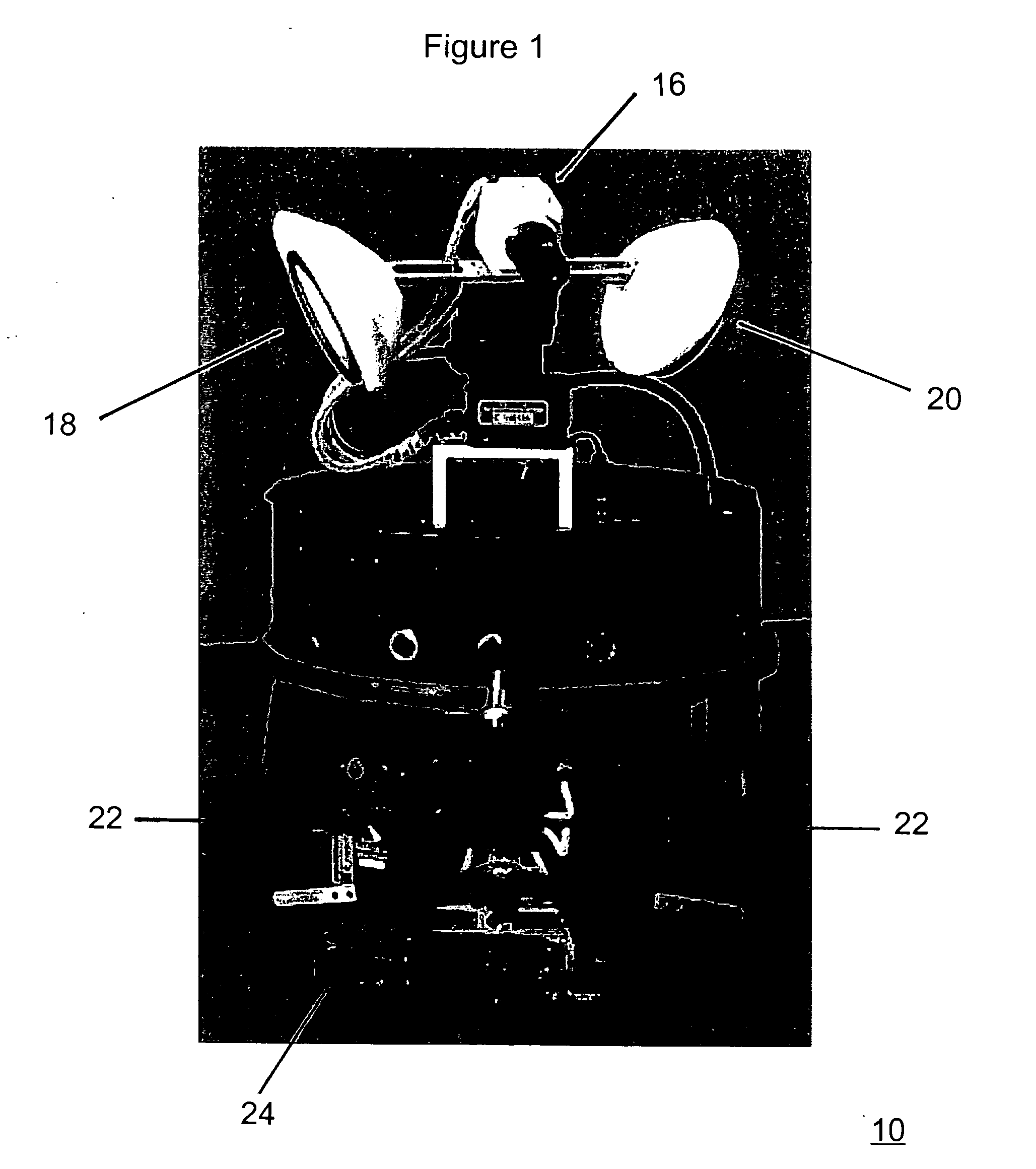

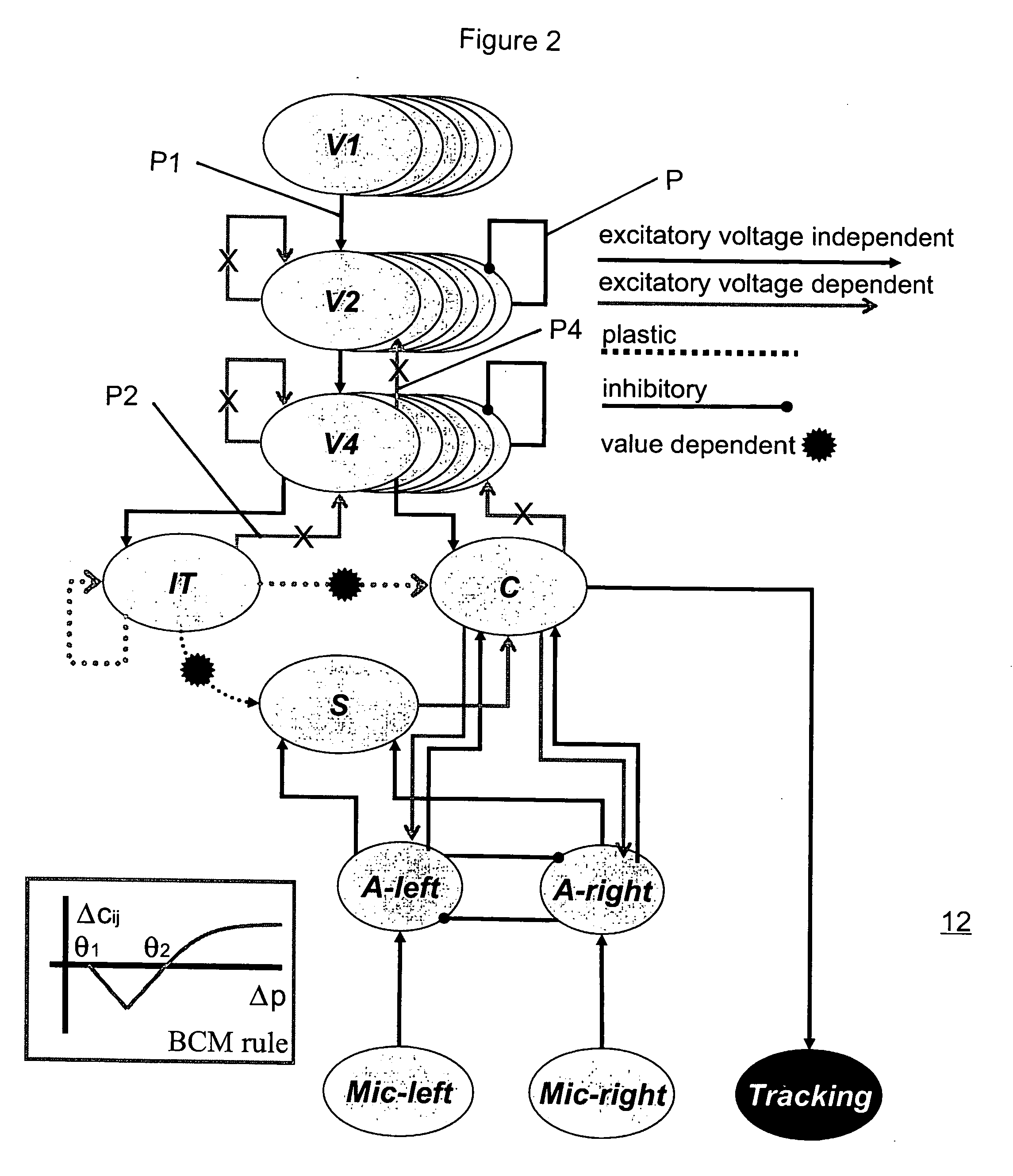

Mobile brain-based device for use in a real world environment

Status: Inactive | Patent No.: US20050261803A1 | Tags: Input/output for user-computer interaction; Character and pattern recognition; Nervous system; Visual Objects

A mobile brain-based device (BBD) includes a mobile base equipped with sensors and effectors (Neurally Organized Mobile Adaptive Device or NOMAD), which is guided by a simulated nervous system that is an analogue of cortical and sub-cortical areas of the brain required for visual processing, decision-making, reward, and motor responses. These simulated cortical and sub-cortical areas are reentrantly connected and each area contains neuronal units representing both the mean activity level and the relative timing of the activity of groups of neurons. The BBD learns to discriminate among multiple objects with shared visual features, and to associate “target” objects with innately preferred auditory cues. Globally distributed neuronal circuits that correspond to distinct objects in the visual field of NOMAD are activated. These circuits, which are constrained by a reentrant neuroanatomy and modulated by behavior and synaptic plasticity, result in successful discrimination of objects. The BBD is moveable in a rich real-world environment involving continual changes in the size and location of visual stimuli due to self-generated, or autonomous, movement, and shows that reentrant connectivity and dynamic synchronization provide an effective mechanism for binding the features of visual objects so as to reorganize object features such as color, shape and motion while distinguishing distinct objects in the environment.

Owner:NEUROSCI RES FOUND

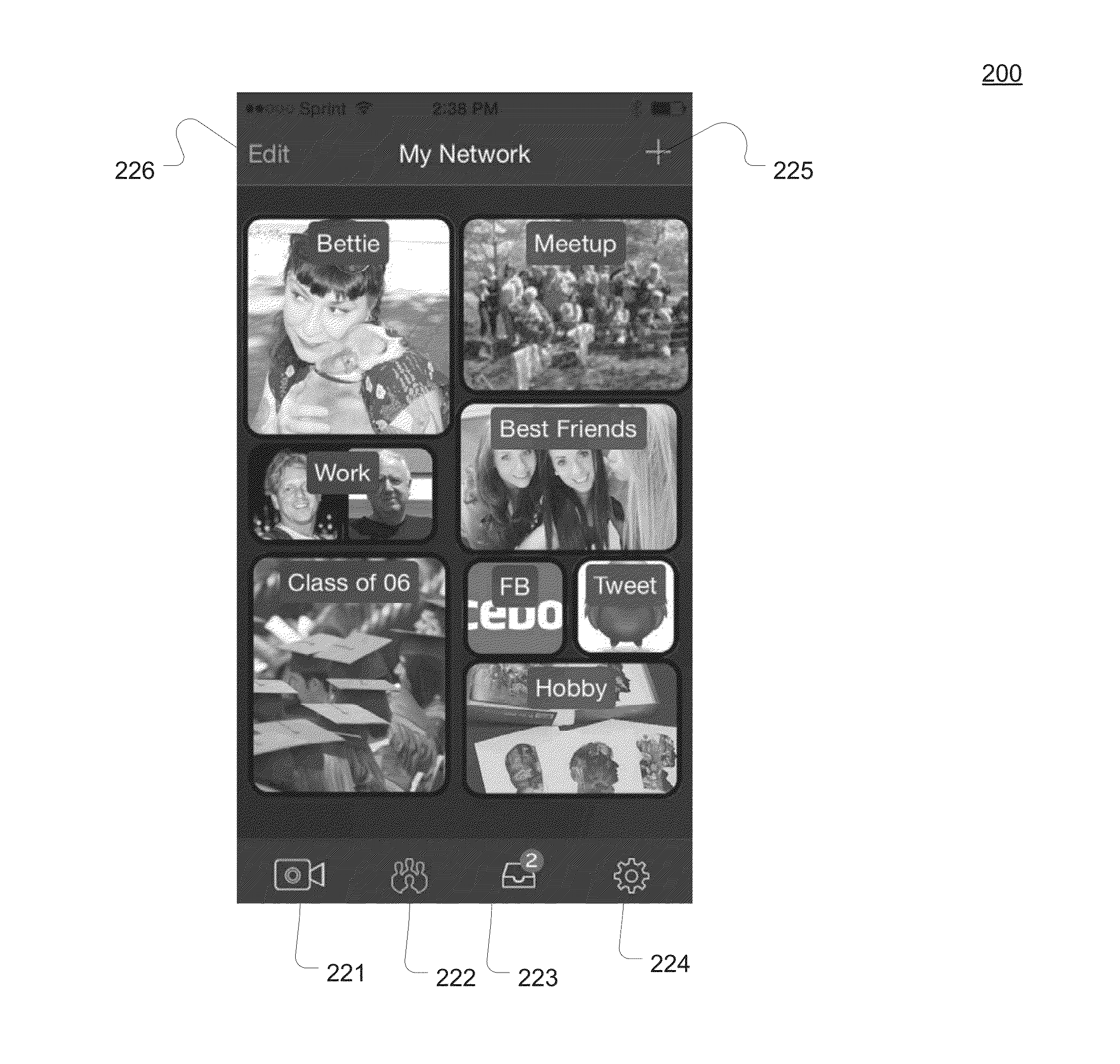

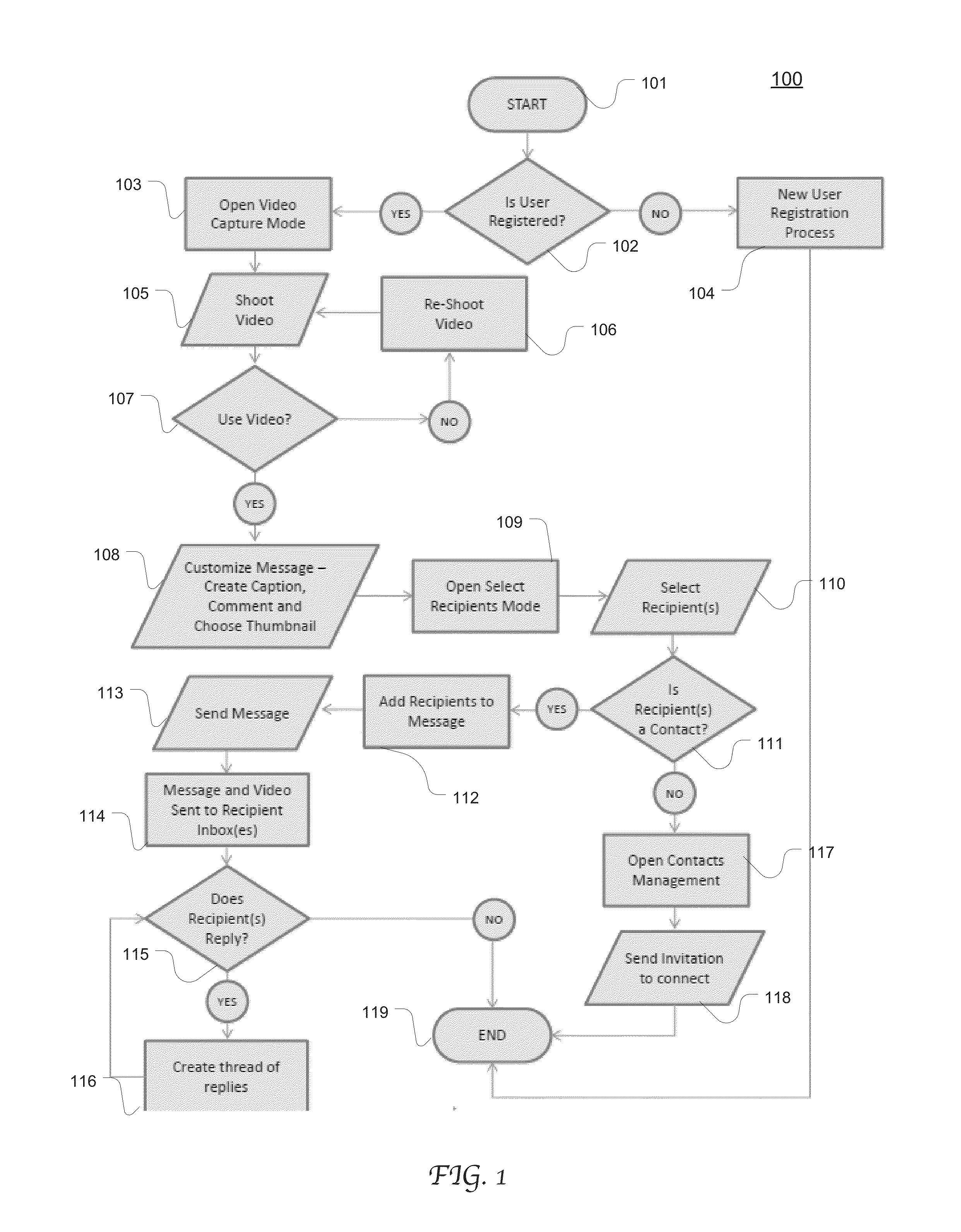

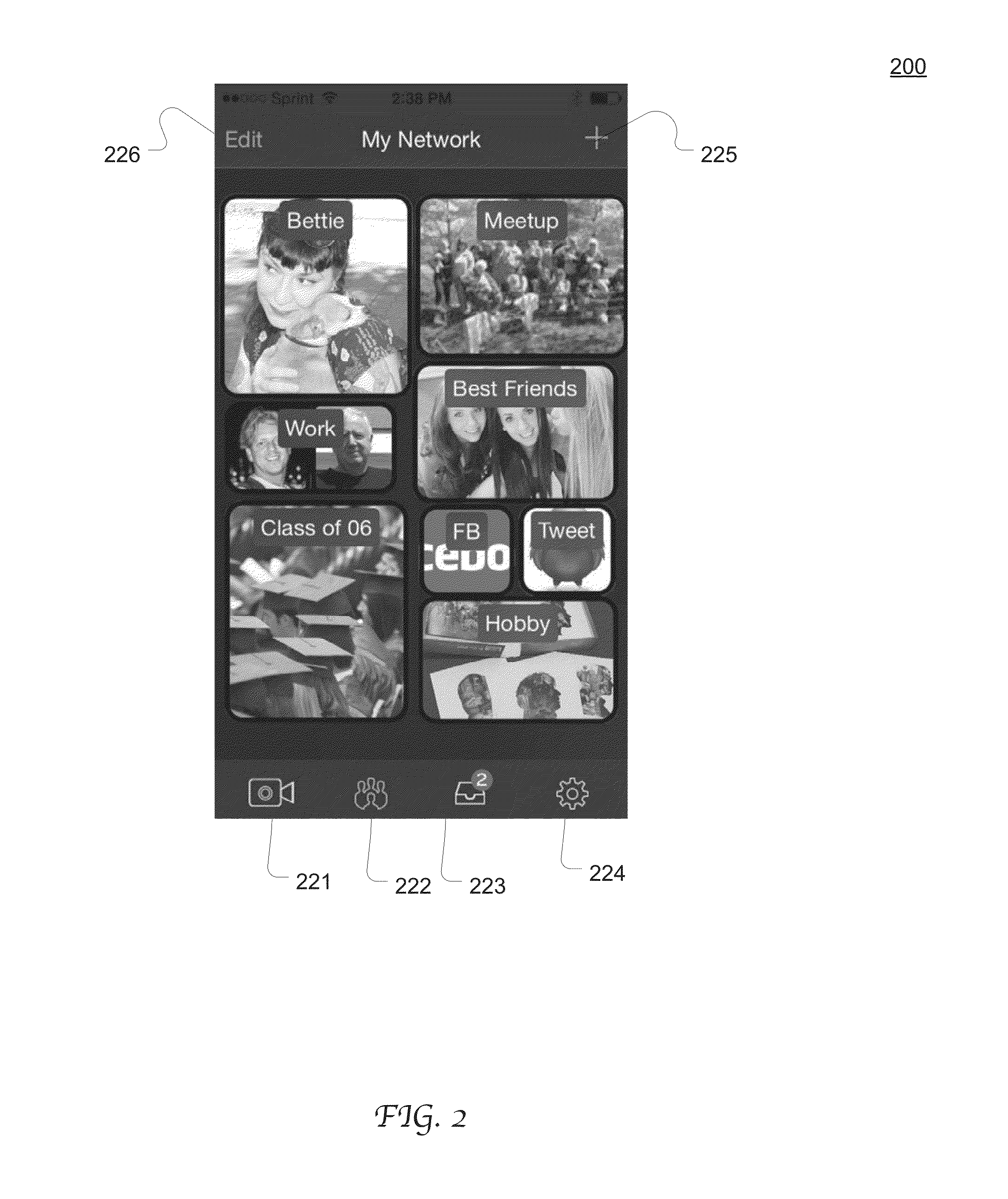

Integrated video capturing and sharing application on handheld device

Status: Active | Patent No.: US20160248840A1 | Tags: Simple and intuitive; Efficient management; Television system details; Color television details; Visual Objects; Application software

An integrated application program for video shooting, video sharing, video editing and contact information management on a mobile device. Upon being invoked, the application program directly enters into the video shooting mode of a graphical user interface (GUI) where a user can start to shoot a video after interacting with the GUI only once. Further, contact information is represented using a grid like visual object within the GUI. A grid includes a plurality of pictorial tiles, each representing a contact group. The social grid is customizable by user configurations and / or automatically based on a number of predefined factors. Once recipients are selected from the grid, the video message can be transmitted to the selected recipients. All functionalities can be within a single application program resident on the mobile device.

Owner:GRIDEO TECH INC

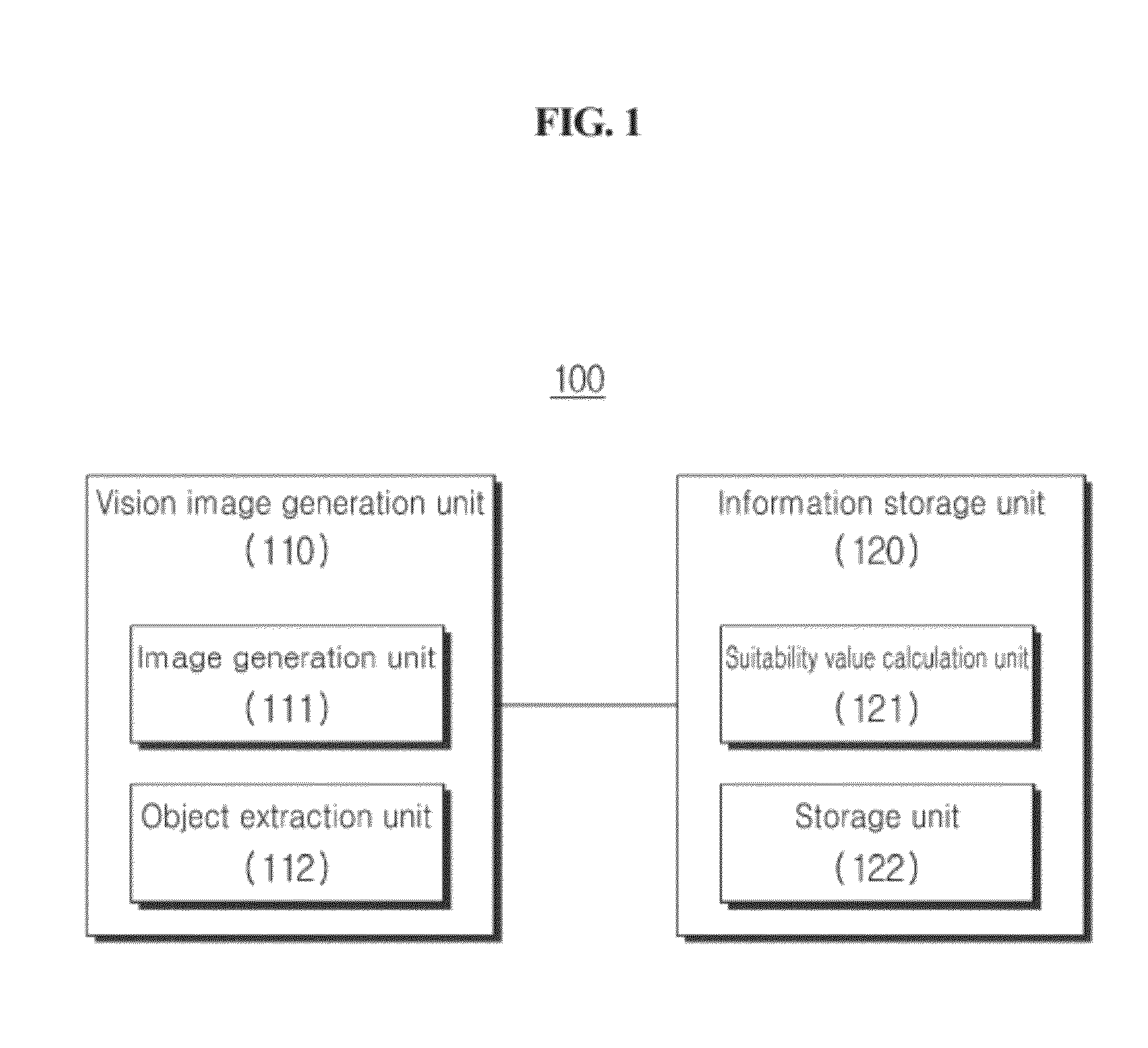

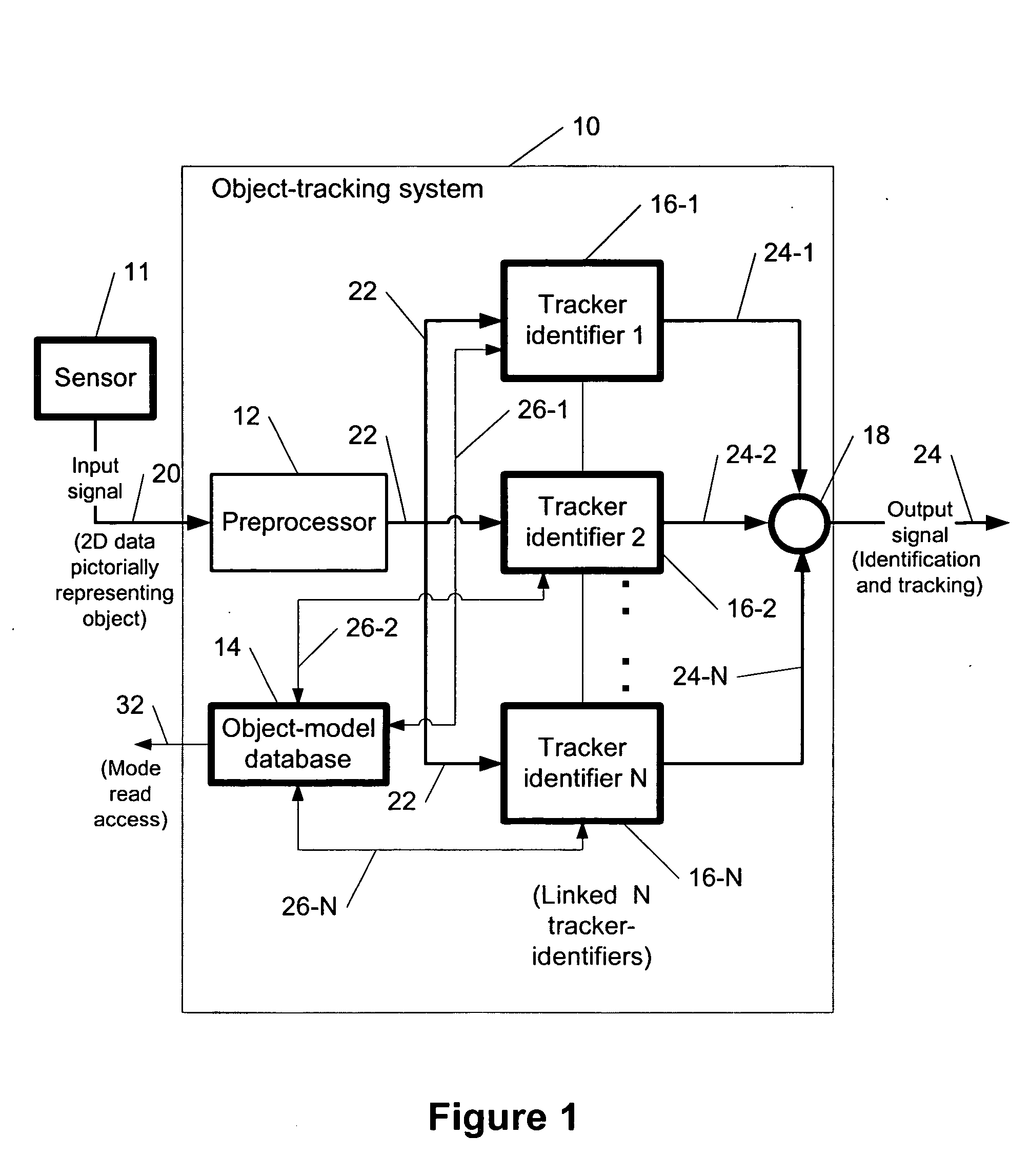

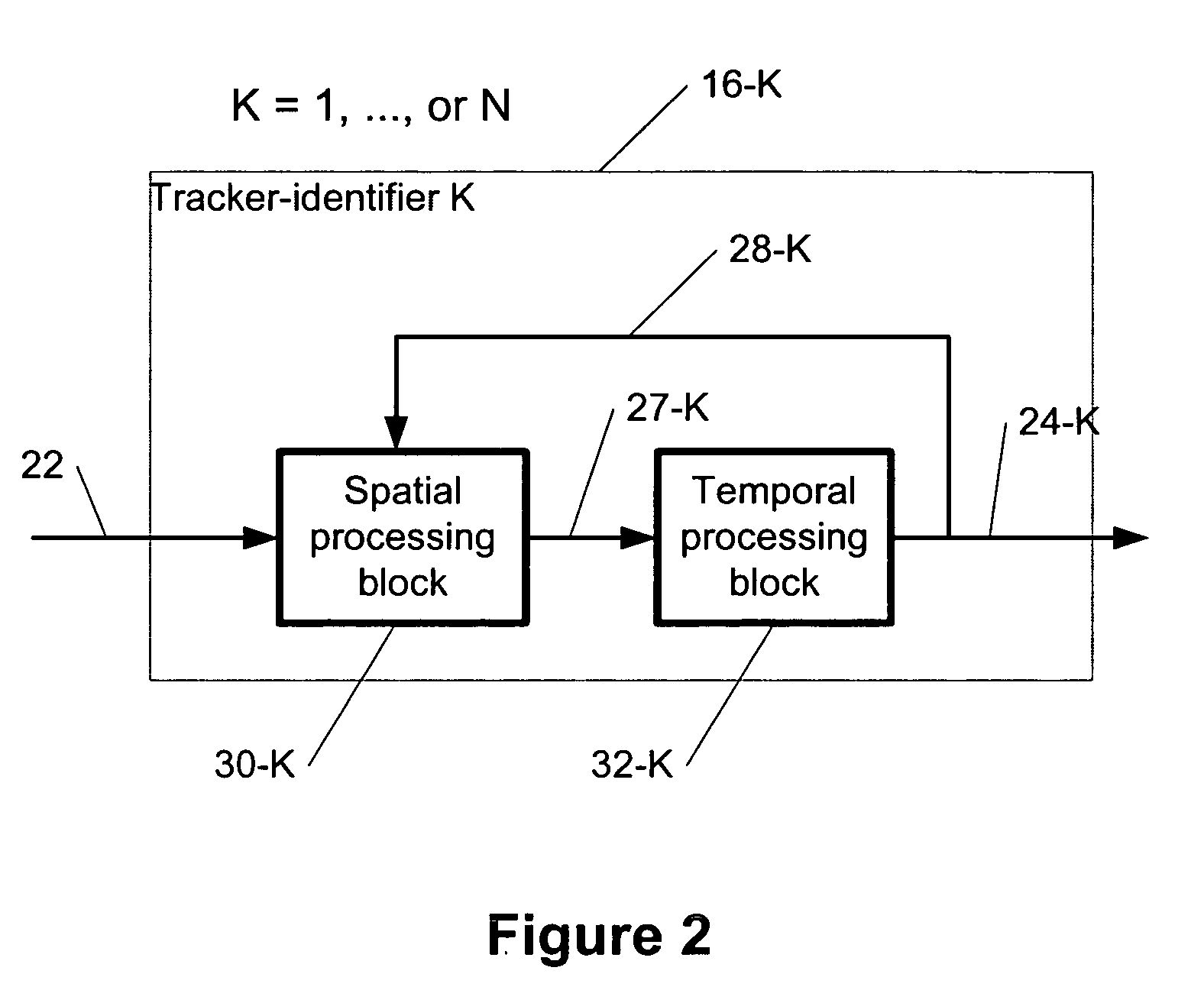

Visual object recognition and tracking

This invention describes a method for identifying and tracking an object from two-dimensional data pictorially representing said object by an object-tracking system through processing said two-dimensional data using at least one tracker-identifier belonging to the object-tracking system for providing an output signal containing: a) a type of the object, and / or b) a position or an orientation of the object in three-dimensions, and / or c) an articulation or a shape change of said object in said three dimensions.

Owner:UNIVERSAL ROBOTS USA INC

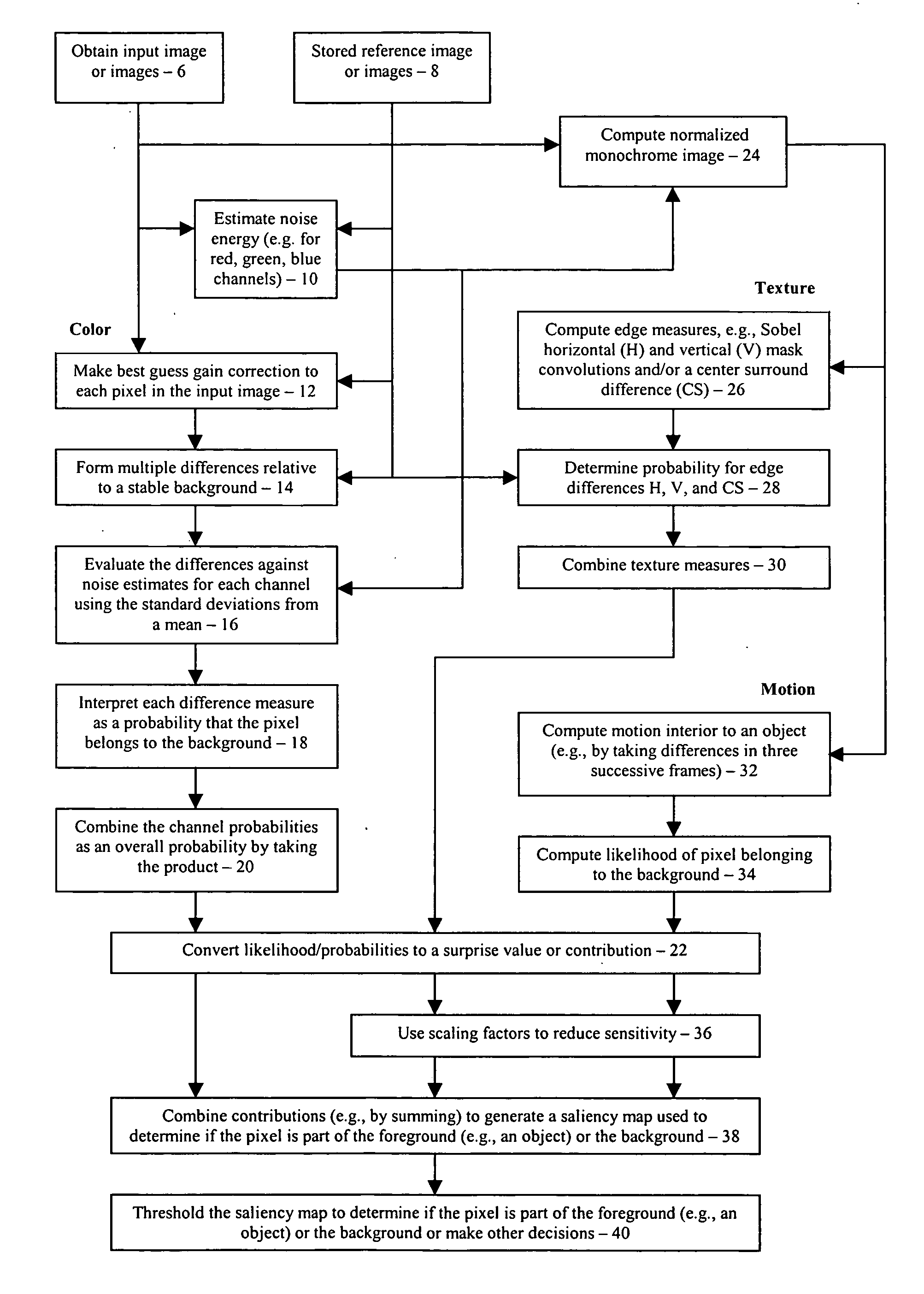

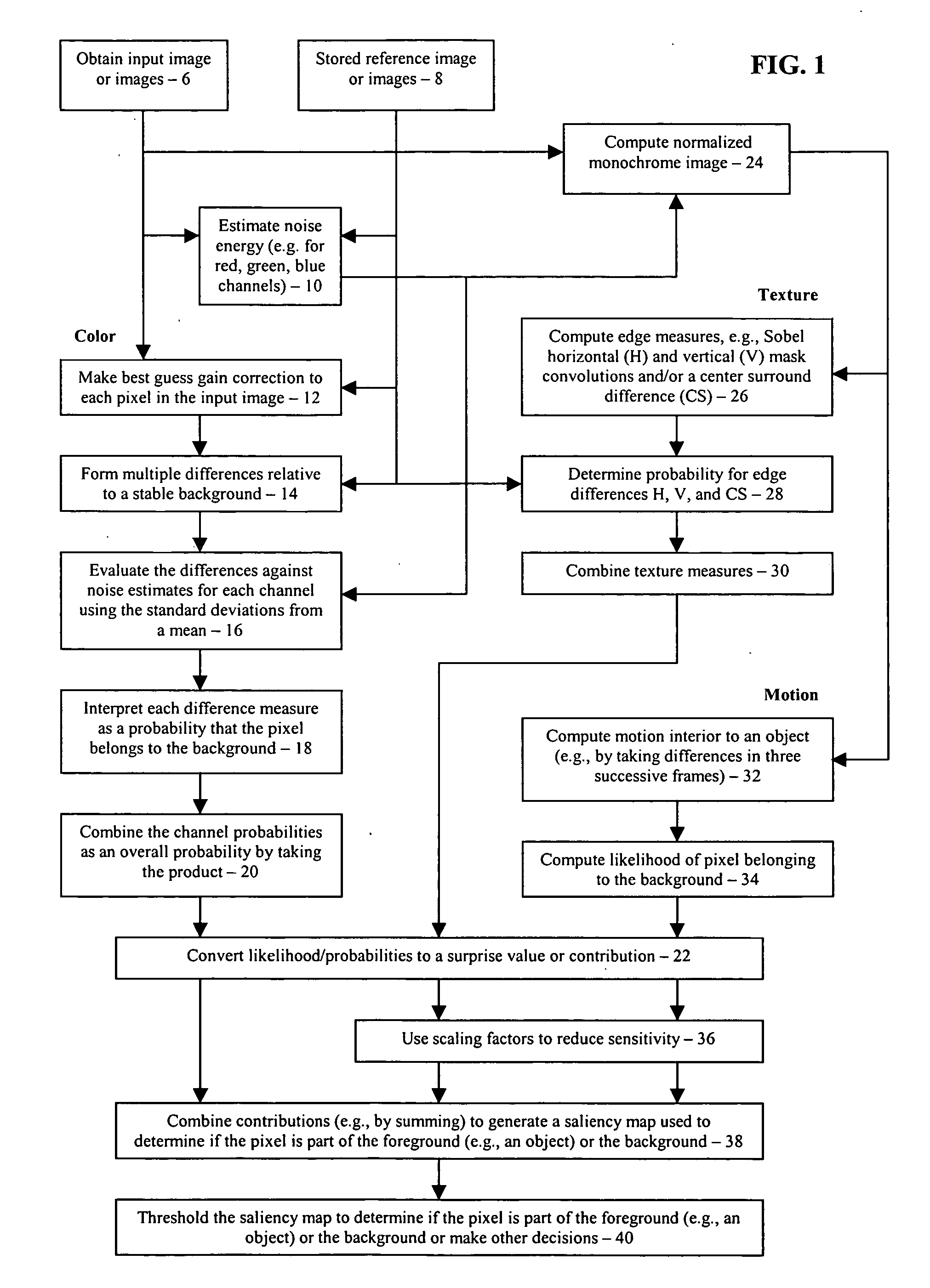

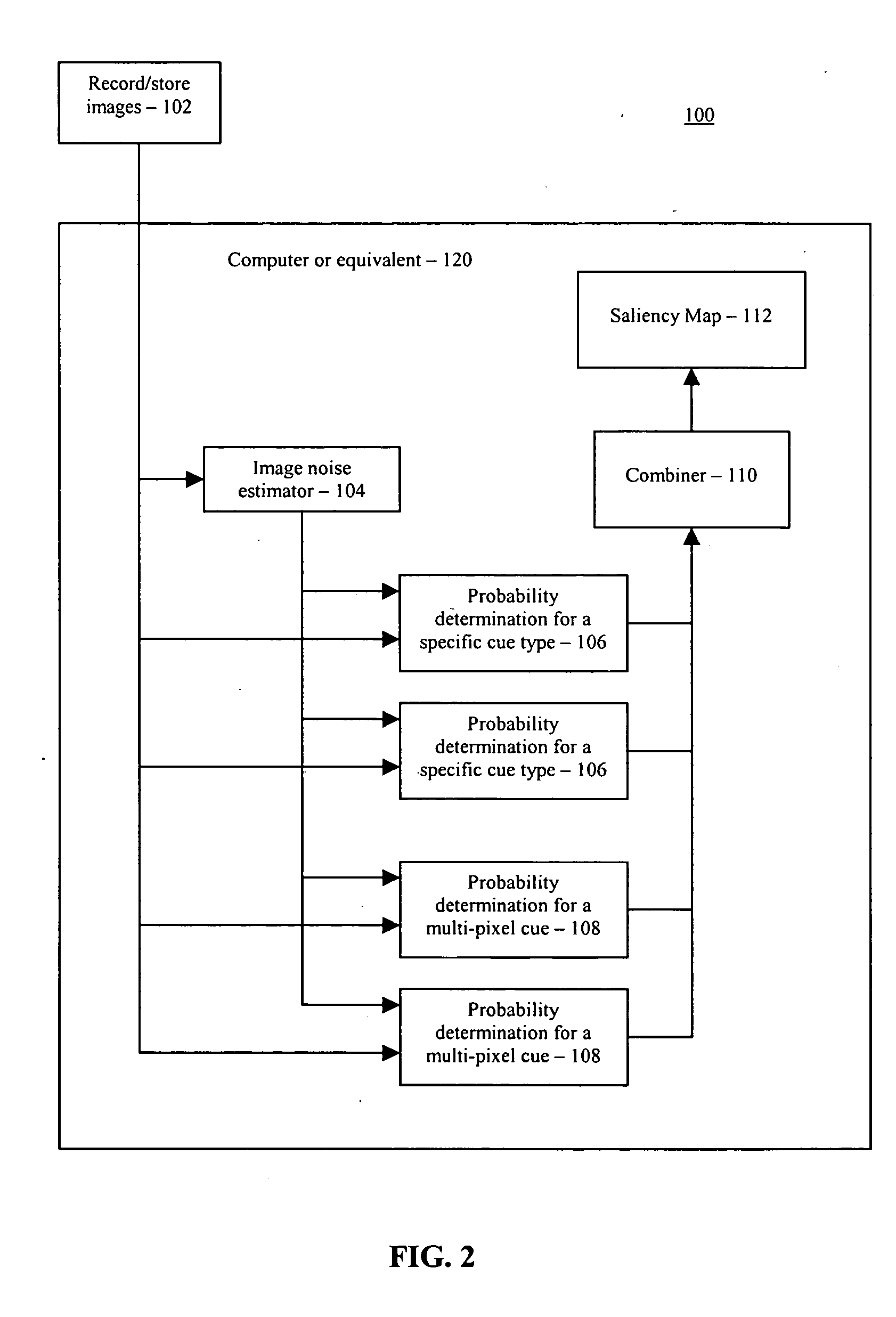

Combining multiple cues in a visual object detection system

Systems and methods for detecting visual objects by employing multiple cues include statistically combining information from multiple sources into a saliency map, wherein the information may include color, texture and / or motion in an image where an object is to be detected or background determined. The statistically combined information is thresholded to make decisions with respect to foreground / background pixels.

Owner:IBM CORP
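The cue-combination step in the abstract above can be illustrated with a short Python sketch. The abstract only says the cues are combined statistically; the weighted mean used here is a stand-in assumption, as are the function names `combine_cues` and `threshold`, the equal weights, and the cutoff of 0.5.

```python
def combine_cues(cue_maps, weights):
    """Combine per-pixel cue scores into one saliency map (assumed: weighted mean)."""
    total_weight = sum(weights)
    pixel_count = len(cue_maps[0])
    return [
        sum(w * cue[i] for w, cue in zip(weights, cue_maps)) / total_weight
        for i in range(pixel_count)
    ]


def threshold(saliency, cutoff):
    """Label each pixel foreground (True) or background (False)."""
    return [score >= cutoff for score in saliency]


# Hypothetical per-pixel scores in [0, 1] for a three-pixel image.
color = [0.9, 0.1, 0.6]
texture = [0.8, 0.2, 0.2]
motion = [1.0, 0.0, 0.1]

saliency = combine_cues([color, texture, motion], [1.0, 1.0, 1.0])
foreground = threshold(saliency, 0.5)
```

In this example only the first pixel scores highly on all three cues, so it is the only one labeled foreground.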

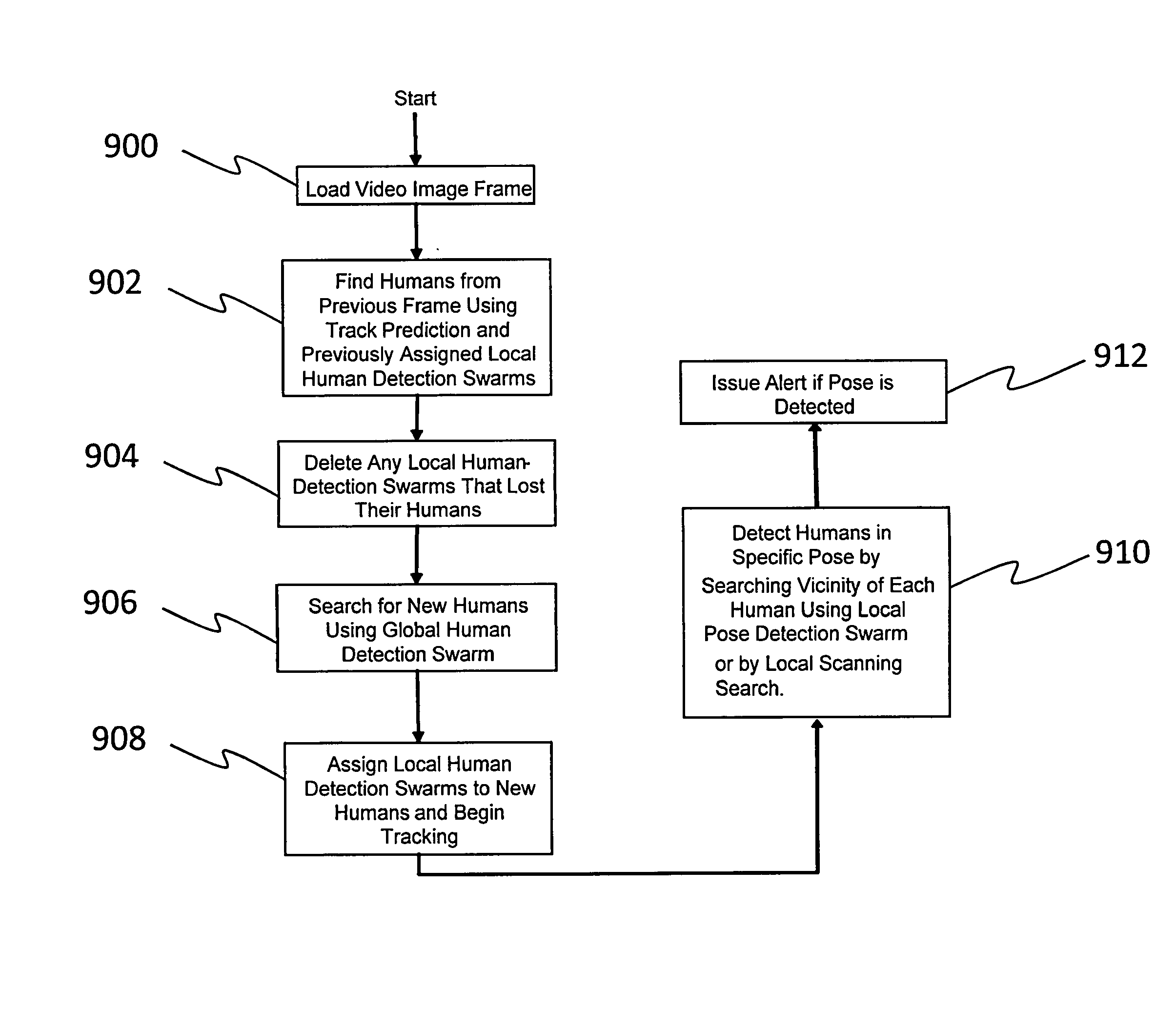

Multi-stage method for object detection using cognitive swarms and system for automated response to detected objects

A multi-stage method of visual object detection is disclosed. The method was originally designed to detect humans in specific poses, but is applicable to generic detection of any object. A first stage comprises acts of searching for members of a predetermined general-class of objects (such as humans) in an image using a cognitive swarm, detecting members of the general-class of objects in the image, and selecting regions of the image containing detected members of the general-class of objects. A second stage comprises acts of searching for members of a predetermined specific-class of objects (such as humans in a certain pose) within the selected regions of the image using a cognitive swarm, detecting members of the specific-class of objects within the selected regions of the image, and outputting the locations of detected objects to an operator display and optionally to an automatic response system.

Owner:HRL LAB

© 2025 PatSnap. All rights reserved.