119 results about "Action prediction" patented technology

Information providing method and information providing device

Inactive | US20040128066A1 | Instruments for road network navigation | Road vehicles traffic control | Information acquisition | Action prediction

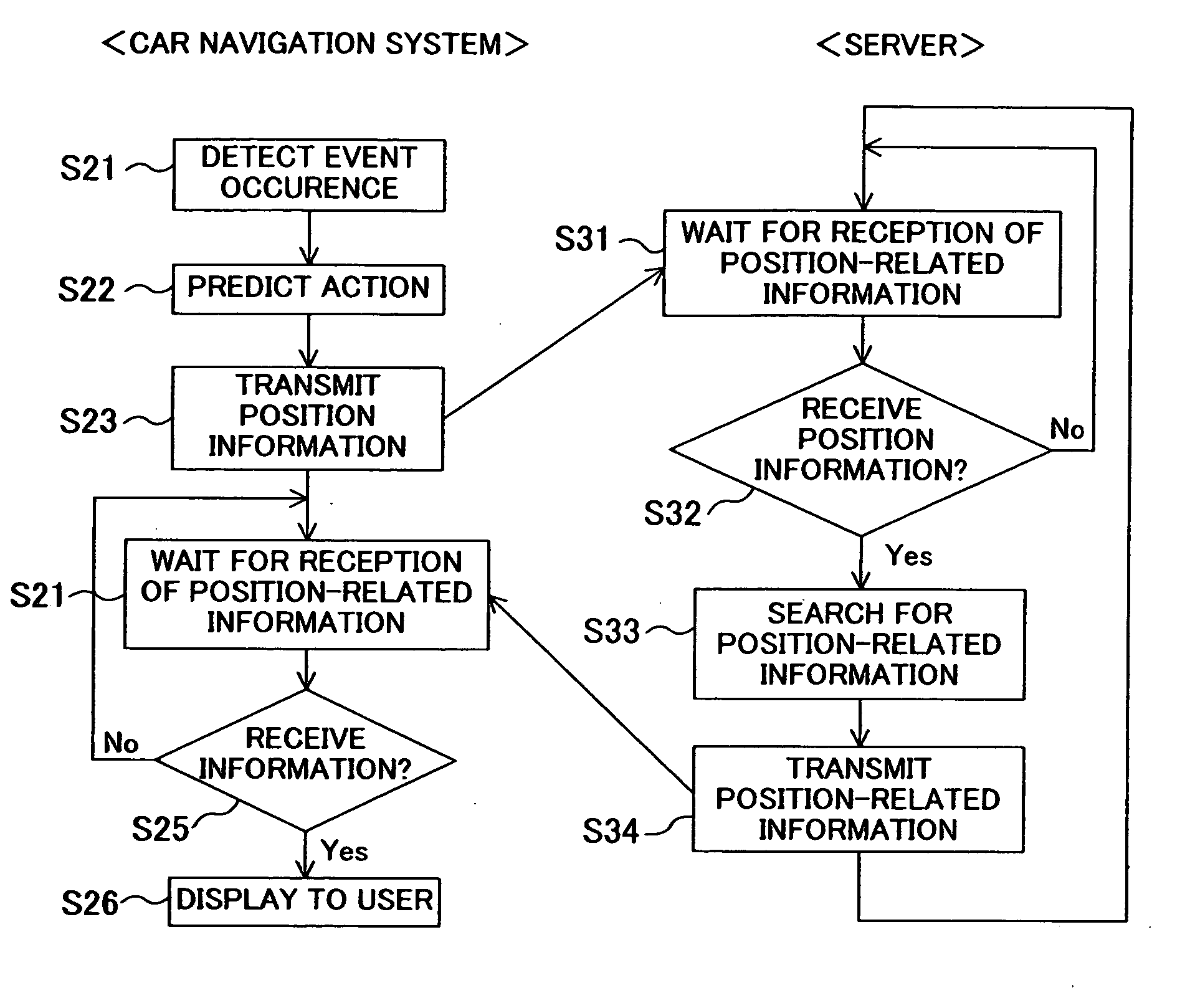

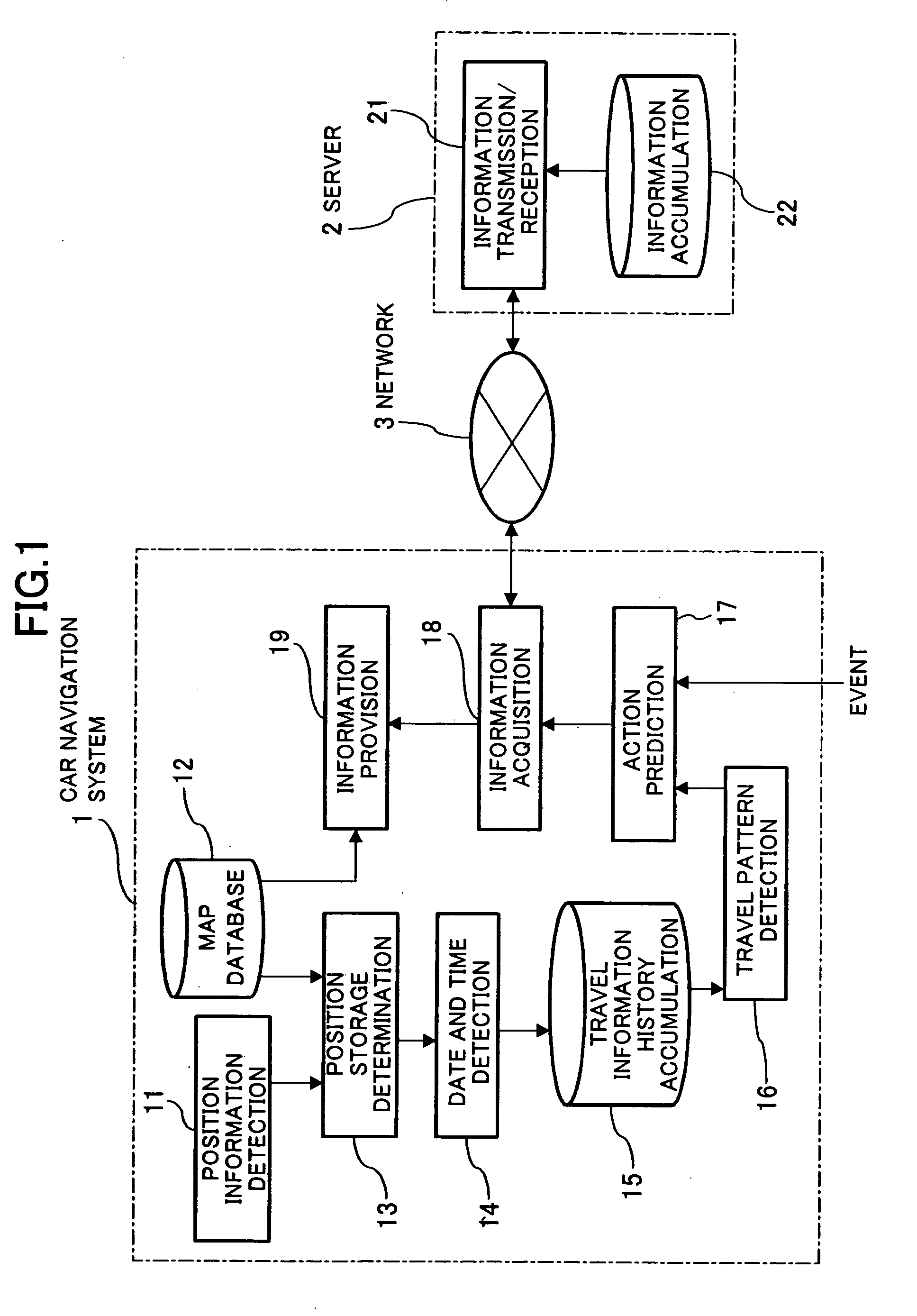

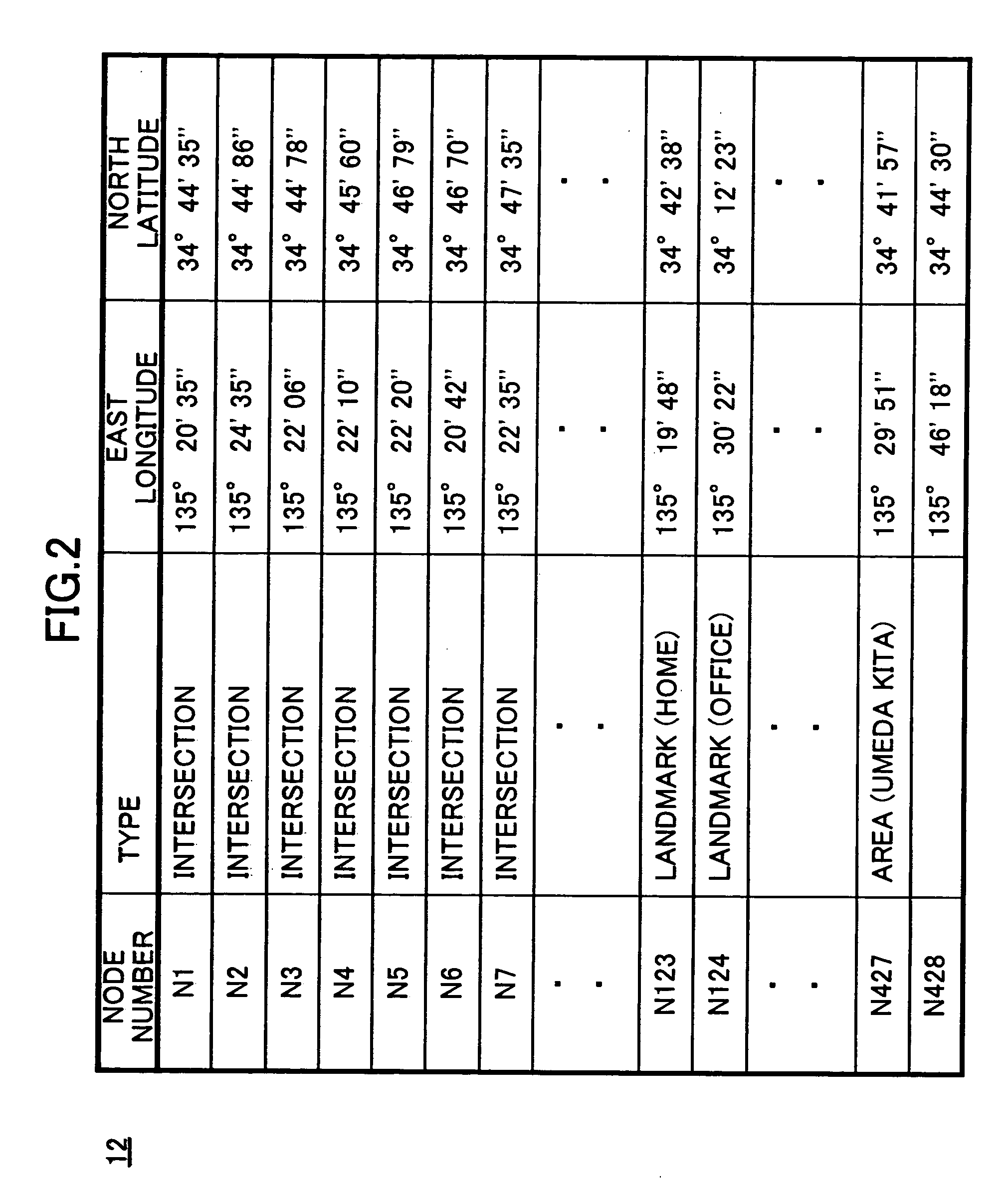

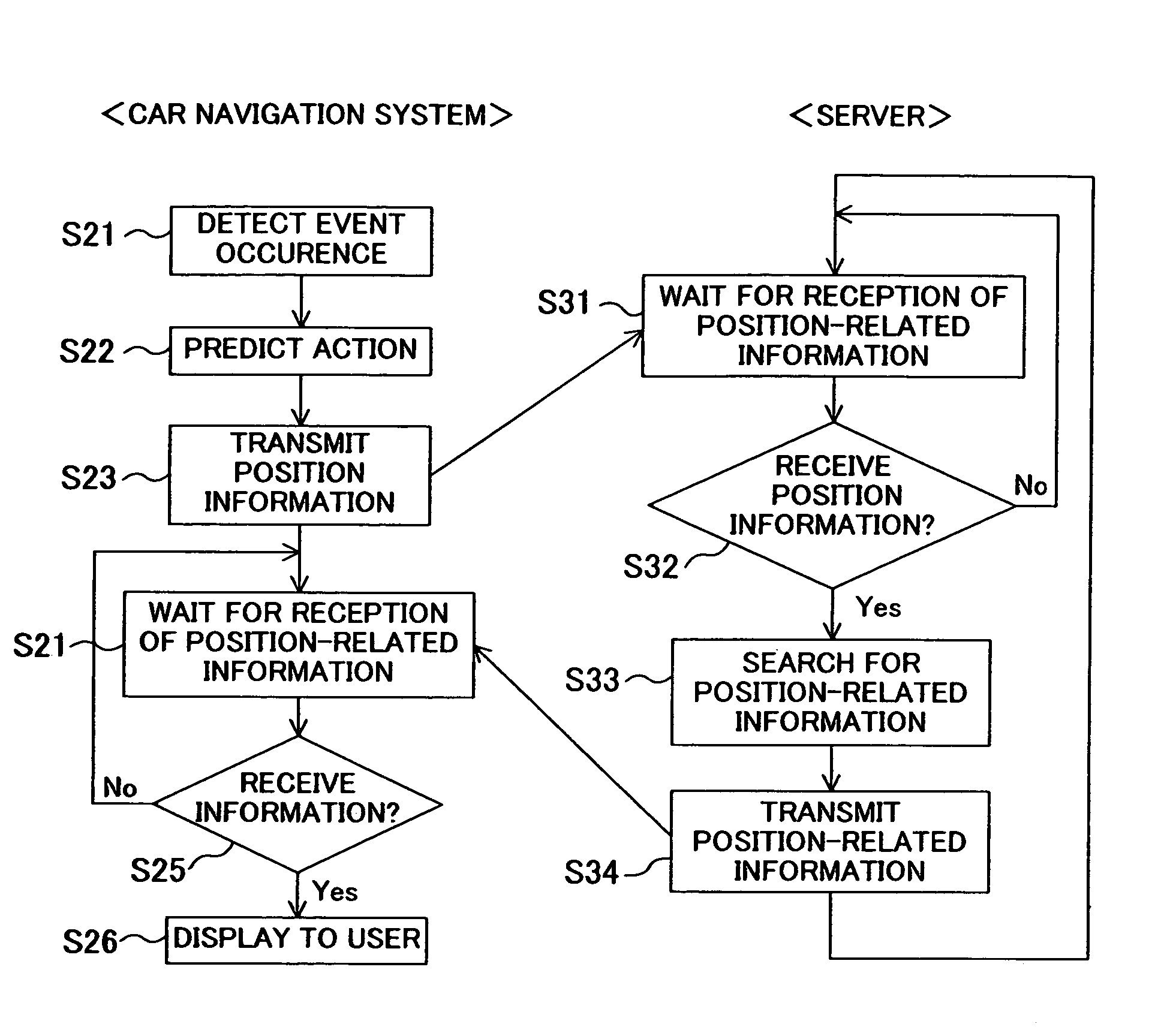

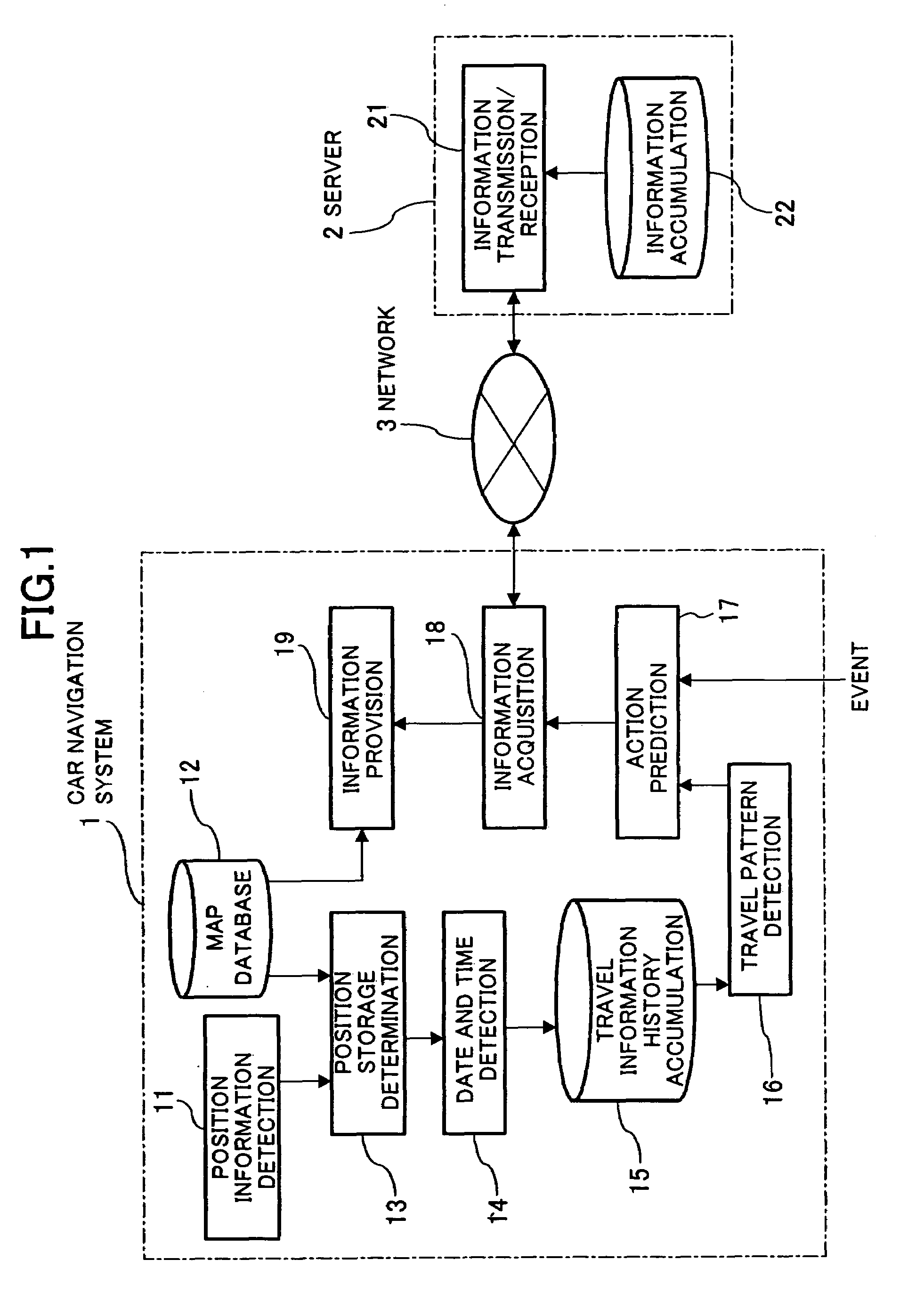

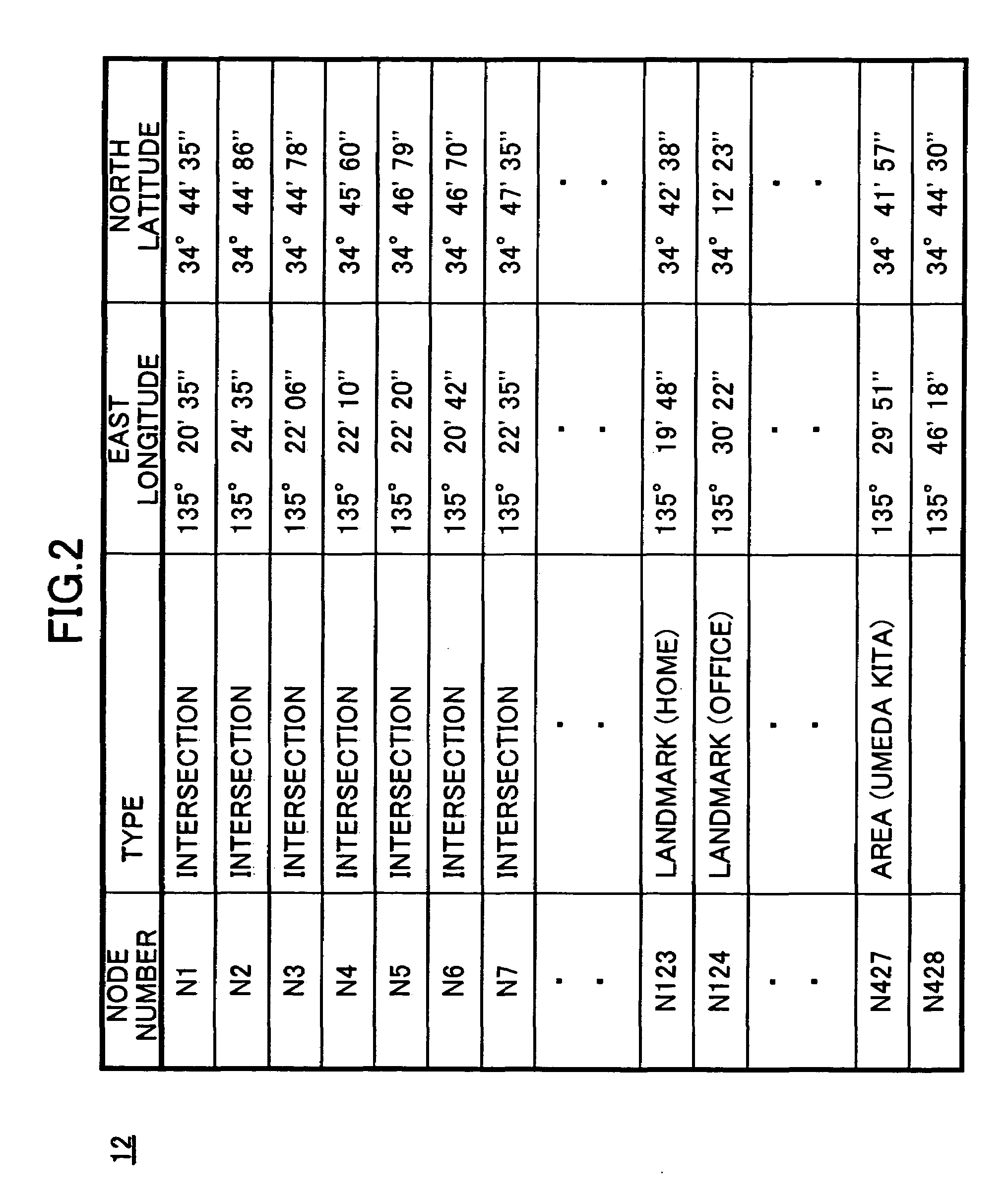

In a car navigation system (1), a position information detection means (11) detects position information on a vehicle using, for example, a GPS. A travel information history of the vehicle obtained based on the detected position information is accumulated in a travel information history means (15). When detecting an event such as start of an engine, an action prediction means (17) predicts a destination of the vehicle by referring to a route to the current time and to the accumulated travel information history. Commercial or traffic information regarding the predicted destination is acquired by an information acquisition means (18) from a server (2), and then is displayed on a screen, for example, by an information provision means (19).

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA
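
The destination prediction described in this abstract can be illustrated with a minimal sketch. It assumes the travel history is stored as lists of place names ending at the destination, and it picks the most frequent destination among past trips that start with the route driven so far. The function name, the data layout and the frequency rule are illustrative assumptions, not the patent's method.

```python
from collections import Counter

def predict_destination(history, route_so_far):
    """Guess the destination from past trips sharing the current route prefix.

    history: list of trips, each a list of place names whose last item
             is the destination (hypothetical data layout).
    route_so_far: list of place names passed on the current trip.
    Returns the most frequent matching destination, or None.
    """
    n = len(route_so_far)
    matches = Counter(
        trip[-1]
        for trip in history
        if len(trip) > n and trip[:n] == route_so_far
    )
    if not matches:
        return None
    return matches.most_common(1)[0][0]

# Hypothetical accumulated travel history.
history = [
    ["home", "junction_a", "office"],
    ["home", "junction_a", "office"],
    ["home", "junction_b", "gym"],
]

predicted = predict_destination(history, ["home", "junction_a"])
```

Once a destination is predicted, a real system would query a server for commercial or traffic information about it; that step is omitted here.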

Information providing method and information providing device

Inactive | US7130743B2 | Instruments for road network navigation | Digital data processing details | Action prediction | Information acquisition

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

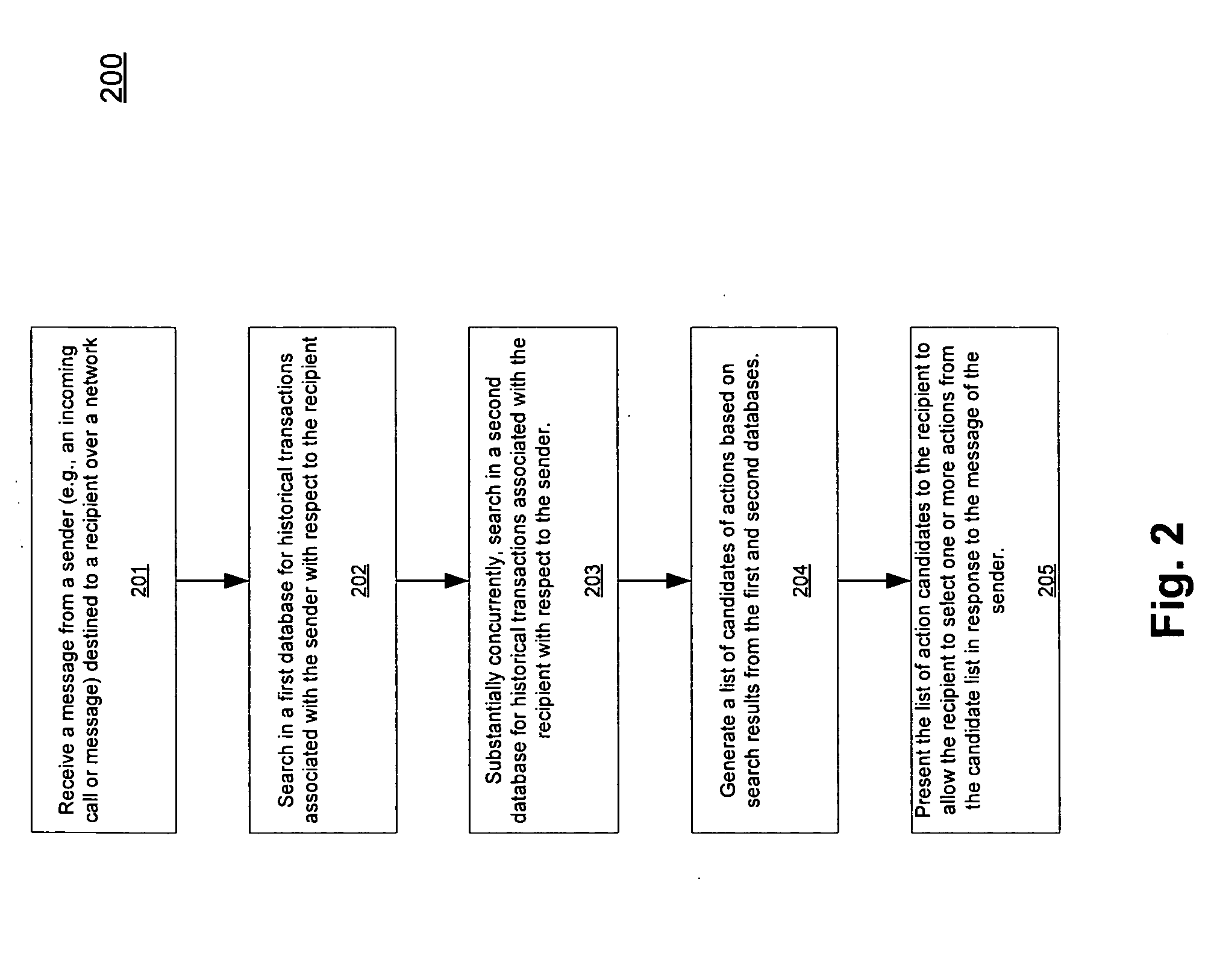

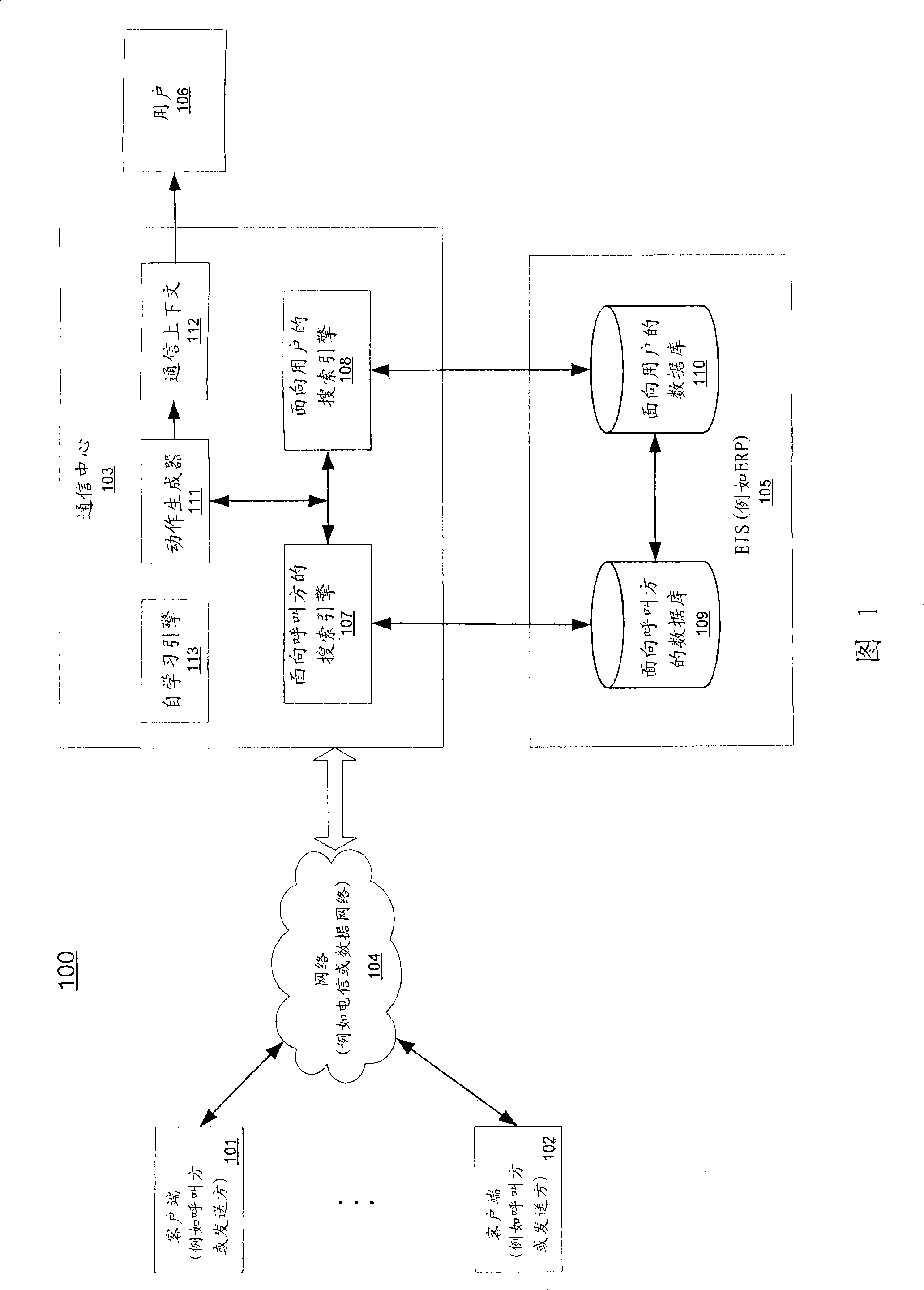

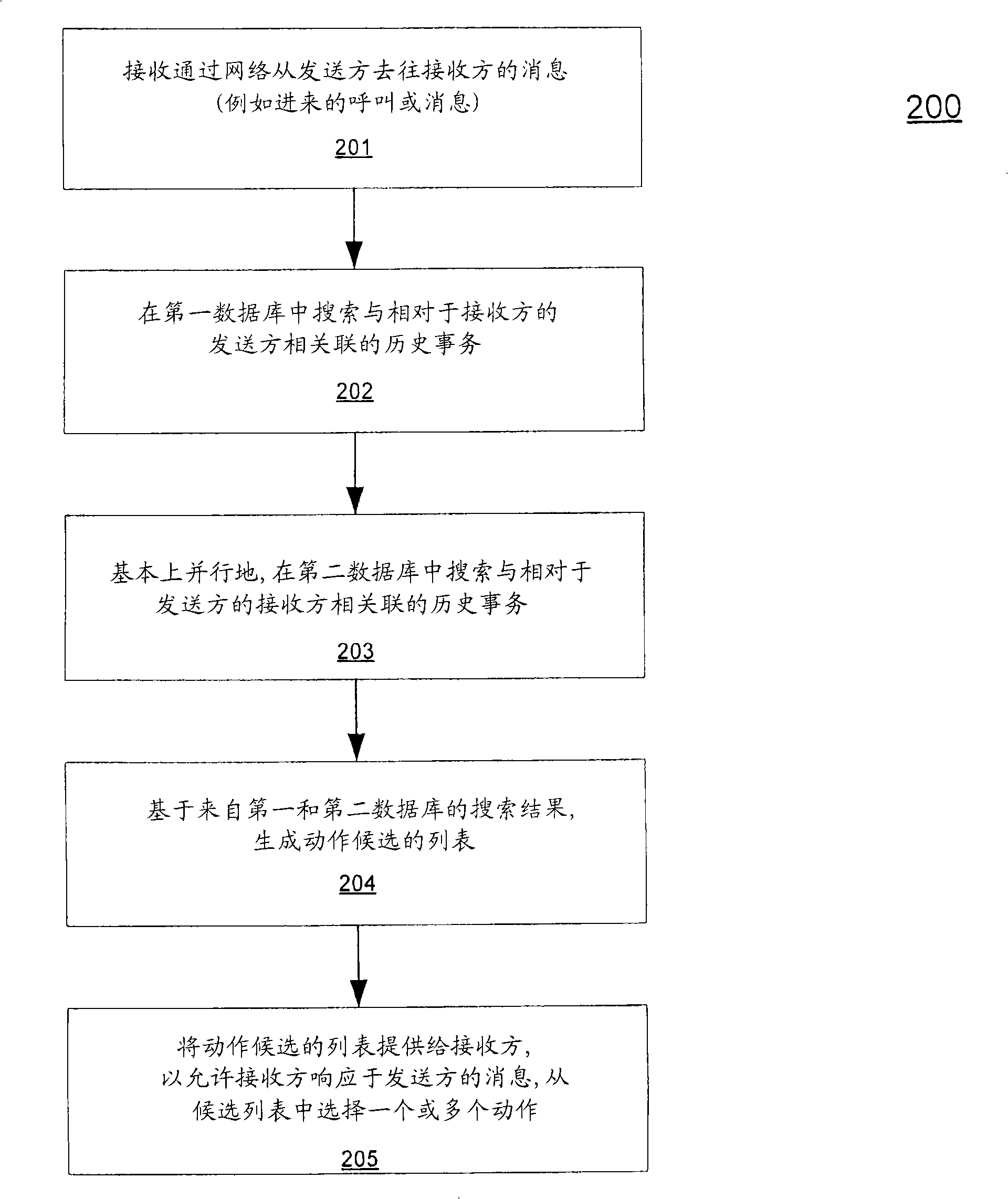

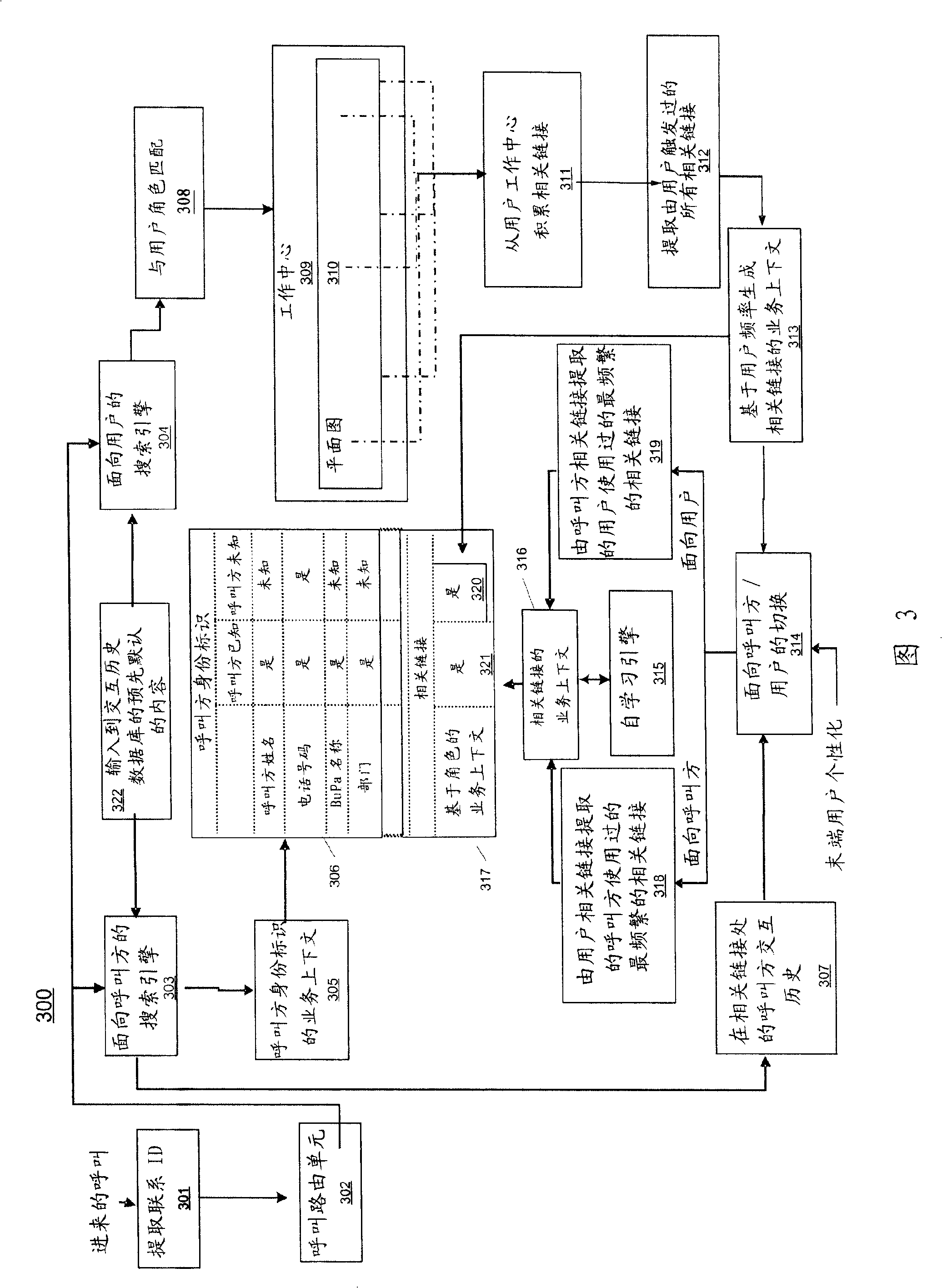

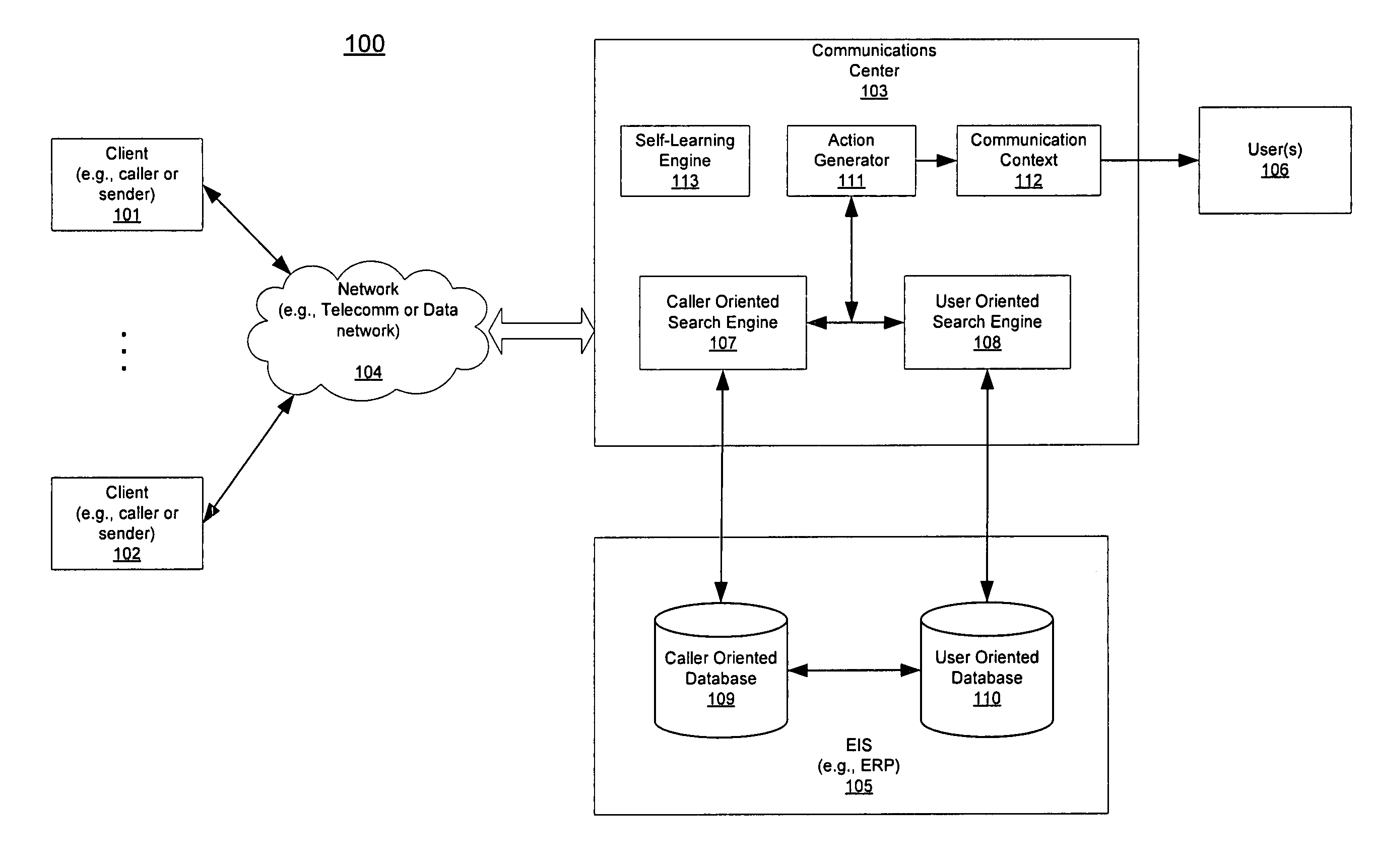

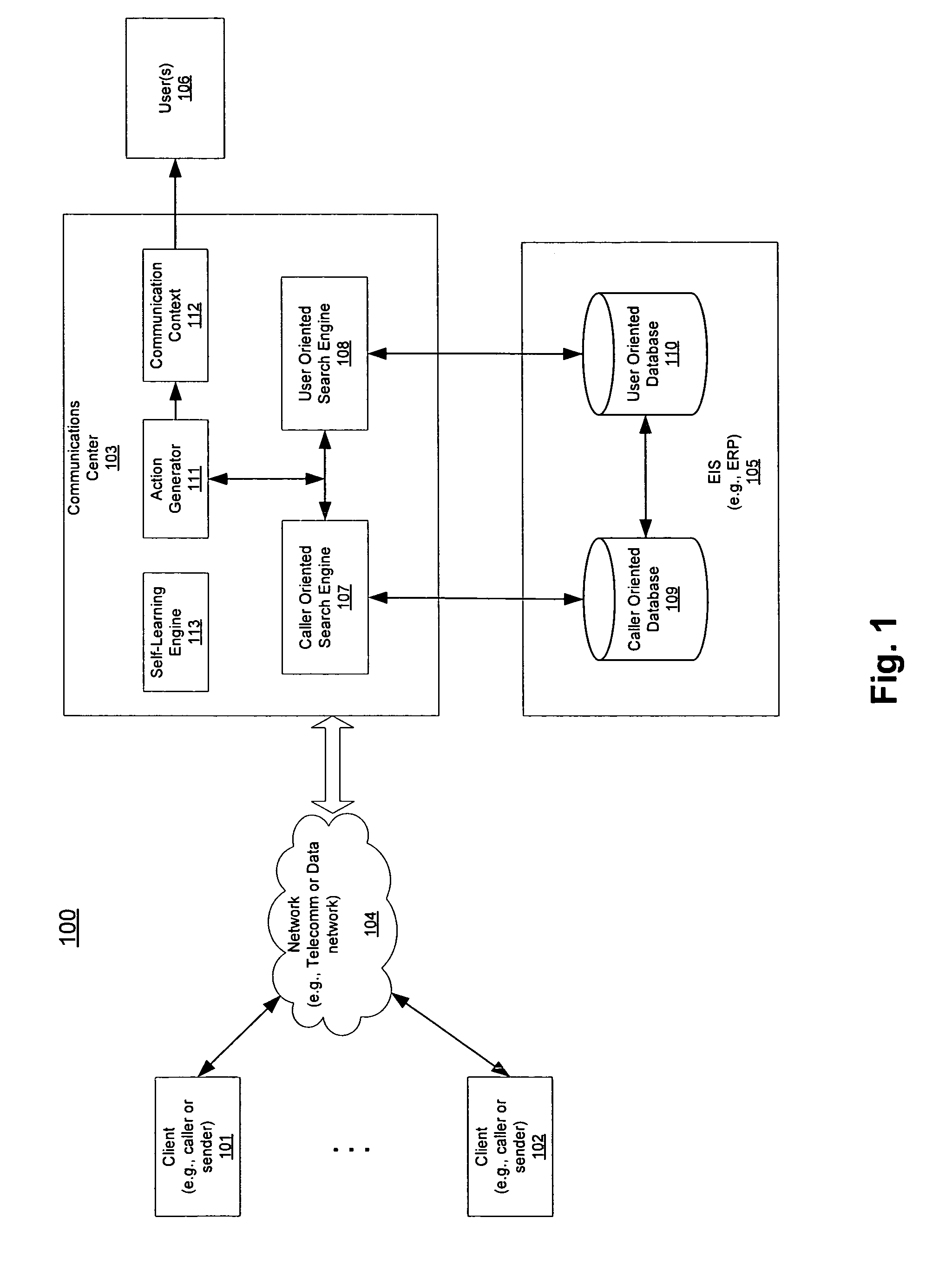

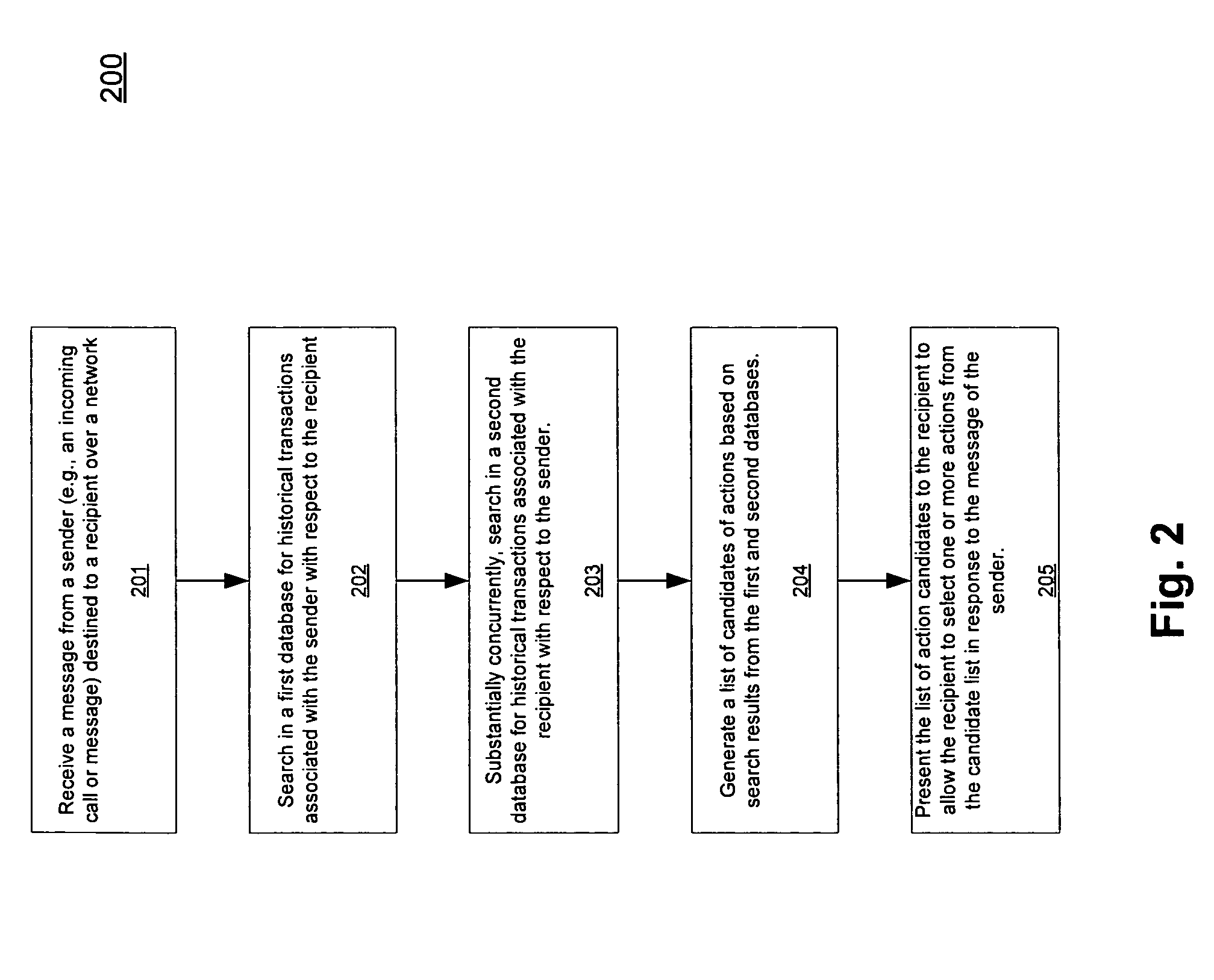

Action prediction based on interactive history and context between sender and recipient

Active | US20080126310A1 | Digital data processing details | Office automation | Speech applications | Action prediction

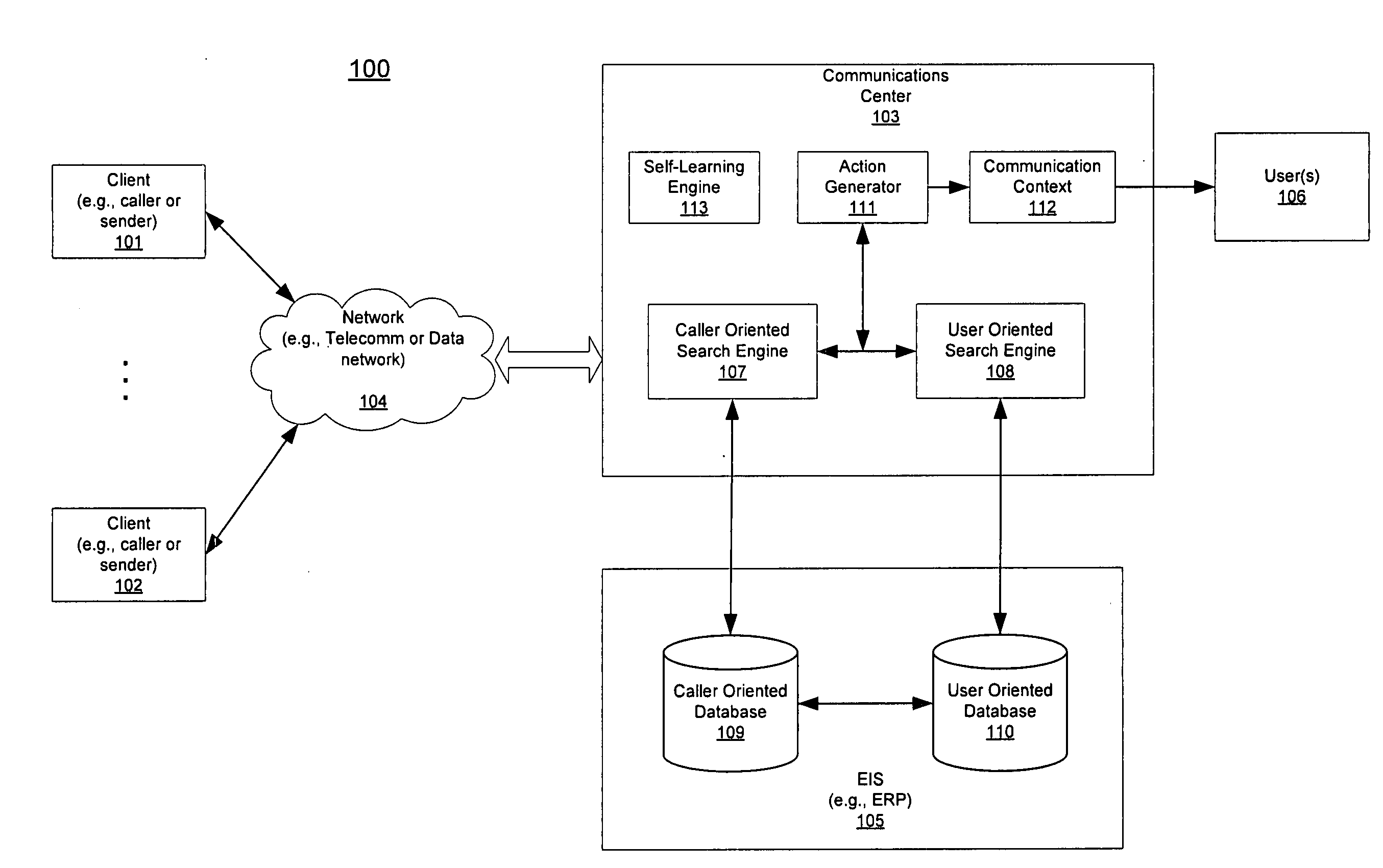

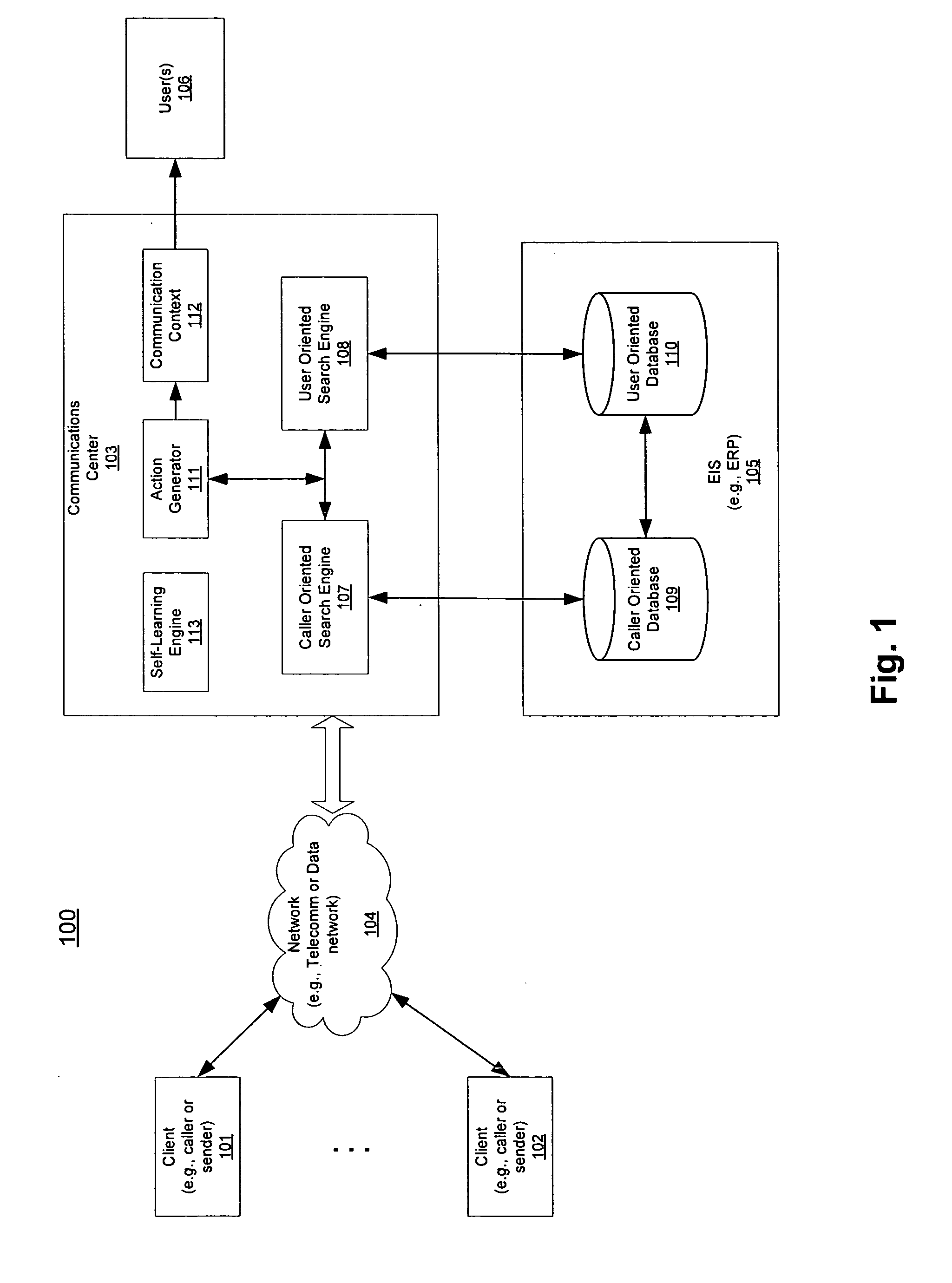

Techniques for action prediction based on interactive history and context between a sender and a recipient are described herein. In one embodiment, a process includes, but is not limited to, in response to a message to be received by a recipient from a sender over a network, determining one or more previous transactions associated with the sender and the recipient, the one or more previous transactions being recorded during the course of operations performed within an entity associated with the recipient, and generating a list of one or more action candidates based on the determined one or more previous transactions, wherein the one or more action candidates are optional actions recommended to the recipient, in addition to one or more actions required to be taken in response to the message. Keyword identification from voice applications, as well as guided actions, has also been applied to generate action prediction candidates interactive history links. Other methods and apparatuses are also described.

Owner:SAP AG

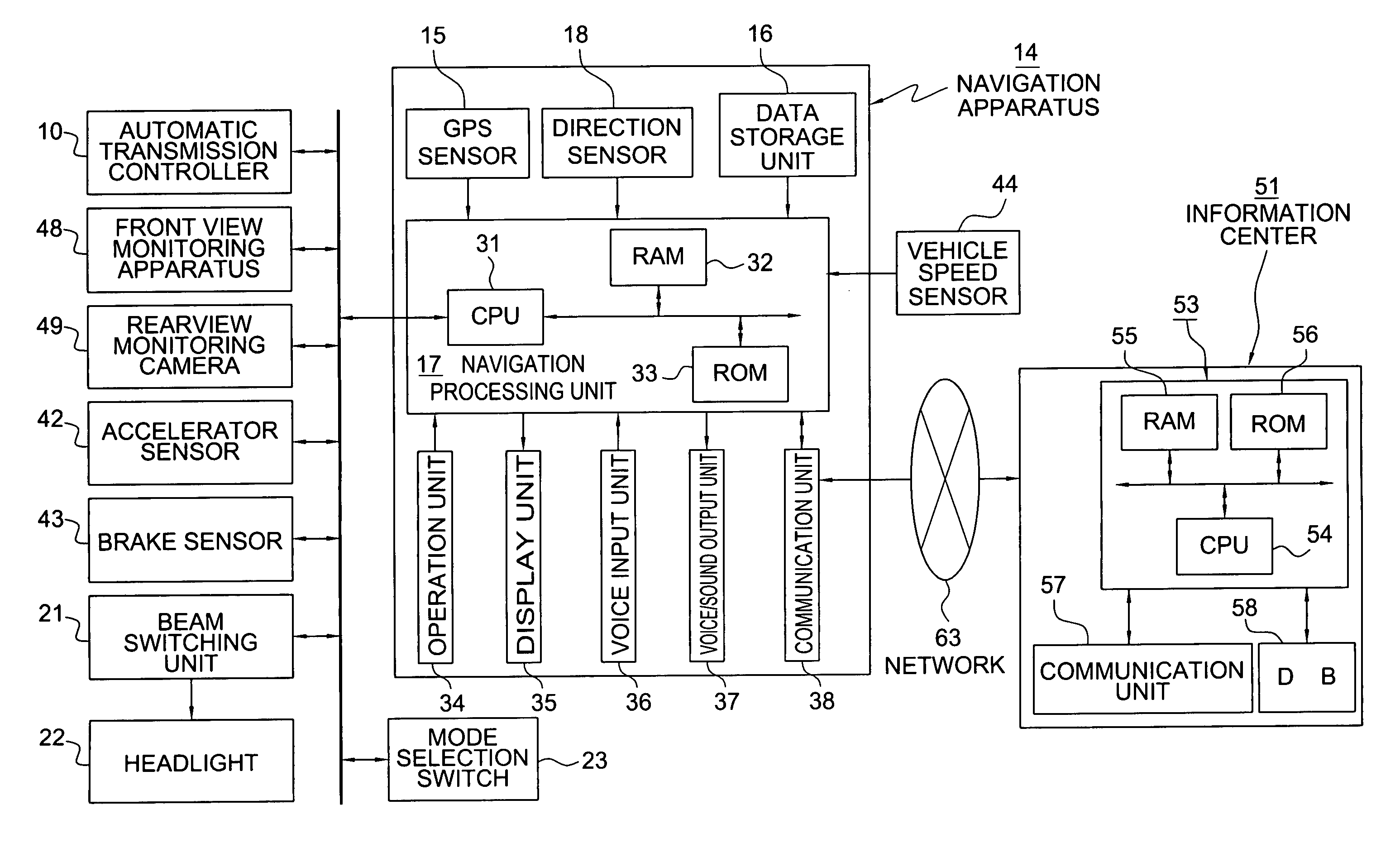

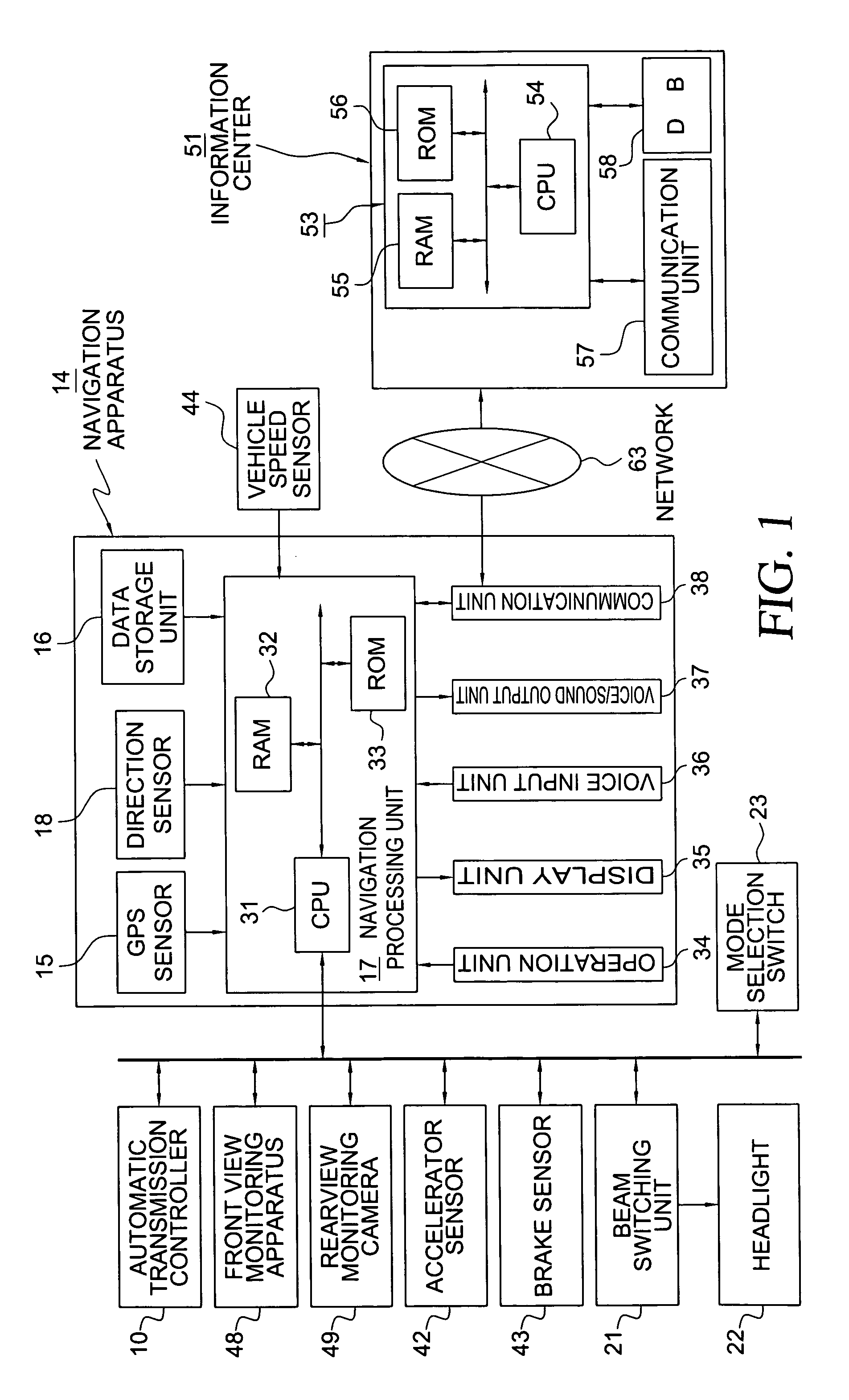

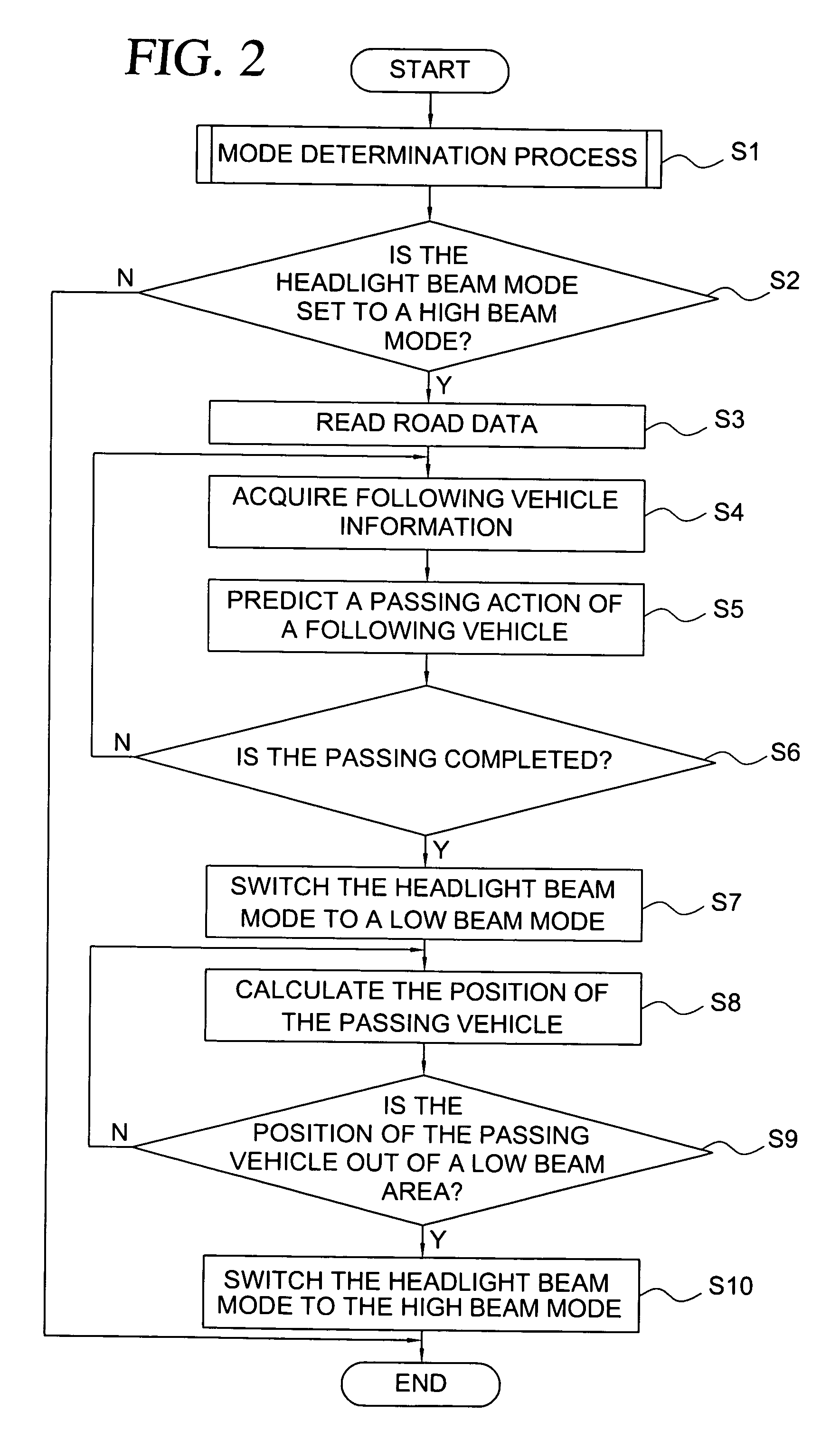

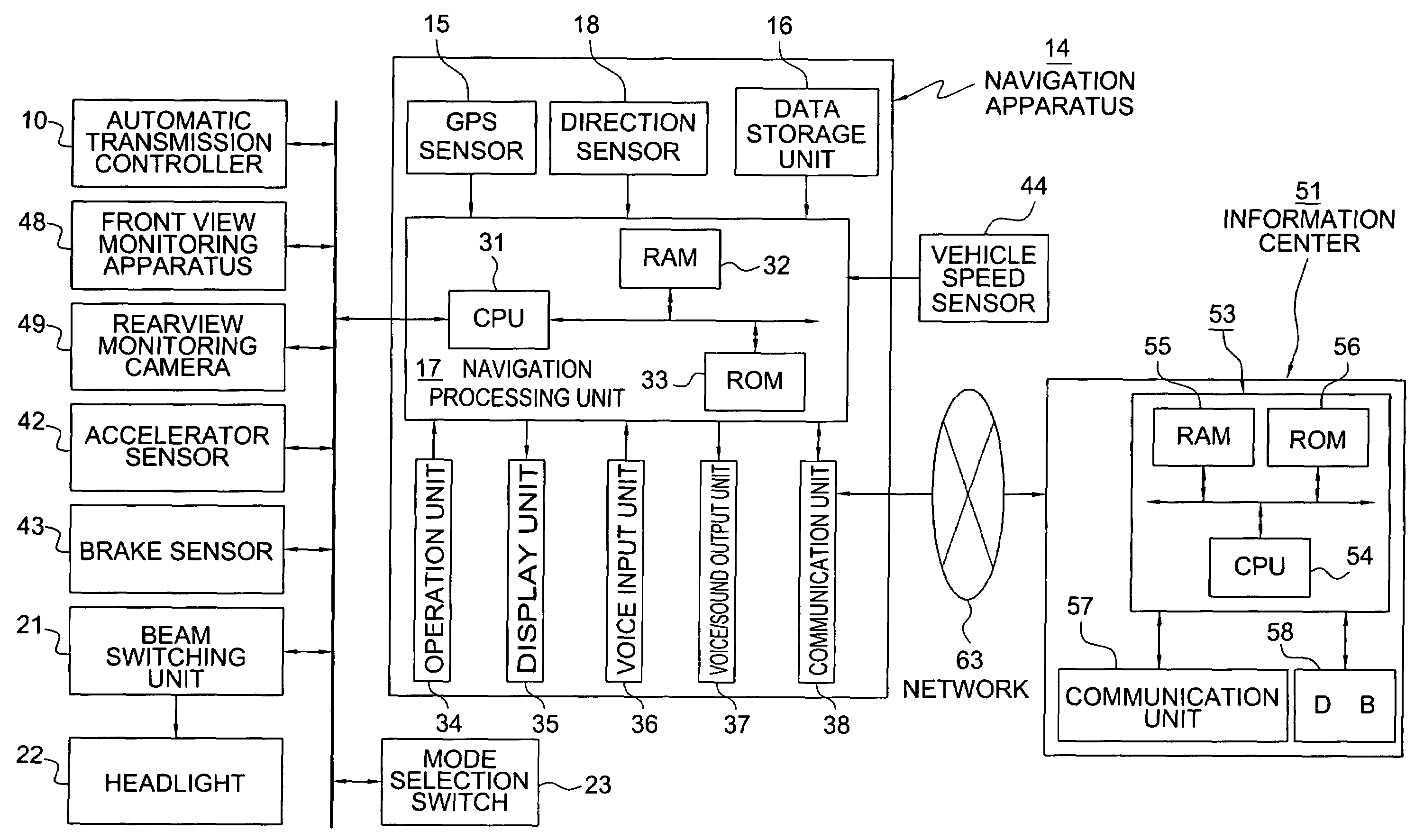

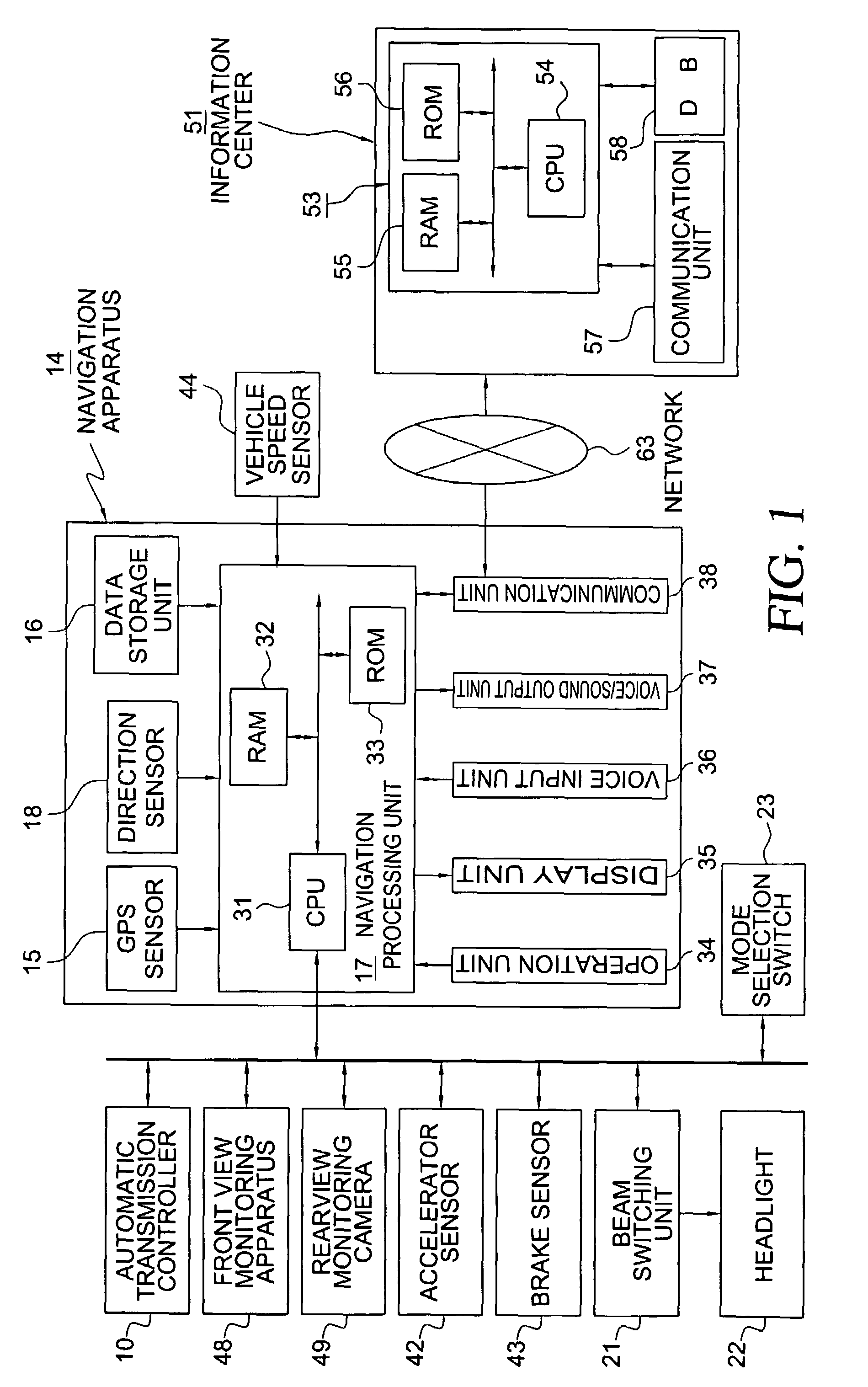

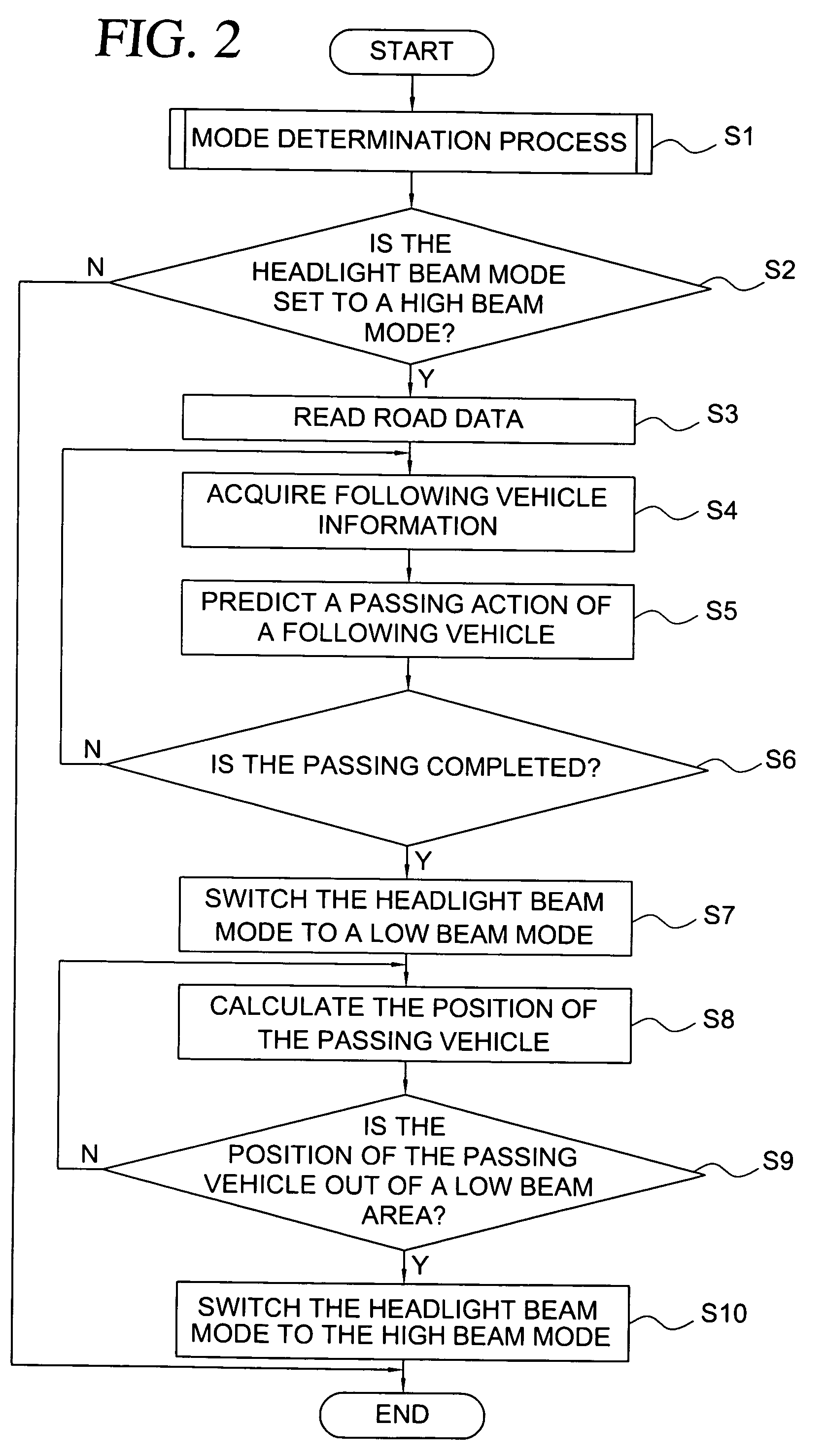

Headlight beam control system and headlight beam control method

A headlight beam control system includes an image taking apparatus for capturing an image to the rear of the user's vehicle and for generating image data from the captured image, a following vehicle information acquisition unit for acquiring following vehicle information from the image data, a passing action prediction unit for predicting a passing action based on the following vehicle information, a mode switching condition judgment unit for judging whether a mode switching condition is satisfied, based on the predicted passing action, and an automatic mode setting unit for switching the headlights between a high beam mode and a low beam mode when the mode switching condition is satisfied. When passing of the user's vehicle is predicted based on the following vehicle information, the headlights are switched from the high beam mode to the low beam mode.

Owner:AISIN AW CO LTD

Action prediction based on interactive history and context between sender and recipient

Active | CN101287040A | Special service for subscribers | Office automation | Word identification | Speech applications

Techniques for action prediction based on interactive history and context between a sender and a recipient are described herein. In one embodiment, a process includes, but is not limited to, in response to a message to be received by a recipient from a sender over a network, determining one or more previous transactions associated with the sender and the recipient, the one or more previous transactions being recorded during the course of operations performed within an entity associated with the recipient, and generating a list of one or more action candidates based on the determined one or more previous transactions. The invention is characterized in that the one or more action candidates are optional actions recommended to the recipient, in addition to one or more actions required to be taken in response to the message. Keyword identification from voice applications, as well as guided actions, has also been applied to generate action prediction candidates interactive history links. Other methods and devices are also described.

Owner:SAP AG

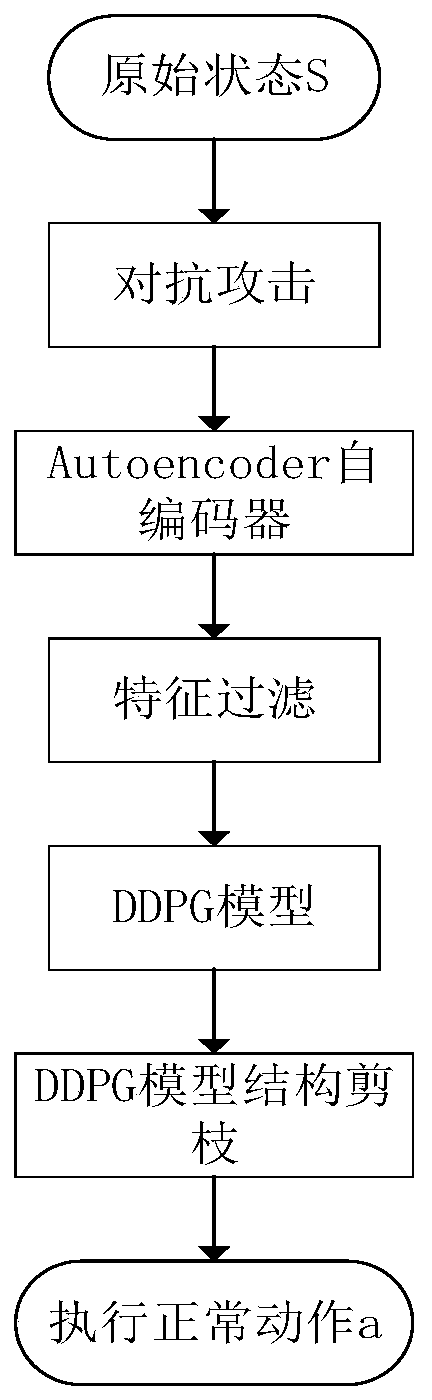

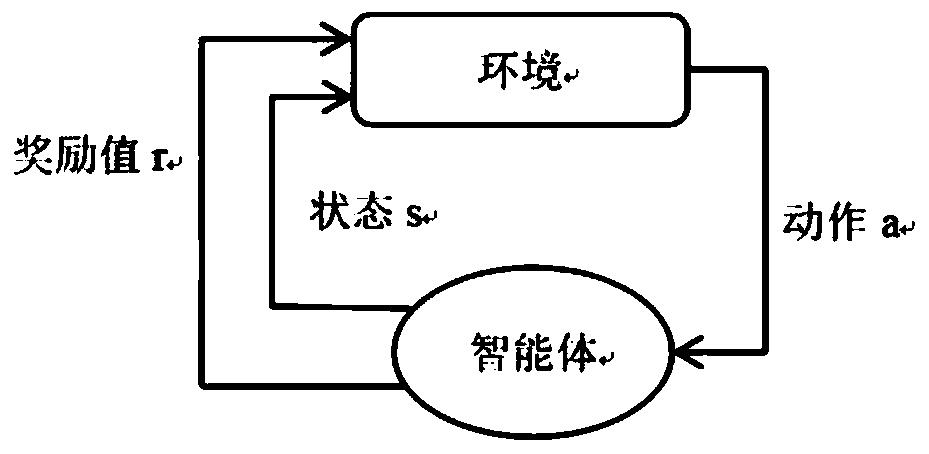

Feature filtering defense method for deep reinforcement learning model

Active | CN111600851A | Effective filtering | Minor Disturbance Removal | Character and pattern recognition | Neural architectures | Algorithm | Engineering

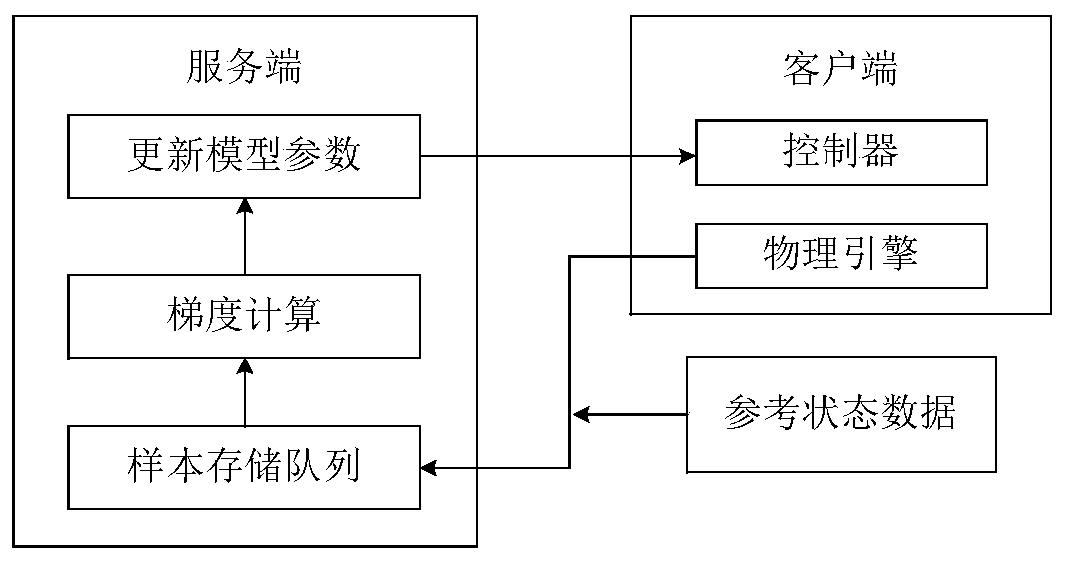

The invention discloses a feature filtering defense method for a deep reinforcement learning model. The method comprises the following steps: (1) for the DDPG model, which generates continuous behaviors, the system comprises an actor network and a critic network, wherein the actor network comprises an action estimation network and an action implementation network, and the critic network comprises a state estimation network and a state implementation network; the deep reinforcement learning model DDPG is pre-trained, and the current state, behavior, reward value and next state of the pre-training stage are stored in a cache region; (2) an auto-encoder is trained, feature filtering is performed on an input state by using the encoder of the trained auto-encoder to obtain a feature map corresponding to the filtered input state, and the feature map is stored in the cache region; and (3) a convolution kernel in the pre-trained DDPG model is pruned, action prediction is performed by using the pruned DDPG model, and a predicted action is output and executed.

Owner:ZHEJIANG UNIV OF TECH

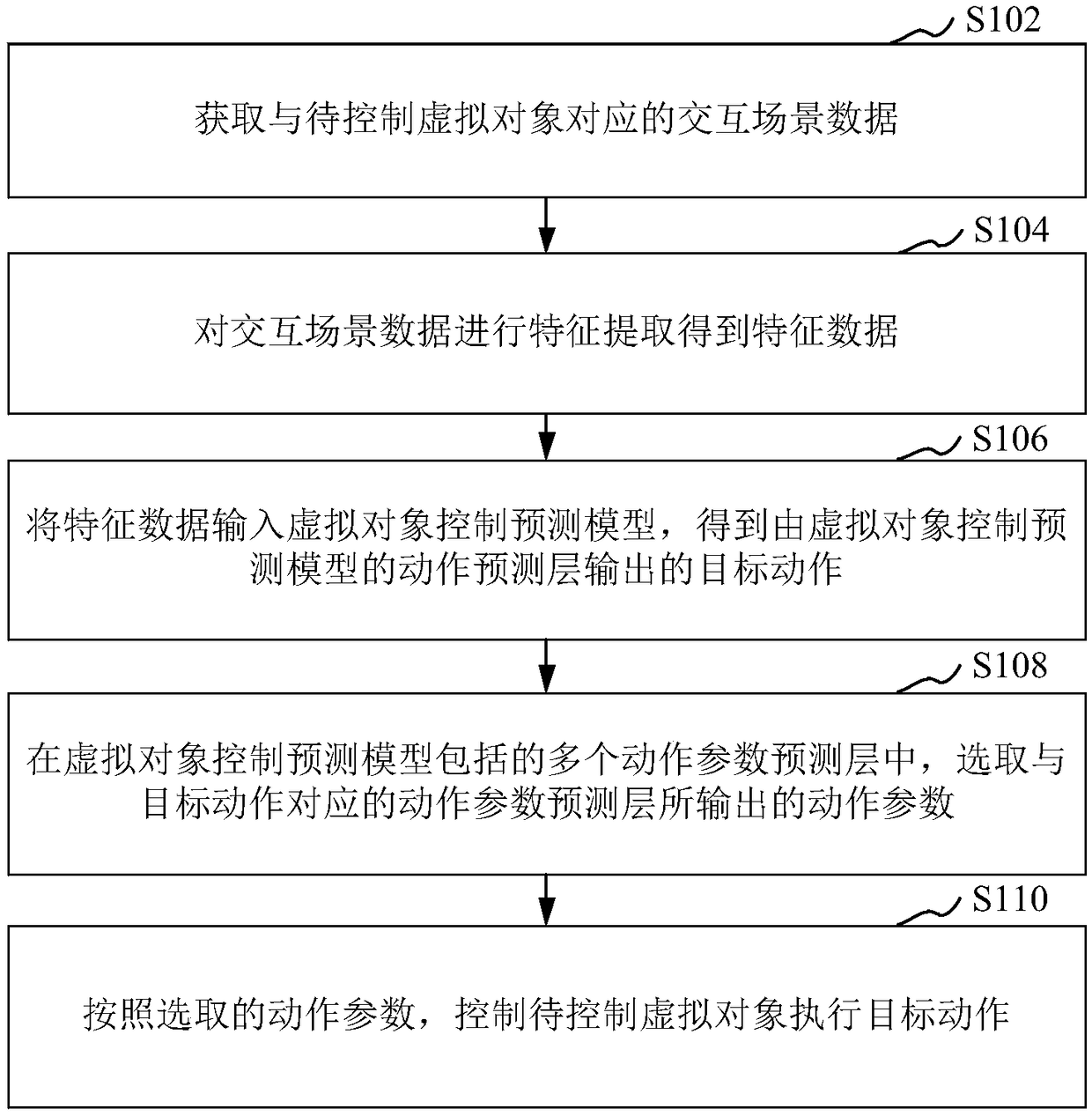

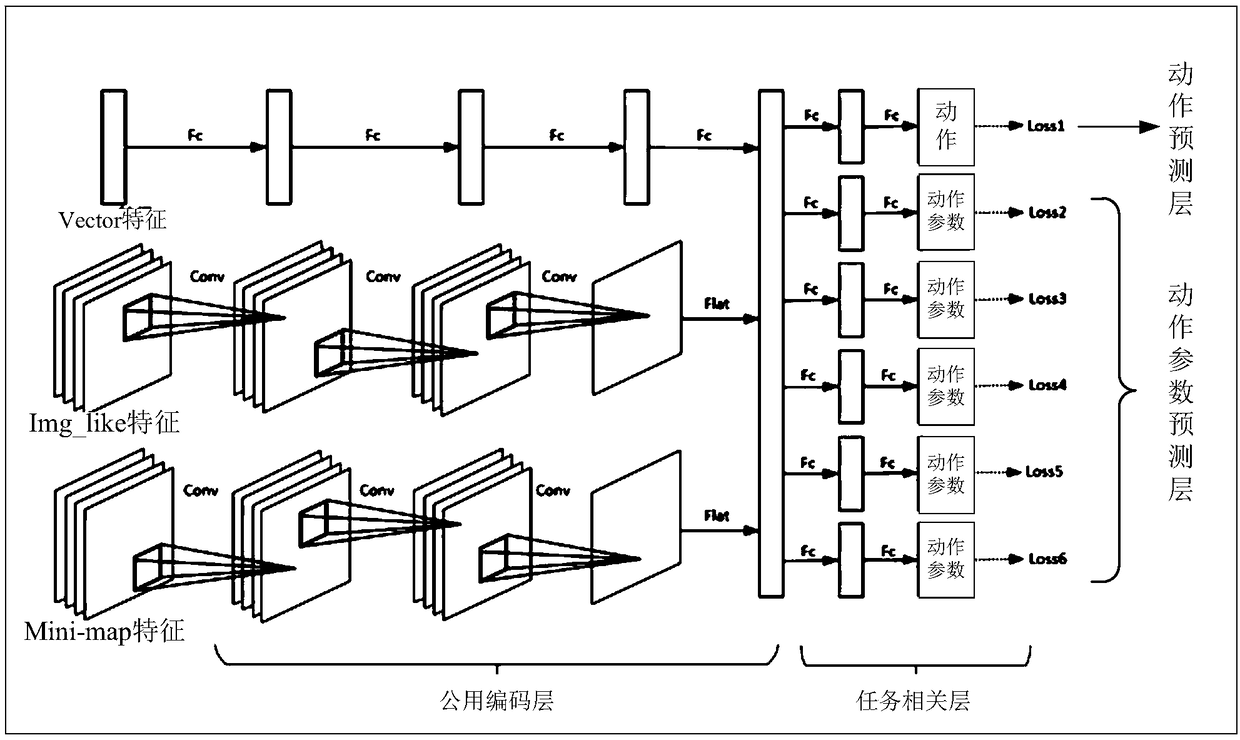

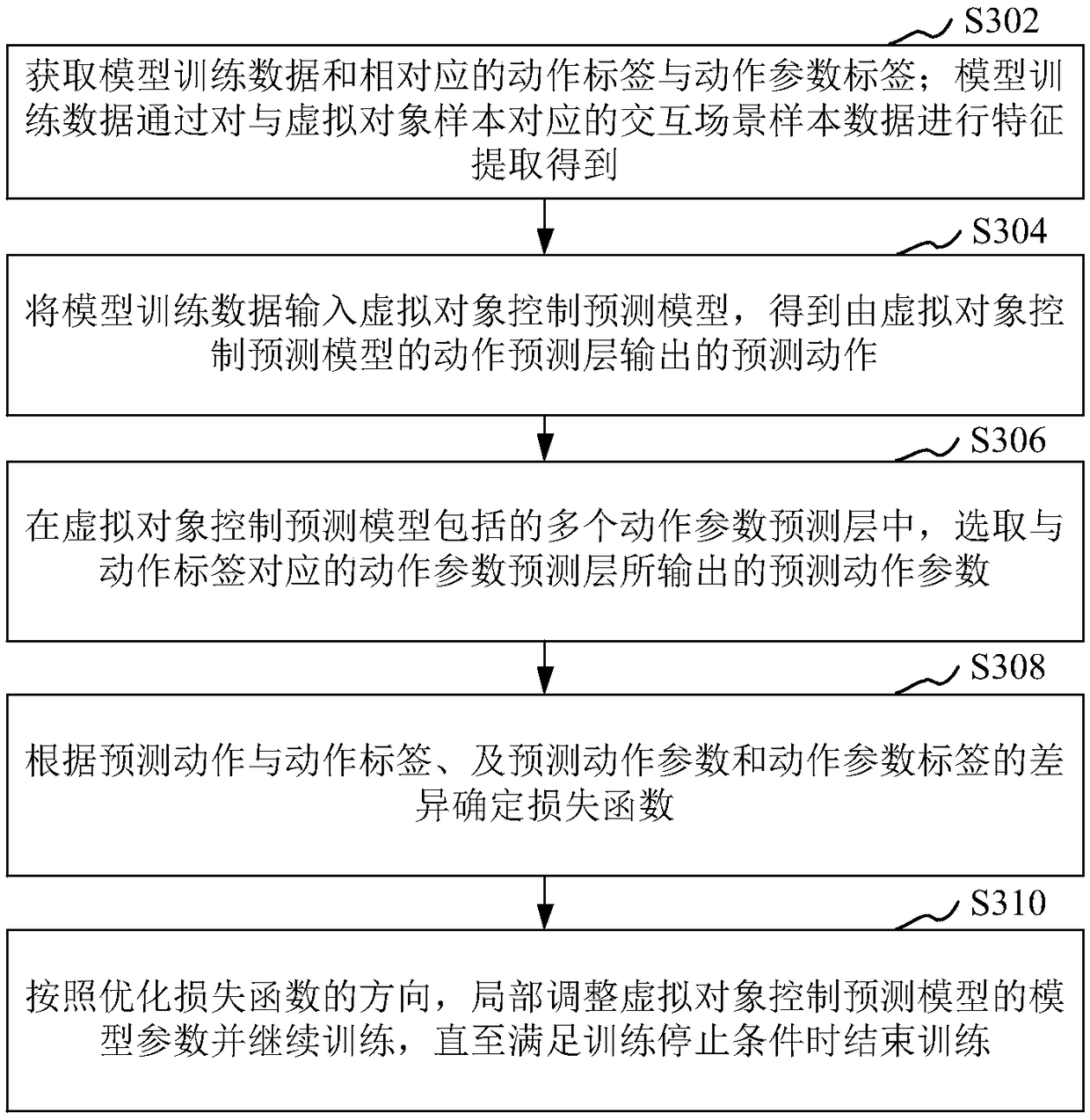

Virtual object control and model training method, device, storage medium and equipment

Active | CN109464803A | Realize automatic control | Improve control efficiency | Video games | Feature extraction | Target–action

The invention relates to a virtual object control and model training method, a device, a storage medium and equipment, wherein the virtual object control method comprises the following steps: acquiring interactive scene data corresponding to a virtual object to be controlled; carrying out feature extraction on the interactive scene data to obtain feature data; inputting the feature data into a virtual object control prediction model to obtain a target action output by an action prediction layer of the virtual object control prediction model; selecting the action parameters output by the action parameter prediction layer that corresponds to the target action, from among a plurality of action parameter prediction layers included in the virtual object control prediction model; and controlling the virtual object to be controlled to execute the target action according to the selected action parameters. The virtual object control and model training method, the device, the storage medium and equipment provided by the invention can improve the control efficiency of the virtual object.

Owner:TENCENT TECH (SHENZHEN) CO LTD
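
The two-stage selection in this abstract (first a target action, then the parameters from the layer matching that action) can be sketched as follows. The action scores, the parameter heads and the feature layout are hypothetical stand-ins for the outputs of a trained model, which the abstract does not specify.

```python
def select_action_and_params(action_scores, param_heads, features):
    """Pick the highest-scoring action, then query only its parameter head.

    action_scores: dict mapping action name -> score, standing in for the
                   output of the action prediction layer (assumed form).
    param_heads:   dict mapping action name -> callable that returns the
                   parameters for that action (assumed form).
    features:      feature data extracted from the interactive scene.
    """
    target_action = max(action_scores, key=action_scores.get)
    params = param_heads[target_action](features)
    return target_action, params

# Hypothetical heads: each action has its own parameter prediction layer.
param_heads = {
    "move": lambda f: {"direction": f[0]},
    "attack": lambda f: {"target_id": int(f[1])},
}
action_scores = {"move": 0.2, "attack": 0.7}
features = [0.5, 3.0]

action, params = select_action_and_params(action_scores, param_heads, features)
```

Keeping one parameter head per action means only the head for the chosen action has to be evaluated at control time.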

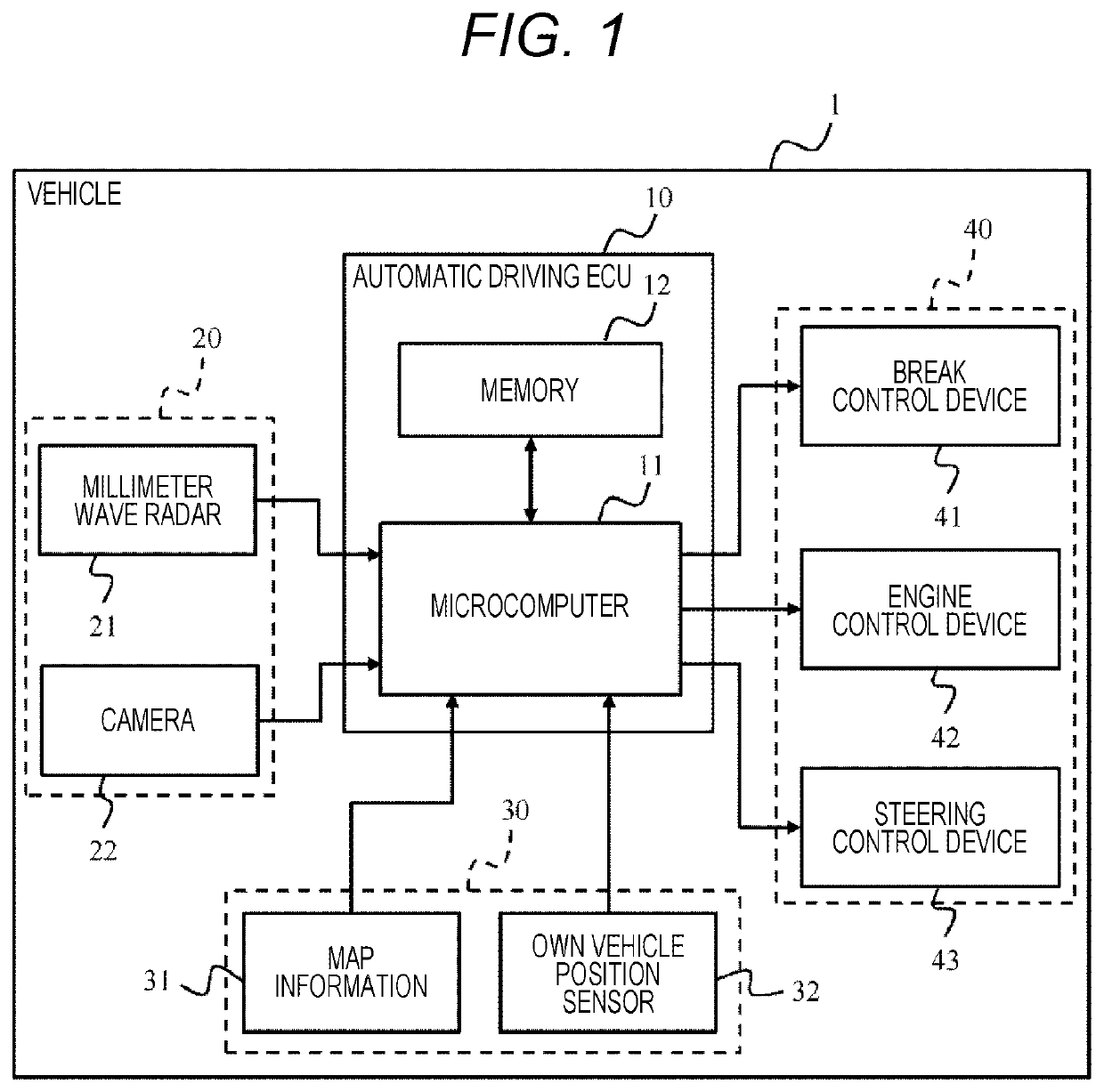

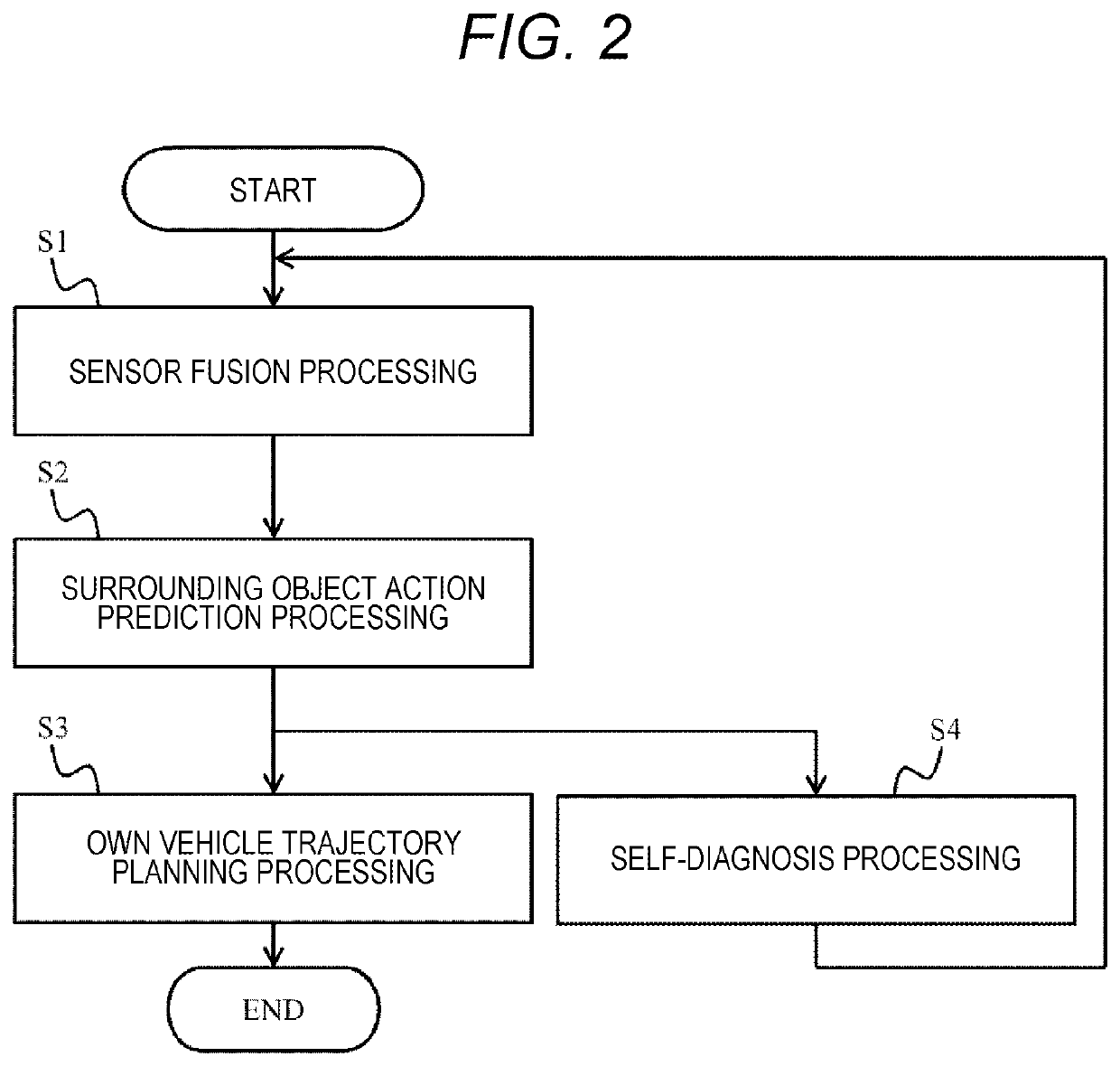

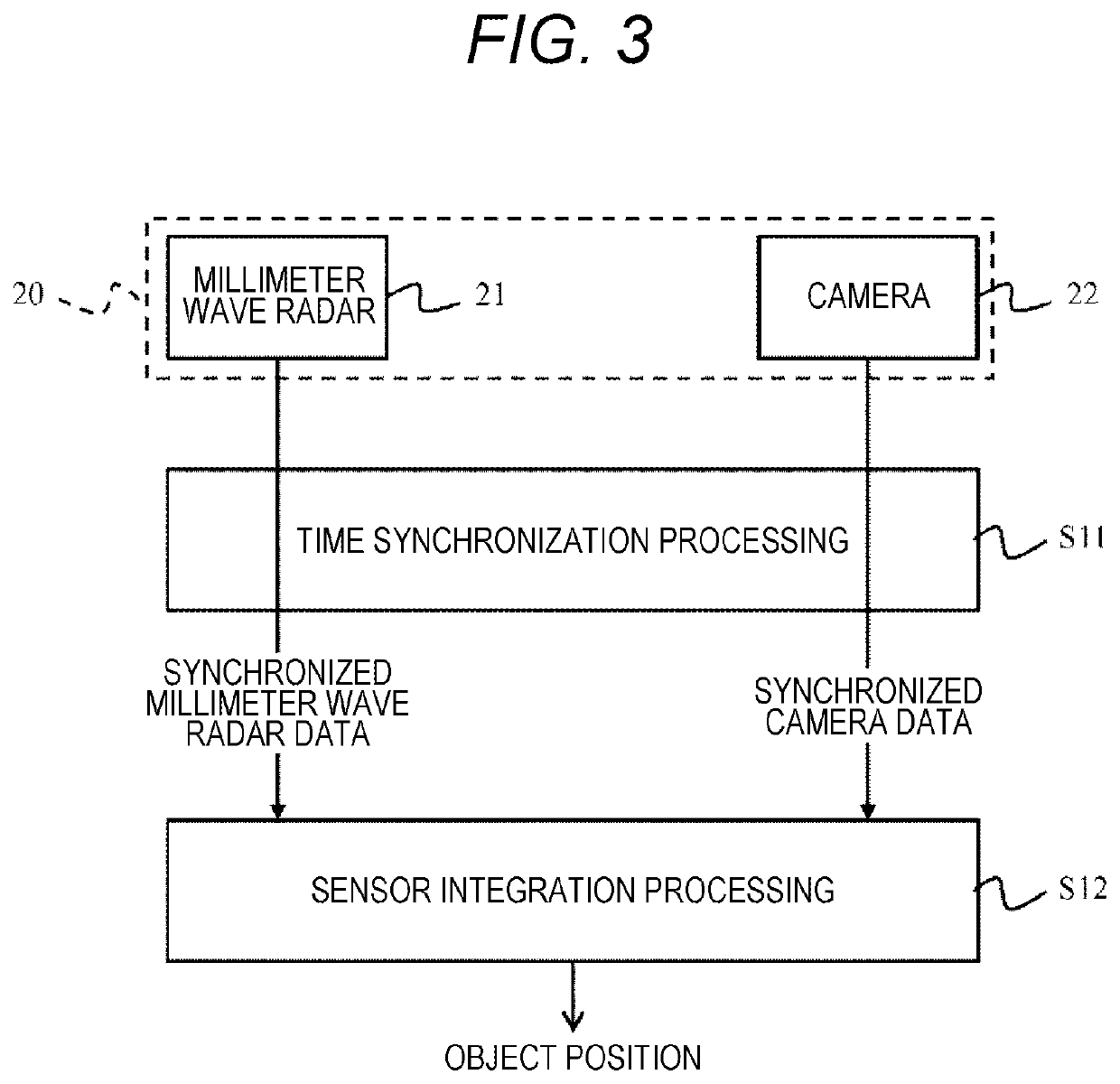

Electronic Control Device for Vehicle

Active | US20200070847A1 | Registering/indicating working of vehicles | Inference methods | Simulation | Action prediction

An electronic control device for a vehicle according to the present invention includes an action prediction unit that predicts the action of an object around the vehicle on the basis of external information acquired from external information detection units that detect external information of the vehicle, and a determination unit for a detection unit that determines whether an abnormality has occurred in the external information detection unit by comparing external information acquired from the external information detection unit at the time corresponding to a prediction result of the action prediction unit to the prediction result of the action prediction unit.

Owner:HITACHI ASTEMO LTD
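
The comparison this abstract describes, between a predicted object action and what the detection unit later reports, might look like the sketch below. The constant-velocity motion model, the 2 m tolerance and all names are assumptions made for illustration; the abstract does not state how the prediction or the comparison is performed.

```python
import math

def predict_position(position, velocity, dt):
    """Constant-velocity prediction of an object's position after dt seconds.

    This simple motion model is an assumption for illustration only.
    """
    return (position[0] + velocity[0] * dt, position[1] + velocity[1] * dt)

def detection_unit_is_abnormal(predicted, observed, tolerance_m=2.0):
    """Flag the detection unit when the observation strays too far from the prediction.

    tolerance_m is a hypothetical threshold in metres.
    """
    return math.dist(predicted, observed) > tolerance_m

# Object seen at the origin moving 10 m/s along x; predict 0.5 s ahead.
predicted = predict_position((0.0, 0.0), (10.0, 0.0), 0.5)

consistent = detection_unit_is_abnormal(predicted, (5.5, 0.2))
inconsistent = detection_unit_is_abnormal(predicted, (12.0, 0.0))
```

A production system would presumably require several consecutive mismatches before declaring a fault, since a single deviation can also mean the object changed course.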

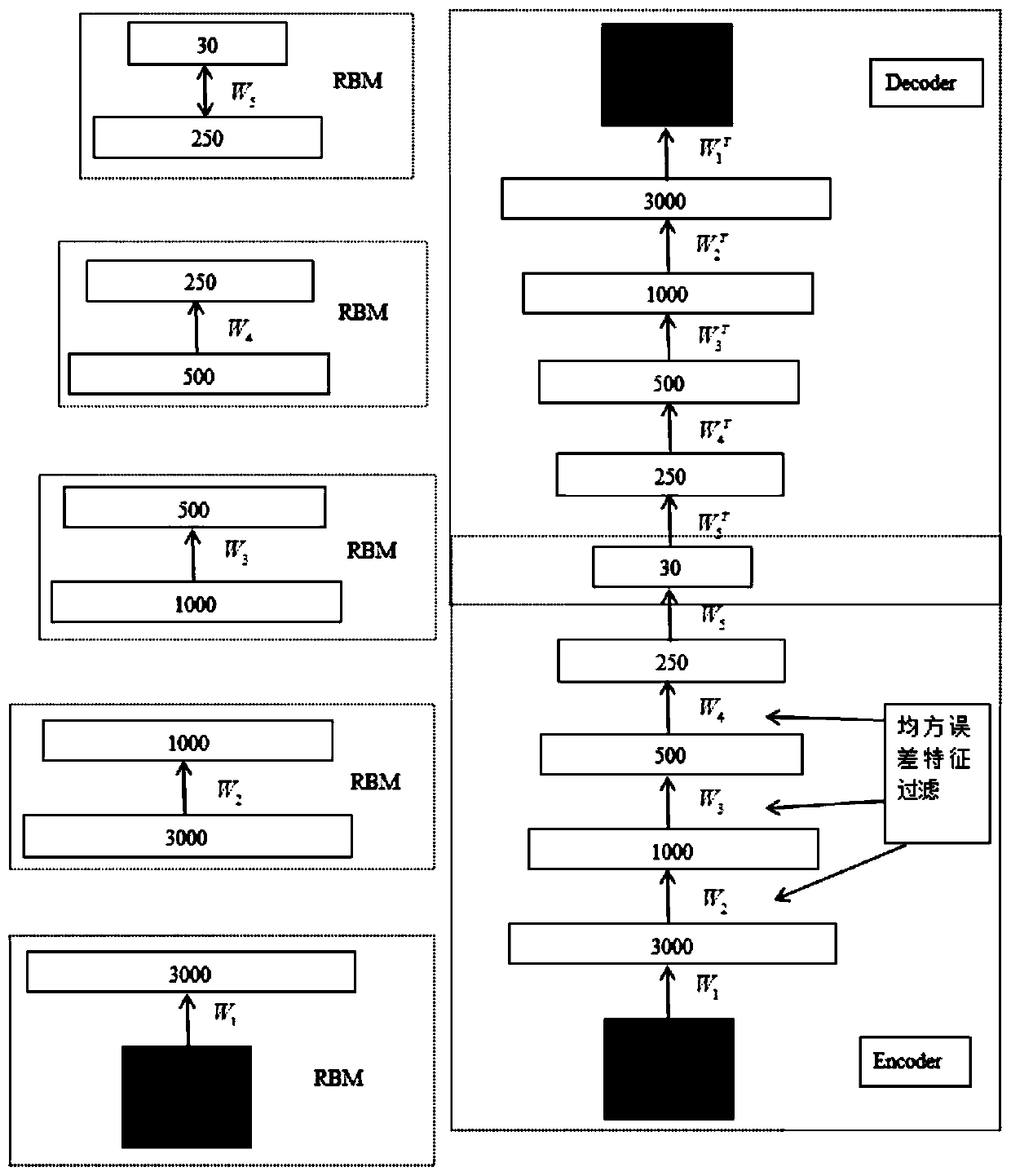

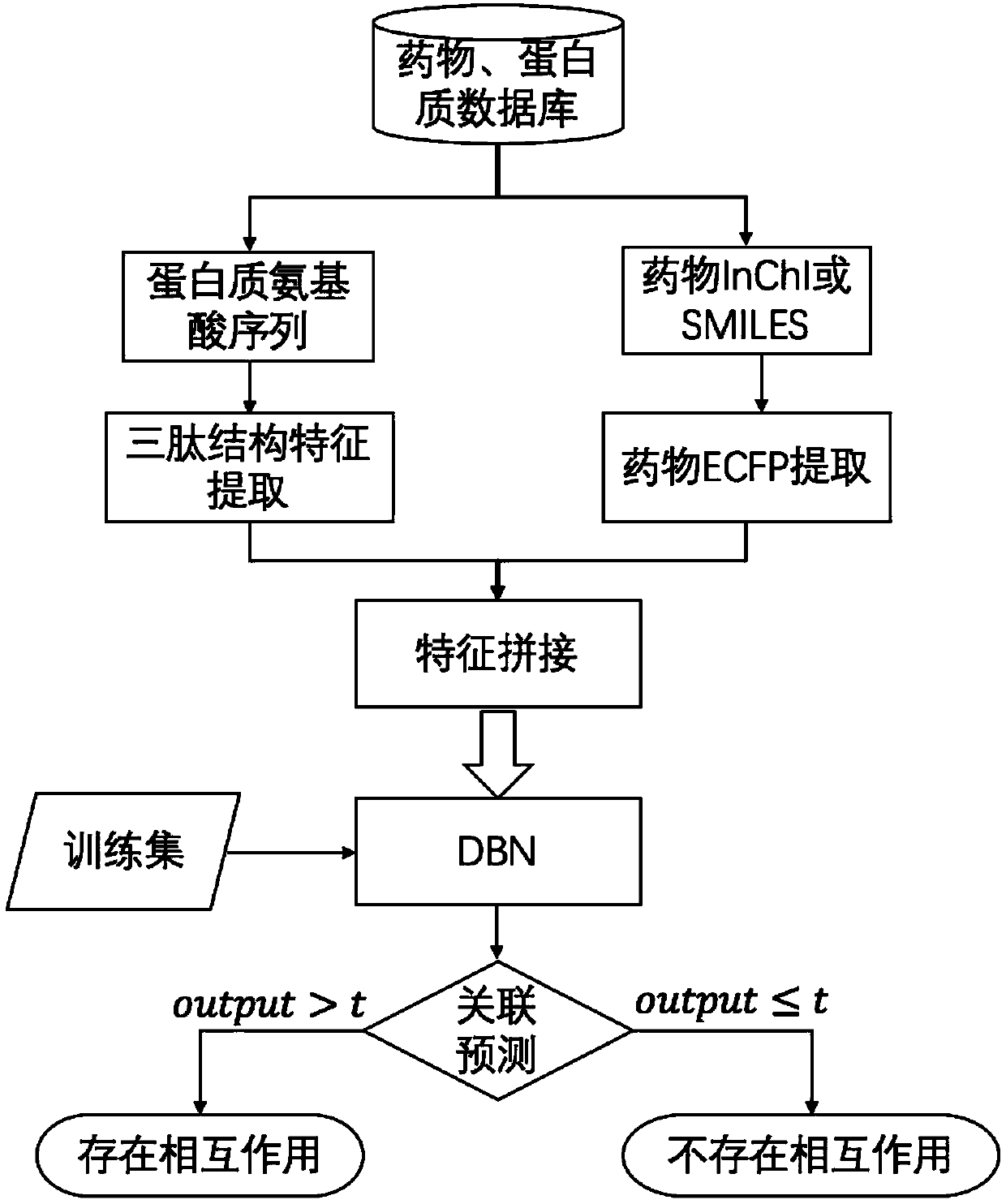

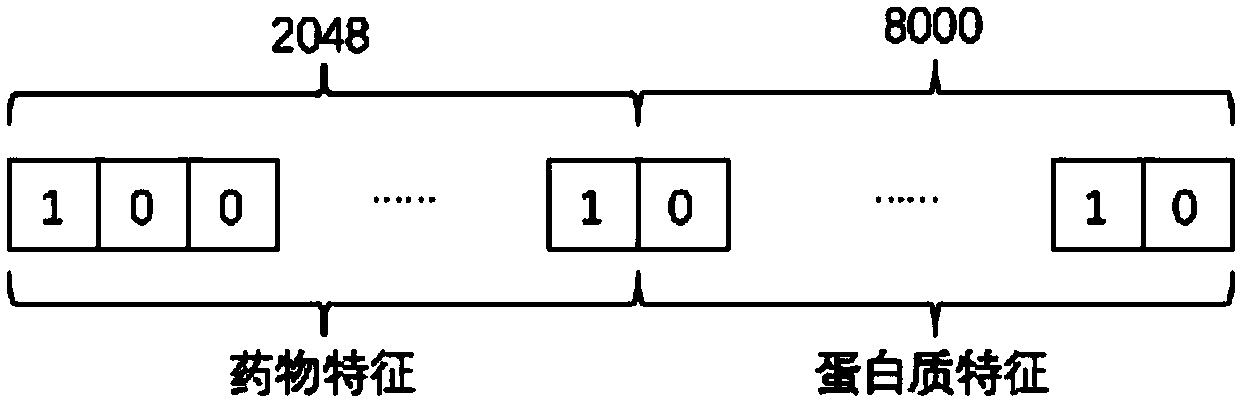

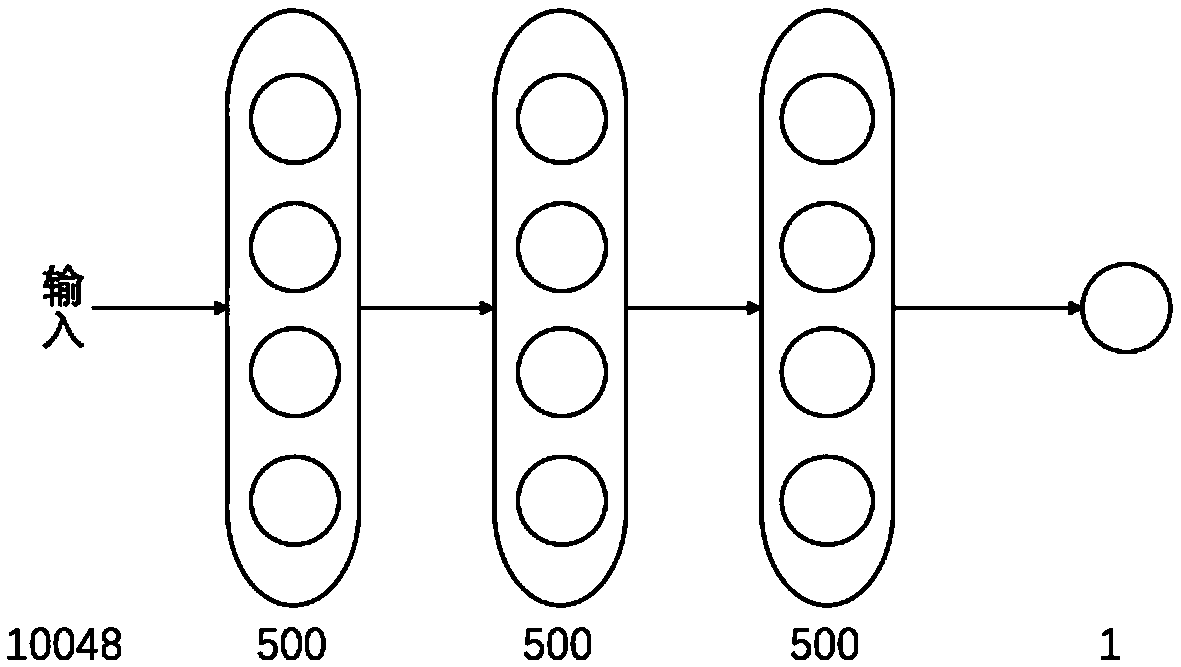

DBN algorithm based drug targeting protein action prediction method

Inactive | CN108959841A | Effective use of biochemical properties | Good foundation in biochemical interpretation | Neural architectures | Neural learning methods | Medicine | Drug development

The invention discloses a DBN-algorithm-based method for predicting drug-target protein interactions. The method comprises: extracting the extended-connectivity fingerprint of the drug from its molecular structure; extracting the tripeptide structural features of the protein from its amino acid sequence; concatenating the drug fingerprint and the protein tripeptide features to form a drug-protein feature vector; inputting the feature vector to a deep belief network (DBN), whose output is the probability that the input drug-protein pair interacts; and finally selecting a suitable threshold to judge whether the pair is associated. The method can rapidly propose possible drug-target protein interaction pairs without manual intervention, and can accordingly save drug development and testing costs and speed up drug discovery and the discovery of new functions of existing drugs.

Owner:SOUTH CHINA AGRI UNIV
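
The data flow in this abstract (concatenate drug and protein features, score the pair, apply a threshold) can be sketched as below. The scoring function here is a single logistic unit used purely as a placeholder; it is not a deep belief network, and the weights, feature values and threshold are hypothetical.

```python
import math

def build_feature_vector(drug_fingerprint, protein_tripeptide_features):
    """Concatenate the drug and protein features into one vector."""
    return list(drug_fingerprint) + list(protein_tripeptide_features)

def placeholder_score(features, weights, bias=0.0):
    """Stand-in for the trained network: one logistic unit, NOT a DBN."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def predict_interaction(probability, threshold=0.5):
    """Judge the pair as interacting when the probability reaches the threshold."""
    return probability >= threshold

# Toy inputs: a 2-bit fingerprint and one tripeptide frequency (hypothetical).
vector = build_feature_vector([1, 0], [0.5])
probability = placeholder_score(vector, weights=[2.0, -1.0, 1.0])
interacts = predict_interaction(probability)
```

In practice the threshold would be tuned on held-out data, for example to balance false positives against missed interactions.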

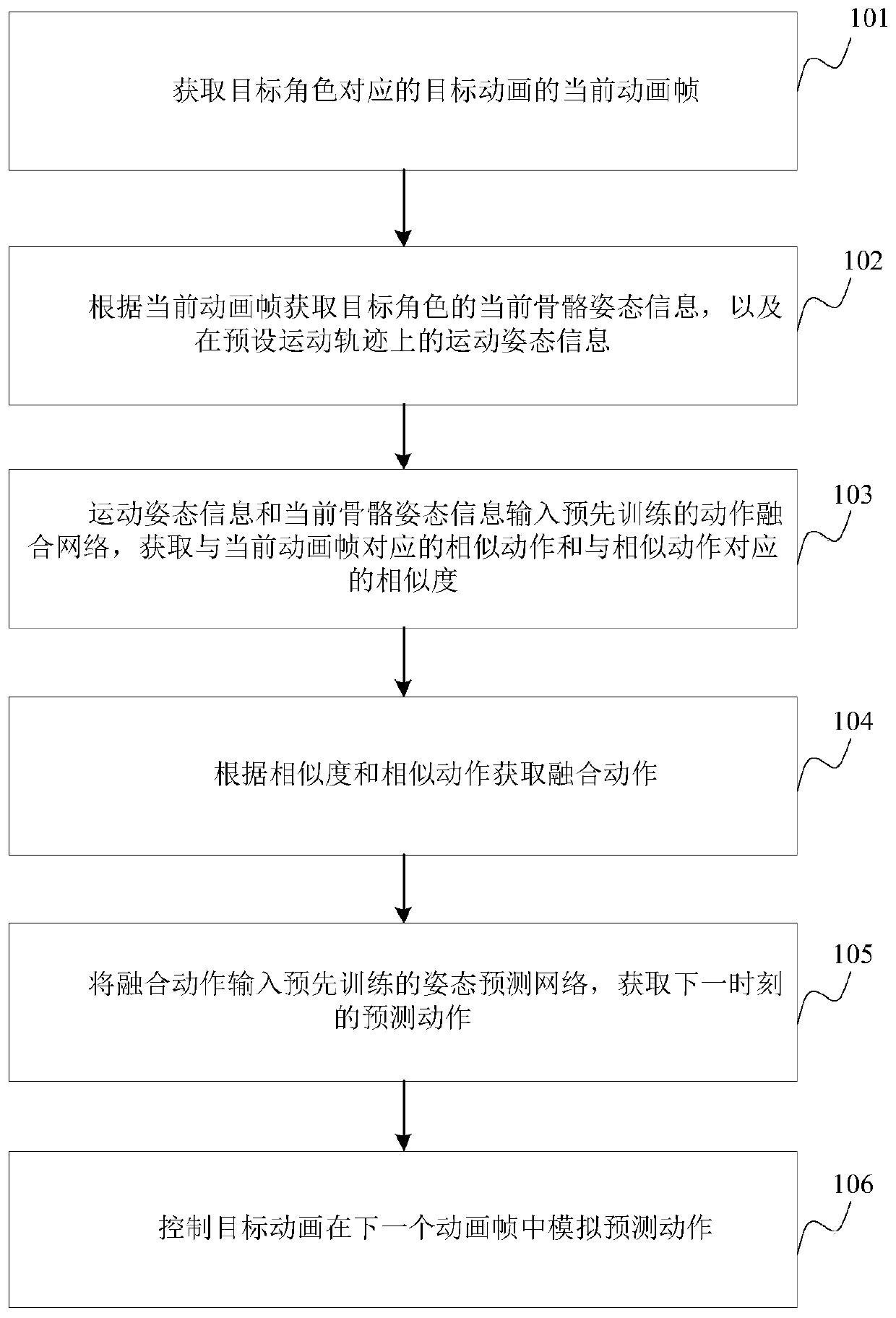

Animation-based action prediction generation method and device

The invention provides an animation-based action prediction generation method and device, wherein the method comprises the steps of obtaining a current animation frame of a target animation corresponding to a target role; obtaining the current skeleton posture information of the target character and the motion posture information on a preset motion track according to the current animation frame; acquiring a similar action corresponding to the current animation frame and a similarity corresponding to the similar action; obtaining a fusion action according to the similarity and the similar action; obtaining a prediction action at the next moment; and controlling the target animation to simulate the prediction action in the next animation frame. According to the invention, the network learning is carried out on the motion characteristics of an action body with a skeleton structure, such as a human, an animal, etc., in different motion states and the change characteristics between the motion states; and finally, different motion characteristics can be well represented, and the change between different motion states can be naturally fused, so that the advancing animation effect of the people or animals is produced, and the natural effect of the animation effect is ensured.

Owner:TSINGHUA UNIV

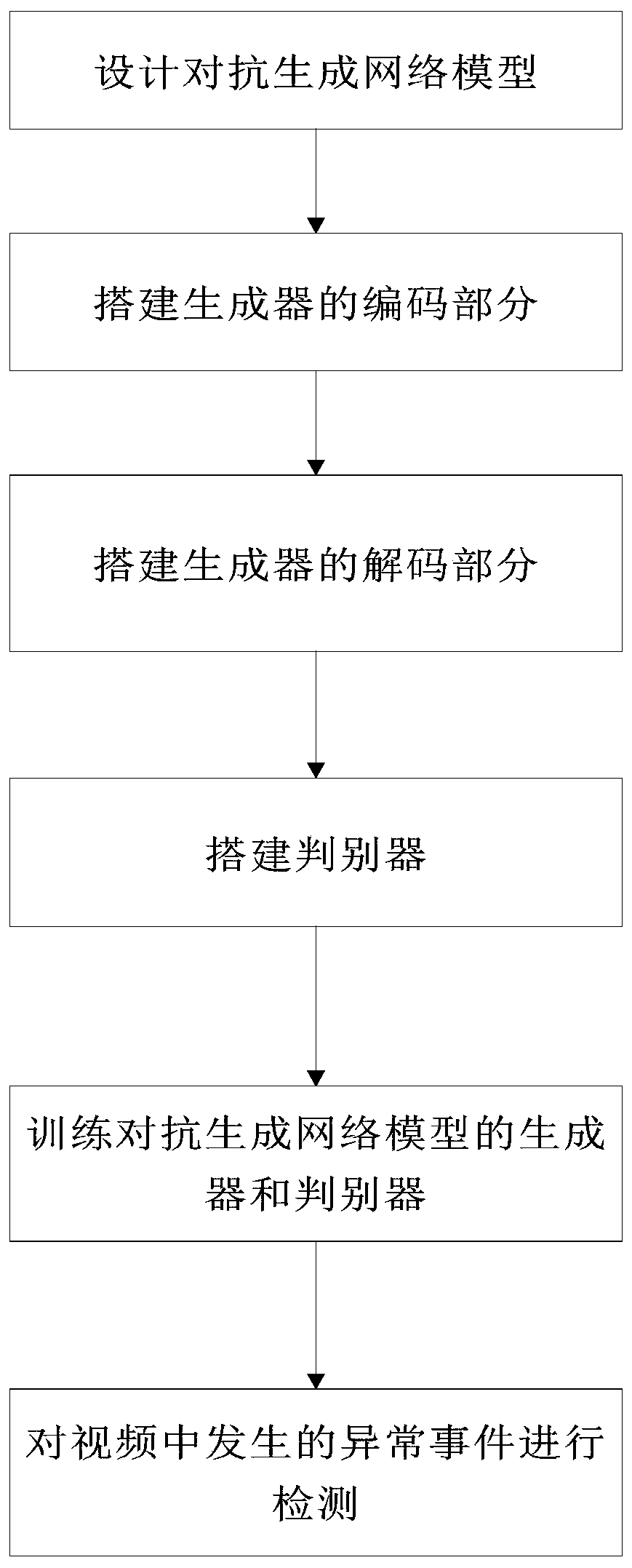

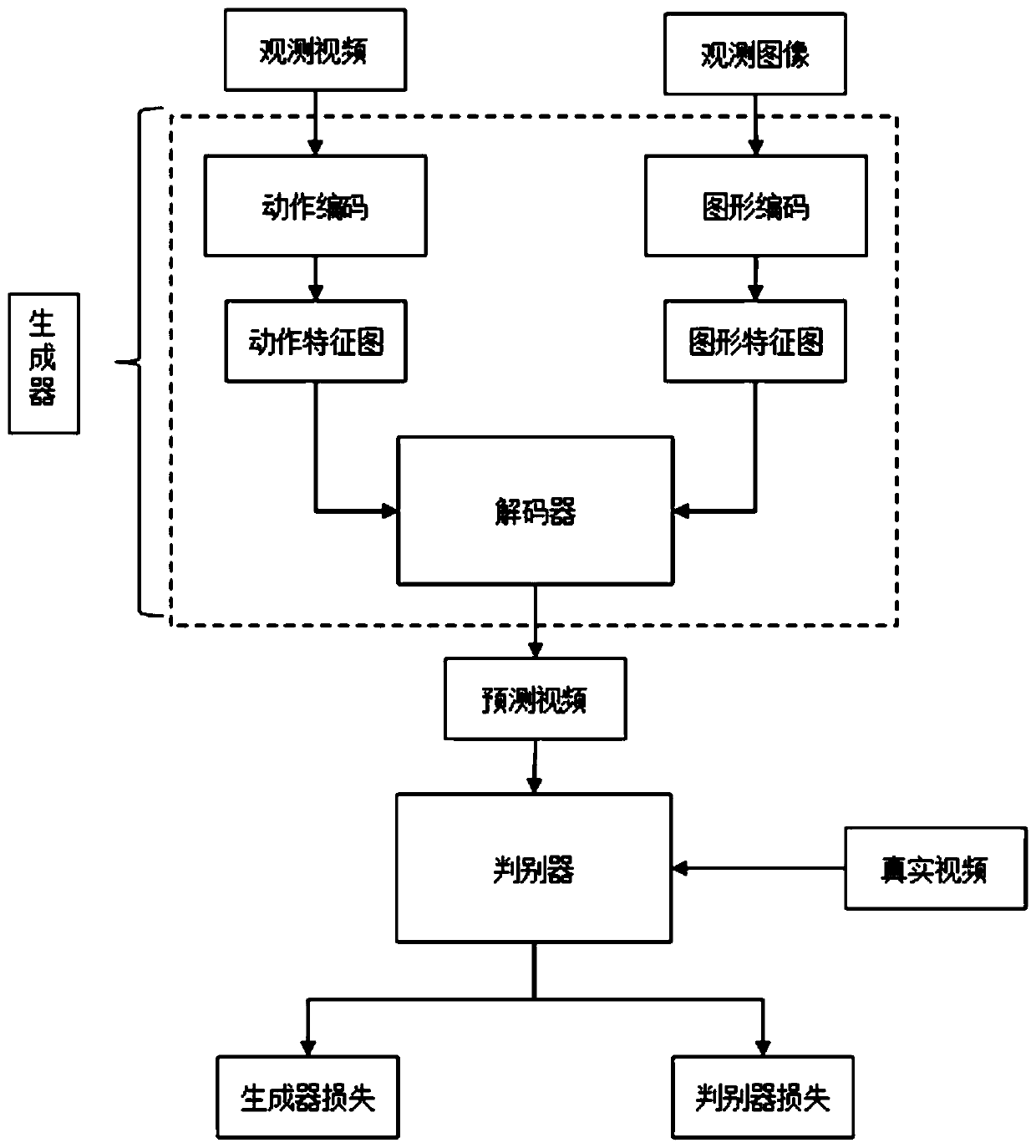

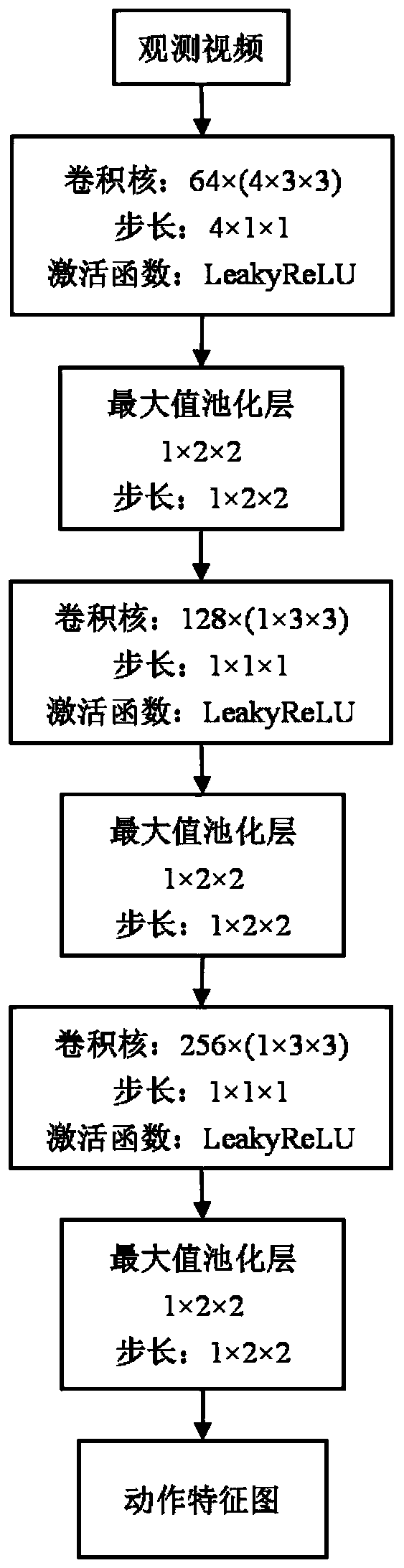

A video abnormal behavior detection method based on action prediction

Active | CN109919032A | Processing speed | Reduce preprocessing time | Character and pattern recognition | Neural architectures | Pattern recognition | Discriminator

The invention discloses a video abnormal behavior detection method based on action prediction. The specific steps are: designing a generative adversarial network model comprising a generator and a discriminator; constructing the encoding part of the generator; constructing the decoding part of the generator; establishing the discriminator; training the generator and the discriminator of the generative adversarial network model; and detecting abnormal events occurring in the video using the resulting optimal generator network. The method uses a portion of the videos of normal behavior to compute statistics of the generation errors, and dynamically generates the anomaly detection thresholds according to different scenes and changes over time, so it can be applied to more varied scenes with improved robustness.

Owner:SOUTH CHINA UNIV OF TECH
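
One simple way to derive a detection threshold from generation errors on normal video is sketched below. The mean-plus-k-standard-deviations rule and the value of k are assumptions chosen for illustration; the abstract only says that thresholds are generated dynamically from the error statistics.

```python
import statistics

def anomaly_threshold(normal_errors, k=3.0):
    """Threshold = mean + k * population std of errors on normal frames.

    The rule and the default k are illustrative assumptions.
    """
    return statistics.mean(normal_errors) + k * statistics.pstdev(normal_errors)

def is_abnormal(generation_error, threshold):
    """A frame is flagged when its generation error exceeds the threshold."""
    return generation_error > threshold

# Hypothetical generation errors measured on normal-behavior frames of one scene.
normal_errors = [0.9, 1.0, 1.1, 1.0]
threshold = anomaly_threshold(normal_errors)

flag_small = is_abnormal(1.1, threshold)
flag_large = is_abnormal(2.0, threshold)
```

Recomputing the threshold per scene or per time window, using only recent normal footage, is one way to make it adapt to scene and time changes.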

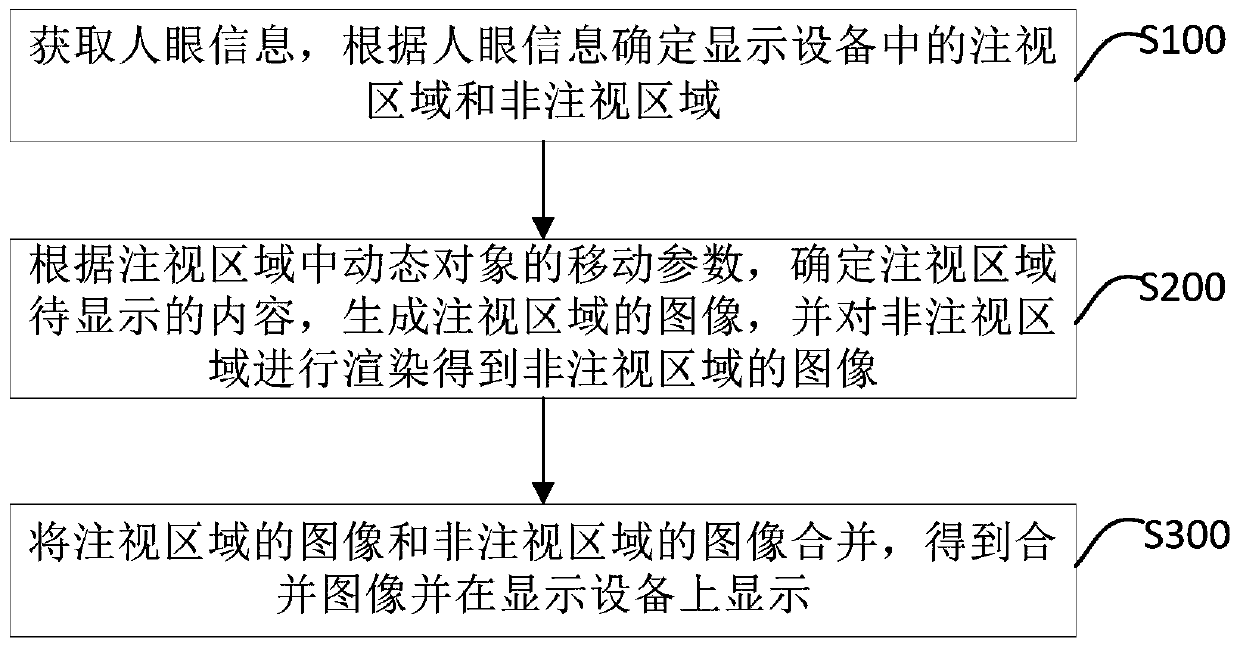

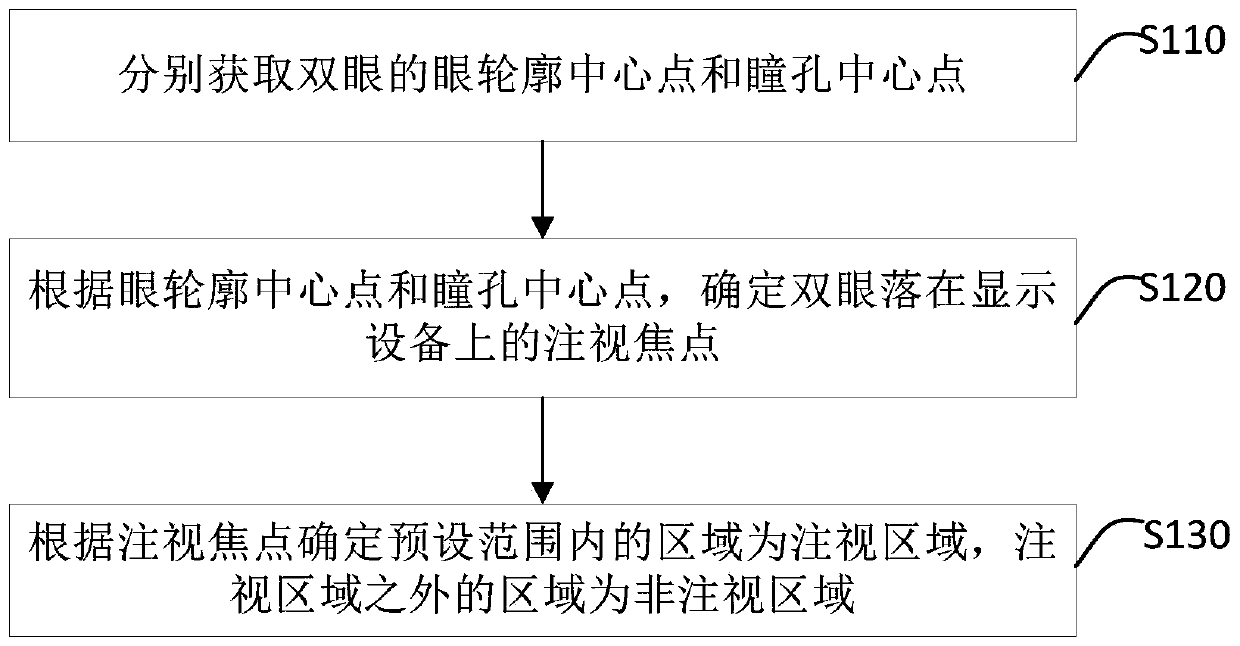

Display method, device, equipment and computer readable storage medium

Active | CN110460831A | Eliminate or avoid ghosting | Guaranteed display clarity | Image analysis | Geometric image transformation | Display device | Action prediction

The invention provides a display method, a device, equipment and a computer readable storage medium. The method comprises the steps of: obtaining human eye information, and determining a gaze region and a non-gaze region in the display equipment according to the human eye information; determining the content to be displayed in the gaze region according to the movement parameters of a dynamic object in the gaze region, generating an image of the gaze region, and rendering the non-gaze region to obtain an image of the non-gaze region; and combining the image of the gaze region and the image of the non-gaze region to obtain a combined image, and displaying the combined image on a display device. According to the display method provided by the invention, the gaze region and the non-gaze region in the display equipment are divided according to the human eye information; by identifying the dynamic object in the gaze region and predicting its action, the content to be displayed in the gaze region can be determined from the action prediction, ghosting of the dynamic object during scene movement is eliminated or avoided, the display clarity of a dynamic picture is ensured, and the display performance of the equipment is improved.

Owner:BOE TECH GRP CO LTD +1

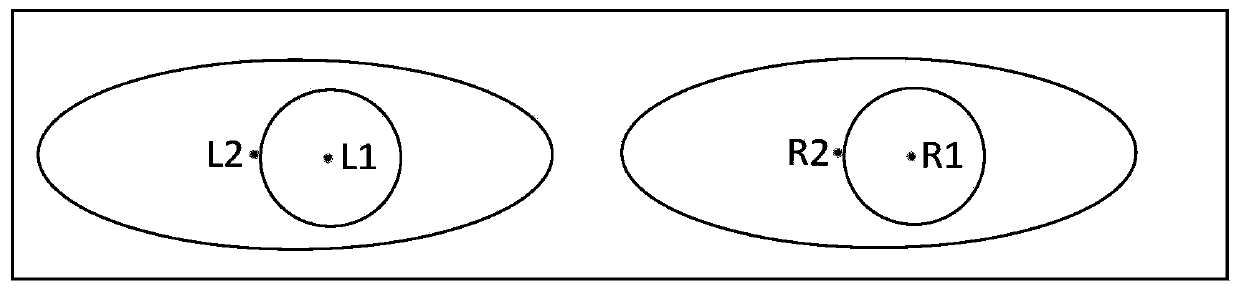

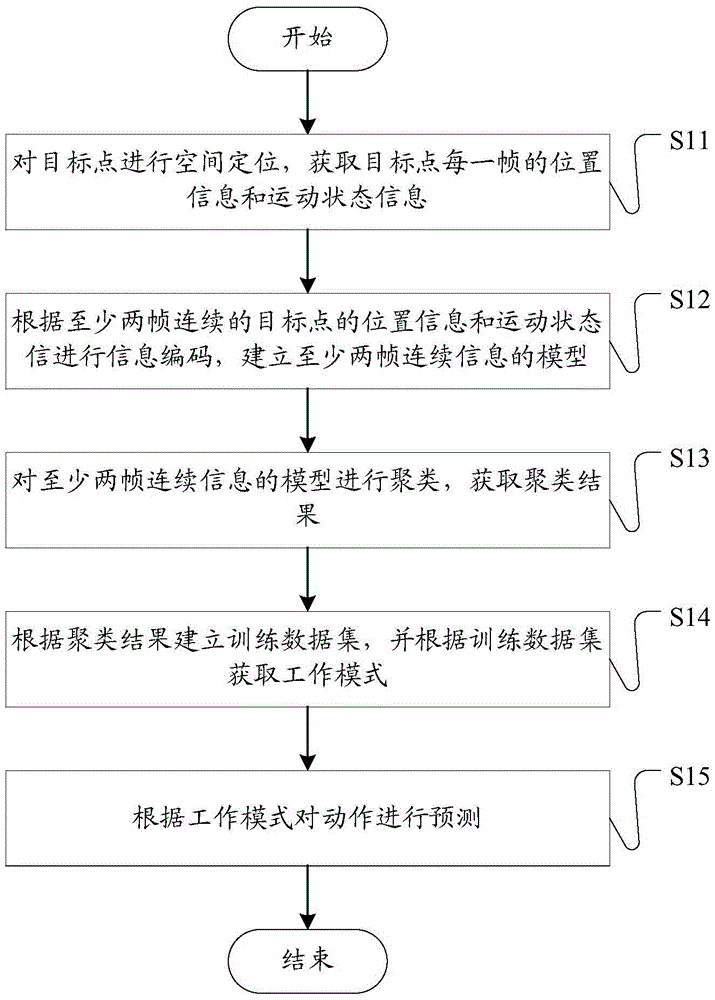

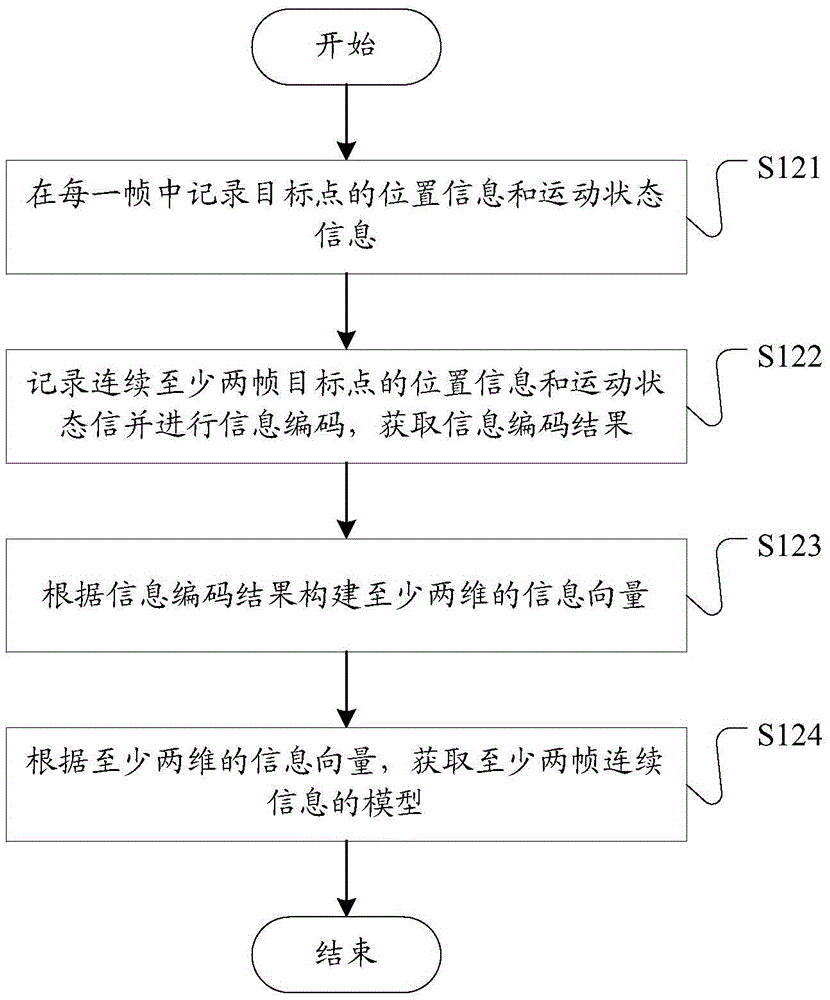

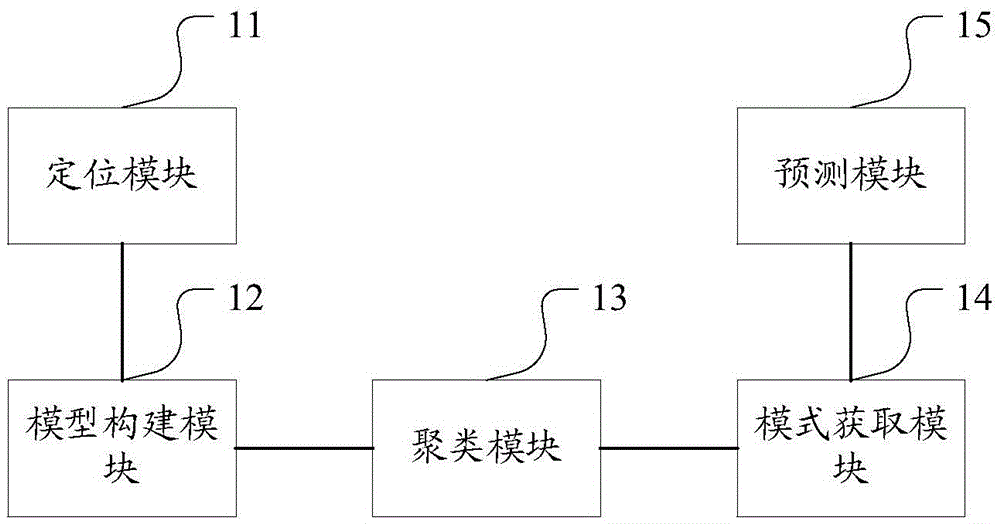

Spatial positioning and clustering based action prediction method and system

Active | CN105488794A | Improve user experience | Reduce latency | Image analysis | Character and pattern recognition | Spatial positioning | Data set

The invention discloses a spatial positioning and clustering based action prediction method and system. The method comprises: performing spatial positioning on target points to obtain position information and motion state information of each frame of the target points; performing information coding according to the position information and the motion state information of at least two frames of continuous target points to establish models of at least two frames of information; clustering the models of the at least two frames of continuous information to obtain a clustering result; establishing a training data set according to the clustering result, and obtaining a working mode according to the training data set; and predicting an action according to the working mode. By implementing embodiments of the invention, the target points are subjected to the spatial positioning and the action is effectively predicted through a clustering technology based on externally input huge data, so that the time delays generated in a signal transmission process are reduced, the subjective delays are effectively reduced, and the user experience is improved.

Owner:SUN YAT SEN UNIV

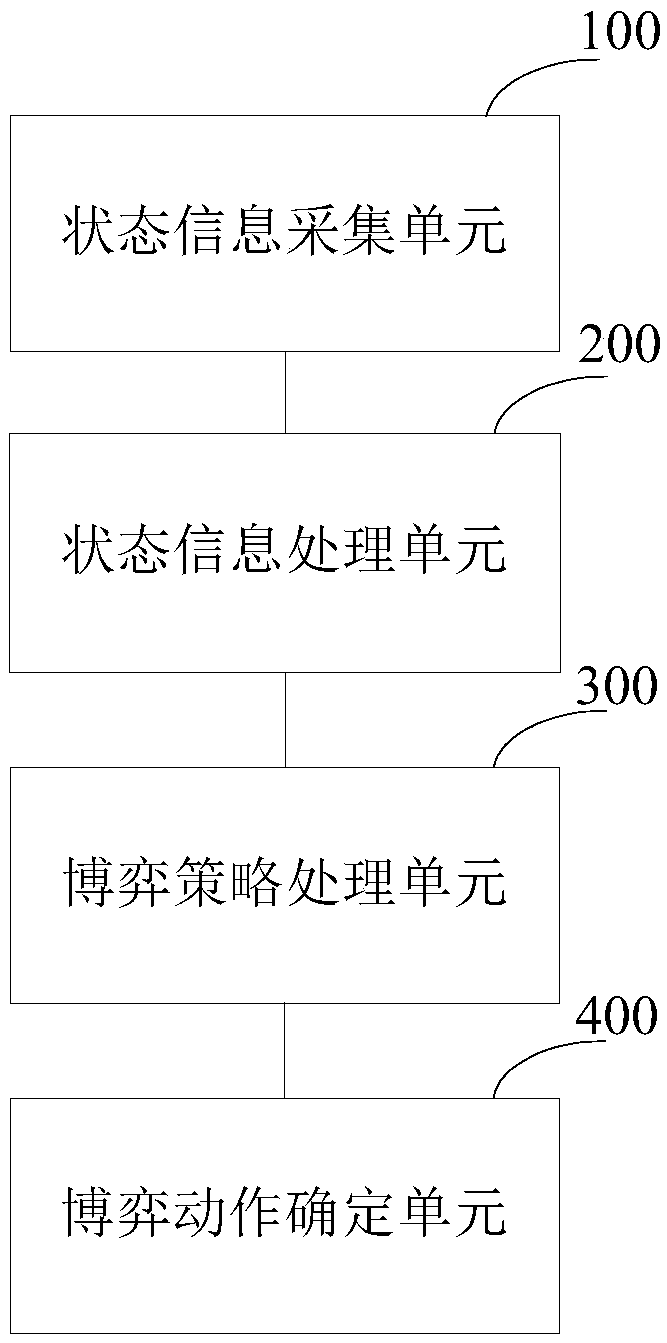

Modeling method oriented to multi-agent synchronous gaming and action prediction system

The invention provides a modeling method oriented to multi-agent synchronous gaming and an action prediction system. The modeling method comprises the steps that a state set and an action set of synchronous gaming problems are obtained; according to the characteristics of the synchronous gaming problems, gaming features and a feature coding method are designed; according to the gaming features and the feature coding method, the state set and the action set are subjected to data preprocessing, and thus a basic feature graph and an action decision graph are obtained; a multi-scale feature fusion synchronous gaming strategy model is established on the basis of a deep neural network; and according to the basic feature graph and the action decision graph, the synchronous gaming strategy model is trained layer by layer, thus the trained synchronous gaming strategy model is obtained, and the synchronous gaming strategy model has high real-time performance while its gaming ability is improved. The action prediction system realized based on the synchronous gaming strategy model is high in accuracy and real-time performance and has good practical application value.

Owner:UNIV OF SCI & TECH OF CHINA

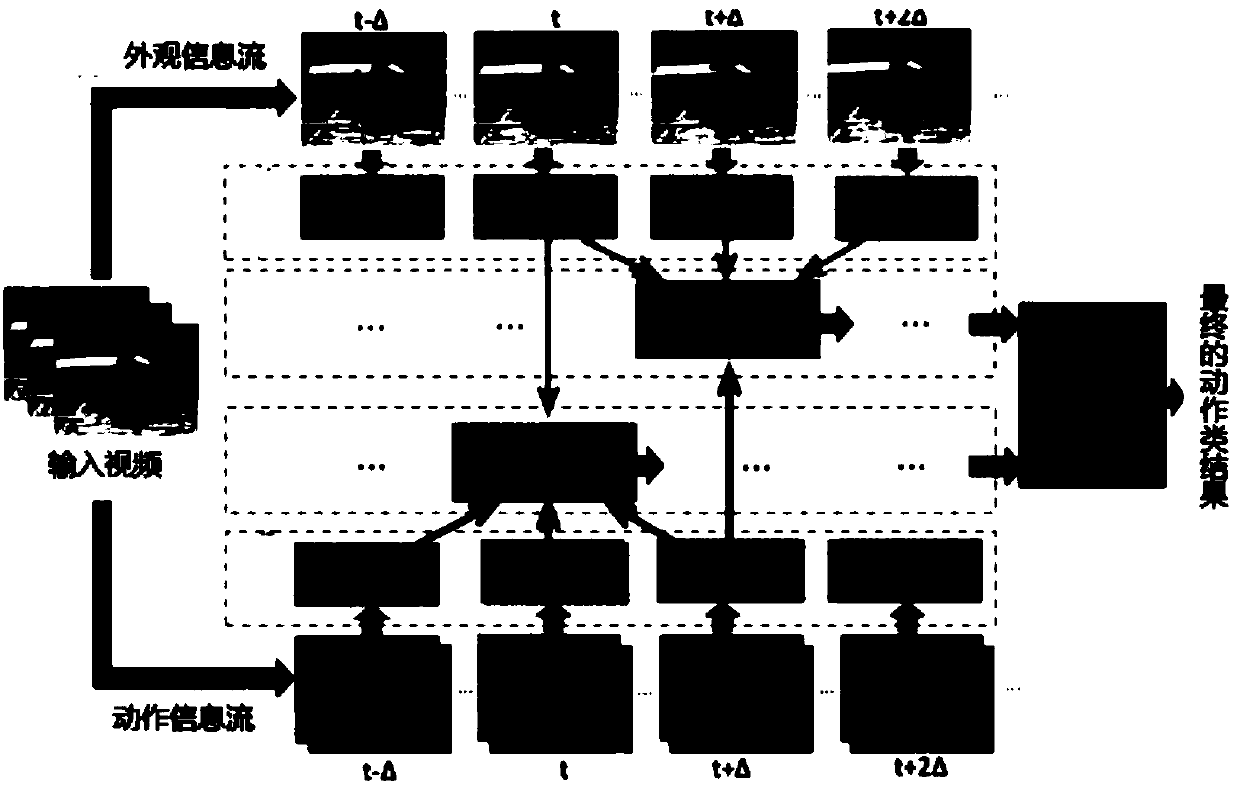

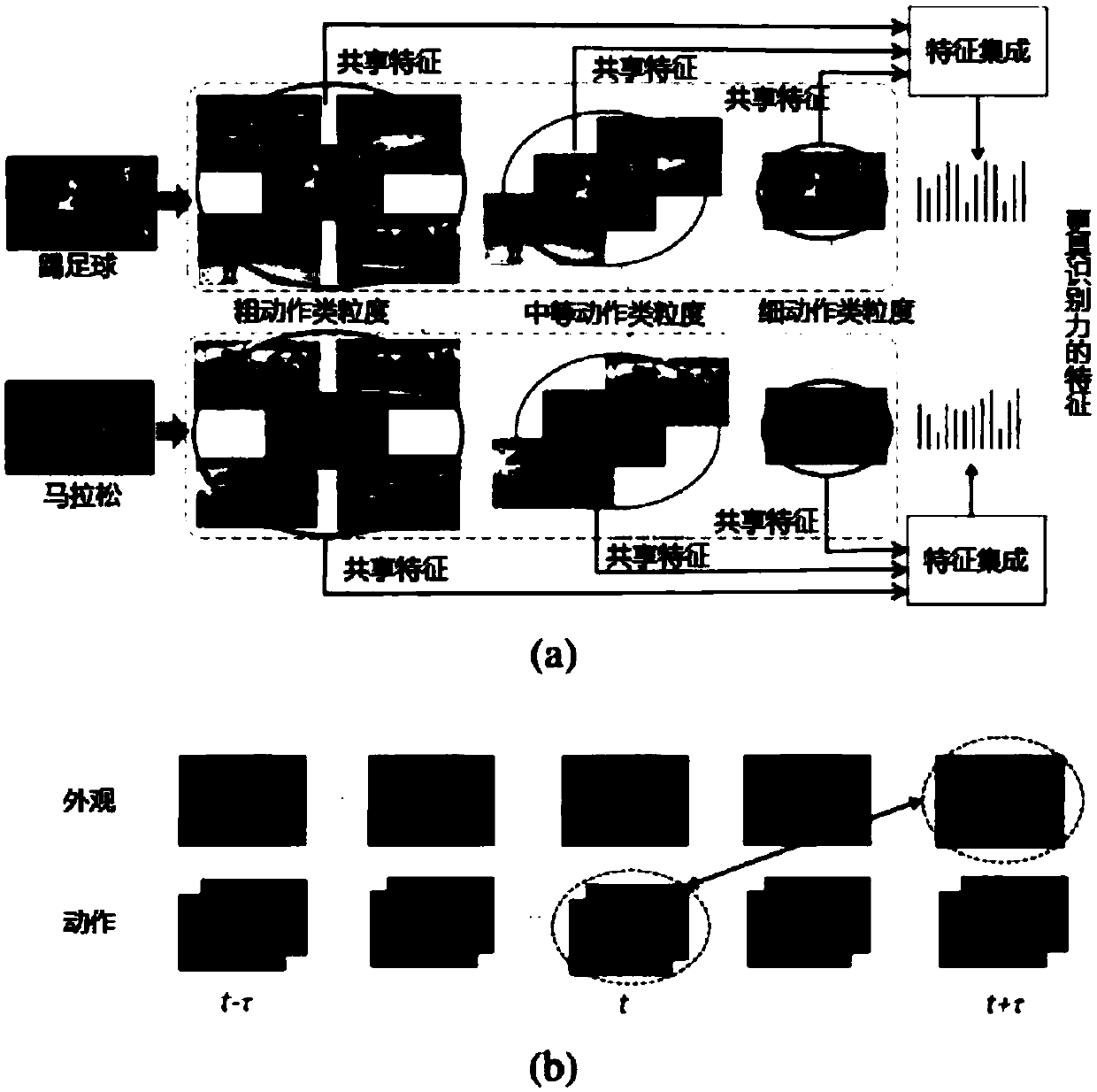

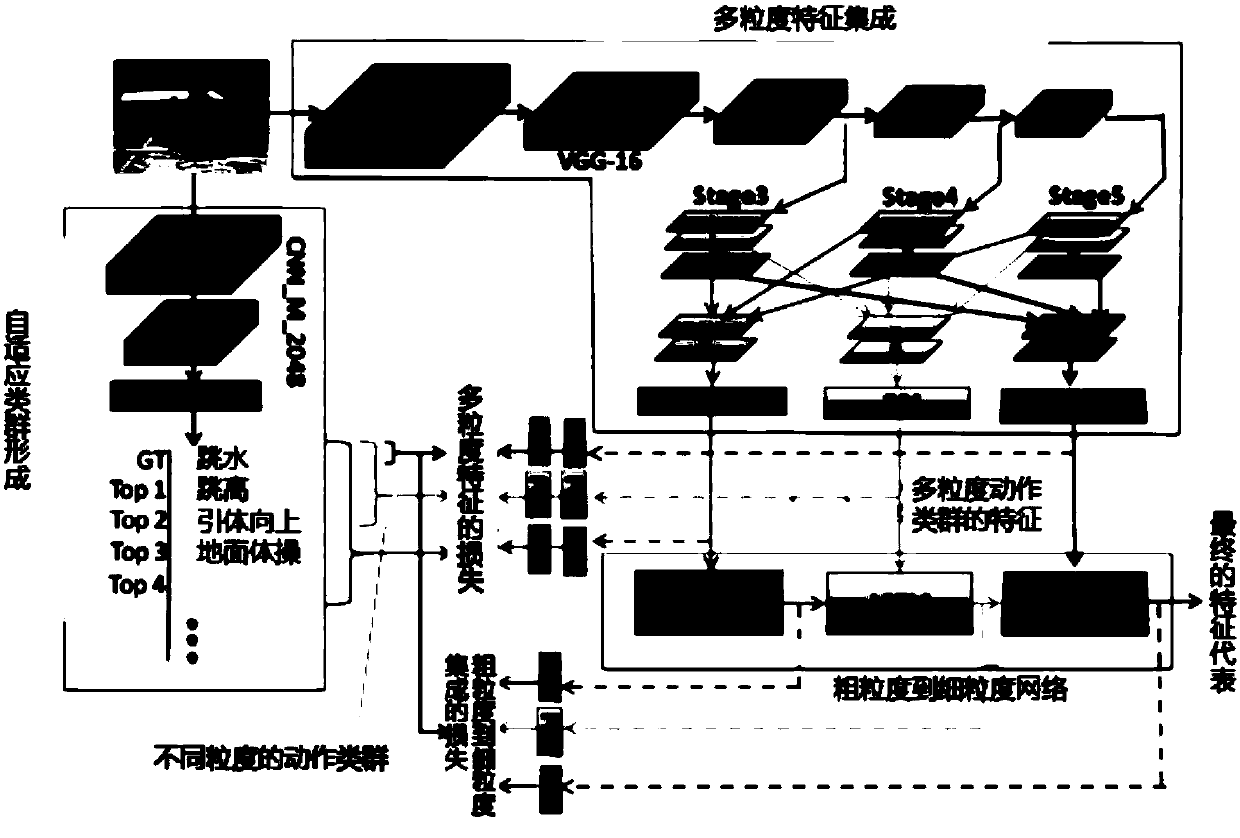

Method for recognizing actions on basis of deep feature extraction asynchronous fusion networks

Inactive | CN108280443A | Character and pattern recognition | Neural architectures | Feature extraction | Short terms

The invention provides a method for recognizing actions on the basis of deep feature extraction asynchronous fusion networks. Its main components are coarse-grained-to-fine-grained networks, asynchronous fusion networks and the deep feature extraction asynchronous fusion networks. The method includes: inputting each spatial frame of the appearance stream and each short-term optical flow stack of the motion stream of the input video into the coarse-grained-to-fine-grained networks; integrating deep features from a plurality of action class granularities; creating an accurate feature representation; inputting the extracted features into the asynchronous fusion networks, which integrate information stream features from different time points; acquiring the action class prediction results; combining the different action prediction results with one another by the deep feature extraction asynchronous fusion networks; and determining the final action class label of the input video. The method has the advantages that deep features can be extracted from multiple action class granularities and integrated, an accurate action representation can be obtained, complementary information in a plurality of information streams can be effectively utilized by means of asynchronous fusion, and the action recognition accuracy can be improved.

Owner:SHENZHEN WEITESHI TECH

Action prediction

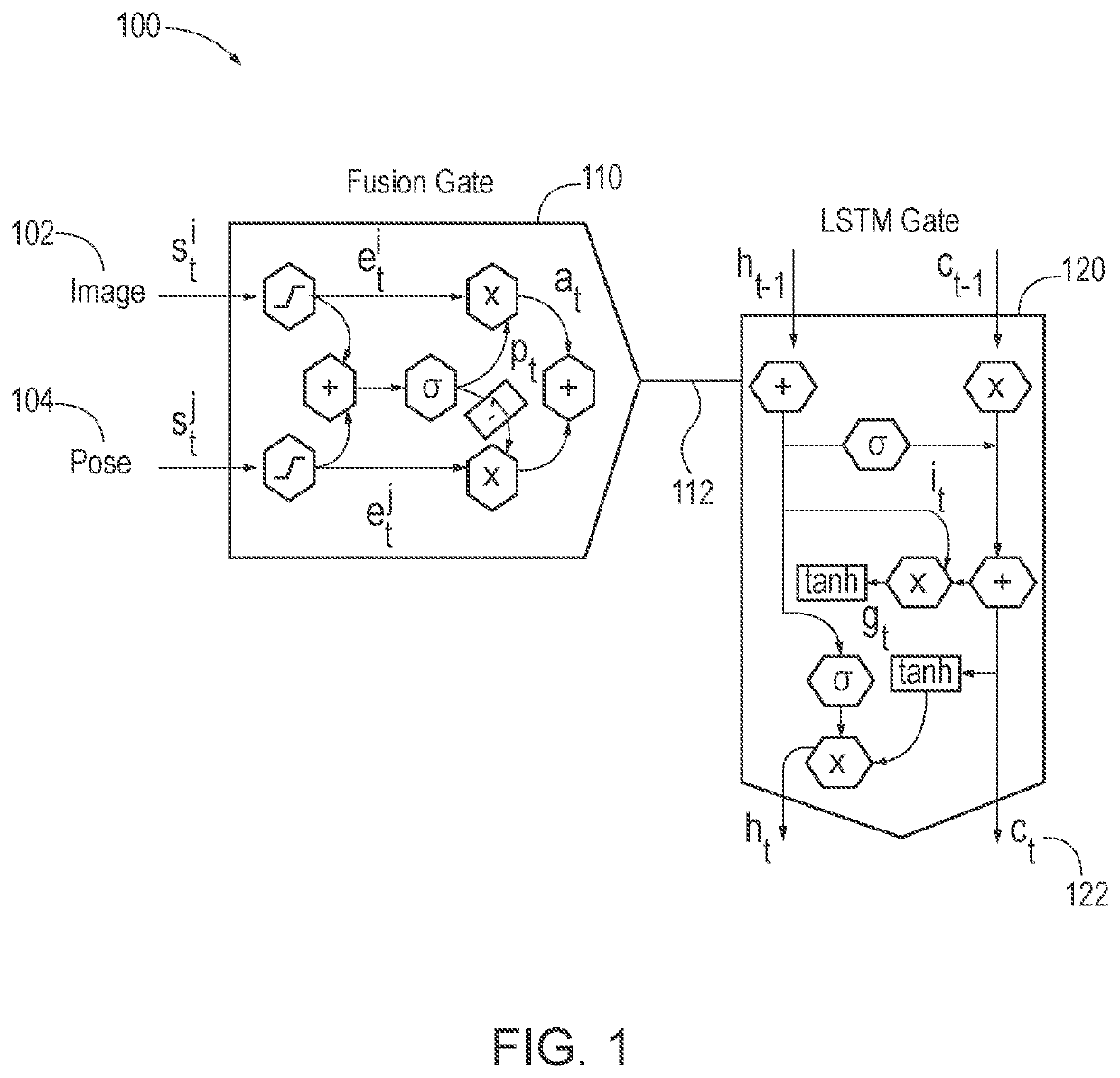

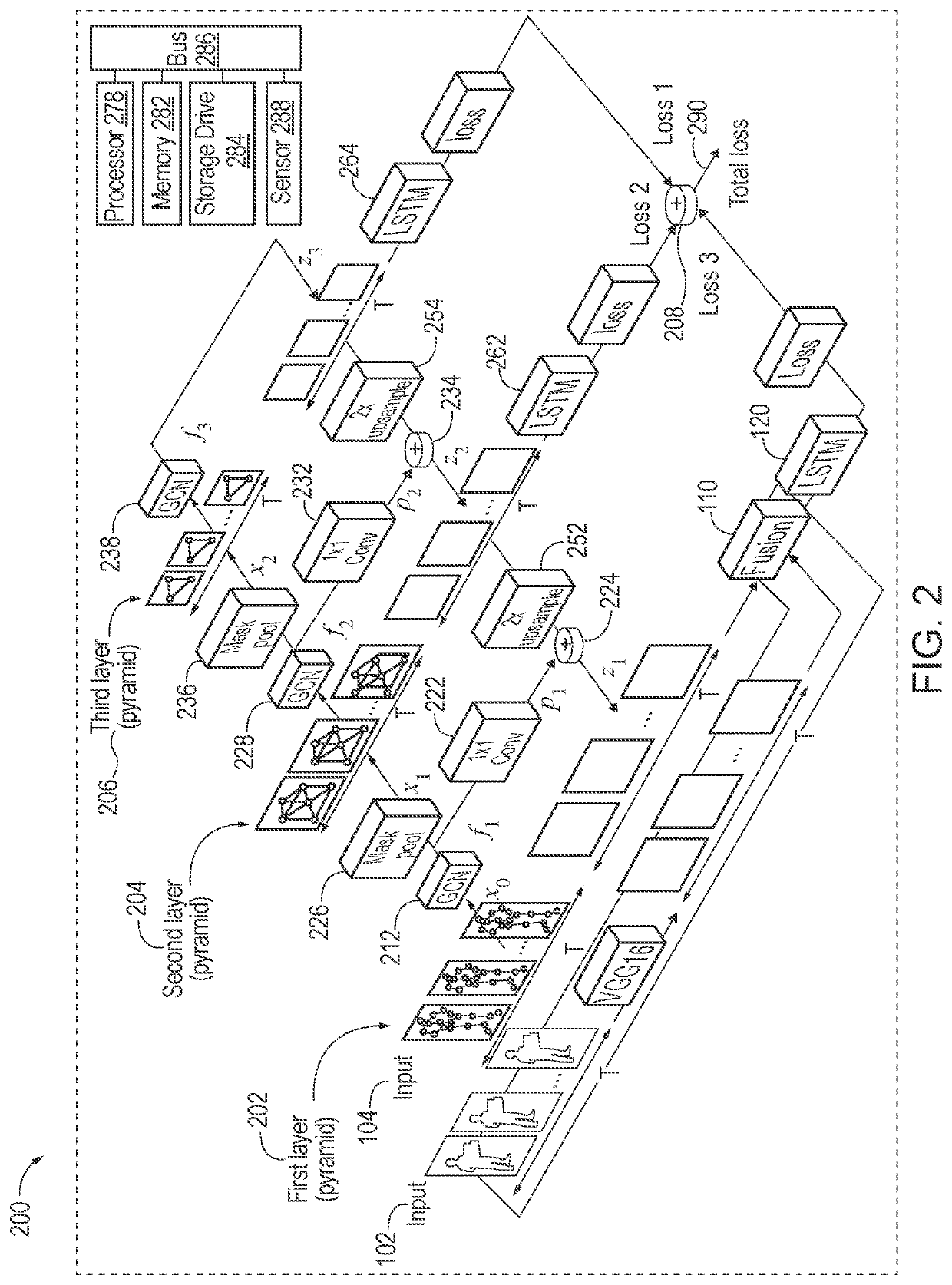

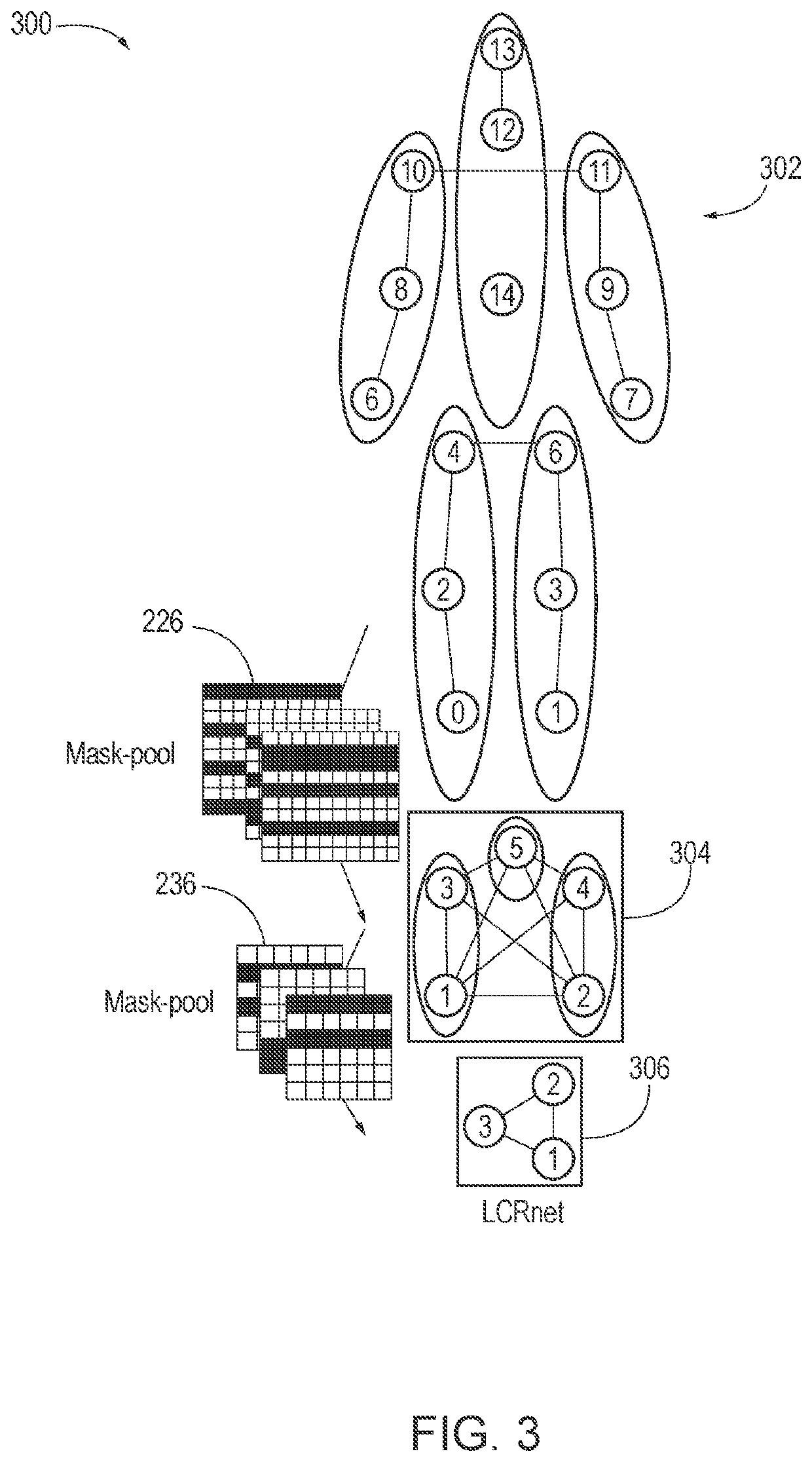

According to one aspect, action prediction may be implemented via a spatio-temporal feature pyramid graph convolutional network (ST-FP-GCN) including a first pyramid layer, a second pyramid layer, a third pyramid layer, etc. The first pyramid layer may include a first graph convolution network (GCN), a fusion gate, and a first long-short-term-memory (LSTM) gate. The second pyramid layer may include a first convolution operator, a first summation operator, a first mask pool operator, a second GCN, a first upsampling operator, and a second LSTM gate. An output summation operator may sum a first LSTM output and a second LSTM output to generate an output indicative of an action prediction for an inputted image sequence and an inputted pose sequence.

Owner:HONDA MOTOR CO LTD

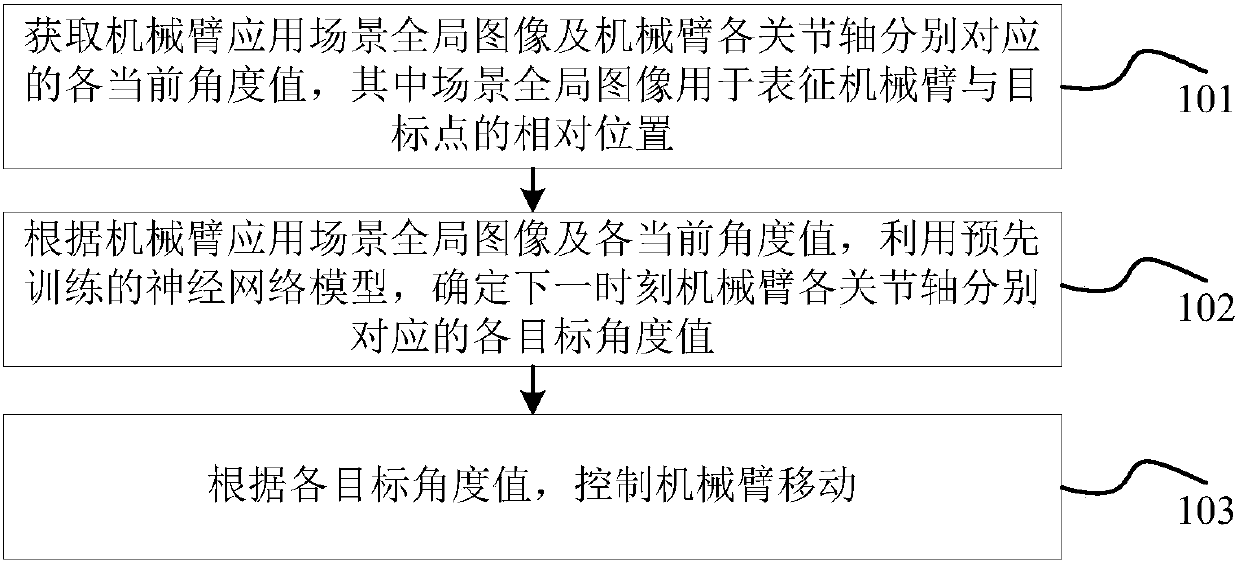

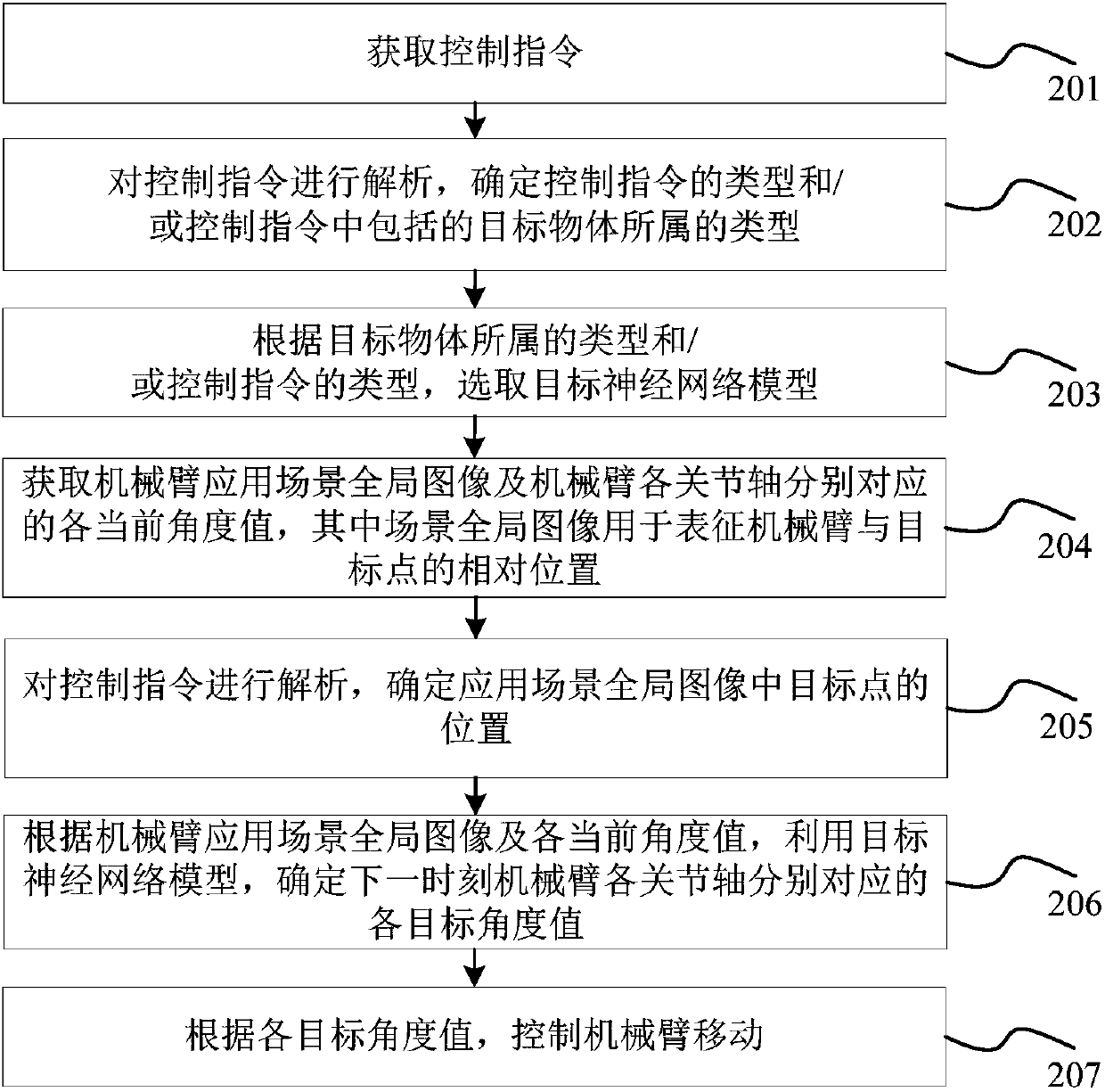

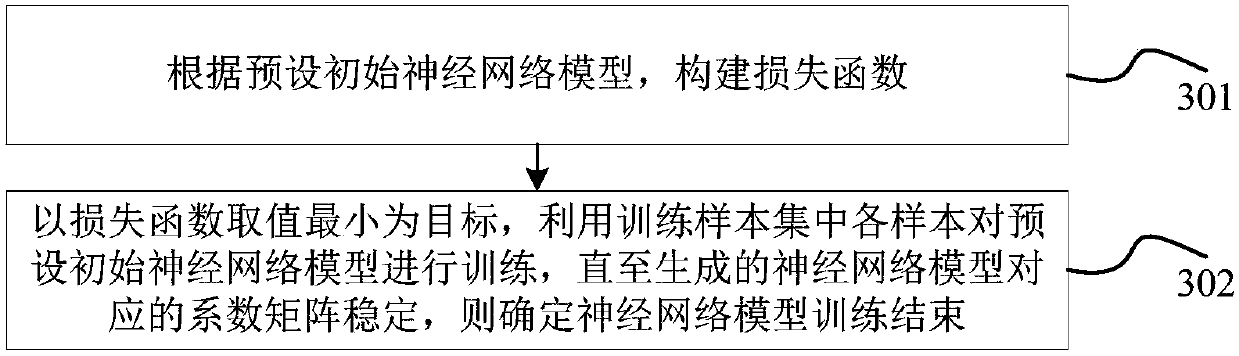

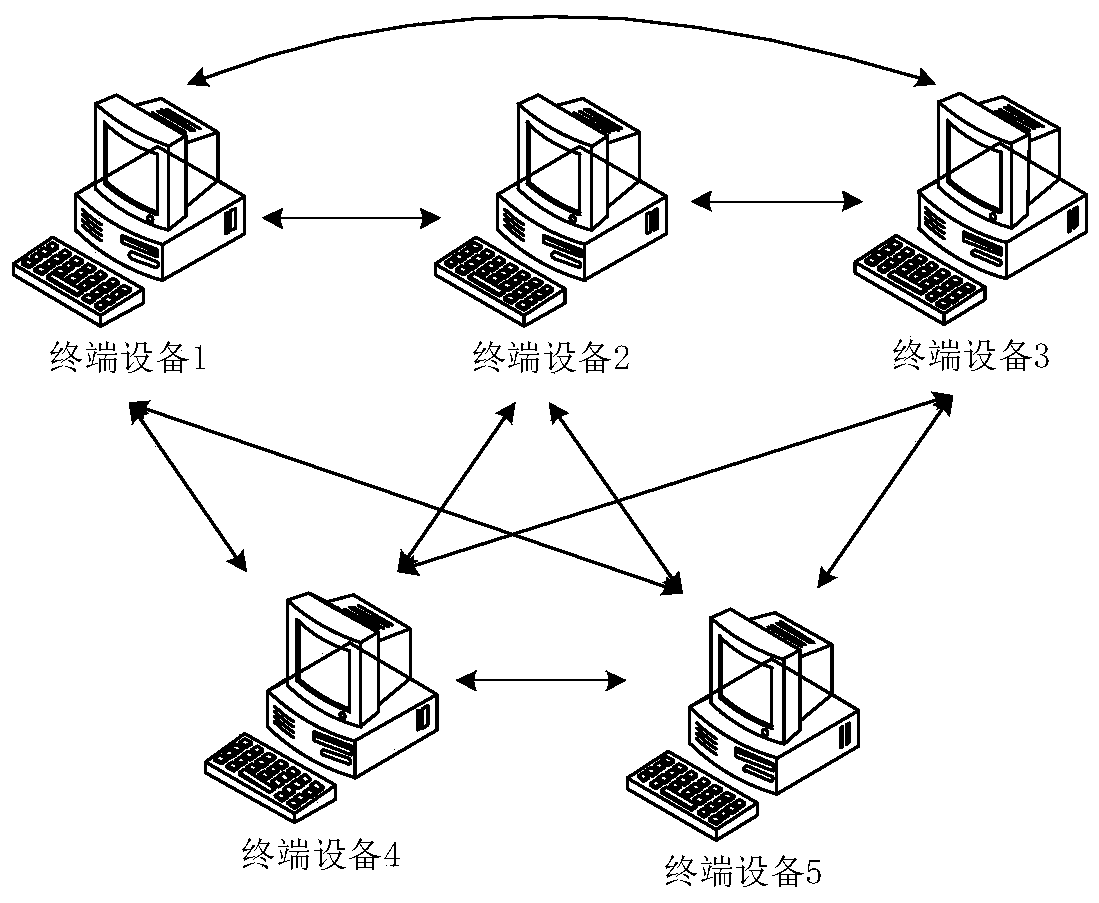

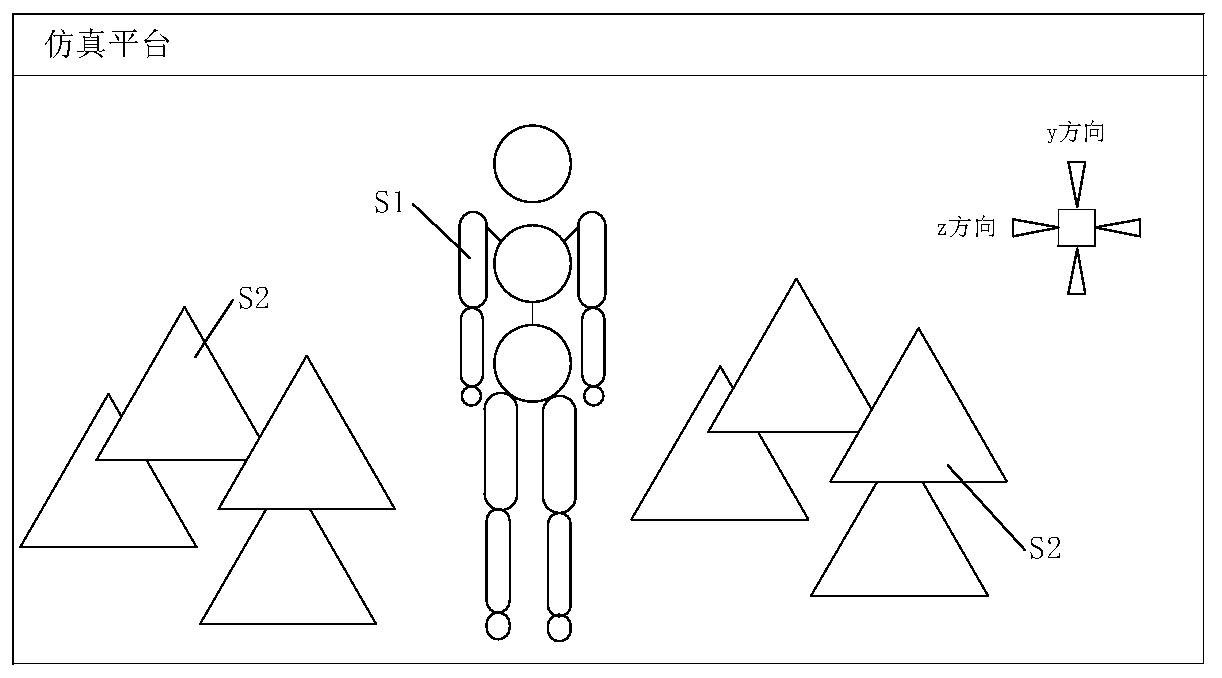

Mechanical arm control method and device, control device and storage medium

Active | CN110293552A | Improve accuracy | High speed | Programme-controlled manipulator | Network model | Computer science

The invention provides a mechanical arm control method and device, a control device and a storage medium. The mechanical arm control method comprises the following steps of acquiring a global image of a mechanical arm application scene and current angle values corresponding to joint axes of the mechanical arm, wherein the scene global image is used for representing the relative position of a mechanical arm and a target point; according to the global image of the mechanical arm application scene and the current angle values, determining each target angle value corresponding to each joint axis of the mechanical arm at the next moment by using a pre-trained neural network model; and controlling the mechanical arm to move according to the target angle values. According to the method, the action of the mechanical arm is predicted by utilizing the neural network model, the accuracy of the action prediction of the mechanical arm is improved, and the flexibility of the action of the mechanical arm is improved.

Owner:BEIJING ORION STAR TECH CO LTD

Training method of action control model, related device and storage medium

Active | CN111340211A | Improve training efficiency | Improve training effect | Image analysis | Animation | Algorithm | Animation

The invention discloses a training method of an action control model, and the method is applied to the field of artificial intelligence, and specifically comprises the steps: obtaining first state data corresponding to a target role from a to-be-trained segment; based on the first state data, obtaining an action prediction value through a to-be-trained action control model; determining action data of the target role according to the action prediction value and the M groups of offset parameter sets; and updating model parameters of the to-be-trained action control model according to the first state data and the action data. The invention further discloses a model training device and a storage medium. According to the method, the predicted value of the joint can be converted into the reasonable motion range of the joint, manual adjustment is not needed, the model training efficiency can be improved, the model training effect can also be improved, and therefore the animation effect of character performance is better.

Owner:TENCENT TECH (SHENZHEN) CO LTD

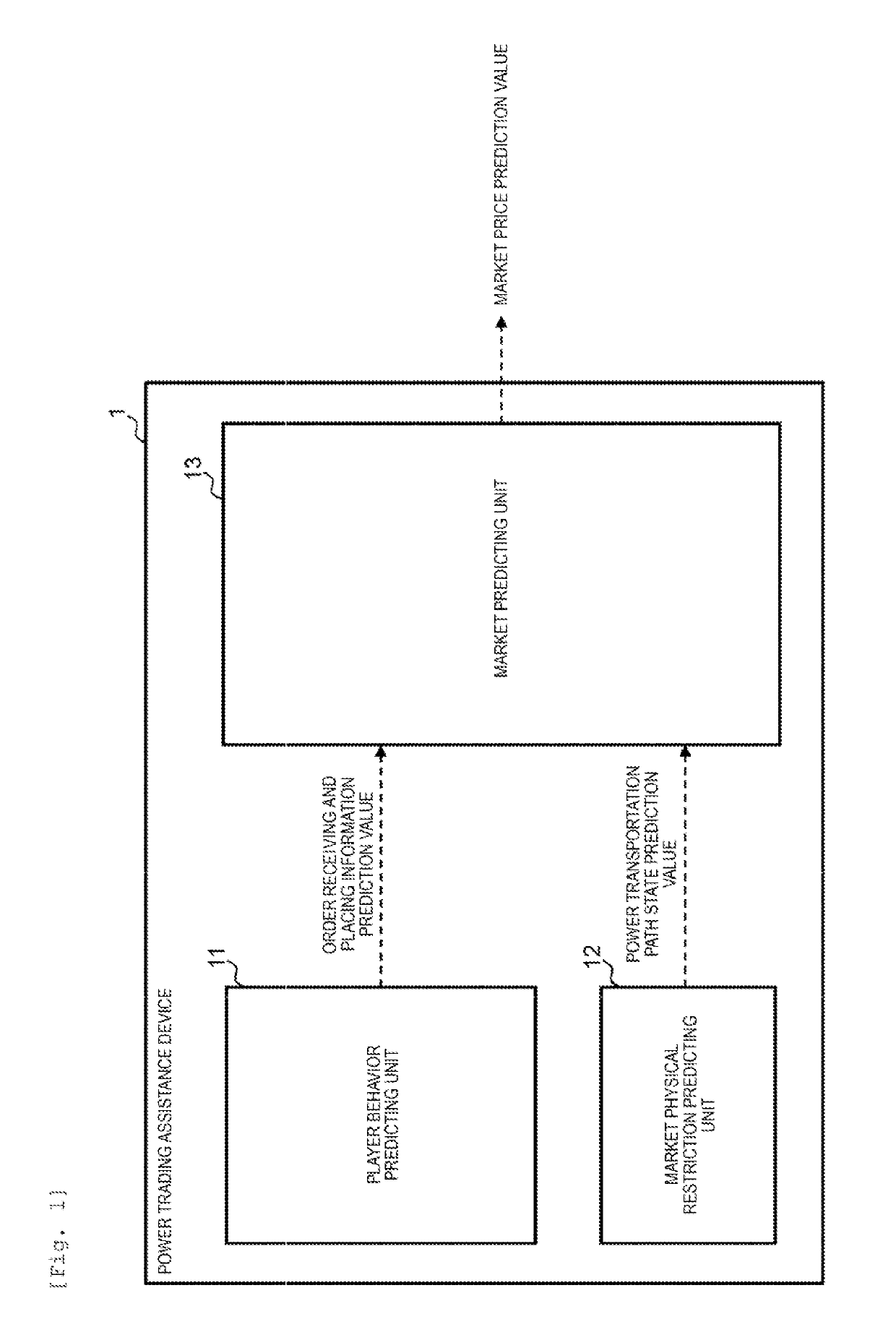

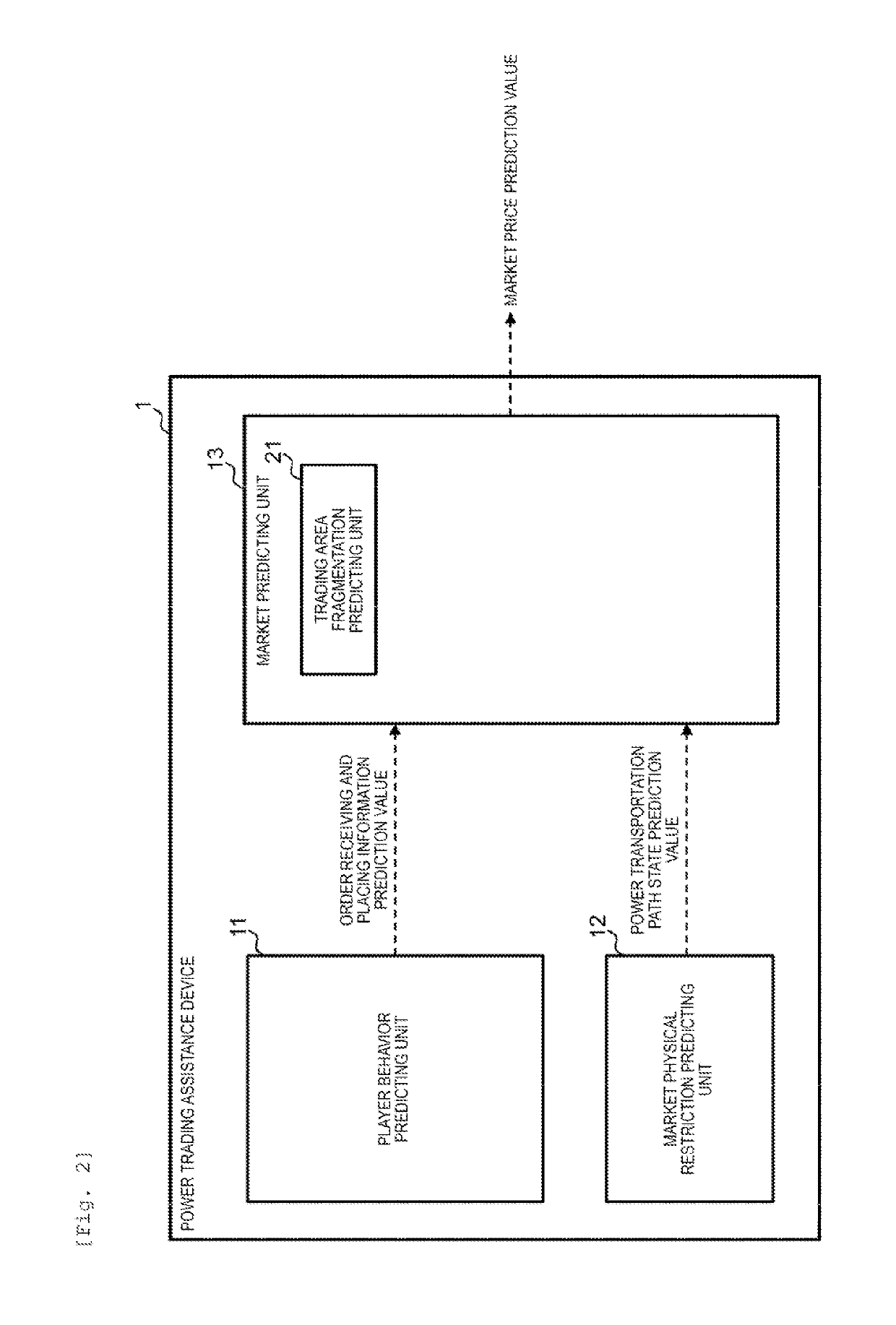

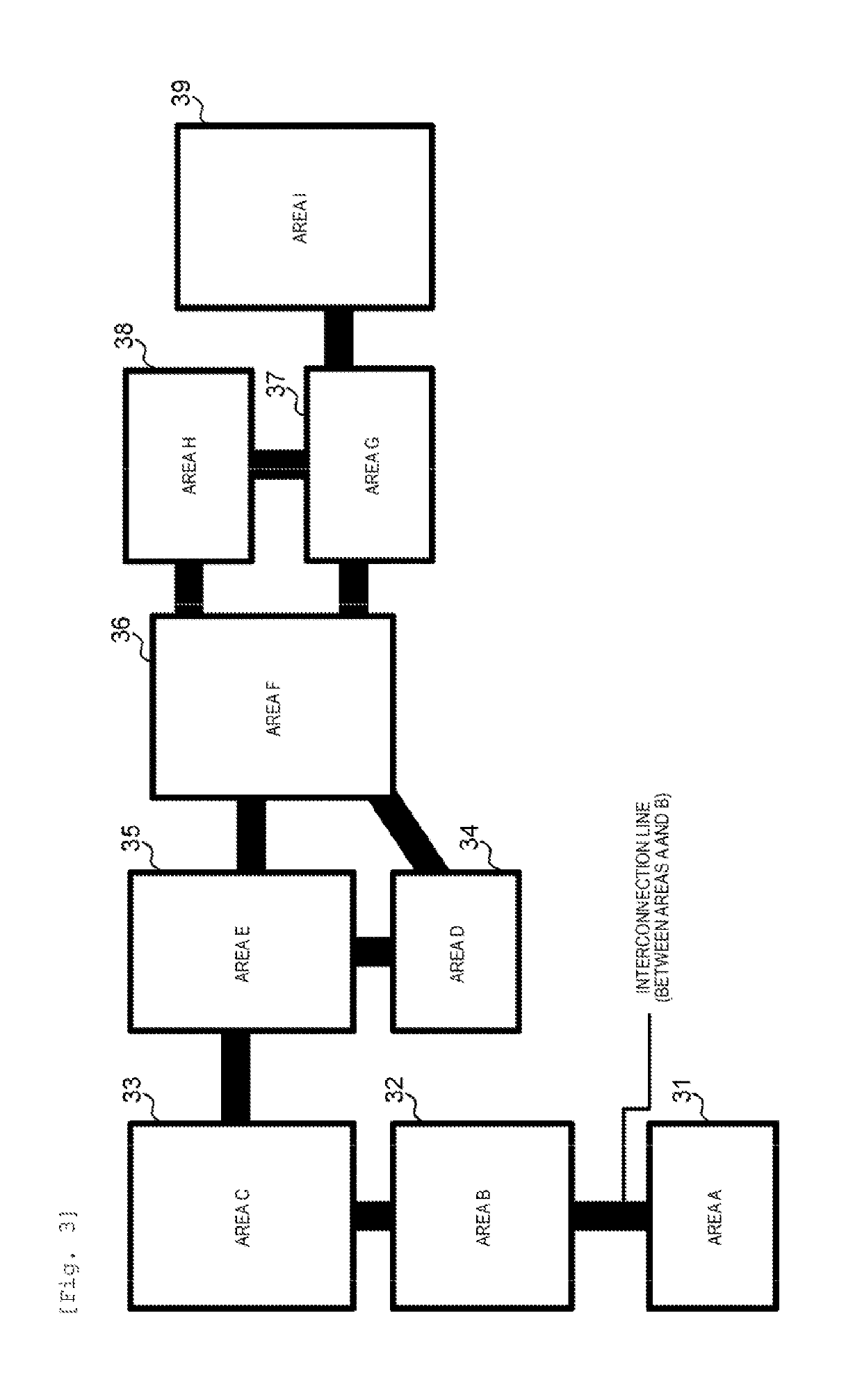

Power Trading Assistance Device and Market Price Prediction Information Generation Method

The present invention is provided with: a player action prediction unit that predicts the action of a player and calculates a sales order information prediction value; a market physical restraint prediction unit that predicts the state of a power transfer path, which includes grid-connection line available capacity indicating excess transferred power between areas, and calculates a prediction value for the state of the power transport path; and a market prediction unit that predicts a market price on the basis of the sales order information prediction value and the prediction value for the power transfer path state.

Owner:HITACHI LTD

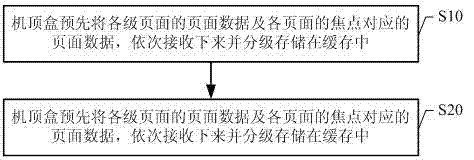

Data broadcasting system based on user action prediction and data acceleration method thereof

The invention discloses a data broadcasting system based on user action prediction and a data acceleration method thereof. The method comprises the following steps: A. a set-top box sequentially receives, in advance, the page data of pages at all levels and the page data corresponding to the focuses of all pages, and stores them hierarchically in a buffer; when page browsing is required and a user's operation command is received through a click on a focus, the page data corresponding to that focus is retrieved from the buffer and displayed. According to the invention, page data that the user's current action may lead to can be received in advance through a multi-level advance-reception mechanism; when a user clicks a focus, the corresponding page is displayed immediately, which increases browsing speed and is convenient for users.

Owner:深圳康泓兴智能科技有限公司
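
The advance-reception idea in this abstract can be sketched as a breadth-first prefetch over the pages reachable from the current one. The `site` dictionary, the page names and the `levels` parameter are hypothetical; an actual set-top box would receive the data from a broadcast stream rather than from a dictionary.

```python
def prefetch(site, start, levels):
    """Cache page data for every page within `levels` clicks of `start`.

    site: dict mapping page id -> {"data": ..., "links": [page ids]},
          a stand-in for the broadcast page hierarchy (assumed layout).
    """
    cache = {}
    frontier = [start]
    for _ in range(levels + 1):
        next_frontier = []
        for page in frontier:
            if page in cache or page not in site:
                continue
            cache[page] = site[page]["data"]
            next_frontier.extend(site[page]["links"])
        frontier = next_frontier
    return cache

# Hypothetical page hierarchy; each link plays the role of a focus.
site = {
    "home": {"data": "Home page", "links": ["news", "weather"]},
    "news": {"data": "News page", "links": ["sports"]},
    "weather": {"data": "Weather page", "links": []},
    "sports": {"data": "Sports page", "links": []},
}

cache = prefetch(site, "home", levels=1)
clicked_page = cache["news"]  # served from the buffer when the user clicks the focus
```

Raising `levels` trades buffer memory for a higher chance that the clicked page is already cached.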

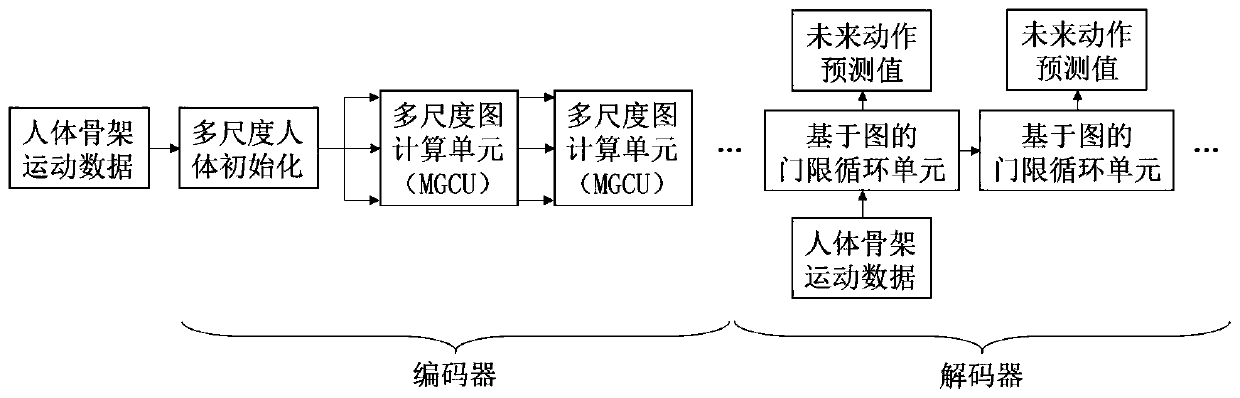

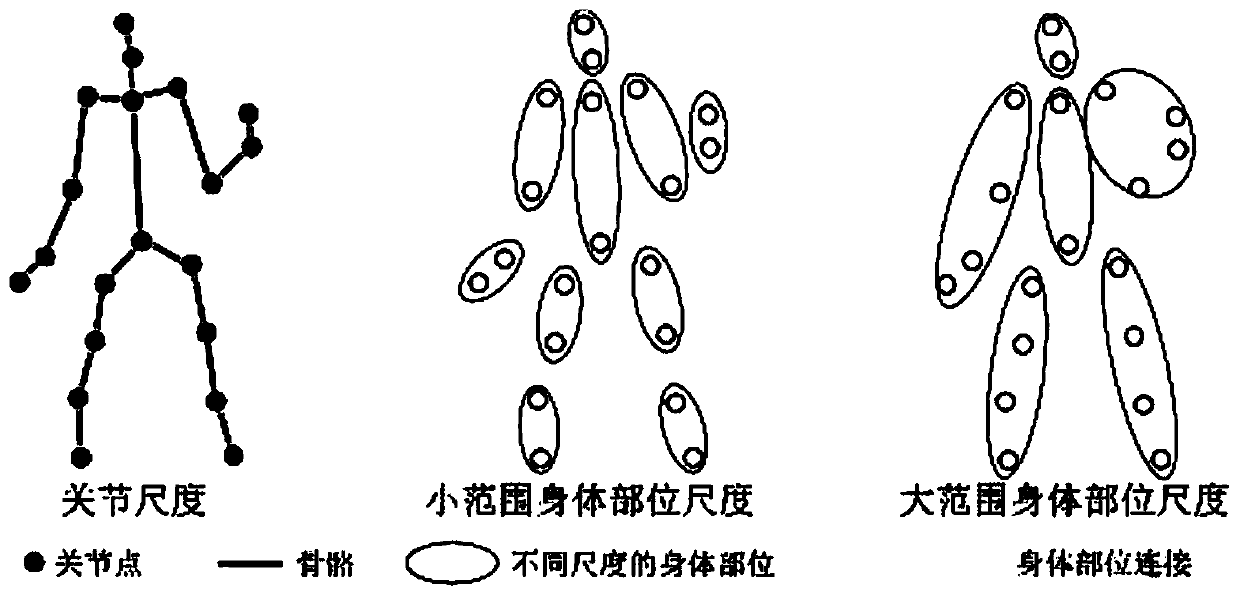

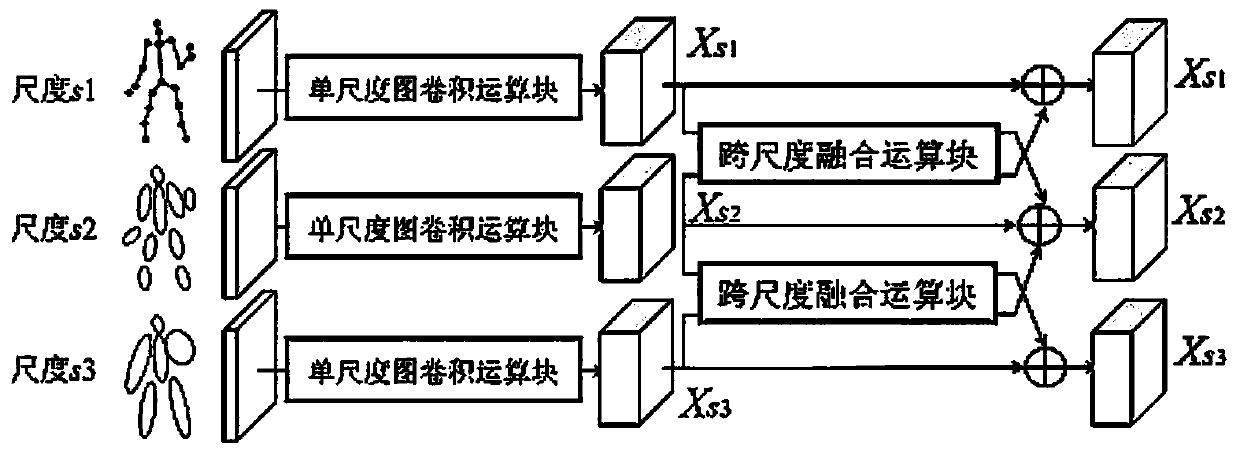

Human skeleton-oriented motion prediction method and system

Active | CN111199216A | Easy to capture | Enrich sports information | Character and pattern recognition | Neural learning methods | Human body | Feature extraction

The invention provides a human skeleton-oriented motion prediction method and system. The method comprises the following steps: a data acquisition step: acquiring human skeleton data; a human body multi-scale map construction step: constructing a multi-scale human body according to the human body skeleton data, and constructing a human body multi-scale map by taking body parts as points and relationships among the parts as edges based on the multi-scale human body; a human body motion feature extraction step: introducing the human body multi-scale map into a depth model formed by spatial multi-scale map convolution, and extracting the comprehensive action semantic information of the multi-scale human body; and an action analysis and prediction step: realizing action prediction according to the comprehensive action semantic information. According to the method, the high-level semantic information of the action can be extracted by utilizing the self-adaptive and dynamic graph structure and the DMGNN, and the action prediction is realized by utilizing the high-level semantic information.

Owner:SHANGHAI JIAO TONG UNIV

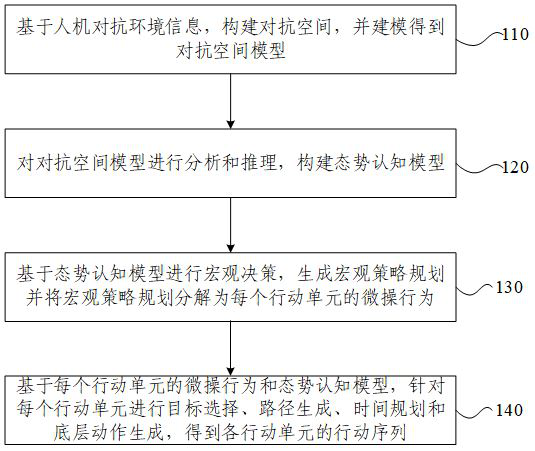

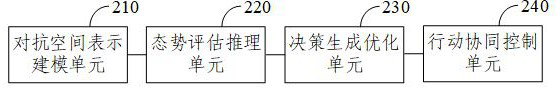

Man-machine adversarial action prediction method and device, electronic equipment and storage medium

Active | CN112633519A | Enhanced confrontation ability | Abundant space for confrontation | Machine learning | Man machine | Path generation

The invention provides a man-machine adversarial action prediction method and device, electronic equipment and a storage medium. The method comprises the steps of: constructing an adversarial space based on man-machine adversarial environment information, and carrying out modeling to obtain an adversarial space model; analyzing and reasoning over the adversarial space model to construct a situation cognition model; performing macroscopic decision-making based on the situation cognition model, generating a macroscopic strategy plan, and decomposing the macroscopic strategy plan into micro-operation behaviors of each action unit; and, on the basis of the micro-operation behavior of each action unit and the situation cognition model, performing target selection, path generation, time planning and underlying action generation on each action unit to obtain an action sequence of each action unit. According to the invention, the man-machine adversarial capability of the intelligent agent is improved.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

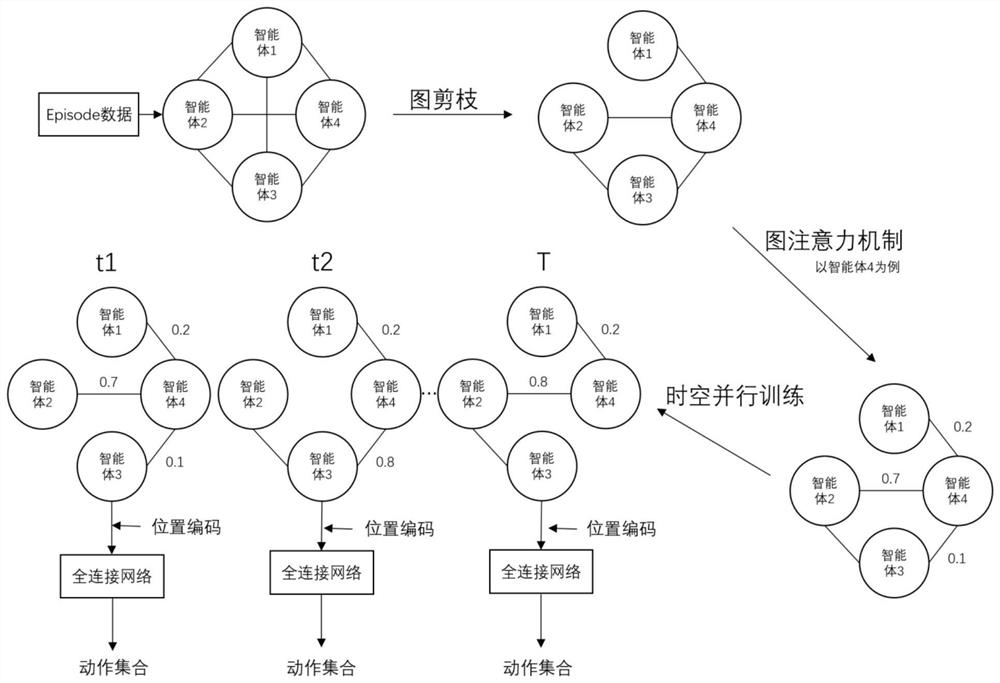

Multi-agent confrontation method and system based on dynamic graph neural network

Pending | CN113627596A | Real graph relationship | Improve efficiency | Neural architectures | Neural learning methods | Feature vector | Multi-agent system

The invention belongs to the field of reinforcement learning of a multi-agent system, particularly relates to a multi-agent confrontation method and system based on a dynamic graph neural network, and aims at solving the problems that an existing multi-agent model based on the graph neural network is low in training speed and low in efficiency, and much manual intervention is needed in graph construction. The method comprises the following steps: obtaining an observation vector of each agent, and carrying out linear transformation to obtain an observation feature vector; calculating a connection relationship between adjacent agents, and constructing a graph structure between the agents; carrying out embedded representation on a graph structure between the intelligent agents in combination with the observation feature vectors; performing network space-time parallel training on the action prediction result of the action network and the evaluation of the evaluation network by using the embedded representation; and performing action prediction and action evaluation in multi-agent confrontation through the trained network. According to the method, a more real graph relationship is established through pruning, space-time parallel training is realized by utilizing the full-connection neural network and position coding, the training efficiency is high, and the effect is good.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

Video processing device, electronic equipment and computer readable storage medium

Inactive | CN113362946A | Reduce operational burden | Medical communication | Television system details | Video processing | Computer science

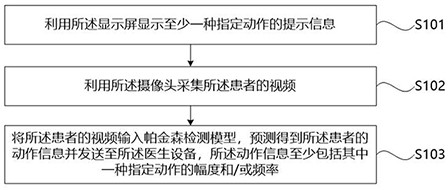

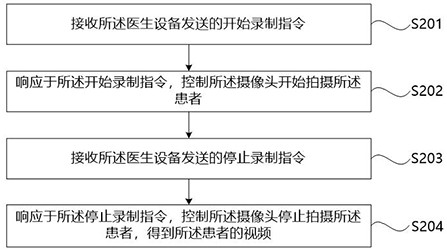

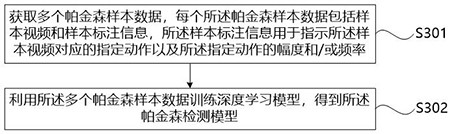

The invention provides a video processing device, electronic equipment and a computer readable storage medium, and the device comprises: a prompt display module which is used for displaying prompt information of at least one specified action through a display screen; a video acquisition module which is used for acquiring a video of the patient by using the camera; an action prediction module which is used for inputting the video of the patient into a Parkinson's disease detection model, performing predicting to obtain action information of the patient and sending the action information to the doctor equipment; an information acquisition module which is used for acquiring disease information of the patient; and a suggestion strategy module which is used for obtaining a suggestion program control strategy of the patient based on the disease information and the action information of the patient and sending the suggestion program control strategy to the doctor equipment. According to the device, the patient can be prompted to do the specified action based on the prompt information, the corresponding action information is obtained according to the video of the specified action of the patient, and a doctor is helped to quantitatively know the local posture and the movement performance condition of the patient in the movement process.

Owner:景昱医疗器械(长沙)有限公司

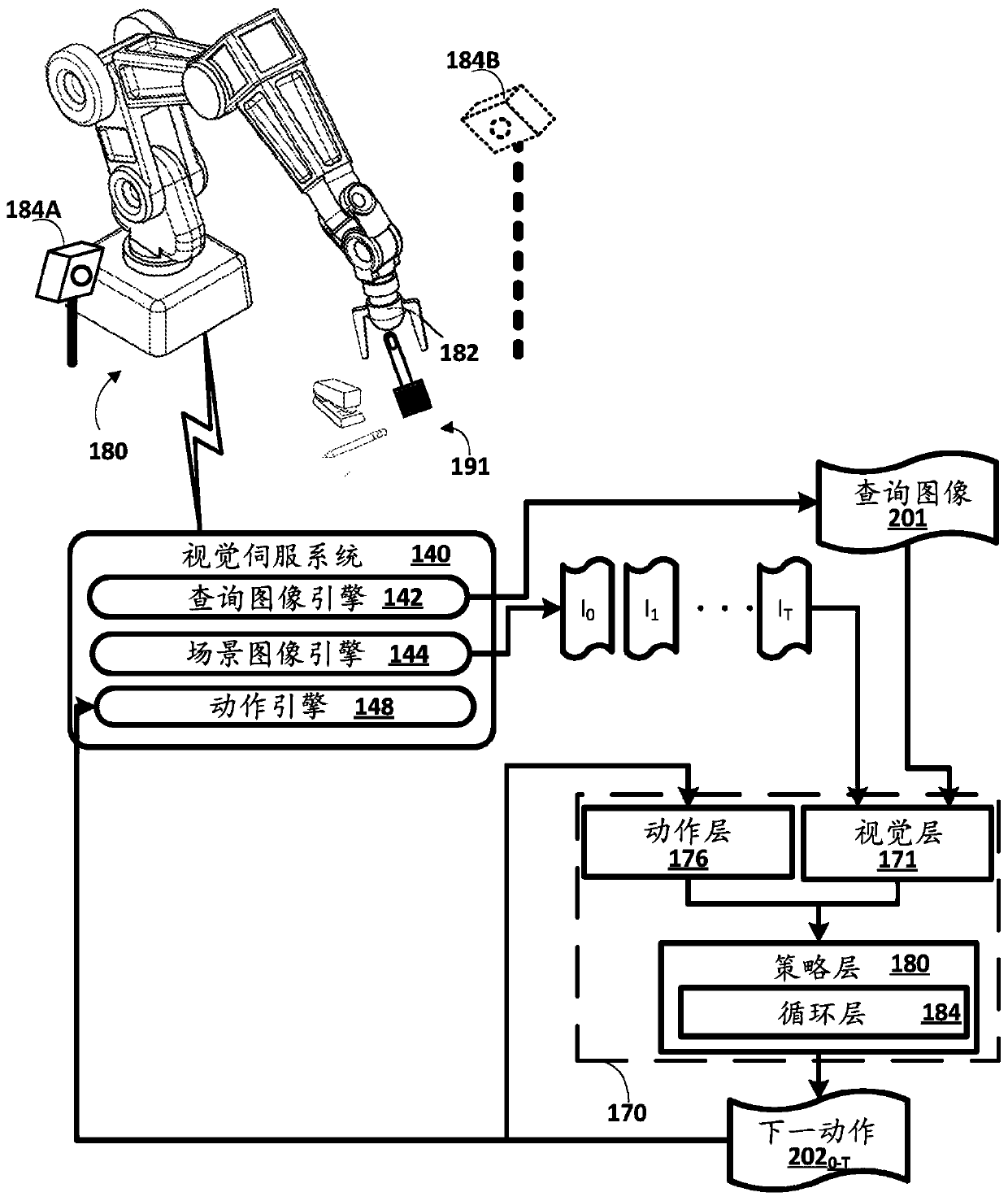

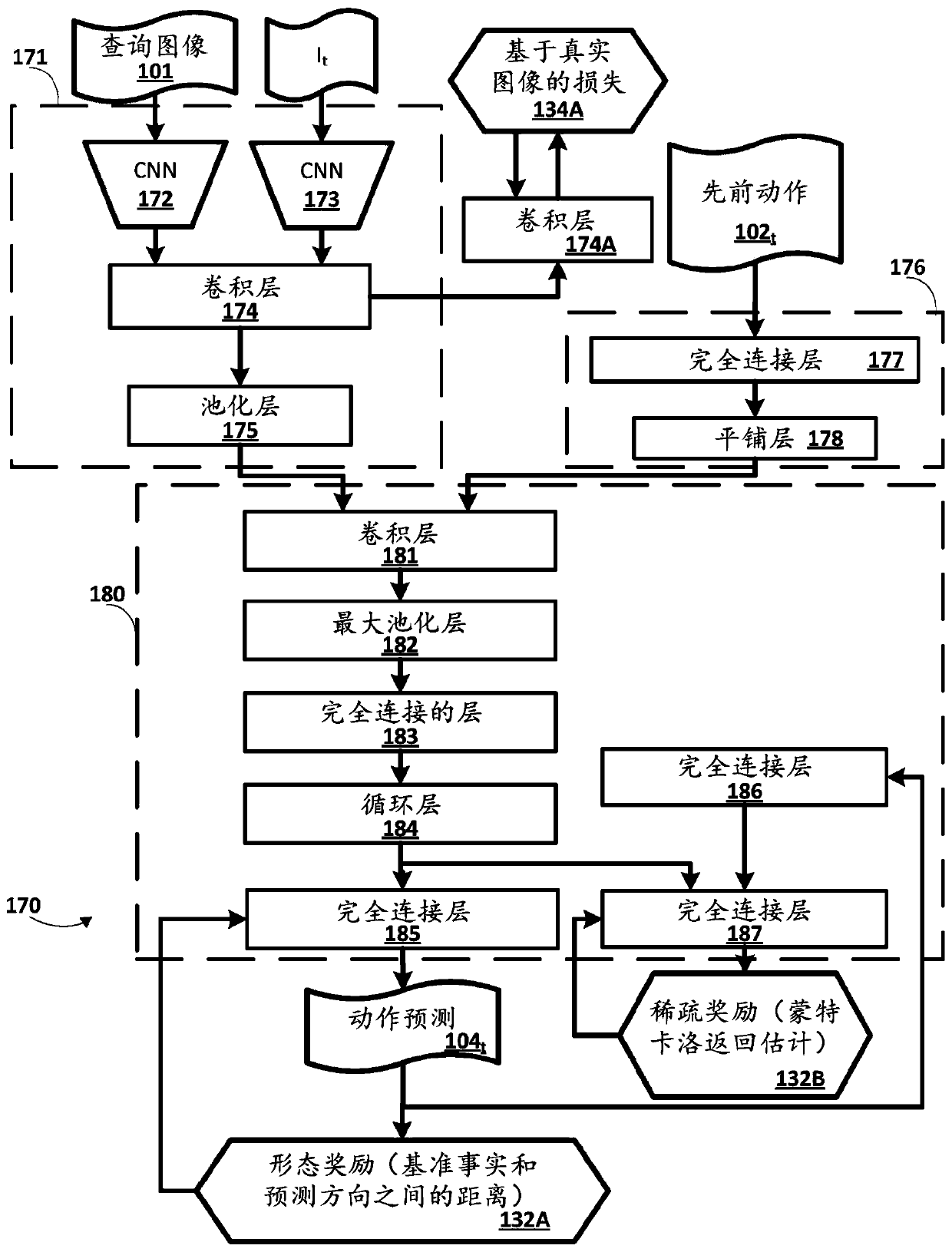

Viewpoint invariant visual servoing of robot end effector using recurrent neural network

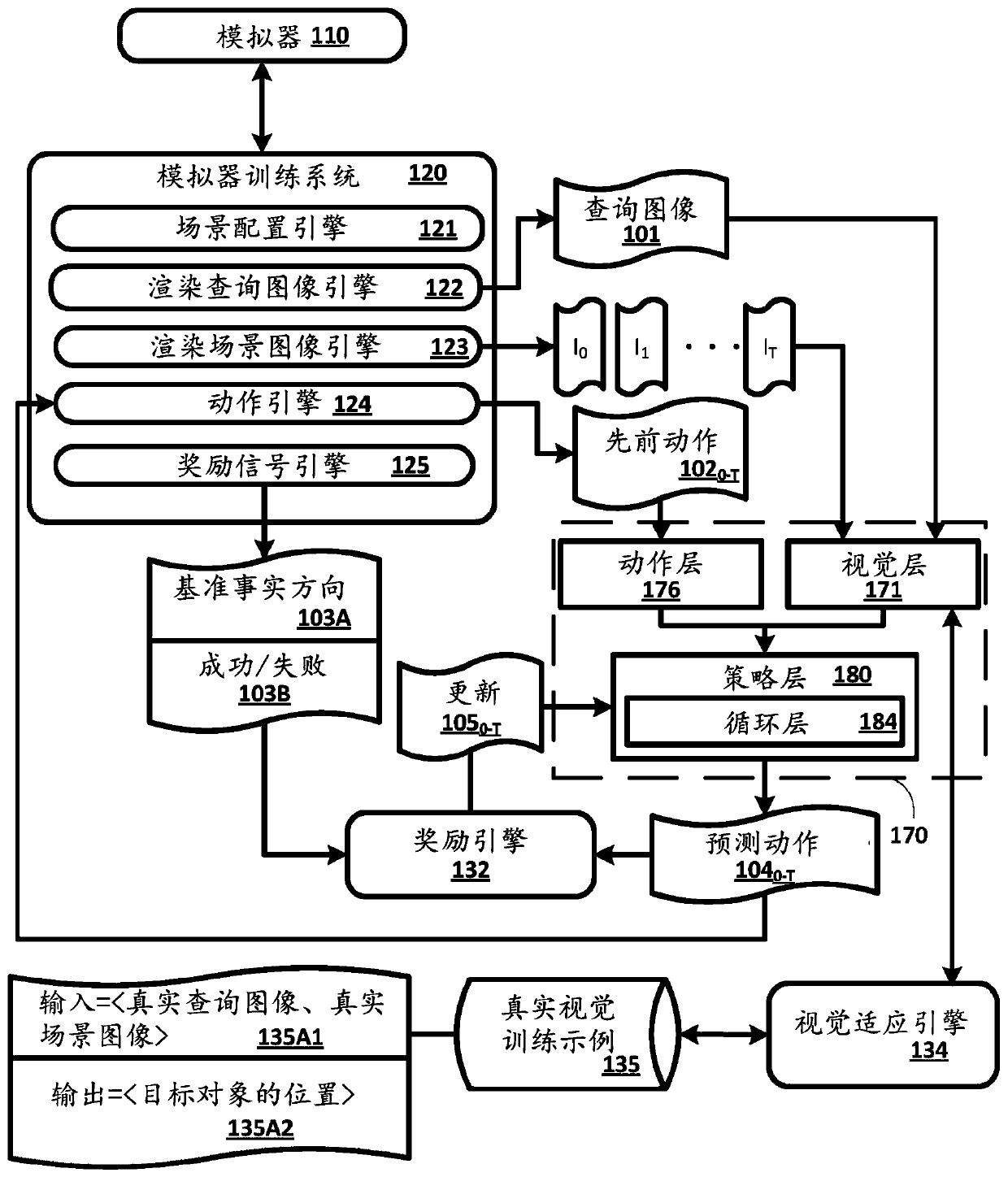

Training and/or using a recurrent neural network model for visual servoing of an end effector of a robot. In visual servoing, the model can be utilized to generate, at each of a plurality of time steps, an action prediction that represents a prediction of how the end effector should be moved to cause the end effector to move toward a target object. The model can be viewpoint invariant in that it can be utilized across a variety of robots having vision components at a variety of viewpoints and/or can be utilized for a single robot even when a viewpoint, of a vision component of the robot, is drastically altered. Moreover, the model can be trained based on a large quantity of simulated data that is based on simulator(s) performing simulated episode(s) in view of the model. One or more portions of the model can be further trained based on a relatively smaller quantity of real training data.

Owner:GOOGLE LLC
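
The closed loop described in the visual servoing abstract above, where a recurrent model produces an action prediction at each time step, can be sketched as follows. This is a toy under stated assumptions: `toy_recurrent_policy` is a hand-written stand-in for the trained recurrent neural network, and the observation is the offset to the target rather than a camera image. Neither comes from the patent.

```python
def toy_recurrent_policy(observation, hidden):
    """Hypothetical stand-in for the trained model: returns (action, new_hidden).

    `hidden` carries the previous action, mimicking the memory that a
    recurrent network keeps between time steps.
    """
    if hidden is None:
        hidden = tuple(0.0 for _ in observation)
    raw = tuple(0.5 * o for o in observation)           # step toward the target
    action = tuple(0.5 * r + 0.5 * h for r, h in zip(raw, hidden))
    return action, action

def servo(policy, position, target, steps):
    """Run the servo loop: observe, predict an action, move, repeat."""
    hidden = None
    for _ in range(steps):
        # Assumption: the observation is the target offset, not an image.
        observation = tuple(t - p for t, p in zip(target, position))
        action, hidden = policy(observation, hidden)
        position = tuple(p + a for p, a in zip(position, action))
    return position

final = servo(toy_recurrent_policy, (0.0, 0.0), (1.0, 2.0), steps=50)
```

The point of the sketch is the loop structure: the hidden state is threaded through every call, so each action prediction can depend on earlier observations and actions.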

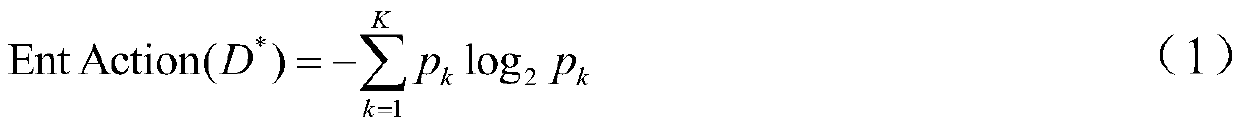

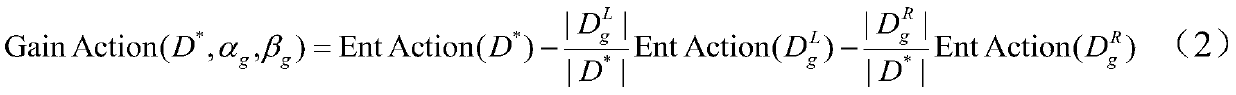

Action prediction method based on multi-task random forest

The invention discloses an action prediction method based on a multi-task random forest, and the method comprises the following steps: building an action prediction model based on the multi-task random forest by employing training videos marked with both an action type label and an observation rate label; and, for a newly input video containing incomplete actions, predicting the action type of the video by using the multi-task random forest. According to the action prediction method based on the multi-task random forest, aiming at the difficulties that an input video is incomplete and the observation rate is unknown in action prediction, the performance of an action prediction model is greatly improved by jointly learning classifiers of two tasks, namely action classification and video observation rate identification.

Owner:SHENYANG AEROSPACE UNIVERSITY
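
One way to picture the joint learning of the two tasks in the multi-task random forest abstract above is a tree split criterion that scores both label sets at once. The abstract does not state the split criterion, so the weighted sum of Gini impurities and the weight `alpha` below are assumptions chosen for illustration only.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 for a pure or empty list)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def joint_impurity(samples, alpha=0.5):
    """Weighted sum of the impurities of the two tasks.

    Each sample is (features, action_type, observation_rate_bin).
    `alpha` is a hypothetical weight balancing the two tasks.
    """
    actions = [s[1] for s in samples]
    rates = [s[2] for s in samples]
    return alpha * gini(actions) + (1 - alpha) * gini(rates)

def best_split(samples, feature_index, alpha=0.5):
    """Return (threshold, score) minimising the size-weighted joint impurity."""
    best = (None, float("inf"))
    values = sorted({s[0][feature_index] for s in samples})
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [s for s in samples if s[0][feature_index] <= t]
        right = [s for s in samples if s[0][feature_index] > t]
        score = (len(left) * joint_impurity(left, alpha)
                 + len(right) * joint_impurity(right, alpha)) / len(samples)
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical partial-video samples: (features, action type, observation rate bin).
samples = [([1.0], "wave", "30%"), ([2.0], "wave", "30%"),
           ([8.0], "kick", "70%"), ([9.0], "kick", "70%")]
split = best_split(samples, feature_index=0)
```

A forest built from such trees would store both label distributions at each leaf, so a new incomplete video can receive an action type prediction even though its observation rate is unknown.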

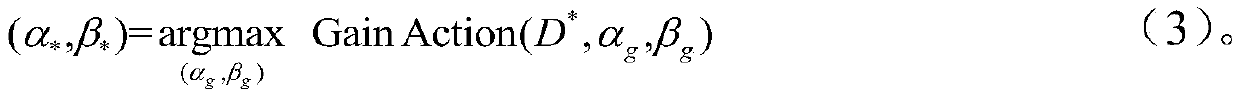

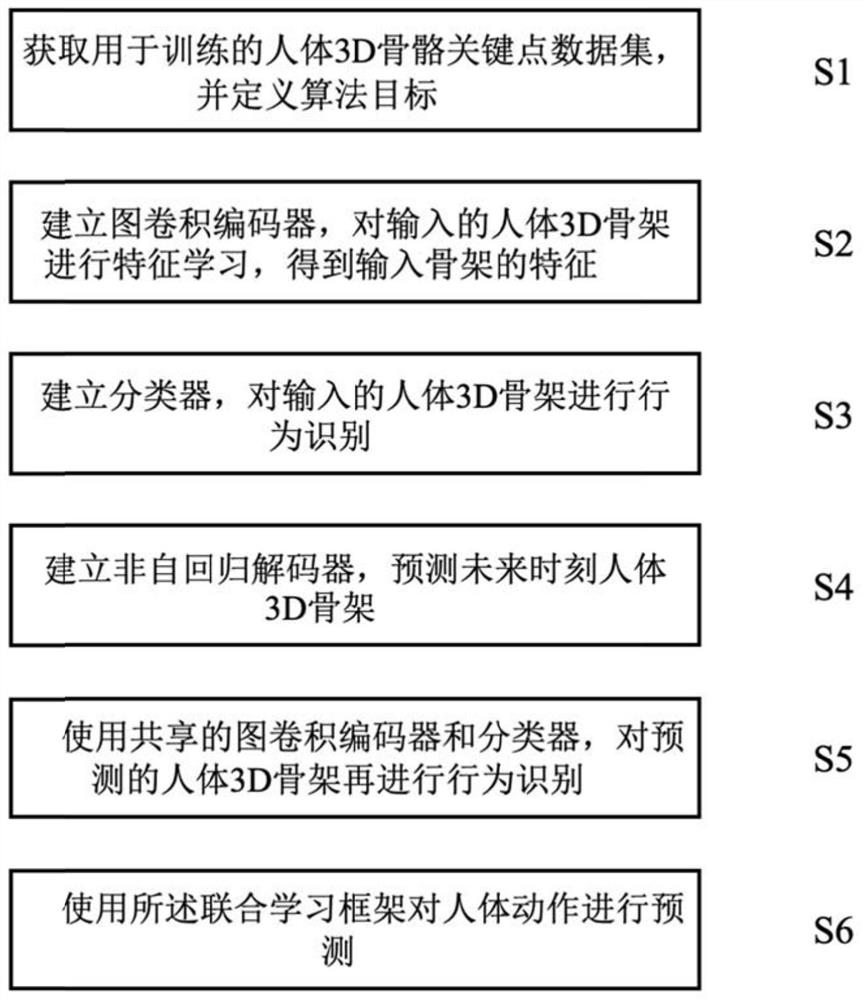

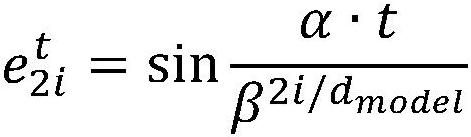

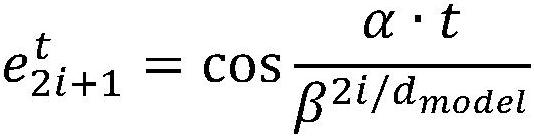

Human skeleton action prediction method based on multi-task non-autoregressive decoding

Pending · CN111931549A · Avoid passing · Solving the action prediction problem · Character and pattern recognition · Neural architectures · Human body · Data set

The invention discloses a human skeleton motion prediction method based on multi-task non-autoregressive decoding, which is used for solving the problem of motion prediction of a human 3D skeleton. The method specifically comprises the following steps: obtaining a human body 3D skeleton key point data set for training, and defining an algorithm target; establishing a graph convolution encoder, and performing feature learning on the input human body 3D skeleton to obtain features of the input skeleton; establishing a classifier, and performing behavior recognition on the input human body 3D skeleton; establishing a non-autoregressive decoder, and predicting a human body 3D skeleton at a future moment; performing behavior recognition on the predicted human body 3D skeleton by using a shared graph convolution encoder and a classifier; and using the joint learning framework to carry out human body action prediction at a future moment. The method is used for human body action prediction analysis in real video, and is effective and robust under various complex conditions.

Owner:ZHEJIANG UNIV

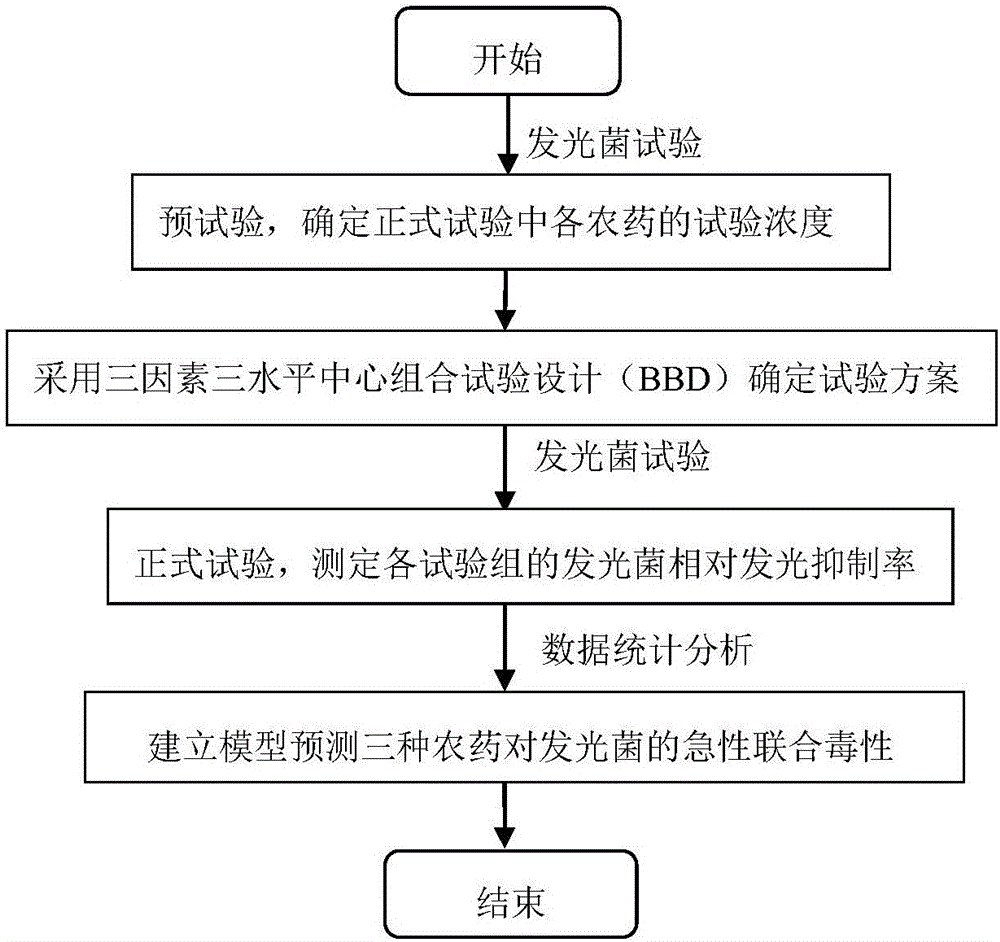

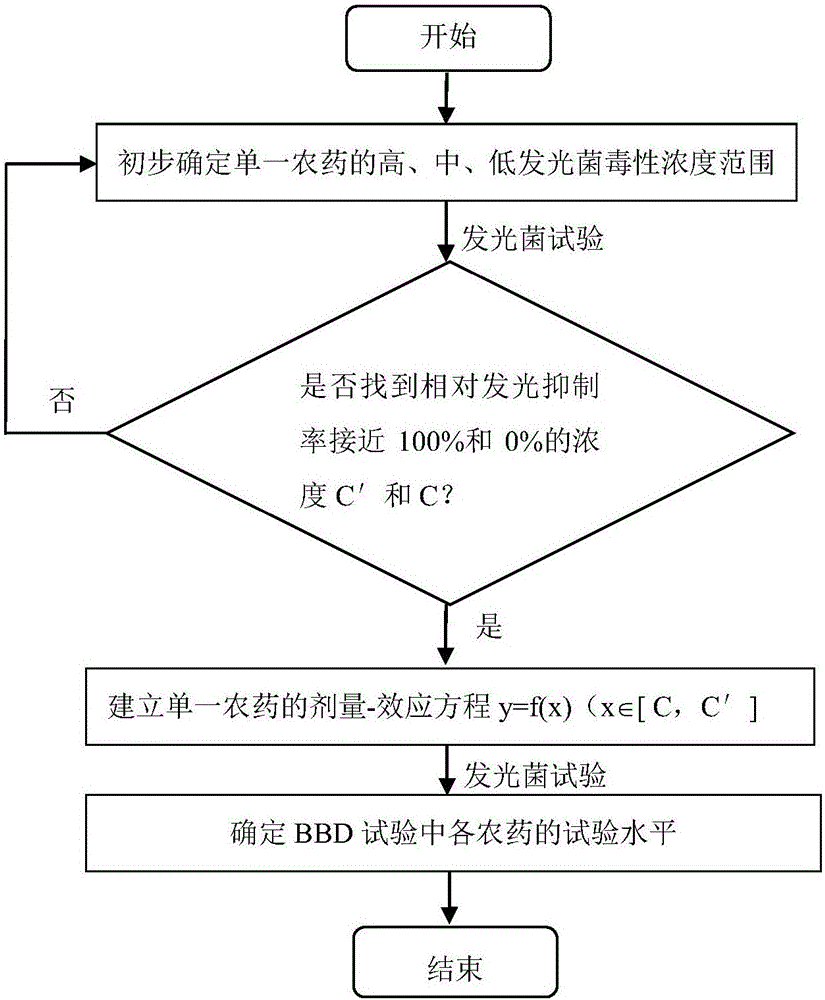

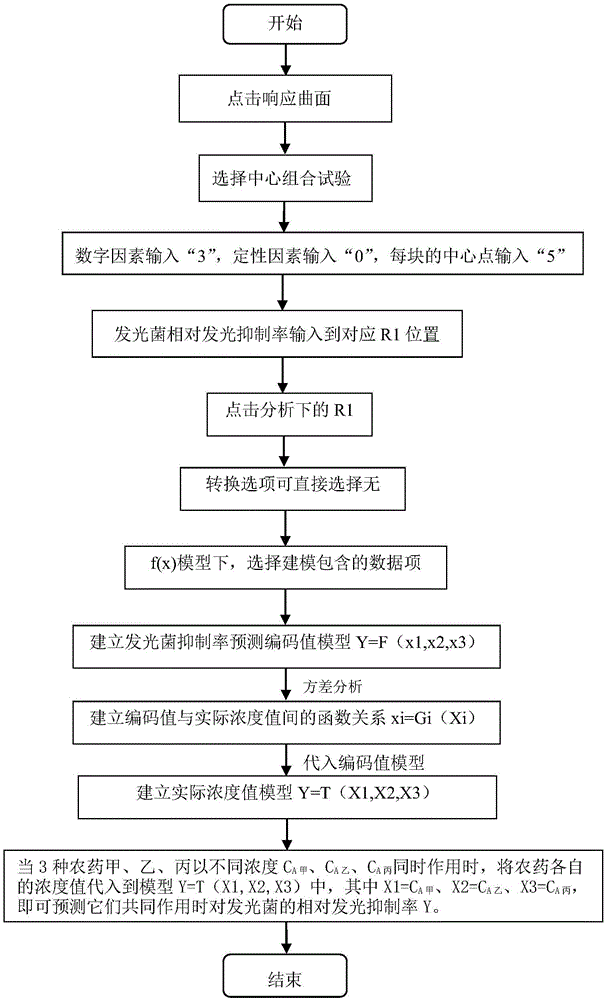

Method for predicting acute joint toxicity of three pesticides to photogenic bacteria

Inactive · CN106525822A · Realize Quantitative Prediction · Chemiluminescence/bioluminescence · Three level · Pathogenic bacteria

The invention discloses a method for predicting the acute joint toxicity of three pesticides to photogenic bacteria, which aims to overcome the problems that conventional acute joint toxicity evaluation requires a large testing workload and that methods for quantitative evaluation and prediction of acute joint toxicity are not available. The method comprises the following steps: (1) pretesting, to confirm the test concentrations of the pesticides for the official tests, which comprises (a) preliminarily confirming the high, medium and low photogenic bacteria toxicity concentration ranges of each single pesticide, (b) establishing a dose-effect equation y = f(x), x ∈ [C, C'], for each single pesticide, and (c) confirming the test concentrations of the pesticides for the BBD tests; (2) confirming the test schemes through a three-factor, three-level Box-Behnken design (BBD); (3) official testing, namely measuring the relative light emission inhibition rates of the photogenic bacteria in the different test groups; and (4) establishing a model to predict the acute joint toxicity of the three pesticides to the photogenic bacteria.

Owner:JILIN UNIV
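
The three-factor, three-level BBD test scheme mentioned in the pesticide abstract above can be generated mechanically. The sketch below builds a standard Box-Behnken design in coded levels (-1, 0, +1) and maps each run to concentrations. The number of center points (`n_center=3`) and the linear mapping of coded levels onto each pesticide's range [C, C'] are assumptions, as are the example concentration values. The abstract gives none of them.

```python
from itertools import combinations, product

def box_behnken_3(n_center=3):
    """Coded Box-Behnken design for three factors at levels -1, 0, +1.

    For each pair of factors, all four (+/-1, +/-1) combinations are run
    with the third factor held at 0, followed by `n_center` center runs.
    """
    runs = []
    for i, j in combinations(range(3), 2):
        for a, b in product((-1, 1), repeat=2):
            run = [0, 0, 0]
            run[i], run[j] = a, b
            runs.append(tuple(run))
    runs.extend([(0, 0, 0)] * n_center)
    return runs

def to_concentrations(run, low_high):
    """Map coded levels to concentrations; `low_high` holds (C, C') per pesticide."""
    out = []
    for level, (low, high) in zip(run, low_high):
        mid = (low + high) / 2
        half = (high - low) / 2
        out.append(mid + level * half)
    return out

design = box_behnken_3()
# Hypothetical concentration ranges for the three pesticides.
ranges = [(1.0, 3.0), (2.0, 6.0), (10.0, 20.0)]
example = to_concentrations((-1, 0, 1), ranges)
```

With three center points the design has 15 runs, far fewer than the 27 runs of a full three-level factorial, which is consistent with the abstract's aim of reducing the testing workload.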
