
51 results about "Computer-generated imagery" patented technology

Computer-generated imagery (CGI) is the application of computer graphics to create or contribute to images in art, printed media, video games, films, television programs, shorts, commercials, videos, and simulators. The visual scenes may be dynamic or static and may be two-dimensional (2D), though the term "CGI" is most commonly used to refer to 3D computer graphics used for creating scenes or special effects in films and television. Additionally, the use of 2D CGI is often mistakenly referred to as "traditional animation", most often in the case when dedicated animation software such as Adobe Flash or Toon Boom is not used or the CGI is hand drawn using a tablet and mouse.

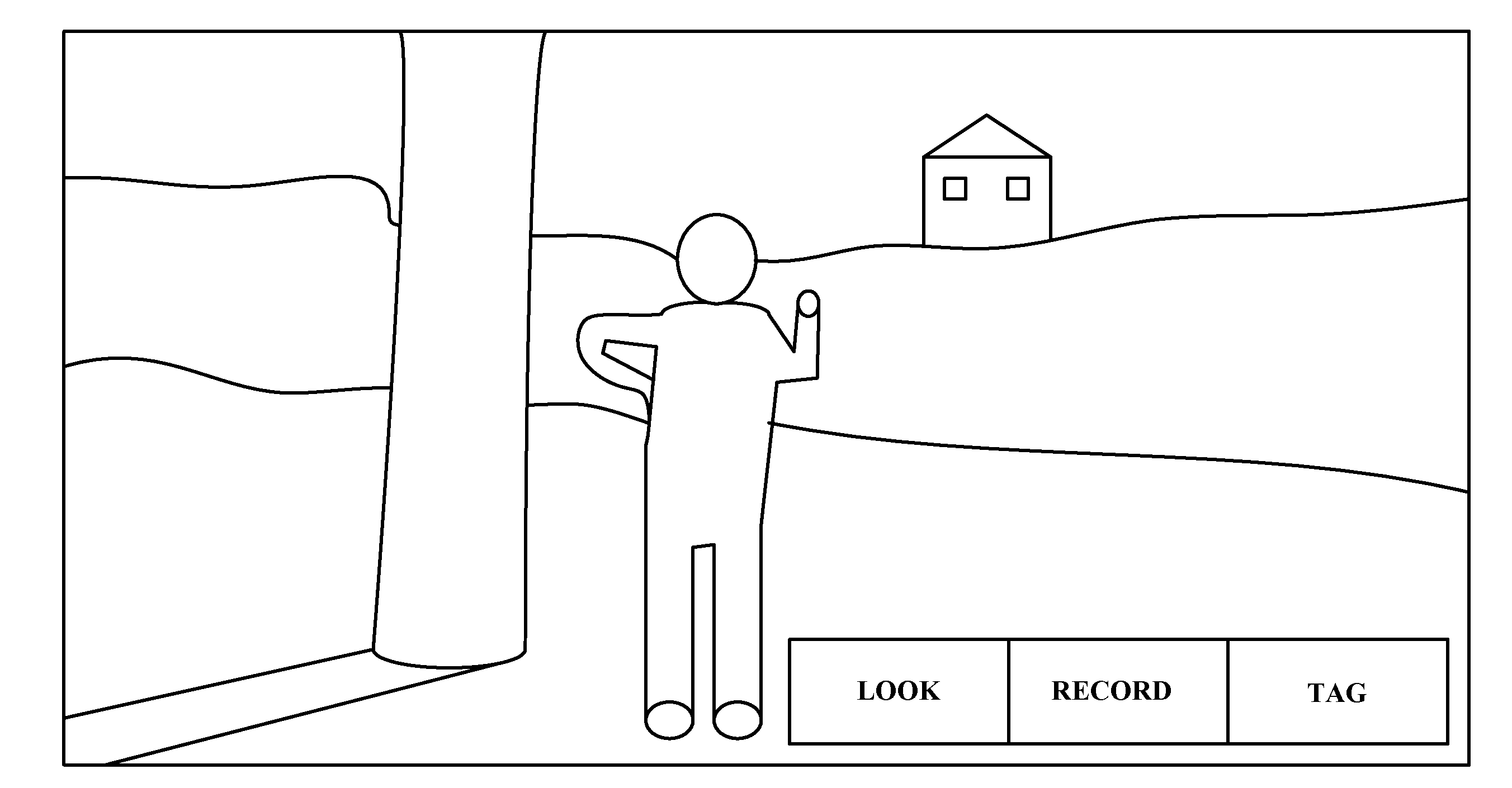

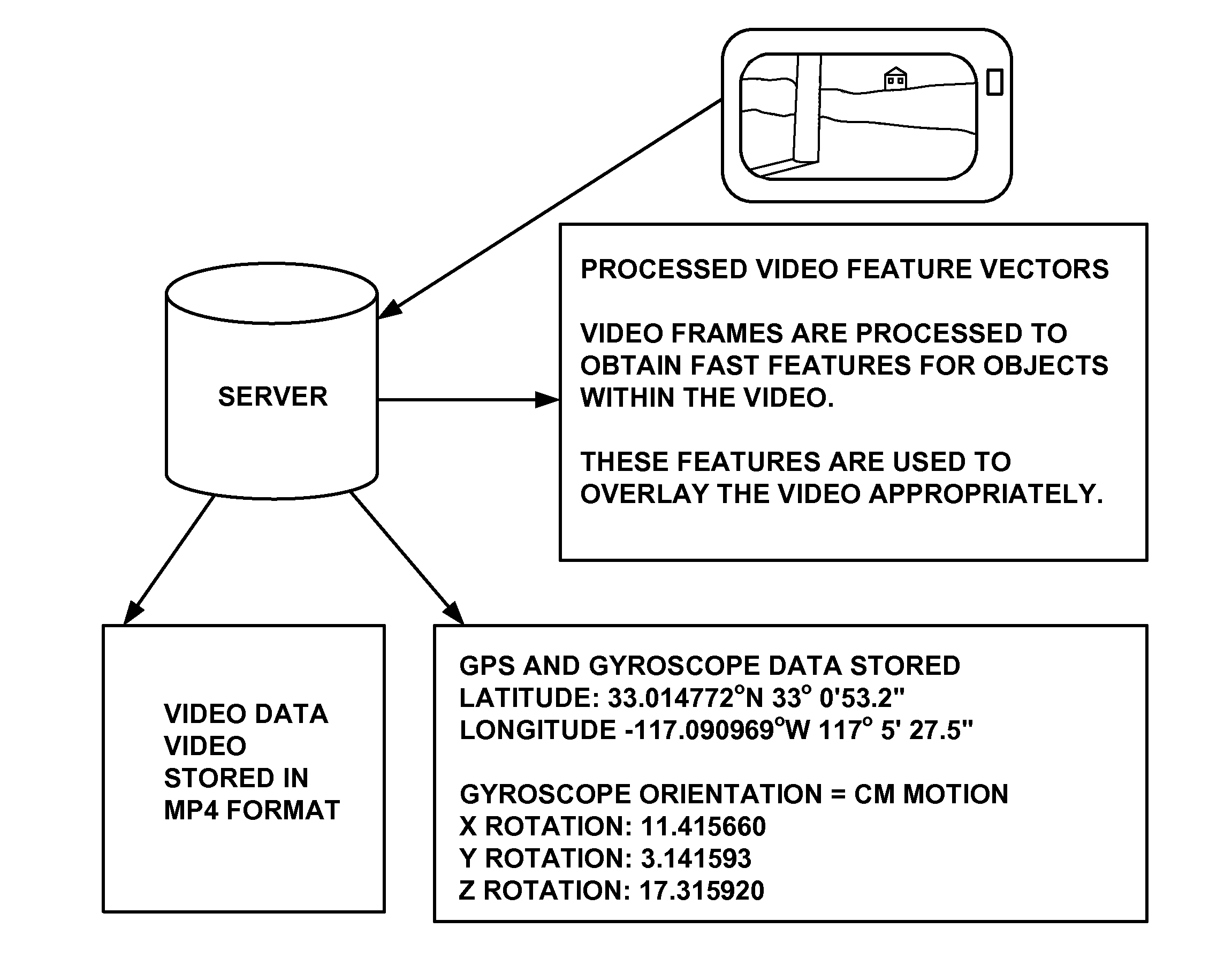

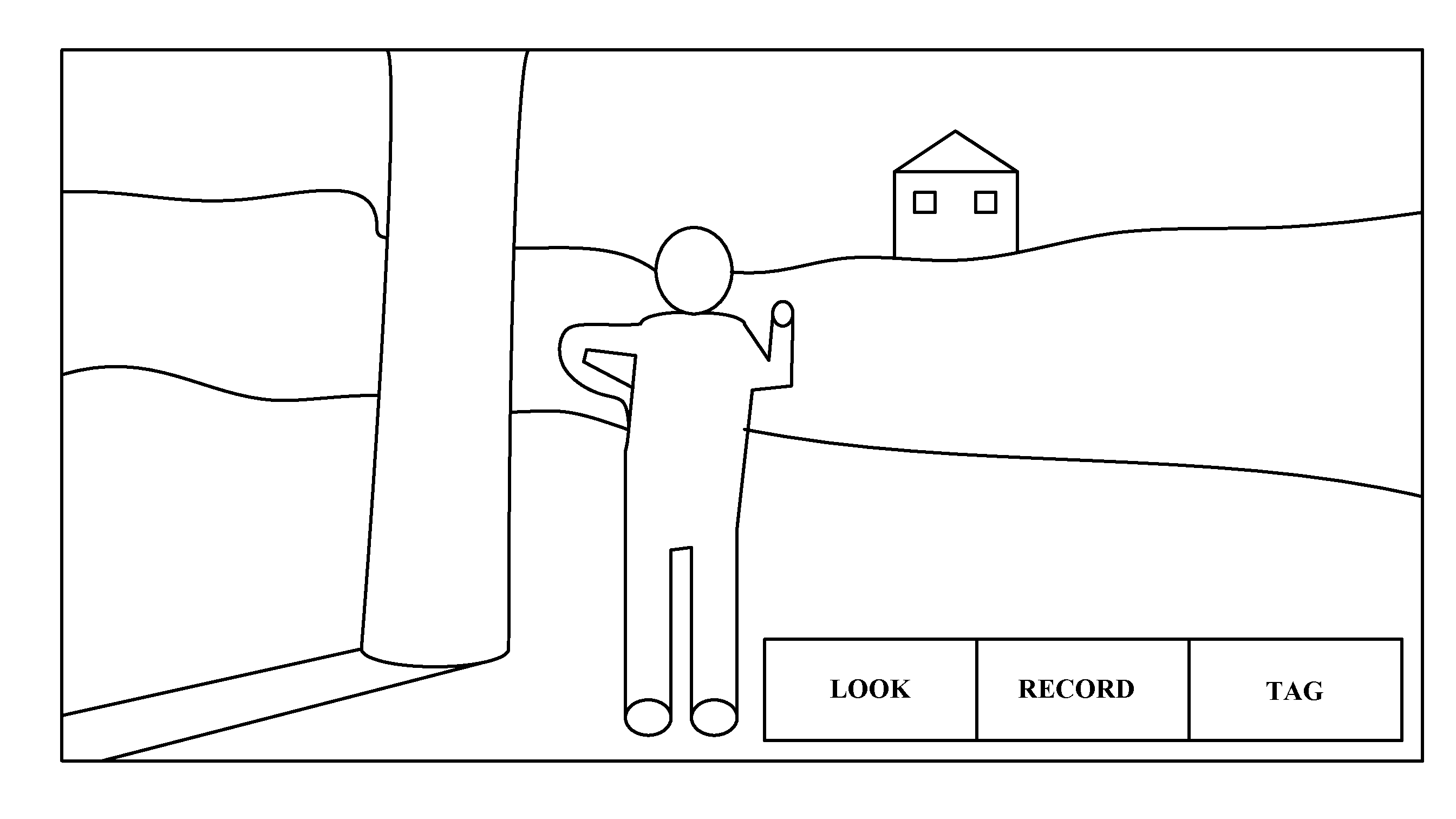

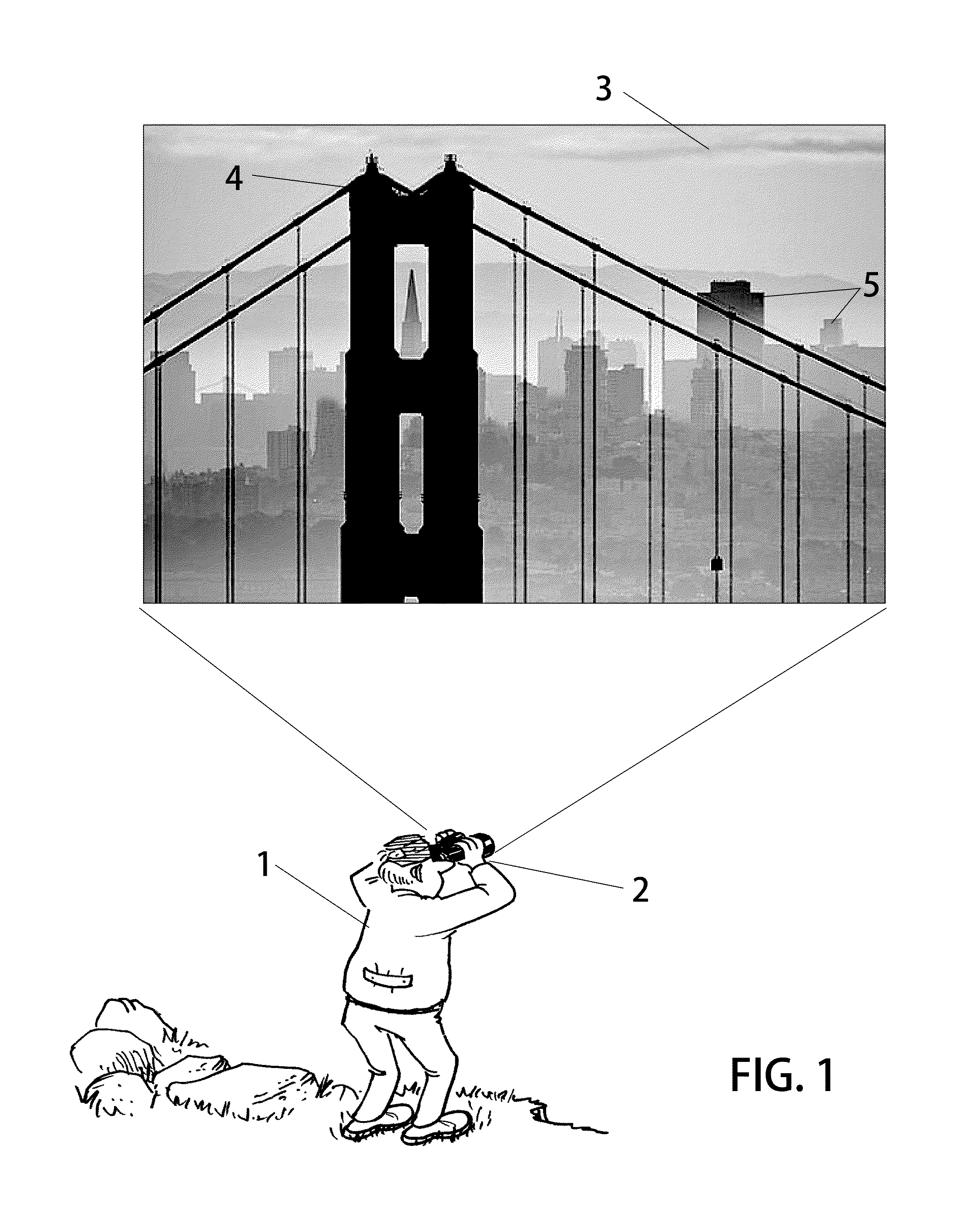

Augmented reality system for position identification

A system, method, and computer program product for automatically combining computer-generated imagery with real-world imagery in a portable electronic device by retrieving, manipulating, and sharing relevant stored videos, preferably in real time. A video is captured with a hand-held device and stored. Metadata including the camera's physical location and orientation is appended to a data stream, along with user input. The server analyzes the data stream and further annotates the metadata, producing a searchable library of videos and metadata. Later, when a camera user generates a new data stream, the linked server analyzes it, identifies relevant material from the library, retrieves the material and tagged information, adjusts it for proper orientation, then renders and superimposes it onto the current camera view so the user views an augmented reality.

Owner:SONY CORP
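The retrieval step above amounts to matching the live camera's pose against the metadata stored with each clip. The Python sketch below shows one simple way to do that. It assumes each clip carries a GPS position and a compass heading; the `Clip` record, the 50 m search radius and the 30° heading tolerance are illustrative assumptions, not details from the abstract.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0


@dataclass
class Clip:
    clip_id: str
    lat: float      # latitude of the recording camera, degrees
    lon: float      # longitude of the recording camera, degrees
    heading: float  # compass heading of the camera, degrees (0 = north)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def heading_diff(a, b):
    """Smallest absolute angle between two compass headings, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def relevant_clips(library, lat, lon, heading, max_dist_m=50.0, max_heading_deg=30.0):
    """Return stored clips recorded near the current pose, closest first."""
    hits = []
    for clip in library:
        dist = haversine_m(lat, lon, clip.lat, clip.lon)
        if dist <= max_dist_m and heading_diff(heading, clip.heading) <= max_heading_deg:
            hits.append((dist, clip))
    hits.sort(key=lambda pair: pair[0])  # sort on distance only
    return [clip for _, clip in hits]
```

A real system would index the library spatially instead of scanning it linearly; the linear scan just keeps the matching rule visible.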

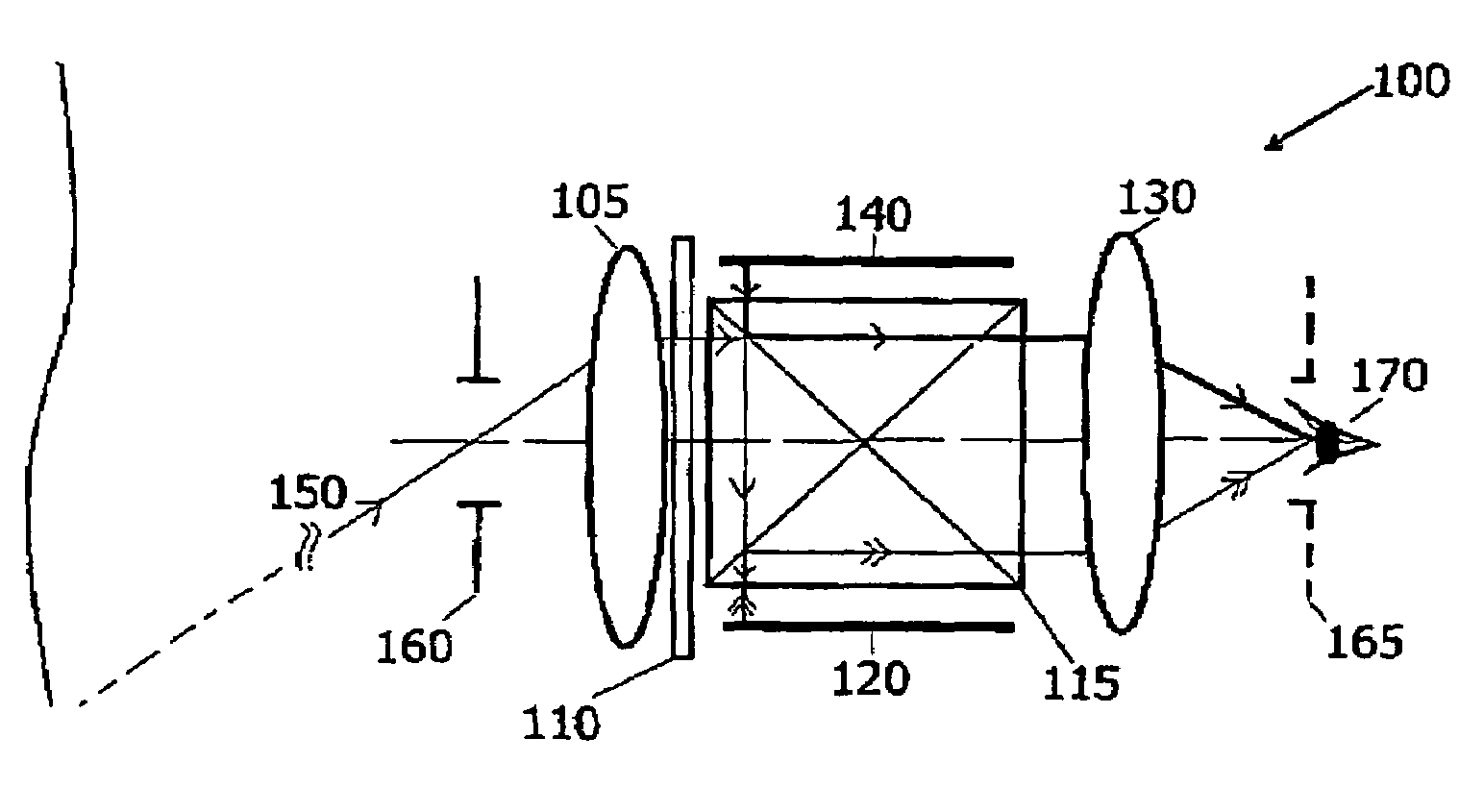

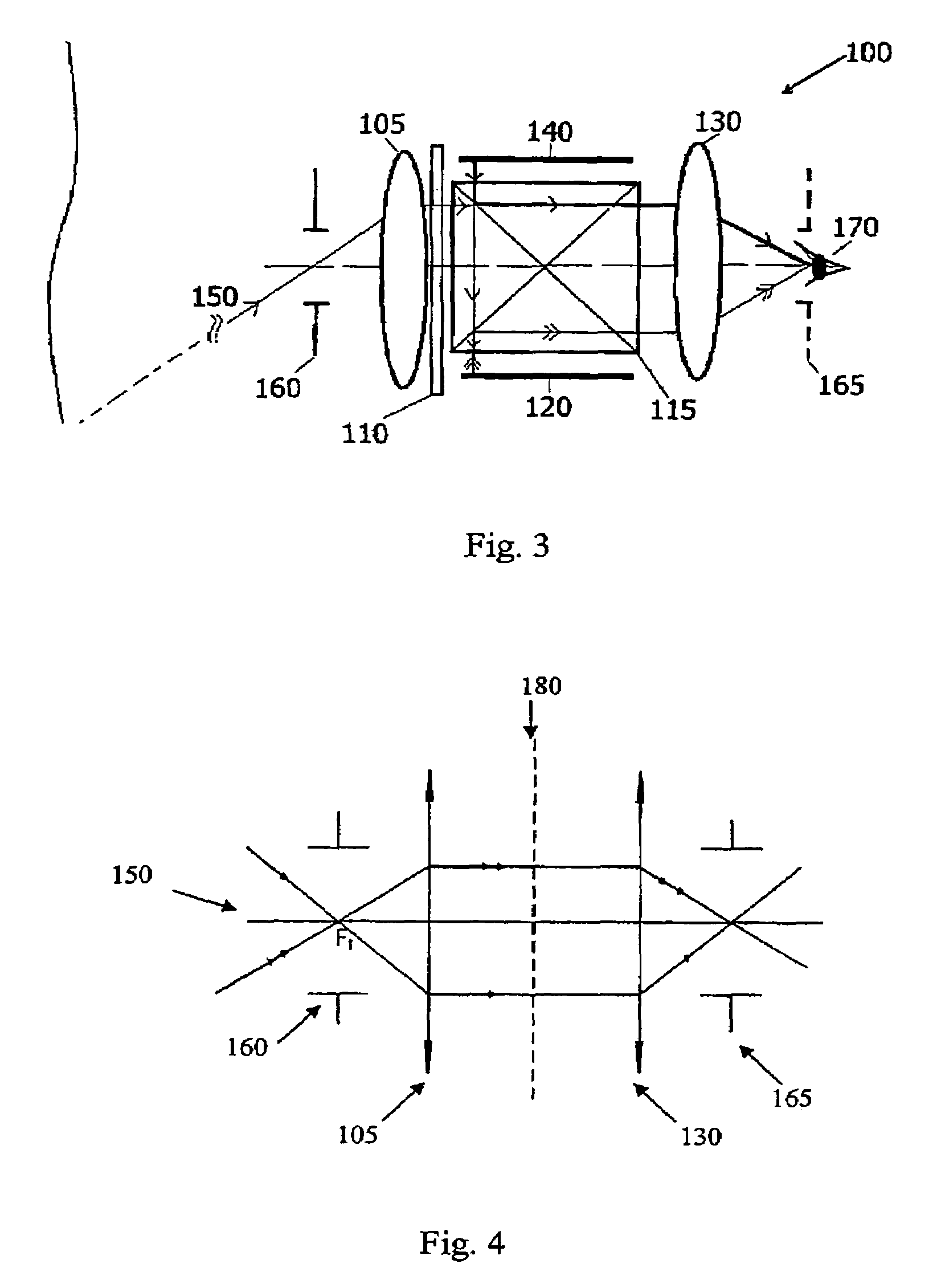

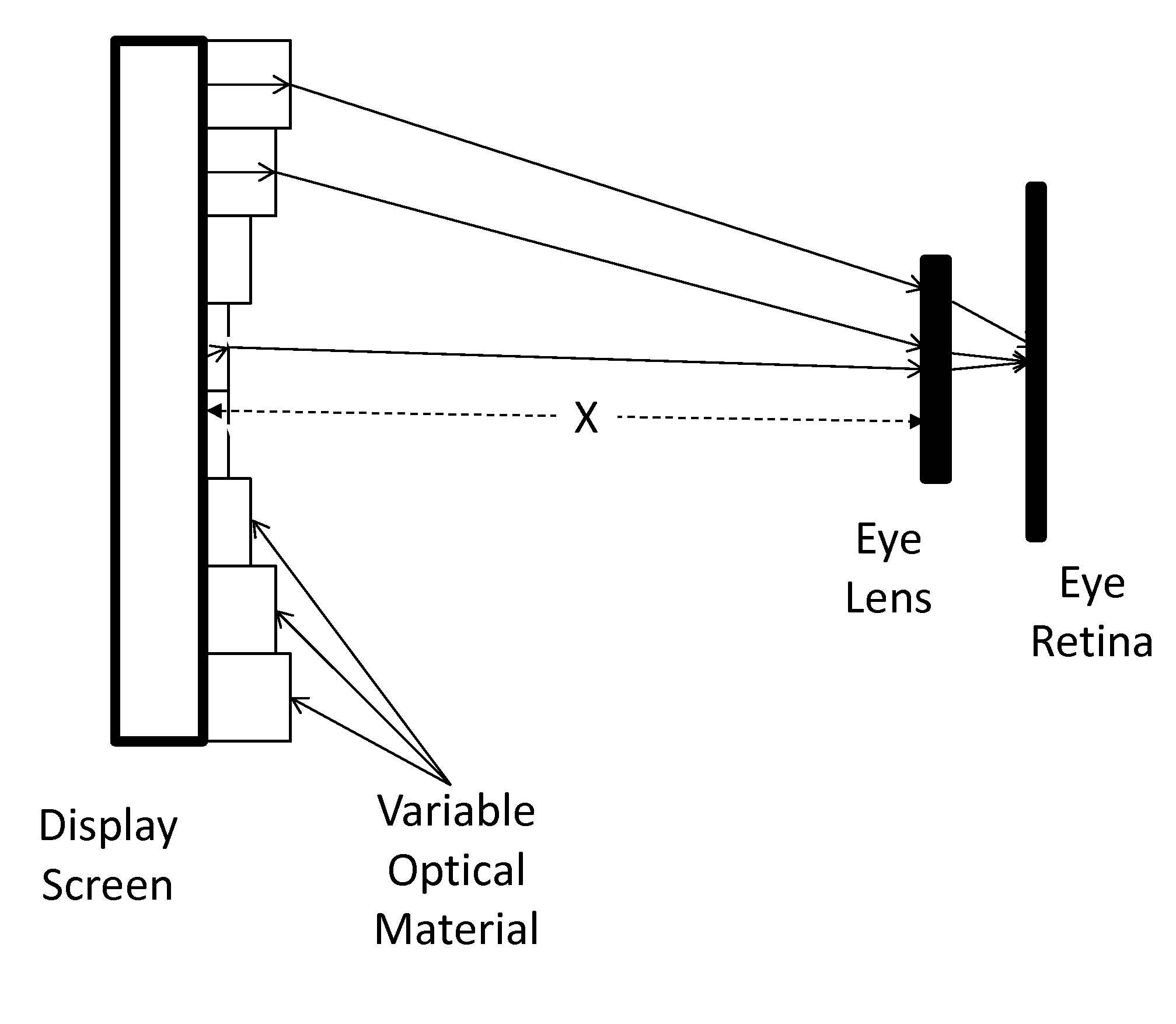

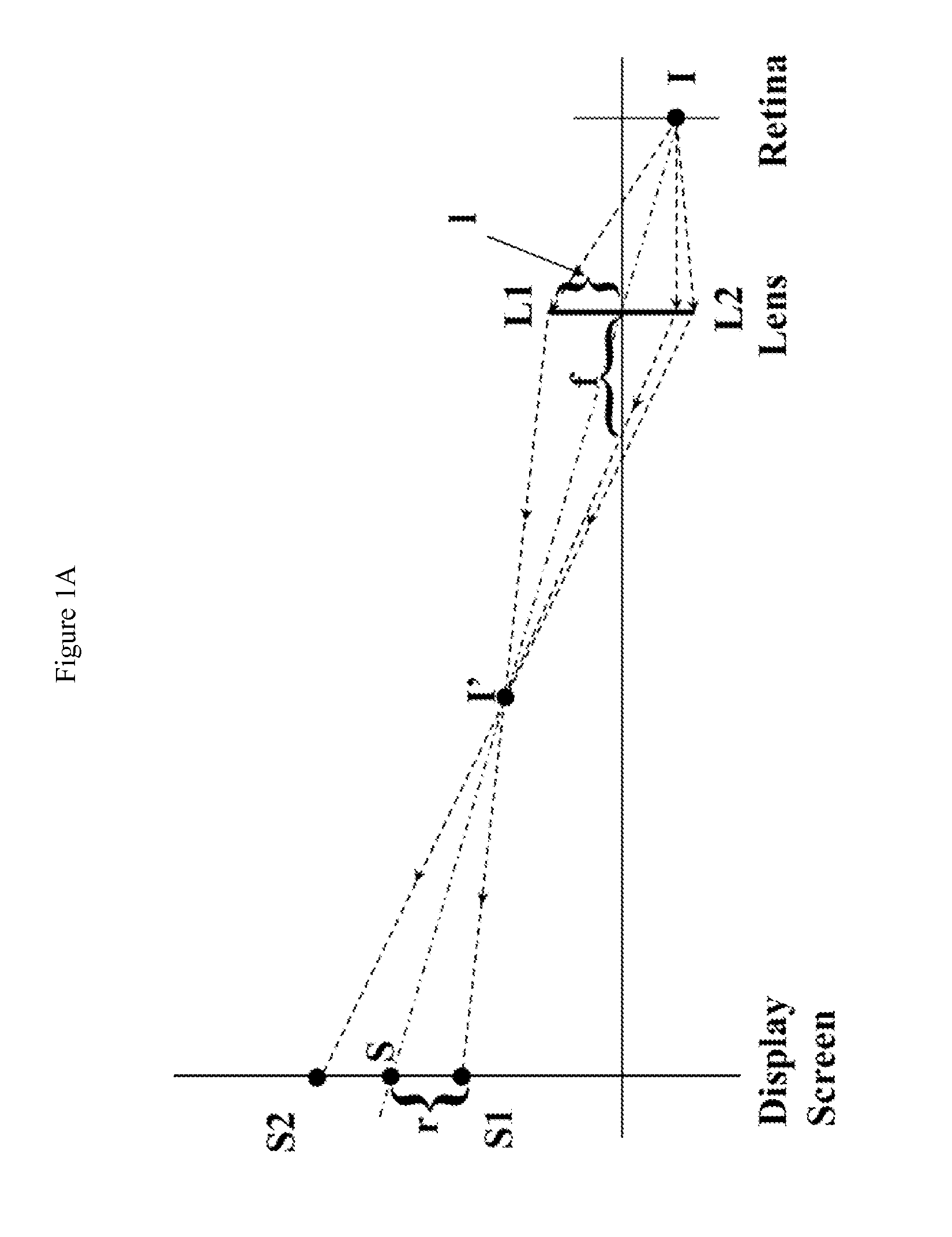

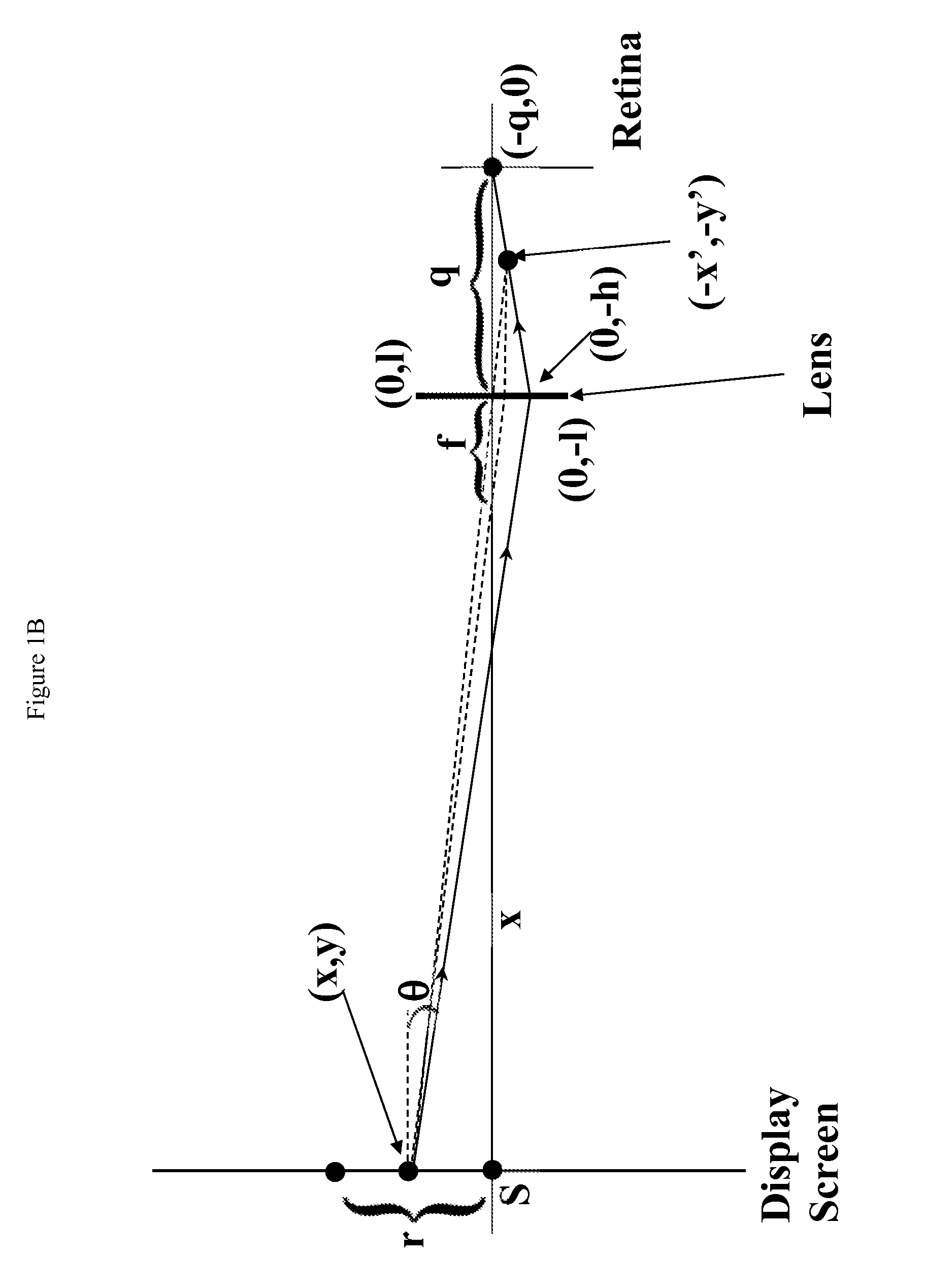

Compact optical see-through head-mounted display with occlusion support

An optical system, apparatus and method capable of mutual occlusion are disclosed. The core of the system is a spatial light modulator (SLM) that allows users to block or pass selected parts of the scene viewed through the head-worn display. An objective lens images the scene onto the SLM, and the modulated image is mapped back to the original scene via an eyepiece. The invention combines computer-generated imagery with the modulated version of the scene to form the final image the user sees. The invention is described as a breakthrough in display hardware in terms of mobility (i.e., compactness), resolution, and speed, and it specifically enables virtual objects to occlude real objects and real objects to occlude virtual objects.

Owner:UNIV OF CENT FLORIDA RES FOUND INC
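A per-pixel model helps show how the SLM mask and the rendered imagery together produce mutual occlusion. This Python sketch assumes depth is known for both the real scene and the virtual content at every pixel, which the abstract does not state; the function name and the additive optical-combiner model are illustrative assumptions.

```python
def composite_with_occlusion(real, real_depth, cgi, cgi_depth):
    """Per-pixel model of mutual occlusion in an optical see-through HMD.

    real, cgi: 2D lists of light intensities in [0, 1].
    real_depth, cgi_depth: 2D lists of distances; use float("inf") in
        cgi_depth where no virtual object is drawn.
    Returns (slm_mask, perceived): slm_mask is 1 to pass real light, 0 to block it.
    """
    rows, cols = len(real), len(real[0])
    slm_mask = [[1] * cols for _ in range(rows)]
    perceived = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            virtual_in_front = cgi_depth[y][x] < real_depth[y][x]
            # Virtual occludes real: the SLM blocks real light at this pixel.
            slm_mask[y][x] = 0 if virtual_in_front else 1
            # Real occludes virtual: no CGI is drawn where the real surface is closer.
            cgi_light = cgi[y][x] if virtual_in_front else 0.0
            # The combiner adds display light to whatever real light passes the SLM.
            perceived[y][x] = min(1.0, slm_mask[y][x] * real[y][x] + cgi_light)
    return slm_mask, perceived
```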

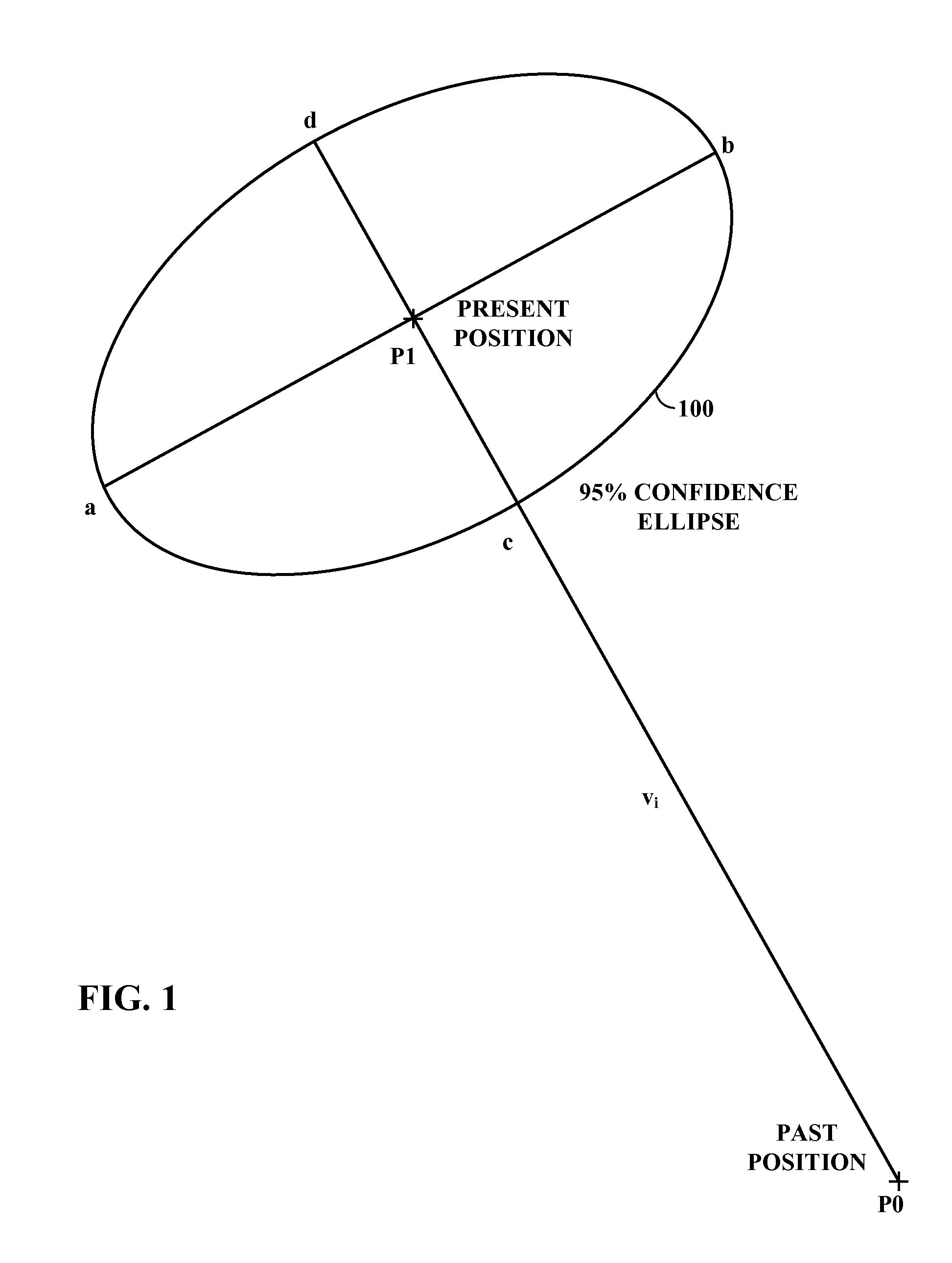

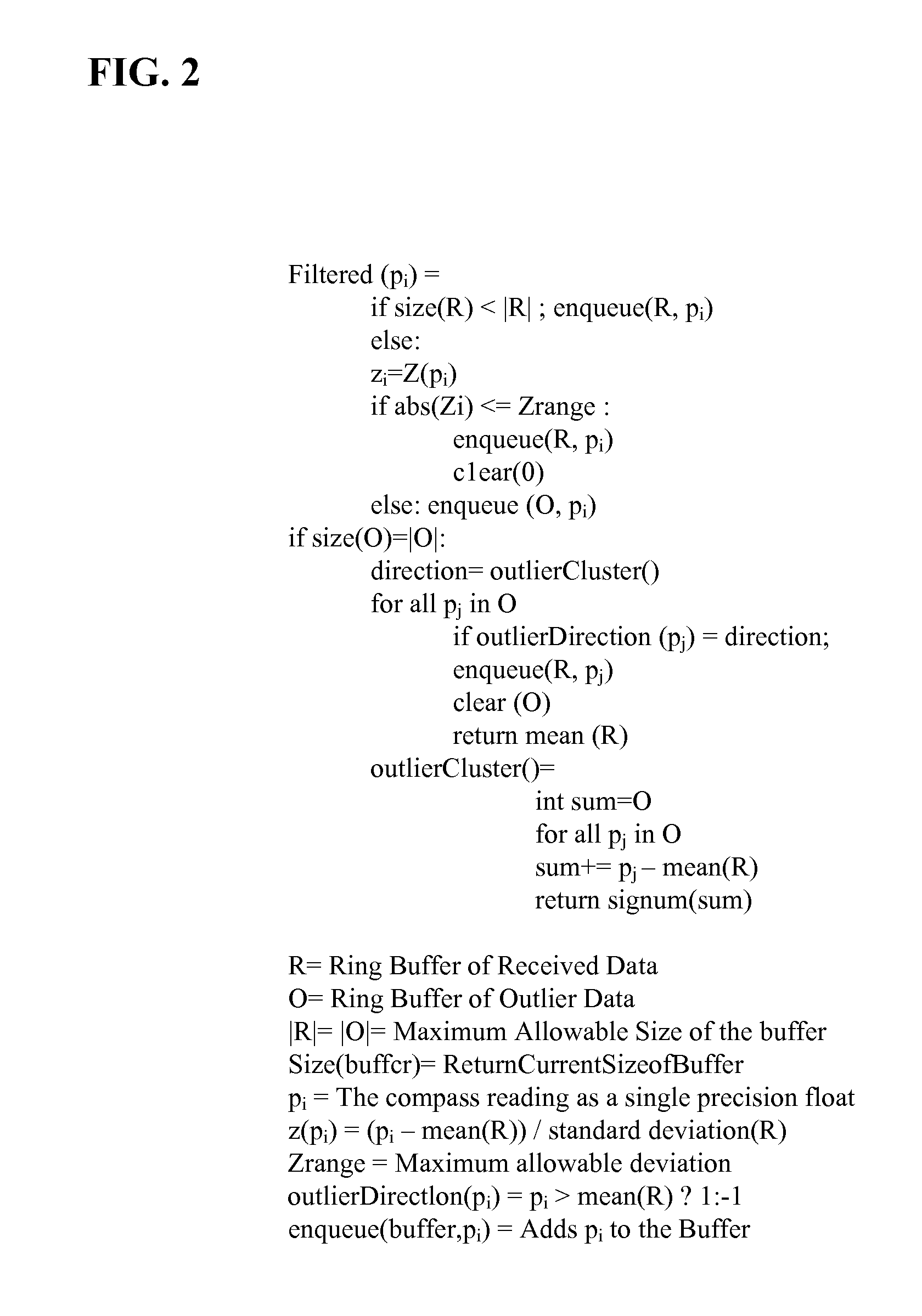

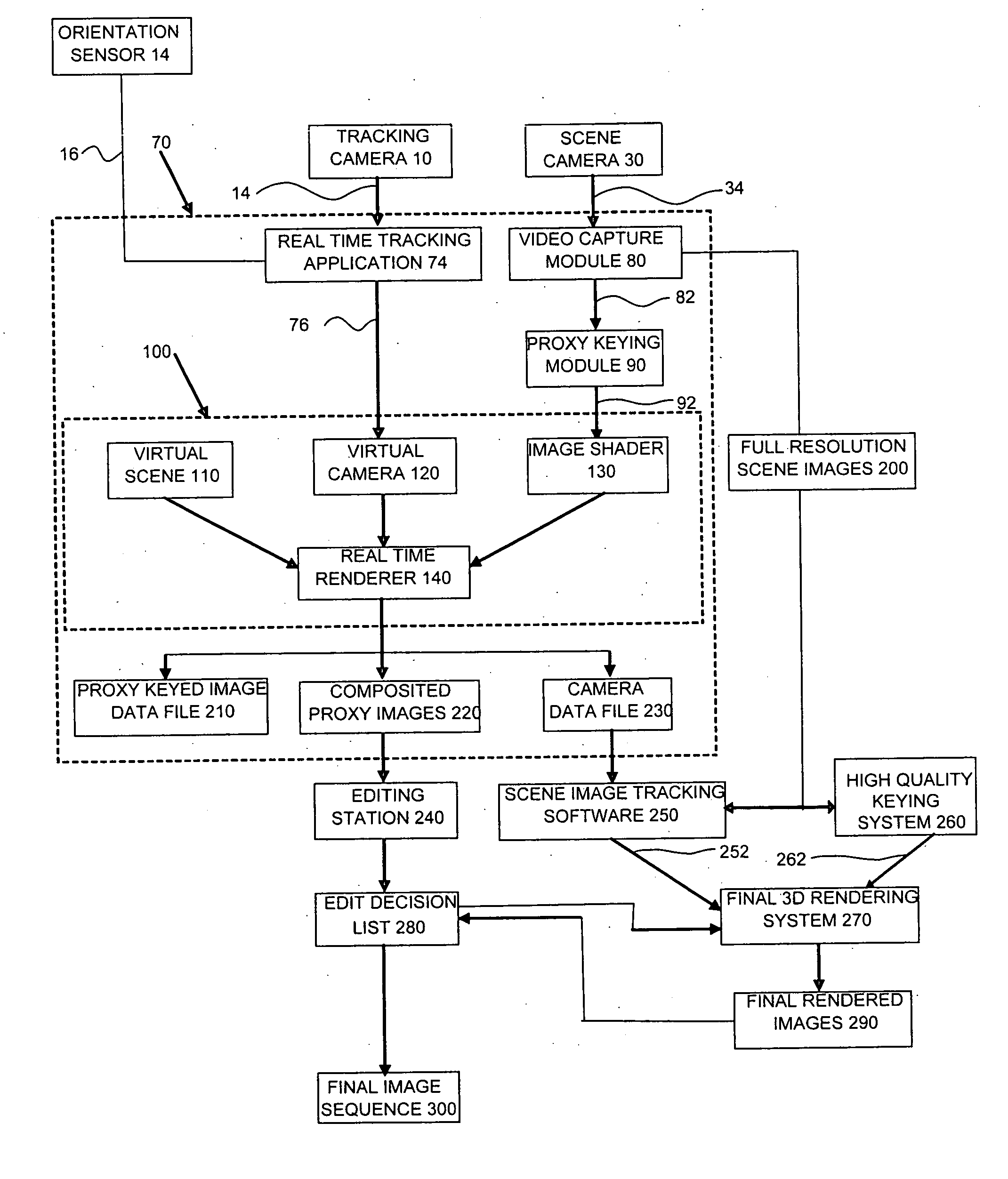

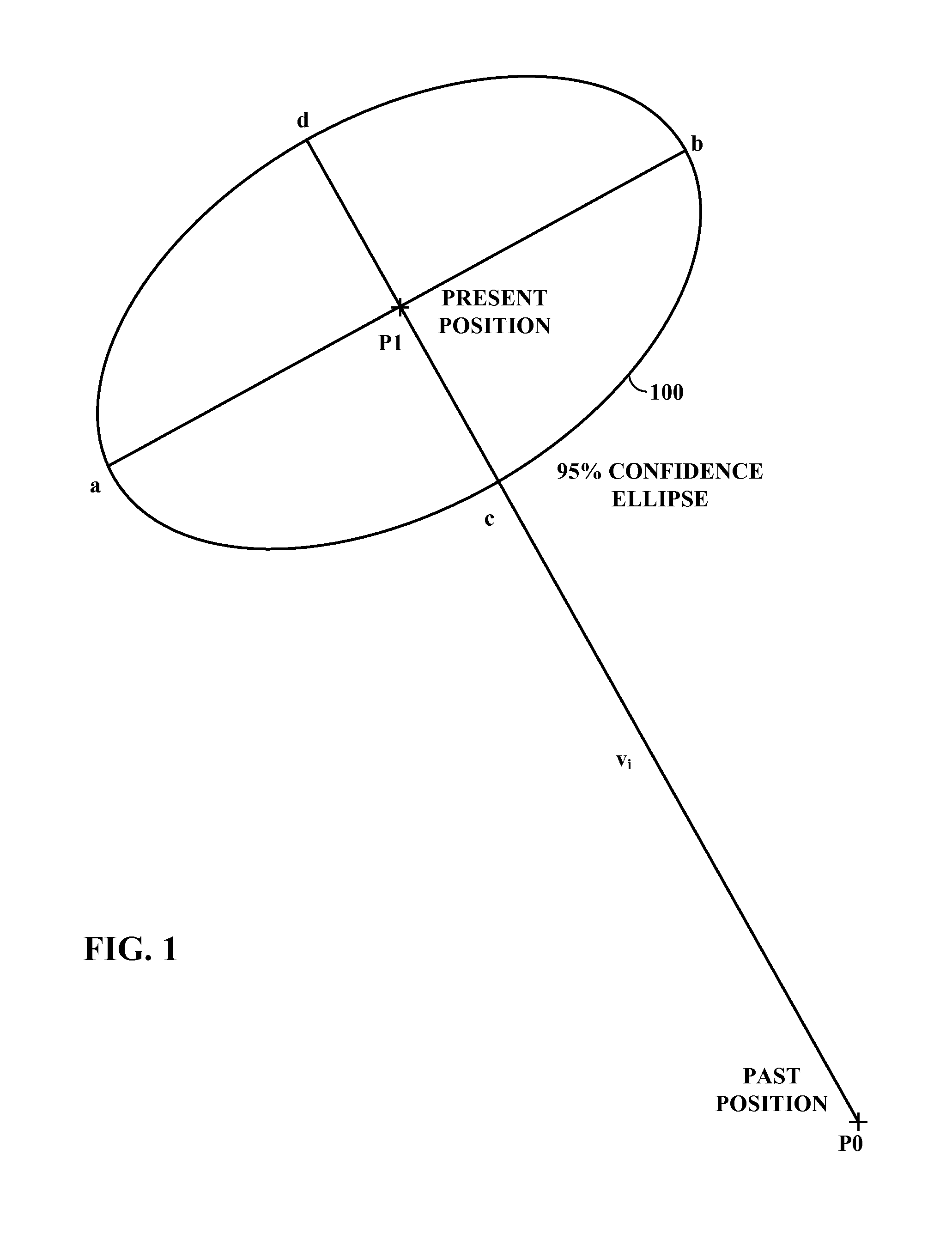

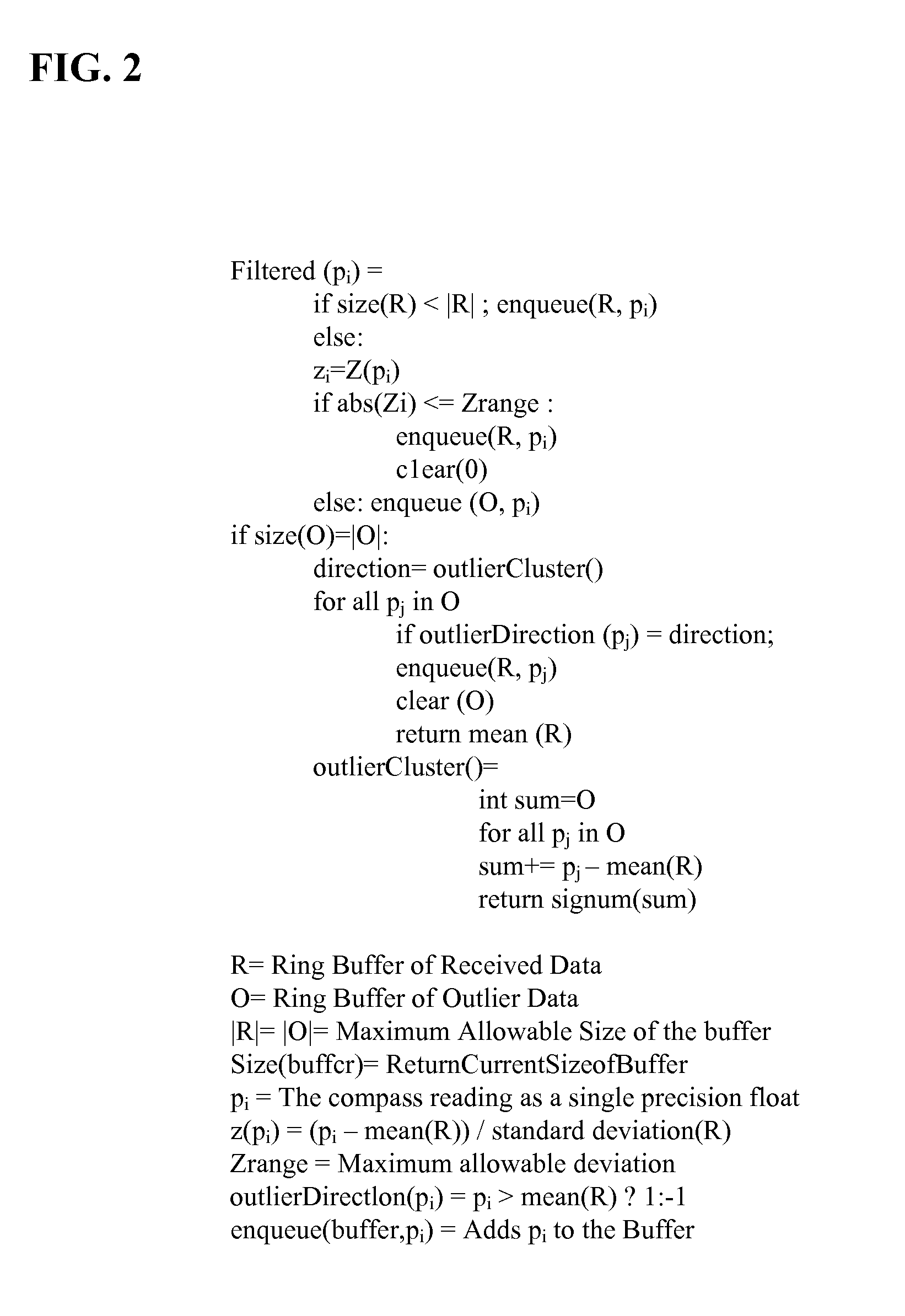

Method and apparatus for a wide area virtual scene preview system

Status: Inactive | US20070248283A1 | Tags: Increase capacity; Increase creative freedom; Image enhancement; Television system details; Filter algorithm; Computer-generated imagery

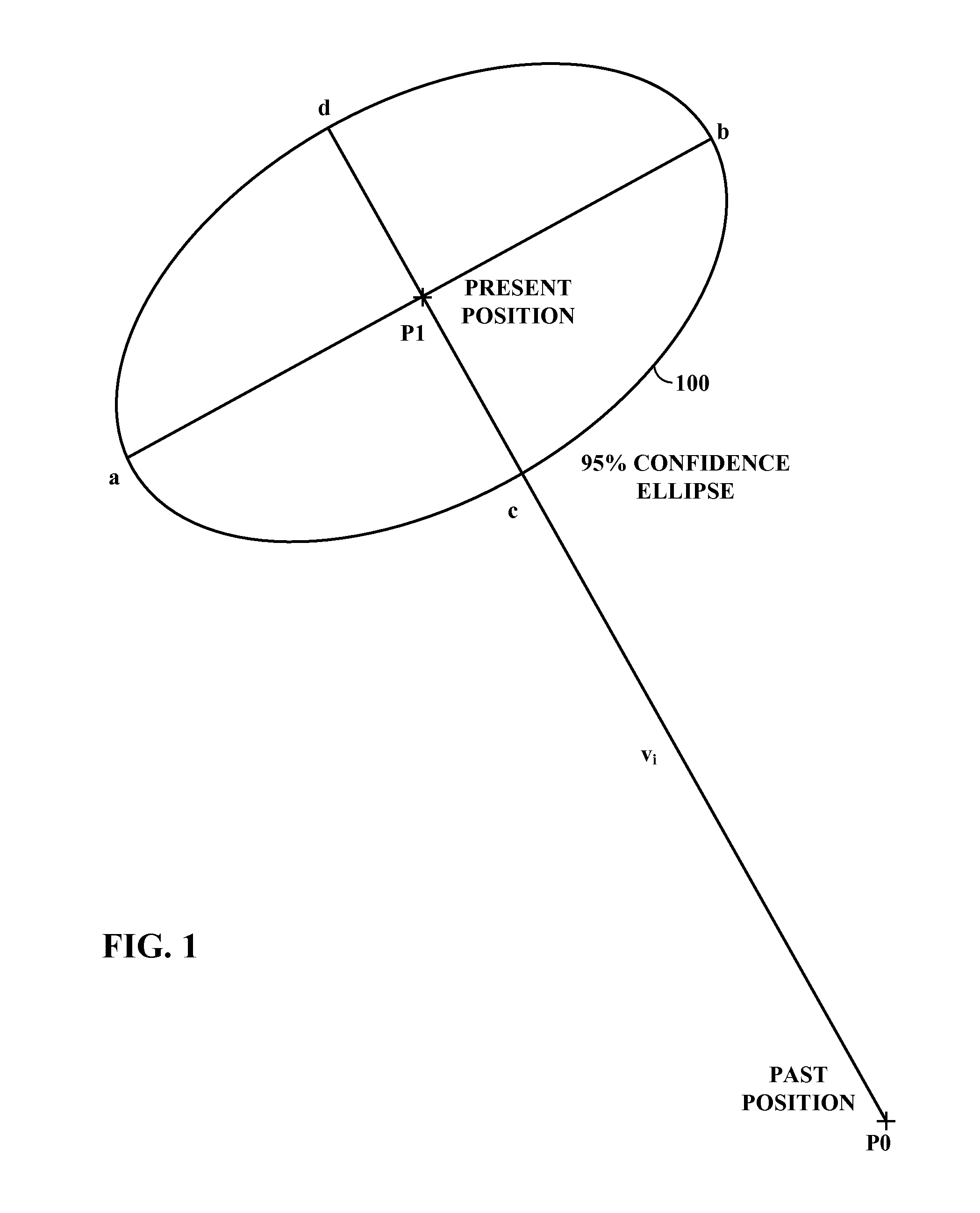

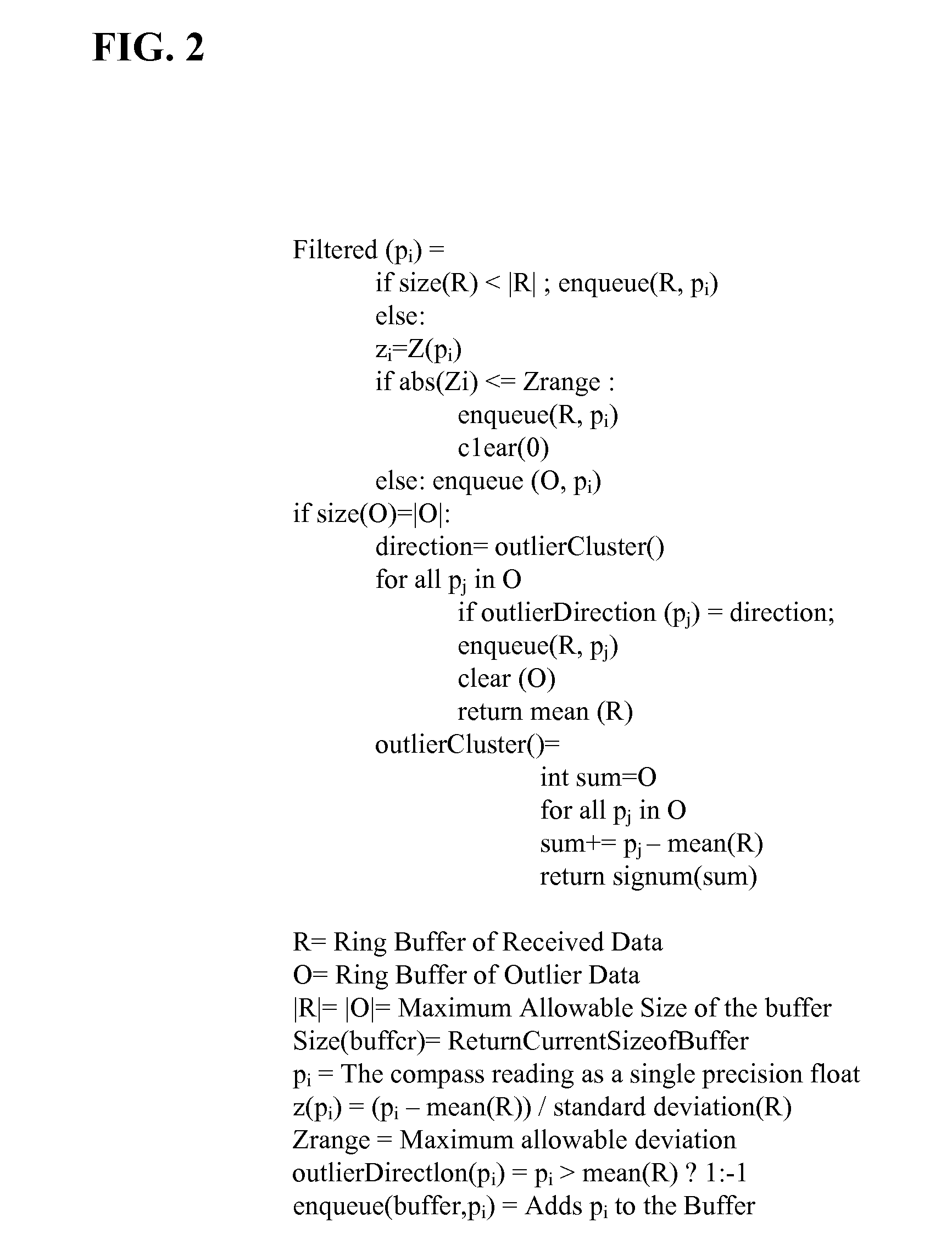

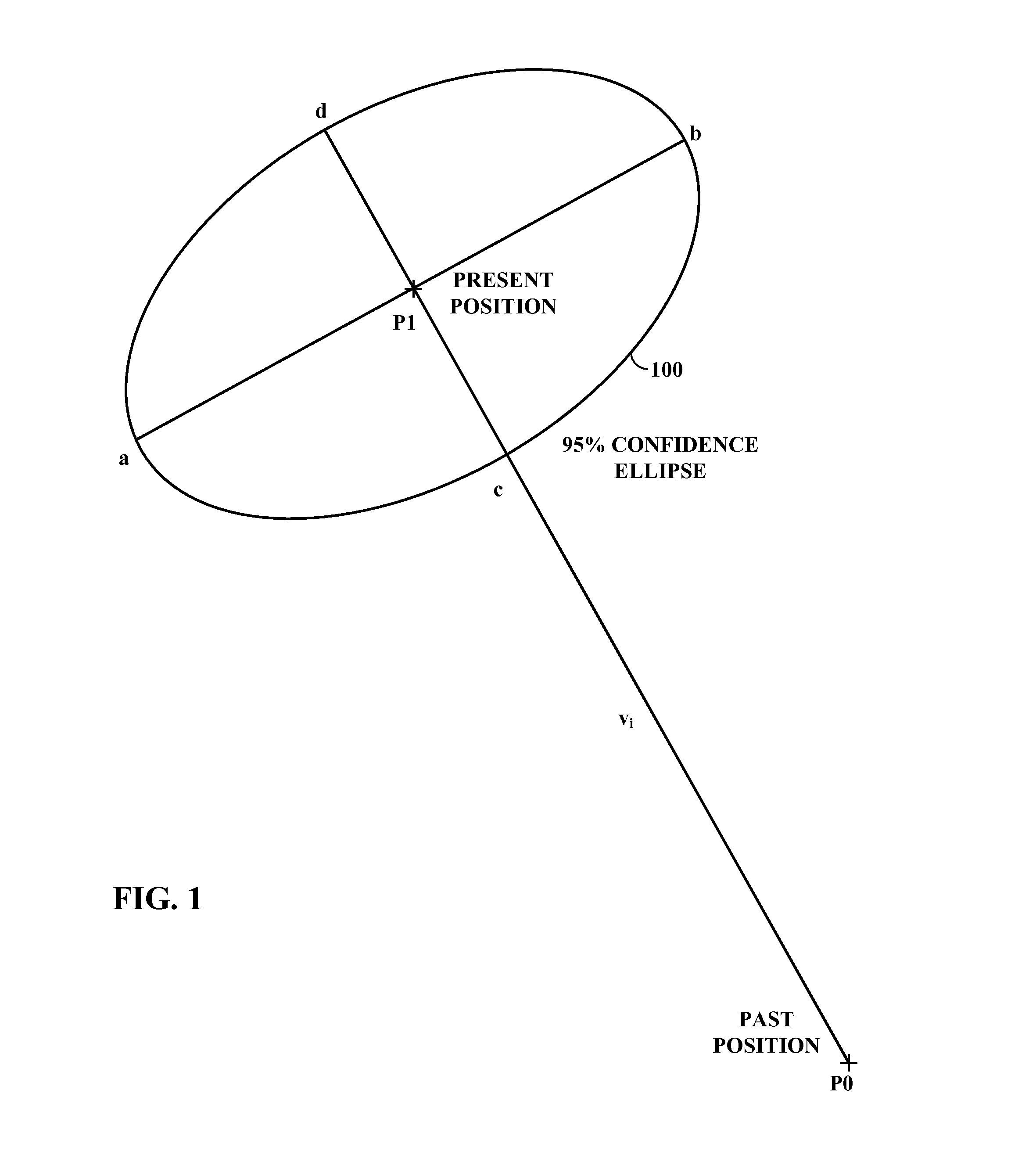

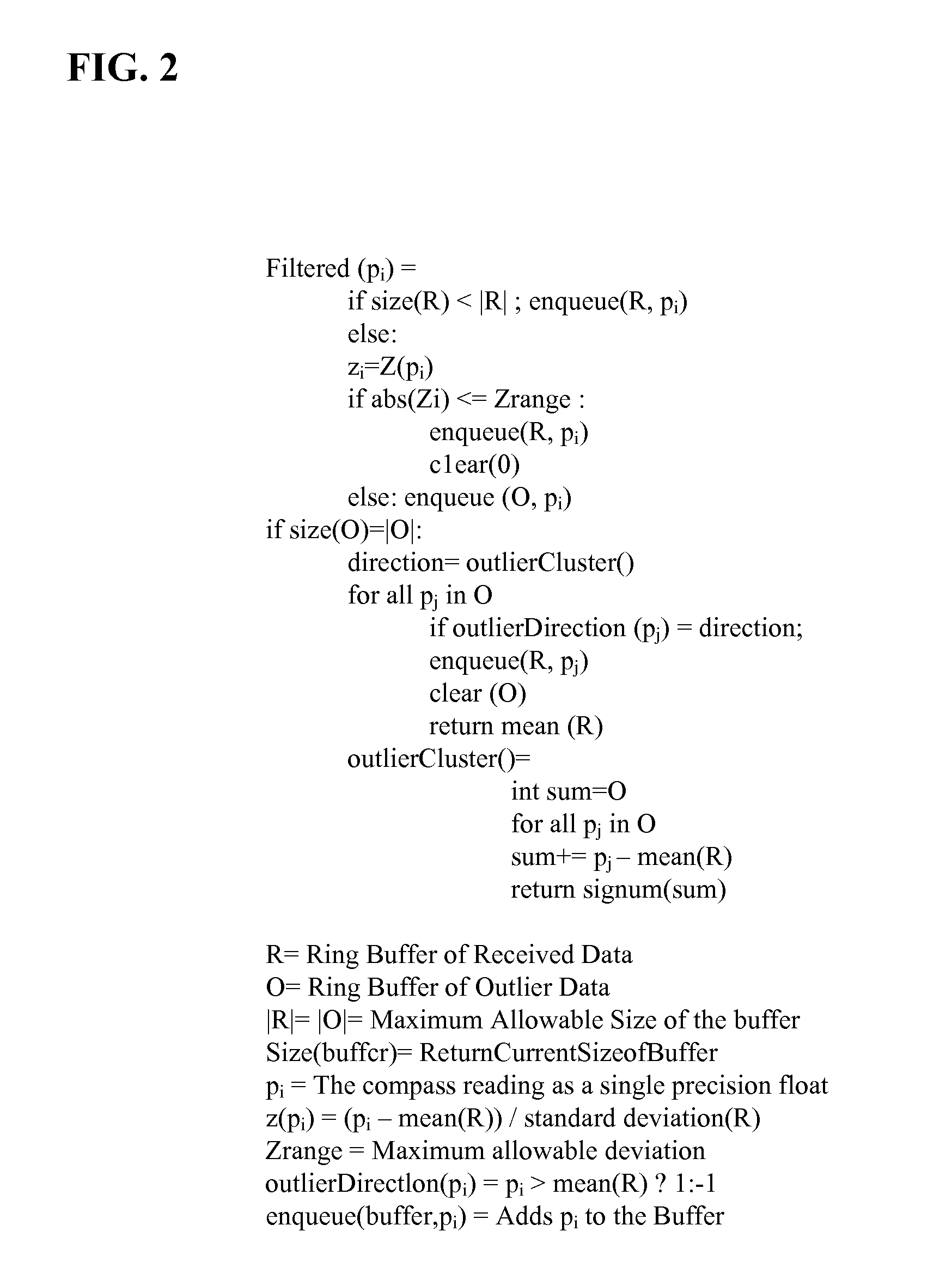

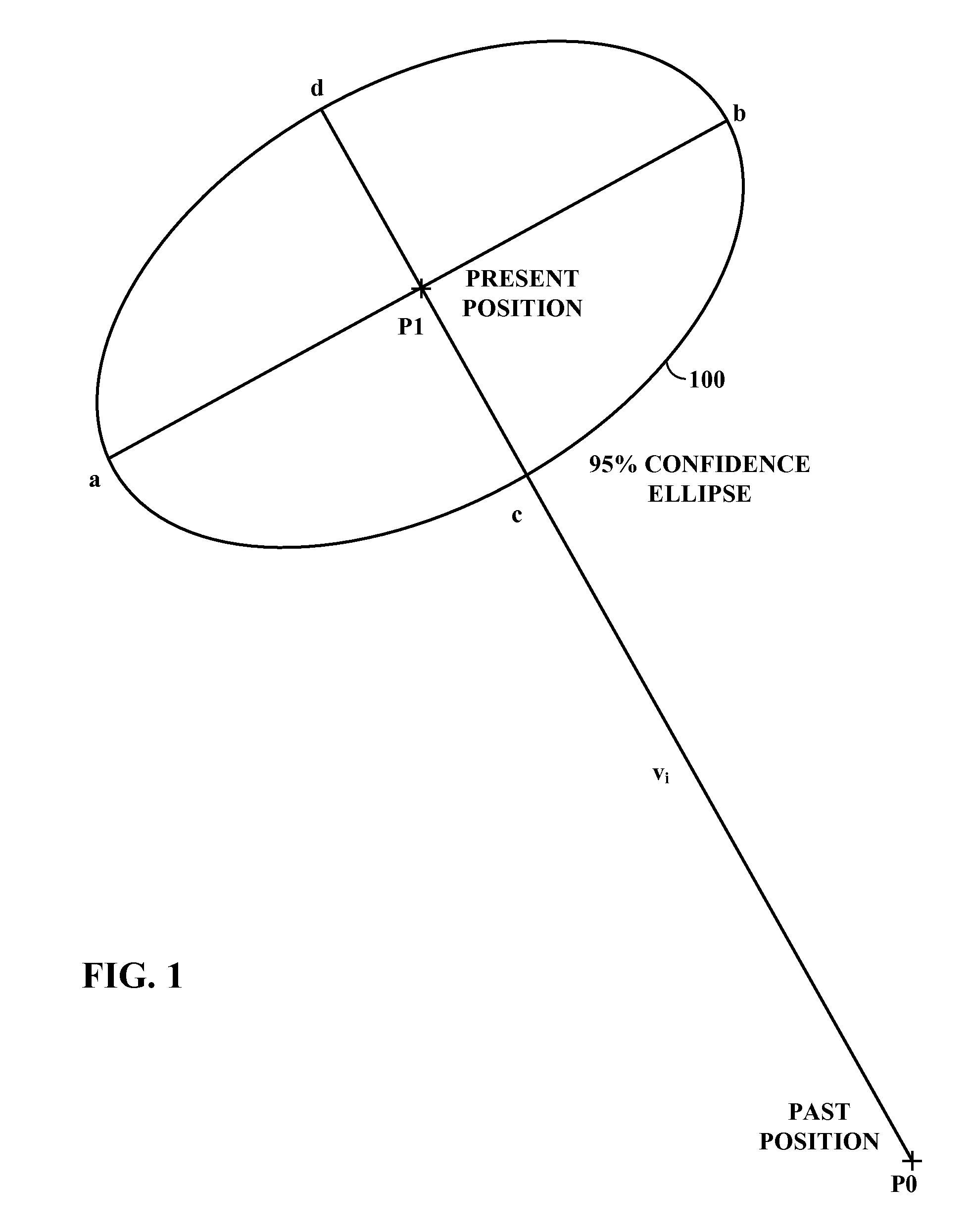

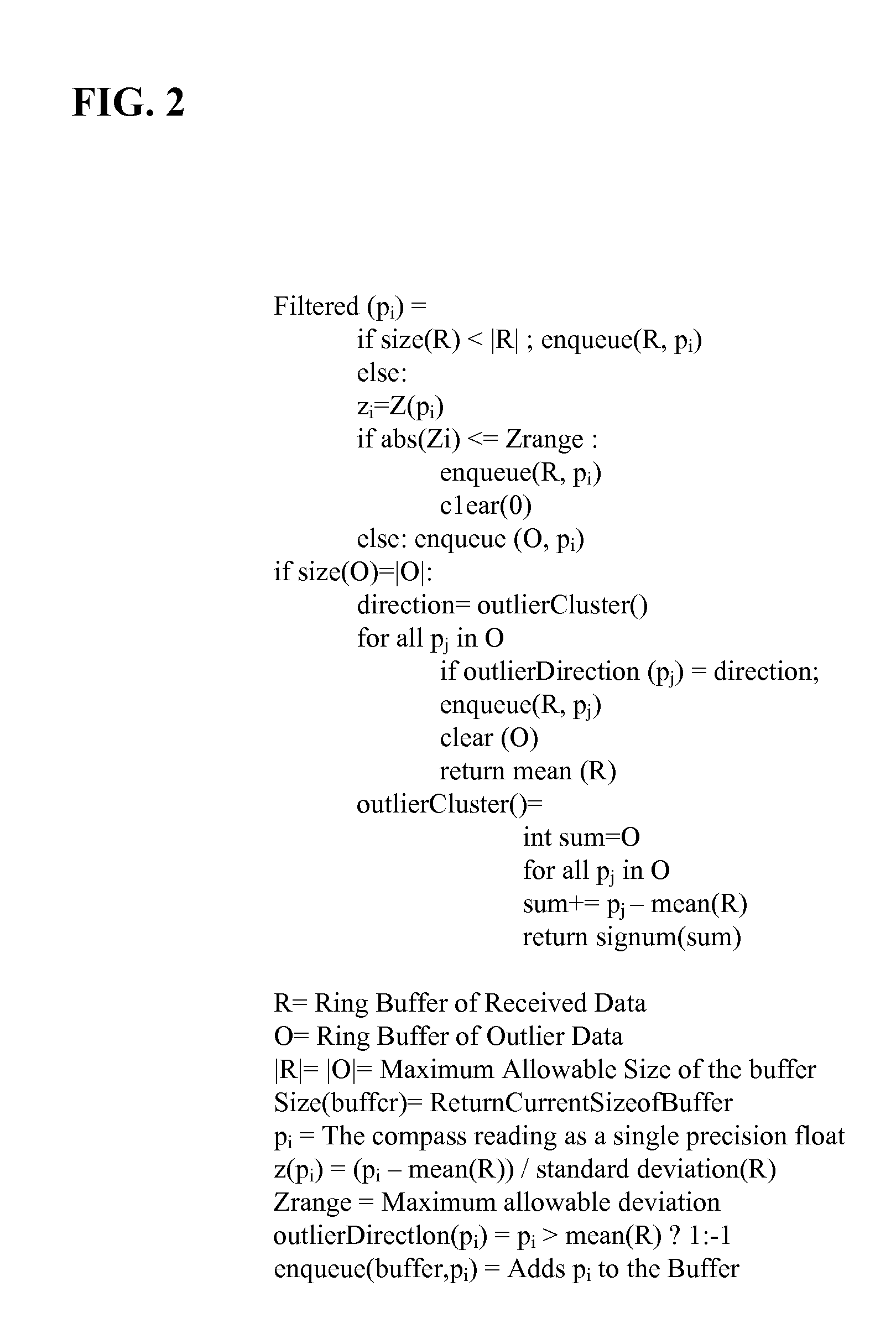

The present invention provides a cost-effective, reliable system for producing a virtual scene that combines live video with other imagery, including computer-generated imagery. In one embodiment it includes a scene camera with an attached tracking camera that views a tracking marker pattern made up of a plurality of tracking markers with identifying indicia. The tracking marker pattern is positioned near the scene camera so that, when the tracking camera views it, the coordinate position of the scene camera can be determined in real time. The invention also includes novel filtering algorithms that vary with camera motion and maintain accurate positioning.

Owner:CINITAL
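The abstract does not say which filter the system uses. One common way to make smoothing depend on camera motion is an exponential filter whose blend factor rises with speed: heavy smoothing when the camera is nearly still (suppressing tracking jitter), light smoothing when it moves (limiting lag). A minimal Python sketch under that assumption; the parameter names and defaults are hypothetical.

```python
def adaptive_smooth(samples, slow_alpha=0.1, fast_alpha=0.9, speed_threshold=1.0):
    """Exponentially smooth camera positions with a motion-dependent blend factor.

    samples: list of (x, y, z) positions at a fixed sampling rate.
    slow_alpha: blend factor used when the camera is (nearly) still.
    fast_alpha: blend factor used at or above speed_threshold units per sample.
    """
    if not samples:
        return []
    smoothed = [tuple(samples[0])]
    for raw in samples[1:]:
        prev = smoothed[-1]
        # Distance between the new measurement and the previous estimate.
        speed = sum((r - p) ** 2 for r, p in zip(raw, prev)) ** 0.5
        # Map speed linearly onto [slow_alpha, fast_alpha], clamped at the threshold.
        t = min(speed / speed_threshold, 1.0)
        alpha = slow_alpha + (fast_alpha - slow_alpha) * t
        smoothed.append(tuple(p + alpha * (r - p) for r, p in zip(raw, prev)))
    return smoothed
```

A Kalman filter with motion-dependent process noise is a heavier alternative; the exponential form just makes the trade-off between jitter and lag easy to see.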

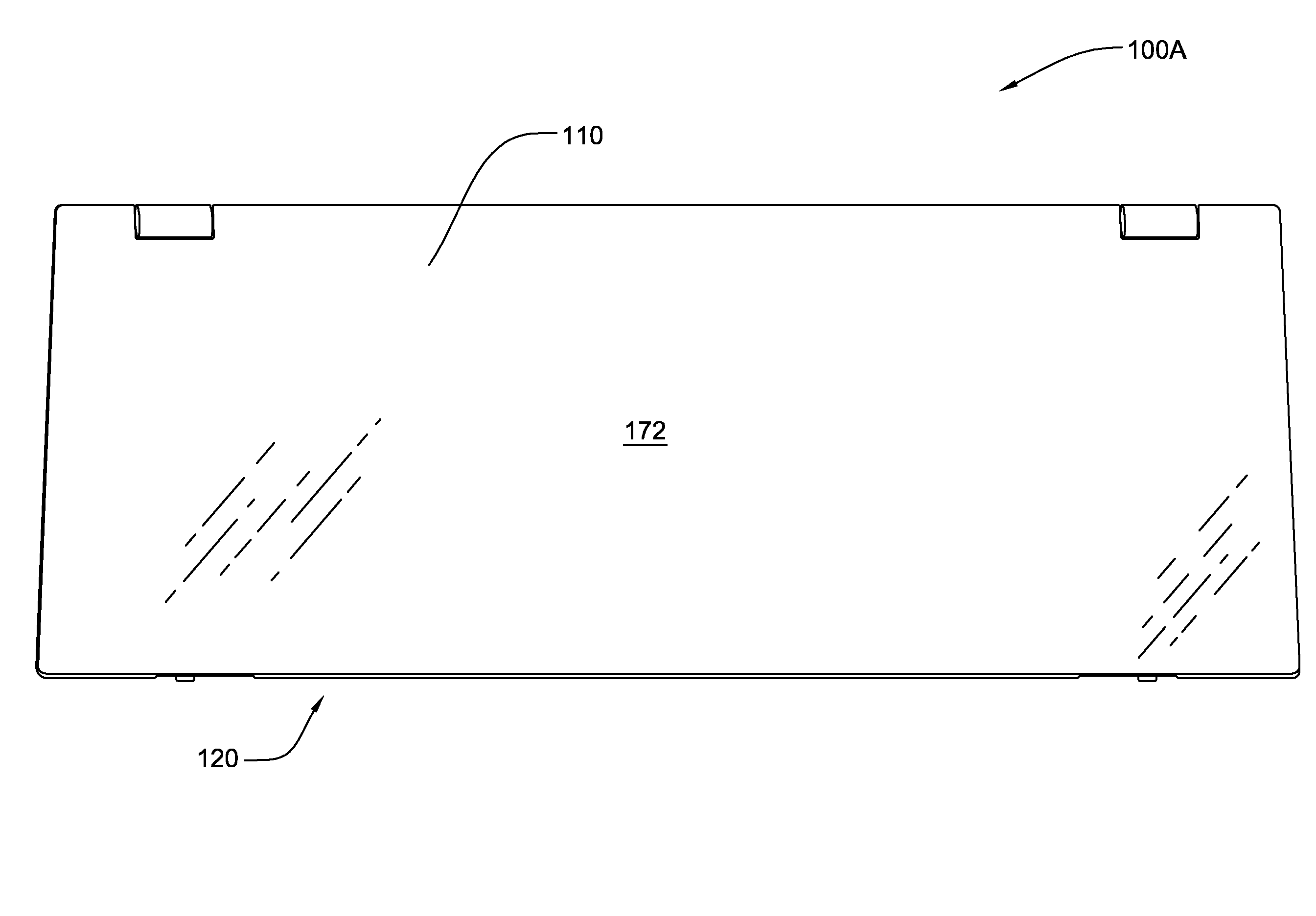

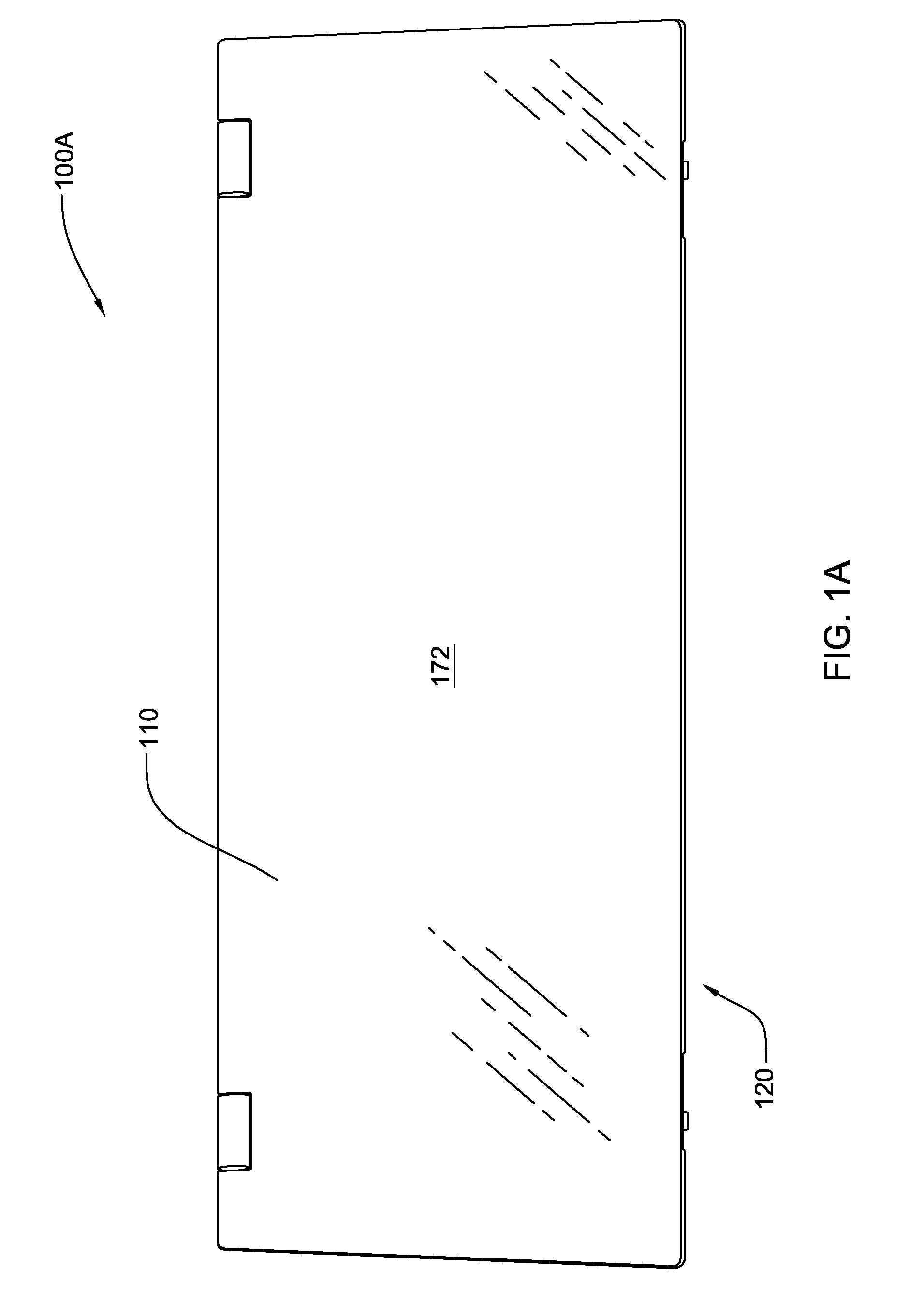

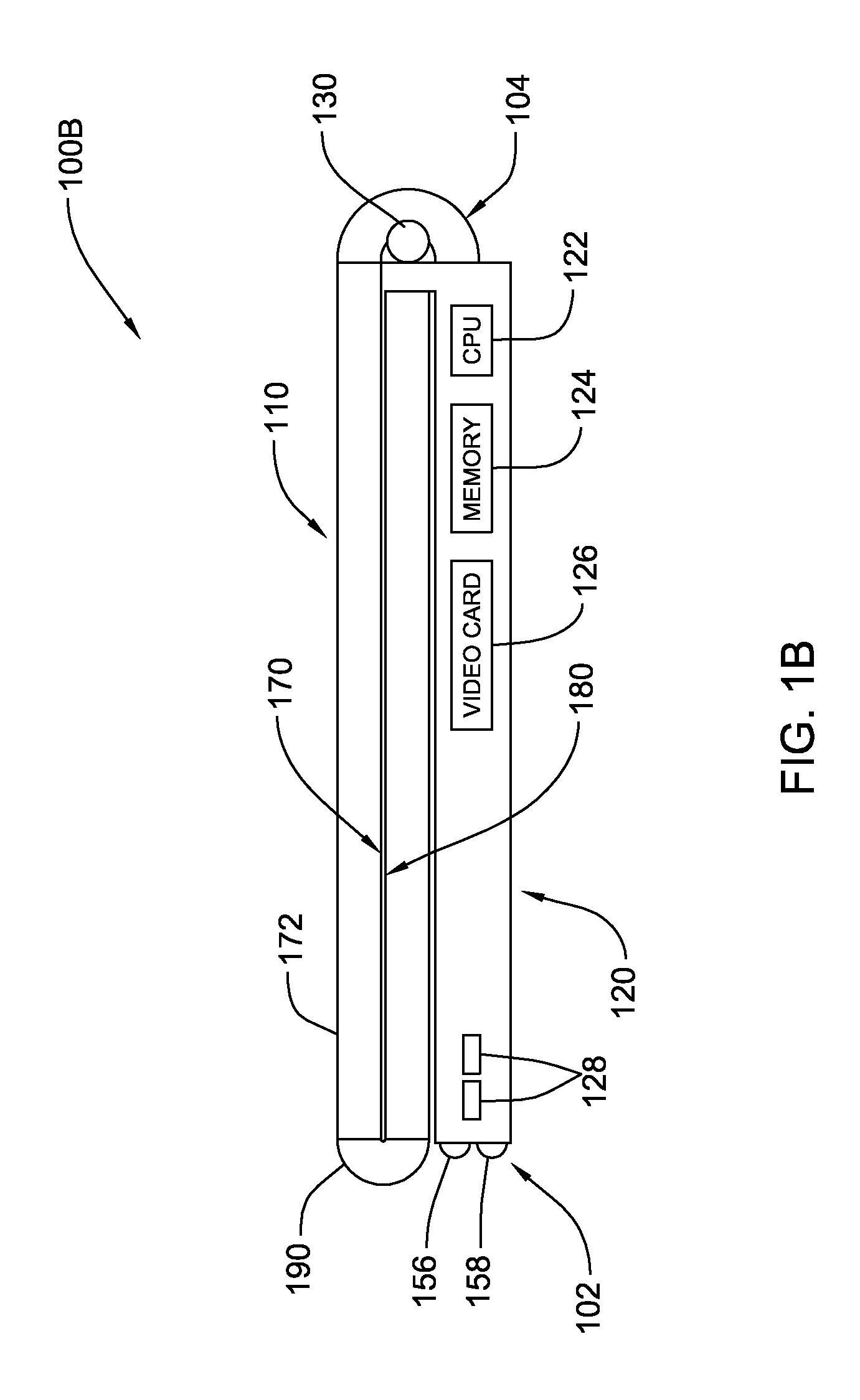

Compact size portable computer having a fully integrated virtual keyboard projector and a display projector

Status: Inactive | US20100067181A1 | Tags: Television system details; Picture reproducers using projection devices; Computer-generated imagery; Projector

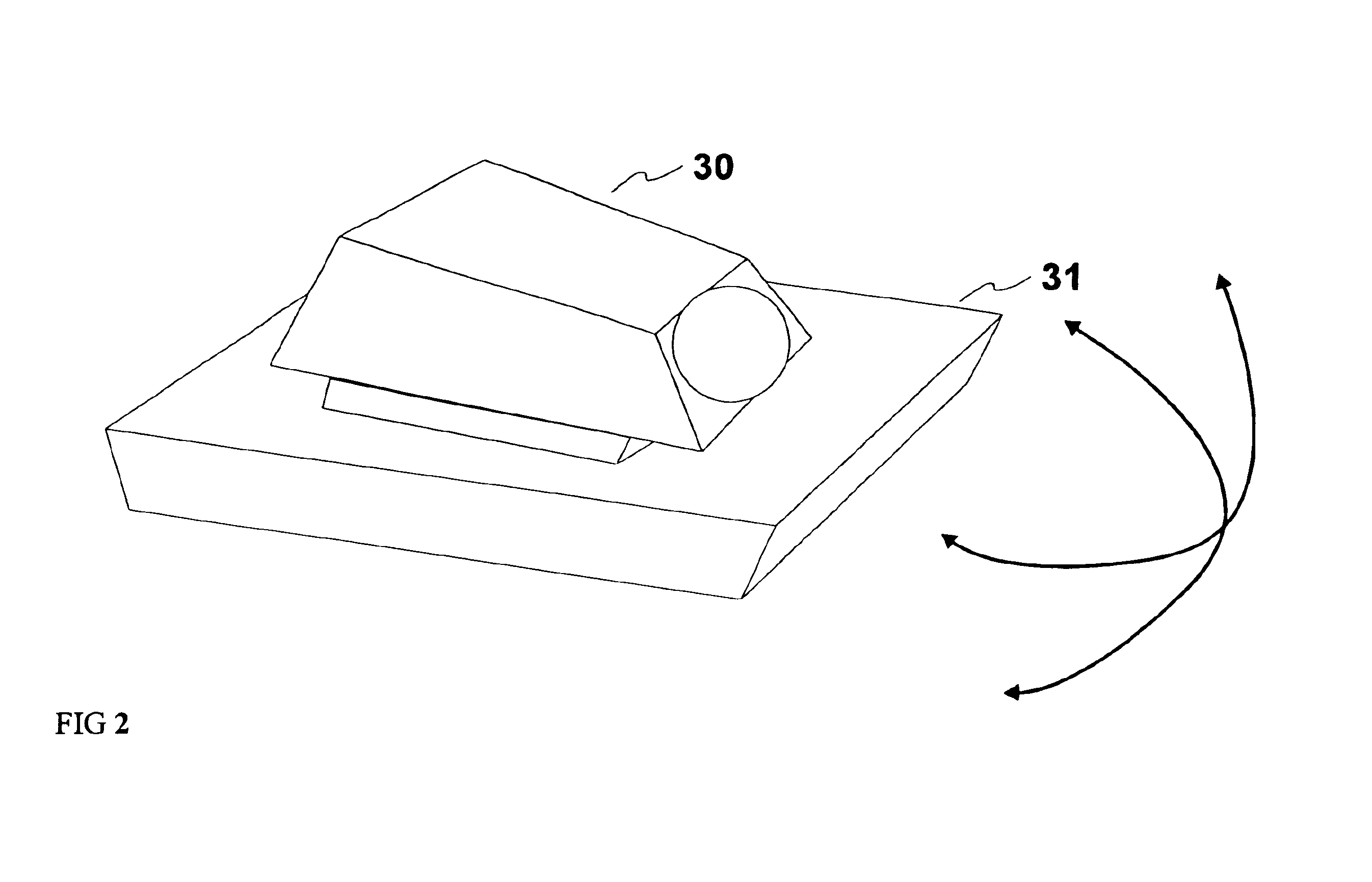

A computer with a fully integrated virtual keyboard projector and a display projector. The computer includes a base having a CPU, a video card and memory, a screen pivotally mounted to one side of the base, and a display projector mounted to an opposite side of the base and angled to project a computer generated image onto the screen. Further, the computer includes a keyboard projector mounted to the opposite side of the base to project a keyboard onto a surface adjacent to the opposite side of the base. Further, the computer includes another display projector mounted to the opposite side of the base and digitally synchronized with the first display projector for projecting a superimposed image.

Owner:LINKEDIN

Projection display system with pressure sensing at a screen, a calibration system corrects for non-orthogonal projection errors

Status: Inactive | US20080042999A1 | Tags: Special service provision for substation; Projectors; Computer graphics (images); Projection image

Calibration structure, method, and code for correcting non-orthogonal misalignment of a computer-generated image projected onto a large-screen touch-detecting display, so that the computer-generated image appears substantially where the display is touched. Structure / method / code generates at least four calibration marks, one substantially proximate each of the four corners of the projected image. Structure / method / code, responsive to a touch on the display where each calibration mark is displayed, identifies the horizontal and vertical coordinates of each touched location. Structure / method / code calibrates the projected image to the display after all four calibration marks have been touched. Structure / method / code, responsive to a subsequent touch on the display after calibration, displays a computer image substantially at the location of that touch.

Owner:SMART TECH INC (CA)
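The abstract does not specify how the correction is computed. For four touched marks, a standard choice is a planar homography (perspective transform) fitted from the four touch/mark correspondences and then applied to later touches. A self-contained Python sketch under that assumption, solving the 8×8 linear system directly; the function names are illustrative.

```python
def solve_linear(a, b):
    """Solve a square system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate calibration points (three may be collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_points(src, dst):
    """Fit the 3x3 perspective transform (h33 = 1) mapping four src points to dst."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    return solve_linear(a, b) + [1.0]


def apply_homography(h, x, y):
    """Map a point through the homography h (9 coefficients, row-major)."""
    w = h[6] * x + h[7] * y + h[8]
    return (h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w
```

After calibration, each raw touch is passed through `apply_homography` to find the image coordinates at which to draw.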

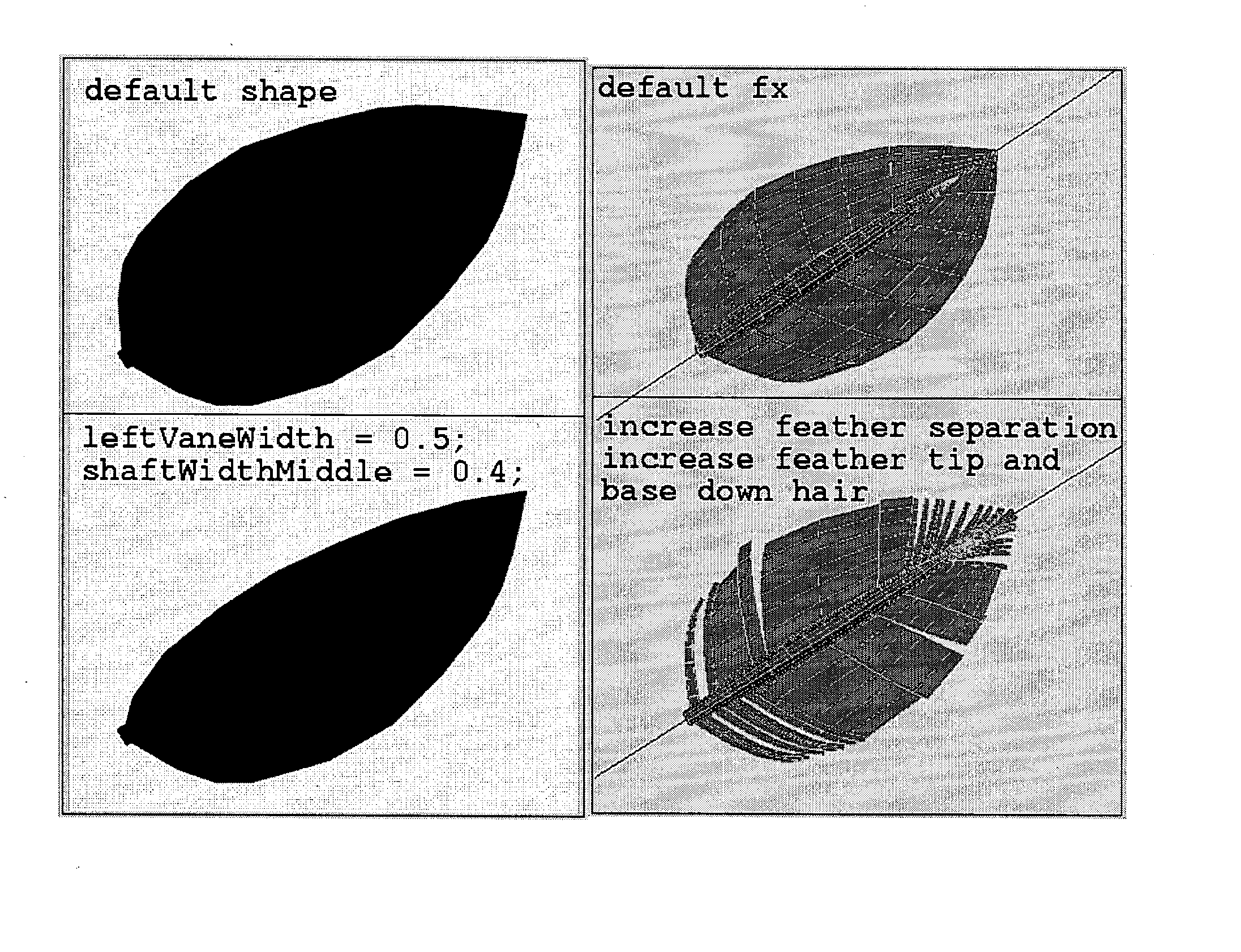

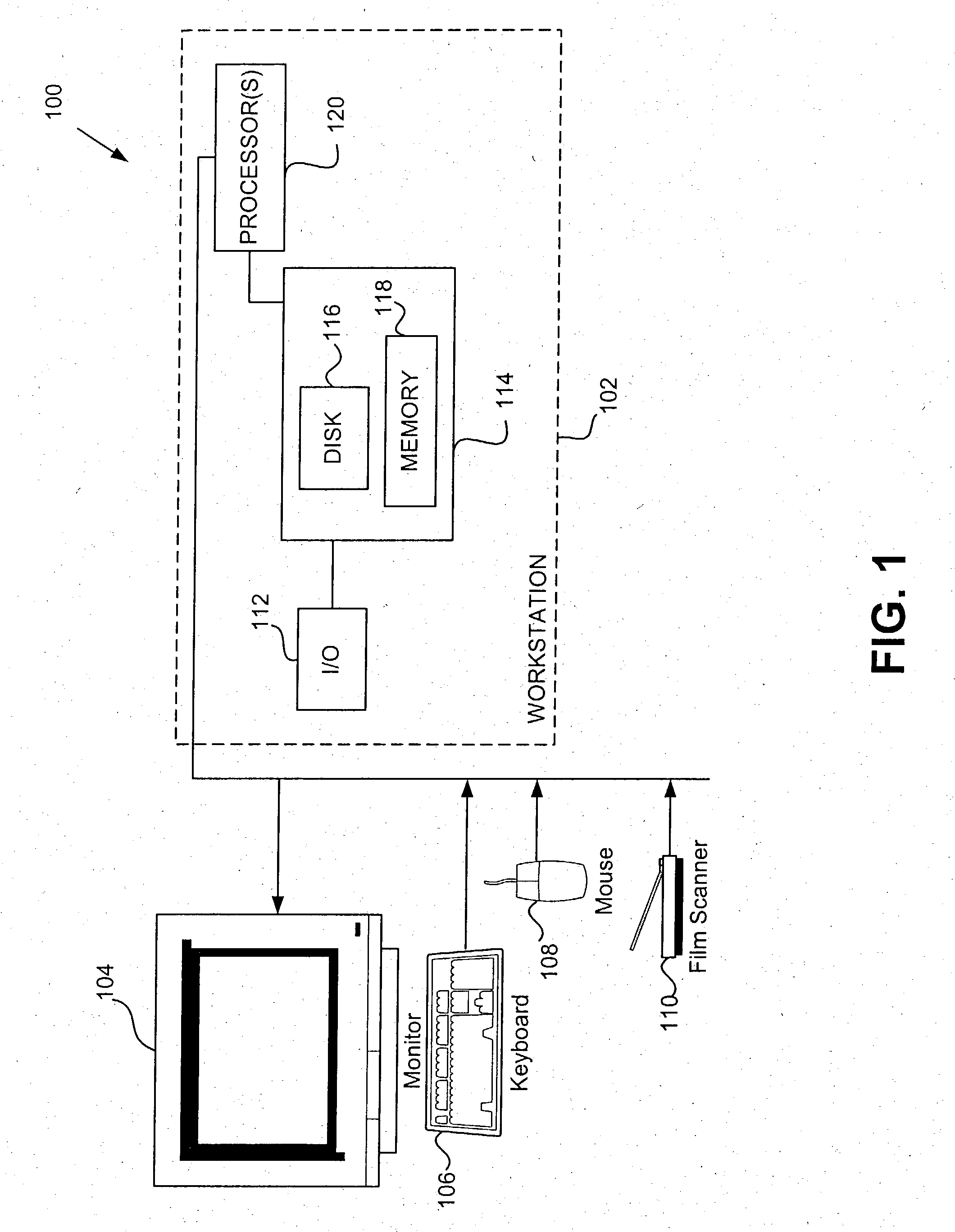

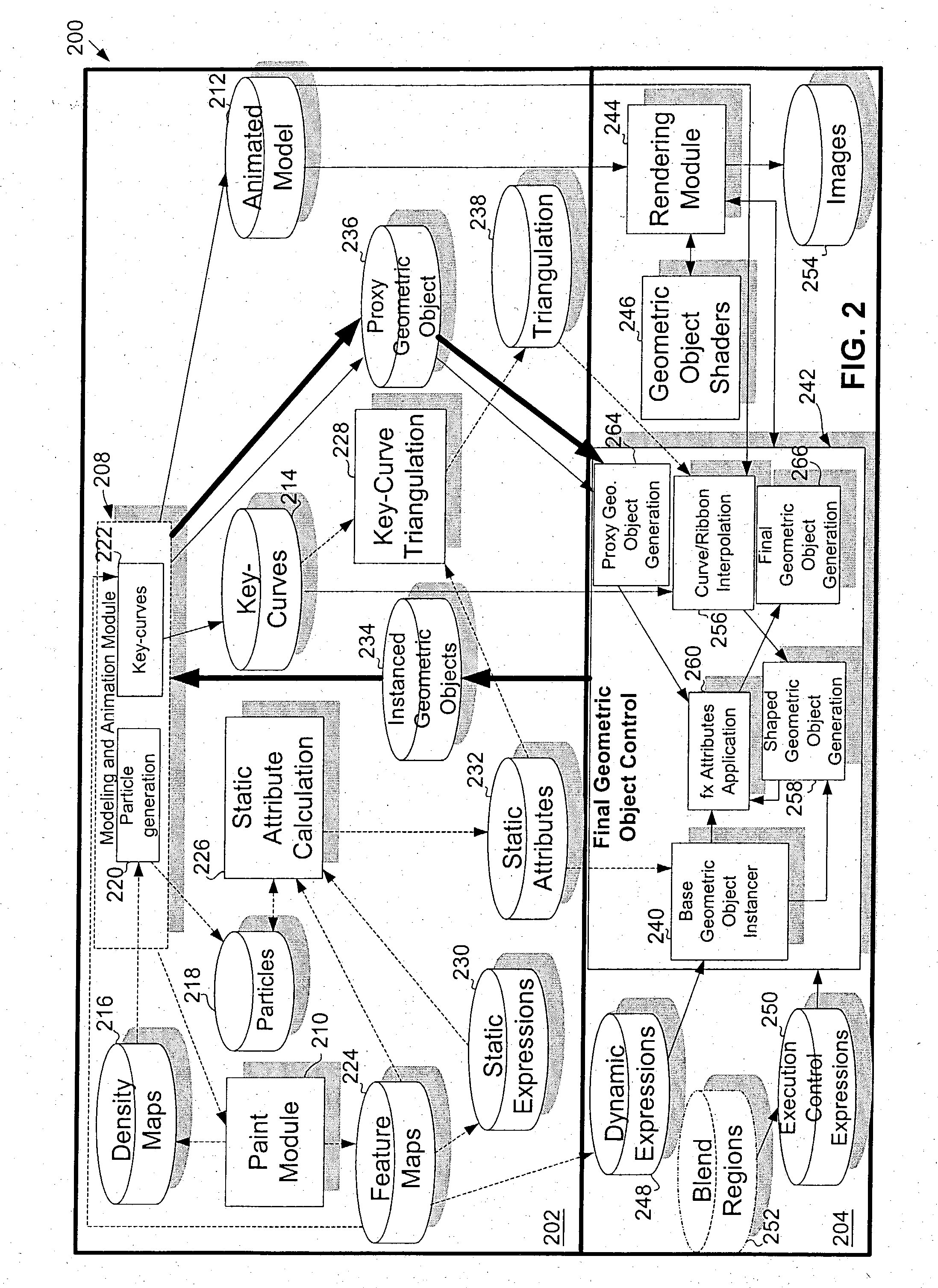

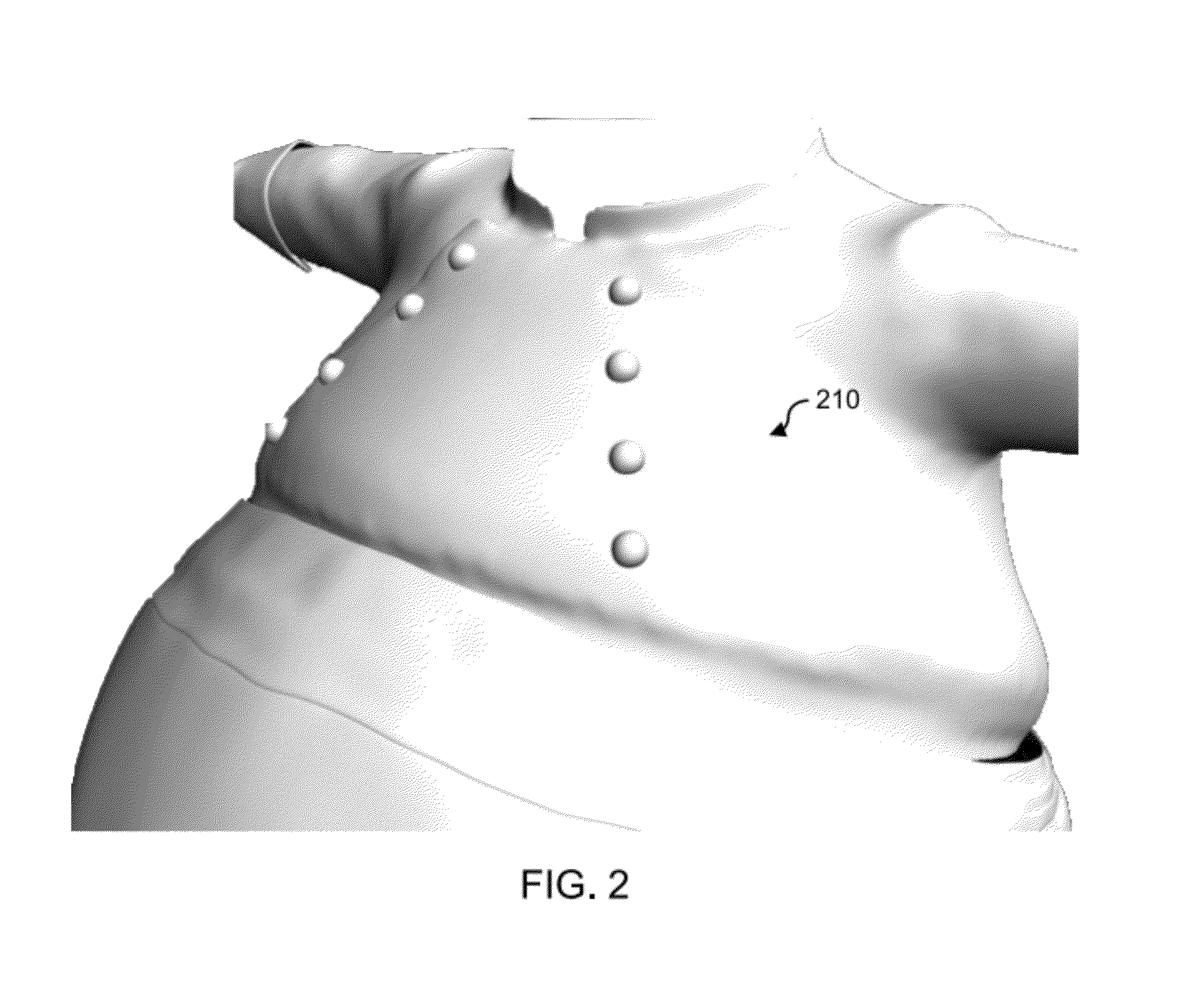

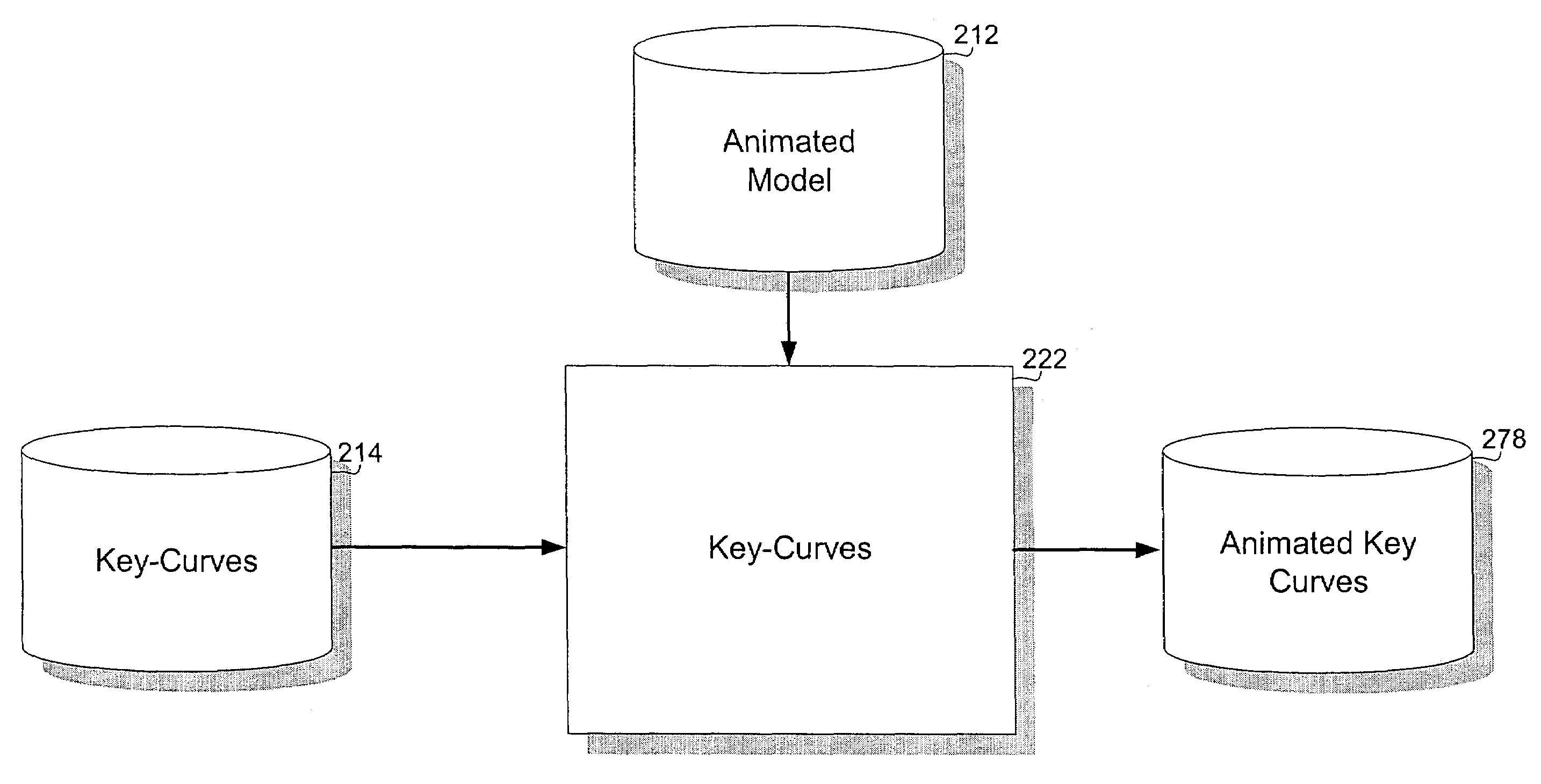

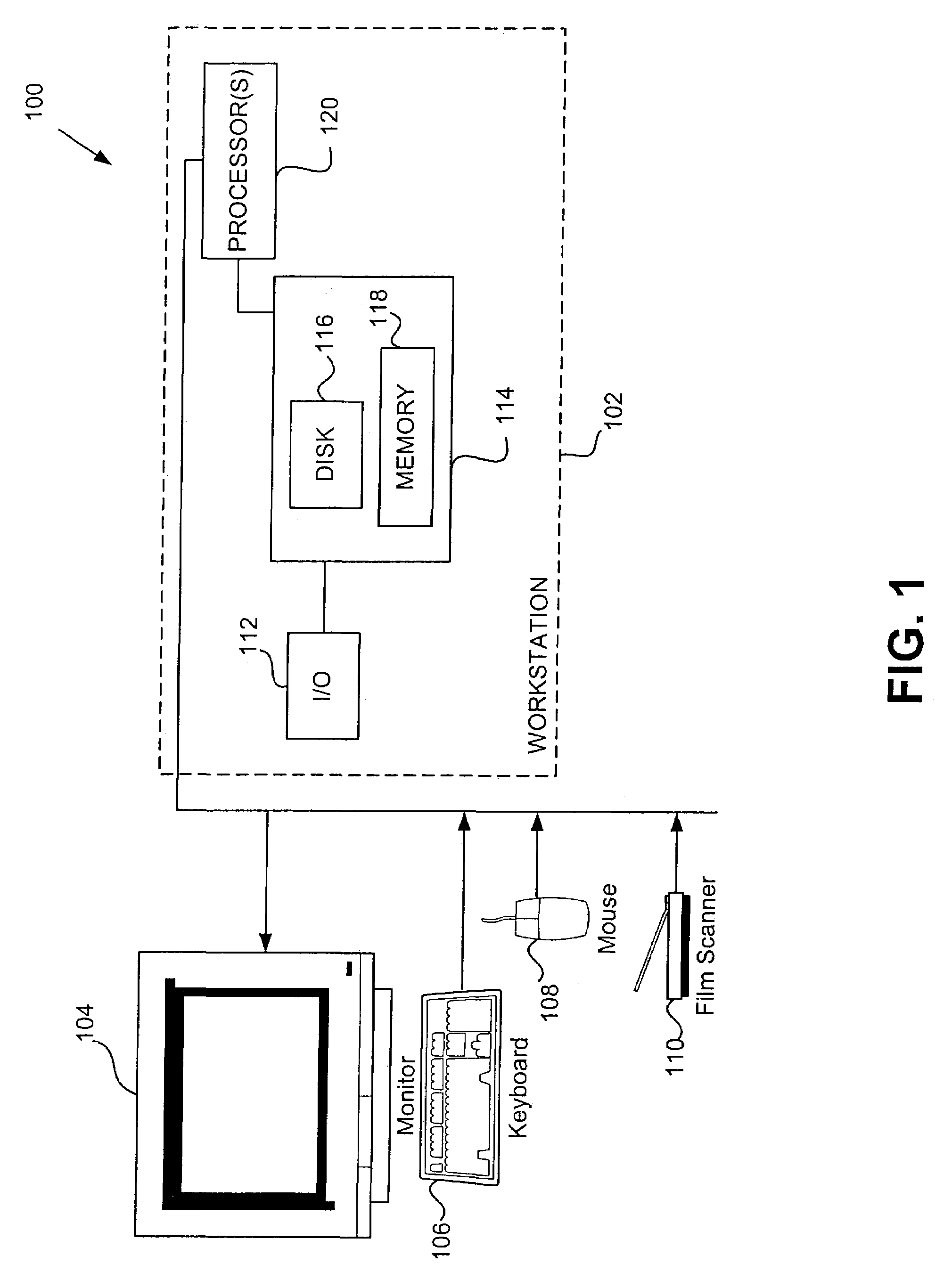

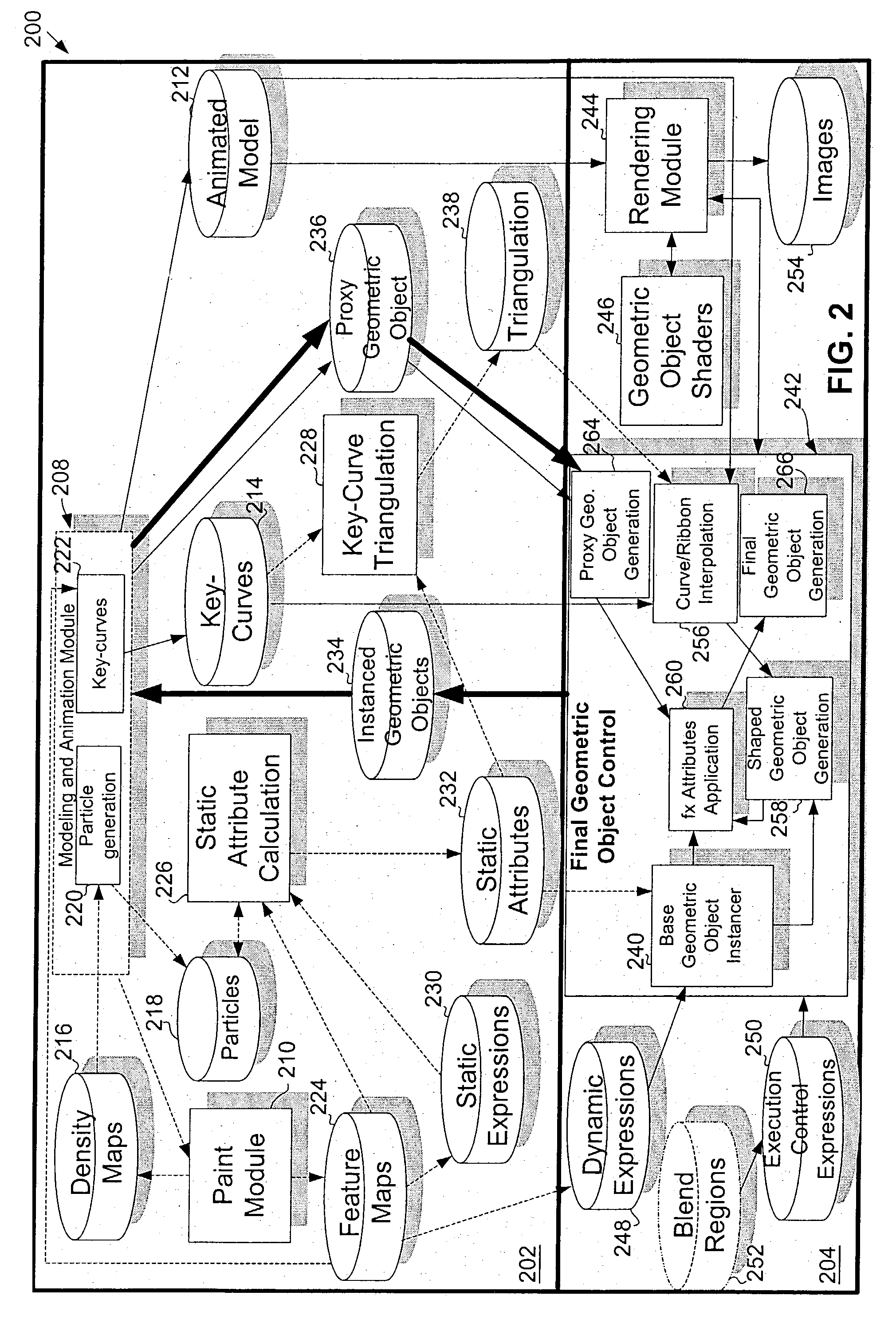

System and process for digital generation, placement, animation and display of feathers and other surface-attached geometry for computer generated imagery

Status: Active | US20030179203A1 | Tags: Flexible and robust and efficient and easy to use; Character and pattern recognition; Cathode-ray tube indicators; Computer generation; Computer-generated imagery

A system and process for digitally representing a plurality of surface-attached geometric objects on a model. The system and process comprise generating a plurality of particles and placing them on a surface of the model. A first plurality of curves is generated and placed at locations on the model, and characteristics are defined for these curves. A second plurality of curves is interpolated from the first plurality of curves at the locations of the particles. Finally, a plurality of surface-attached geometric objects is generated that replaces all of the particles and the second plurality of curves.

Owner:SONY TOKYO JAPAN & SONY PICTURES ENTERTAINMENT
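The interpolation step can be illustrated by blending guide-curve shapes at each particle's root. The abstract does not name the interpolation scheme; inverse-distance weighting is used here as a stand-in, and the data layout (curves stored as offsets from their roots) is an assumption. The final step, replacing particles and curves with feather geometry, is not shown.

```python
def interpolate_curves(guides, particles, power=2.0):
    """Blend guide-curve shapes at each particle root by inverse-distance weighting.

    guides: list of (root, offsets); root is an (x, y, z) point on the surface and
        offsets lists (dx, dy, dz) control-point offsets from that root. Every
        guide must have the same number of control points.
    particles: list of (x, y, z) roots where new curves are needed.
    Returns, per particle, a list of absolute (x, y, z) control points.
    """
    n_points = len(guides[0][1])
    curves = []
    for p in particles:
        weighted = []
        blended = None
        for root, offsets in guides:
            d2 = sum((a - b) ** 2 for a, b in zip(p, root))
            if d2 == 0.0:  # particle sits exactly on a guide root: copy that guide
                blended = offsets
                break
            weighted.append((d2 ** (-power / 2.0), offsets))  # weight = 1 / distance**power
        if blended is None:
            total = sum(w for w, _ in weighted)
            blended = [
                tuple(sum(w * offs[i][k] for w, offs in weighted) / total for k in range(3))
                for i in range(n_points)
            ]
        # Re-root the blended shape at the particle position.
        curves.append([tuple(pc + oc for pc, oc in zip(p, off)) for off in blended])
    return curves
```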

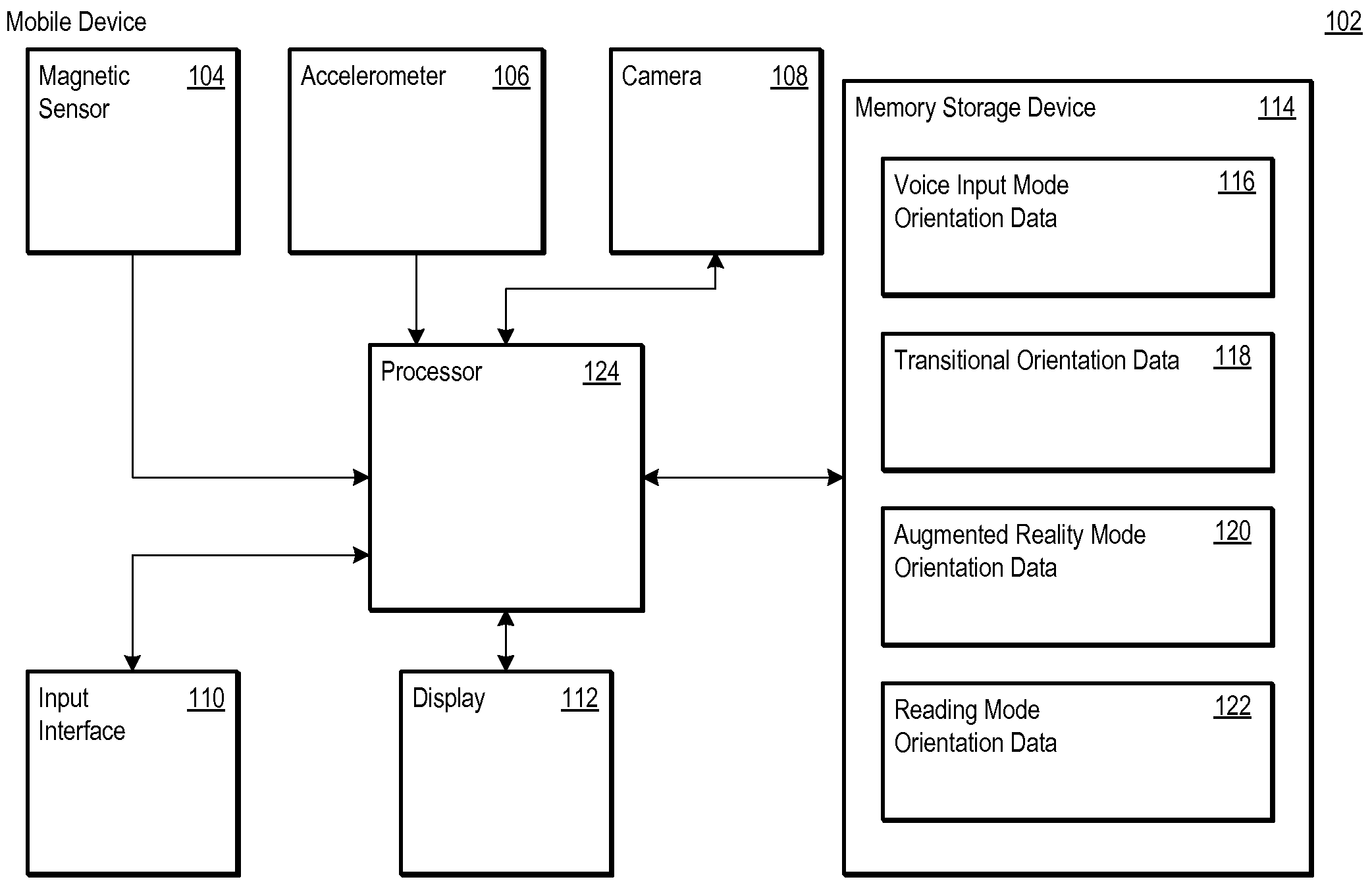

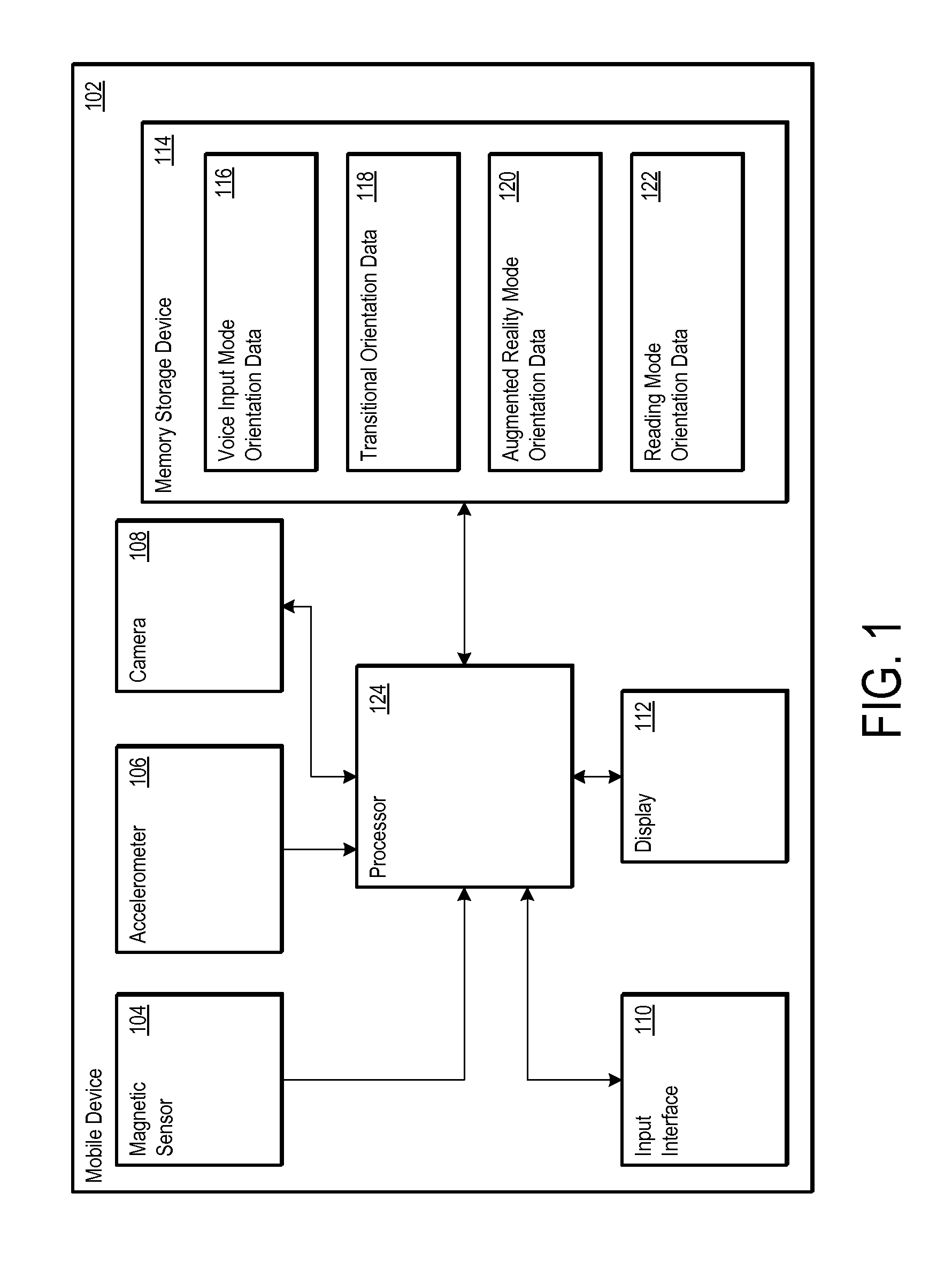

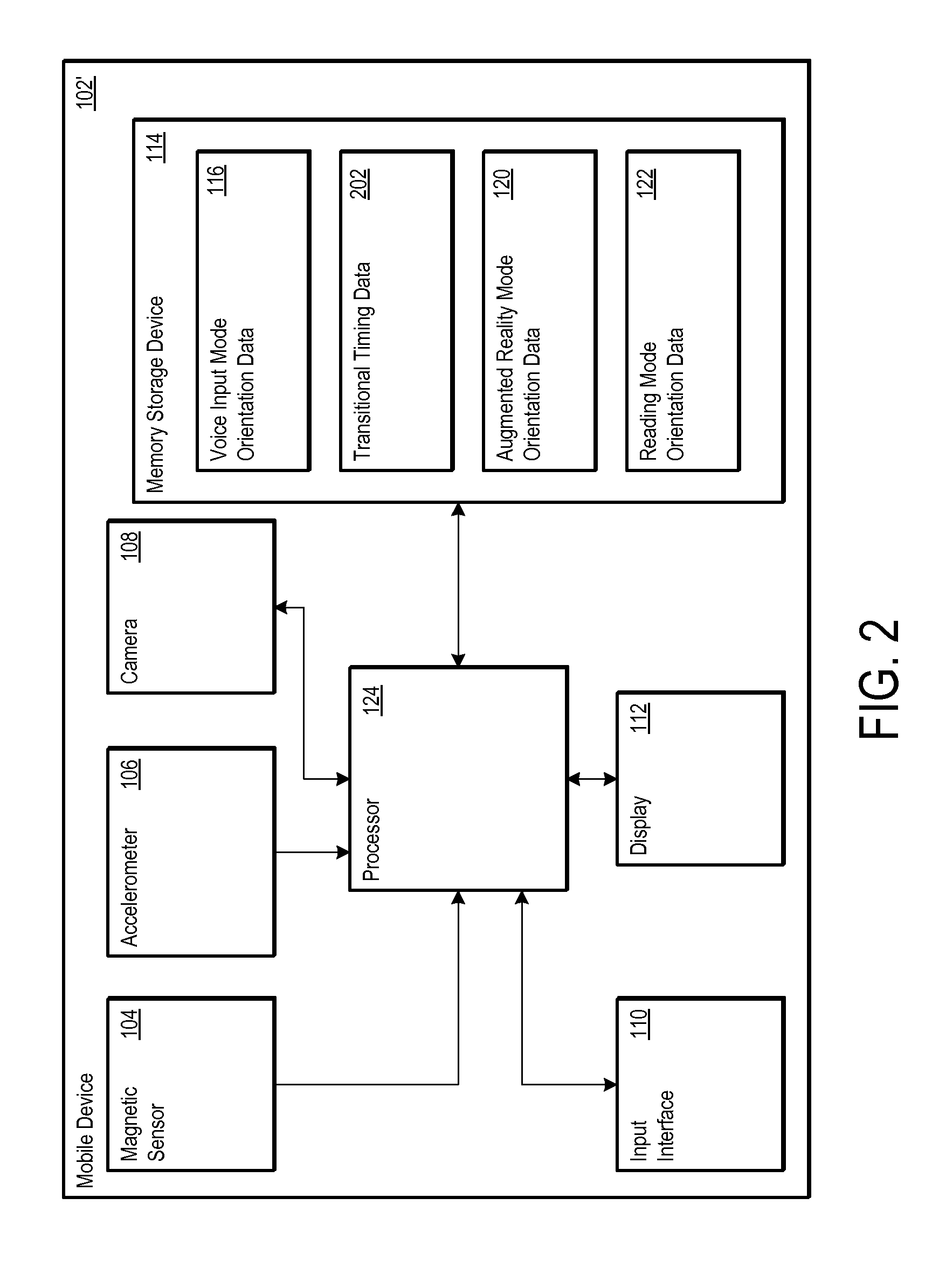

Switching between a first operational mode and a second operational mode using a natural motion gesture

A mobile device is operative to change from a first operational mode to a second or third operational mode based on a user's natural motion gesture. The first operational mode may include a voice input mode in which a user provides a voice input to the mobile device. After providing the voice input to the mobile device, the user then makes a natural motion gesture and a determination is made as to whether the natural motion gesture places the mobile device in the second or third operational mode. The second operational mode includes an augmented reality display mode in which the mobile device displays images recorded from a camera overlaid with computer-generated images corresponding to results output in response to the voice input. The third operational mode includes a reading display mode in which the mobile device displays, without augmented reality, results output in response to the voice input.

Owner:GOOGLE LLC

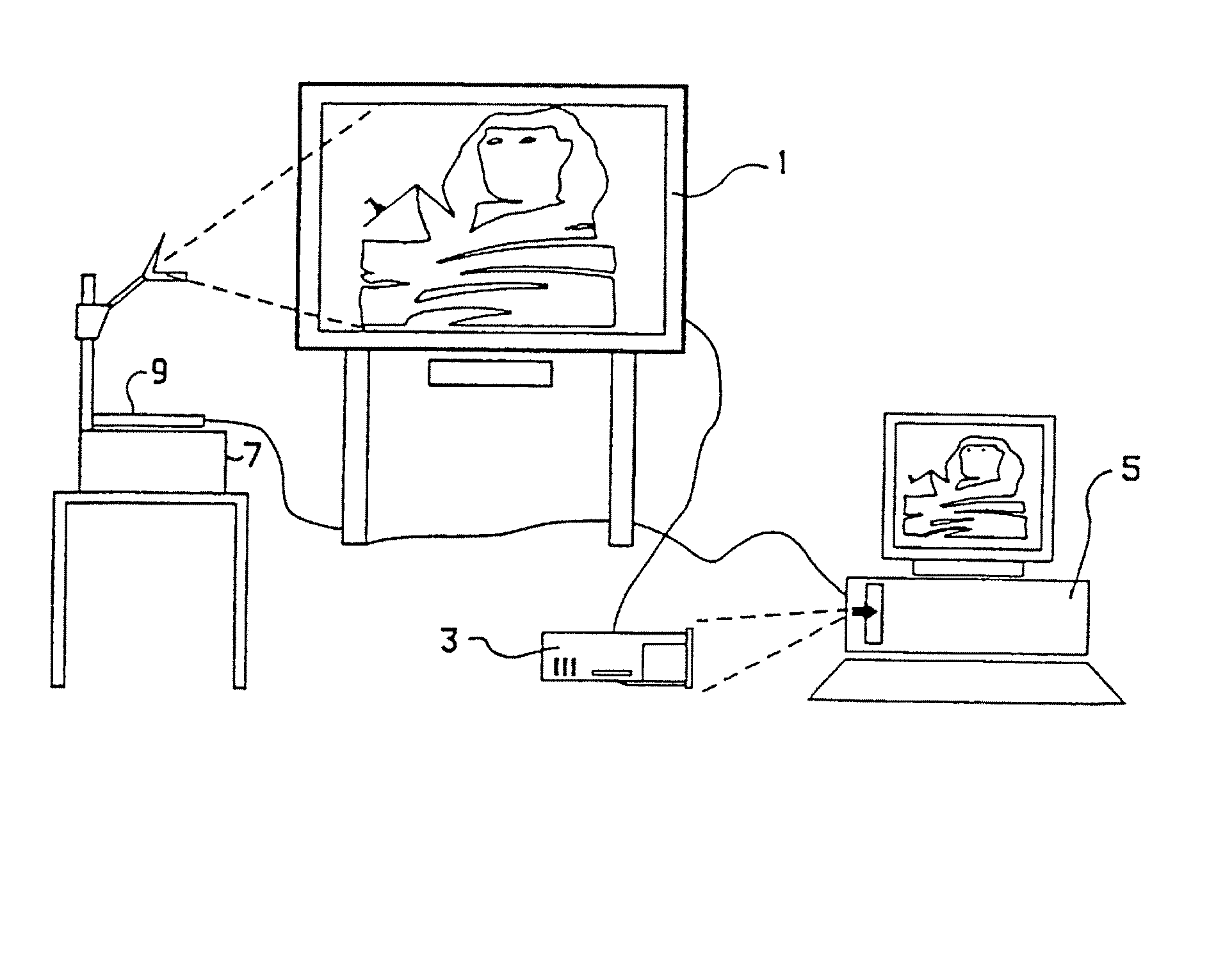

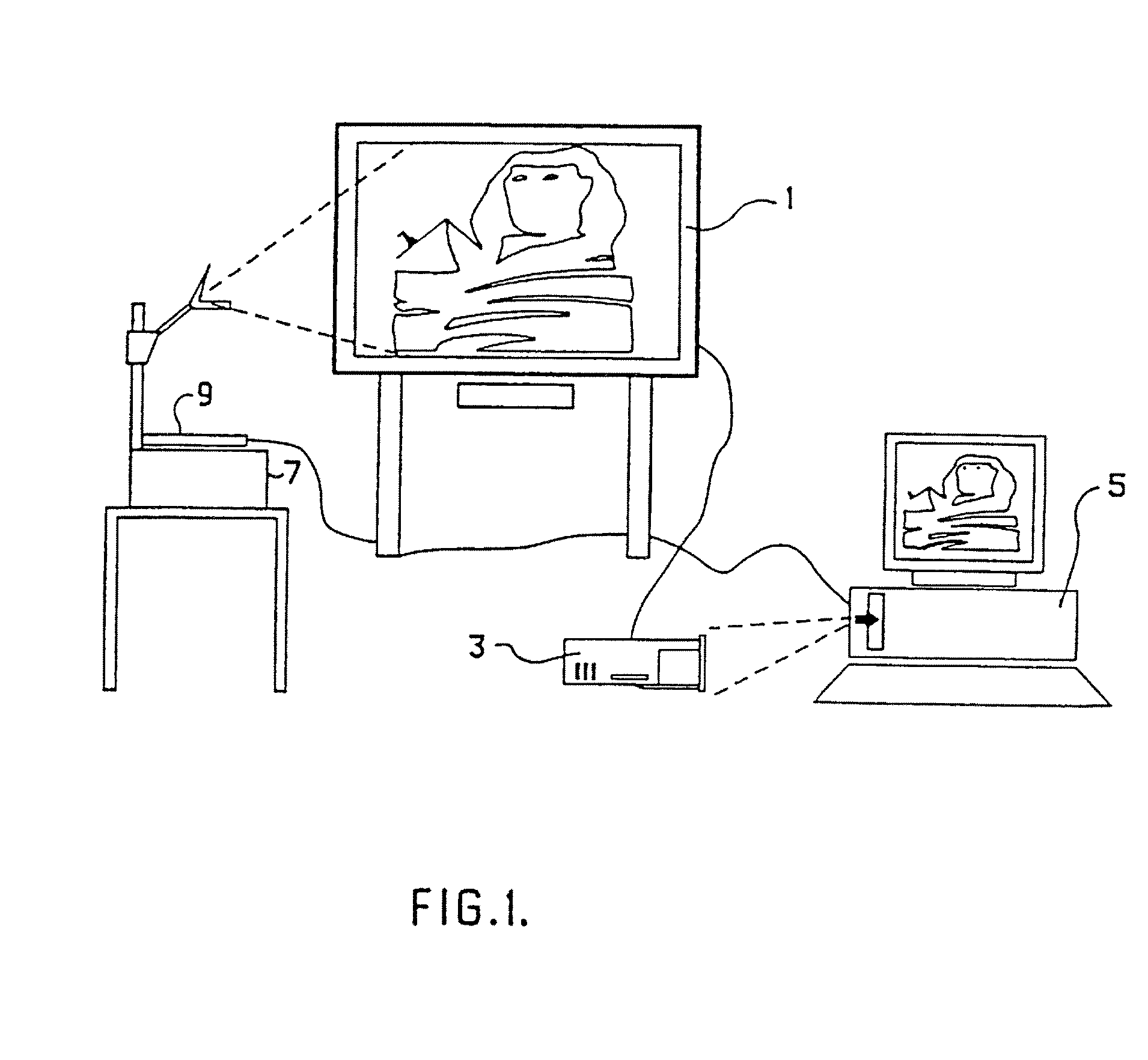

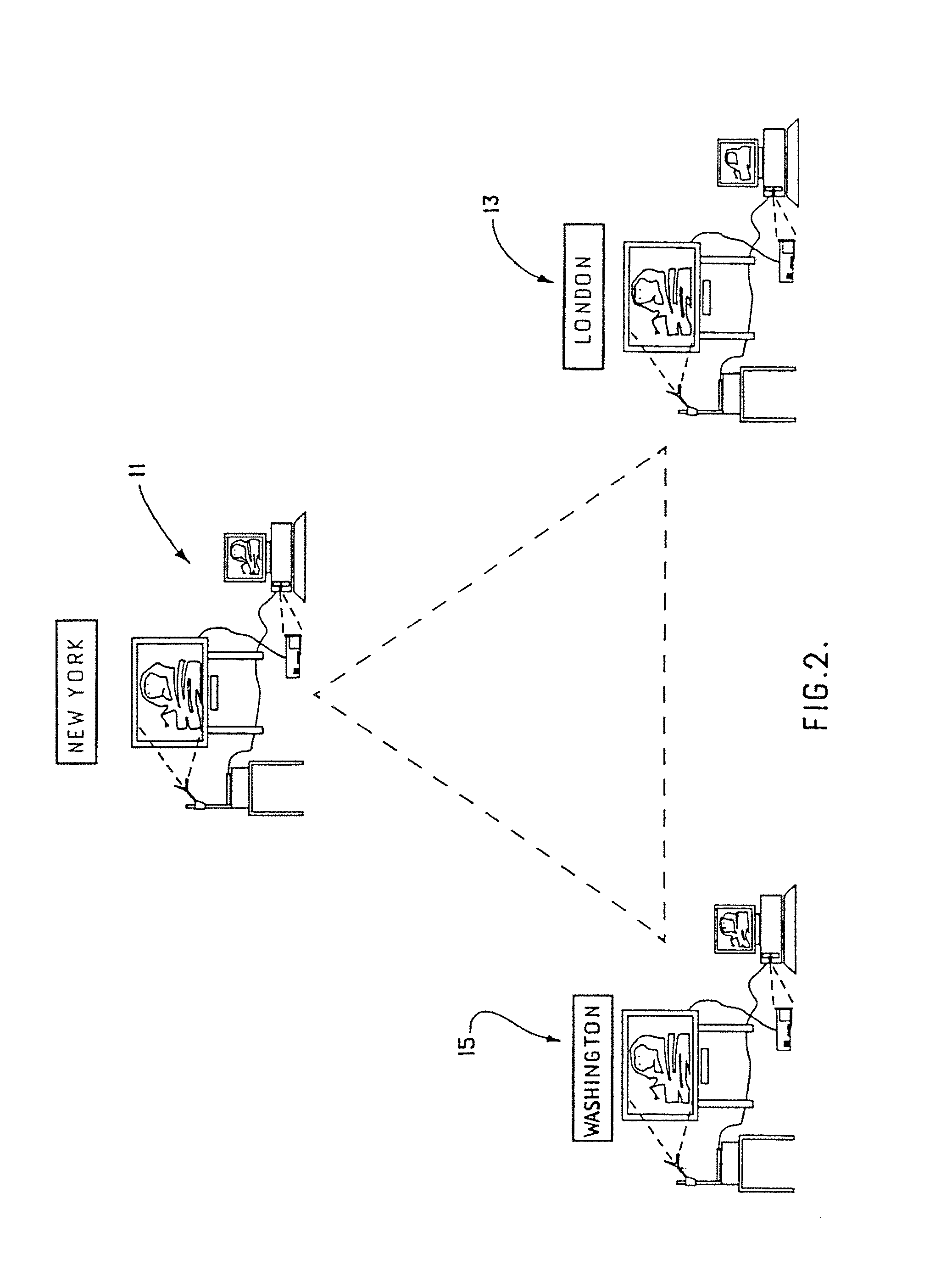

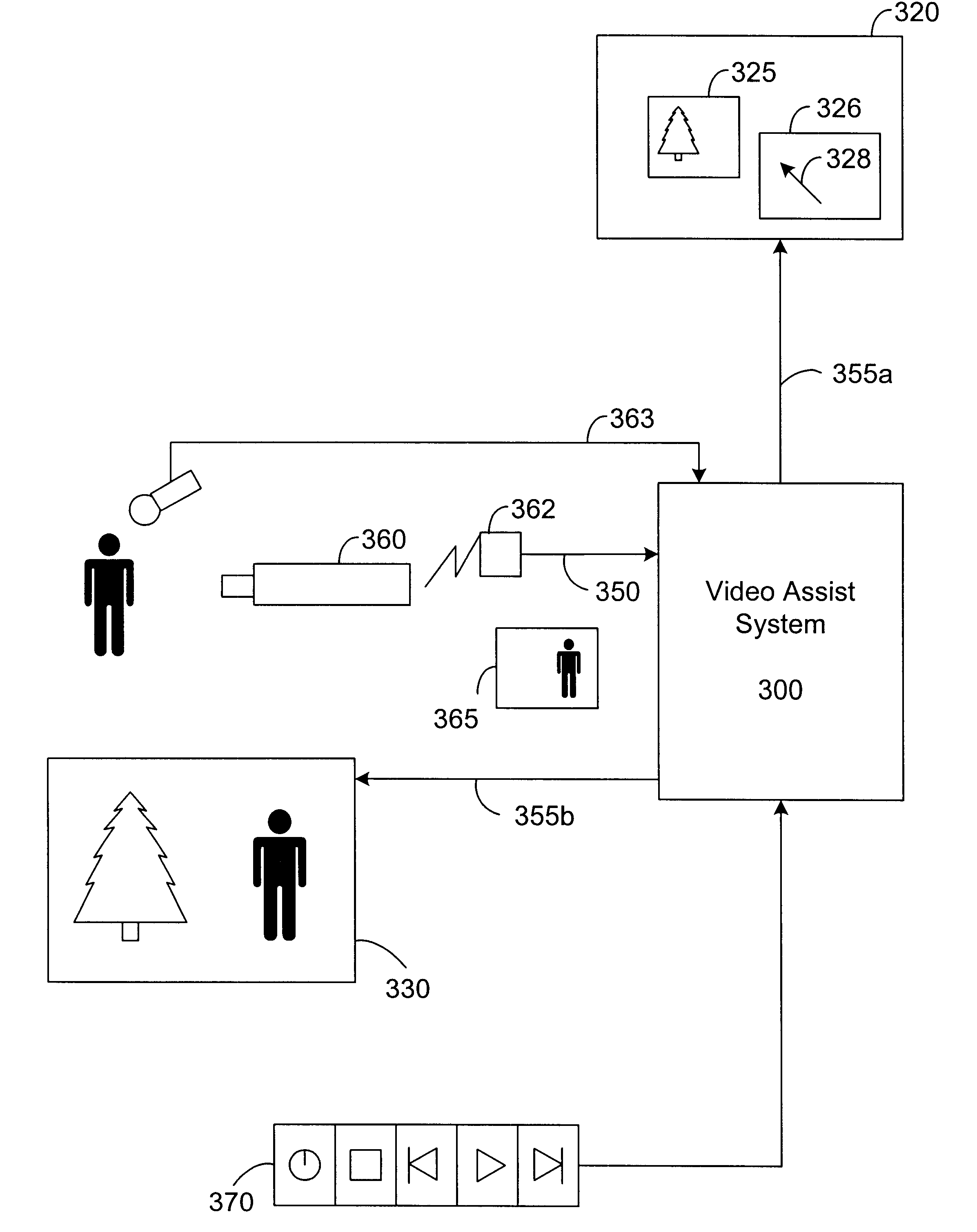

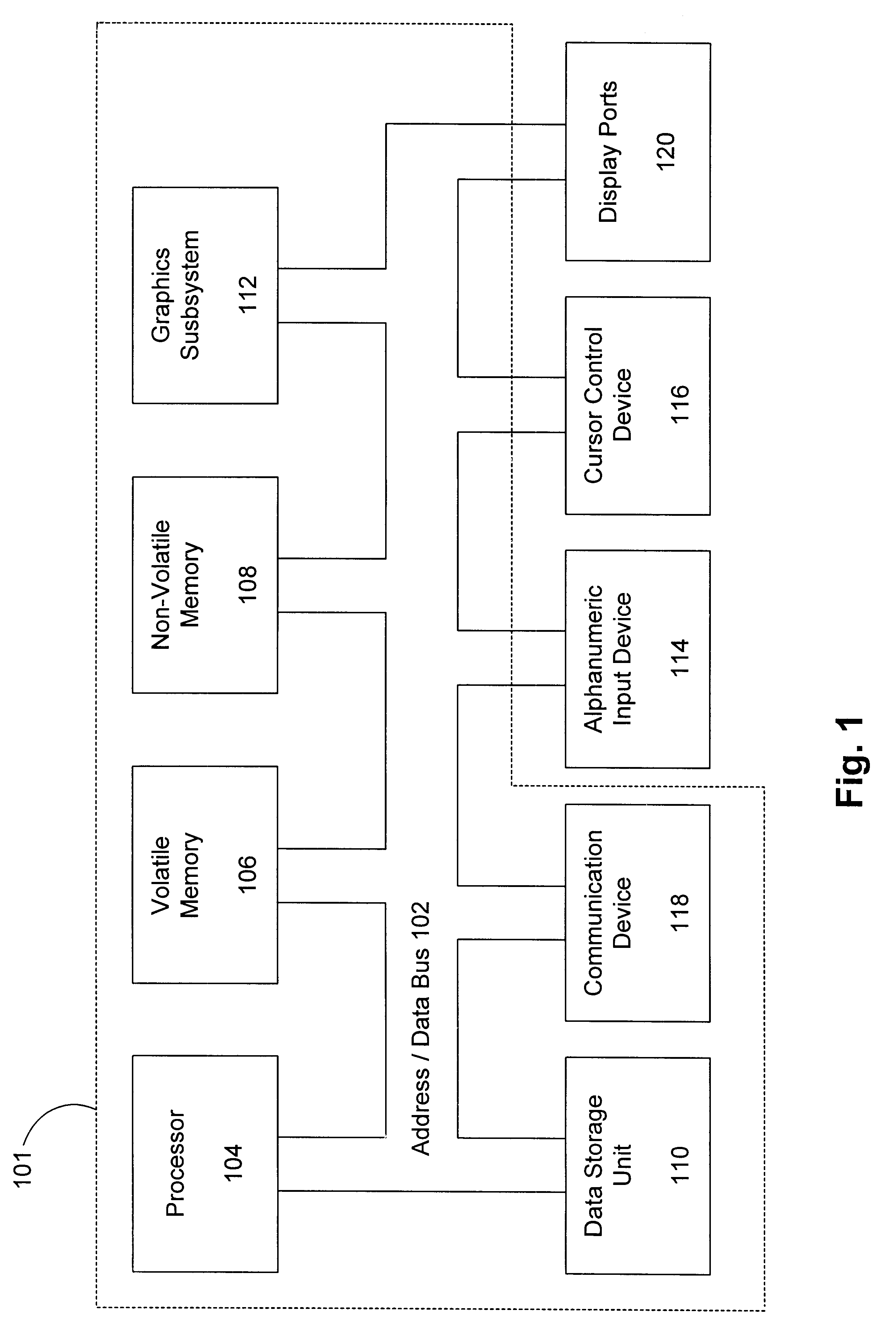

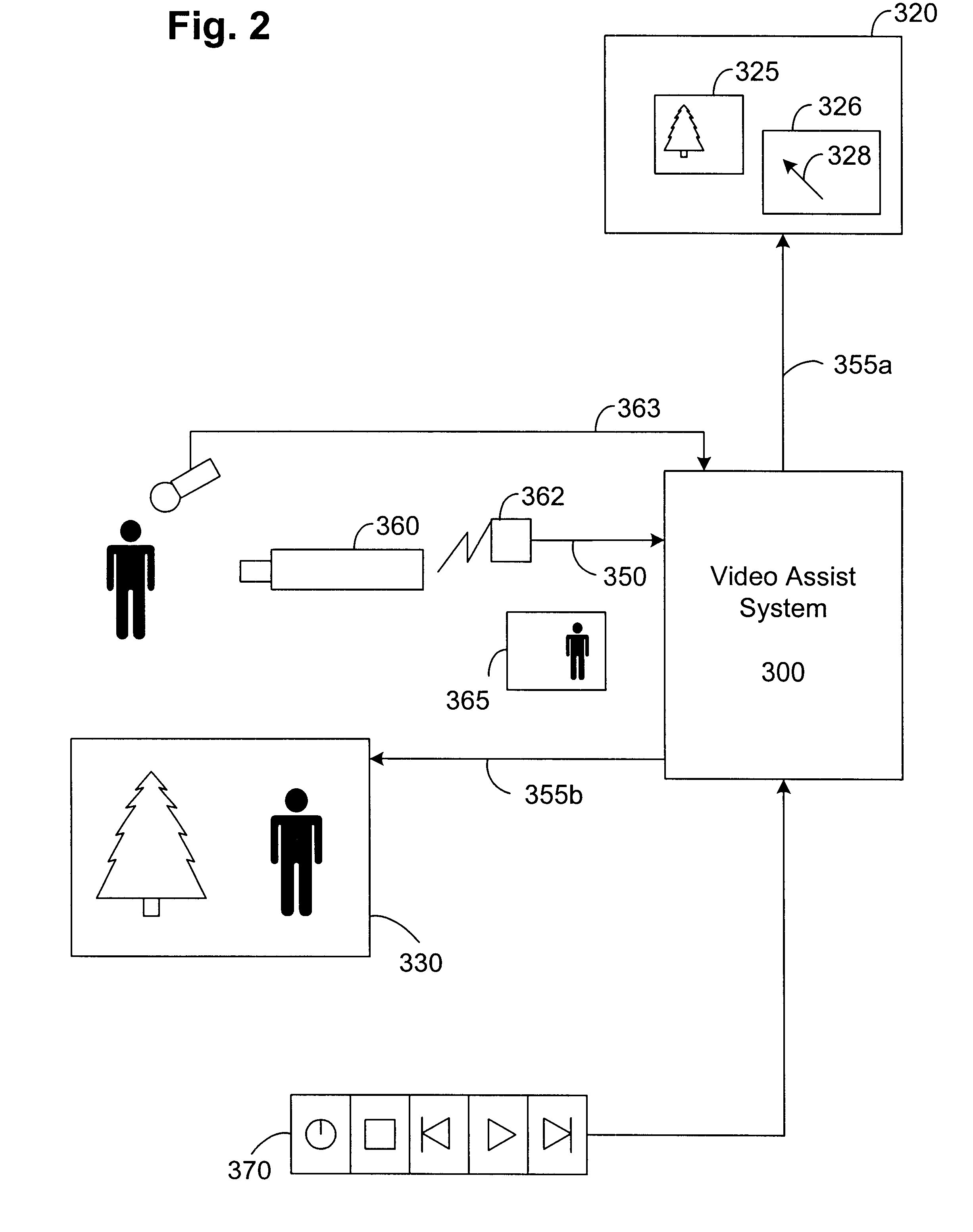

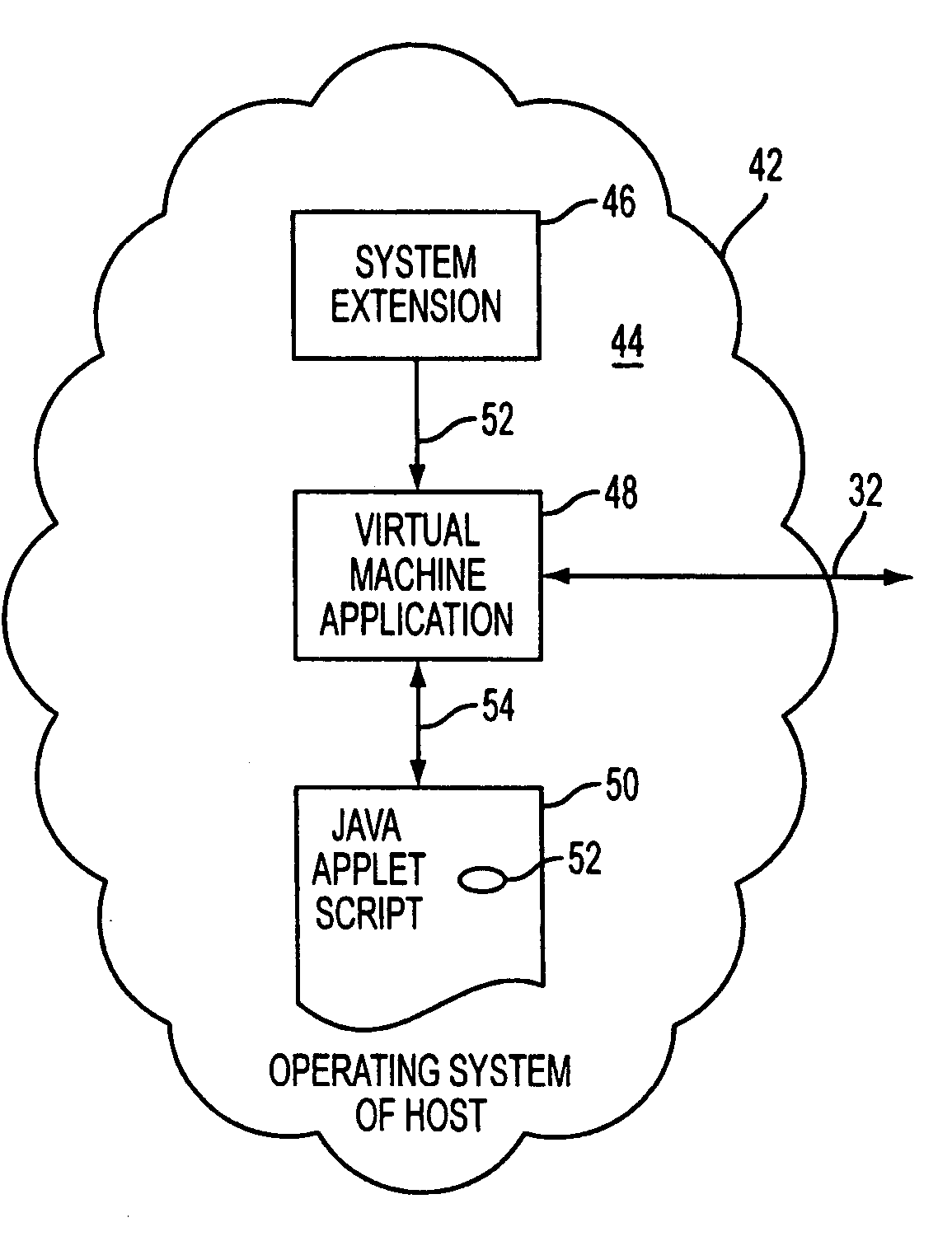

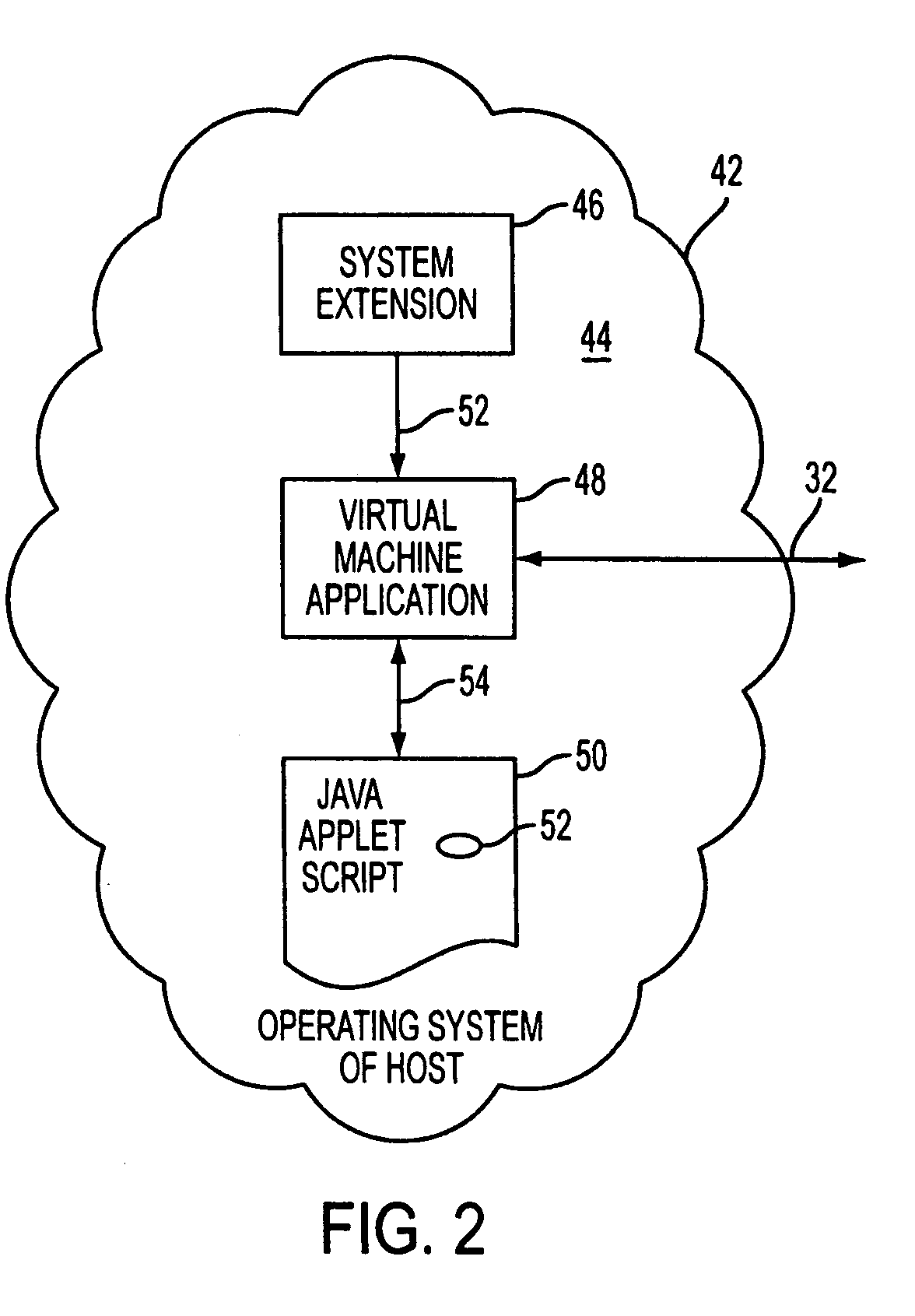

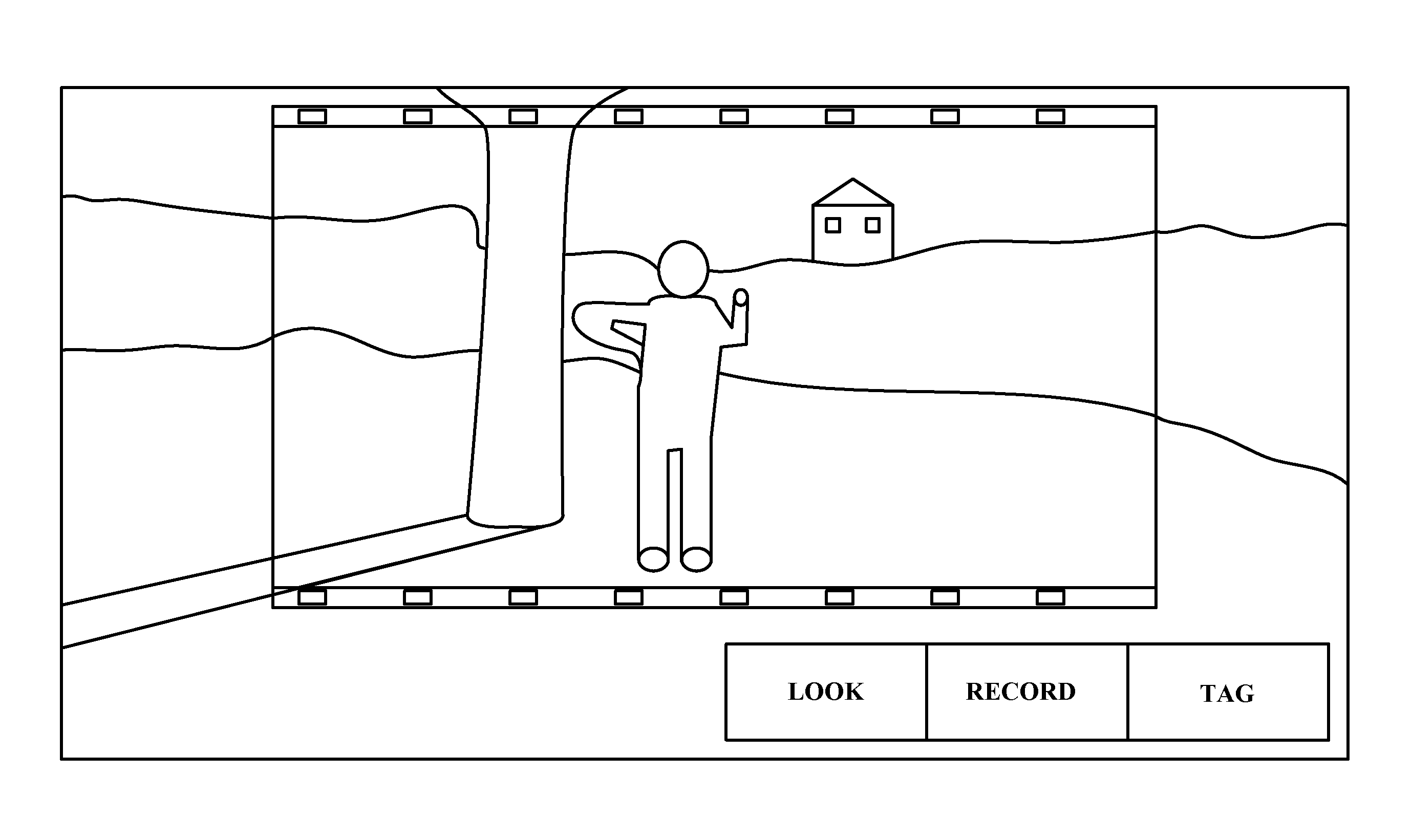

On-location video assistance system with computer generated imagery overlay

Status: Inactive | US6570581B1 | Tags: Television system details; Color television details; Computer graphics (images); Computerized system

A video playback system for assisting on-location film production. One embodiment includes a computer system capable of generating computer-generated imagery (CGI) and receiving a live video feed from a camera. The computer system is coupled to a first display screen that shows computer-generated images and a second display screen that shows the live video feed overlaid with computer-generated images. In one embodiment, a portion of the first display screen containing computer-generated images can be selected for overlaying on the live video feed. The video playback system can also store the live video feed and the images resulting from overlaying the computer-generated images on it. The invention allows live/CGI composites to be played back and viewed on the director's monitor for review while on location.

Owner:MICROSOFT TECH LICENSING LLC

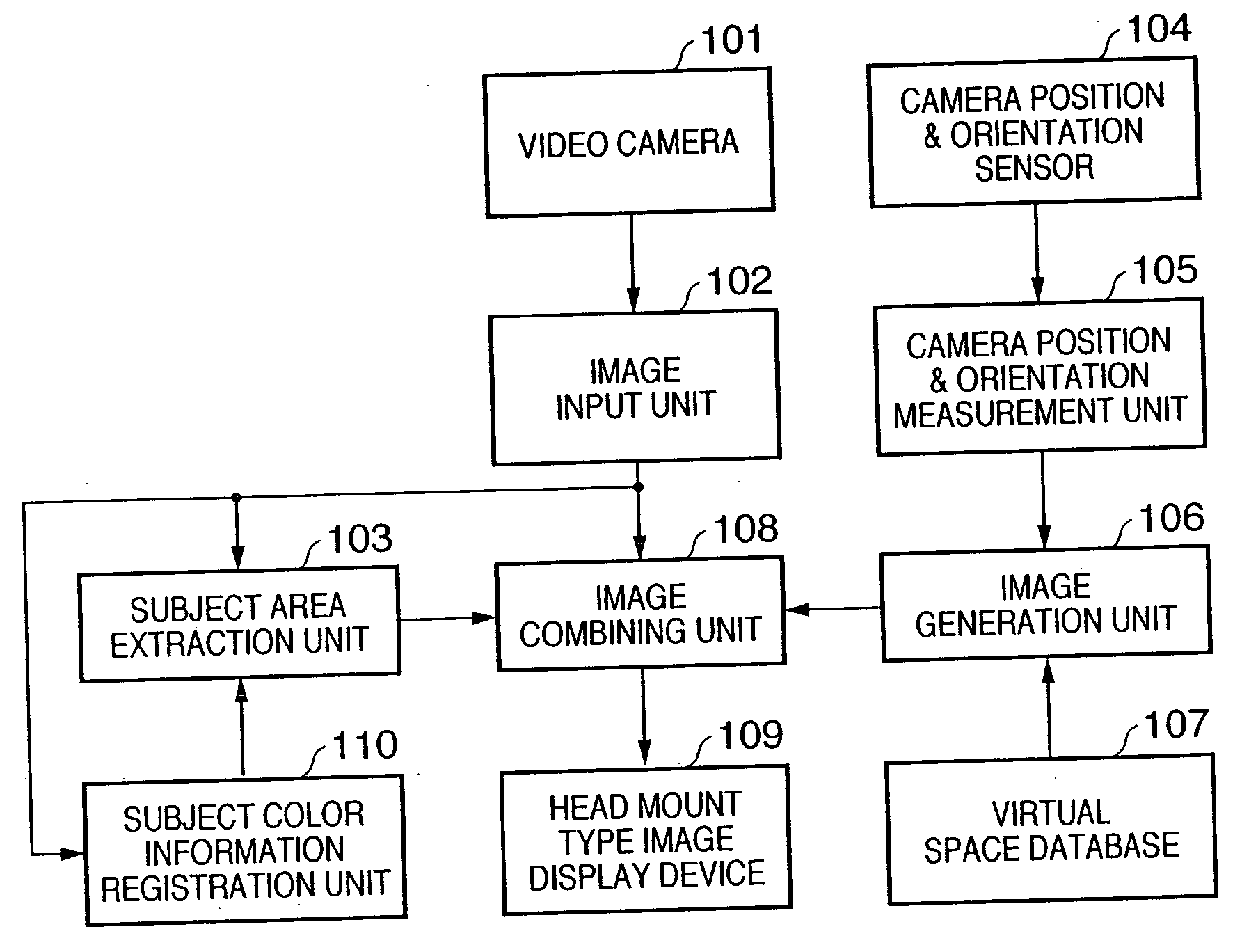

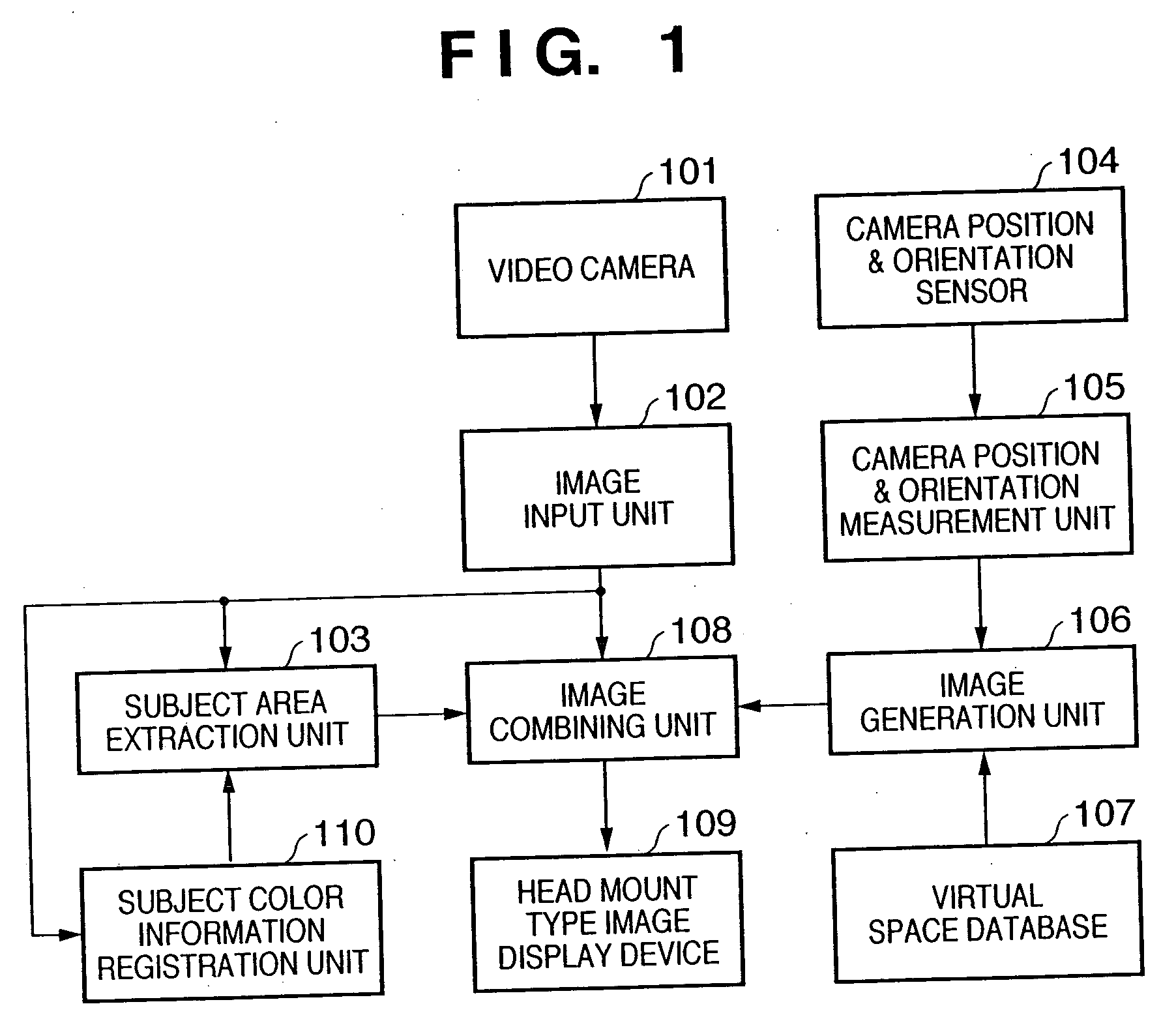

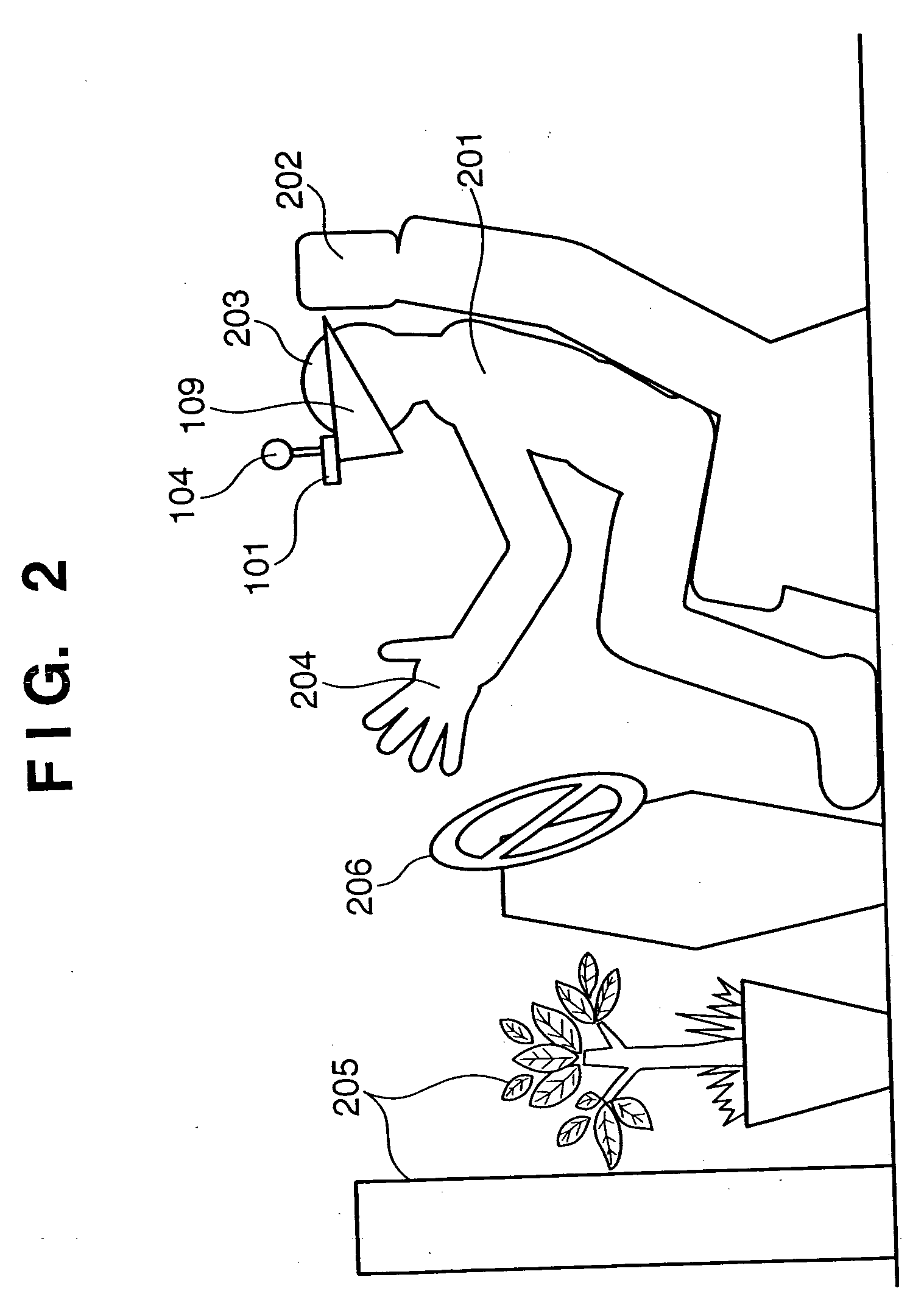

Correction of subject area detection information, and image combining apparatus and method using the correction

Status: Inactive | US20050069223A1 | Tags: Cancel noise; Easy to operate; Image enhancement; Image analysis; Computer graphics (images); Combined method

An image combining method that combines an image obtained by sensing real space with a computer-generated image and displays the combined image. Mask area color information is determined from a first real image that includes the object to be masked and a second real image that does not, and this color information is registered. The mask area is then extracted from the real image using the registered mask area color information, and the real image and the computer-generated image are combined using the mask area.

Owner:CANON KK
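A minimal Python sketch of the registration, extraction and combining steps, assuming RGB tuples, a coarse colour quantization for the registered colour set, and the convention that the real image stays visible inside the mask area (for example, a user's hand in front of a virtual object). The thresholds and helper names are illustrative assumptions, not taken from the abstract.

```python
def quantize(color, step=32):
    """Coarsen an (r, g, b) colour so nearby shades share one registry entry."""
    return tuple(c // step for c in color)


def register_mask_colors(with_object, without_object, diff_threshold=40, step=32):
    """Register colours of pixels that change when the masked object is present."""
    colors = set()
    for row_a, row_b in zip(with_object, without_object):
        for a, b in zip(row_a, row_b):
            if sum(abs(x - y) for x, y in zip(a, b)) > diff_threshold:
                colors.add(quantize(a, step))
    return colors


def extract_mask(image, colors, step=32):
    """True where a pixel's quantized colour is in the registered set."""
    return [[quantize(px, step) in colors for px in row] for row in image]


def combine(real, cgi, mask):
    """Keep the real pixel inside the mask or where no CGI was drawn (None)."""
    return [
        [r if (m or c is None) else c for r, c, m in zip(real_row, cgi_row, mask_row)]
        for real_row, cgi_row, mask_row in zip(real, cgi, mask)
    ]
```

Because extraction is purely colour-based, background pixels that happen to share a registered colour are also masked; that limitation is inherent to this simplified sketch.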

Augmented reality system for supplementing and blending data

A system, method, and computer program product for automatically combining computer-generated imagery with real-world imagery in a portable electronic device by retrieving, manipulating, and sharing relevant stored videos, preferably in real time. A video is captured with a hand-held device and stored. Metadata including the camera's physical location and orientation is appended to a data stream, along with user input. The server analyzes the data stream and further annotates the metadata, producing a searchable library of videos and metadata. Later, when a camera user generates a new data stream, the linked server analyzes it, identifies relevant material from the library, retrieves the material and tagged information, adjusts it for proper orientation, then renders and superimposes it onto the current camera view so the user views an augmented reality.

Owner:SONY CORP

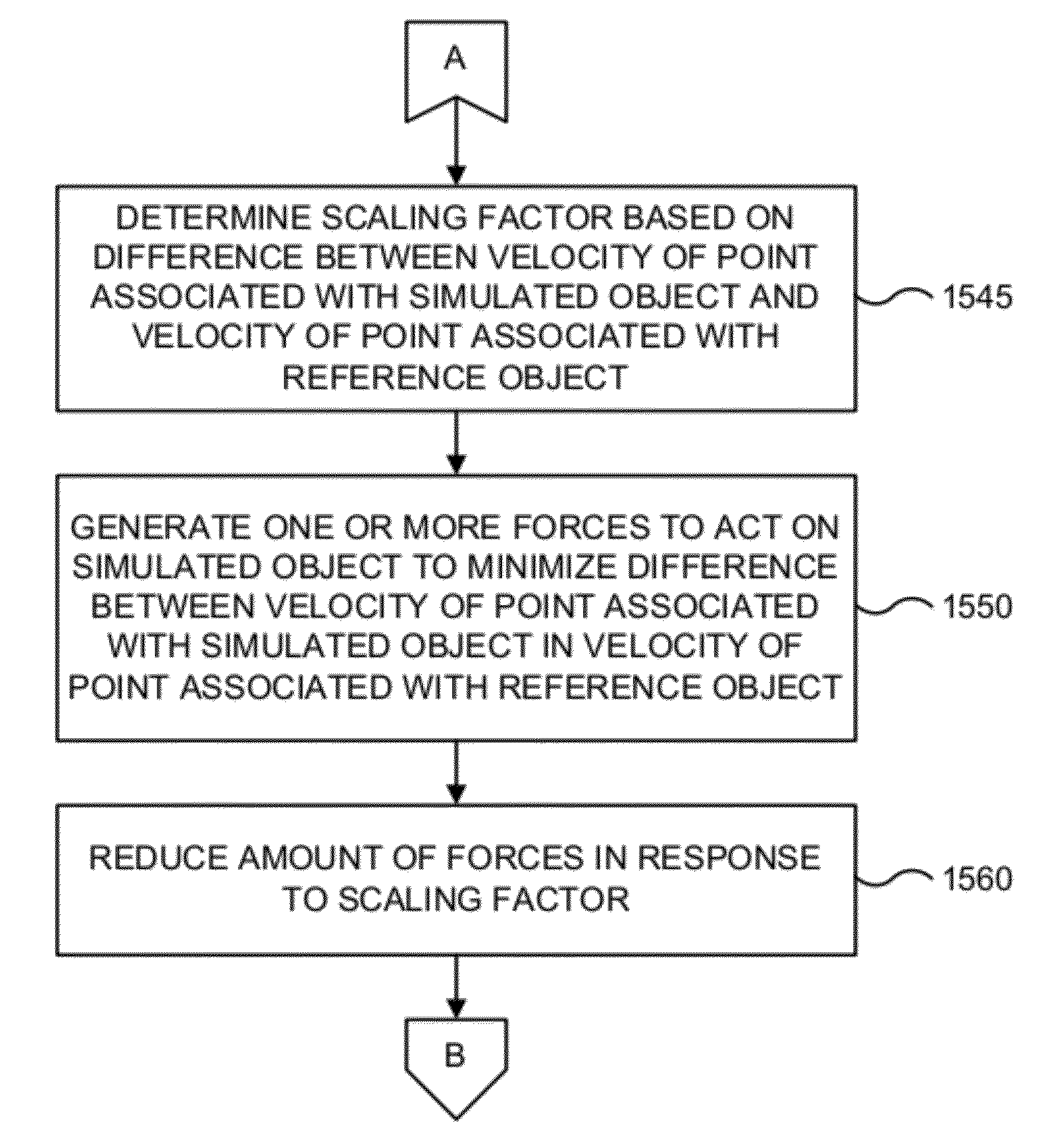

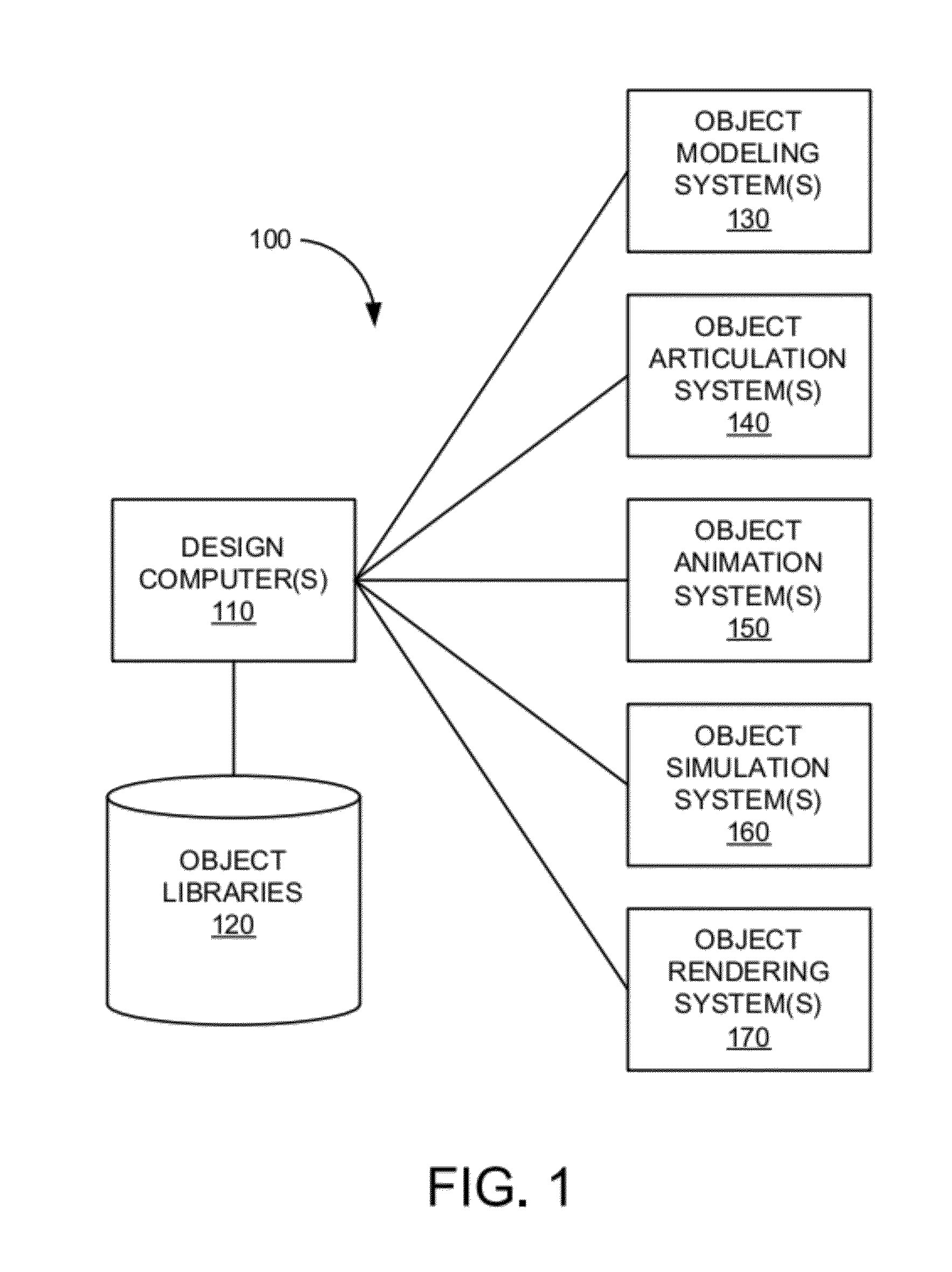

Shape preservation of simulated objects in computer animation

Status: Active | US8269778B1 | Tags: Keep shape; Input/output for user-computer interaction; Computation using non-denominational number representation; Computer animation; Computer-aided

Owner:PIXAR ANIMATION

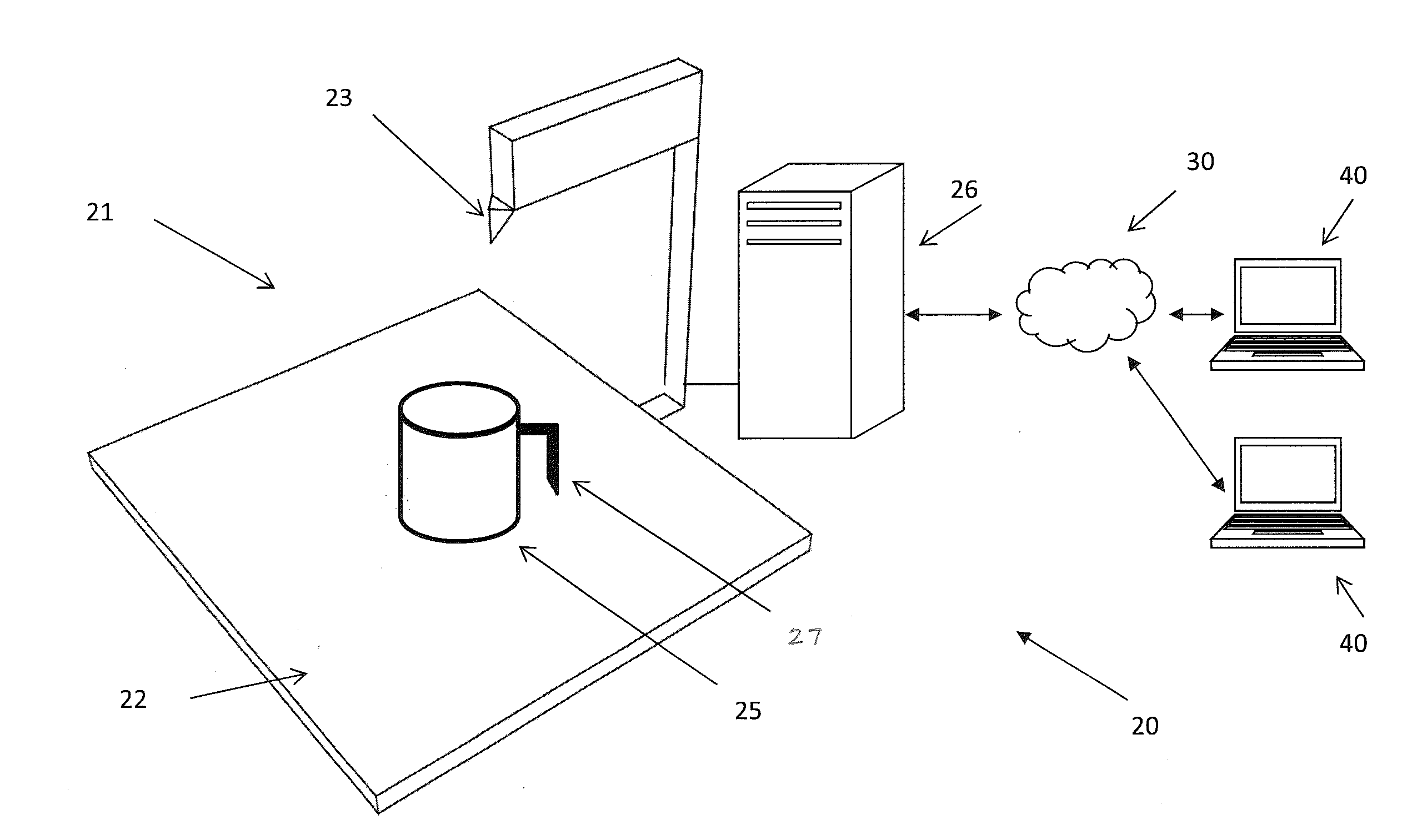

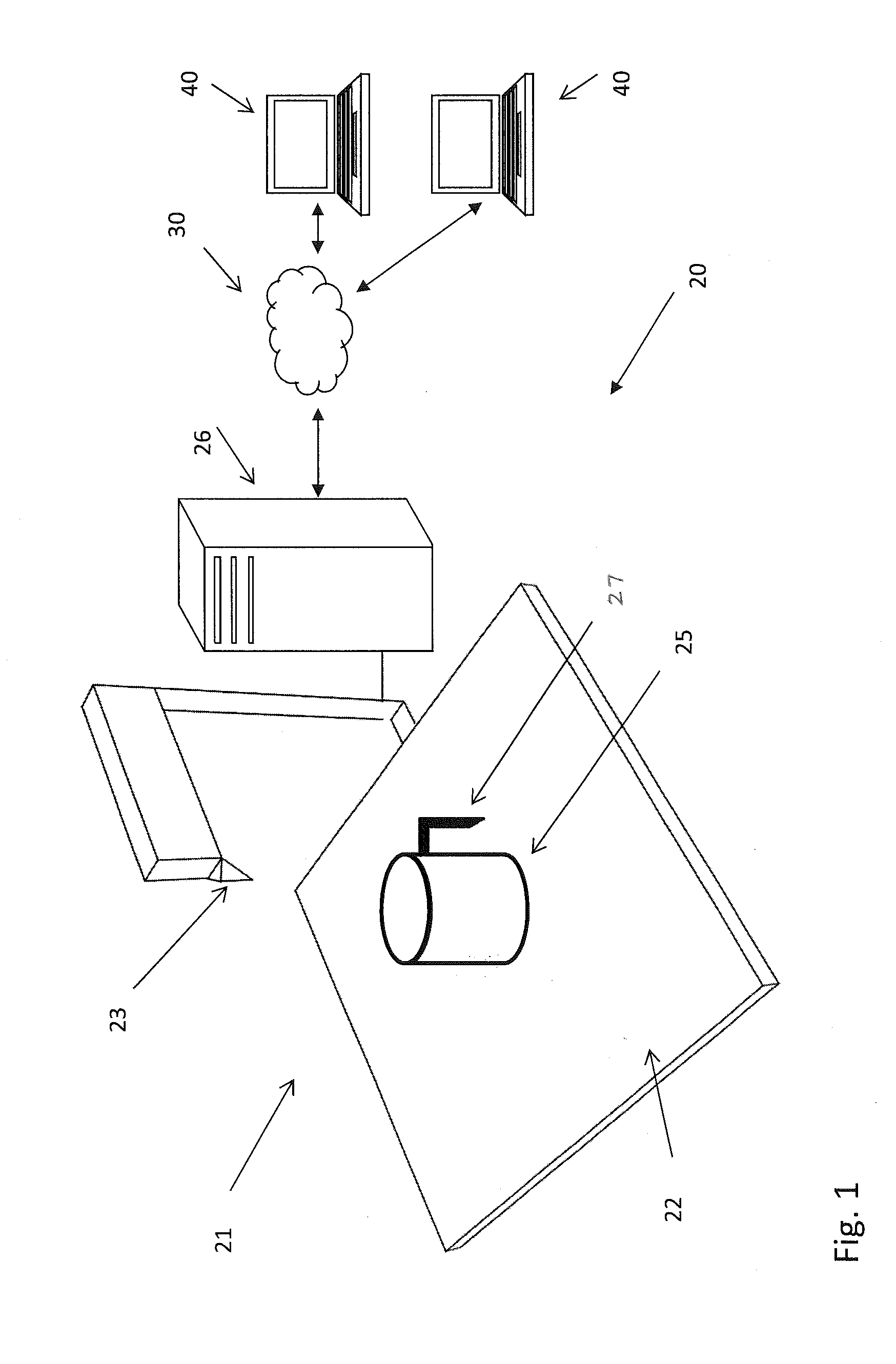

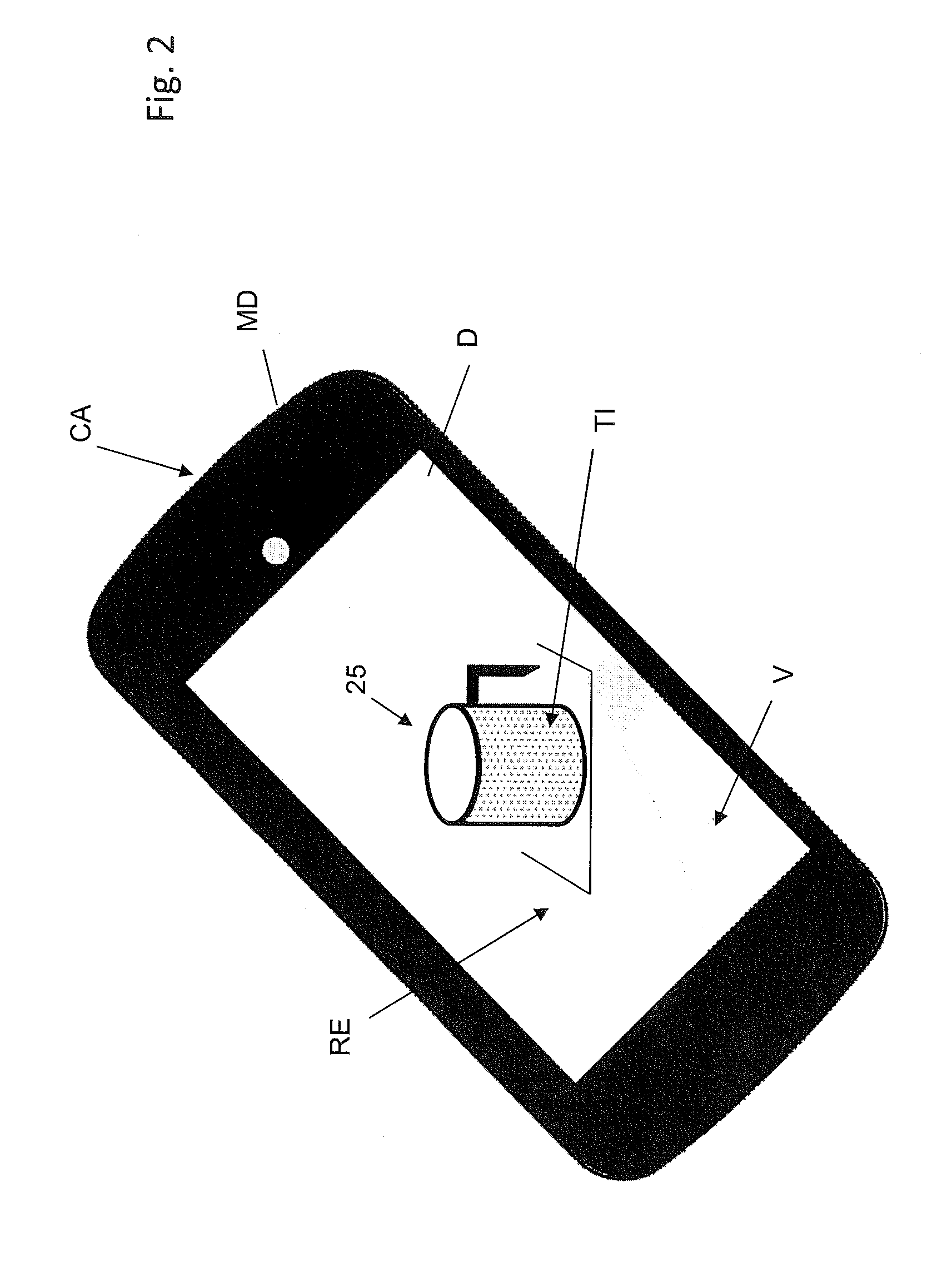

Method for visually augmenting a real object with a computer-generated image

Status: Inactive | US20150042678A1 | Tags: Cathode-ray tube indicators; Input/output processes for data processing; Computer printing; Client-side

A method and system for visually augmenting a real object with a computer-generated image that includes sending a virtual model in a client-server architecture from a client computer to a server via a computer network, receiving the virtual model at the server, instructing a 3D printer to print at least a part of the real object according to the virtual model, generating an object detection and tracking configuration configured to identify at least a part of the real object, receiving an image captured by a camera representing at least part of an environment in which the real object is placed, determining a pose of the camera with respect to the real object, and overlaying at least part of a computer-generated image with at least part of the real object.

Owner:METAIO GMBH

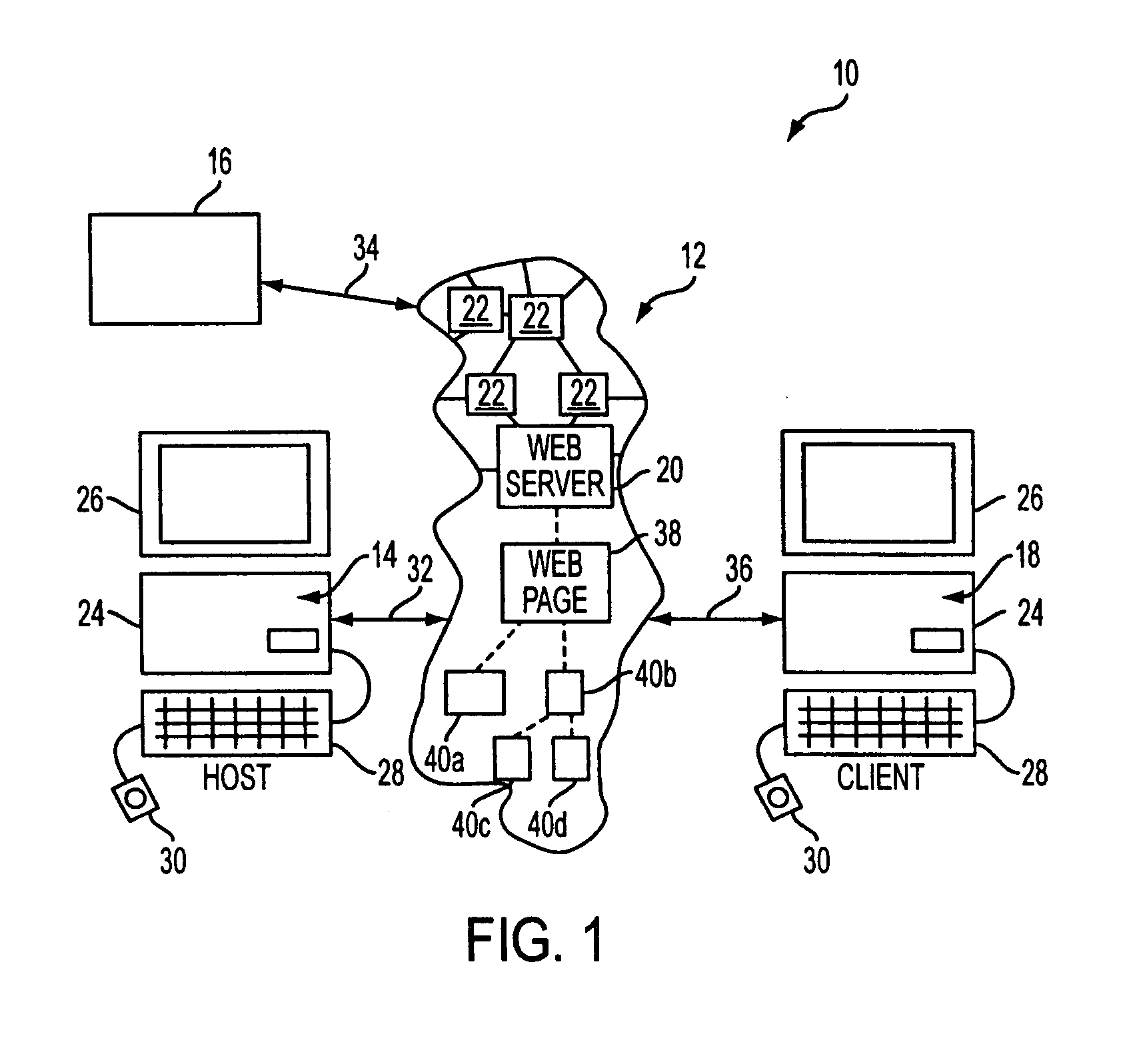

Method and apparatus for computing within a wide area network

Status: Active | US20050210118A1 | Tags: Strong computing power; Maintain performance; Multiple digital computer combinations; Transmission; Network addressing; Network address

A cluster computer system including multiple network-accessible computers, each coupled to a network. The network-accessible computers run host computer programs that permit them to operate as host computers for client computers also connected to the network, so that input devices of the client computers can generate inputs to the host computers and image information generated by the host computers can be viewed on the client computers. The system also includes a cluster administration computer, coupled to the network-accessible computers, that monitors their operation. A method for providing client computers with access to host computers over a computer network includes receiving, from a client computer coupled to the network, a request for a host computer coupled to the same network. Once the client computer is associated with a host computer, an input device of the client computer can generate inputs to the host computer, and image information generated by the host computer can be viewed on the client computer. Next, a suitable host computer for the client computer is determined, and the client computer is informed of that host computer's network address.

Owner:INTELLECTUAL VENTURES II
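The abstract leaves open how a "suitable" host is chosen. A simple policy, assumed here purely for illustration, is to pick the healthy host with the most spare client capacity and return its network address. The `Host` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    address: str          # network address reported back to the client
    healthy: bool         # as judged by the cluster administration computer
    active_clients: int
    max_clients: int


def assign_host(hosts: List[Host]) -> Optional[str]:
    """Pick the healthy host with the most spare capacity and return its address."""
    candidates = [h for h in hosts if h.healthy and h.active_clients < h.max_clients]
    if not candidates:
        return None  # no suitable host available
    # max() returns the first host among equals, so ties go to list order.
    best = max(candidates, key=lambda h: h.max_clients - h.active_clients)
    best.active_clients += 1  # record the new client association
    return best.address
```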

Augmented reality virtual guide system

A system, method, and computer program product for automatically combining computer-generated imagery with real-world imagery in a portable electronic device by retrieving, manipulating, and sharing relevant stored videos, preferably in real time. A video is captured with a hand-held device and stored. Metadata including the camera's physical location and orientation is appended to a data stream, along with user input. The server analyzes the data stream and further annotates the metadata, producing a searchable library of videos and metadata. Later, when a camera user generates a new data stream, the linked server analyzes it, identifies relevant material from the library, retrieves the material and tagged information, adjusts it for proper orientation, then renders and superimposes it onto the current camera view so the user views an augmented reality.

Owner:SONY CORP

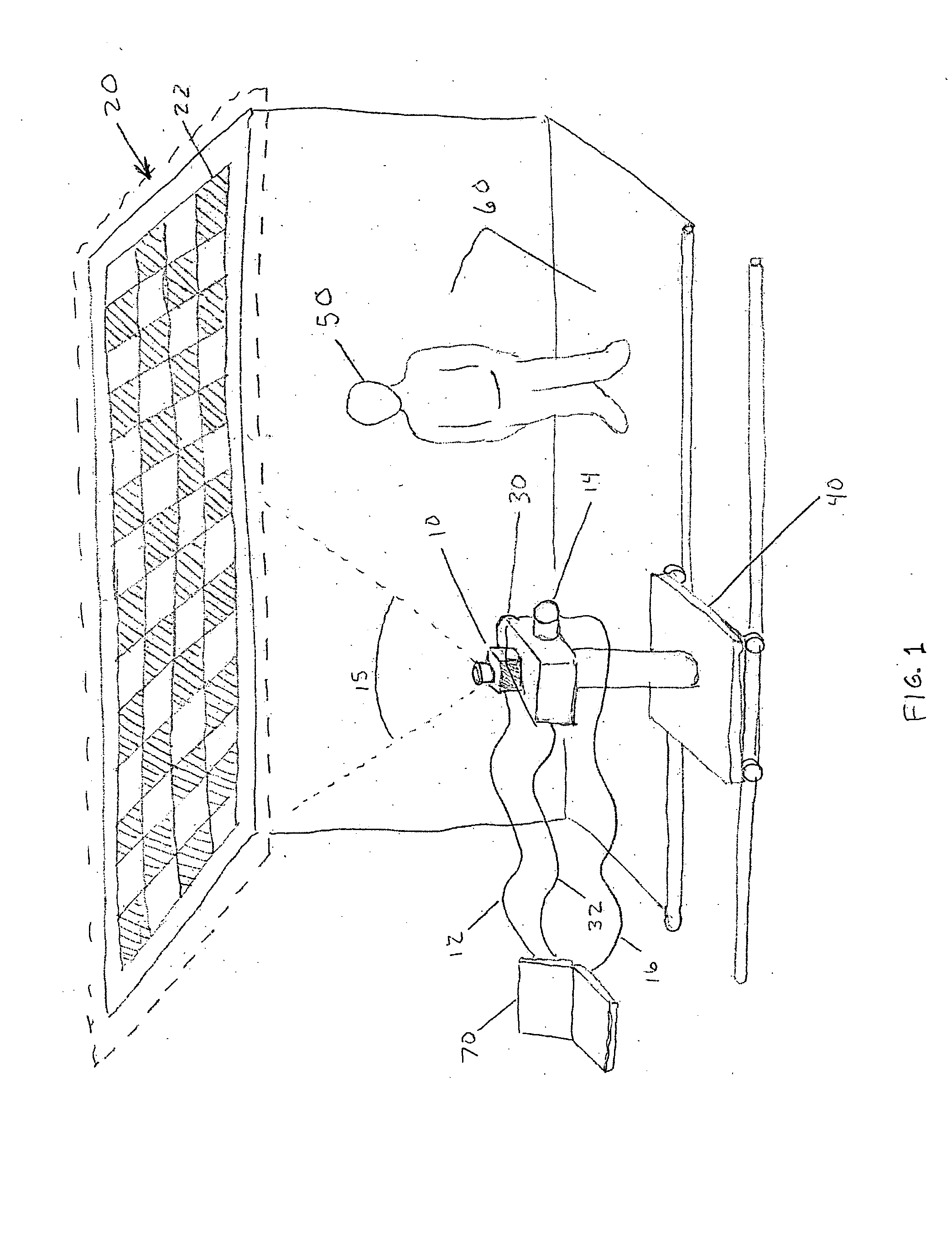

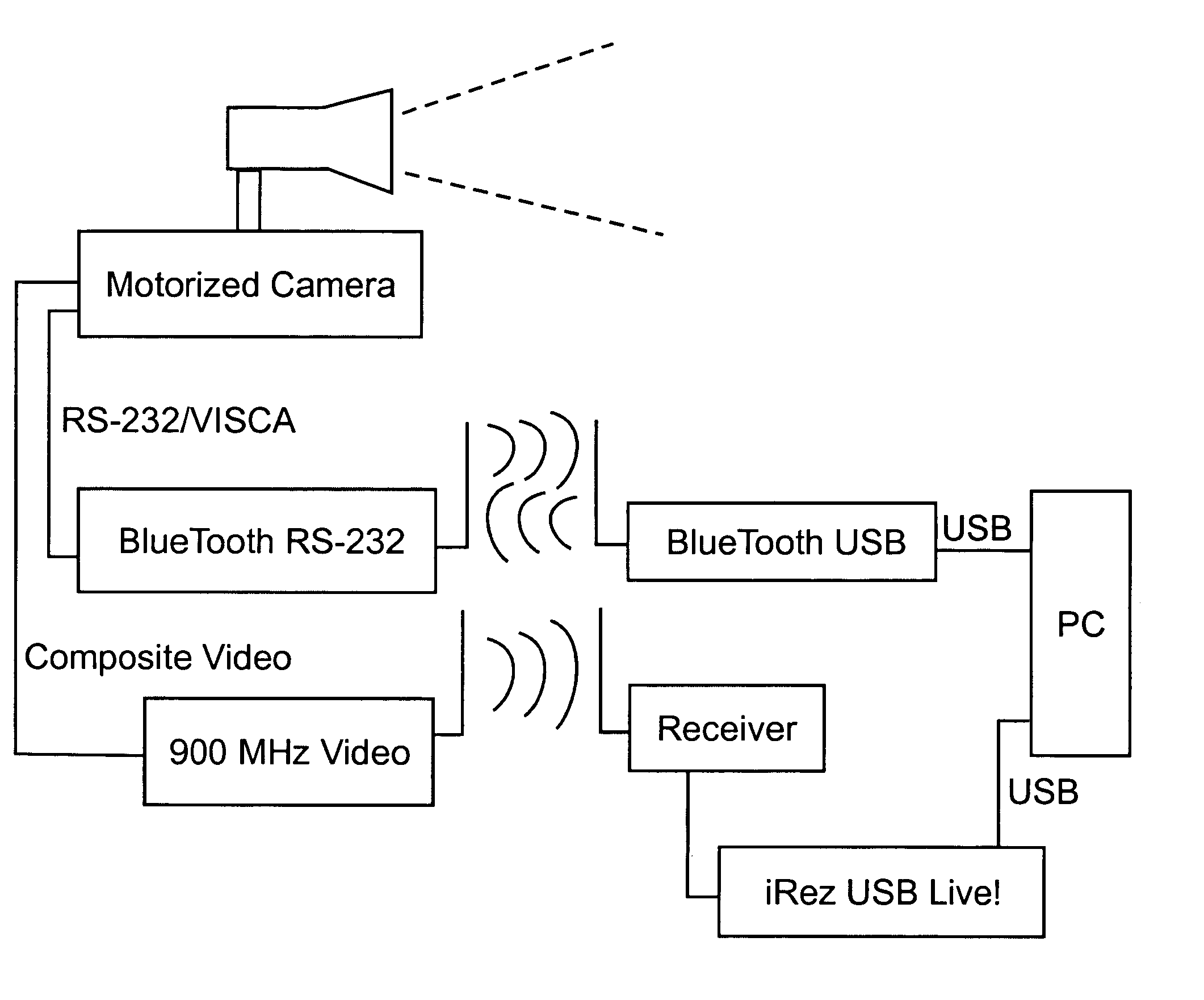

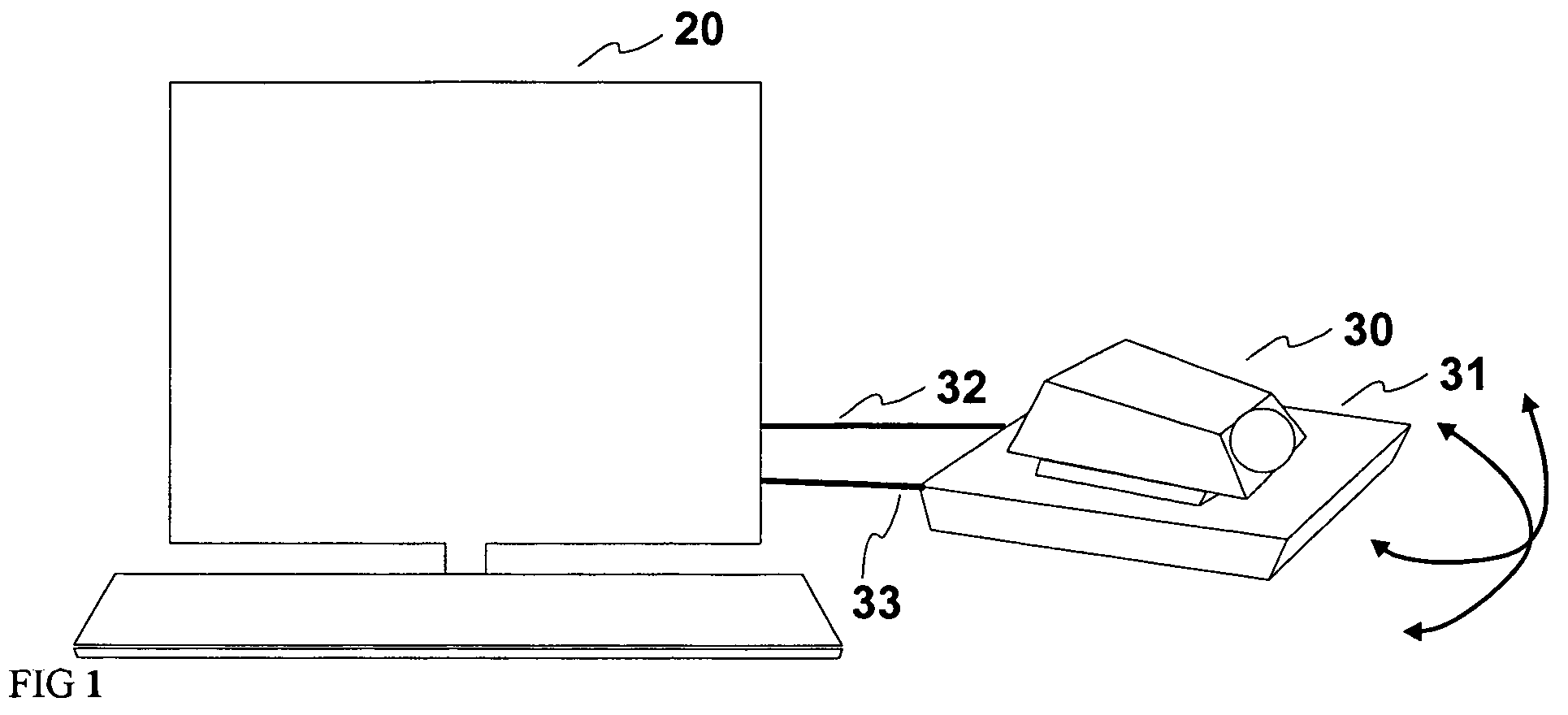

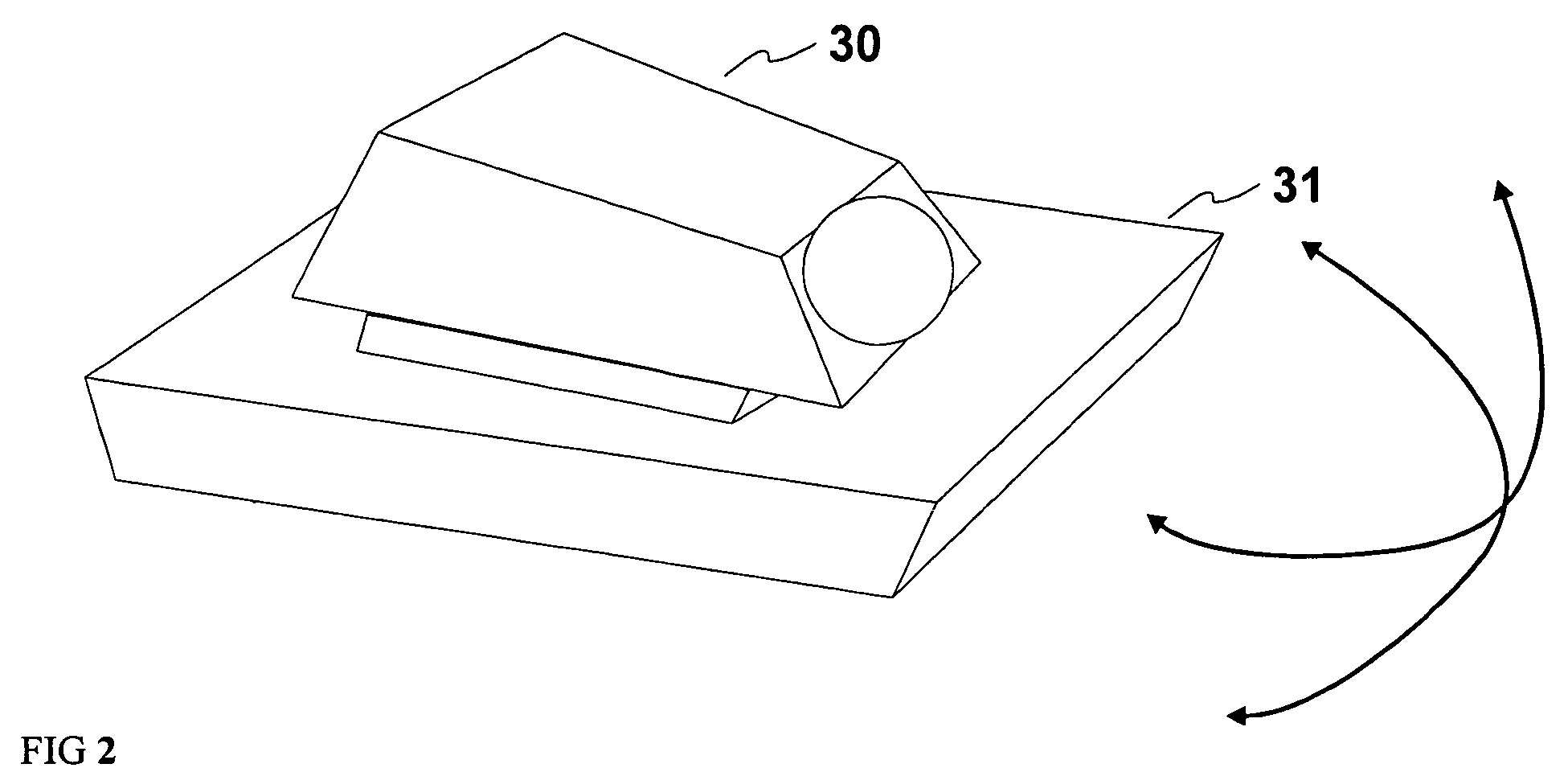

Method for using a wireless motorized camera mount for tracking in augmented reality

Status: Inactive | US7071898B2 | Tags: Simple and compact and inexpensive; Television system details; Cathode-ray tube indicators; Video image; Computer-generated imagery

A method for displaying otherwise unseen objects and other data using augmented reality (the mixing of real view with computer generated imagery). The method uses a motorized camera mount that can report the position of a camera on that mount back to a computer. With knowledge of where the camera is looking, and its field of view, the computer can precisely overlay computer-generated imagery onto the video image produced by the camera. The method may be used to present to a user such items as existing weather conditions, hazards, or other data, and presents this information to the user by combining the computer generated images with the user's real environment. These images are presented in such a way as to display relevant location and properties of the object to the system user. The primary intended applications are as navigation aids for air traffic controllers and pilots in training and operations, and use with emergency first responder training and operations to view and avoid / alleviate hazardous material situations. However, the system can be used to display any imagery that needs to correspond to locations in the real world.

Owner:INFORMATION DECISION TECH
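Knowing where the camera points and its field of view is enough to place an overlay with a pinhole-camera projection. The sketch below assumes the mount reports pan (heading) and tilt angles, that target positions are given in metres east/north/up relative to the camera, and that lens distortion can be ignored; none of these conventions come from the abstract.

```python
import math


def project_to_pixel(point, pan_deg, tilt_deg, hfov_deg, width, height):
    """Project a camera-centred east/north/up point (metres) to pixel coordinates.

    pan_deg: heading of the optical axis, clockwise from north.
    tilt_deg: elevation of the optical axis above the horizon.
    Returns (px, py) with the origin at the top-left, or None if the point is
    behind the camera or outside the frame.
    """
    th, ph = math.radians(pan_deg), math.radians(tilt_deg)
    # Orthonormal camera axes expressed in east/north/up coordinates.
    forward = (math.sin(th) * math.cos(ph), math.cos(th) * math.cos(ph), math.sin(ph))
    right = (math.cos(th), -math.sin(th), 0.0)
    up = (-math.sin(th) * math.sin(ph), -math.cos(th) * math.sin(ph), math.cos(ph))

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    xc, yc, zc = dot(point, right), dot(point, up), dot(point, forward)
    if zc <= 0:
        return None  # behind the camera
    focal_px = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    px = width / 2 + focal_px * xc / zc
    py = height / 2 - focal_px * yc / zc  # image rows grow downwards
    if not (0 <= px < width and 0 <= py < height):
        return None
    return px, py
```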

Augmented reality surveillance and rescue system

A system, method, and computer program product for automatically combining computer-generated imagery with real-world imagery in a portable electronic device by retrieving, manipulating, and sharing relevant stored videos, preferably in real time. A video is captured with a hand-held device and stored. Metadata including the camera's physical location and orientation is appended to a data stream, along with user input. The server analyzes the data stream and further annotates the metadata, producing a searchable library of videos and metadata. Later, when a camera user generates a new data stream, the linked server analyzes it, identifies relevant material from the library, retrieves the material and tagged information, adjusts it for proper orientation, then renders and superimposes it onto the current camera view so the user views an augmented reality.

Owner:SONY CORP

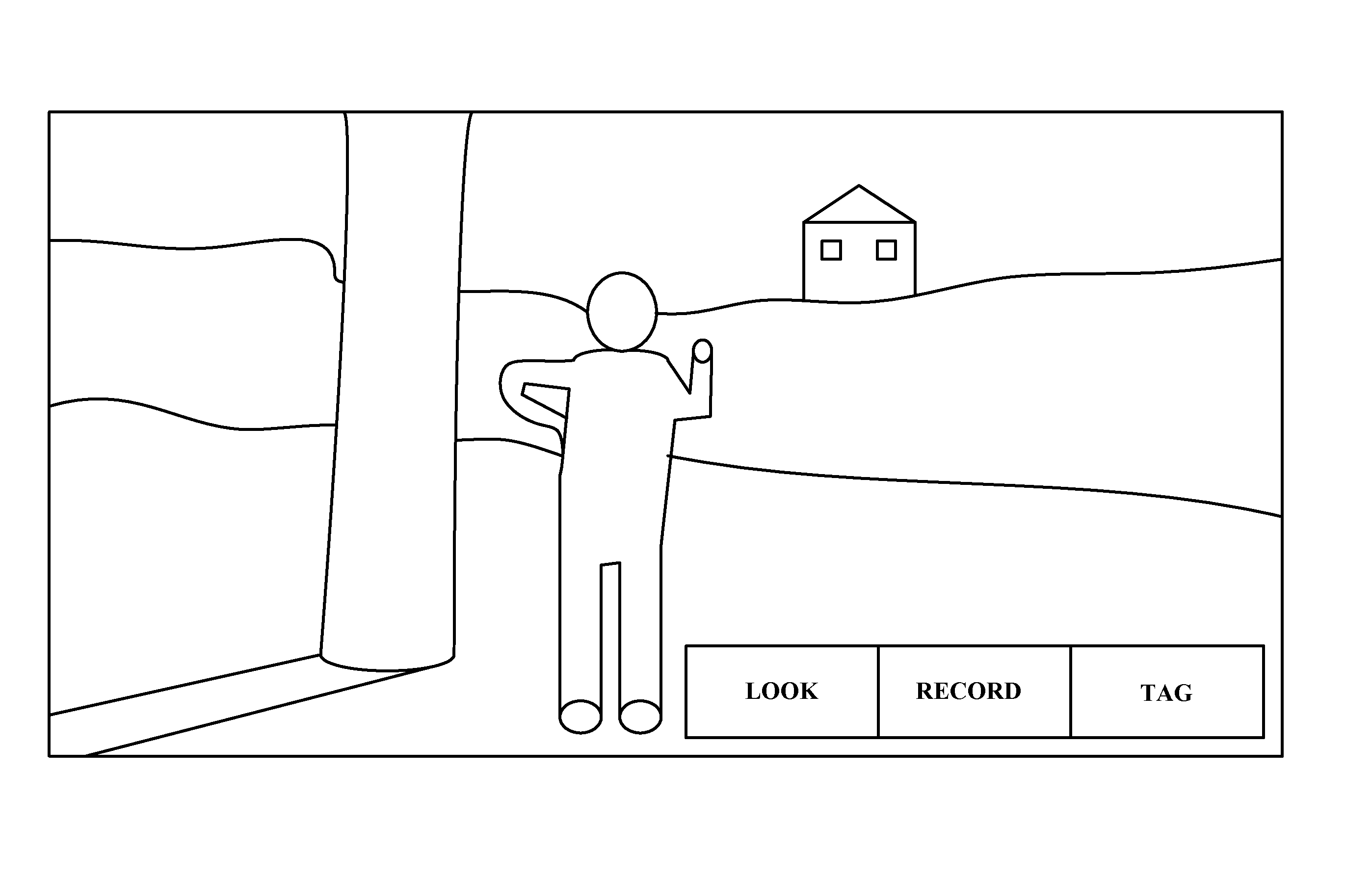

Augmented reality system for communicating tagged video and data on a network

A system, method, and computer program product for automatically combining computer-generated imagery with real-world imagery in a portable electronic device by retrieving, manipulating, and sharing relevant stored videos, preferably in real time. A video is captured with a hand-held device and stored. Metadata including the camera's physical location and orientation is appended to a data stream, along with user input. The server analyzes the data stream and further annotates the metadata, producing a searchable library of videos and metadata. Later, when a camera user generates a new data stream, the linked server analyzes it, identifies relevant material from the library, retrieves the material and tagged information, adjusts it for proper orientation, then renders and superimposes it onto the current camera view so the user views an augmented reality.

Owner:SONY CORP

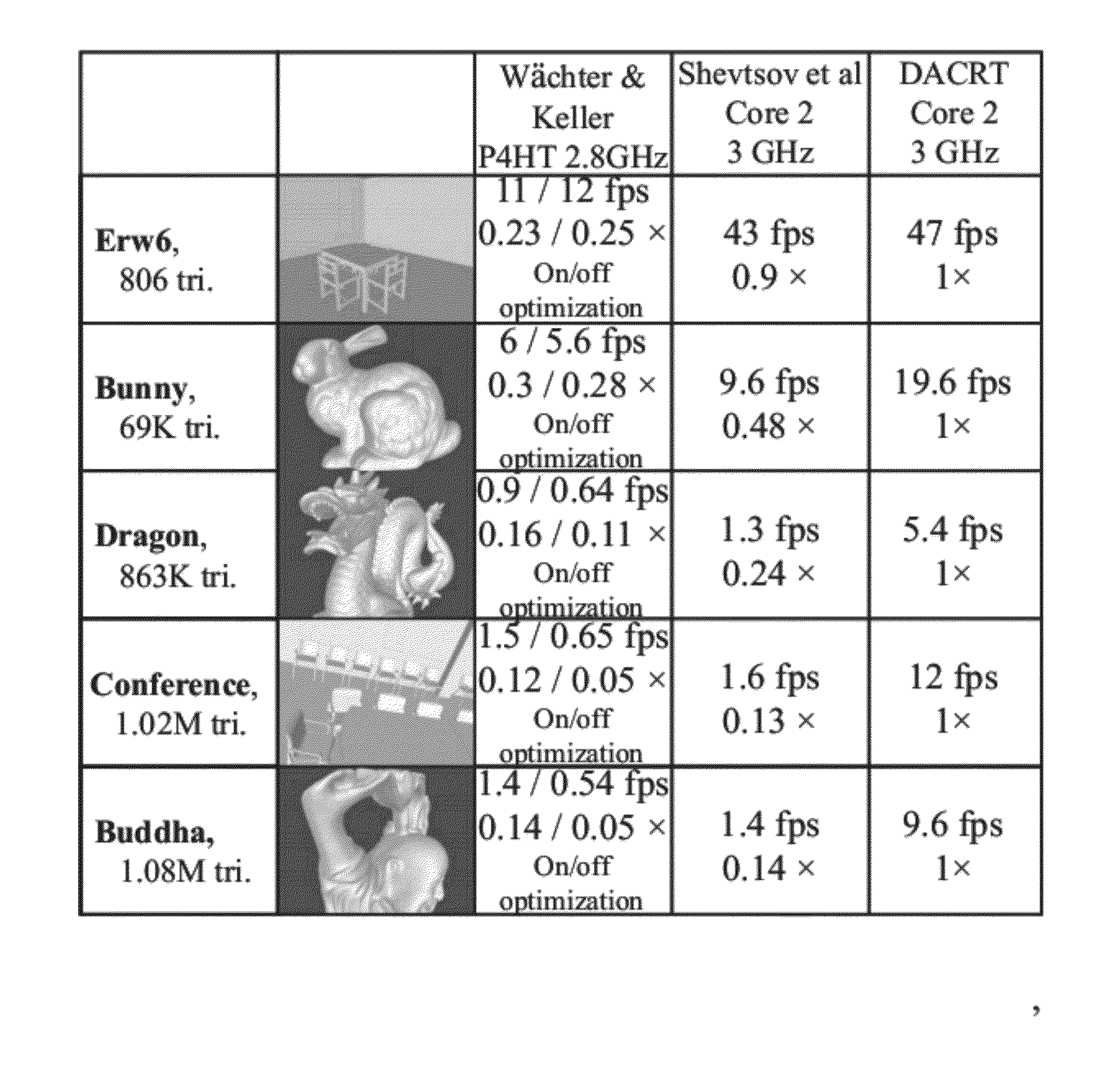

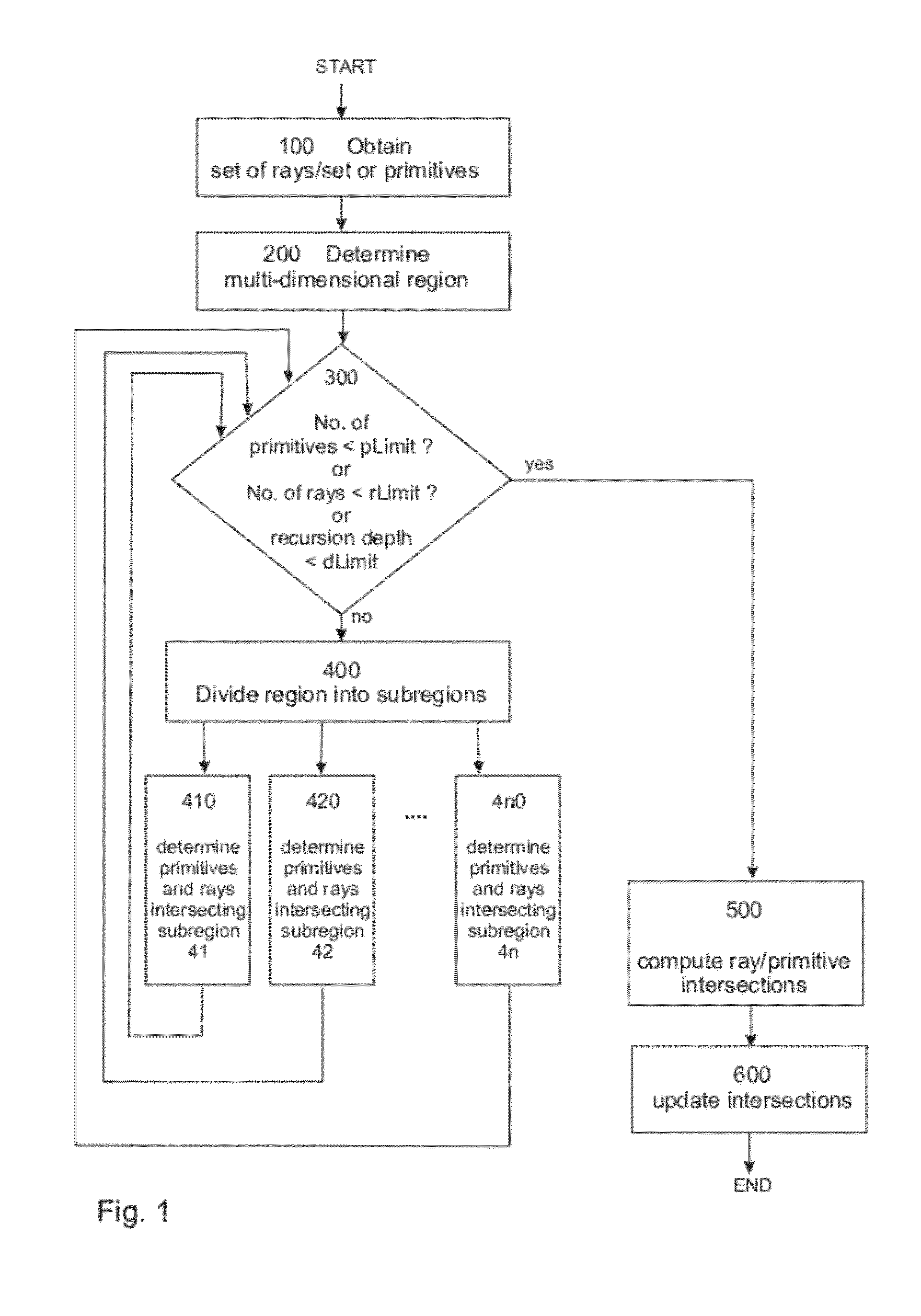

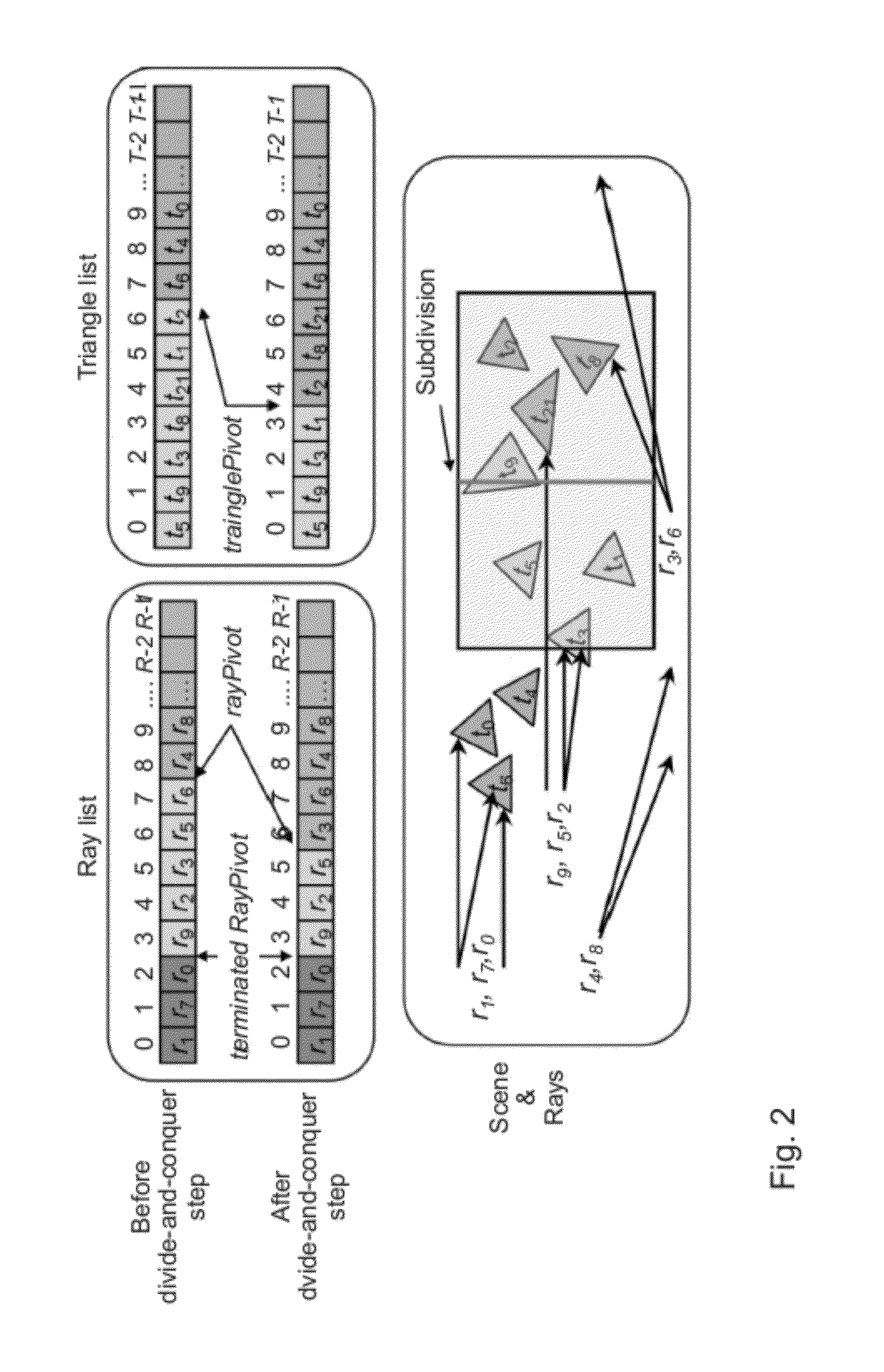

Direct Ray Tracing of 3D Scenes

Determining intersections between rays and triangles is at the heart of most computer-generated 3D images. The present disclosure describes a new method for determining the intersections between a set of rays and a set of triangles. The method processes arbitrary rays and arbitrary primitives, and it achieves the lower complexity typical of ray-tracing algorithms without using a spatial subdivision data structure, which would require additional memory. Such low memory usage particularly benefits systems that create 3D images with limited on-board memory, where memory use is critical and must be minimized. In addition, a pivot-based streaming technique minimizes the conditional branching inherent in conventional ray-tracing techniques by handling large streams of rays. In most cases, the method solves comparable intersection problems much faster than earlier state-of-the-art methods on similar systems.

Owner:IMAGINATION TECH LTD
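The abstract does not disclose the patented pivot-based streaming algorithm. For orientation only, the sketch below shows the standard Möller–Trumbore ray/triangle test and the naive all-pairs search that spatial-subdivision structures, and methods like the one described, aim to improve on. It is not the claimed method.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the origin


def closest_hits(rays, triangles):
    """Naive O(rays x triangles) search; returns (t, triangle_index) or None per ray."""
    results = []
    for origin, direction in rays:
        best = None
        for index, (v0, v1, v2) in enumerate(triangles):
            t = ray_triangle(origin, direction, v0, v1, v2)
            if t is not None and (best is None or t < best[0]):
                best = (t, index)
        results.append(best)
    return results
```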

Dynamic Augmented Reality Vision Systems

Status: Inactive | US20140247281A1 | Tags: Improve the level of; Low level of reinforcing; Cathode-ray tube indicators; Closed circuit television systems; Visual perception; Computer generation

Imaging systems that include an augmented reality feature are provided with automated means to throttle or excite the augmented reality generator. Compound images are presented in which an optically captured image is overlaid with a computer-generated image portion to form the complete augmented image presented to the user. These imaging systems respond automatically to the particular conditions of the imager, the imaged scene, and the imaging environment. Computer-generated images overlaid on optically captured images are either bolstered in detail and content where more information is needed, or tempered where less information is preferred, as determined by prescribed conditions and values.

Owner:GEOVECTOR

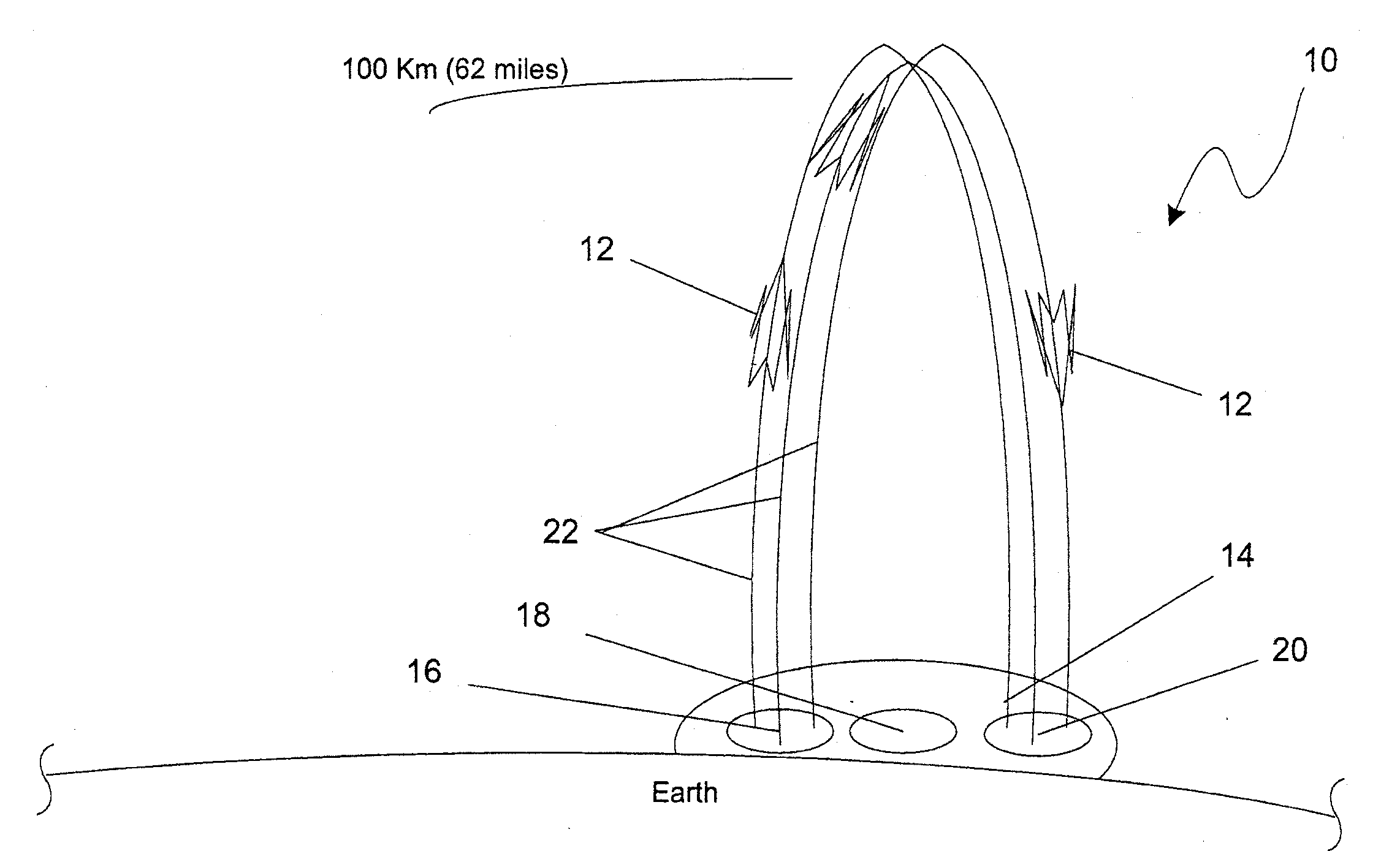

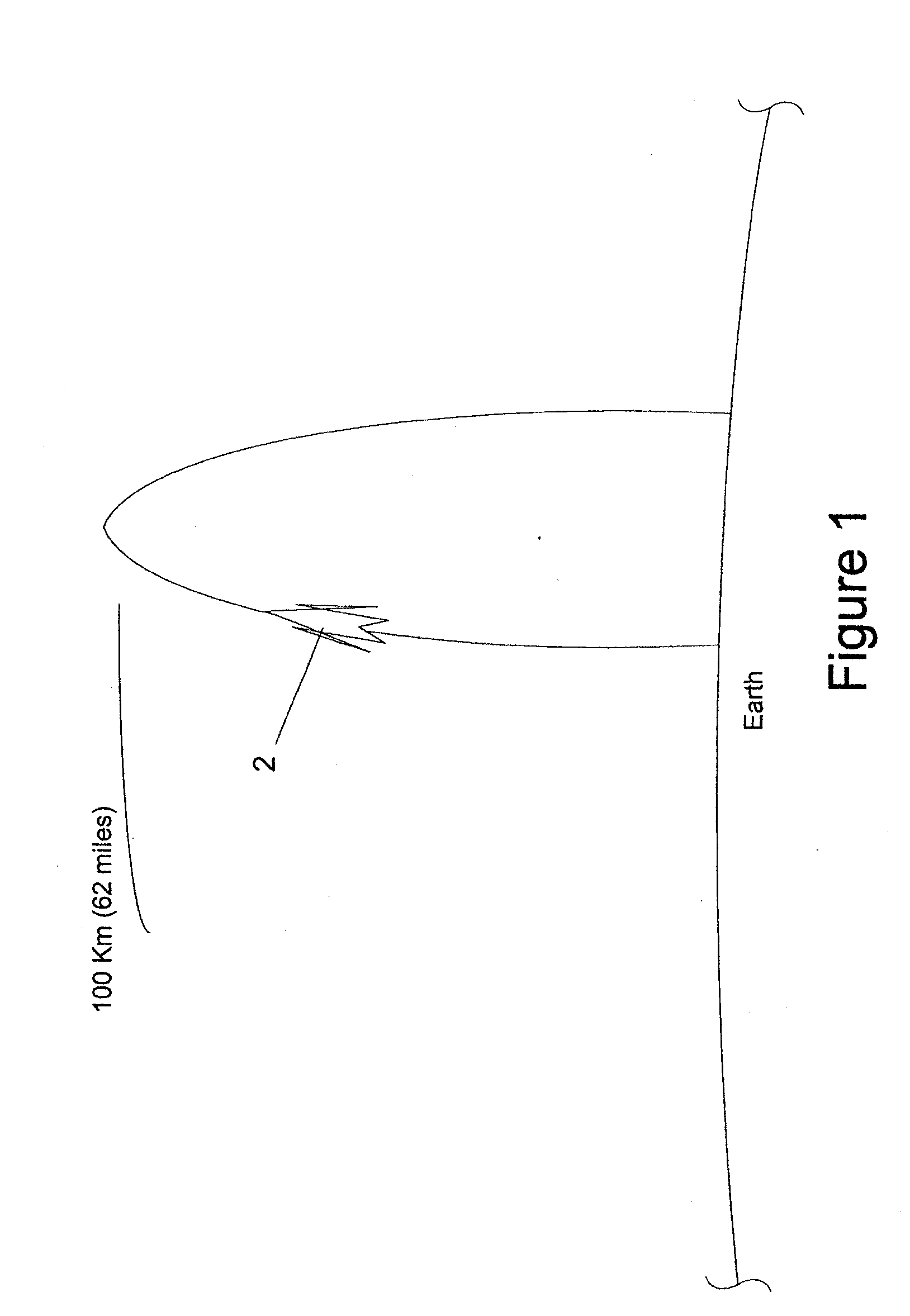

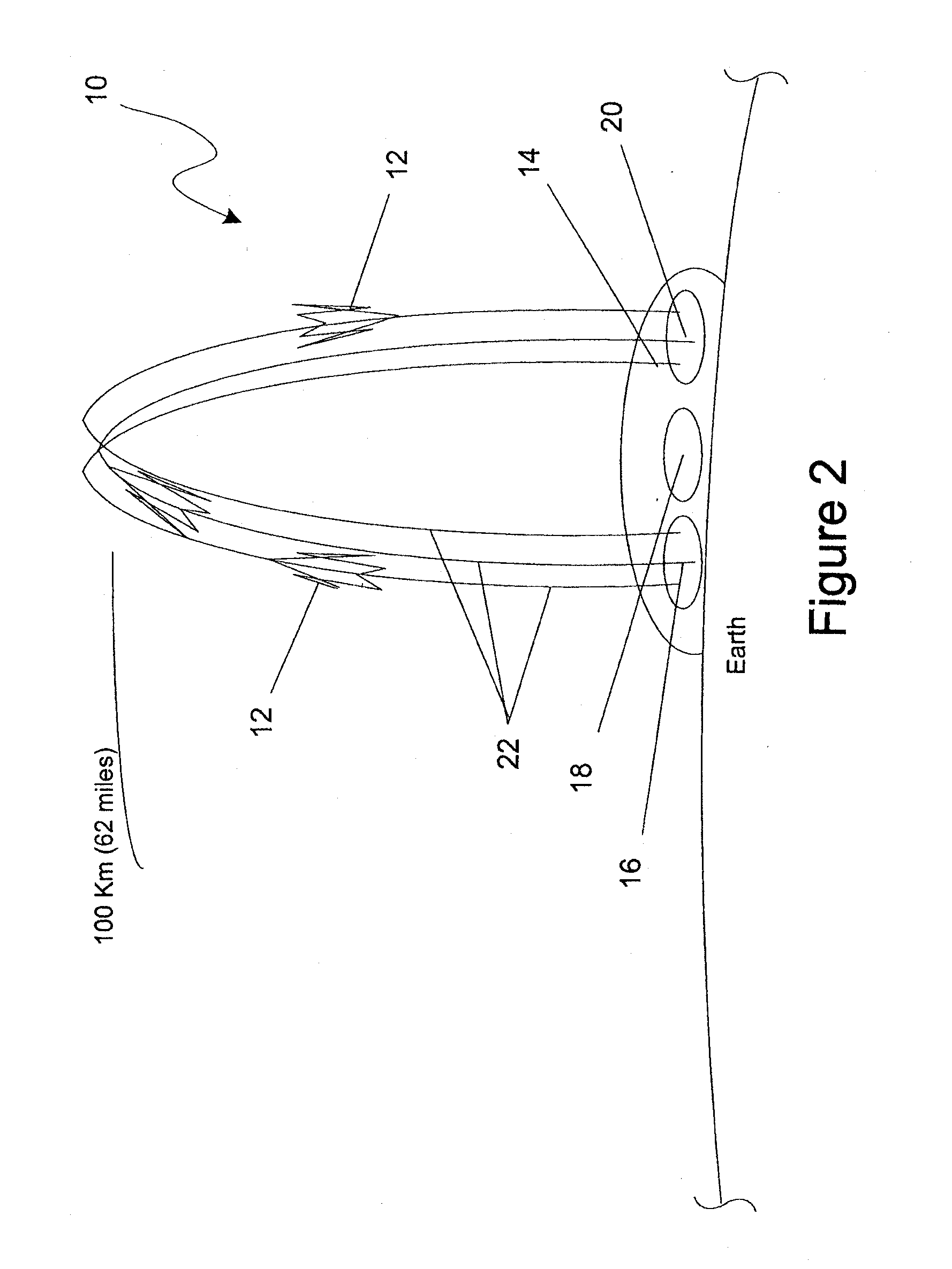

Rocket-powered vehicle racing information system

Status: Inactive | US20070194171A1 | Tags: Improving pilot performance; Improve performance; Rocket type power plants; Space shuttles; Rocket; Computer-generated imagery

A rocket-powered race for entertaining spectators, in which computer-generated images are optionally provided to at least partially define the race course.

Owner:ROCKET RACING

Method for in-situ synchronous acquisition of two-dimensional distribution of active phosphorus and dissolved oxygen in water, soil or sediment

The invention discloses a method for in-situ synchronous acquisition of the two-dimensional distribution of active phosphorus and dissolved oxygen (DO) in water, soil or sediment. The method uses a DGT device containing a DGT-PO functional composite membrane, which comprises a transparent support, a fluorescent sensing layer and a DGT binding layer. The method comprises two steps: (1) based on the principle of fluorescence analysis, acquiring a DO fluorescence intensity image of the PO layer of the composite membrane in real time by imaging; and (2) treating the composite membrane, developing color on the membrane, acquiring an image of the developed soluble reactive phosphorus (SRP) in the DGT layer by computer-imaging densitometry, and quantifying SRP and DO from the acquired images. Using an improved membrane color-development and computer-imaging densitometry technique together with RGB three-color ratio quantification, active phosphorus and dissolved oxygen in a matrix can be monitored synchronously in real time through the two DGT-PO channels.

Owner:NANJING INST OF GEOGRAPHY & LIMNOLOGY

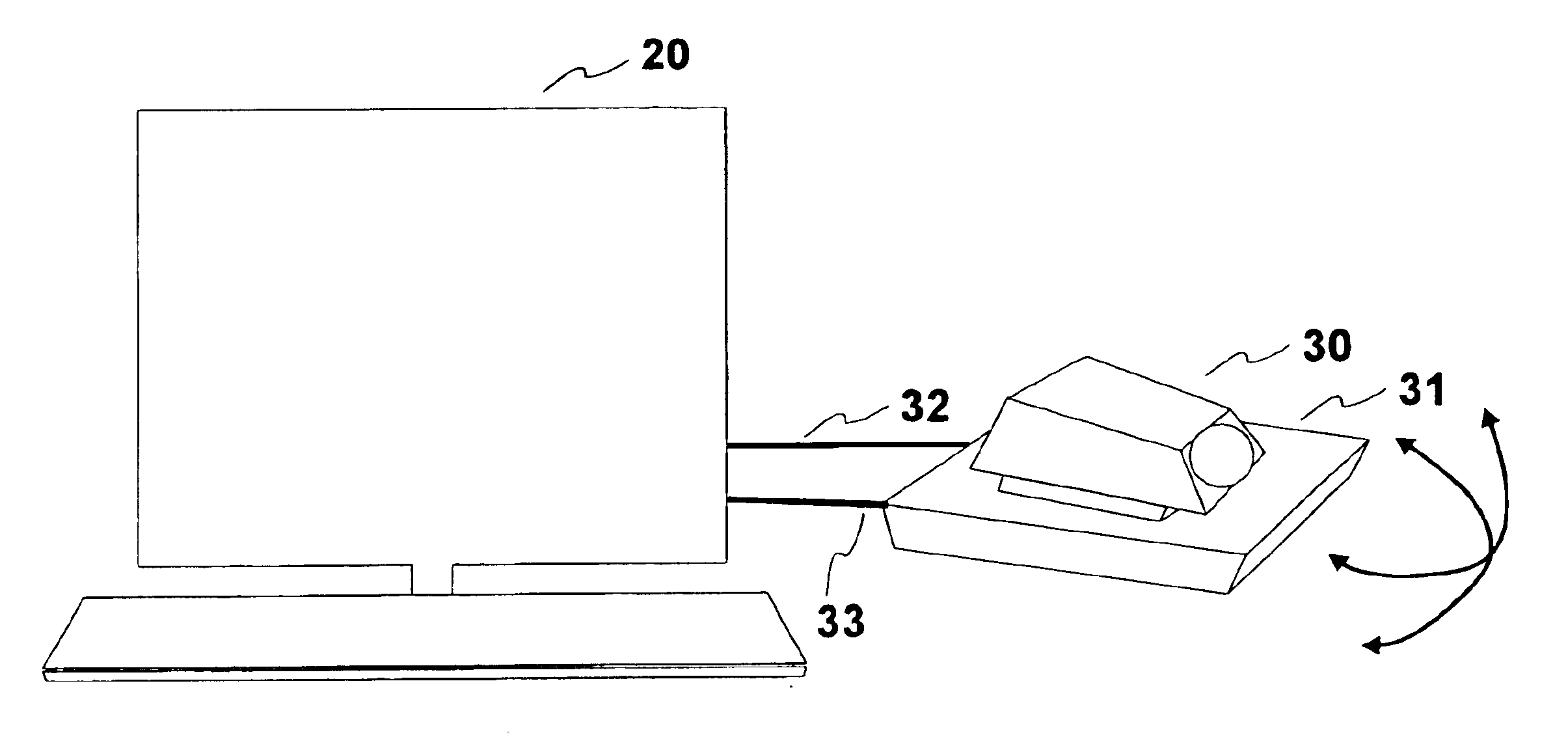

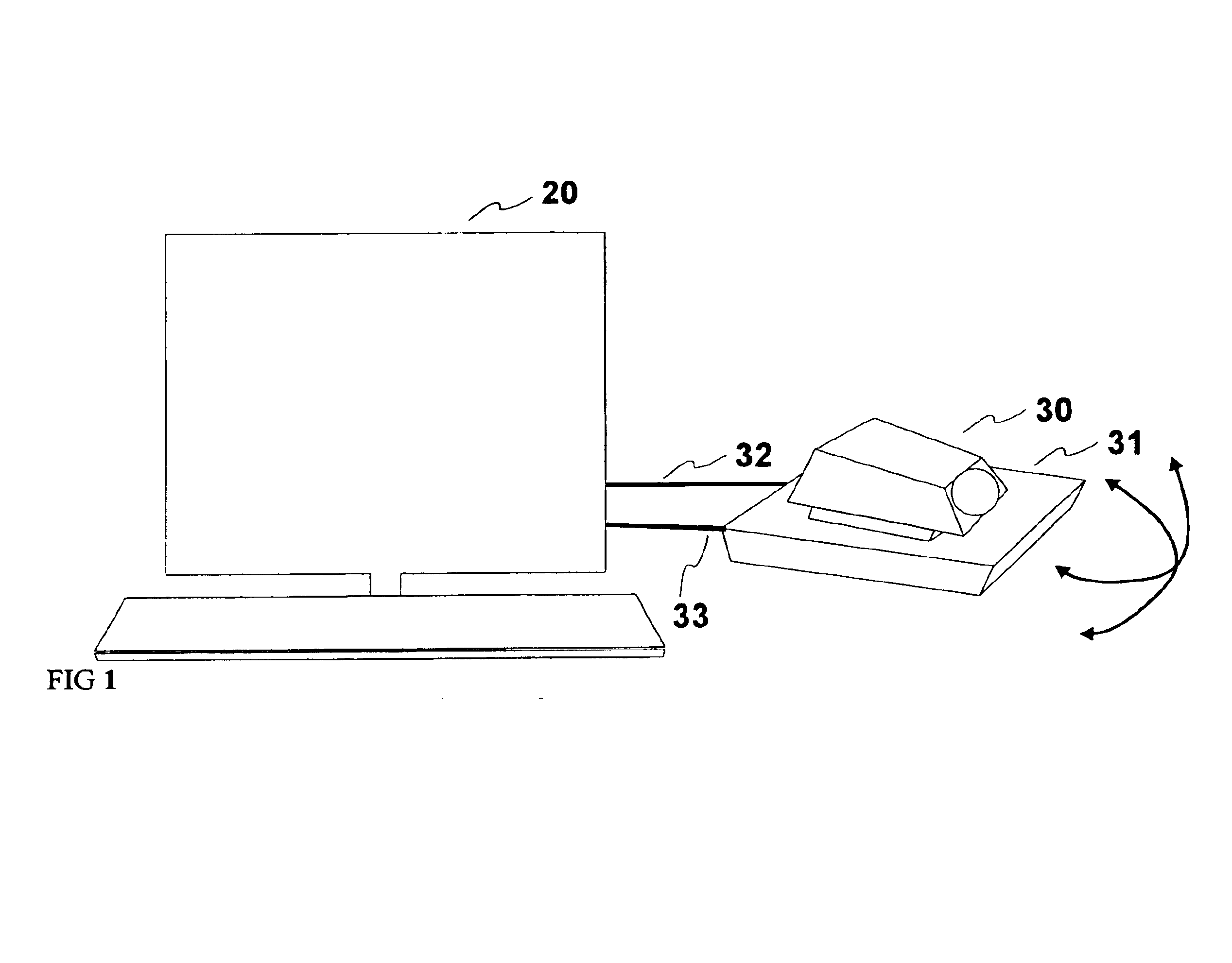

Method for using a motorized camera mount for tracking in augmented reality

Status: Inactive | US6903707B2 | Tags: Input/output for user-computer interaction; Television system details; Road traffic control; Video image

The invention is a method for displaying otherwise unseen objects and other data using augmented reality (the mixing of a real view with computer-generated imagery). The method uses a motorized camera mount that can report the position of a camera on that mount back to a computer. With knowledge of where the camera is looking and the size of its field of view, the computer can precisely overlay computer-generated imagery onto the video image produced by the camera. The method may be used to present items such as existing weather conditions, hazards, or other data, and presents this information by combining the computer-generated images with the user's real environment. These images are presented so as to show the relevant location and properties of the object to the system user. The primary intended applications are navigation aids for air traffic controllers and pilots in training and operations, and use in emergency first responder training and operations to view and avoid or alleviate hazardous material situations. However, the system can be used to display any imagery that needs to correspond to locations in the real world.

Owner:INFORMATION DECISION TECH

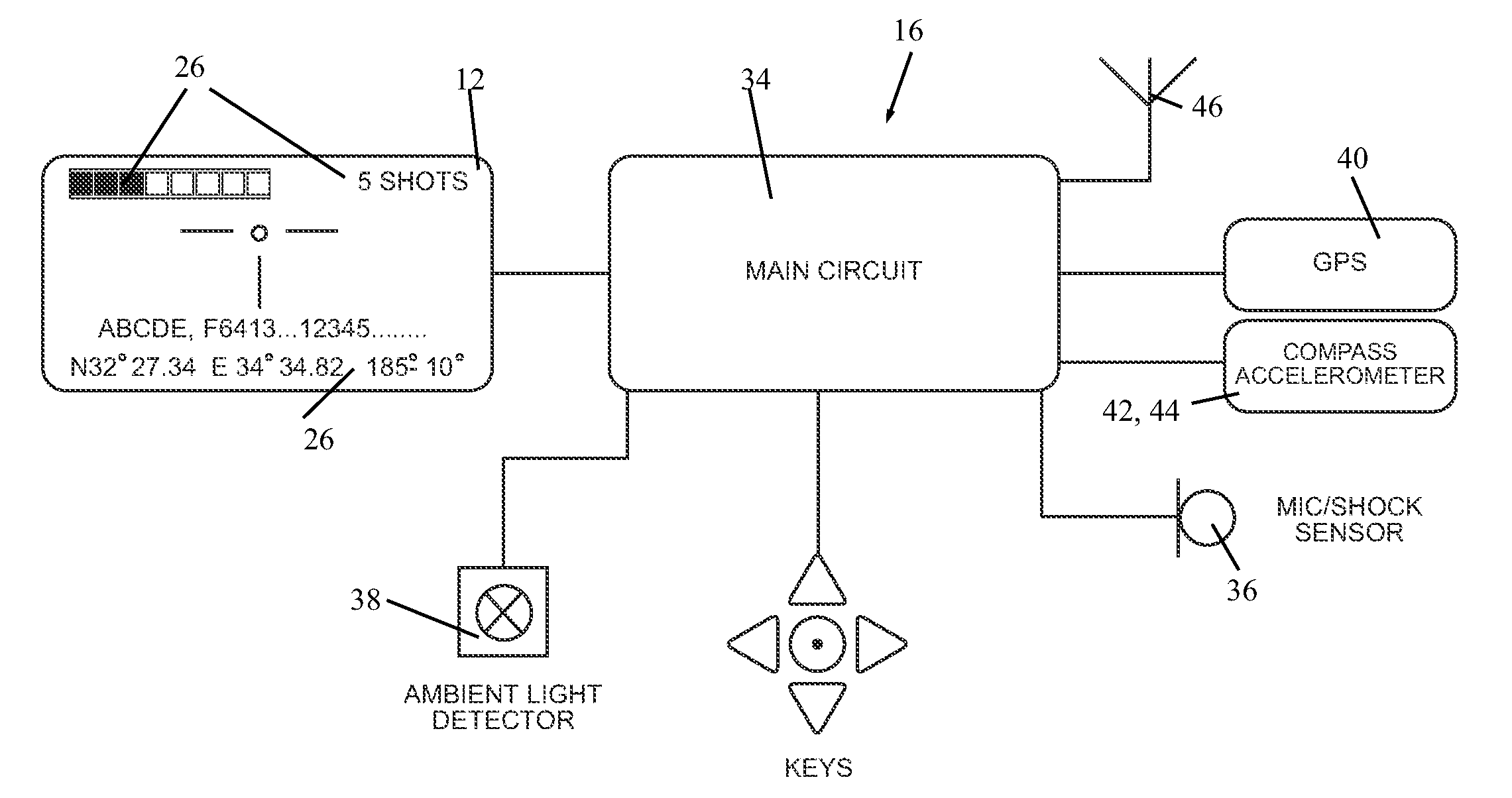

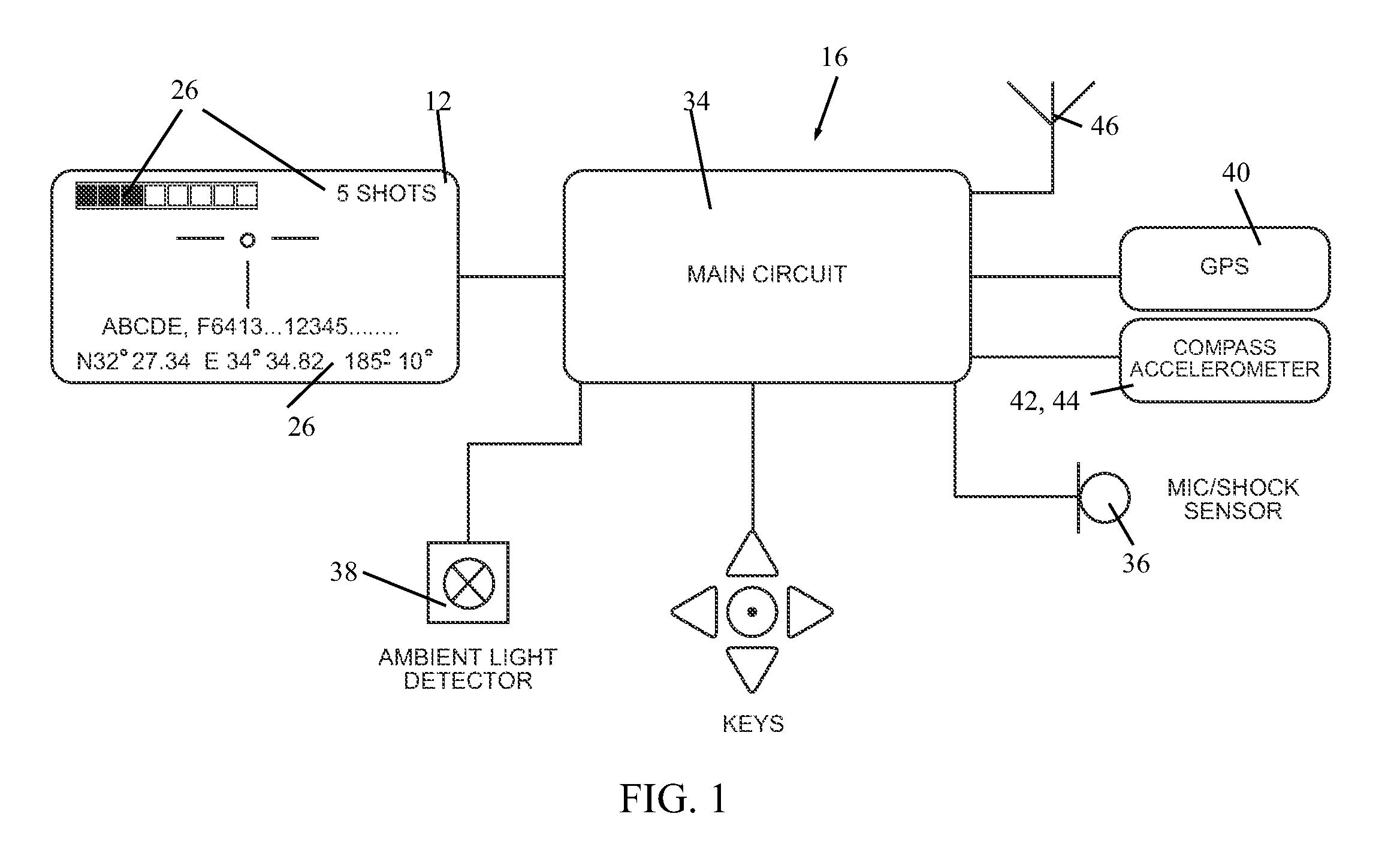

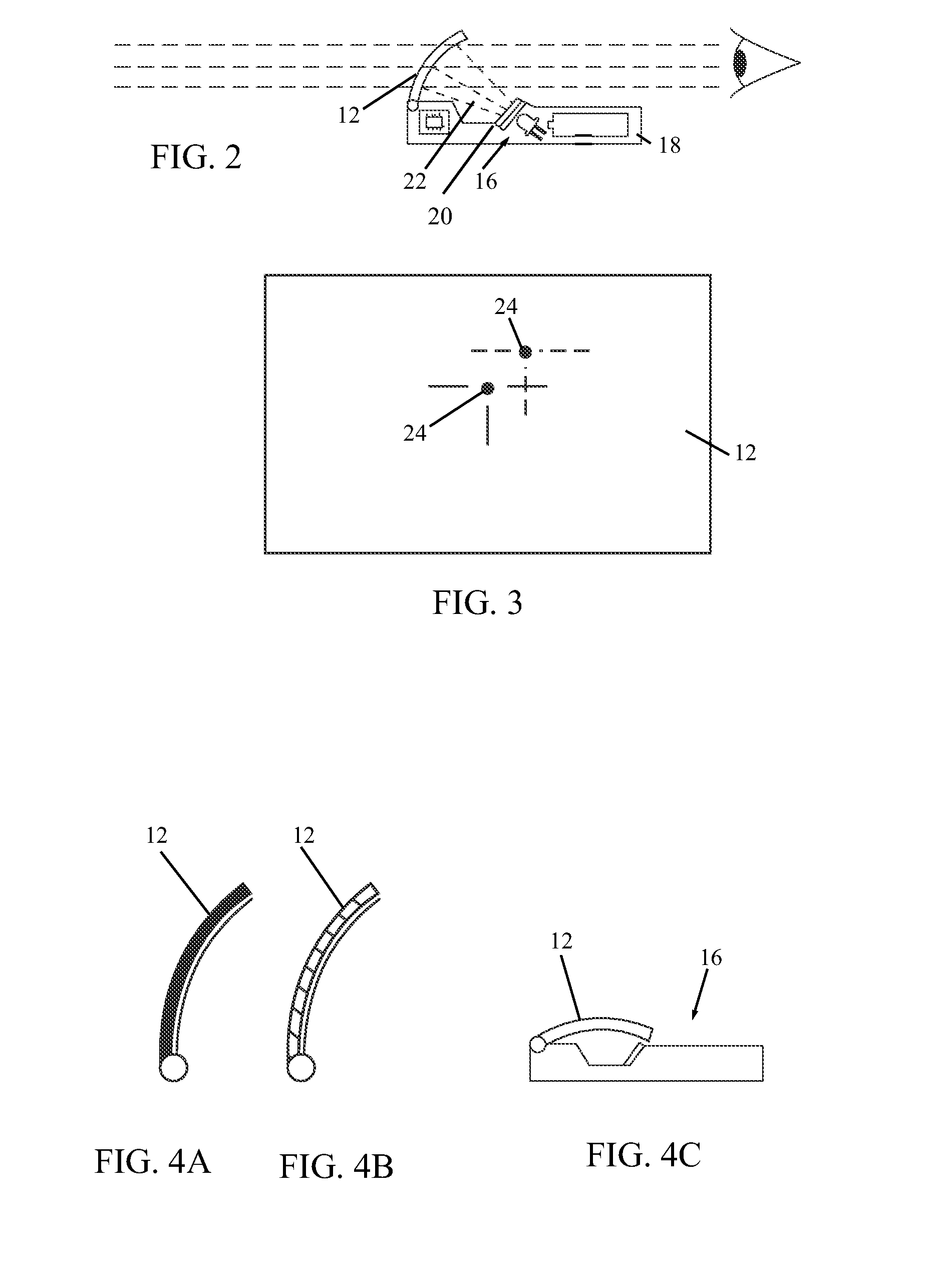

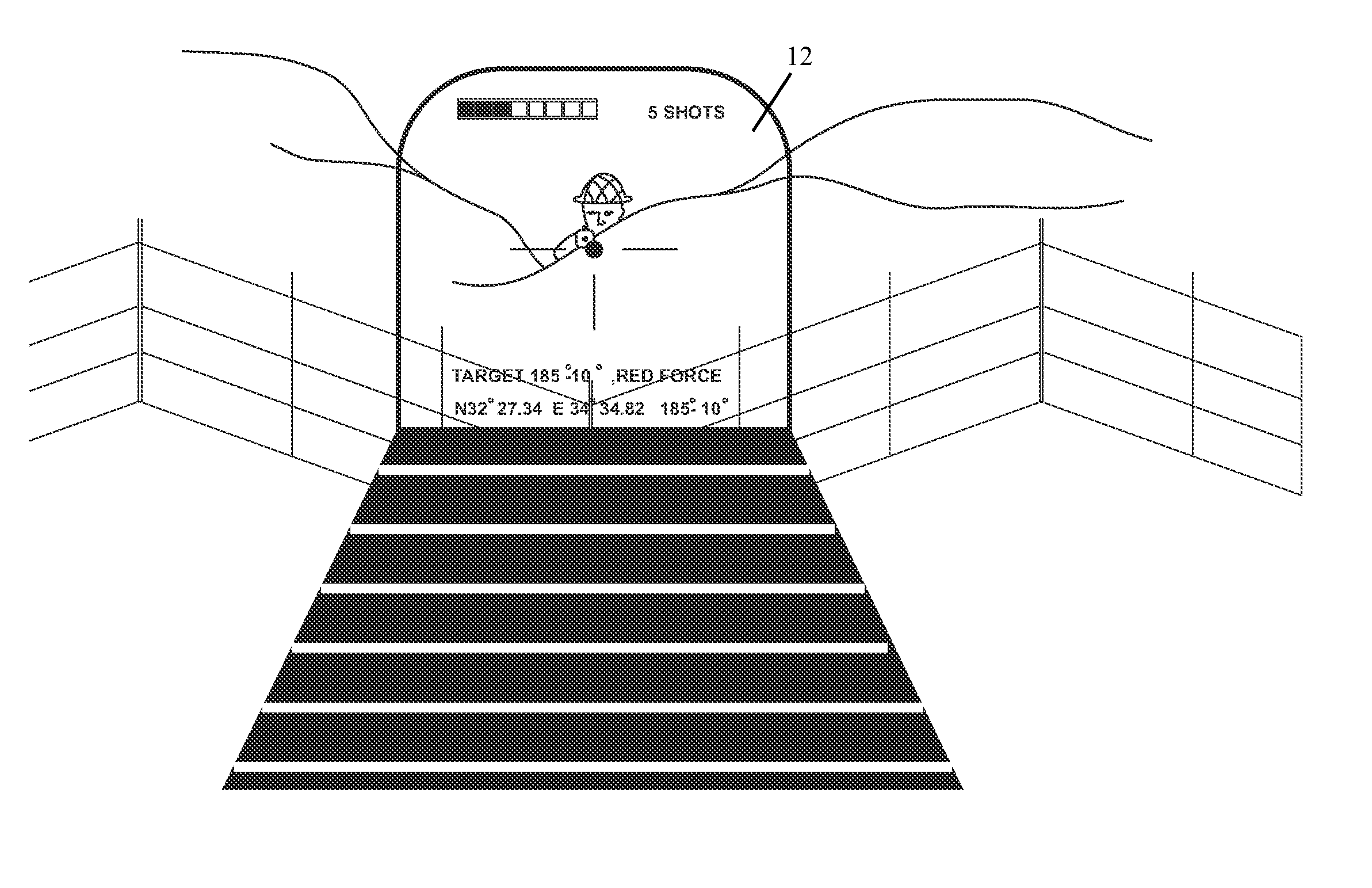

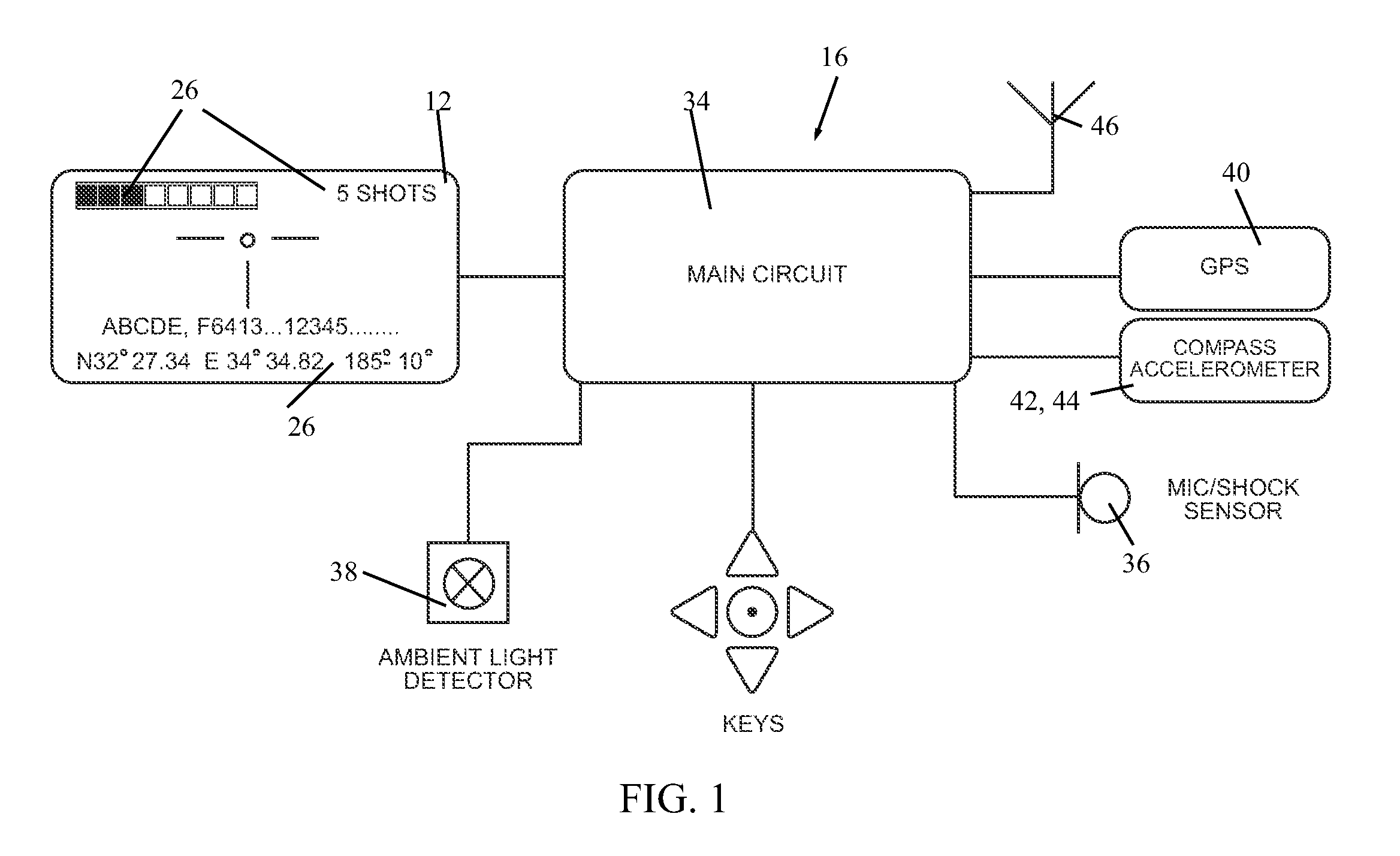

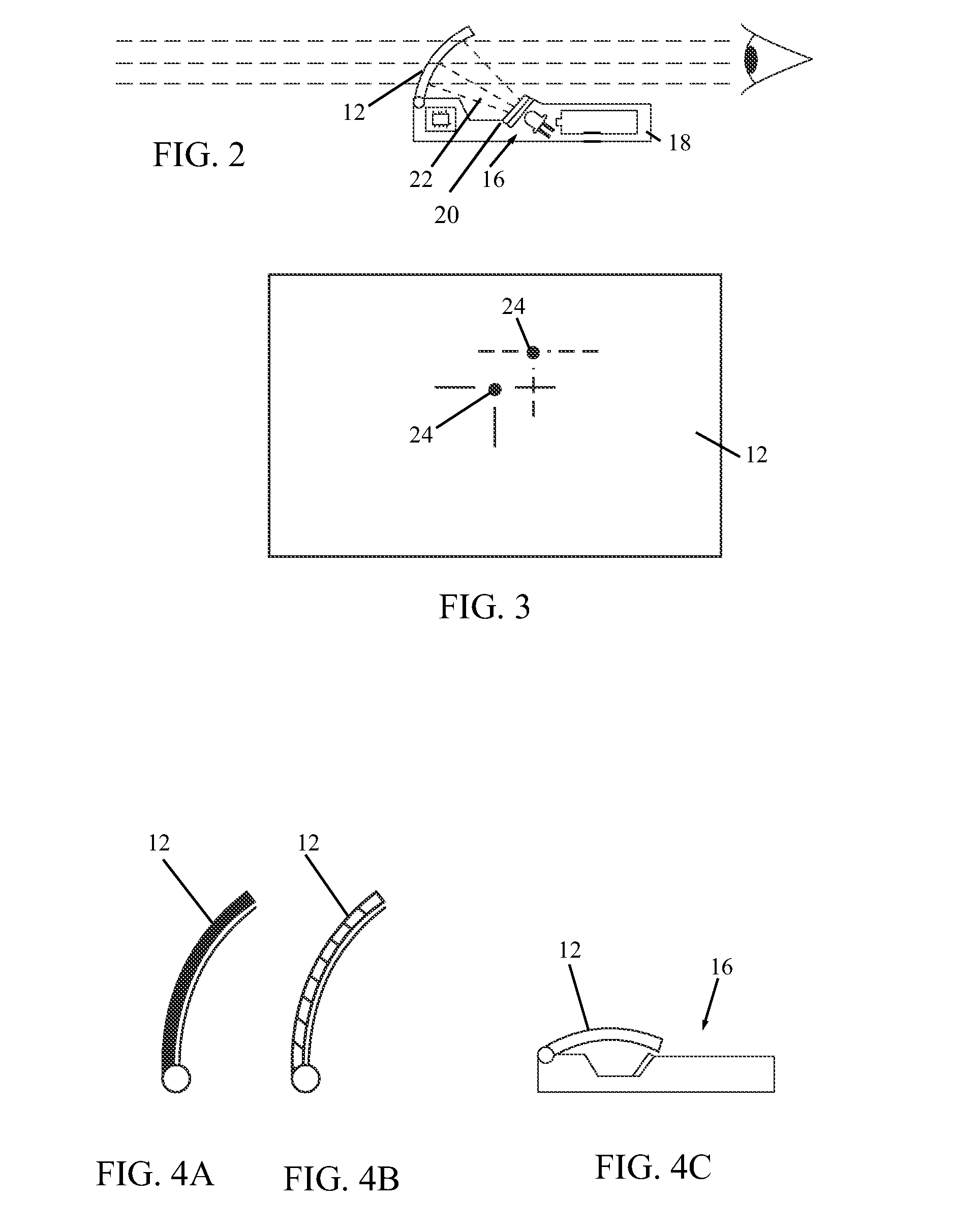

Reflex sight for weapon

A weapons reflex sight including a display substrate mounted on a weapon, and an optics module, disposed in a housing, the optics module including a computer-generated imagery (CGI) system and optical elements for generating images and projecting a beam of the images on the display substrate, the images including an aimpoint for aiming at a target and information related to use of the weapon.

Owner:SIROCCO VISION

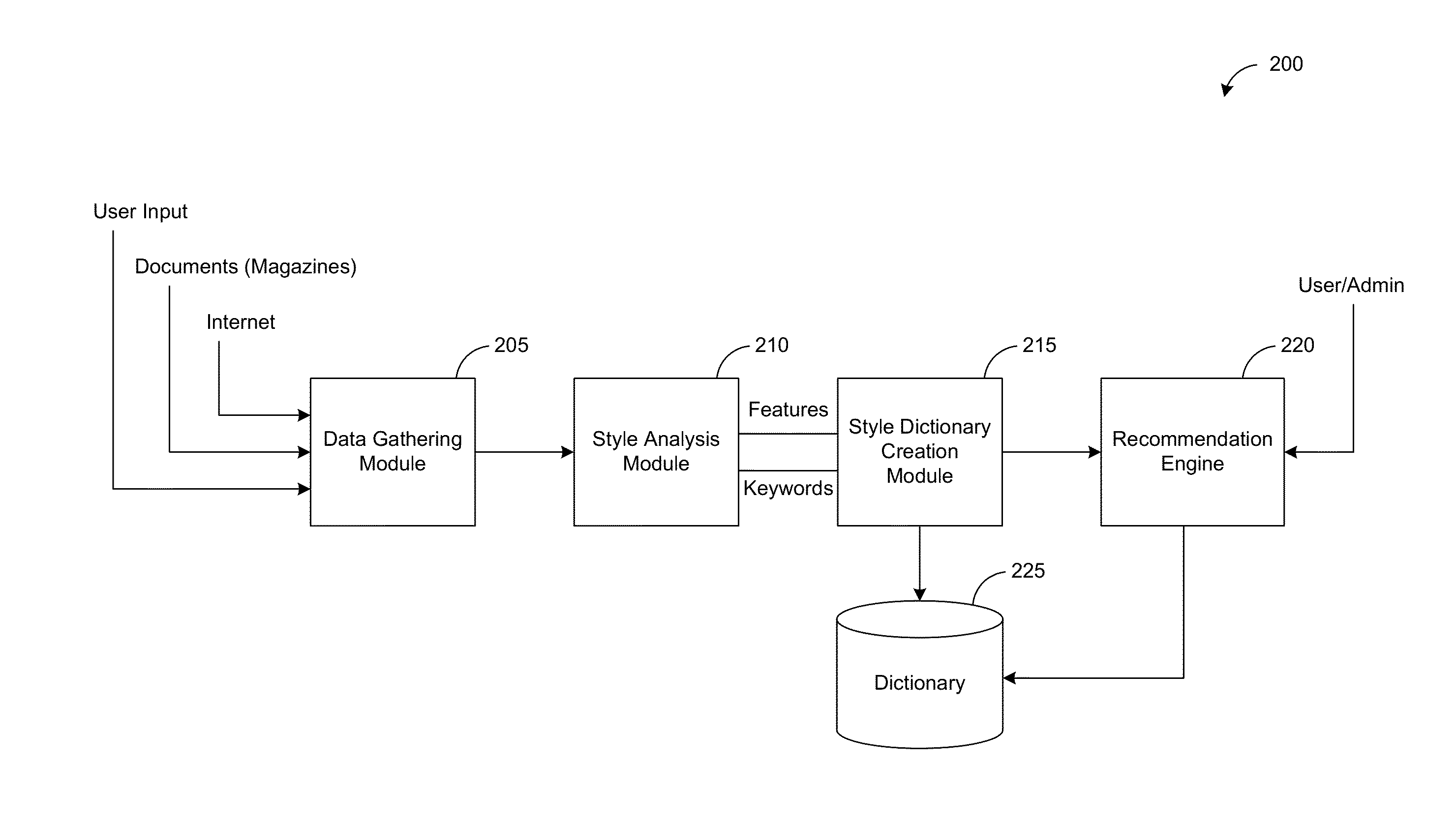

Discovering and presenting decor harmonized with a decor style

Status: Active | US20140304265A1 | Tags: Facilitates analyzing user input; Easy to generate automatically; Digital data processing details; Relational databases; Computer graphics (images); Engineering

Technology is disclosed for discovering décor harmonized with a décor style ("the technology"). The décor includes décor items, e.g., artworks, paintings, pictures, artifacts, architectural pieces, arrangements of artworks, color selections, room décor, rugs, mats, furnishings, household items, fashion, clothes, jewelry, car interiors, and garden arrangements. The technology analyzes user input to identify a décor style from a décor style dictionary, obtains décor that harmonizes with that style, and presents a representation of the décor to the user. The décor style dictionary contains décor styles generated by analyzing content, including images and descriptions of décor, from a plurality of sources. Décor styles can be based on a number of concepts, including a theme of the décor, a color or color palette, the mood of the person, a fashion era, or a type of architecture. The technology presents the discovered décor using computer-generated imagery techniques.

Owner:WALMART APOLLO LLC

Reflex sight for weapon

Owner:SIROCCO VISION

System and process for digital generation, placement, animation and display of feathers and other surface-attached geometry for computer generated imagery

Status: Inactive | US7427991B2 | Tags: Character and pattern recognition; Cathode-ray tube indicators; Animation; Computer-generated imagery

A system and process for digitally representing a plurality of surface-attached geometric objects on a model. The system and process comprise generating a plurality of particles and placing them on a surface of the model. A first plurality of curves is generated and placed at locations on the model, and characteristics are defined for these curves. A second plurality of curves is interpolated from the first plurality of curves at the locations of the particles. Finally, a plurality of surface-attached geometric objects is generated that replaces all of the particles and the second plurality of curves.

Owner:SONY TOKYO JAPAN & SONY PICTURES ENTERTAINMENT

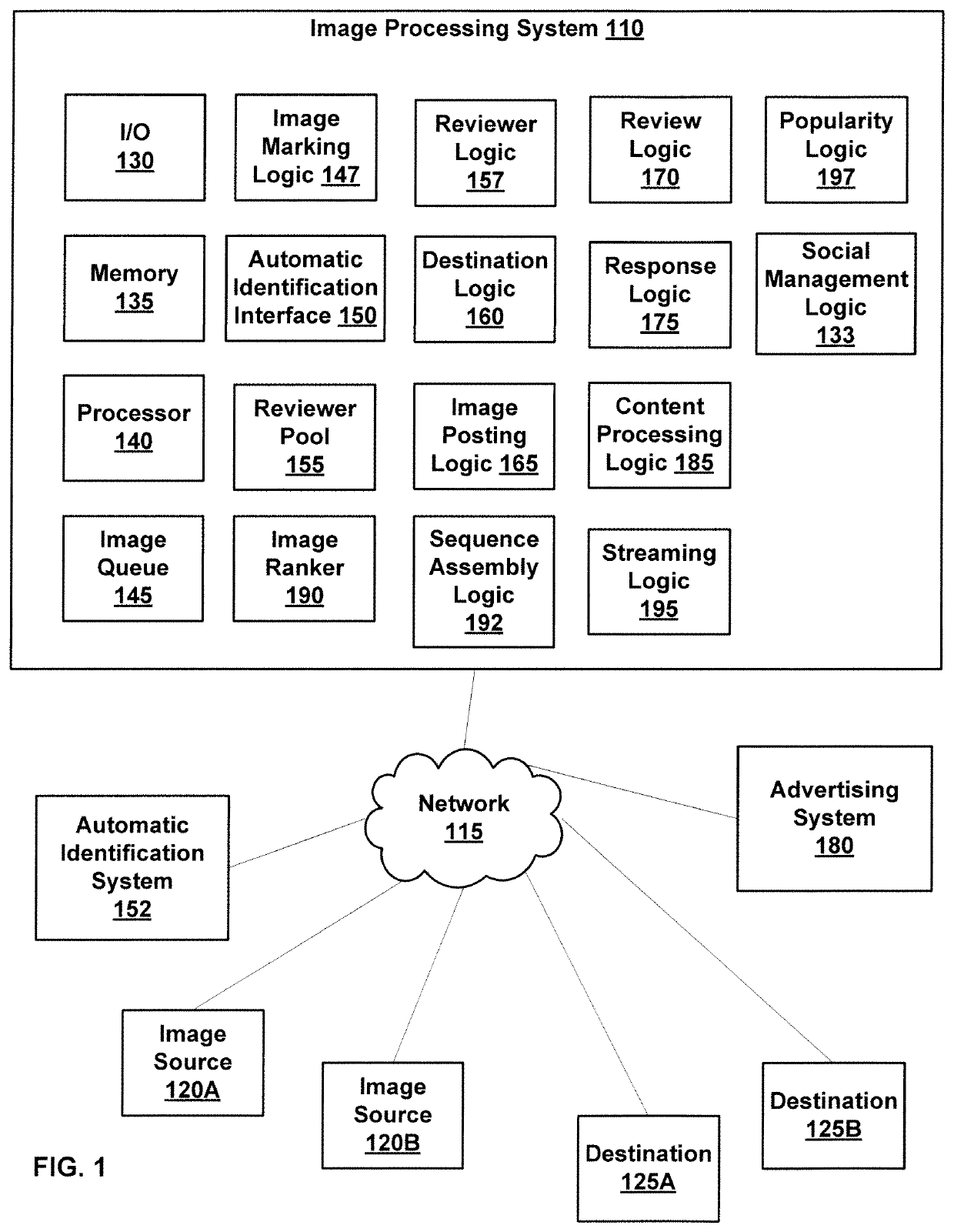

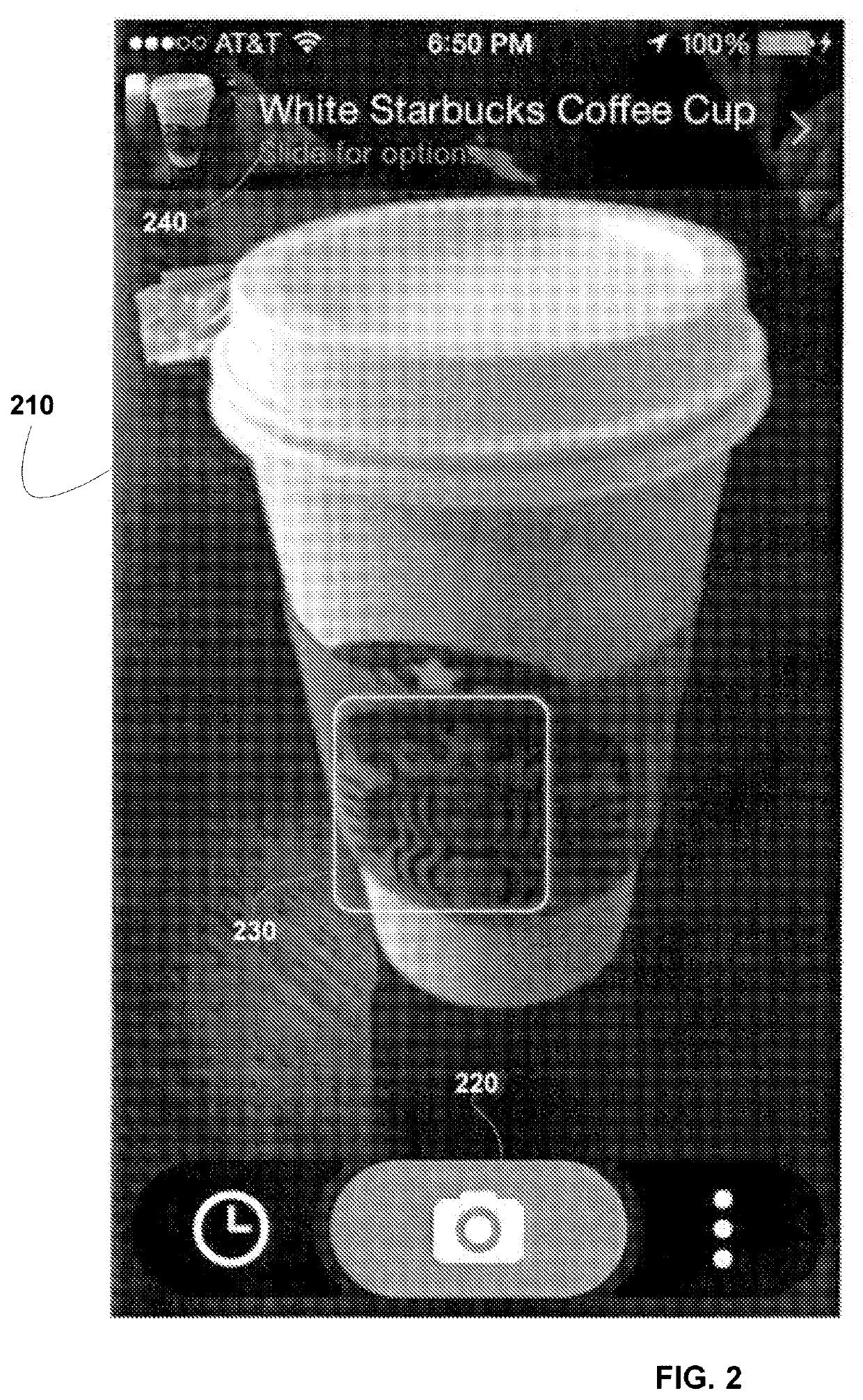

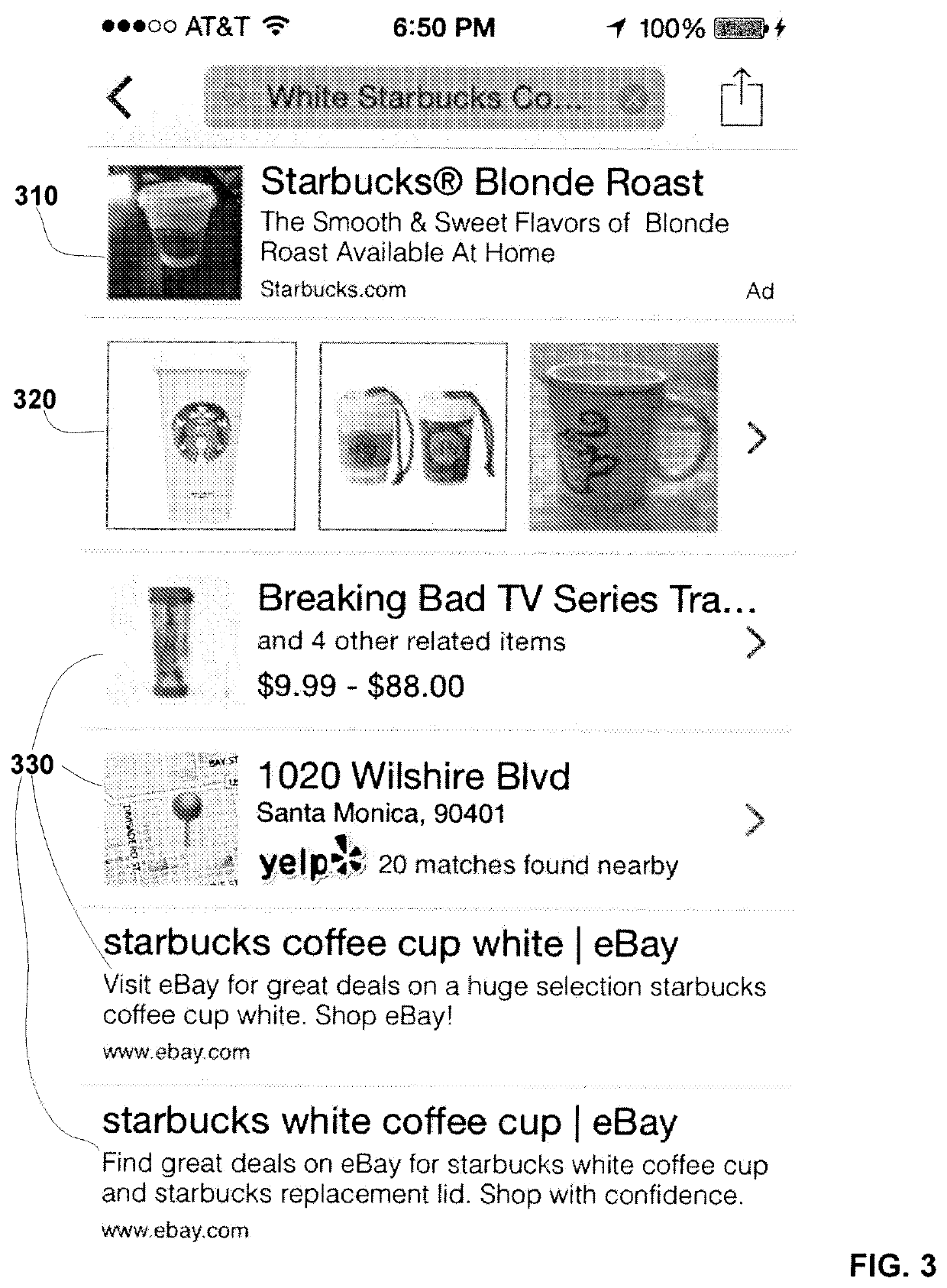

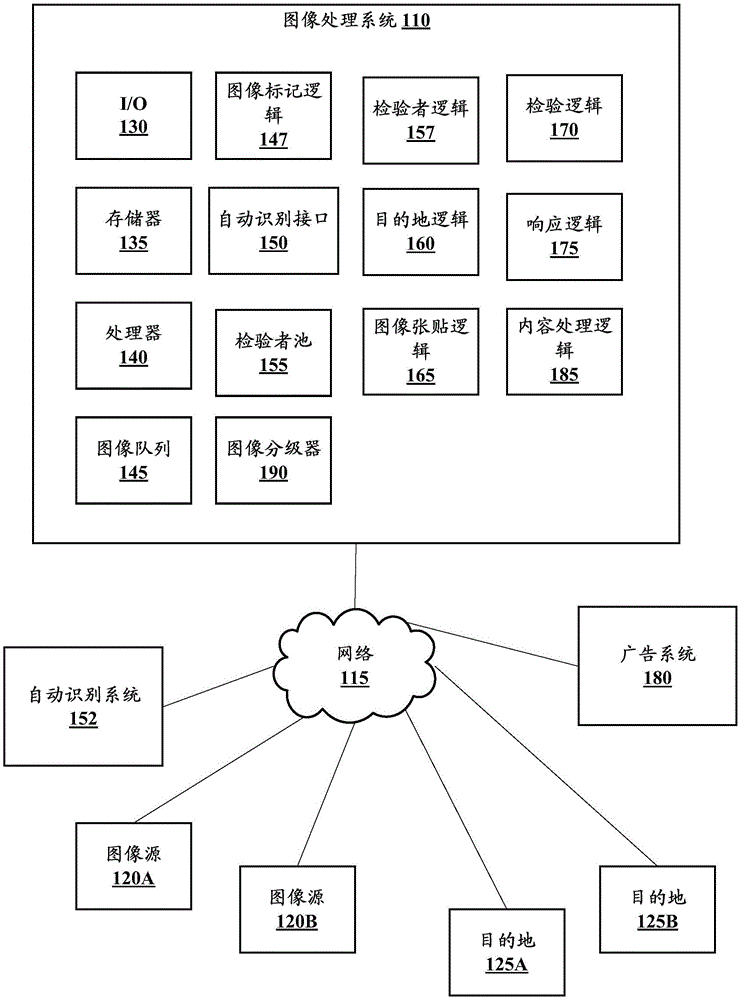

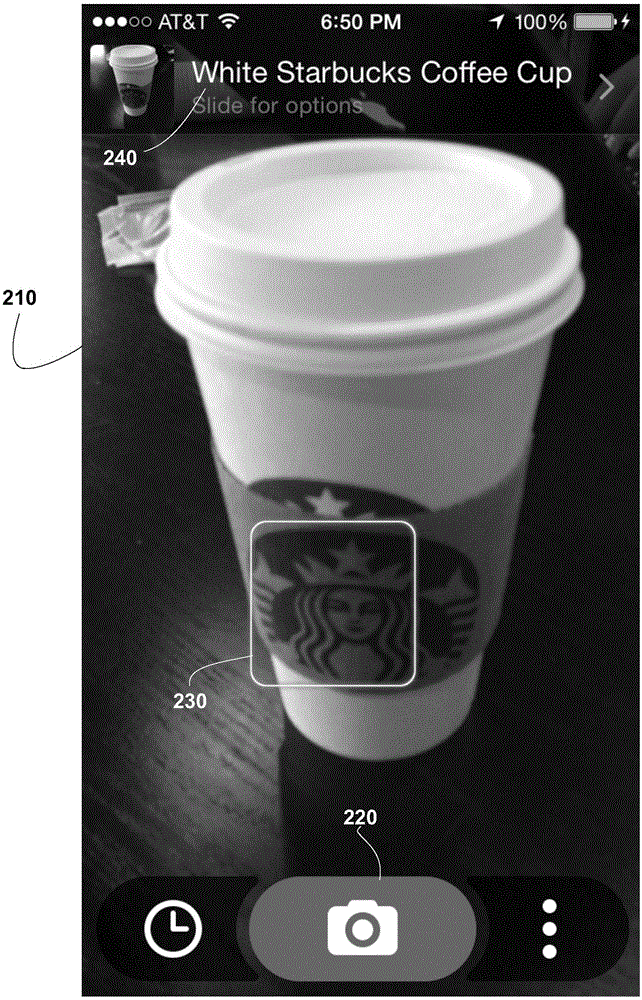

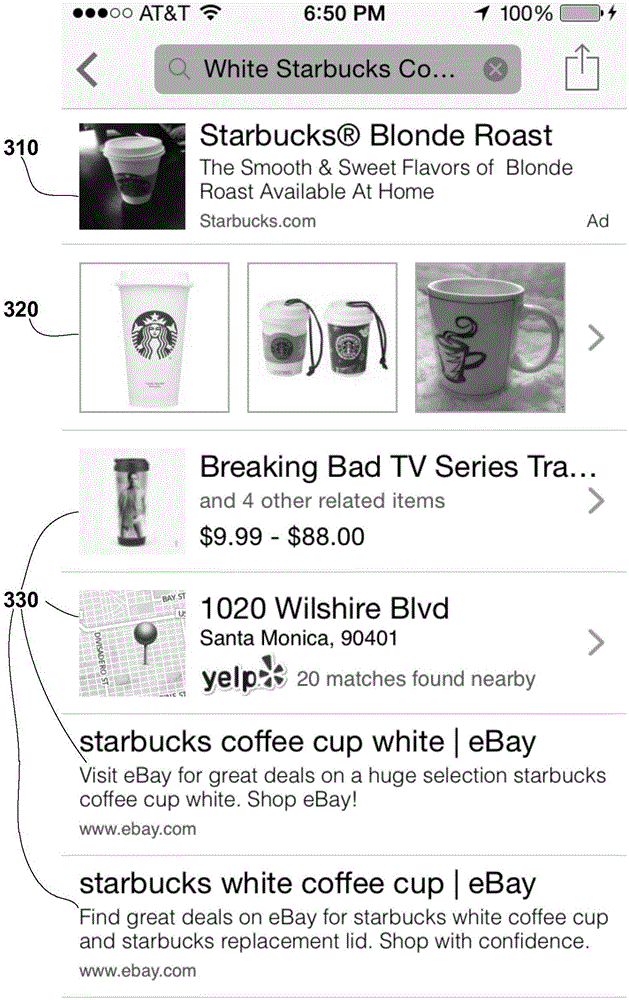

Client Based Image Analysis

Status: Pending | US20220207274A1 | Tags: Low cost; Image be low; Character and pattern recognition; Metadata still image retrieval; Internet searching; Imaging analysis

An image recognition approach employs both computer generated and manual image reviews to generate image tags characterizing an image. The computer generated and manual image reviews can be performed sequentially or in parallel. The generated image tags may be provided to a requester in real-time, be used to select an advertisement, and / or be used as the basis of an internet search. In some embodiments generated image tags are used as a basis for an upgraded image review. A confidence of a computer generated image review may be used to determine whether or not to perform a manual image review. Images and their associated image tags are optionally added to an image sequence.

Owner:CLOUDSIGHT INC
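The confidence-gated flow in the abstract can be sketched as a small routing function. The reviewer callables and the 0.8 threshold are hypothetical stand-ins; passing the machine tags to the human reviewer is an assumption loosely modelled on the "upgraded image review" mentioned above.

```python
def tag_image(image_id, machine_review, manual_review, threshold=0.8):
    """Return (tags, source), falling back to a manual review on low confidence.

    machine_review(image_id) -> (tags, confidence in [0, 1])   (hypothetical)
    manual_review(image_id, hint_tags) -> tags                 (hypothetical)
    """
    tags, confidence = machine_review(image_id)
    if confidence >= threshold:
        return tags, "machine"
    # Low confidence: hand the image to a human reviewer, passing the machine
    # tags along as a starting point.
    return manual_review(image_id, tags), "manual"
```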

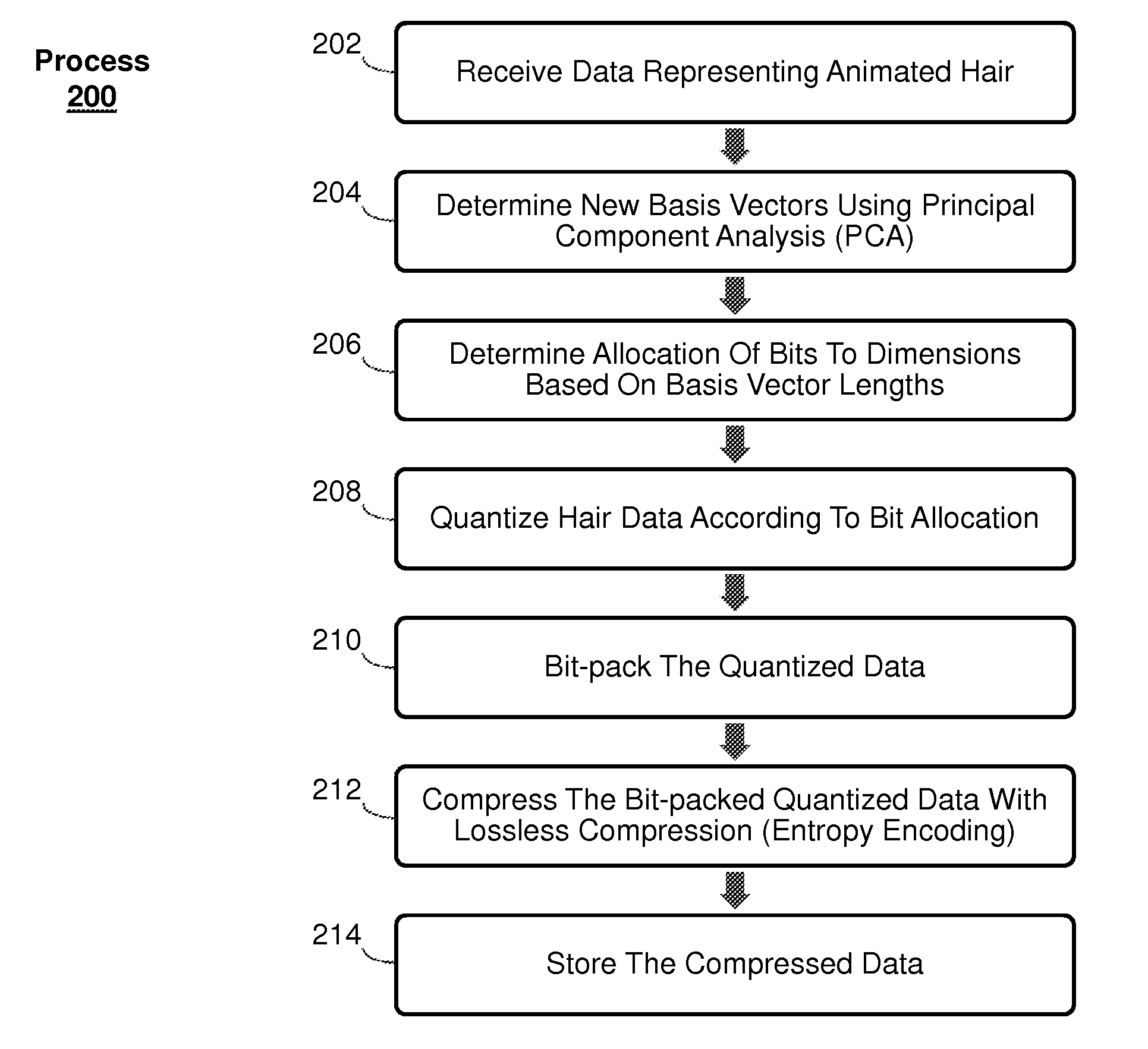

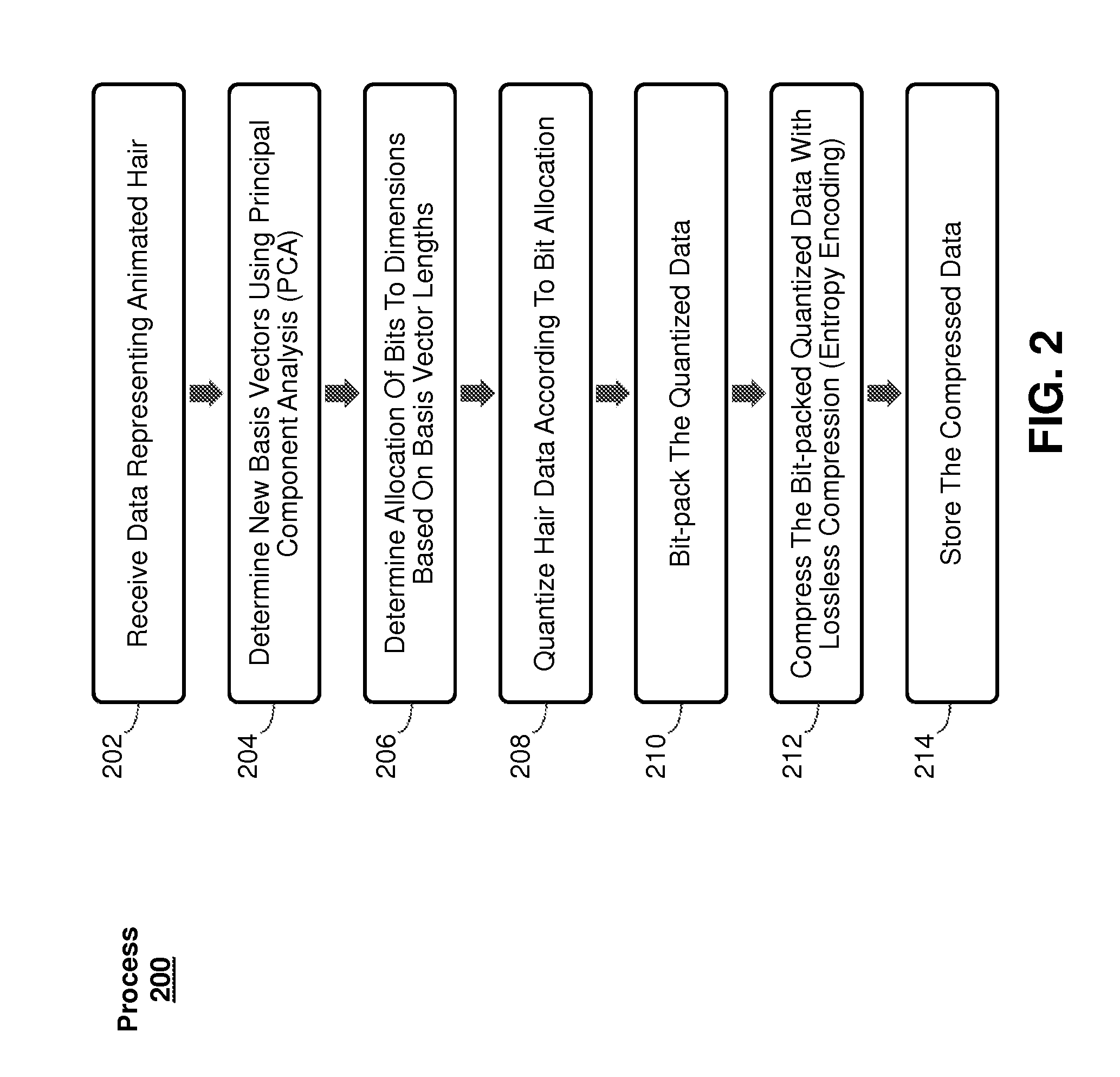

Compressing data representing computer animated hair

Data representing animated hair in a computer generated imagery (CGI) scene may be compressed by treating hair data as arrays of parameters. Hair data parameters may include control vertices, hair color, hair radius, and the like. A principal component analysis (PCA) may be performed on the arrays of hair data. PCA may yield new basis vectors, varying in length, with the largest basis vector corresponding to a new dimension with the largest variance in hair data. The hair data may be quantized based on the varying lengths of new basis vectors. The number of bits allocated for quantizing each new dimension corresponding to each new basis vector may be determined based on the relative lengths of new basis vectors, with more bits allocated to dimensions corresponding to longer basis vectors. The quantized hair data may be bit-packed and then compressed using lossless entropy encoding.

Owner:DREAMWORKS ANIMATION LLC
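A minimal Python sketch of the bit-allocation and quantization steps, assuming the PCA has already been run (for example with a linear-algebra library) and that its basis-vector lengths are available. Allocating bits in proportion to length is one reading of "more bits to longer basis vectors", not necessarily the patented rule; bit-packing and entropy coding are omitted.

```python
import math


def allocate_bits(lengths, total_bits):
    """Split a bit budget across PCA dimensions in proportion to basis-vector length.

    Largest-remainder rounding keeps the allocations summing to total_bits.
    """
    total_len = sum(lengths)
    shares = [total_bits * length / total_len for length in lengths]
    bits = [math.floor(s) for s in shares]
    leftover = total_bits - sum(bits)
    by_remainder = sorted(range(len(shares)), key=lambda i: shares[i] - bits[i], reverse=True)
    for i in by_remainder[:leftover]:
        bits[i] += 1
    return bits


def quantize(values, n_bits):
    """Uniformly quantize values onto 2**n_bits levels; returns (codes, offset, step)."""
    lo, hi = min(values), max(values)
    levels = (1 << n_bits) - 1
    if levels == 0 or hi == lo:
        # No bits (or no spread): every value collapses to the midpoint.
        return [0] * len(values), (lo + hi) / 2, 0.0
    step = (hi - lo) / levels
    return [round((v - lo) / step) for v in values], lo, step


def dequantize(codes, offset, step):
    """Reconstruct approximate values from quantization codes."""
    return [offset + c * step for c in codes]
```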

Image tag add system

Status: Inactive | CN105046630A | Tags: Image data processing details; Special data processing applications; Internet searching; Radiology

Owner:CLOUDSIGHT INC

© 2025 PatSnap. All rights reserved.