32 results about "UV mapping" patented technology

UV mapping is the 3D modelling process of projecting a 2D image to a 3D model's surface for texture mapping. The letters "U" and "V" denote the axes of the 2D texture because "X", "Y" and "Z" are already used to denote the axes of the 3D object in model space.
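
In practice, "projecting" a 2D image onto the surface means that every surface point carries a (u, v) coordinate, and the renderer looks up the texture value at that coordinate. The following is a minimal sketch of one common lookup, bilinear filtering. It assumes a few things for illustration only: a greyscale texture stored as a list of rows, clamp-to-edge behaviour, and v running from the top row downward. Many graphics APIs instead place v = 0 at the bottom edge.

```python
import math


def sample_bilinear(texture, u, v):
    """Return the bilinearly filtered value of `texture` at (u, v).

    texture: list of rows of floats (a greyscale image), row 0 at the top.
    u runs left to right and v top to bottom, both nominally in [0, 1].
    Coordinates outside [0, 1] are clamped ("clamp to edge").
    """
    height, width = len(texture), len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Continuous texel coordinates; texel centres sit at integer + 0.5.
    x = u * width - 0.5
    y = v * height - 0.5
    x0 = min(max(math.floor(x), 0), width - 1)
    y0 = min(max(math.floor(y), 0), height - 1)
    x1 = min(x0 + 1, width - 1)
    y1 = min(y0 + 1, height - 1)
    # Fractional weights, clamped so edge texels are not extrapolated.
    fx = min(max(x - x0, 0.0), 1.0)
    fy = min(max(y - y0, 0.0), 1.0)
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


tex = [[0.0, 1.0],
       [2.0, 3.0]]
print(sample_bilinear(tex, 0.5, 0.5))  # 1.5, the average of all four texels
```

Sampling at a texel centre, such as (0.25, 0.25), returns that texel's value unchanged. Points between centres are blended from the four nearest texels.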

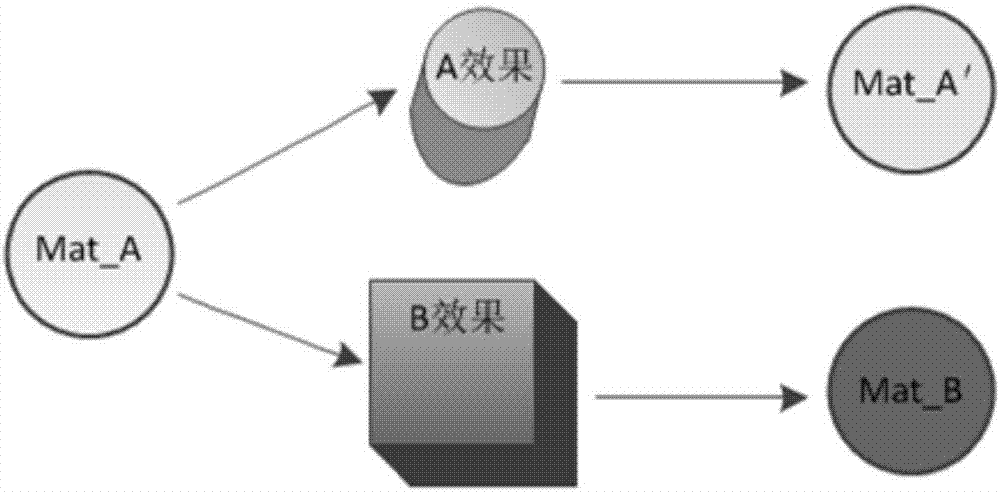

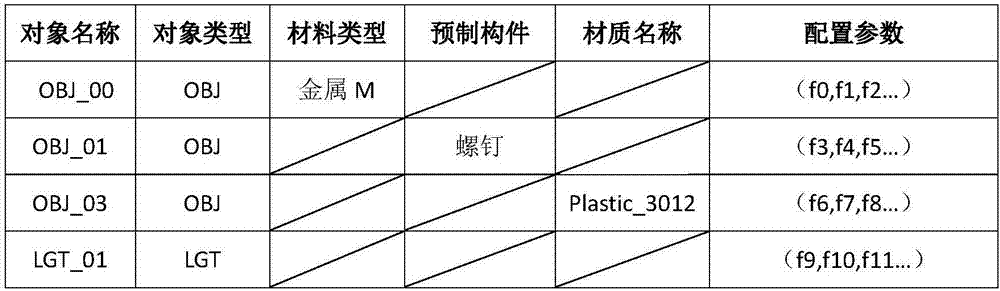

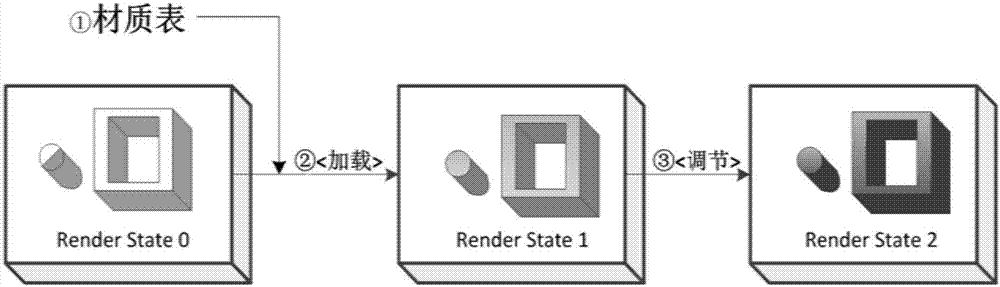

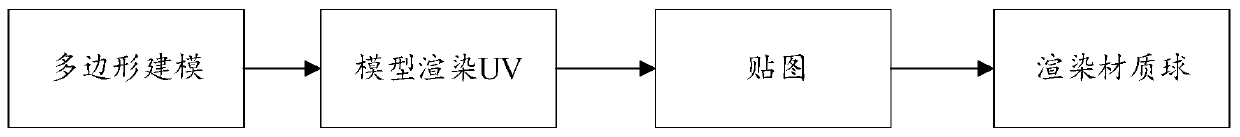

Fast rendering method for virtual scene and model

Status: Active | Publication: CN107103638A | Keywords: Material parameters can be modified; Improve efficiency; Image rendering; 3D-image rendering; UV mapping; 3D modeling

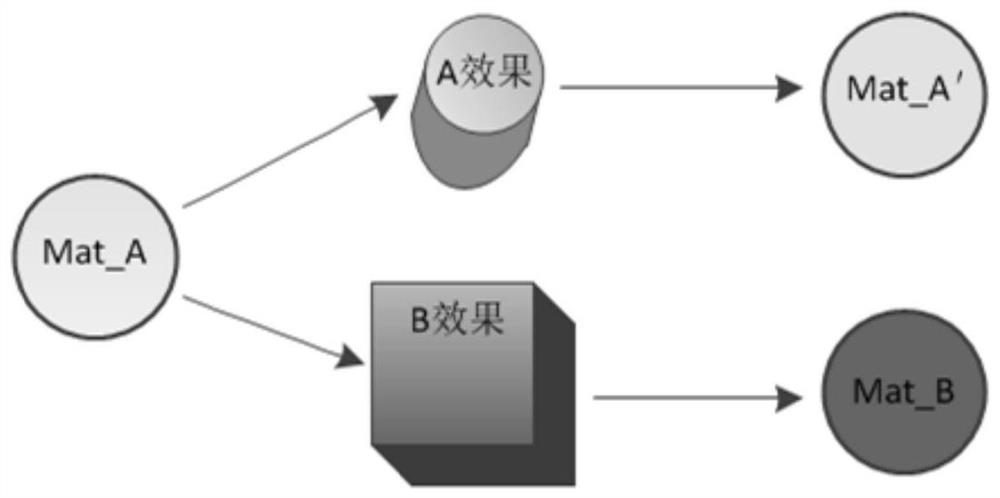

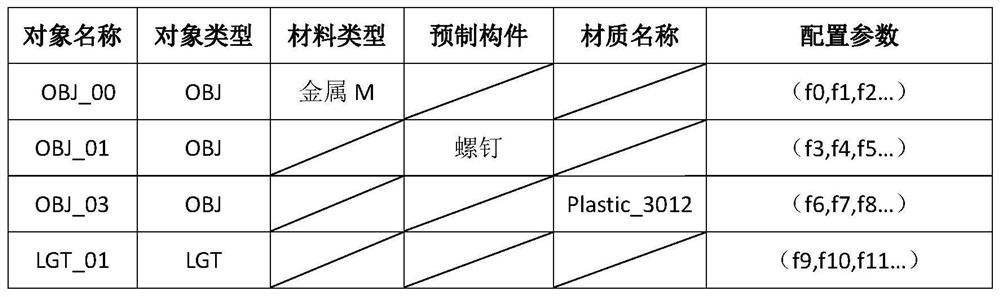

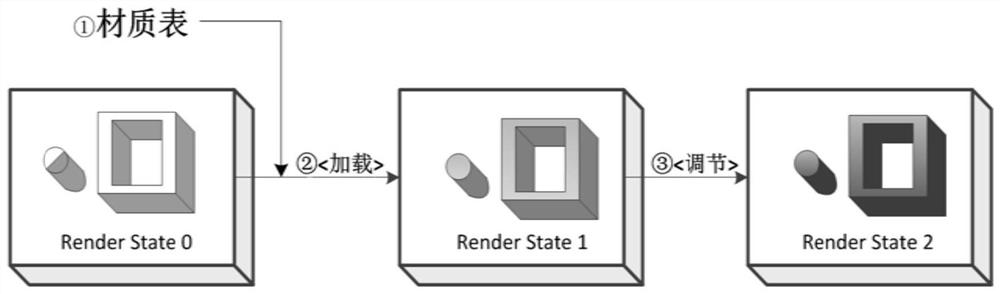

The invention discloses a fast rendering method and device for a virtual scene and a model. The method first obtains a rendering request for the virtual scene and the model to be rendered, together with a standard material library. According to the rendering request, it creates a read/write file that records the correspondence among scene parameters, models and materials. After loading, it selects a material for the model from the pre-established standard material library. It then sets and adjusts the scene parameters according to the rendering request, renders the model with the selected material, and adjusts the material parameters until the rendering satisfies the request. When a single model corresponds to several materials, those materials are UV-mapped directly onto the surface of the three-dimensional model. Finally, a highlight effect and its corresponding material are generated from a hand-drawn trajectory according to the rendering request, and the model's highlight effect is rendered. The method greatly improves the efficiency of rendering virtual scenes and models. It also offers openness, automation, tool integration, light weight and sustainability.

Owner:HANGZHOU VERYENGINE TECH CO LTD

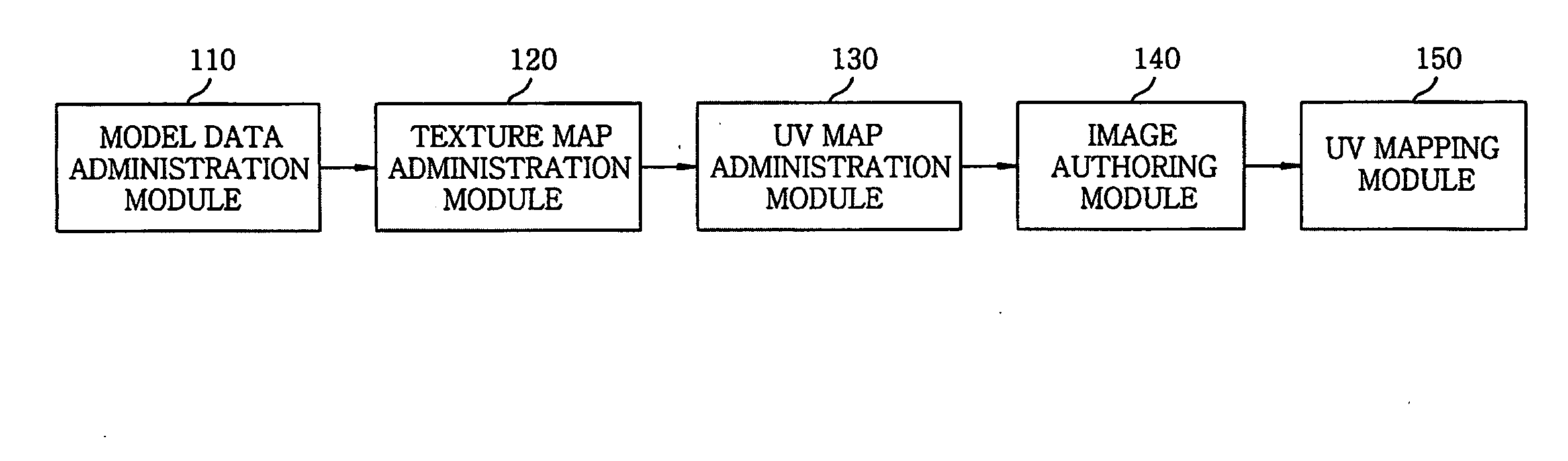

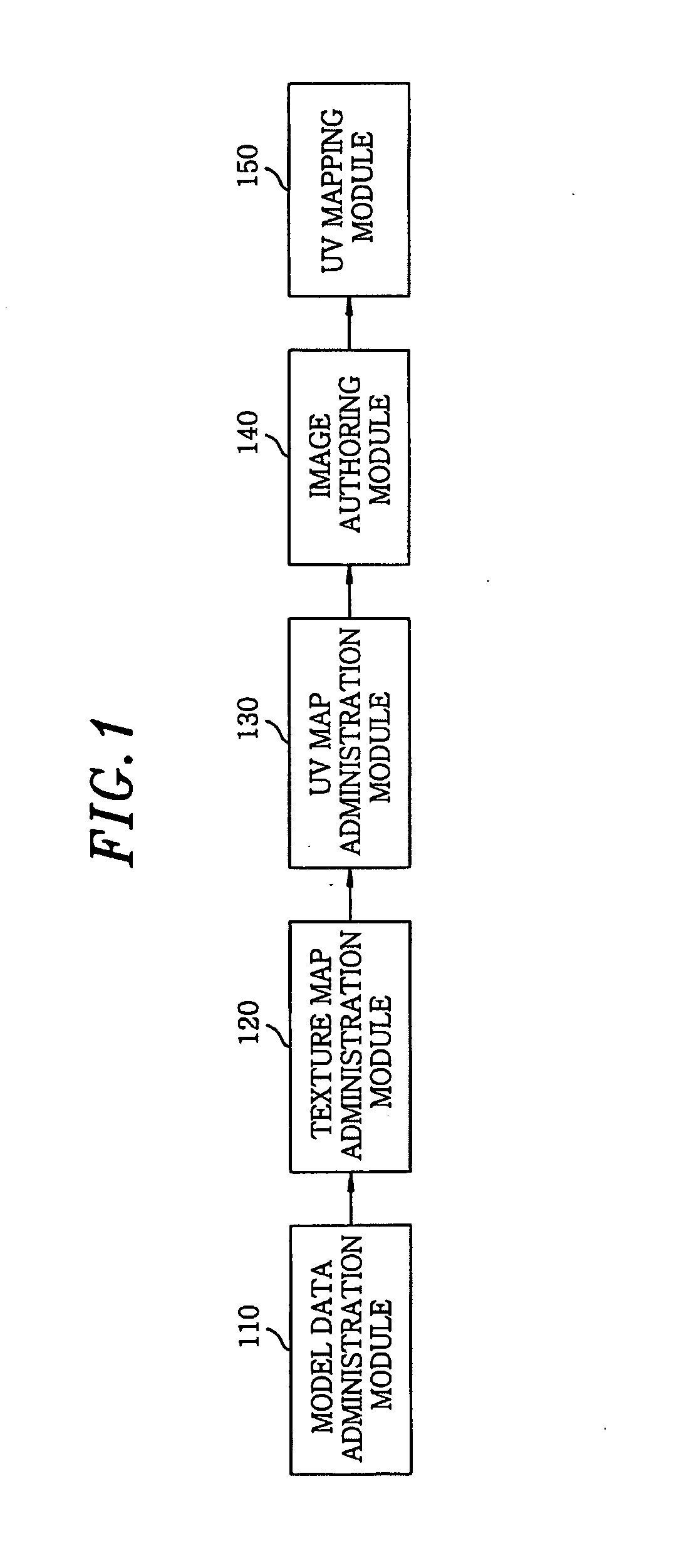

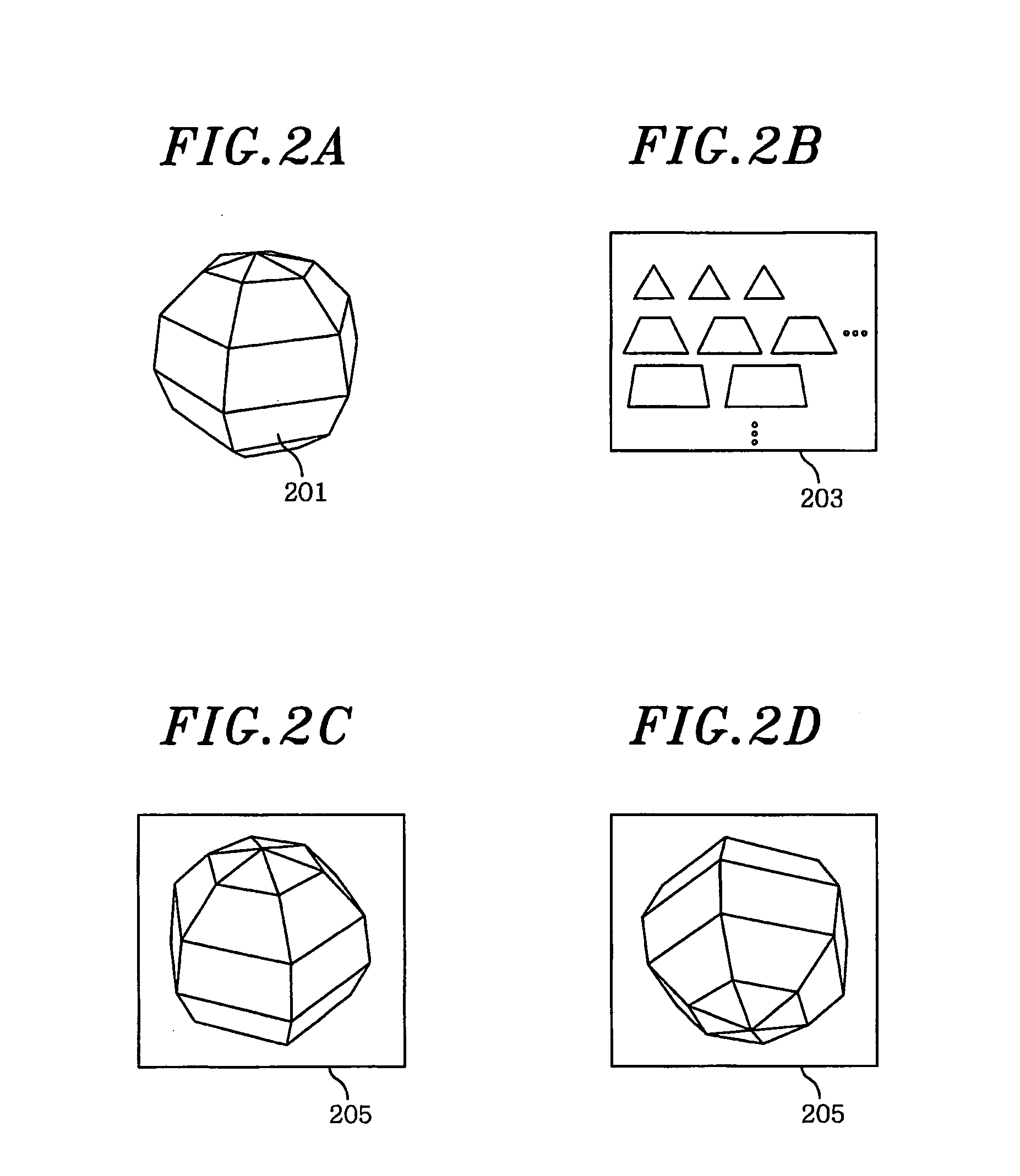

Method and system for texturing of 3D model in 2d environment

Status: Inactive | Publication: US20090153577A1 | Keywords: Unnecessary time; Eliminate partial distortion; Cathode-ray tube indicators; 3D-image rendering; Texture atlas; Projection plane

Owner:ELECTRONICS & TELECOMM RES INST

Method and System for the 3D Design and Calibration of 2D Substrates

A system, program product, and method including the following:

- scanning an object or entity with a three-dimensional (3D) scanning module of a computing system;
- providing at least one of a 3D image model and a 3D mesh model from the scanned, rendered, created, or uploaded object or entity;
- retopologizing at least one of the 3D image model and the 3D mesh model;
- applying a UV mapping process to at least one of the retopologized 3D image model and 3D mesh model, where "U" and "V" denote the two-dimensional axes of a UVW coordinate space; and
- projecting a two-dimensional (2D) image onto at least one of the 3D image model and the 3D mesh model, to visualize or determine whether the 2D image has the correct size relative to that model.

Owner:PAULSON ETHAN BRYCE +1

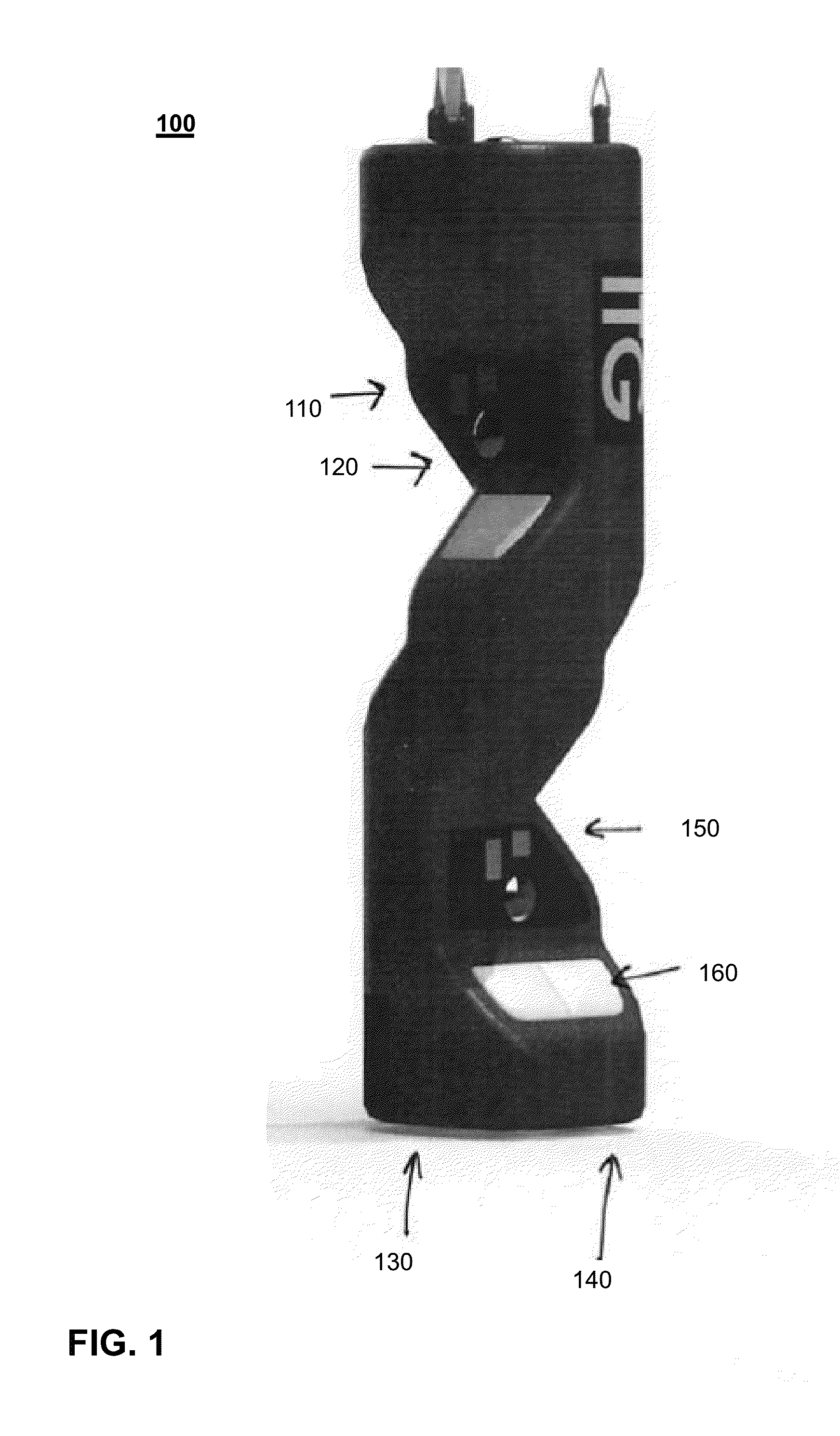

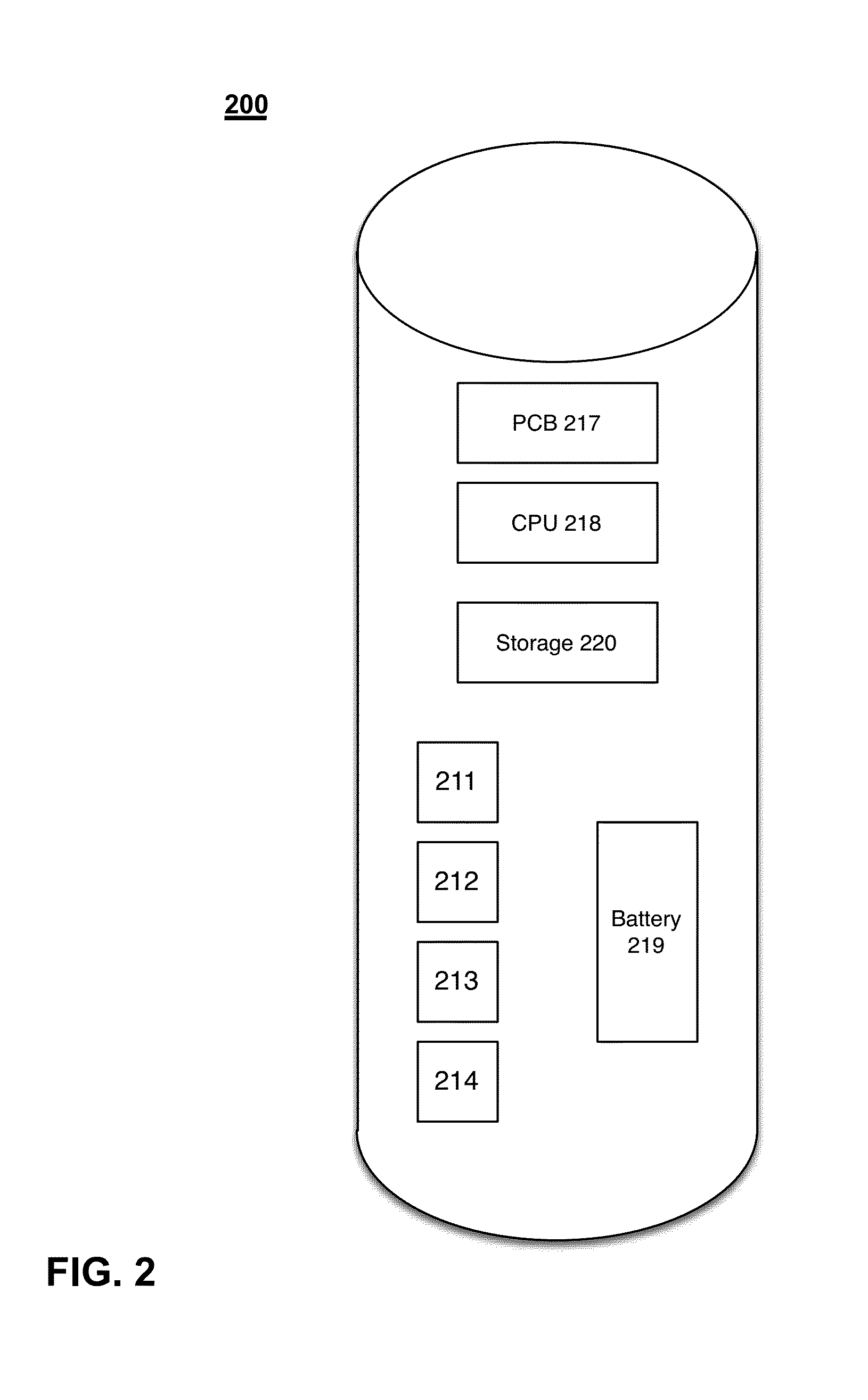

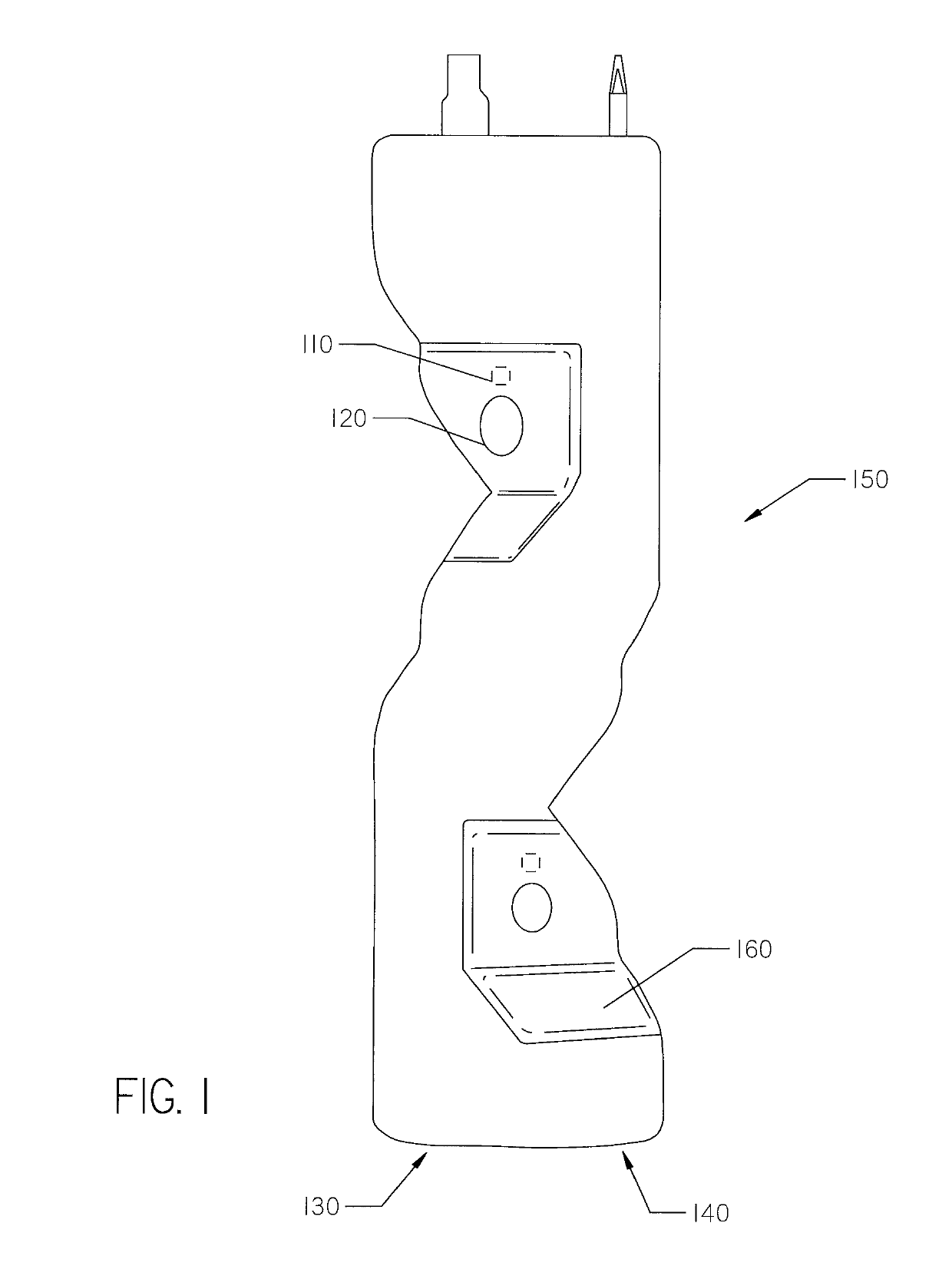

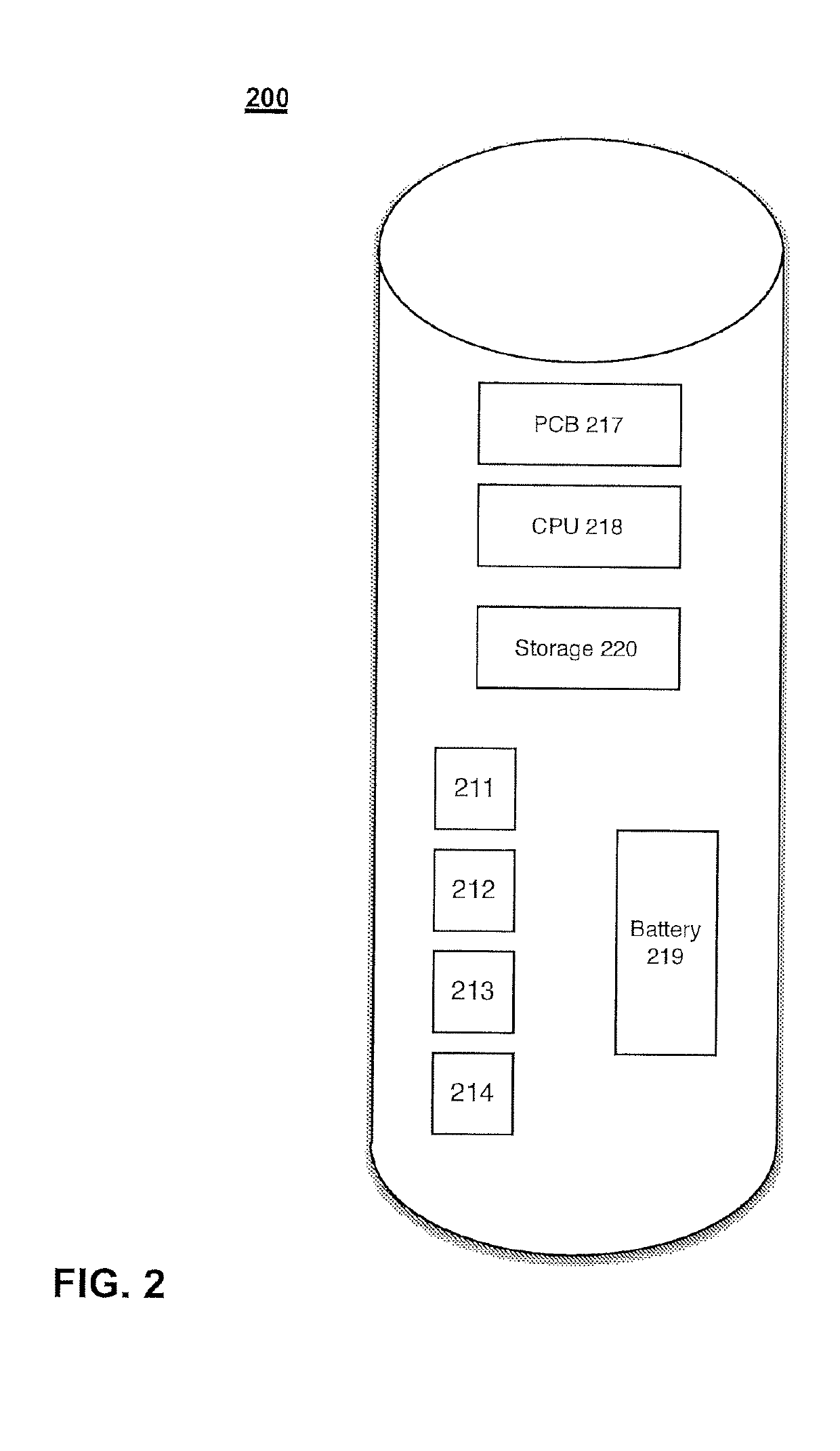

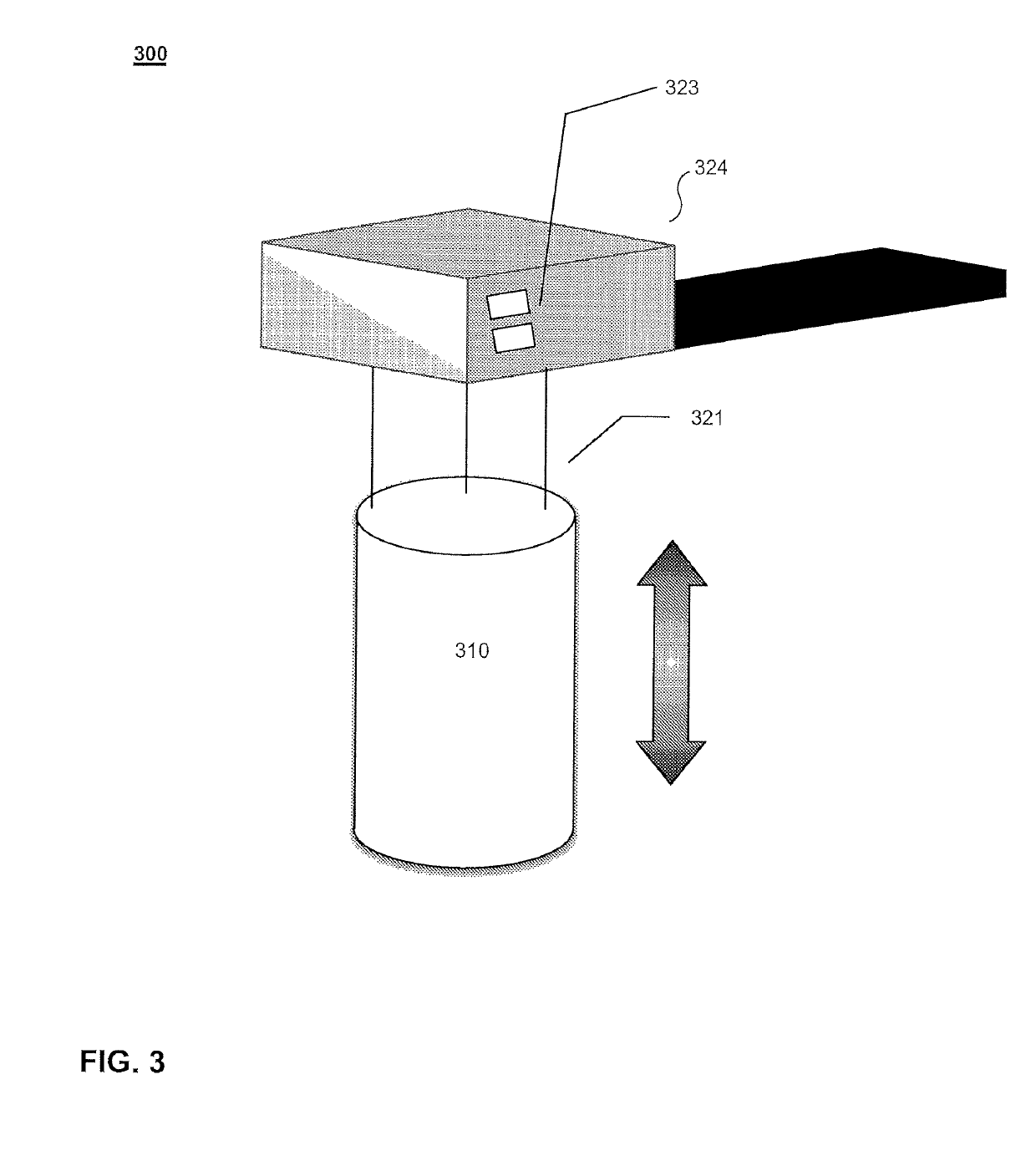

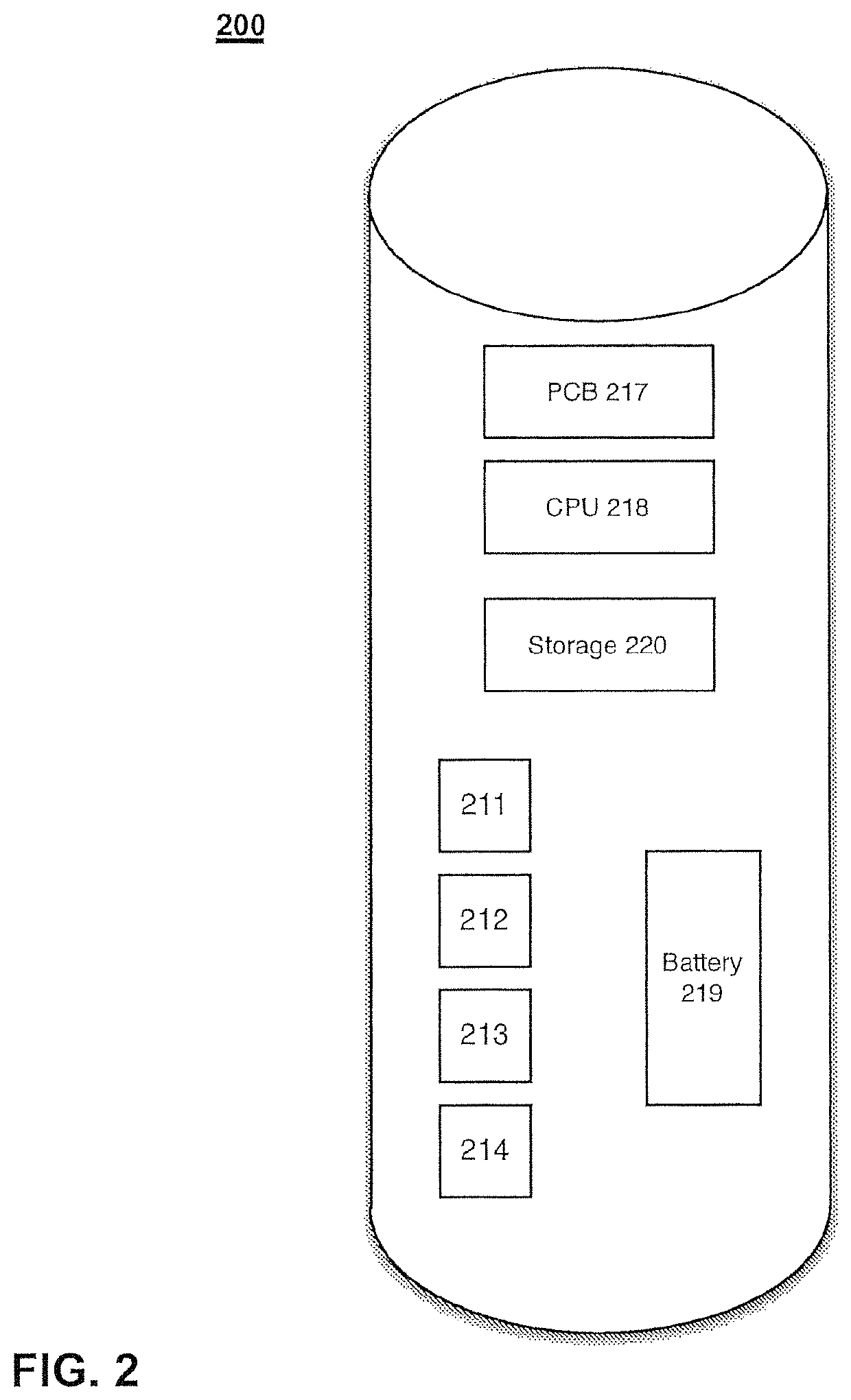

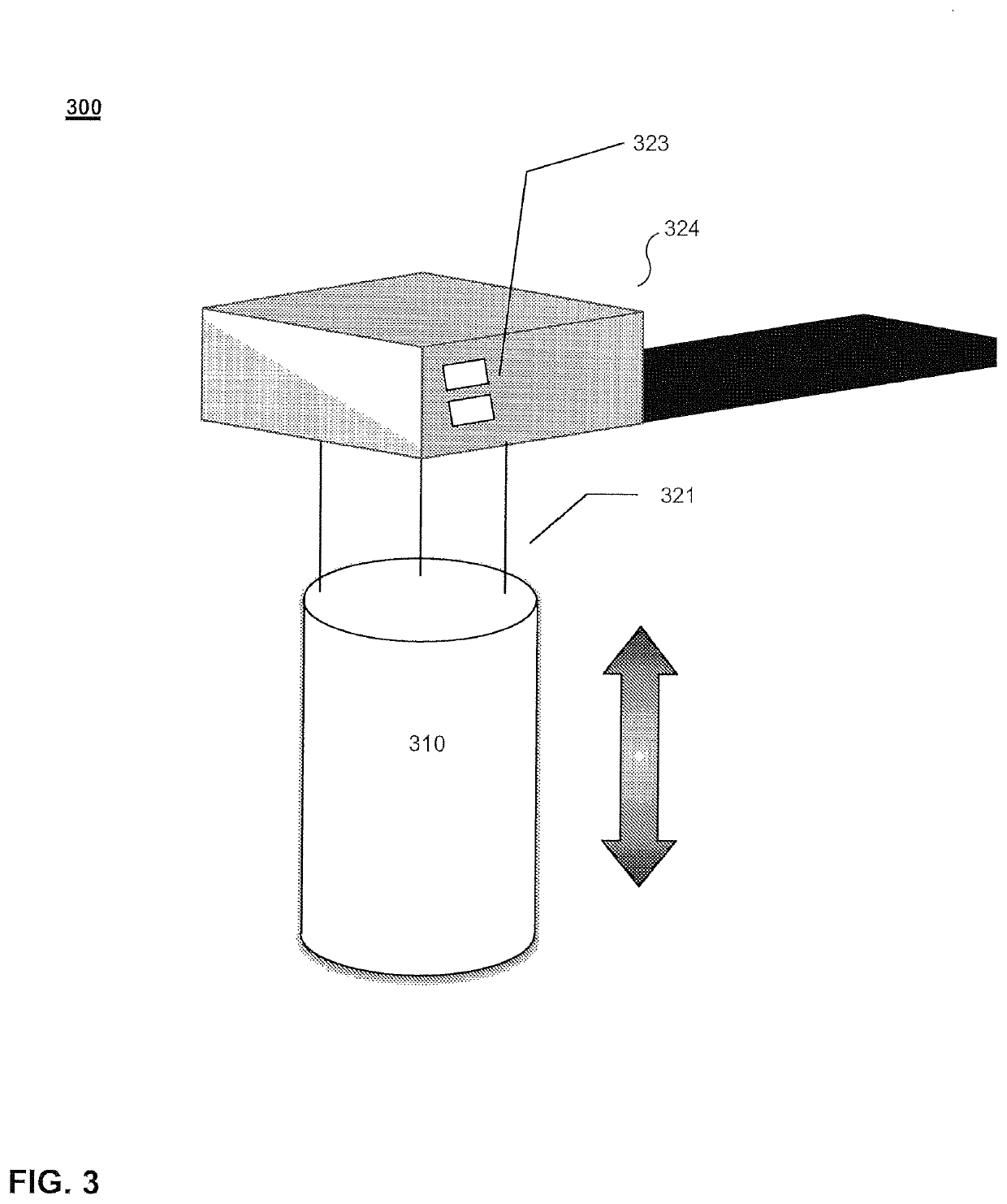

3D asset inspection

Status: Inactive | Publication: US20160249021A1 | Keywords: Facilitating subsequent ability; Television system details; Color television details; 3d sensor; Odometry

Systems and methods for physical asset inspection are provided. According to one embodiment, a probe is positioned to multiple data capture positions with reference to a physical asset. For each position: odometry data is obtained from an encoder and / or an IMU; a 2D image is captured by a camera; a 3D sensor data frame is captured by a 3D sensor, having a view plane overlapping that of the camera; the odometry data, the 2D image and the 3D sensor data frame are linked and associated with a physical point in real-world space based on the odometry data; and switching between 2D and 3D views within the collected data is facilitated by forming a set of points containing both 2D and 3D data by performing UV mapping based on a known positioning of the camera relative to the 3D sensor.

Owner:CHARLES MACHINE WORKS
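
The last step of this abstract depends on knowing where the camera sits relative to the 3D sensor. That known pose is what lets each 3D point be linked to a (u, v) position in the 2D image. Below is a minimal sketch of that idea. It assumes a pinhole camera with no lens distortion and a rigid sensor-to-camera transform (R, t). The function and parameter names are hypothetical and are not taken from the patent.

```python
def project_to_uv(point, rotation, translation, fx, fy, cx, cy, width, height):
    """Map a 3D-sensor point to normalised (u, v) in the camera image, or None.

    rotation (3x3 nested lists) and translation (length-3 list) are the assumed
    sensor-to-camera extrinsics; fx, fy, cx, cy are pinhole intrinsics in pixels.
    """
    # Express the point in the camera frame: p_cam = R @ p + t.
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # behind the camera, so it cannot appear in the 2D image
    # Pinhole projection to pixel coordinates.
    px = fx * x / z + cx
    py = fy * y / z + cy
    if not (0 <= px < width and 0 <= py < height):
        return None  # projects outside the image
    return px / width, py / height


def link_points(points, **camera):
    """Pair each 3D point with its (u, v) in the 2D image, dropping unseen points."""
    linked = []
    for p in points:
        uv = project_to_uv(p, **camera)
        if uv is not None:
            linked.append((p, uv))
    return linked
```

With identity extrinsics and the principal point at the image centre, a point straight ahead of the camera lands at (0.5, 0.5). In a real probe, (R, t) and the intrinsics would come from a calibration step.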

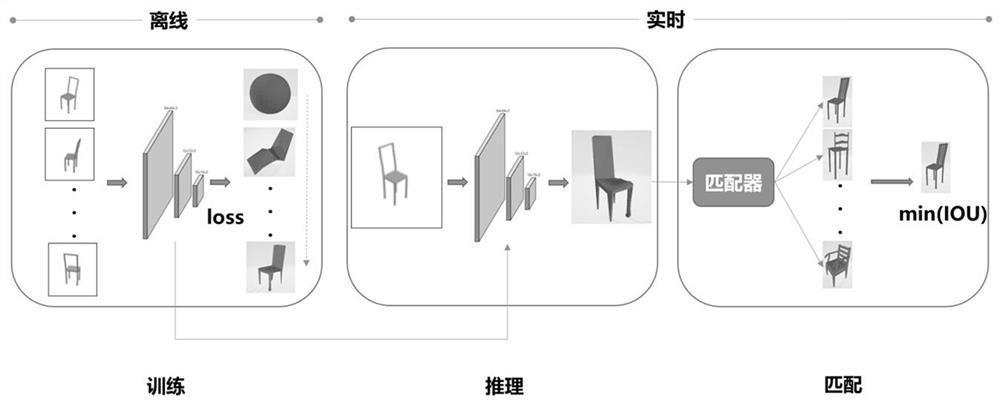

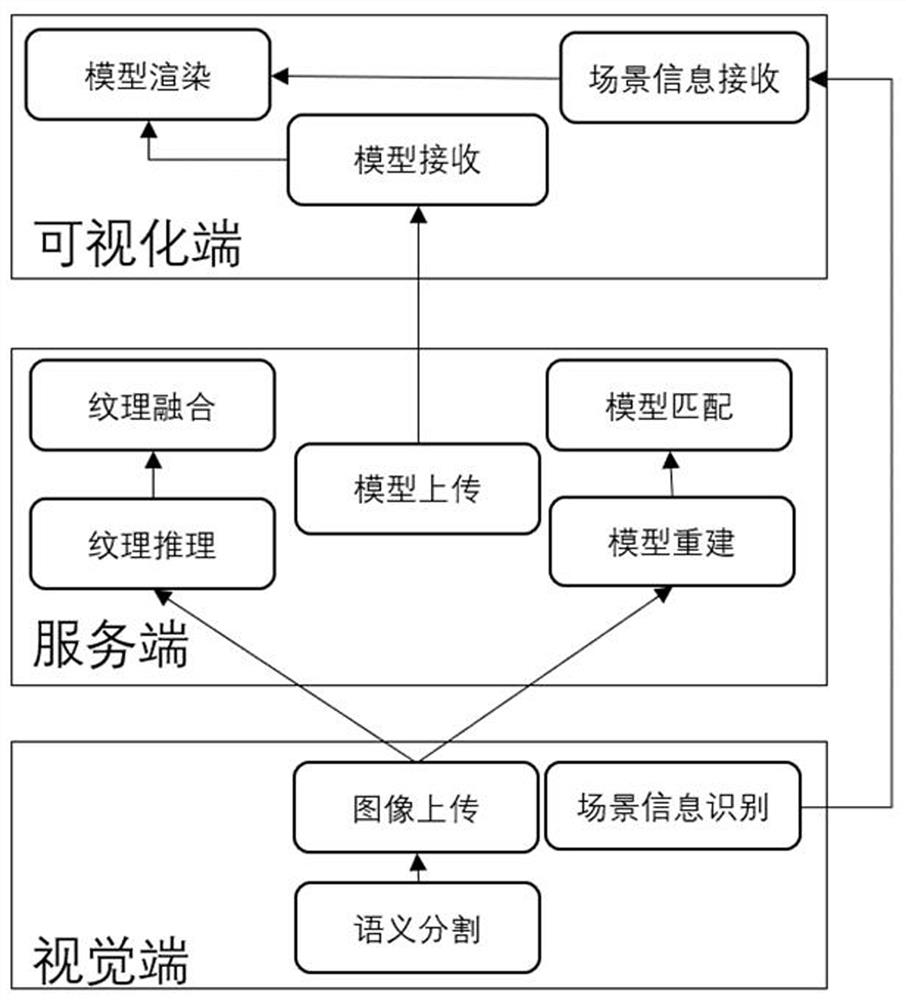

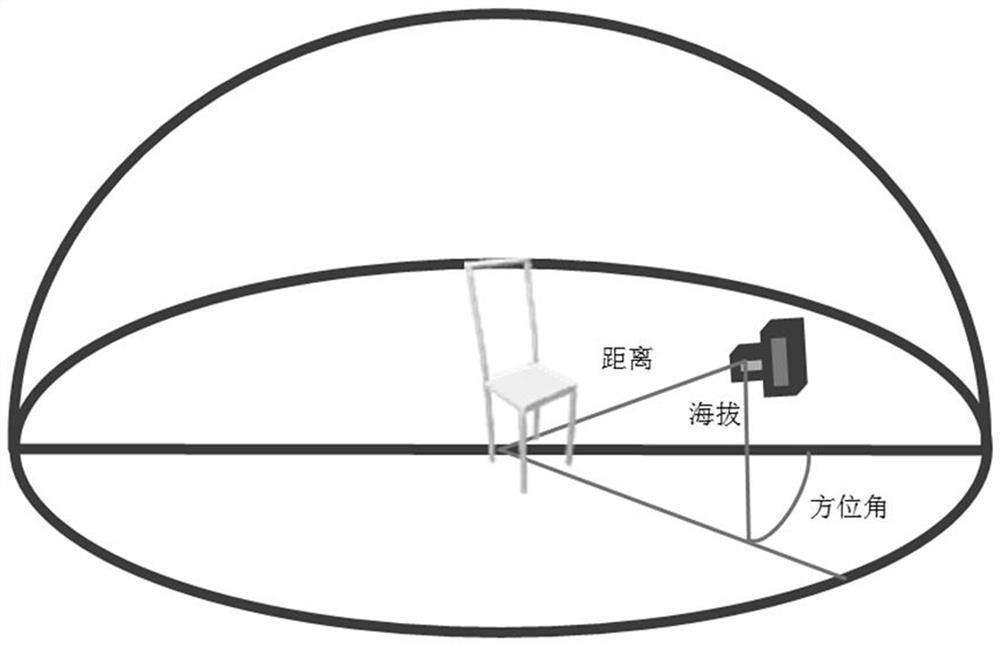

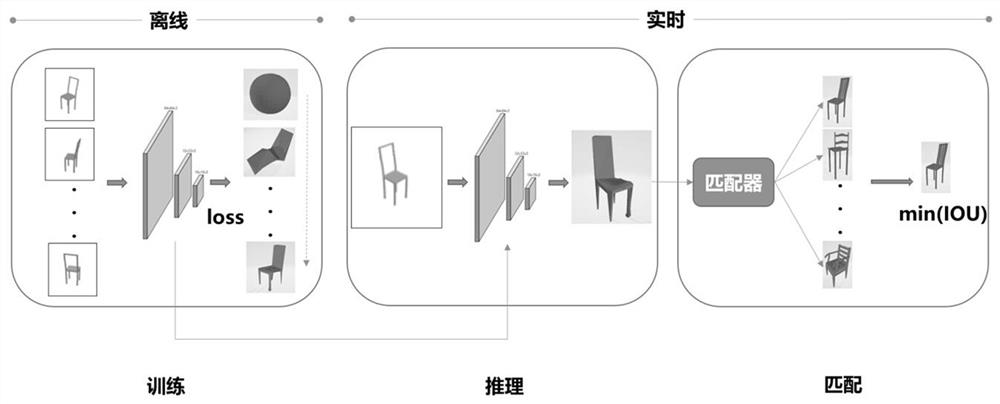

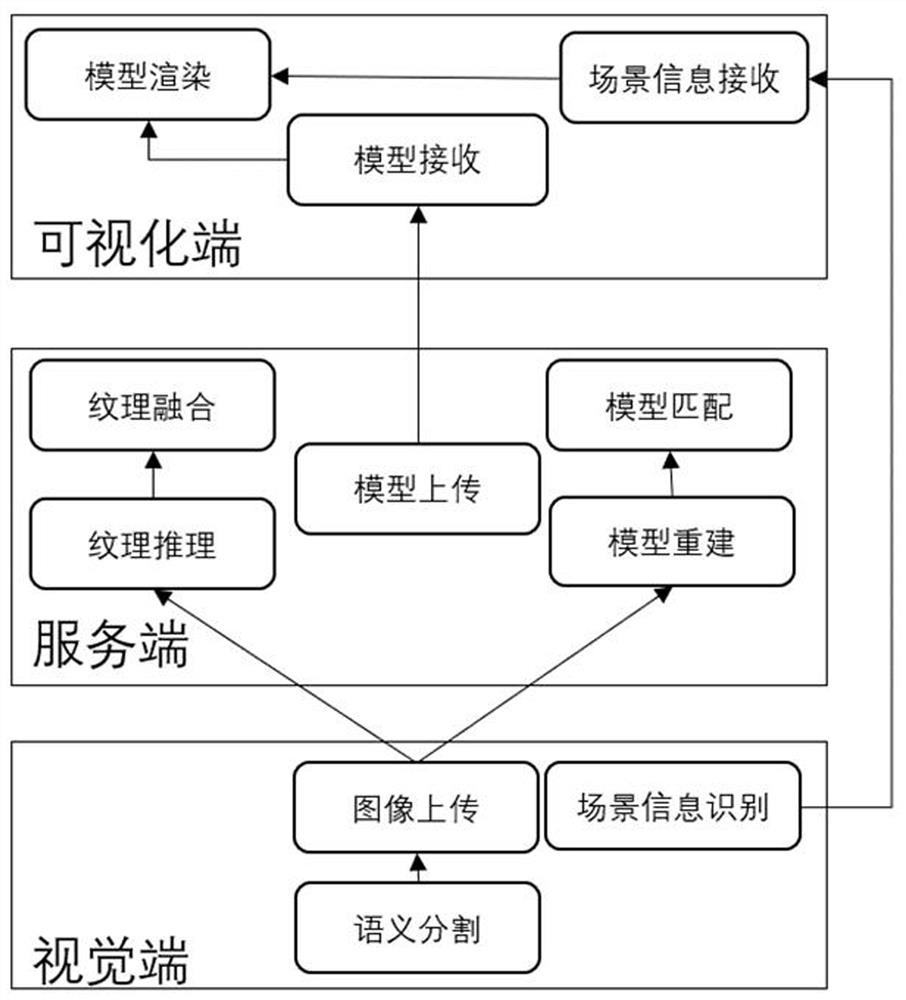

Digital twinning method and system based on 3D model matching

Status: Active | Publication: CN113822993A | Keywords: Automate the build; Quick conversion; Character and pattern recognition; 3D-image rendering; Pattern recognition; Silhouette

The invention discloses a digital twinning method and system based on 3D model matching. The method comprises four steps of model and texture training, model matching, texture fusion and scene placement. The model and texture training is an offline preprocessing step, and the model matching, the texture fusion and the scene placement are real-time processing steps. In the model training, a multi-viewpoint silhouette image is used to learn a three-dimensional structure, and polygon mesh three-dimensional reconstruction is realized. In the texture training, a texture flow is obtained through fixed UV mapping, and reasoning from 2D images to 3D textures is achieved. In the model matching, a reconstructed model and a model of a database are used for performing IOU iterative calculation, and the model with an IOU value closest to 1 is obtained as a matched specified model. In the texture fusion, the matched specified model is fused with the 3D texture flow subjected to texture reasoning to form a standard 3D model. In the scene placement, the 3D model is accurately placed in a 3D scene. According to the digital twinning method, the 3D model scene is automatically generated, and the digital twinning efficiency is improved.

Owner:ZHEJIANG LAB
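
The model-matching step keeps the database model whose IoU with the reconstruction is closest to 1. IoU can never exceed 1, so this is the same as taking the maximum IoU. Below is a minimal sketch of that selection. It assumes both shapes are already voxelised into sets of integer cell indices in a shared frame, and it leaves out the iterative alignment the abstract mentions. The function names are hypothetical.

```python
def voxel_iou(a, b):
    """Intersection over union of two sets of occupied voxel indices."""
    union = a | b
    if not union:
        return 0.0  # two empty shapes: treat as no match
    return len(a & b) / len(union)


def match_model(reconstructed, database):
    """Return (name, iou) for the database model whose IoU is closest to 1."""
    name = max(database, key=lambda key: voxel_iou(reconstructed, database[key]))
    return name, voxel_iou(reconstructed, database[name])


recon = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
models = {"chair": {(0, 0, 0), (1, 0, 0)}, "table": {(5, 5, 5)}}
print(match_model(recon, models))  # ('chair', 0.6666666666666666)
```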

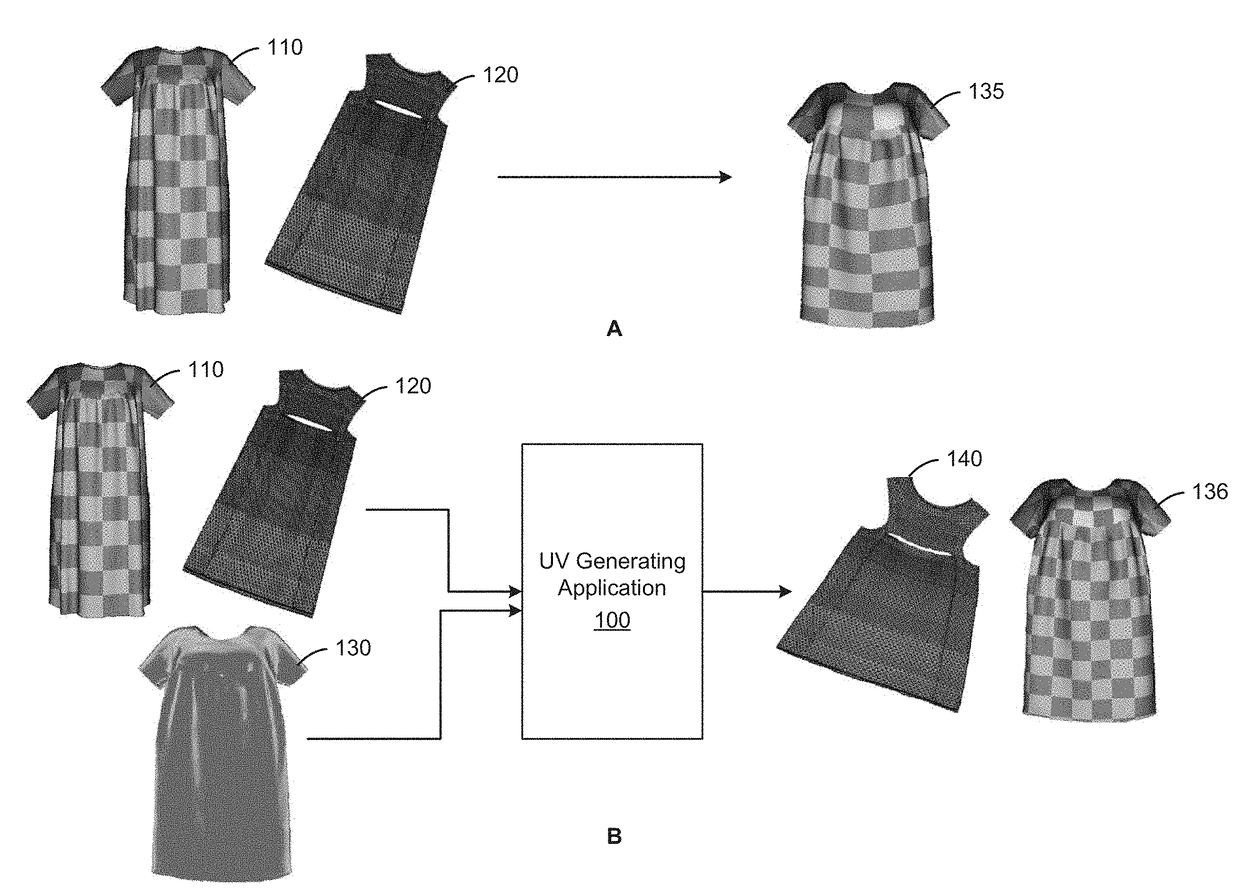

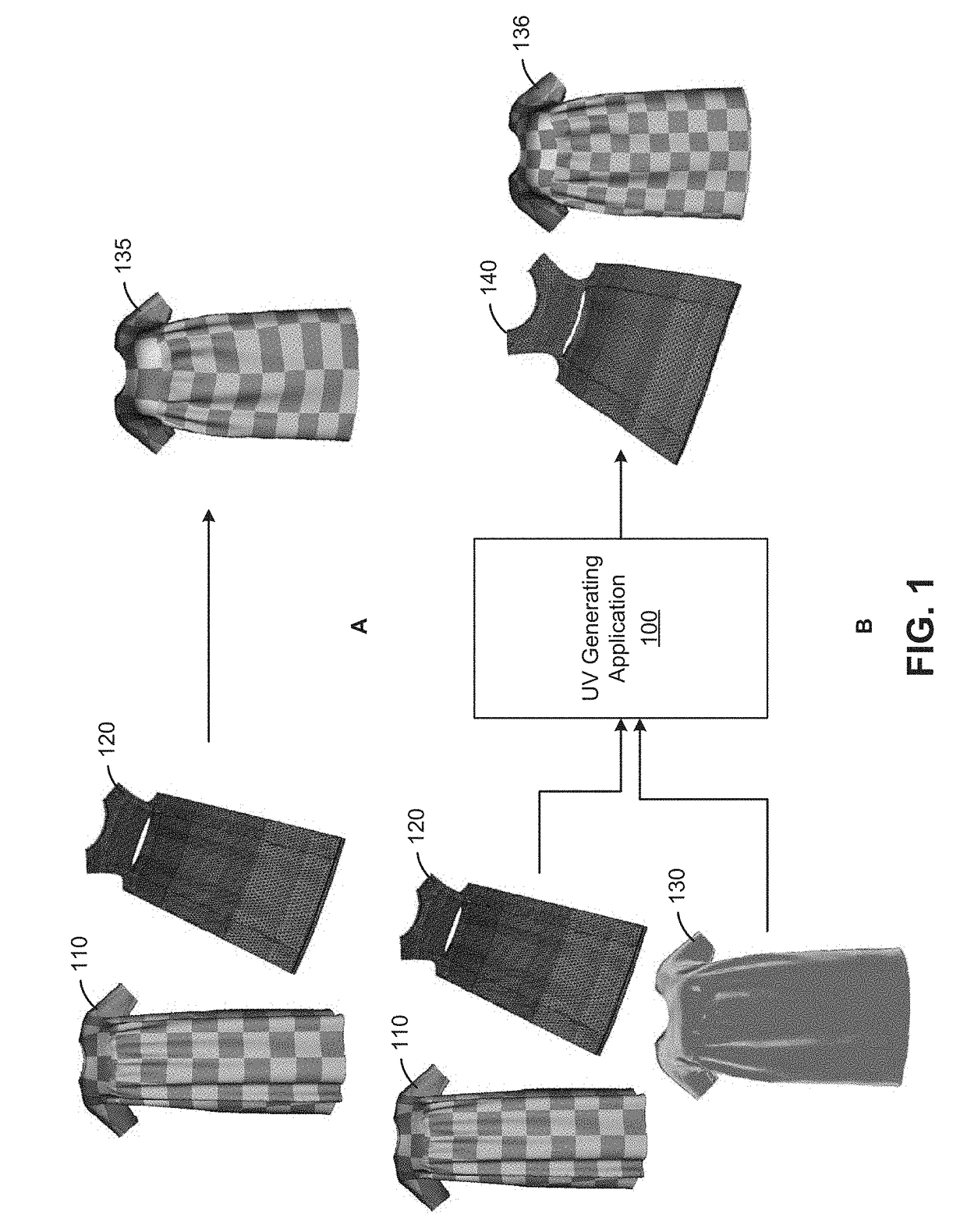

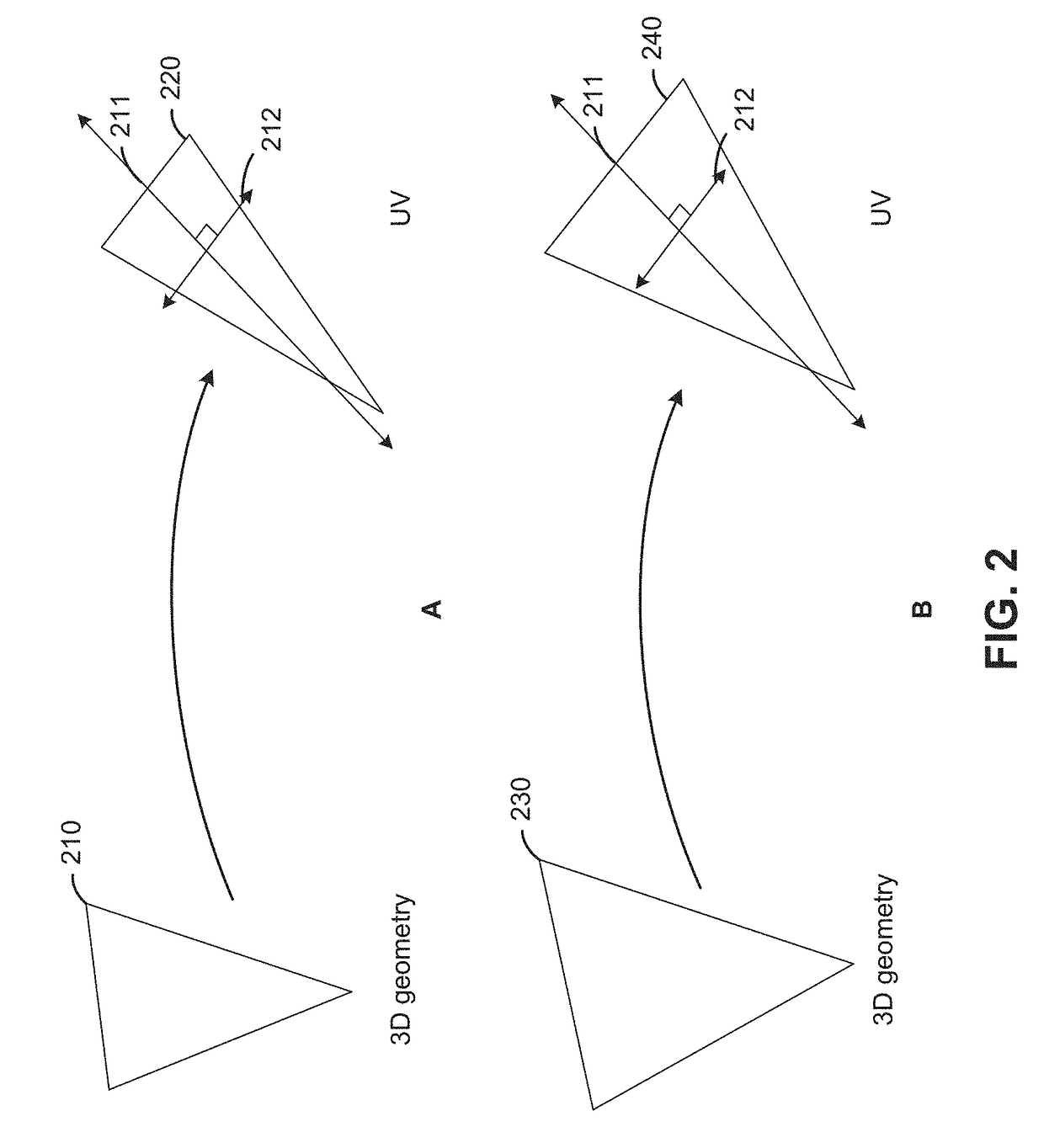

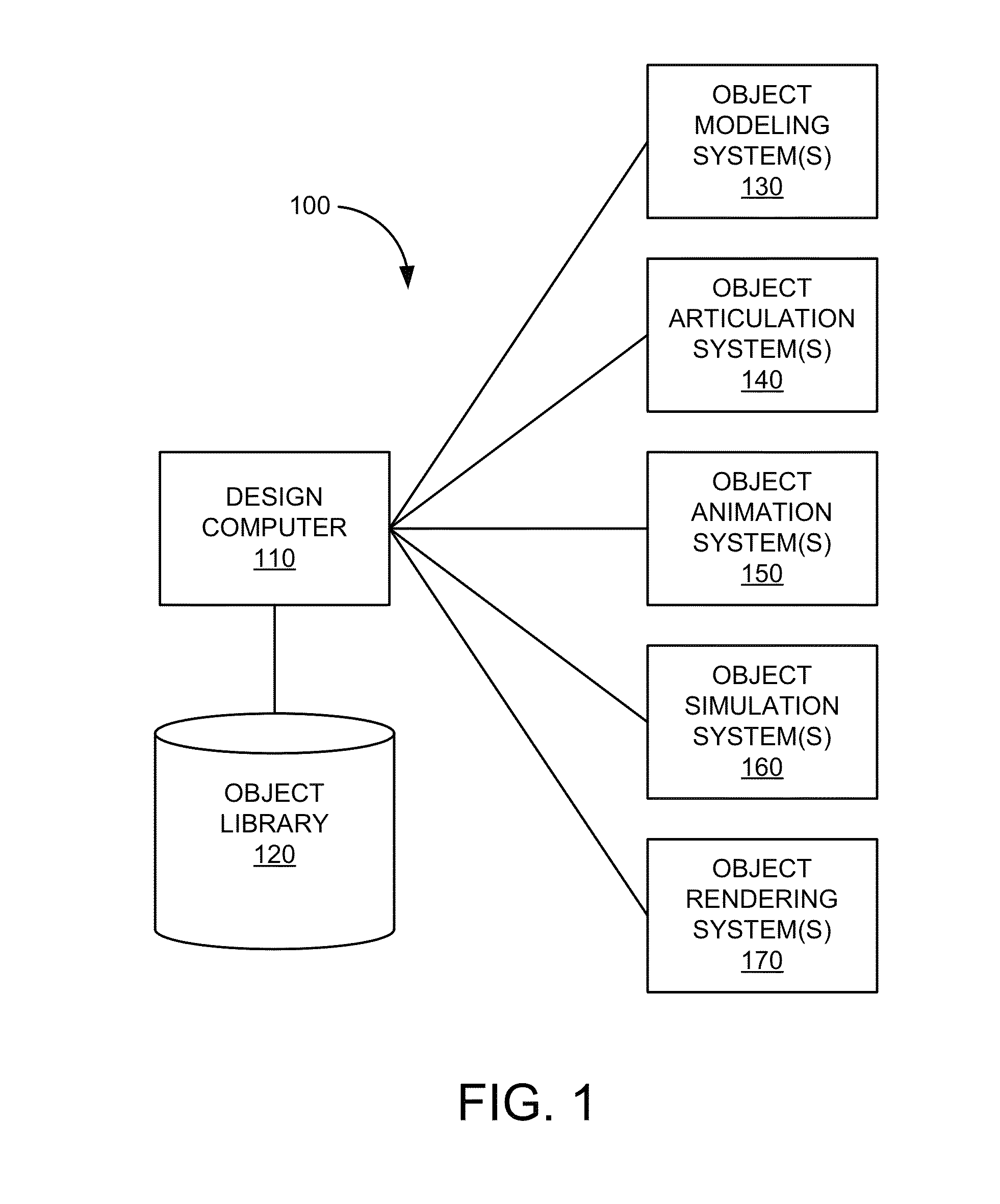

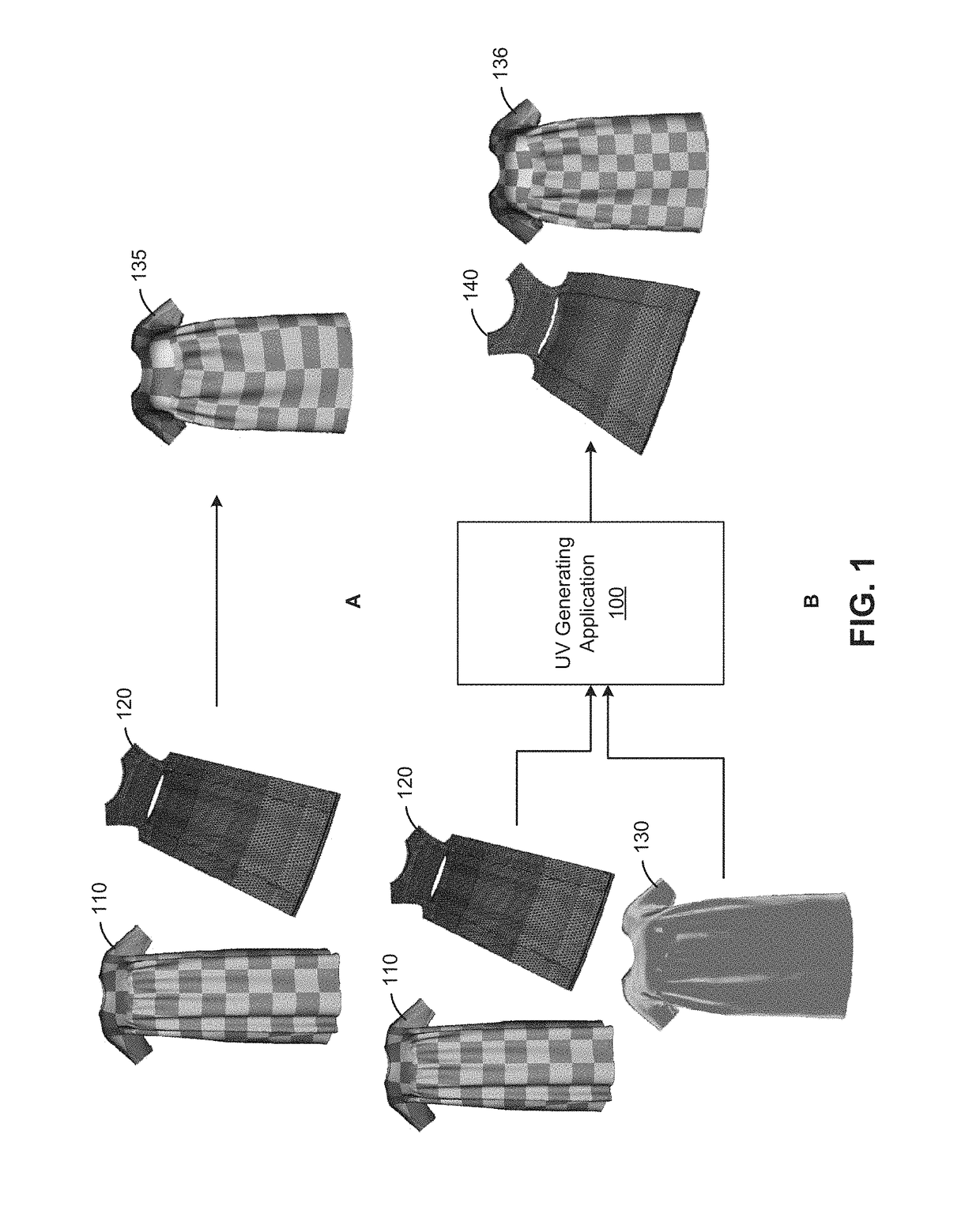

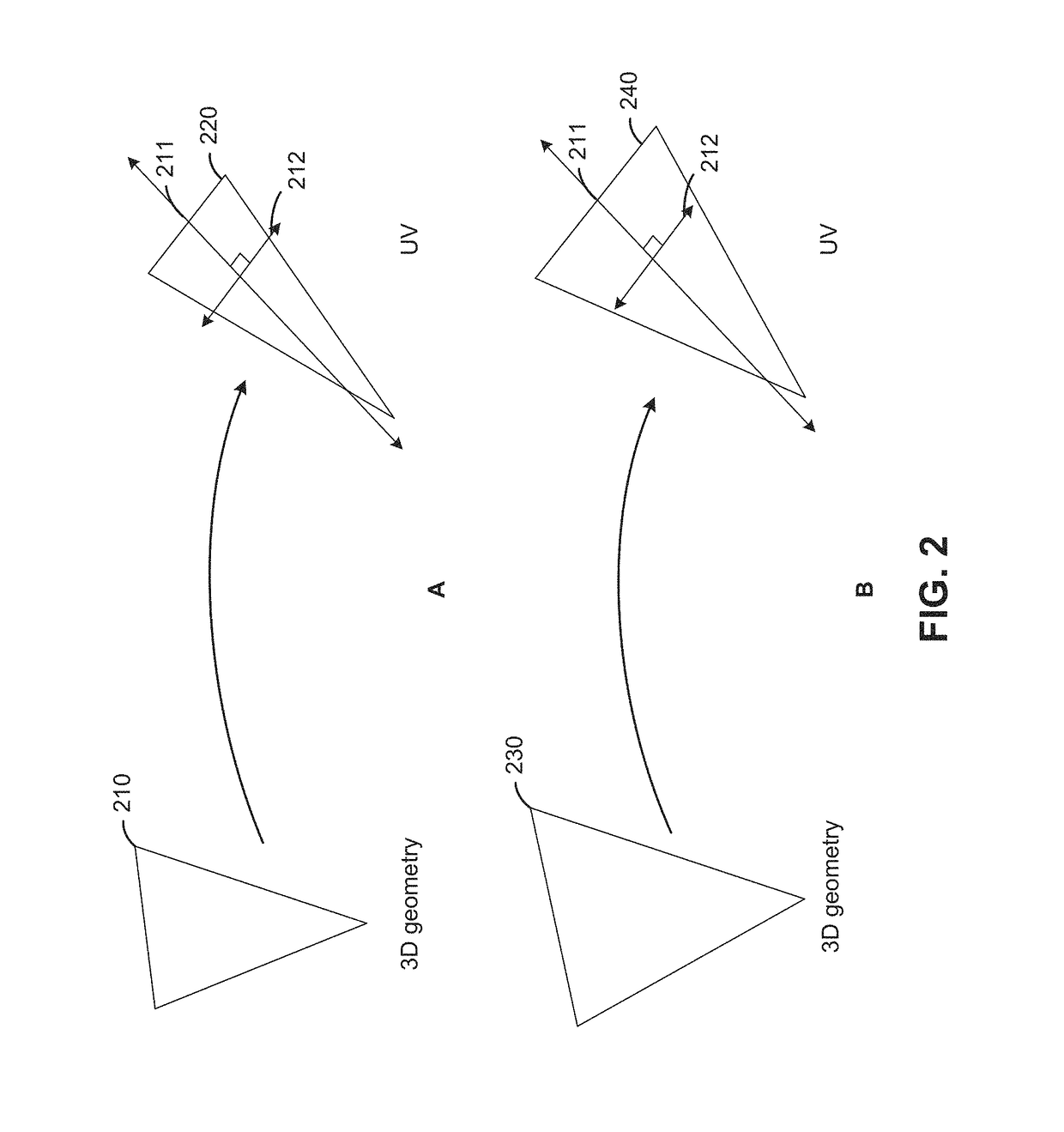

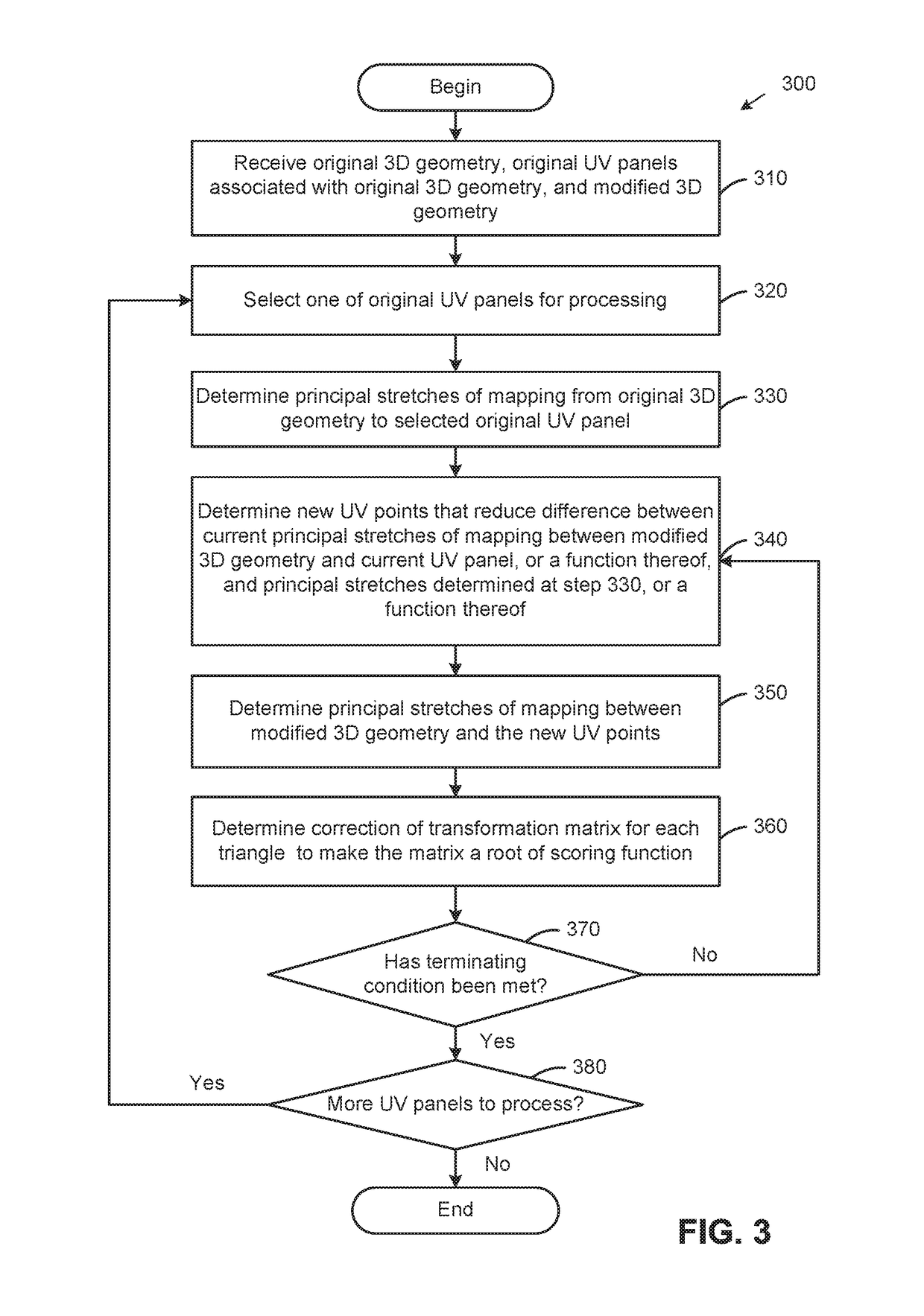

Generating UV maps for modified meshes

This disclosure provides an approach for automatically generating UV maps for modified three-dimensional (3D) virtual geometry. In one embodiment, a UV generating application may receive original 3D geometry and associated UV panels, as well as modified 3D geometry created by deforming the original 3D geometry. The UV generating application then extracts the principal stretches of a mapping between the original 3D geometry and the associated UV panels. It transfers the principal stretches, or a function thereof, to a new UV mapping for the modified 3D geometry. This transfer may include iteratively performing the following steps:

- determining new UV points assuming a fixed affine transformation;
- determining the principal stretches of a transformation between the modified 3D geometry and the determined UV points; and
- determining a correction of a transformation matrix for each triangle to make the matrix a root of a scoring function.

Owner:PIXAR ANIMATION
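
For a single triangle, the principal stretches of the map between the 3D triangle and its UV triangle are the singular values of that affine map's 2x2 Jacobian. The following is a minimal sketch that computes them for the 3D-to-UV direction. The UV-to-3D direction has the reciprocal values. The sketch covers only the per-triangle measurement, not the iterative transfer described in the abstract, and it assumes a non-degenerate triangle. The helper names are hypothetical.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def principal_stretches(p, uv):
    """Singular values (s_max, s_min) of the affine map from a 3D triangle to its UV triangle."""
    # Orthonormal 2D frame (e1, e2) lying in the triangle's plane.
    edge1 = _sub(p[1], p[0])
    len1 = math.sqrt(_dot(edge1, edge1))
    e1 = [x / len1 for x in edge1]
    e2 = _cross(_cross(edge1, _sub(p[2], p[0])), e1)
    len2 = math.sqrt(_dot(e2, e2))
    e2 = [x / len2 for x in e2]
    # Triangle edges expressed in that frame (columns of Q).
    d2 = _sub(p[2], p[0])
    q1 = (len1, 0.0)
    q2 = (_dot(d2, e1), _dot(d2, e2))
    # Matching UV edges (columns of W).
    w1 = (uv[1][0] - uv[0][0], uv[1][1] - uv[0][1])
    w2 = (uv[2][0] - uv[0][0], uv[2][1] - uv[0][1])
    # Jacobian J = W * Q^-1.
    det = q1[0] * q2[1] - q2[0] * q1[1]
    inv = [[q2[1] / det, -q2[0] / det], [-q1[1] / det, q1[0] / det]]
    a = w1[0] * inv[0][0] + w2[0] * inv[1][0]
    b = w1[0] * inv[0][1] + w2[0] * inv[1][1]
    c = w1[1] * inv[0][0] + w2[1] * inv[1][0]
    d = w1[1] * inv[0][1] + w2[1] * inv[1][1]
    # Closed-form singular values of the 2x2 matrix [[a, b], [c, d]].
    qq = math.hypot((a + d) / 2, (c - b) / 2)
    rr = math.hypot((a - d) / 2, (c + b) / 2)
    return qq + rr, abs(qq - rr)
```

A right triangle with unit legs whose UVs are scaled by 2 along u and 3 along v yields (3.0, 2.0). An isometric unwrap yields (1.0, 1.0).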

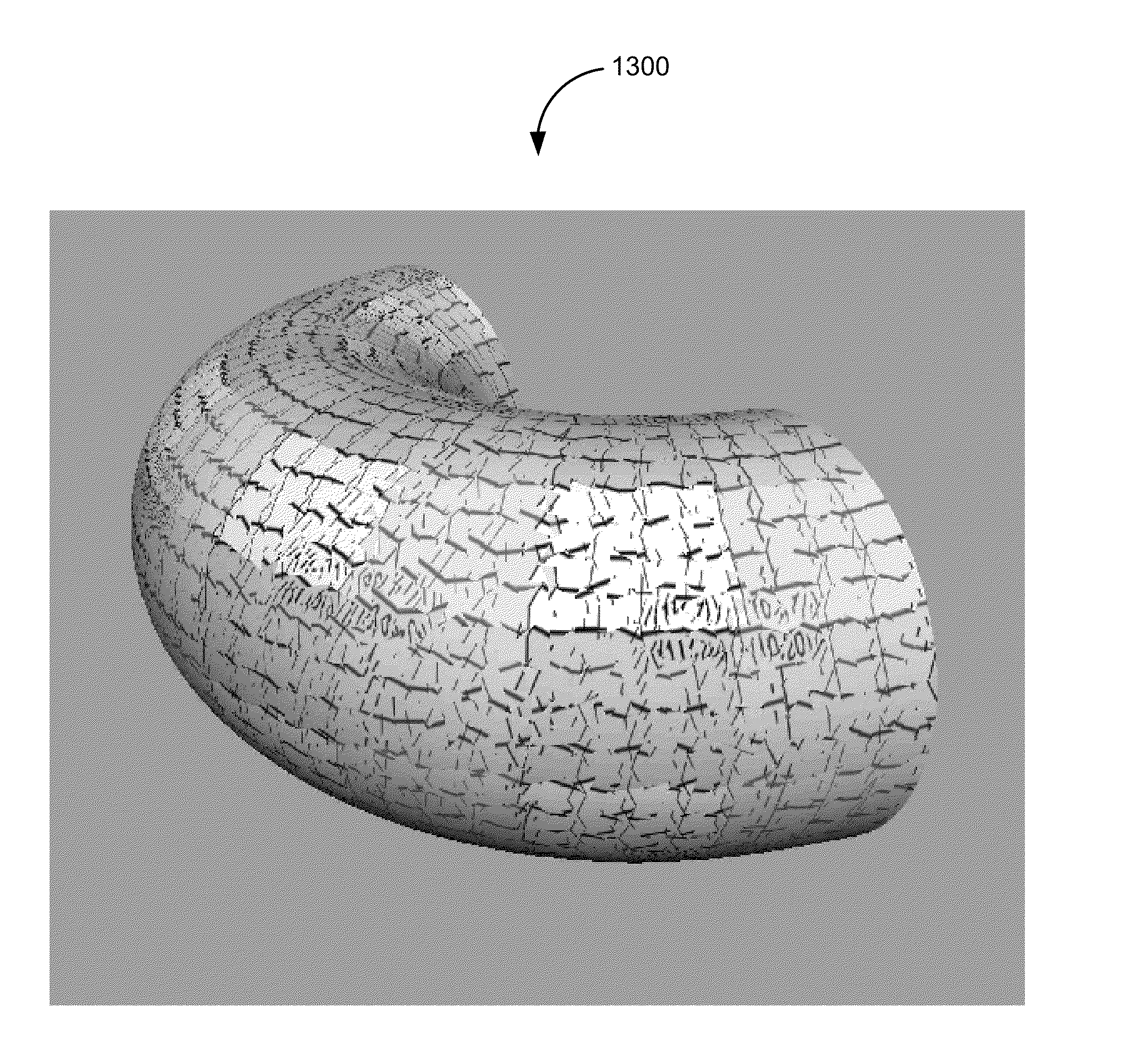

Fractured texture coordinates

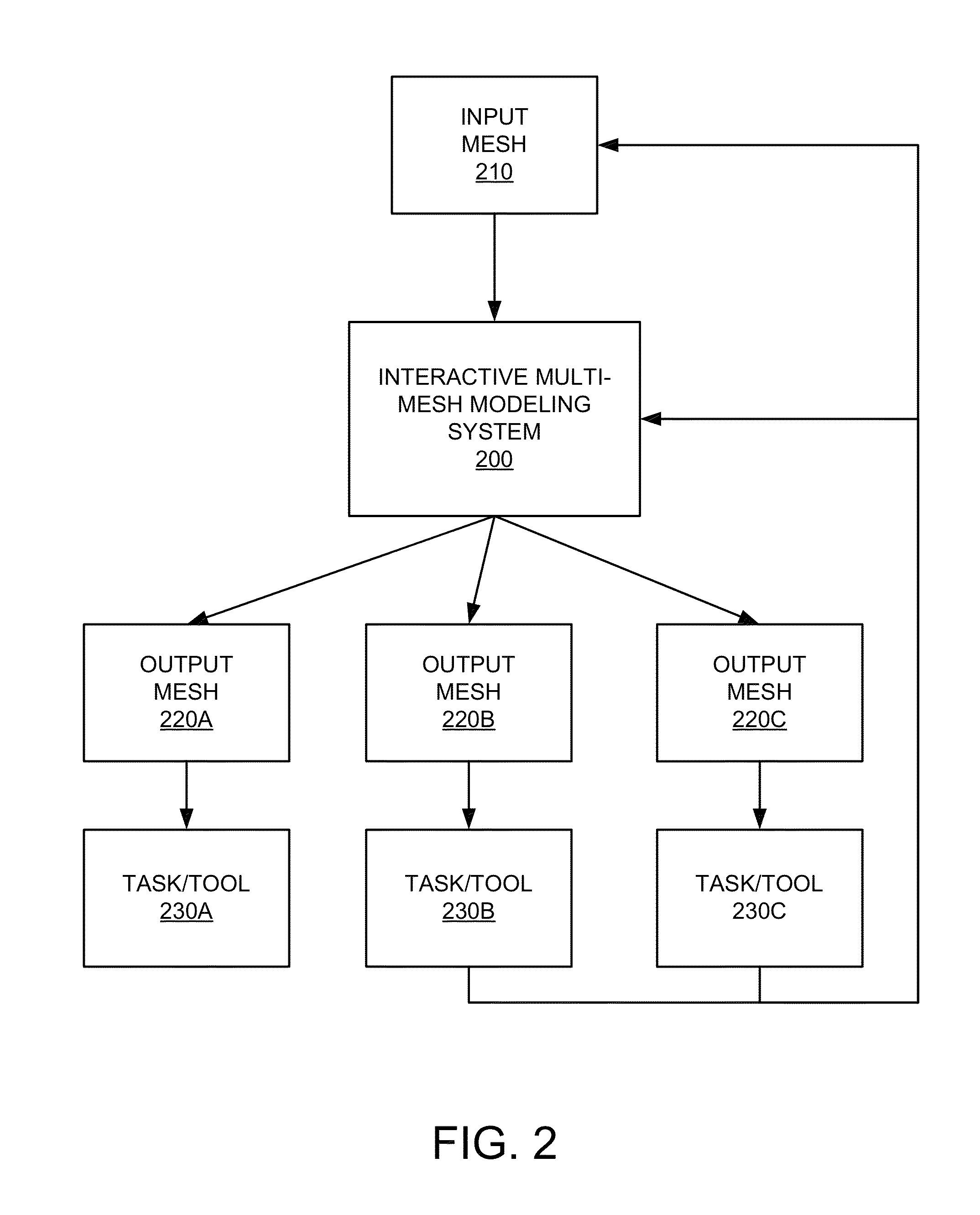

Status: Inactive | Publication: US8681147B1 | Keywords: Reduce distortion problems; Cathode-ray tube indicators; 3D-image rendering; UV mapping; Multi mesh

In various embodiments, an interactive multi-mesh garment modeling system may allow a user to employ solid modeling techniques to create one or more representations of garment objects whose motions are typically determined by computer simulations. Accordingly, in one aspect, the interactive multi-mesh garment modeling system may automatically generate one or more meshes that satisfy the requirements for computer simulations from a source mesh modeled by a user using solid modeling techniques. For each polygon associated with a UV mapping of one of these meshes, gradients of U and V for the polygon can be determined with respect to a 3D representation of an object that are substantially orthogonal and of uniform magnitude and that approximate the original gradients of U and V for the polygon. In another aspect, each polygon in a plurality of polygons of a 2D parameterization of an object can be reshaped based on individually corresponding polygons in a 3D representation of the object.

Owner:PIXAR ANIMATION
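
The abstract describes per-polygon gradients of U and V that should be close to orthogonal and of uniform magnitude. The following is a minimal sketch that measures how far a triangle's UV gradients are from that ideal. It uses the standard gradient formula for a function that is linear over a triangle. It only reports the current gradients and does not compute the corrected ones the patent describes. The function names are hypothetical.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def uv_gradients(p, uv):
    """3D gradients of u and of v over one triangle (vectors in the triangle's plane)."""
    n = _cross(_sub(p[1], p[0]), _sub(p[2], p[0]))
    two_area = math.sqrt(_dot(n, n))
    n_hat = [x / two_area for x in n]
    # n_hat x (opposite edge) / 2A is the gradient of each vertex's barycentric weight.
    g1 = _cross(n_hat, _sub(p[0], p[2]))  # for vertex 1
    g2 = _cross(n_hat, _sub(p[1], p[0]))  # for vertex 2
    grads = []
    for k in (0, 1):  # k = 0 for u, k = 1 for v
        d1 = uv[1][k] - uv[0][k]
        d2 = uv[2][k] - uv[0][k]
        grads.append([(d1 * g1[i] + d2 * g2[i]) / two_area for i in range(3)])
    return grads[0], grads[1]


def gradient_quality(grad_u, grad_v):
    """(cosine of angle between gradients, |grad u| / |grad v|); ideal is (0.0, 1.0)."""
    nu = math.sqrt(_dot(grad_u, grad_u))
    nv = math.sqrt(_dot(grad_v, grad_v))
    return _dot(grad_u, grad_v) / (nu * nv), nu / nv
```

A cosine near 0 means the two gradients are nearly orthogonal. A magnitude ratio far from 1 signals that the triangle is stretched more along one texture axis than the other.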

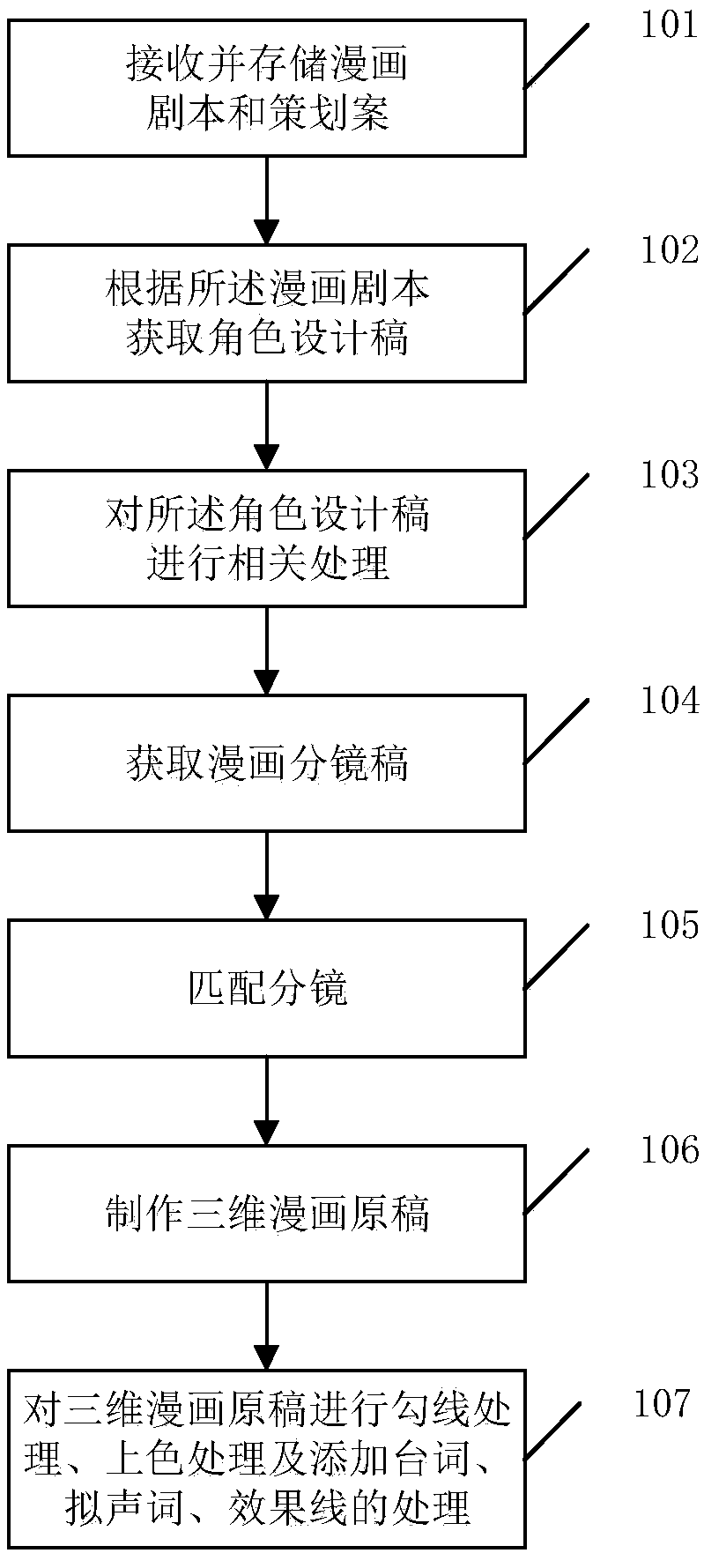

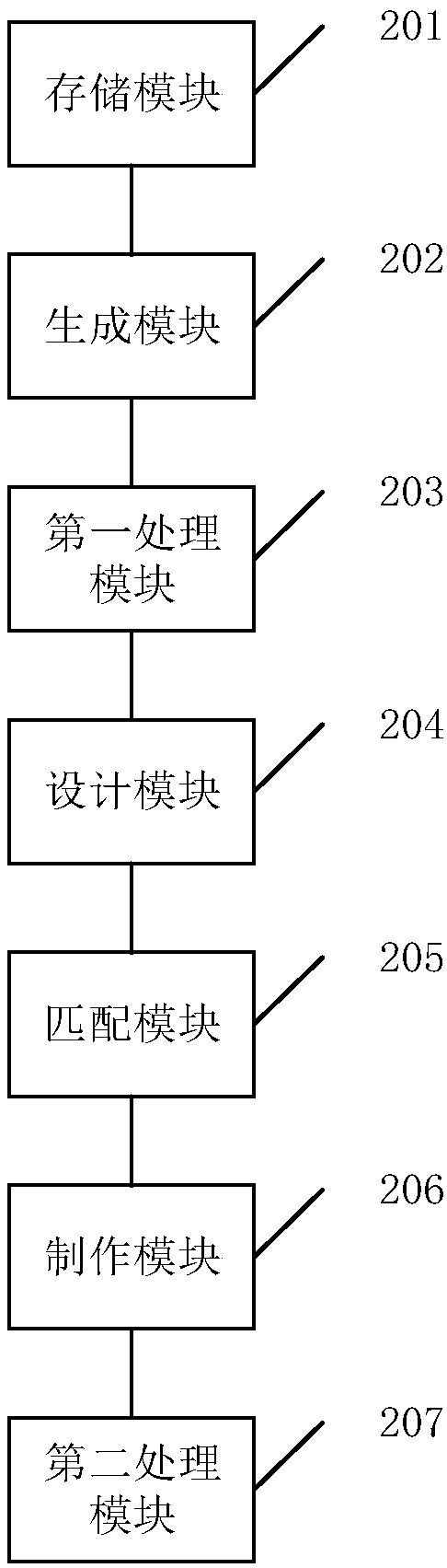

Making method and apparatus of three-dimensional comics

Status: Active | Publication: CN107798726A | Keywords: Shorten production time; Quality improvement; Details involving 3D image data; 3D modelling; Storyboard; Simulation

The invention provides a method and apparatus for making three-dimensional comics. The method mainly comprises the following steps:

- receiving and storing a comic script and a plan;
- drawing a character design draft according to the script;
- performing, according to the character design draft, 3D character modeling, UV unwrapping, UV texture painting, skeleton binding and skinning of the 3D character model, 3D scene modeling and 3D prop modeling, to obtain textured, rigged and skinned 3D character, scene and prop models;
- designing and drawing a comic storyboard from the script, the plan and the 3D character, scene and prop models, to obtain a storyboard draft;
- matching the motion of the 3D character model to the storyboard and rendering the result into a 3D comic manuscript;
- making a comic sketch from the 3D comic manuscript; and
- drawing outlines and coloring on the 3D comic manuscript, then adding lines, onomatopoeic words and effect lines.

The method and apparatus can produce three-dimensional comics in large quantity and of excellent quality.

Owner:刘芳圃 +1

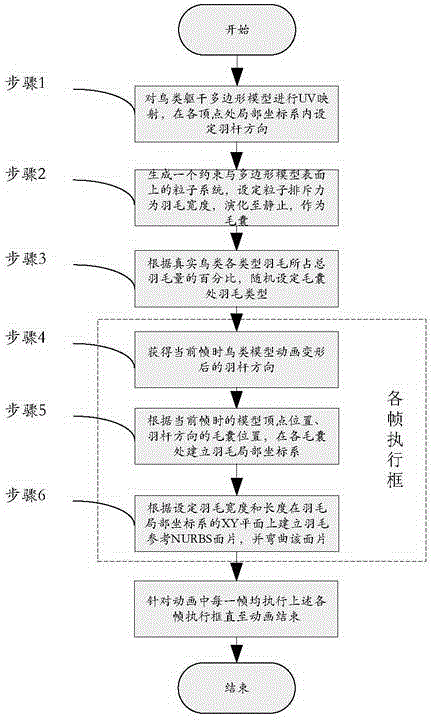

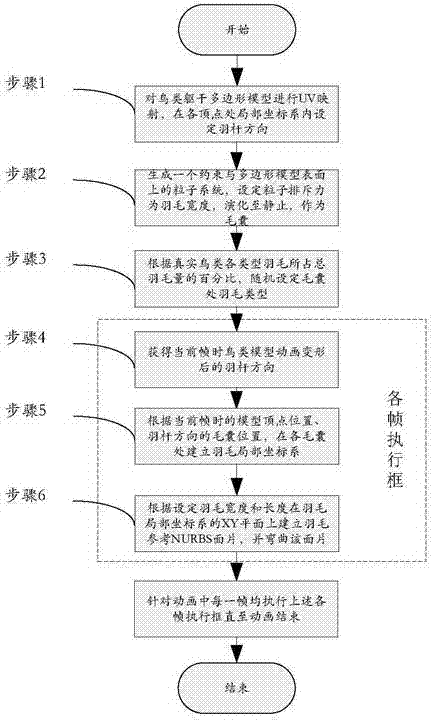

Real-time dynamic generating method for features on bird body model

The invention discloses a method for dynamically generating feathers on a bird body model in real time. The specific steps are as follows.

First, UV mapping is performed on a polygon model of the bird body. A local coordinate system is set up at each vertex, and the direction vectors of the feather shafts are specified in these vertex local coordinate systems.

Next, a particle system is generated, with all particles constrained to the faces of the polygon model. The repulsive force between particles represents the feather width. Once the system has evolved to a static state, the particle positions are taken as the feather follicle positions, and the type of each feather is chosen at random.

After the bird model is animated and deformed, the vertex local coordinate systems are updated and the feather-shaft directions for the current frame are computed. A feather local coordinate system is set up with each follicle position as its origin. A reference NURBS surface patch is constructed for each feather according to the set width and length, and the feather is generated on that NURBS patch. These steps are repeated for every frame of the animation.

The method achieves penetration-free coverage among the feathers and generates dynamic feathers in real time.

Owner:北京春天影视科技有限公司

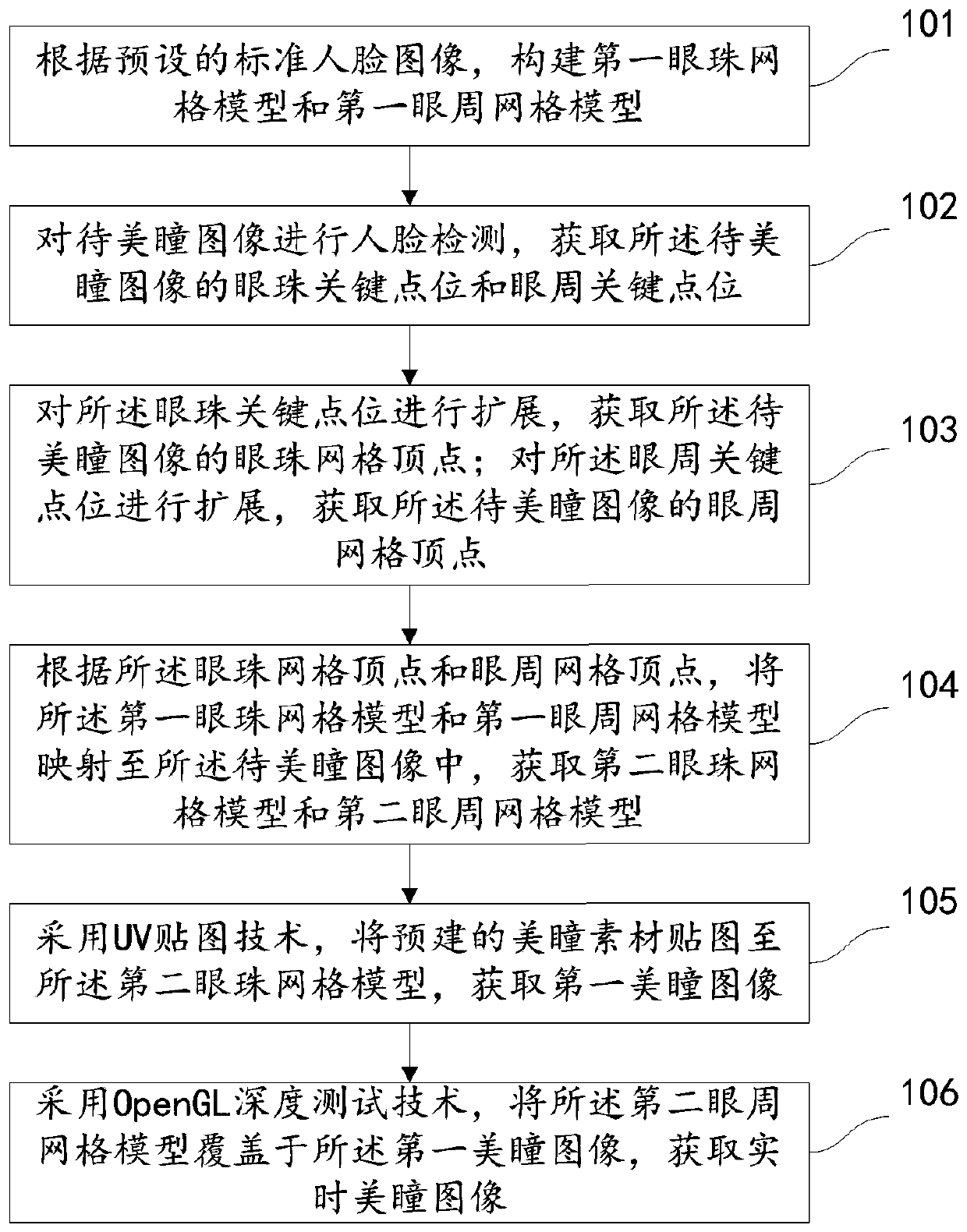

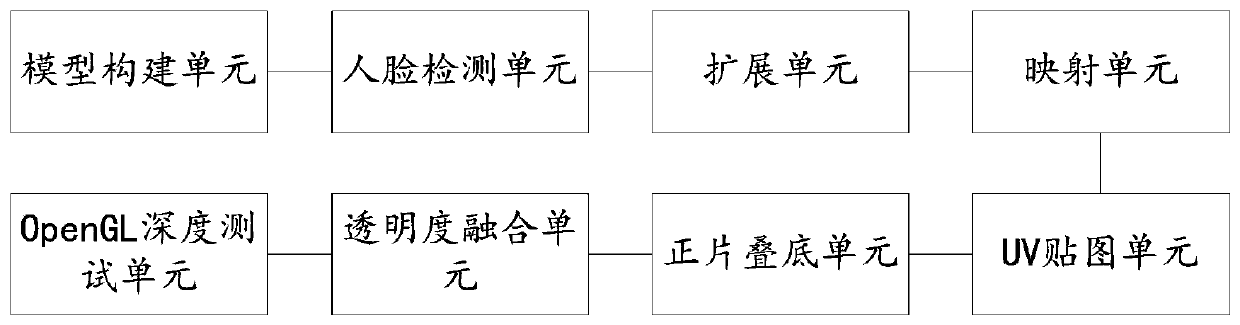

Real-time pupil beautifying method and device

Status: Pending | Publication: CN109785259A | Keywords: Increase transparency; Ideal color contact lenses; Image enhancement; Geometric image transformation; Face detection; Pupil

The invention discloses a real-time pupil beautifying method and device. The method comprises the following steps:

- building a first eyeball mesh model and a first periocular mesh model from a preset standard face image;
- performing face detection on the pupil image to be beautified, to obtain its eyeball key points and periocular key points;
- expanding the eyeball and periocular key points to obtain eyeball mesh vertices and periocular mesh vertices;
- mapping the first eyeball mesh model and the first periocular mesh model into the pupil image, to obtain a second eyeball mesh model and a second periocular mesh model;
- mapping a pre-built cosmetic pupil material onto the second eyeball mesh model by UV mapping, to obtain a first cosmetic pupil image; and
- using the OpenGL depth test to overlay the second periocular mesh model on the first cosmetic pupil image, to obtain a real-time beautified pupil image.

This technical solution lets portraits be beautified conveniently and efficiently in real time without changing the shape of the eyeballs in the image.

Owner:CHENDU PINGUO TECH

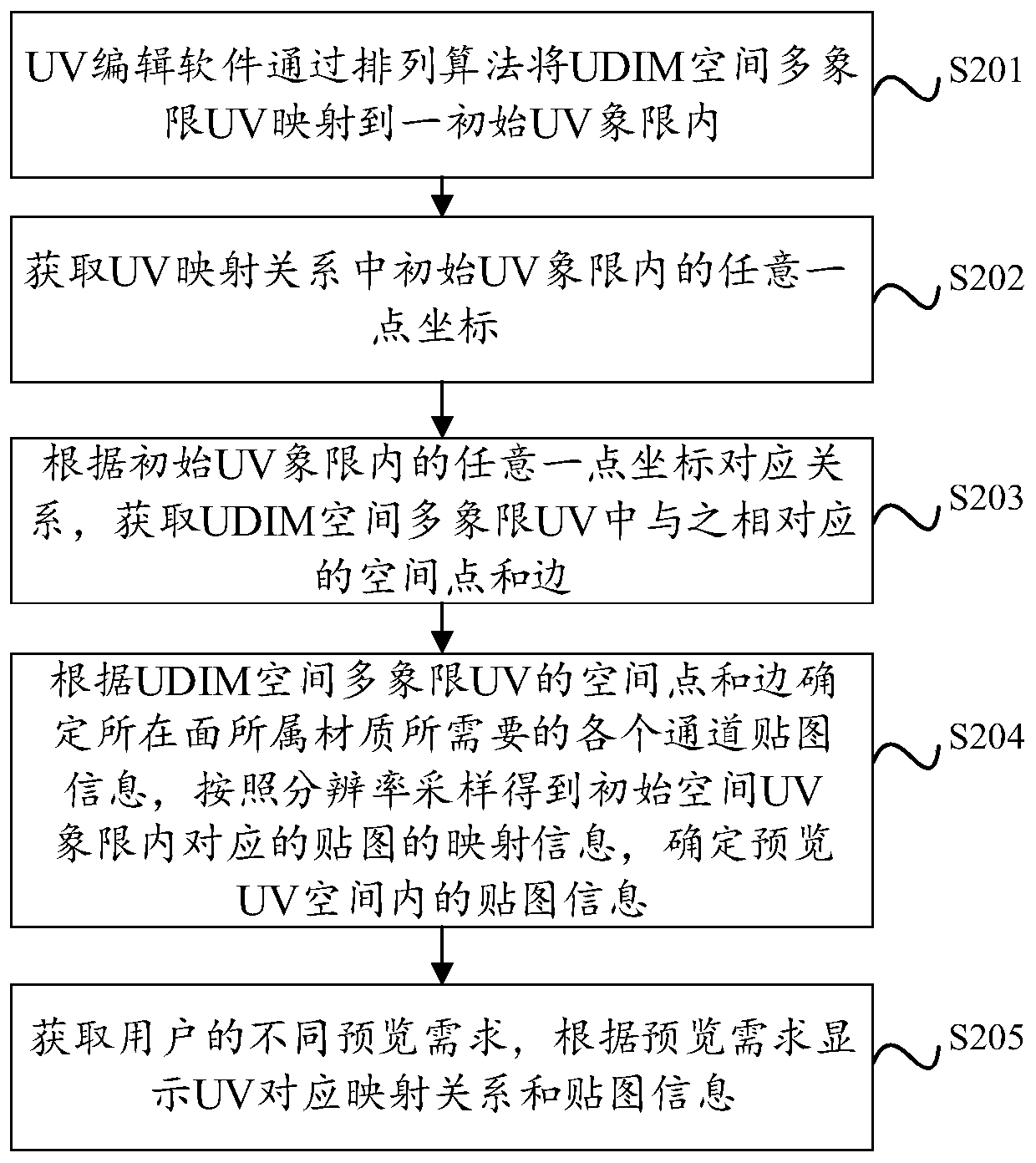

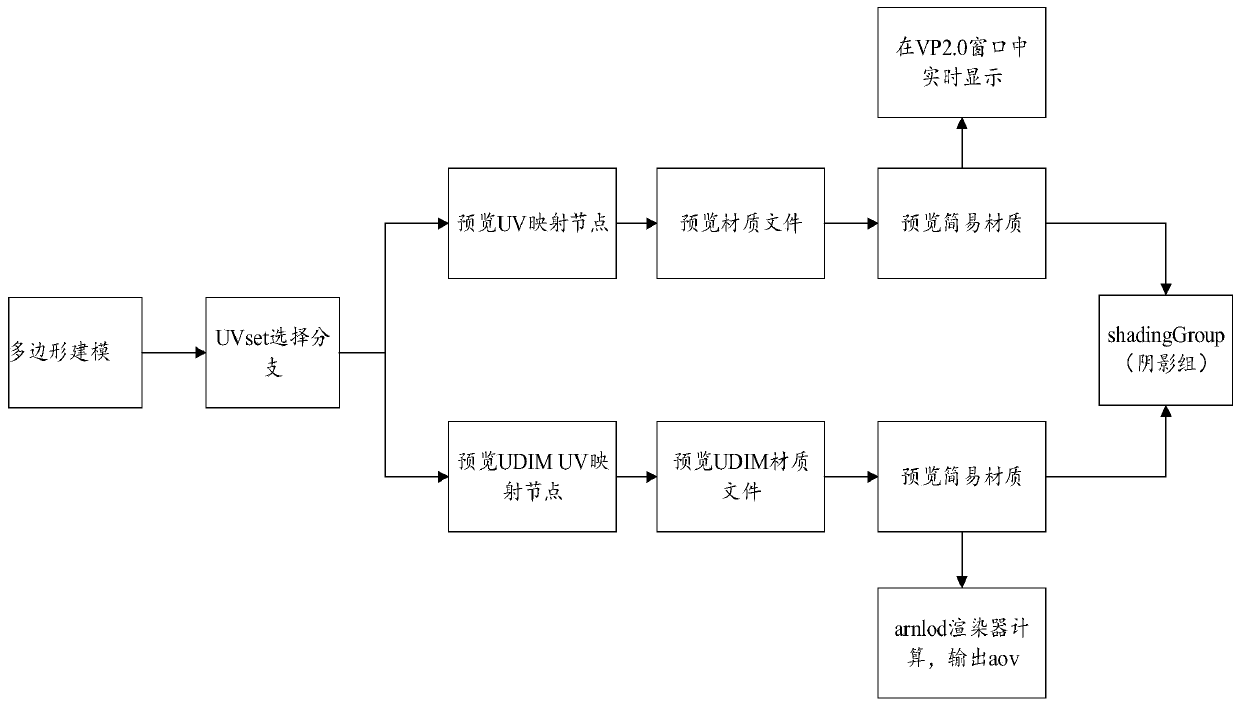

Method and device for efficiently previewing CG assets

Status: Active | Publication: CN111179390A | Keywords: Improve efficiency; Fix production issues; Animation; Manufacturing computing systems; Image resolution; Engineering

The invention provides a method and device for efficiently previewing CG assets. The method comprises the following steps:

- using UV editing software and an arrangement algorithm to map the multi-quadrant UVs of UDIM space into the initial UV quadrant;
- obtaining the coordinates of any point in the initial UV quadrant under this UV mapping relation;
- using that point's coordinate correspondence to obtain the corresponding spatial point and edge in the multi-quadrant UDIM UV space;
- from those spatial points and edges, determining the mapping information of each channel required by the material to which a face belongs, sampling at the target resolution to obtain the mapping information of the corresponding map in the initial UV quadrant, and determining the mapping information in a preview UV space; and
- displaying the corresponding UV mapping relationship and mapping information according to the user's different preview requirements.

The method and device can supply asset output to two downstream stages without extra workload. They save the manual time of making UVs and texture maps twice, and they give a color preview that is essentially consistent with the final render.

Owner:上海咔咖文化传播有限公司
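
UDIM numbering gives each unit square of UV space a tile number: 1001 + floor(u) + 10 × floor(v), with ten tiles per row. The sketch below applies that convention. It then folds every used tile into one cell of a square grid inside the initial 0 to 1 quadrant. The grid layout is a hypothetical stand-in for the patent's unspecified "arrangement algorithm", and the function names are illustrative only.

```python
import math


def udim_tile(u, v):
    """UDIM tile number for a UV coordinate (tiles 1001-1010 form the first row)."""
    return 1001 + math.floor(u) + 10 * math.floor(v)


def pack_into_unit_square(u, v, tiles):
    """Map a UV point from its UDIM tile into one cell of a grid inside [0, 1]^2.

    tiles: list of the UDIM tiles the asset uses, in the order they should be packed.
    """
    index = tiles.index(udim_tile(u, v))
    side = math.ceil(math.sqrt(len(tiles)))  # cells per row and per column
    col, row = index % side, index // side
    # Position inside the source tile, in [0, 1).
    fu, fv = u - math.floor(u), v - math.floor(v)
    return (col + fu) / side, (row + fv) / side


used = [1001, 1002, 1011, 1012]
print(udim_tile(1.5, 0.5), pack_into_unit_square(1.5, 0.5, used))  # 1002 (0.75, 0.25)
```

Packing trades resolution for convenience. With four tiles, each tile shrinks to a quarter of the preview texture's area, which is why the abstract resamples "according to resolution".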

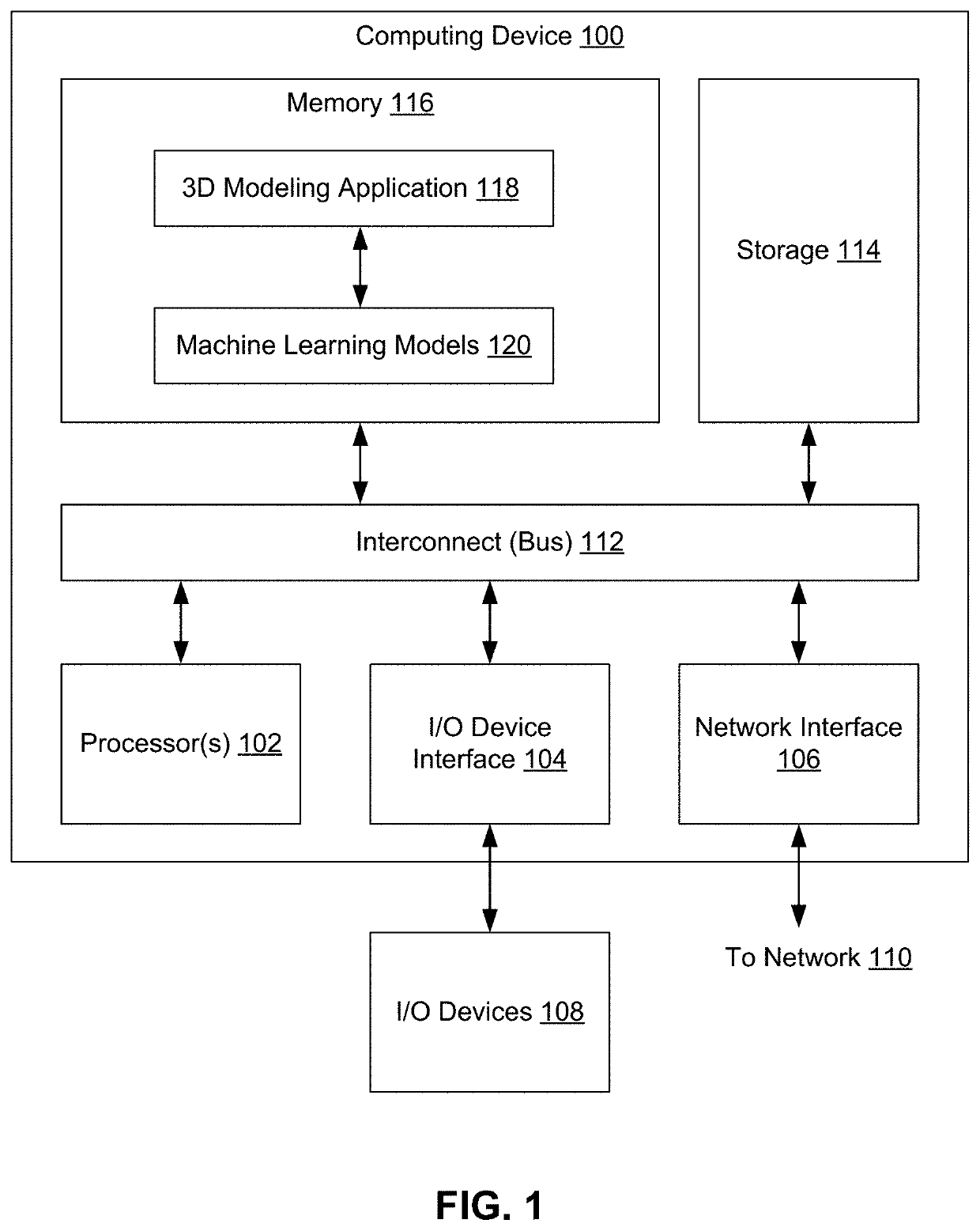

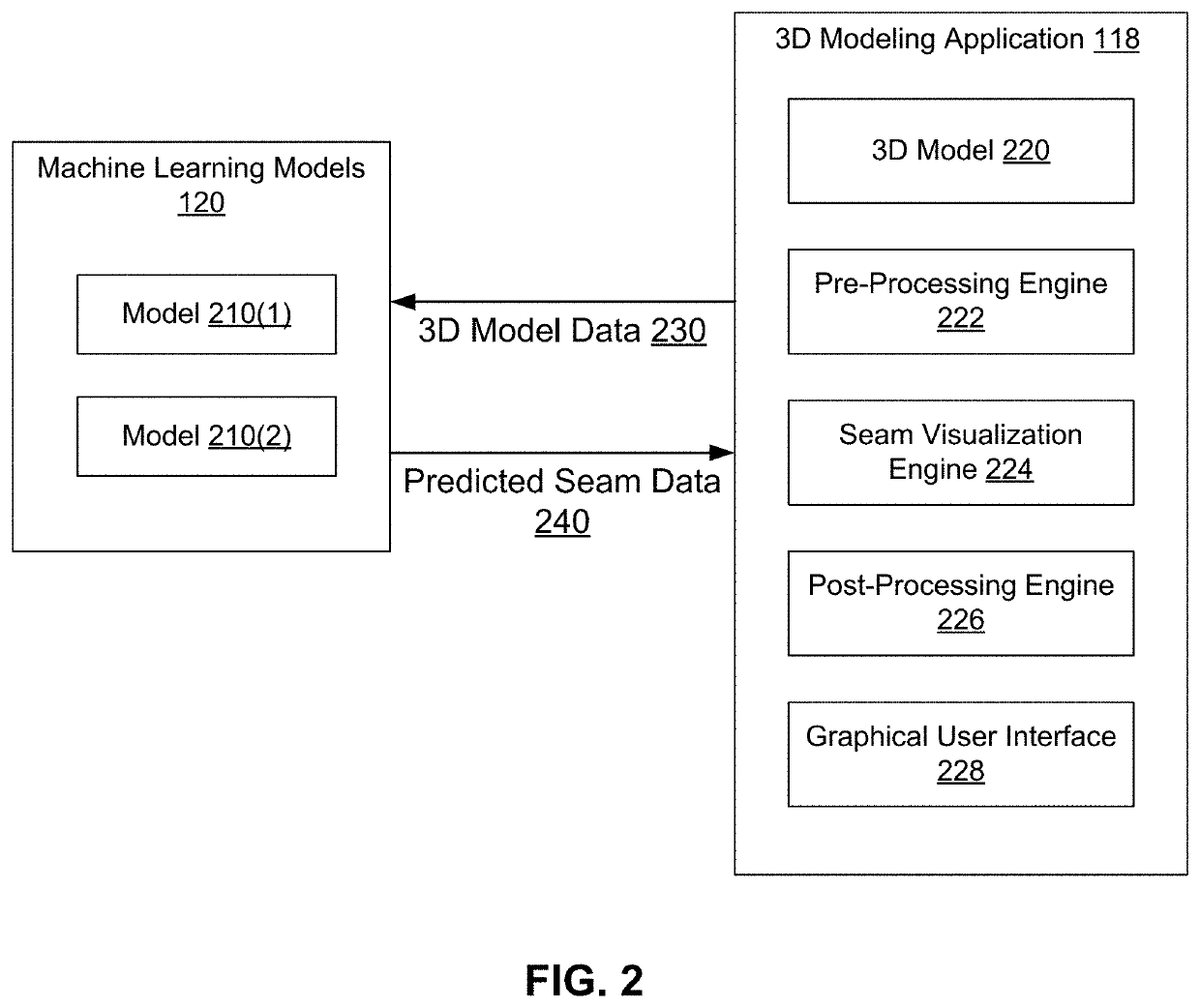

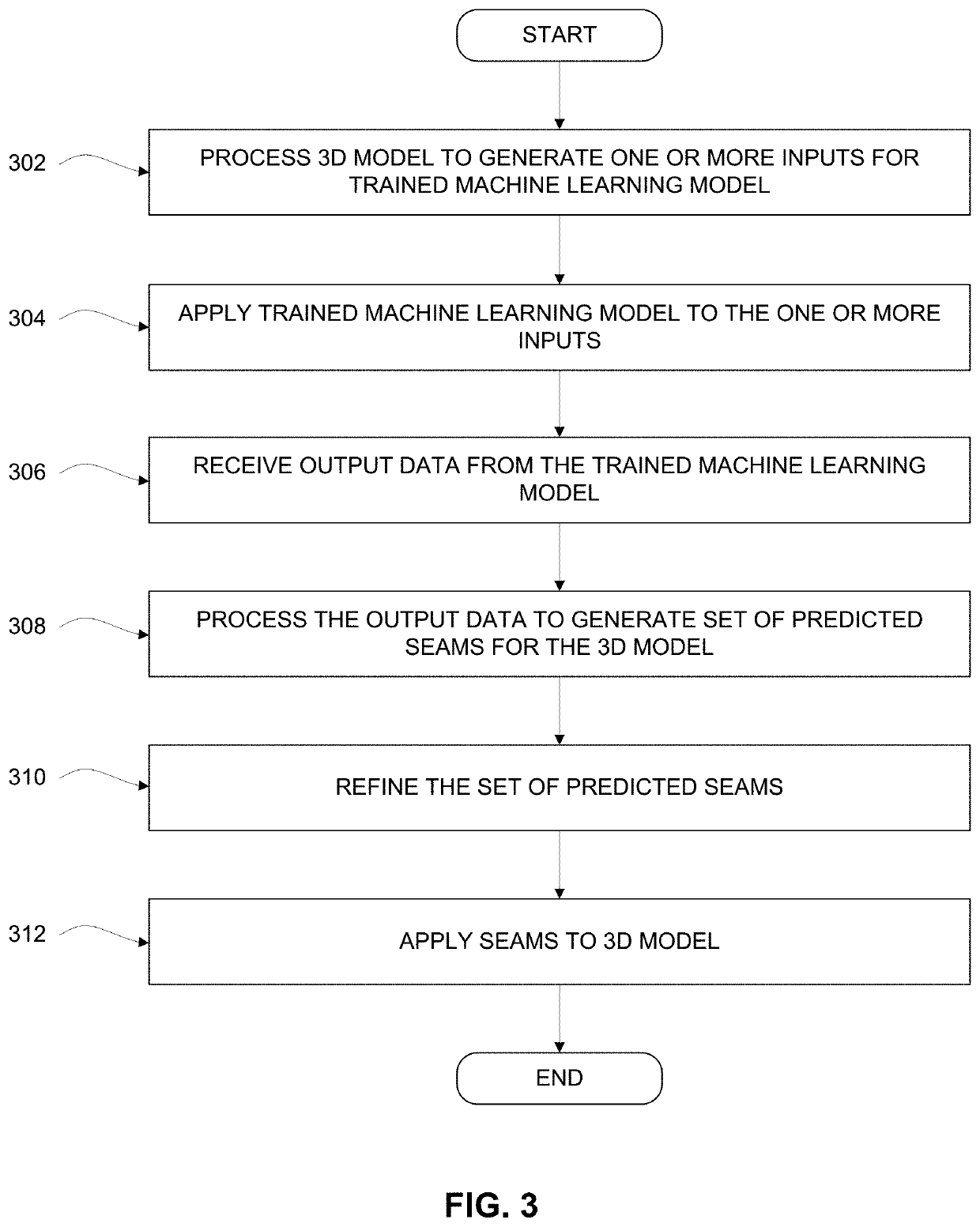

UV mapping on 3D objects with the use of artificial intelligence

Status: Pending | Publication: US20220058859A1 | Keywords: Reduce in quantity; Minimize distortion; Image enhancement; Image analysis; Algorithm; Engineering

Various embodiments set forth systems and techniques for generating seams for a 3D model. The techniques include generating, based on the 3D model, one or more inputs for one or more trained machine learning models; providing the one or more inputs to the one or more trained machine learning models; receiving, from the one or more trained machine learning models, seam prediction data generated based on the one or more inputs; and placing one or more predicted seams on the 3D model based on the seam prediction data.

Owner:AUTODESK INC

3D Asset Inspection

Status: Active | Publication: US20190242696A1 | Keywords: Facilitating subsequent ability; Using optical means; Electromagnetic wave reradiation; Odometry; 3d sensor

Systems and methods for physical asset inspection are provided. According to one embodiment, a probe is positioned to multiple data capture positions with reference to a physical asset. For each position: odometry data is obtained from an encoder and / or an IMU; a 2D image is captured by a camera; a 3D sensor data frame is captured by a 3D sensor, having a view plane overlapping that of the camera; the odometry data, the 2D image and the 3D sensor data frame are linked and associated with a physical point in real-world space based on the odometry data; and switching between 2D and 3D views within the collected data is facilitated by forming a set of points containing both 2D and 3D data by performing UV mapping based on a known positioning of the camera relative to the 3D sensor.

Owner:CHARLES MACHINE WORKS

Coherent roaming implementation method for virtual tourism three-dimensional simulation scene

Status: Pending | Publication: CN111208897A | Keywords: Free and smooth roaming; Free and seamless roaming; Input/output for user-computer interaction; Data processing applications; Dimensional simulation; Roaming

The invention discloses a method for coherent roaming in a three-dimensional simulation scene for virtual tourism, in the field of computer simulation. The method comprises the following steps:

- recording a panoramic video of the scene to be simulated at 20 to 60 frames per second;
- converting the frames of the panoramic video into UV maps in a scene in the Unity 3D environment;
- building a 3D scene in the form of a UV-mapped animation;
- using a joystick controller configured in software to drag and rotate the view to any angle, and to make a predefined user avatar move forward, backward, left and right, and turn;
- playing the panoramic video forward in time to match simulated forward movement, and in reverse to match simulated backward movement;
- stretching the panoramic frames non-linearly to match left and right movement and turning; and
- when the joystick is released, pausing the video on the last UV-mapped frame shown before release and holding it still.

The method achieves free, smooth and coherent roaming of the three-dimensional simulation scene, and it improves the scene's visual realism and appeal.

Owner:浙江开奇科技有限公司
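
The playback rules in this abstract amount to a small state machine driven by the joystick. The following is a minimal sketch of them. Its assumptions go beyond the abstract: the joystick's forward axis is a float in [-1, 1], playback moves one frame per update, and turning is modelled as a plain horizontal shift of u in an equirectangular frame. That last choice is a yaw rotation, not the non-linear stretching the patent describes. All names are hypothetical.

```python
def next_frame(current, stick_forward, frame_count):
    """Choose the next panoramic-video frame from the joystick's forward axis.

    stick_forward > 0: walk forward, so play forward in time;
    stick_forward < 0: walk backward, so play in reverse;
    stick_forward == 0 (released): hold the current frame.
    """
    if stick_forward > 0:
        return min(current + 1, frame_count - 1)
    if stick_forward < 0:
        return max(current - 1, 0)
    return current


def turn_u_offset(u, yaw_degrees):
    """Shift an equirectangular u coordinate to turn the view by yaw_degrees."""
    return (u + yaw_degrees / 360.0) % 1.0


frame = 0
for stick in (1.0, 1.0, -0.5, 0.0):  # forward, forward, back, release
    frame = next_frame(frame, stick, frame_count=100)
print(frame)  # 1
```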

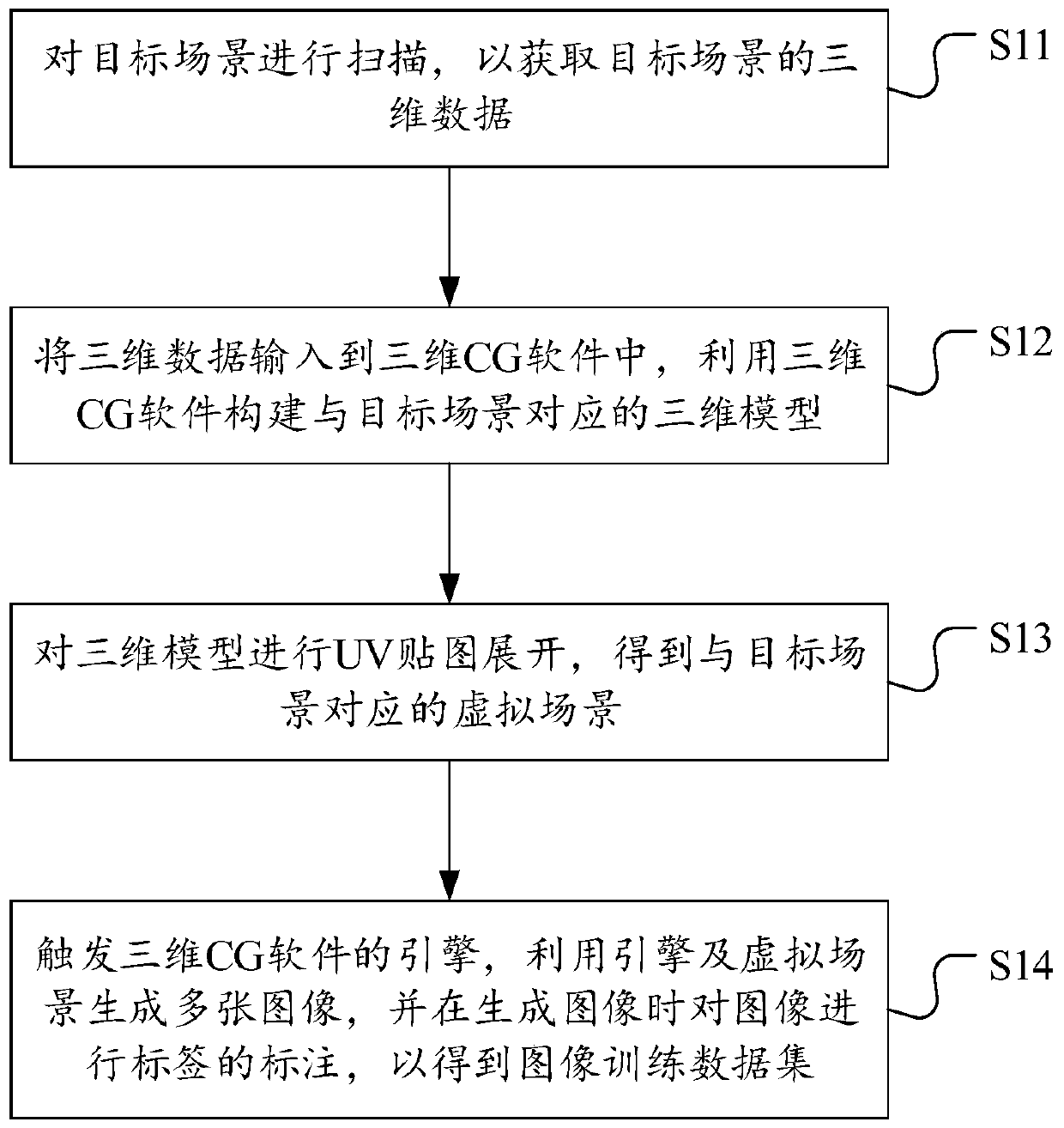

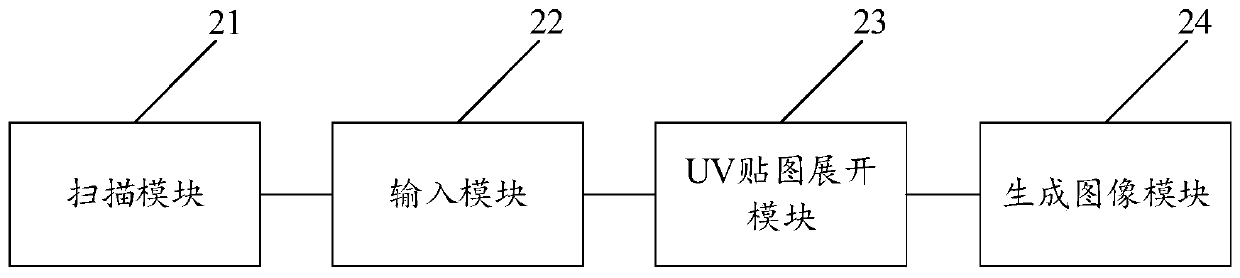

Image training data set generation method, device and equipment and medium

Status: Inactive | Publication: CN111047693A | Keywords: Realize automatic generation; Realize automatic labeling; Character and pattern recognition; 3D-image rendering; Data set; Radiology

The invention discloses a method, device, equipment and computer-readable storage medium for generating an image training data set. The method comprises the following steps:

- scanning a target scene to obtain its three-dimensional data;
- importing the three-dimensional data into 3D CG software and using the software to build a three-dimensional model of the target scene;
- performing UV unwrapping on the three-dimensional model to obtain a virtual scene corresponding to the target scene; and
- triggering the rendering engine of the 3D CG software to generate a plurality of images from the virtual scene, labeling each image as it is generated, to obtain an image training data set.

Because the images are generated and labeled automatically, this approach improves the efficiency and reduces the cost of producing image training data sets.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

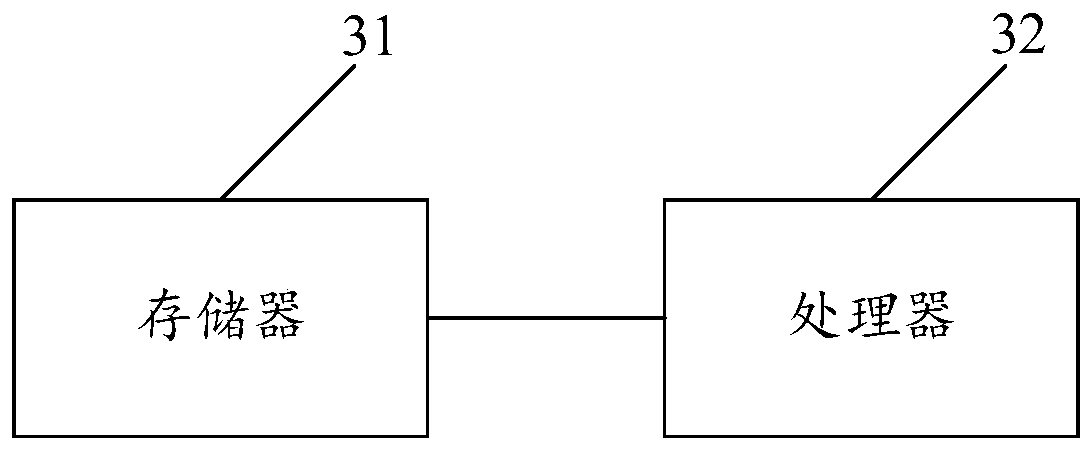

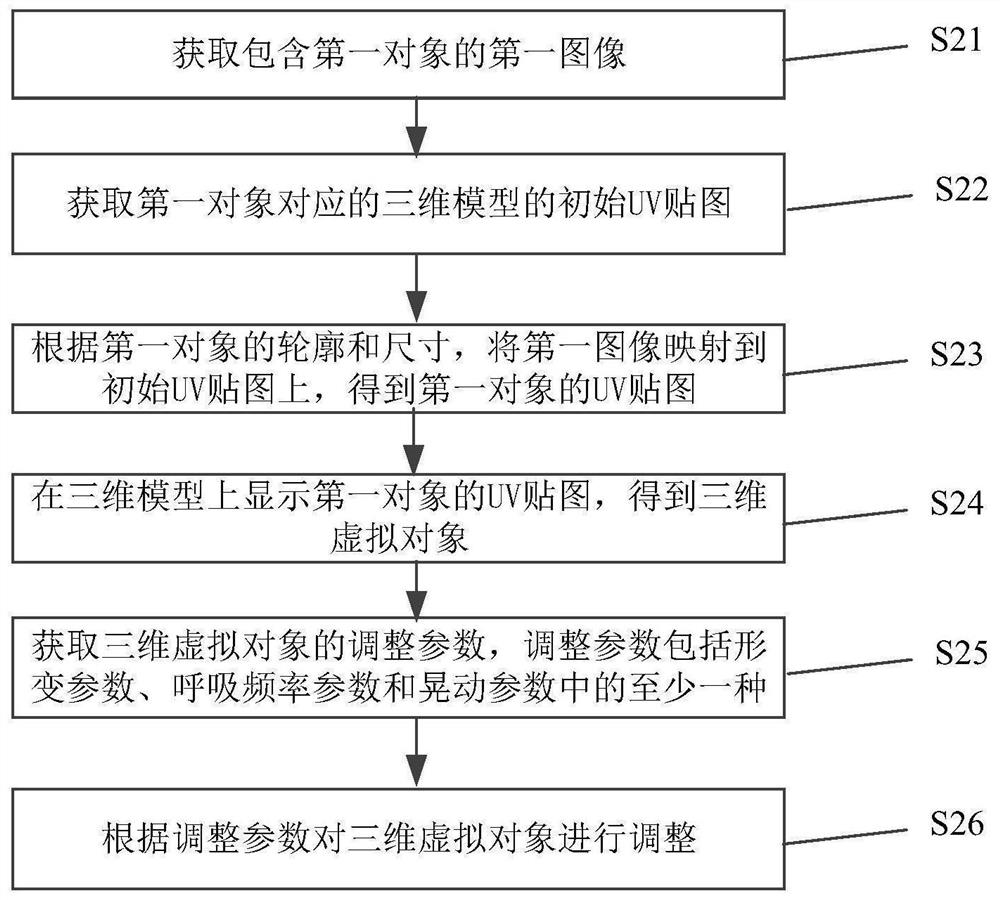

Virtual object control method and device, electronic equipment and storage medium

The invention relates to a virtual object control method and device, electronic equipment and a storage medium. The method comprises the following steps:

- obtaining a first image containing a first object;
- obtaining an initial UV map of a three-dimensional model corresponding to the first object;
- mapping the first image onto the initial UV map according to the contour and size of the first object, to obtain a UV map of the first object;
- displaying that UV map on the three-dimensional model to obtain a three-dimensional virtual object;
- obtaining adjustment parameters for the virtual object, comprising at least one of deformation, breathing-frequency and shaking parameters; and
- adjusting the virtual object according to those parameters.

The method reconstructs a three-dimensional virtual object from a two-dimensional image of the object by means of UV mapping, which is simple to operate and easy to extend. Adjusting the virtual object with the obtained parameters improves its realism and the user experience.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

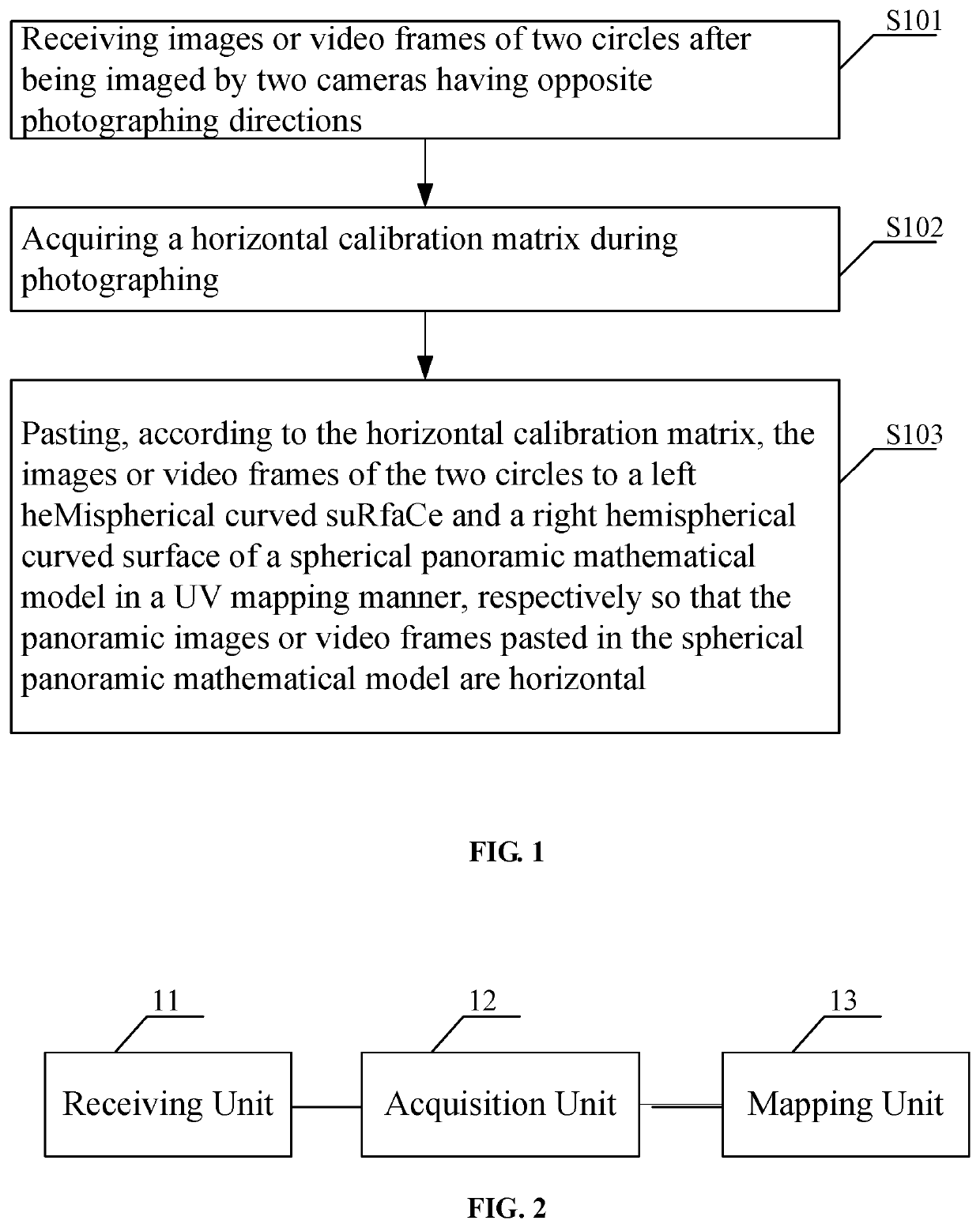

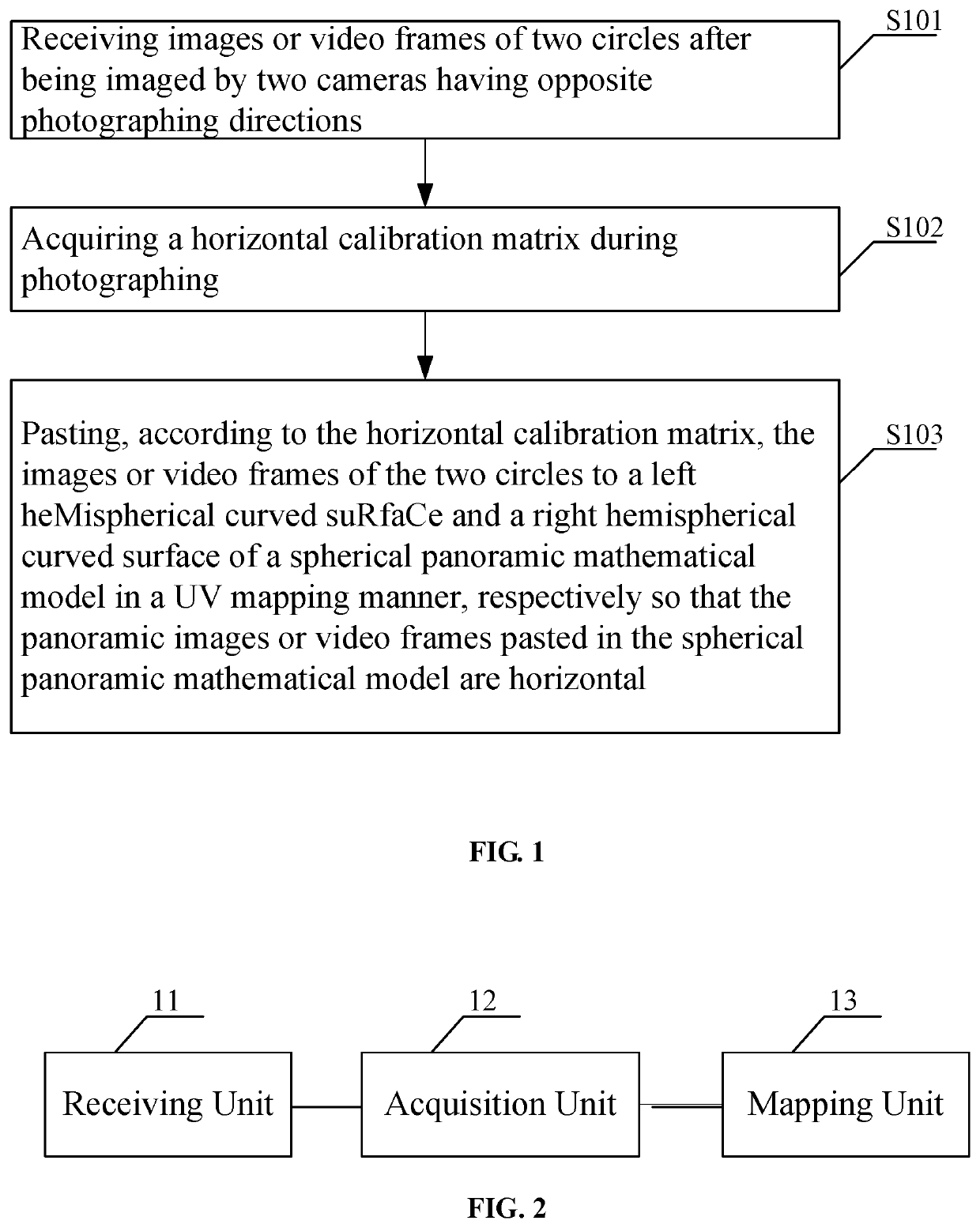

Horizontal calibration method and system for panoramic image or video, and portable terminal

The present invention provides a horizontal calibration method and system for a panoramic image or video, and a portable terminal. The method comprises the following steps:

- receiving the two circular images or video frames captured by two cameras facing in opposite directions;
- obtaining a horizontal calibration matrix at the time of shooting; and
- according to the horizontal calibration matrix, pasting the two circular images or video frames by UV mapping onto the left and right hemispherical surfaces, respectively, of a spherical panoramic mathematical model, so that the panoramic image or video frame on the spherical model is level.

As a result, even when the panoramic camera is not level while shooting, the panoramic image or video frame pasted onto the spherical panoramic model is still level.

Owner:SHENZHEN ARASHI VISION CO LTD
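
The calibration matrix works by rotating viewing directions before they are mapped to texture coordinates on the sphere. The following is a minimal sketch of where such a matrix fits. It assumes a 3x3 rotation matrix, a y-up convention and an equirectangular (u, v) layout with v = 0 at the zenith. It does not reproduce the patent's dual-fisheye hemisphere pasting, and the function name is hypothetical.

```python
import math


def level_and_map(direction, calib):
    """Rotate a viewing direction by a levelling matrix, then map it to equirectangular (u, v)."""
    x, y, z = (sum(calib[i][j] * direction[j] for j in range(3)) for i in range(3))
    r = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)                        # longitude around the vertical axis
    lat = math.asin(max(-1.0, min(1.0, y / r)))   # clamp guards against rounding
    u = (lon + math.pi) / (2 * math.pi)
    v = (math.pi / 2 - lat) / math.pi
    return u, v


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(level_and_map((0.0, 0.0, 1.0), identity))  # (0.5, 0.5): straight ahead, on the horizon
```

If the camera was pitched while shooting, the matching rotation sends its "forward" ray to the correct latitude. For example, the matrix [[1, 0, 0], [0, 0, -1], [0, 1, 0]] maps it to the nadir (v = 1), so the horizon in the stitched panorama stays level.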

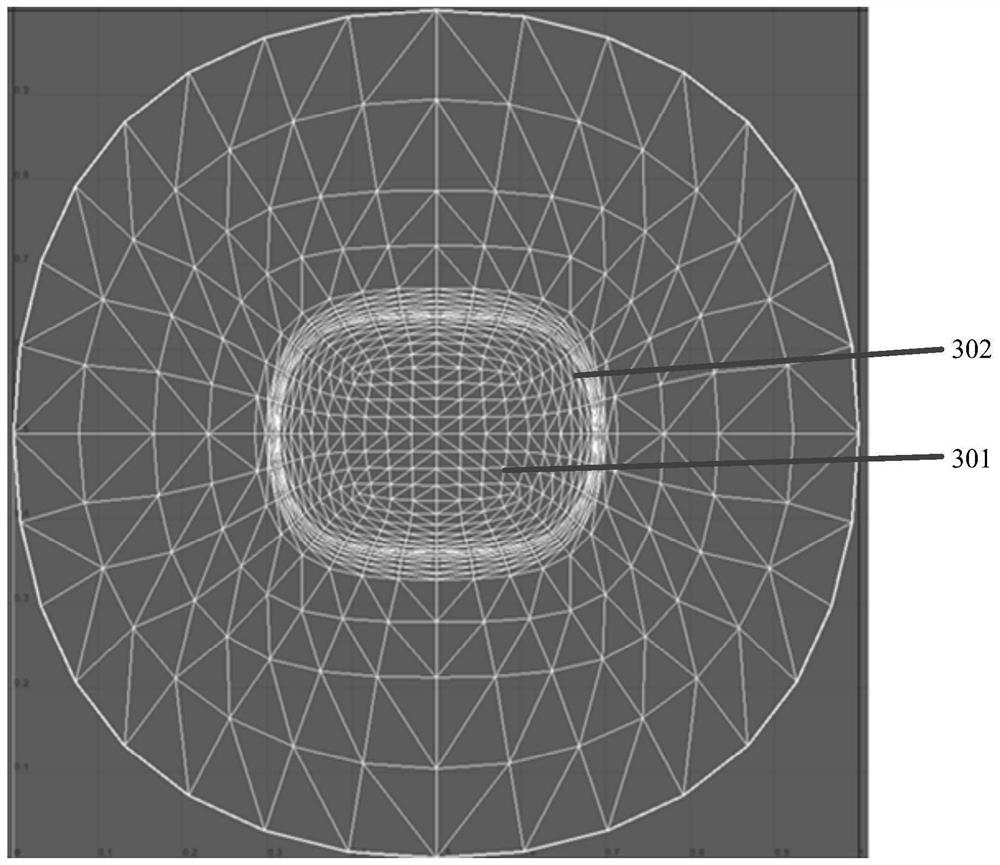

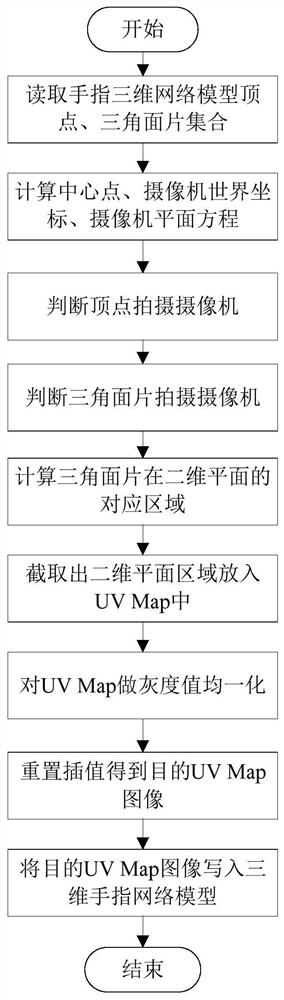

Finger three-dimensional model texture mapping method based on UV mapping

Status: Active | Publication: CN113012271A | Keywords: Solve the impact of finger recognition; Addressing the impact of identification; 3D-image rendering; 3D modelling; Pattern recognition; UV mapping

The invention provides a texture mapping method for three-dimensional finger models based on UV mapping. The method comprises the following steps:

- obtaining, from a three-dimensional finger mesh model, the coordinate set of all vertices forming the finger contour and the set of triangular patches;
- selecting the coordinate-system origins of any three cameras to define a spatial plane, and solving the equation of the plane on which the cameras lie;
- mapping the texture of each triangular patch of the model from three dimensions to two dimensions, and applying interpolation and average gray-value processing; and
- writing the resulting two-dimensional texture information back onto the untextured finger model by UV mapping, to obtain a three-dimensional finger mesh model with texture information.

The reconstructed finger mesh model essentially retains the complete finger texture and fits the real finger, so less finger feature information is lost. It effectively counteracts the effect of finger posture and position on recognition and substantially improves finger recognition accuracy.

Owner:SOUTH CHINA UNIV OF TECH
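
The second step reduces to finding the plane through three camera origins. The following is a minimal sketch using the cross product of two edge vectors. It adds a signed-distance helper that shows how points could then be related to that plane. The abstract does not say how the plane is used afterwards, so that helper and both function names are illustrative assumptions.

```python
def plane_through(p0, p1, p2):
    """Coefficients (a, b, c, d) of the plane a*x + b*y + c*z + d = 0 through three points."""
    u = [p1[i] - p0[i] for i in range(3)]
    w = [p2[i] - p0[i] for i in range(3)]
    # Plane normal = u x w.
    a = u[1] * w[2] - u[2] * w[1]
    b = u[2] * w[0] - u[0] * w[2]
    c = u[0] * w[1] - u[1] * w[0]
    if a == 0 and b == 0 and c == 0:
        raise ValueError("the three camera origins are collinear")
    d = -(a * p0[0] + b * p0[1] + c * p0[2])
    return a, b, c, d


def signed_distance(point, plane):
    """Signed distance from a point to the plane (positive on the normal's side)."""
    a, b, c, d = plane
    return (a * point[0] + b * point[1] + c * point[2] + d) / (a * a + b * b + c * c) ** 0.5


cameras = [(0, 0, 1), (1, 0, 1), (0, 1, 1)]
print(plane_through(*cameras))  # (0, 0, 1, -1), i.e. the plane z = 1
```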

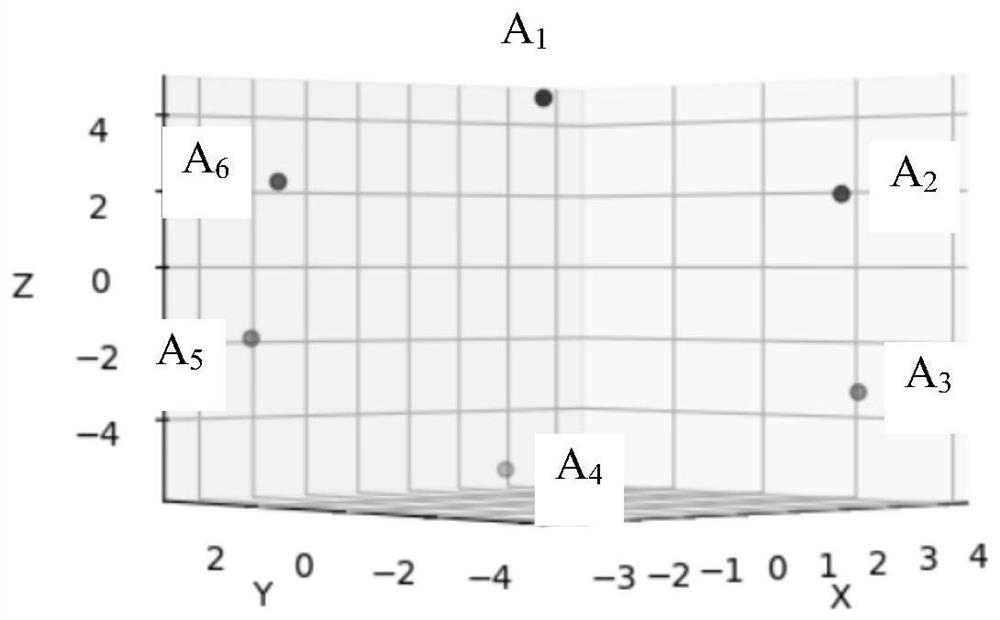

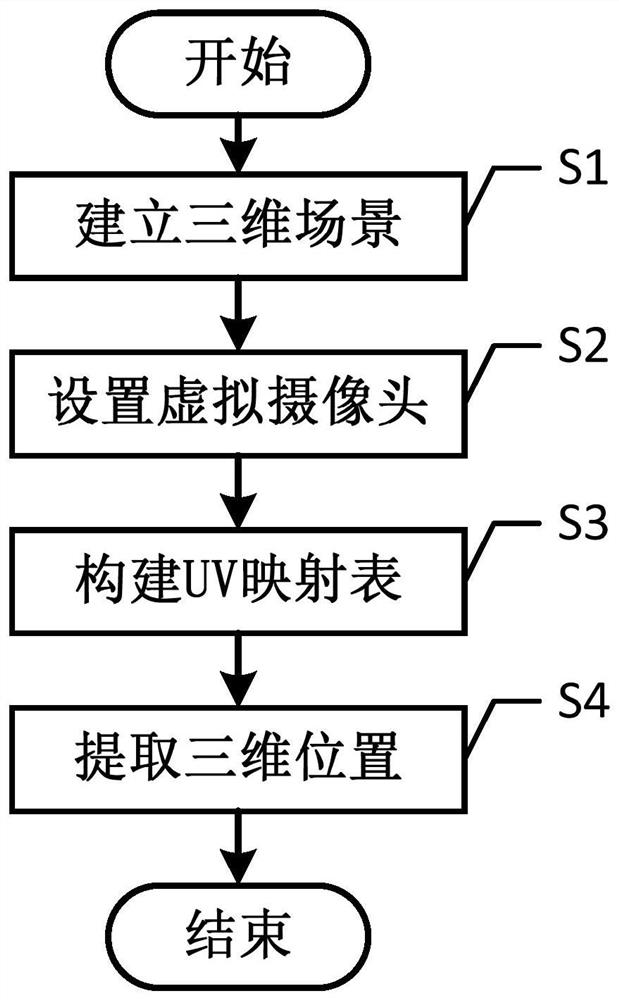

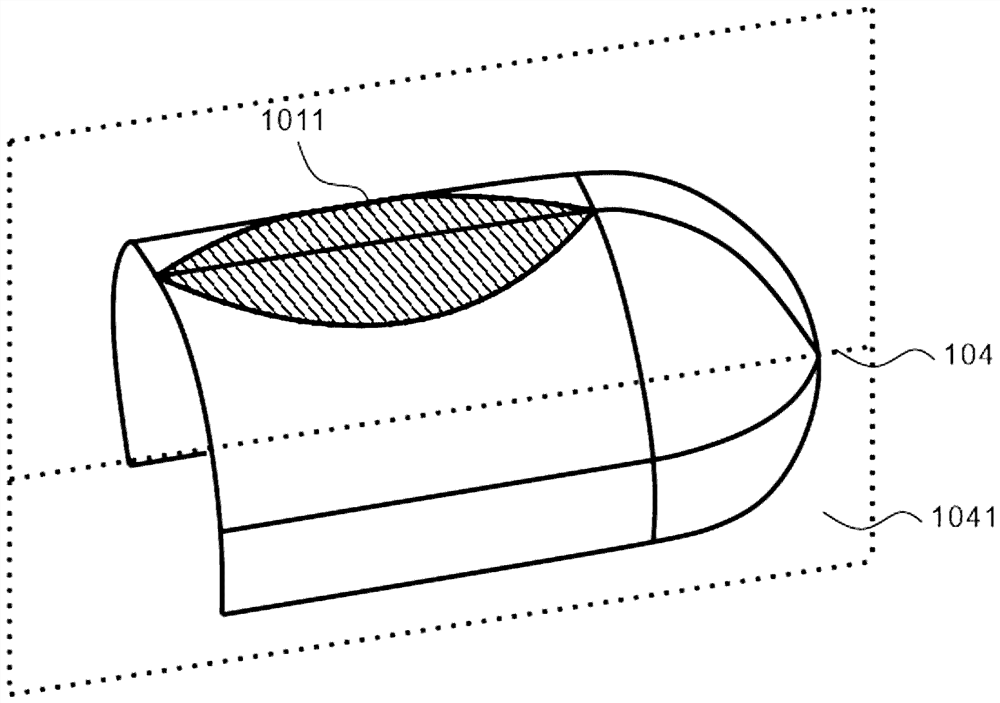

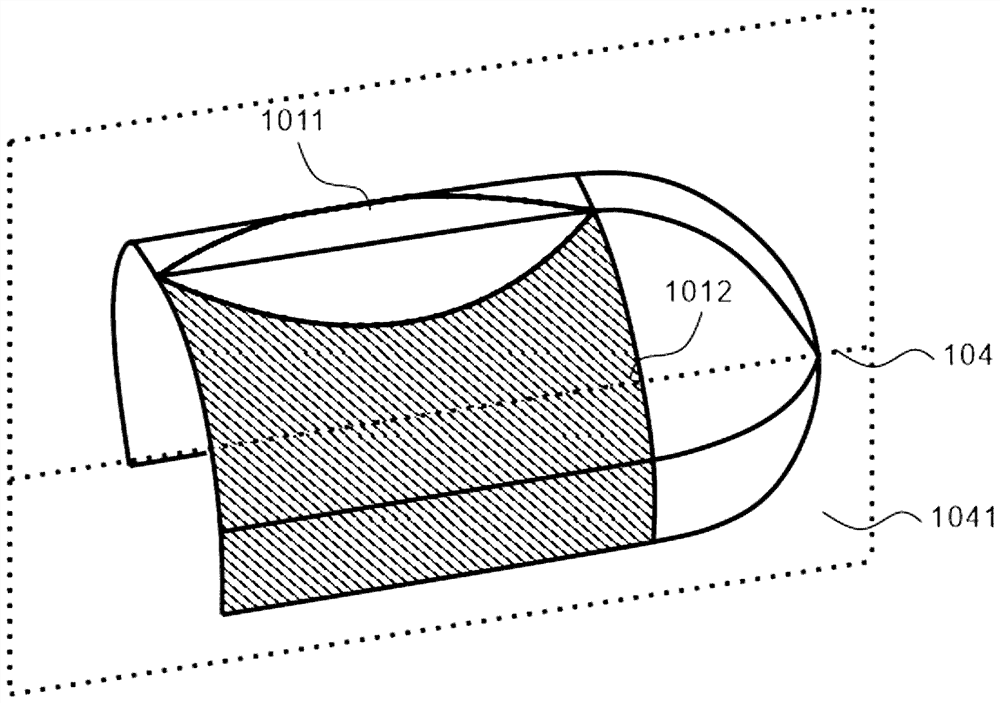

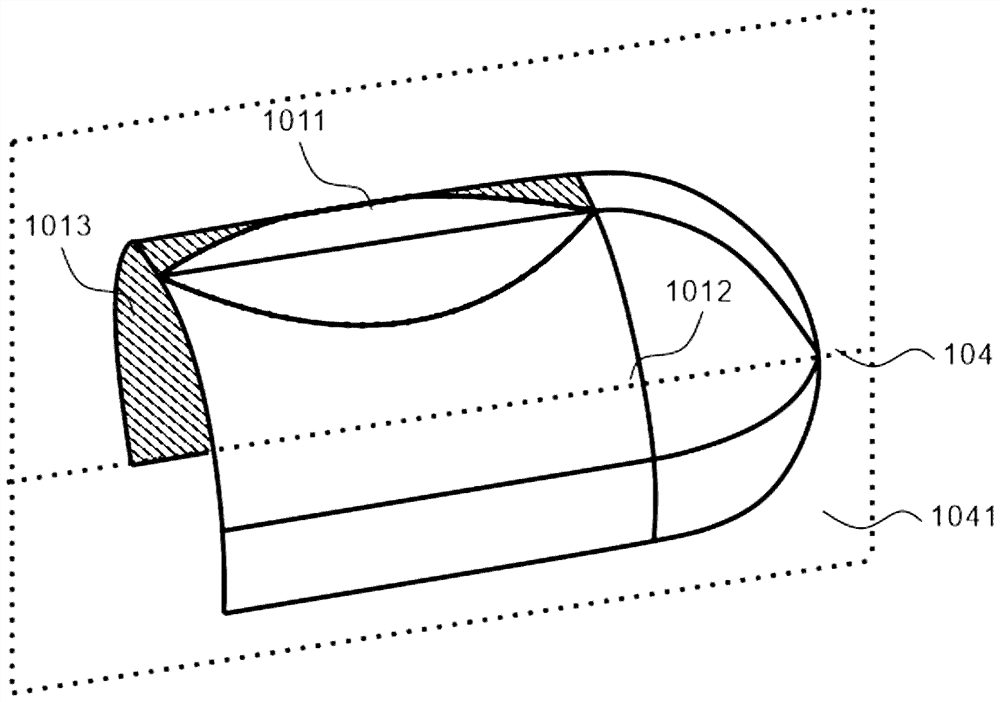

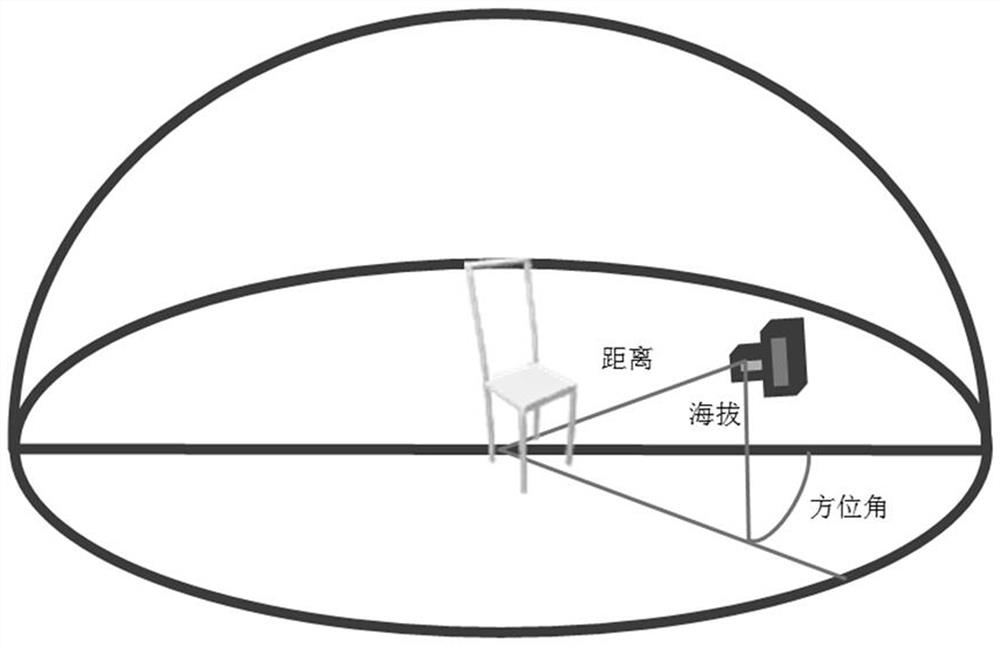

Video image three-dimensional position extraction method

Status: Pending | Publication: CN114220042A | Keywords: Reduce labor costs; Meet real-time requirements; Character and pattern recognition; Radiology; UV mapping

The invention discloses a method for extracting three-dimensional positions from video images. It comprises the following steps:

- S1: building a three-dimensional scene of the real site with a 3D modeling tool.
- S2: placing a virtual camera in the three-dimensional scene at the position corresponding to each real video capture device, and calibrating the angle between the capture device and the virtual camera.
- S3: constructing a UV mapping table for the three-dimensional scene with a shader.
- S4: using the UV mapping table to extract the three-dimensional position corresponding to each pixel of the video image captured by each virtual camera, so that a three-dimensional position can be found quickly from a pixel position in the video image.

The invention thereby solves the problem of automatically extracting three-dimensional positions from video images.

Owner:成都智鑫易利科技有限公司
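
The UV mapping table is essentially a per-pixel lookup from image position to scene position. The abstract builds it with a shader. A shader-based version would typically render each visible surface's position into a texture. The following is a minimal CPU stand-in under stated assumptions: the virtual camera sits at the origin looking down +z, it is a pinhole model, and the scene is given as sample points. A depth test keeps only the nearest point per pixel, as a z-buffer would. The function name and parameters are hypothetical.

```python
import math


def build_position_table(points, fx, fy, cx, cy, width, height):
    """Pixel -> 3D position lookup table for one virtual camera at the origin looking down +z."""
    table, depth = {}, {}
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera
        px = math.floor(fx * x / z + cx)
        py = math.floor(fy * y / z + cy)
        if not (0 <= px < width and 0 <= py < height):
            continue  # outside the image
        if z < depth.get((px, py), math.inf):  # keep the nearest surface only
            depth[(px, py)] = z
            table[(px, py)] = (x, y, z)
    return table


scene = [(0.0, 0.0, 2.0), (0.0, 0.0, 5.0), (1.0, 0.0, 2.0)]
table = build_position_table(scene, 10.0, 10.0, 5.0, 5.0, 10, 10)
print(table.get((5, 5)))  # (0.0, 0.0, 2.0): the nearer of the two points on that ray
```

Once the table is built, finding the 3D position behind a pixel is a single dictionary lookup. That is the speed-up the abstract claims for step S4.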

Video processing method, device and related products based on 720 capsule screen

Status: Active | Publication: CN112203157B | Keywords: Easy to watch in real time; Easy to watch; Picture reproducers using projection devices; Selective content distribution; Surface display; Frame sequence

The embodiments of the present application provide a video processing method, device and related products based on a 720 capsule screen. The method includes the following steps:

- converting the video to be played into a chronologically ordered sequence of video frames, where the aspect ratio of the frame images ranges from 16:9 to 1:1.5;
- determining the corresponding UV conversion relationship according to the aspect ratio of the frame images;
- performing UV conversion on the frame images according to that relationship, to obtain video-frame UV maps; and
- generating, from the video-frame UV maps, a video file for display on the inner surface of the 720 capsule screen.

With this solution, traditional rectangular films (aspect ratio 16:9 to 1:1.5) can be viewed panoramically and correctly on the 720 capsule screen without distortion. This gives users a panoramic, immersive viewing experience when watching traditional films on the 720 capsule screen.

Owner:首望体验科技文化有限公司

A method for real-time dynamic generation of feathers in a bird torso model

The invention discloses a method for dynamically generating feathers on a bird body model in real time. The specific steps are as follows.

First, UV mapping is performed on a polygon model of the bird body. A local coordinate system is set up at each vertex, and the direction vectors of the feather shafts are specified in these vertex local coordinate systems.

Next, a particle system is generated, with all particles constrained to the faces of the polygon model. The repulsive force between particles represents the feather width. Once the system has evolved to a static state, the particle positions are taken as the feather follicle positions, and the type of each feather is chosen at random.

After the bird model is animated and deformed, the vertex local coordinate systems are updated and the feather-shaft directions for the current frame are computed. A feather local coordinate system is set up with each follicle position as its origin. A reference NURBS surface patch is constructed for each feather according to the set width and length, and the feather is generated on that NURBS patch. These steps are repeated for every frame of the animation.

The method achieves penetration-free coverage among the feathers and generates dynamic feathers in real time.

Owner:北京春天影视科技有限公司

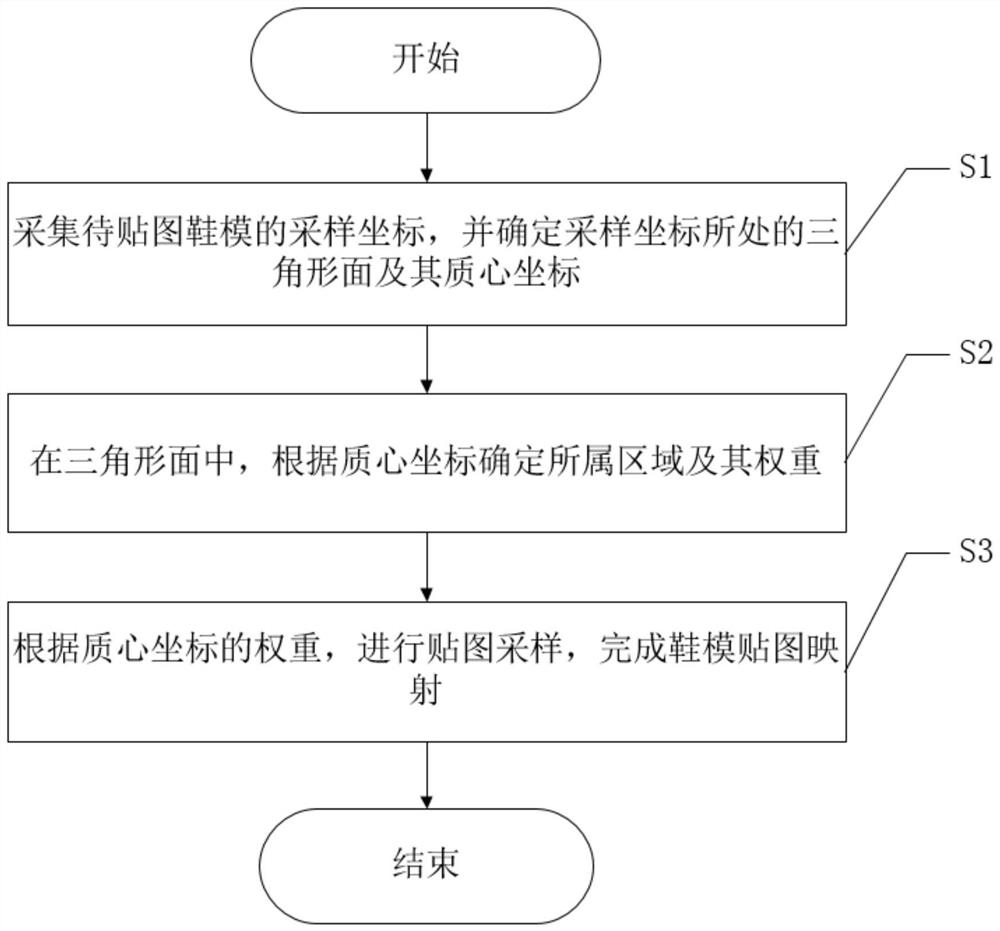

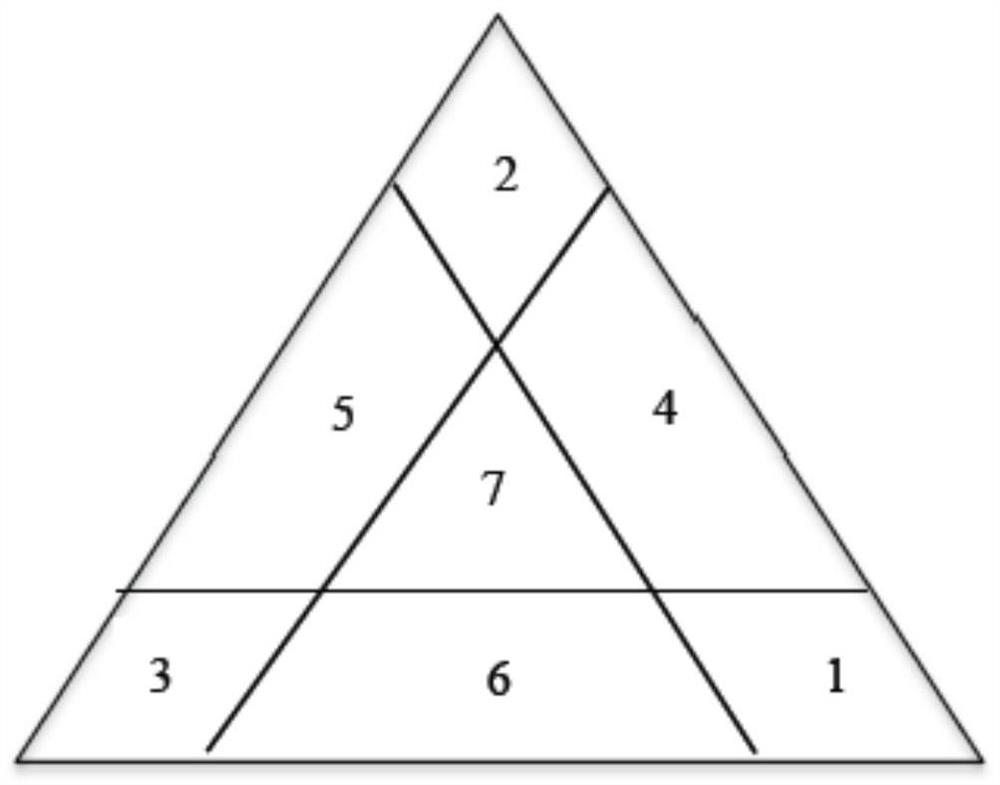

Intelligent shoe mold mapping method

Status: Pending | Publication: CN114357738A | Keywords: Reduce licensing costs; Reduce labor costs; Design optimisation/simulation; Special data processing applications; Engineering; UV mapping

The invention discloses an intelligent shoe mold texture mapping method. It comprises the following steps:

- S1: collecting the sampling coordinates of the shoe mold to be mapped, and determining the triangular face on which each sampling coordinate lies and its barycentric coordinates within that face.
- S2: determining, from the barycentric coordinates, the region of the triangular face to which the sample belongs and its weights.
- S3: performing texture sampling according to the barycentric weights to complete the shoe mold mapping.

The method provides a general, automatic UV mapping scheme for model textures, so modelers do not need to create texture UV data by hand. This greatly reduces labor cost, time cost and the licensing cost of the corresponding modeling software.

Owner:成都中鱼互动科技有限公司
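
The weighting in these steps corresponds to barycentric coordinates. A point inside a triangle is a weighted average of the three vertices, and the same weights blend the vertices' UVs. The following is a minimal sketch using the common dot-product formulation, which works for 2D or 3D points. It assumes the triangle holding each sample has already been found. A sample lies inside a triangle when all three weights are non-negative. The function names are hypothetical, and the sketch is not the patent's exact procedure.

```python
def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def barycentric(p, a, b, c):
    """Barycentric weights (wa, wb, wc) of point p with respect to triangle abc."""
    v0, v1, v2 = _sub(b, a), _sub(c, a), _sub(p, a)
    d00, d01, d11 = _dot(v0, v0), _dot(v0, v1), _dot(v1, v1)
    d20, d21 = _dot(v2, v0), _dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return 1.0 - wb - wc, wb, wc


def inside(weights, eps=1e-9):
    """True when the point lies in the triangle (all weights non-negative)."""
    return all(w >= -eps for w in weights)


def interpolate_uv(p, tri, tri_uv):
    """UV at sample point p, blended from the triangle's vertex UVs by barycentric weights."""
    w = barycentric(p, *tri)
    return tuple(sum(wi * uv[k] for wi, uv in zip(w, tri_uv)) for k in range(2))


tri = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
print(barycentric((0.25, 0.25, 0.0), *tri))  # (0.5, 0.25, 0.25)
```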

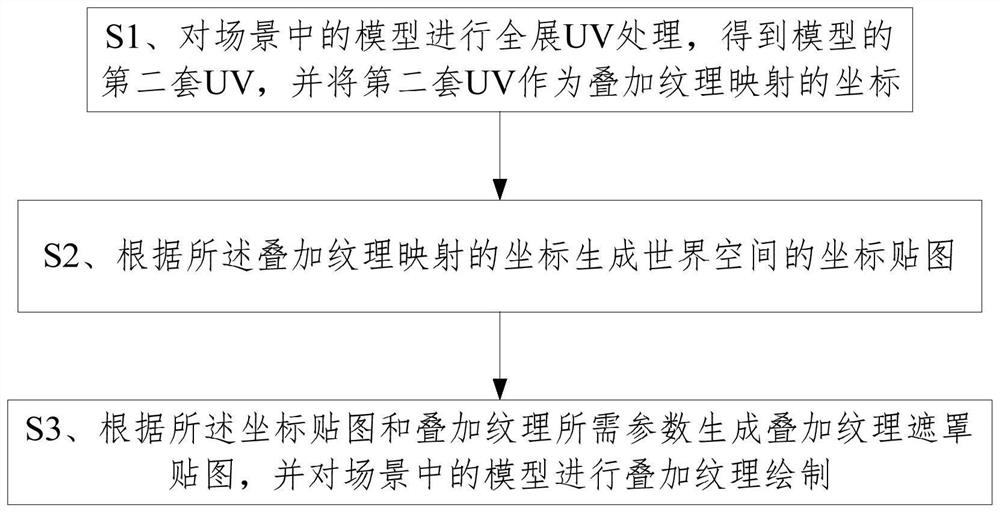

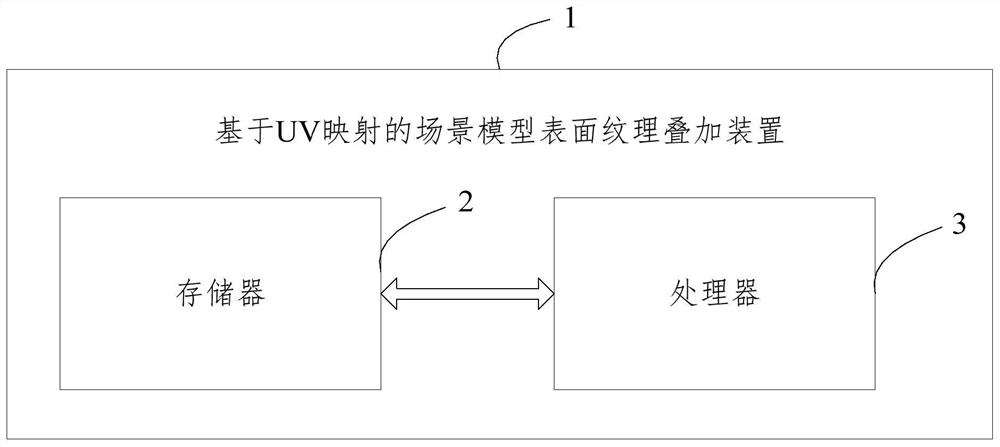

Scene model surface texture superposition method and device based on UV mapping

The invention provides a method and device for superimposing textures on the surfaces of scene models based on UV mapping. The method comprises the following steps:

- fully unwrapping the UVs of each model in the scene to obtain a second UV set, and using this second UV set as the coordinates of the superimposed texture map;
- generating a world-space coordinate map from those coordinates;
- generating a superimposed-texture mask map from the coordinate map and the parameters required for texture superposition; and
- drawing the superimposed texture on the models in the scene.

Because the second UV set is generated from the fully unwrapped scene model, the texture is mapped in world coordinate space. The map therefore fits closely over the model surfaces, and the rendering effect is improved.

Owner:FUJIAN SHUBO INFORMATION TECH CO LTD
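
The core idea here is to drive the overlay from world-space coordinates rather than each model's own UVs, so that one texture lines up across every model in the scene. The following is a minimal sketch under clearly hypothetical choices. It uses a top-down planar projection of world x/z as a stand-in for the patent's second UV set. It uses a height-based mask as the example of the "parameters required for texture superposition". It then blends the overlay over the base colour. All names and parameters are illustrative.

```python
def world_planar_uv(position, tile_size):
    """Stand-in second UV set: world-space x and z divided by a tiling size."""
    return position[0] / tile_size, position[2] / tile_size


def overlay_color(base, sample_overlay, position, tile_size, mask_height, fade):
    """Blend an overlay texture over a base colour using a world-space height mask.

    sample_overlay(u, v) returns the overlay colour. The mask is 0 below mask_height
    and rises linearly to 1 over `fade` world units (a hypothetical mask rule).
    """
    u, v = world_planar_uv(position, tile_size)
    overlay = sample_overlay(u, v)
    mask = min(max((position[1] - mask_height) / fade, 0.0), 1.0)
    return tuple(b * (1.0 - mask) + o * mask for b, o in zip(base, overlay))


def white(u, v):
    return (1.0, 1.0, 1.0)


print(overlay_color((0.0, 0.0, 0.0), white, (3.0, 2.0, 4.0), 2.0, 1.0, 2.0))  # (0.5, 0.5, 0.5)
```

Neighbouring models share the same world coordinates, so the overlay continues across their seams instead of restarting at each model's UV boundary.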

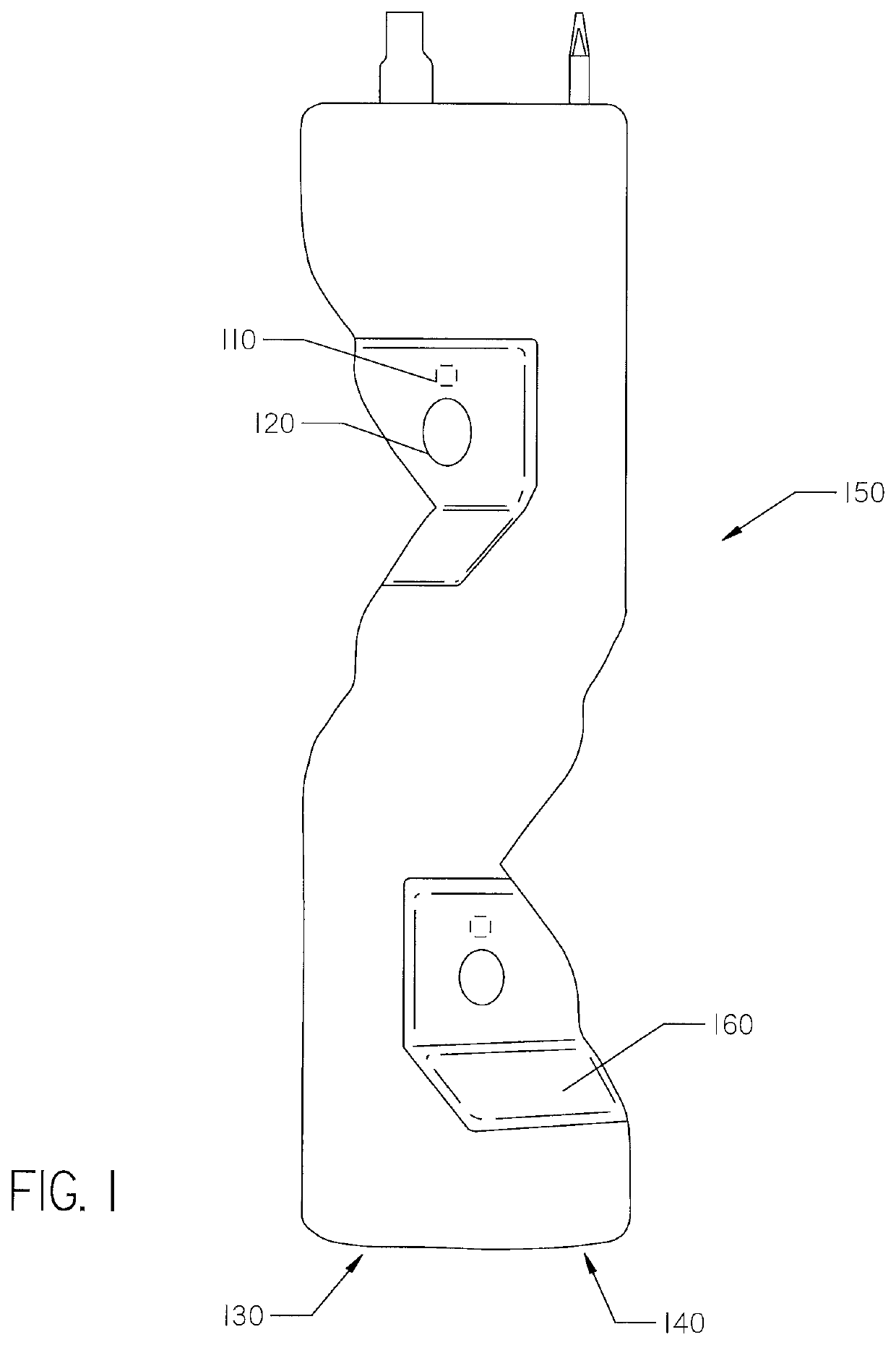

3D asset inspection

Status: Active | Publication: US11067388B2 | Keywords: Facilitating subsequent ability; Using optical means; Electromagnetic wave reradiation; 3d sensor; Odometry

Systems and methods for physical asset inspection are provided. According to one embodiment, a probe is positioned to multiple data capture positions with reference to a physical asset. For each position: odometry data is obtained from an encoder and / or an IMU; a 2D image is captured by a camera; a 3D sensor data frame is captured by a 3D sensor, having a view plane overlapping that of the camera; the odometry data, the 2D image and the 3D sensor data frame are linked and associated with a physical point in real-world space based on the odometry data; and switching between 2D and 3D views within the collected data is facilitated by forming a set of points containing both 2D and 3D data by performing UV mapping based on a known positioning of the camera relative to the 3D sensor.

Owner:CHARLES MACHINE WORKS
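UV mapping "based on a known positioning of the camera relative to the 3D sensor" can be read as projecting each 3D point into the camera image. A minimal sketch, assuming an ideal pinhole camera with no lens distortion and a calibrated rotation/translation from the sensor frame to the camera frame (the function names and parameters are hypothetical, not from the patent):

```python
def project_to_uv(points, rotation, translation, fx, fy, cx, cy, width, height):
    """
    Pinhole projection of 3D-sensor points into the camera image.
    rotation (3x3 nested lists) and translation (3-vector) map the sensor frame to the
    camera frame and are assumed known from calibration. Returns (point, (u, v)) pairs
    with u, v normalised to [0, 1]; points behind the camera or outside the image are skipped.
    """
    pairs = []
    for p in points:
        # Transform into the camera frame: X_c = R * X_s + t
        xc, yc, zc = (
            sum(rotation[r][k] * p[k] for k in range(3)) + translation[r] for r in range(3)
        )
        if zc <= 0:
            continue  # behind the camera, cannot be seen
        px = fx * xc / zc + cx  # pixel column
        py = fy * yc / zc + cy  # pixel row
        if 0 <= px < width and 0 <= py < height:
            pairs.append((p, (px / width, py / height)))
    return pairs


def colour_points(pairs, image):
    """Attach the nearest pixel value image[row][col] to each projected 3D point."""
    h, w = len(image), len(image[0])
    return [
        (p, image[min(int(v * h), h - 1)][min(int(u * w), w - 1)])
        for p, (u, v) in pairs
    ]
```

Each returned pair links a 3D point with its 2D image sample, which is the kind of combined 2D/3D point set the abstract describes for switching between views.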

A digital twin method and system based on 3D model matching

Active · CN113822993B · Automate the build · Quick conversion · Character and pattern recognition · 3D-image rendering · Pattern recognition · Silhouette

The invention discloses a digital twin method and system based on 3D model matching. The method includes four steps: model and texture training, model matching, texture fusion, and scene placement. Model and texture training is an offline preprocessing step, while model matching, texture fusion, and scene placement are real-time processing steps. Model training learns the 3D structure from multi-viewpoint silhouette images and reconstructs a polygon mesh; texture training obtains a texture stream through a fixed UV mapping and infers 3D textures from 2D images. Model matching iteratively computes the IoU (intersection over union) between the reconstructed model and the database models and selects the model whose IoU is closest to 1 as the matched model. Texture fusion combines the matched model with the inferred 3D texture stream to form a standard 3D model. Scene placement positions the 3D model accurately in the 3D scene. The method generates 3D model scenes automatically and improves the efficiency of building digital twins.

Owner:ZHEJIANG LAB
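The matching step selects the database model whose IoU with the reconstruction is closest to 1. A minimal sketch of that selection, assuming both the reconstruction and the database models are already aligned and voxelised at the same resolution (the voxel representation and the names `voxelise` and `match_model` are assumptions; the patent does not state how IoU is computed):

```python
import math


def iou(a: set, b: set) -> float:
    """Intersection over union of two sets of occupied voxel indices."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0


def voxelise(points, voxel_size: float) -> set:
    """Quantise surface sample points to integer voxel indices."""
    return {tuple(math.floor(c / voxel_size) for c in p) for p in points}


def match_model(reconstructed: set, database: dict) -> tuple:
    """Iterate over the database and return (name, IoU) of the model with IoU closest to 1."""
    best_name, best_voxels = max(
        database.items(), key=lambda kv: iou(reconstructed, kv[1])
    )
    return best_name, iou(reconstructed, best_voxels)
```

Since IoU never exceeds 1, "closest to 1" is simply the maximum IoU, which is why `max` suffices here.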

A custom pattern forming method

Active · CN109375886B · Easy to use · High precision · Geometric image transformation · Digital output to print units · Personalization · Image resolution

The invention relates to a custom pattern forming method. The user selects a work on the platform and places it in the custom pattern area of a blank canvas on the computer; the position of the work on the 3D model is projected through the UV mapping function; the work is composited onto the 3D model to generate a 3D preview of the real object; after the preview is confirmed, the deformation of the image when converted into the printing area is calculated; the computer adjusts the parameters of the pattern to be printed according to this deformation and transmits the adjusted parameters to the controller of the digital printing machine; and the controller drives the inkjet head to print the adjusted pattern in a single inkjet pass. Shoe uppers (vamps) produced by this method offer strong personalization, no damage to the original shoe, small image deformation, high pixel resolution, and bright colors.

Owner:程志鹏
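The abstract does not define how the "deformation amount" is measured. One common proxy, used here purely as an assumption, is the per-triangle area ratio between the 3D surface and the flat print layout: a ratio of 1.0 means the image is not stretched when wrapped onto the shoe. The scale `uv_to_mm` and all function names are hypothetical.

```python
import math


def tri_area_2d(a, b, c) -> float:
    """Area of a 2D triangle (shoelace formula)."""
    return abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2.0


def tri_area_3d(a, b, c) -> float:
    """Area of a 3D triangle: half the length of the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    return math.sqrt(sum(x * x for x in cross)) / 2.0


def area_stretch(uv_tris, surface_tris, uv_to_mm: float) -> list:
    """
    Per-triangle ratio of 3D surface area to flat print-layout area.
    uv_to_mm (hypothetical) converts UV units to millimetres on the printed sheet.
    >1 means the printed image is stretched on the surface, <1 means it is compressed.
    """
    ratios = []
    for uv, xyz in zip(uv_tris, surface_tris):
        flat = tri_area_2d(*uv) * uv_to_mm ** 2
        ratios.append(tri_area_3d(*xyz) / flat if flat > 0 else float("inf"))
    return ratios
```

A printing pipeline could then pre-compensate regions whose ratio departs from 1.0; how the patented method adjusts the pattern parameters is not specified in the abstract.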

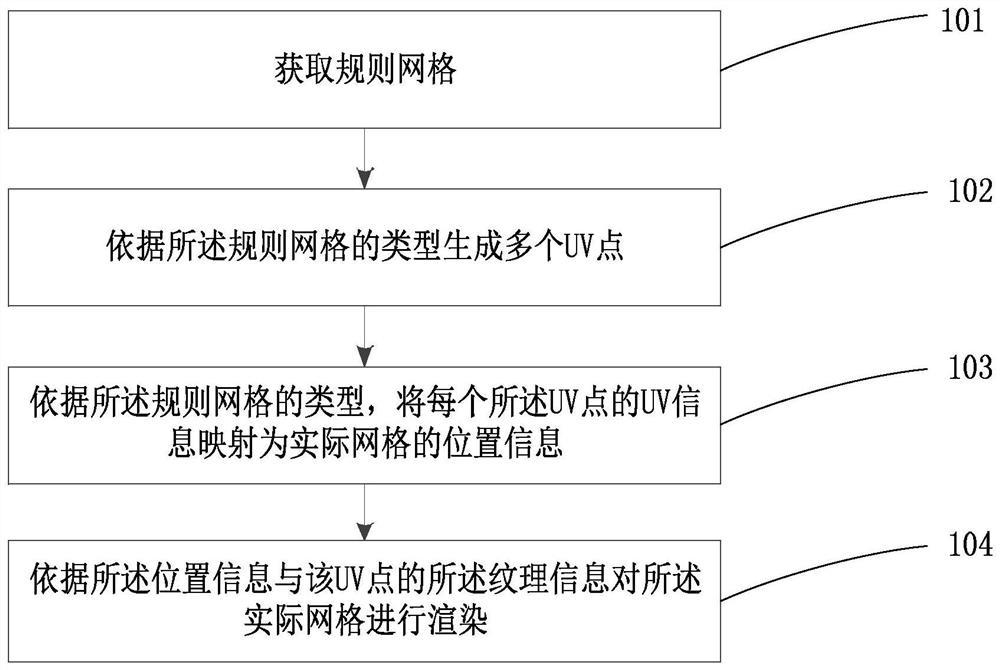

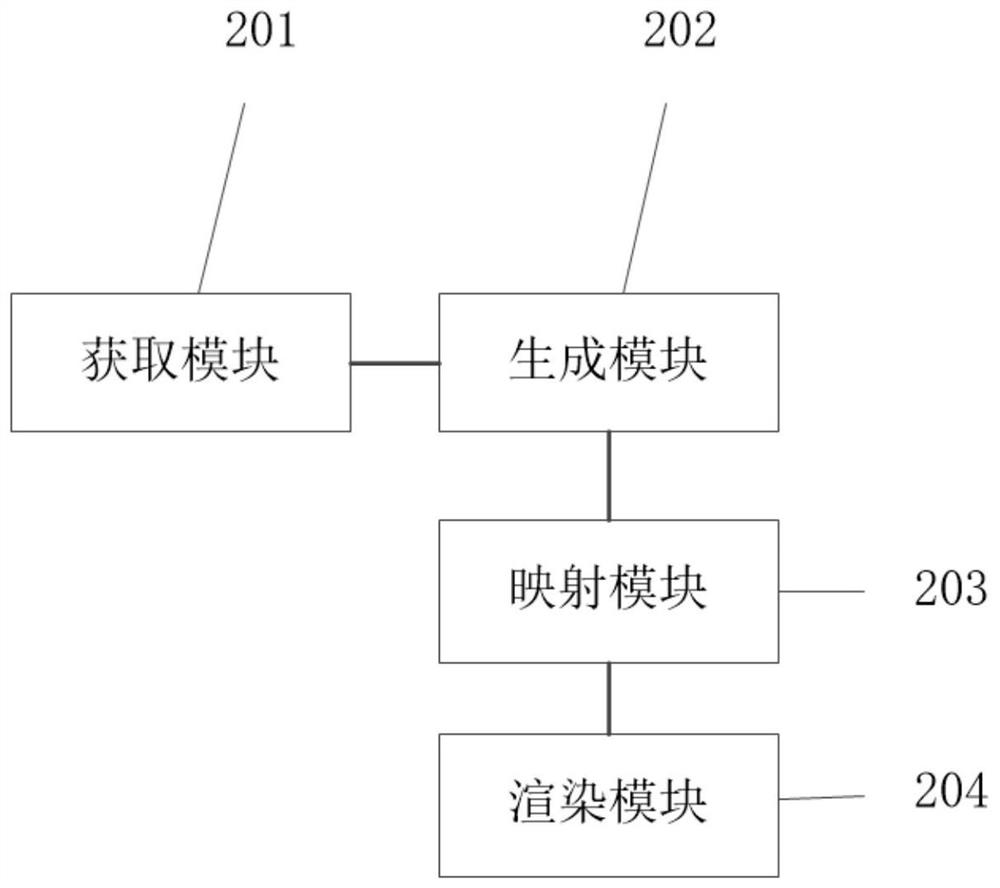

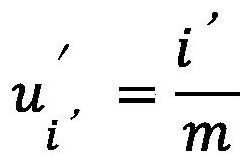

UV mapping method based on rasterization rendering and cloud equipment

Pending · CN113744382A · Realize the rendering effect · Small amount of calculation · 3D-image rendering · Computer resources · Regular grid

The invention belongs to the field of computer technology and relates in particular to a UV mapping method based on rasterization rendering, and to a cloud device. The method comprises: obtaining a regular grid; generating a plurality of UV points according to the type of the regular grid, each UV point carrying UV information and texture information; mapping the UV information of each UV point to position information on an actual grid according to the type of the regular grid; and rendering the actual grid according to the position information and texture information of the UV points. Compared with traditional mapping methods, the method requires less computation, can be accelerated on a GPU to make full use of computing resources, and allows UV points to be modified and adjusted in real time. The invention further provides a cloud device that executes the method, so that the actual grid is rendered using the texture information of the regular grid.

Owner:广州引力波信息科技有限公司
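The abstract leaves "type of the regular grid" and the UV-to-position mapping unspecified. A minimal sketch, assuming a single rectangular grid type whose UV points are mapped onto an actual quad patch by bilinear interpolation of its four corners (the grid type, the nearest-texel sampling, and the function names are all assumptions; GPU acceleration is not shown):

```python
def regular_grid_uv_points(rows: int, cols: int, texture):
    """
    Generate UV points on a regular rows x cols grid. Each point carries its UV
    coordinate and a texture sample (nearest texel of the 2D list `texture`).
    """
    if rows < 2 or cols < 2:
        raise ValueError("need at least a 2 x 2 grid")
    th, tw = len(texture), len(texture[0])
    points = []
    for i in range(rows):
        for j in range(cols):
            u = j / (cols - 1)
            v = i / (rows - 1)
            texel = texture[min(int(v * th), th - 1)][min(int(u * tw), tw - 1)]
            points.append({"uv": (u, v), "texel": texel})
    return points


def map_to_actual_grid(points, corners):
    """
    Map each UV point onto an actual quad patch by bilinear interpolation of its
    corner positions p00, p10, p01, p11 at (u, v) = (0,0), (1,0), (0,1), (1,1).
    """
    p00, p10, p01, p11 = corners
    out = []
    for pt in points:
        u, v = pt["uv"]
        pos = tuple(
            (1 - u) * (1 - v) * p00[k] + u * (1 - v) * p10[k]
            + (1 - u) * v * p01[k] + u * v * p11[k]
            for k in range(len(p00))
        )
        out.append({"position": pos, "texel": pt["texel"]})
    return out
```

Because each point is mapped independently, the loop body is the kind of per-point work that would parallelise naturally on a GPU, and moving a corner or a UV point only requires re-running the mapping.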

A Fast Rendering Method for Virtual Scene and Model

Active · CN107103638B · Improve efficiency · Increase richness · Image rendering · 3D-image rendering · UV mapping · Bloom

The invention discloses a fast rendering method and device for a virtual scene and a model. The method first obtains the rendering request for the virtual scene and the model to be rendered, together with a standard material library. According to the rendering request, it creates a read/write file recording the correspondence among the scene parameters, the model, and the materials, and after loading selects the material for the model to be rendered from the pre-established standard material library. It then sets and adjusts the scene parameters according to the rendering request, renders the model with the selected material, and adjusts the material parameters until the rendering satisfies the request. When a single model corresponds to several materials, the materials are UV-mapped directly onto the surface of the 3D model. Finally, a highlight effect and its corresponding material are generated from a hand-drawn trajectory according to the rendering request, and the model's highlight effect is rendered. Building on a large improvement in the efficiency of virtual scene and model rendering, the method offers efficiency, openness, automation, tool integration, light weight, and sustainability.

Owner:HANGZHOU VERYENGINE TECH CO LTD
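The abstract does not explain how a hand-drawn trajectory becomes a highlight. One plausible interpretation, offered only as an assumption, is to treat the stroke as a polyline in UV space and build a highlight mask whose intensity falls off linearly with distance from the stroke. The falloff shape, `radius`, and the function names are hypothetical.

```python
import math


def dist_point_segment(p, a, b) -> float:
    """Euclidean distance from point p to segment ab in UV space."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    len2 = dx * dx + dy * dy
    # Parameter of the closest point on the segment, clamped to [0, 1]
    t = 0.0 if len2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def stroke_highlight_mask(stroke, size: int, radius: float):
    """
    Highlight intensity per texel of a size x size UV map: 1.0 on the stroke,
    fading linearly to 0.0 at `radius` (in UV units) from the nearest segment.
    """
    if len(stroke) < 2:
        raise ValueError("a stroke needs at least two points")
    mask = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            p = ((x + 0.5) / size, (y + 0.5) / size)  # texel centre
            d = min(dist_point_segment(p, stroke[i], stroke[i + 1]) for i in range(len(stroke) - 1))
            mask[y][x] = max(0.0, 1.0 - d / radius)
    return mask
```

The resulting mask could then drive the intensity of a highlight material sampled through the model's UVs.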


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com