95 results for "Expressed emotion" patented technology

Expressed emotion (EE) is a measure of the family environment based on how the relatives of a psychiatric patient spontaneously talk about the patient. It is a psychological term applied specifically to psychiatric patients, and it differs greatly from the everyday phrase "emotional expression" and from the related psychological concept of "family expressiveness"; frequent communication and natural expression of emotion among family members is a healthy, beneficial habit.

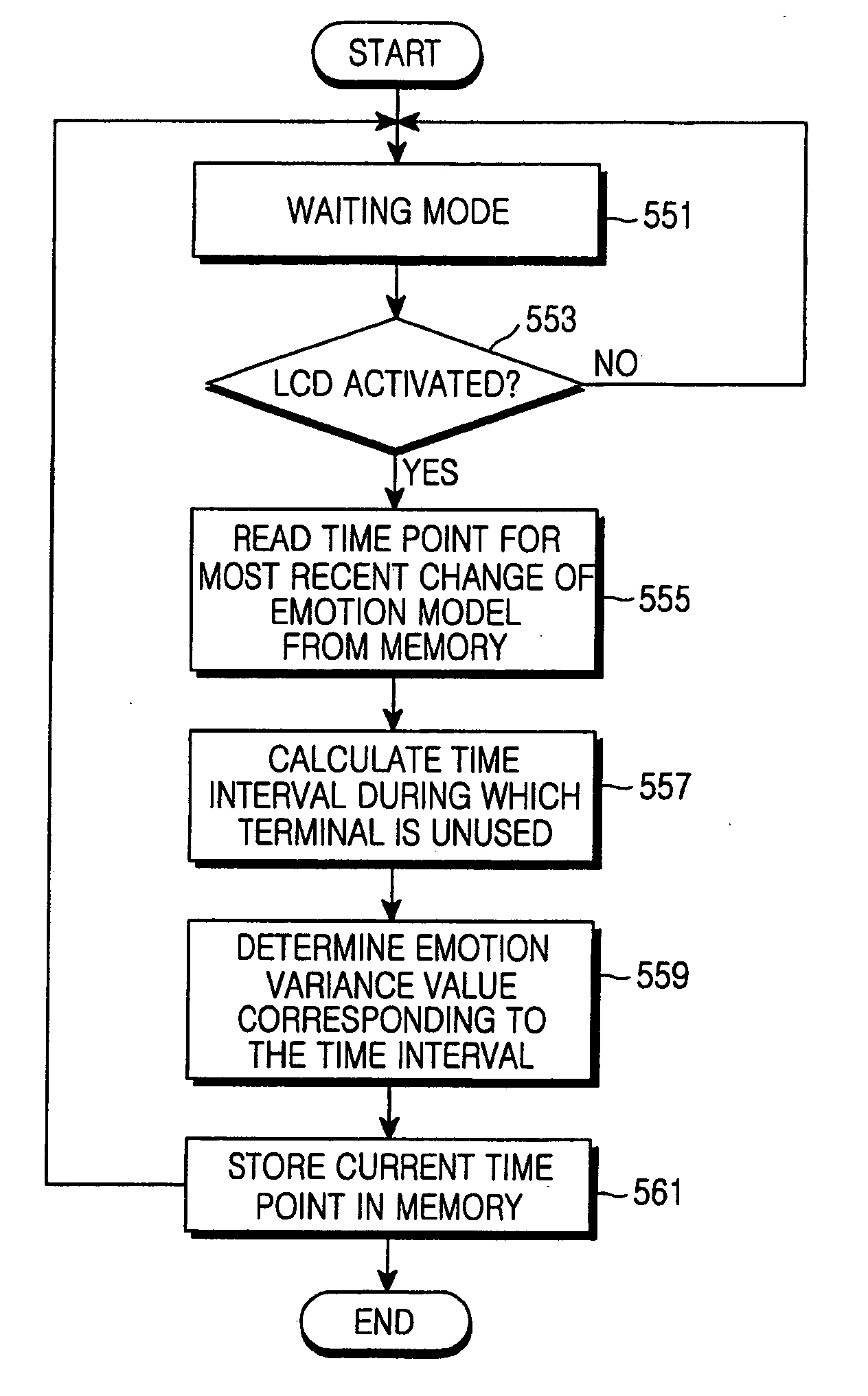

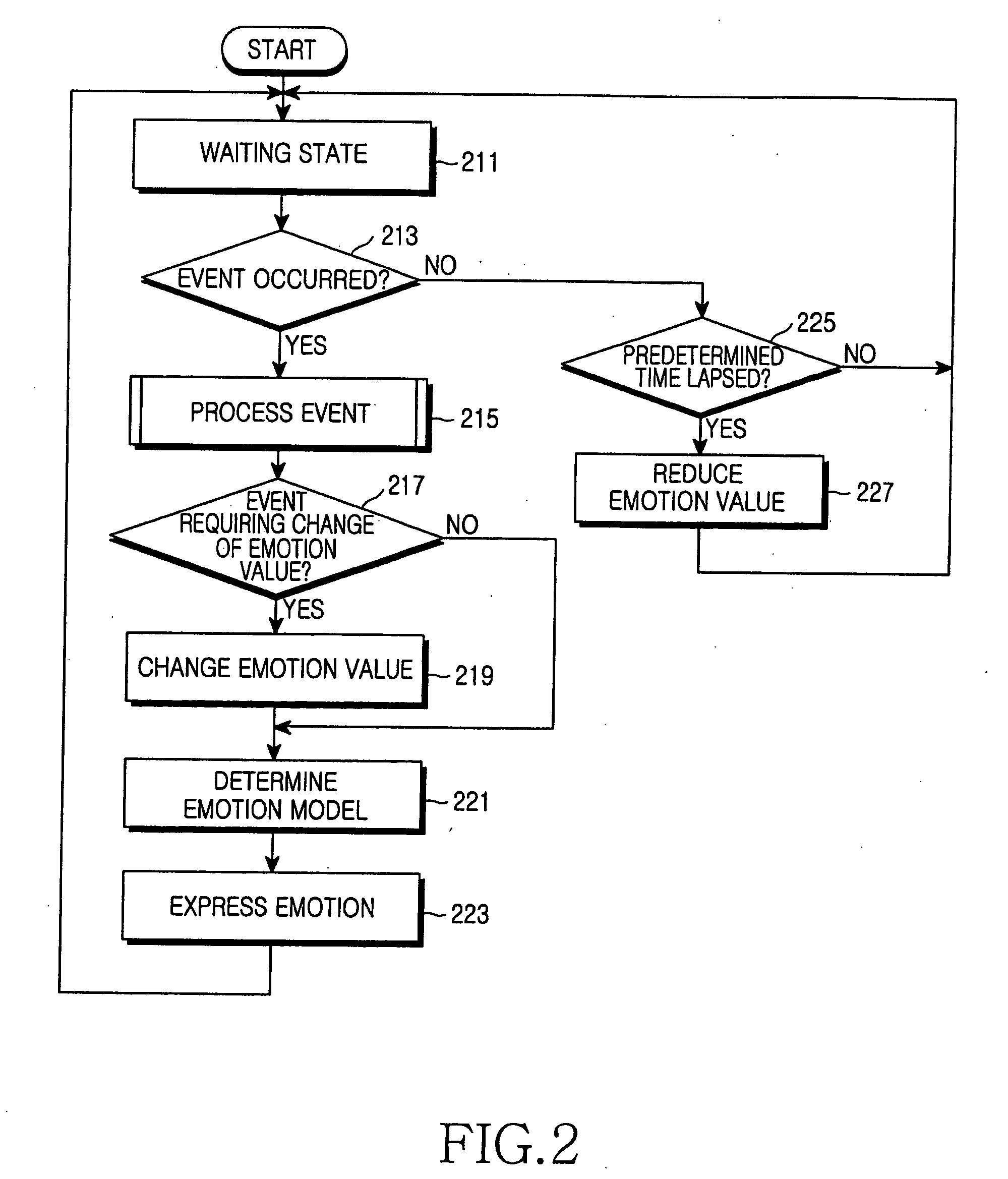

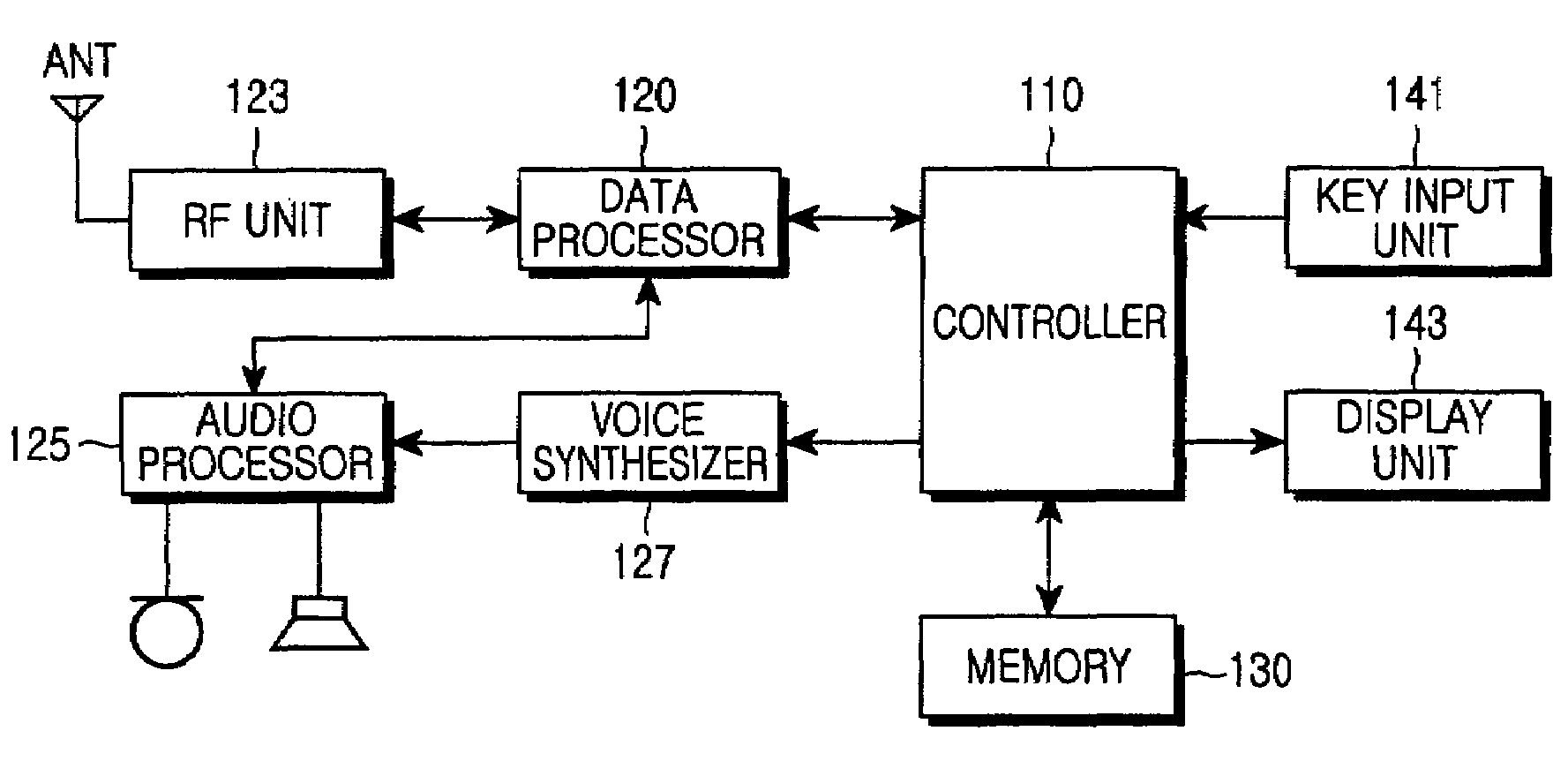

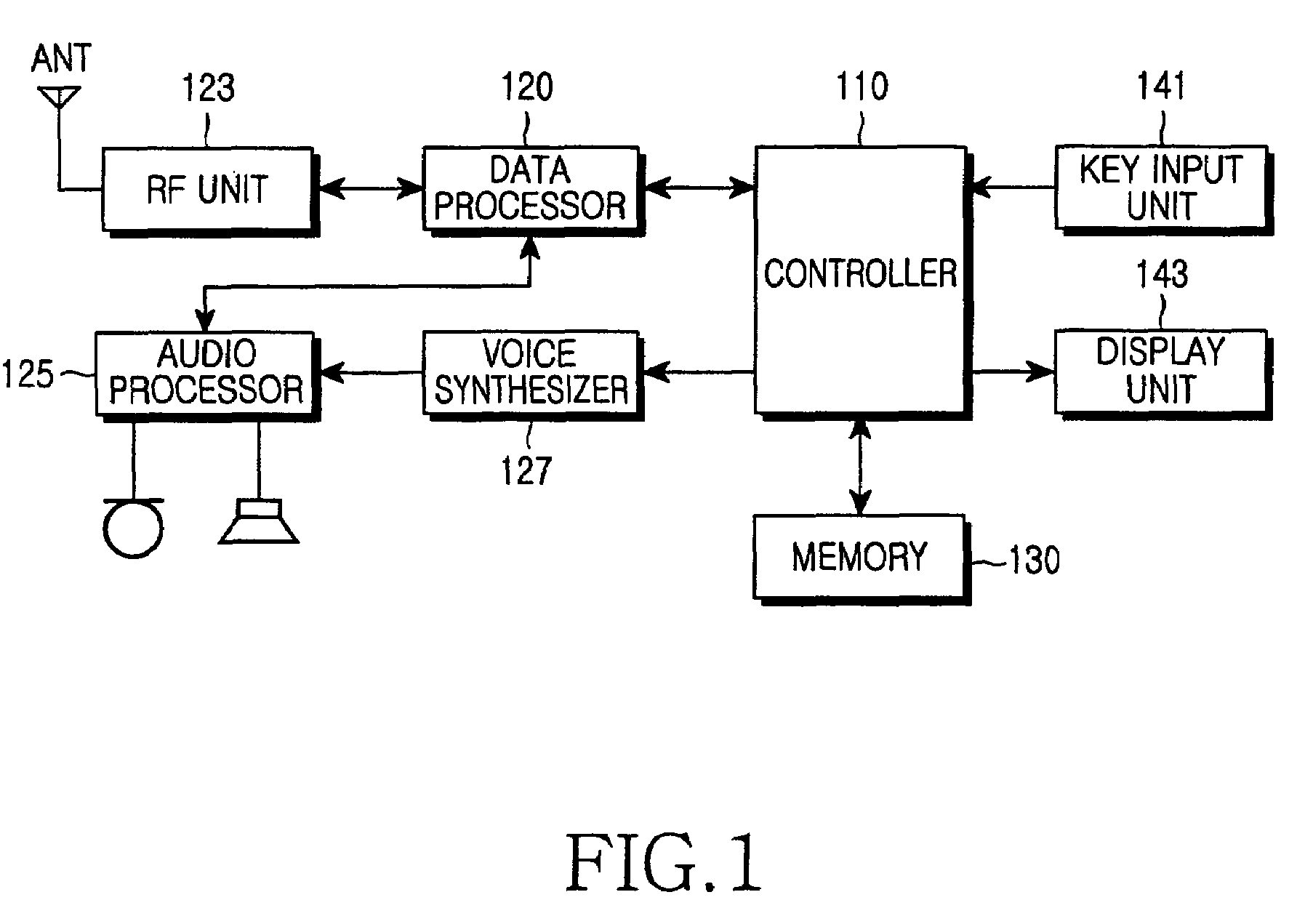

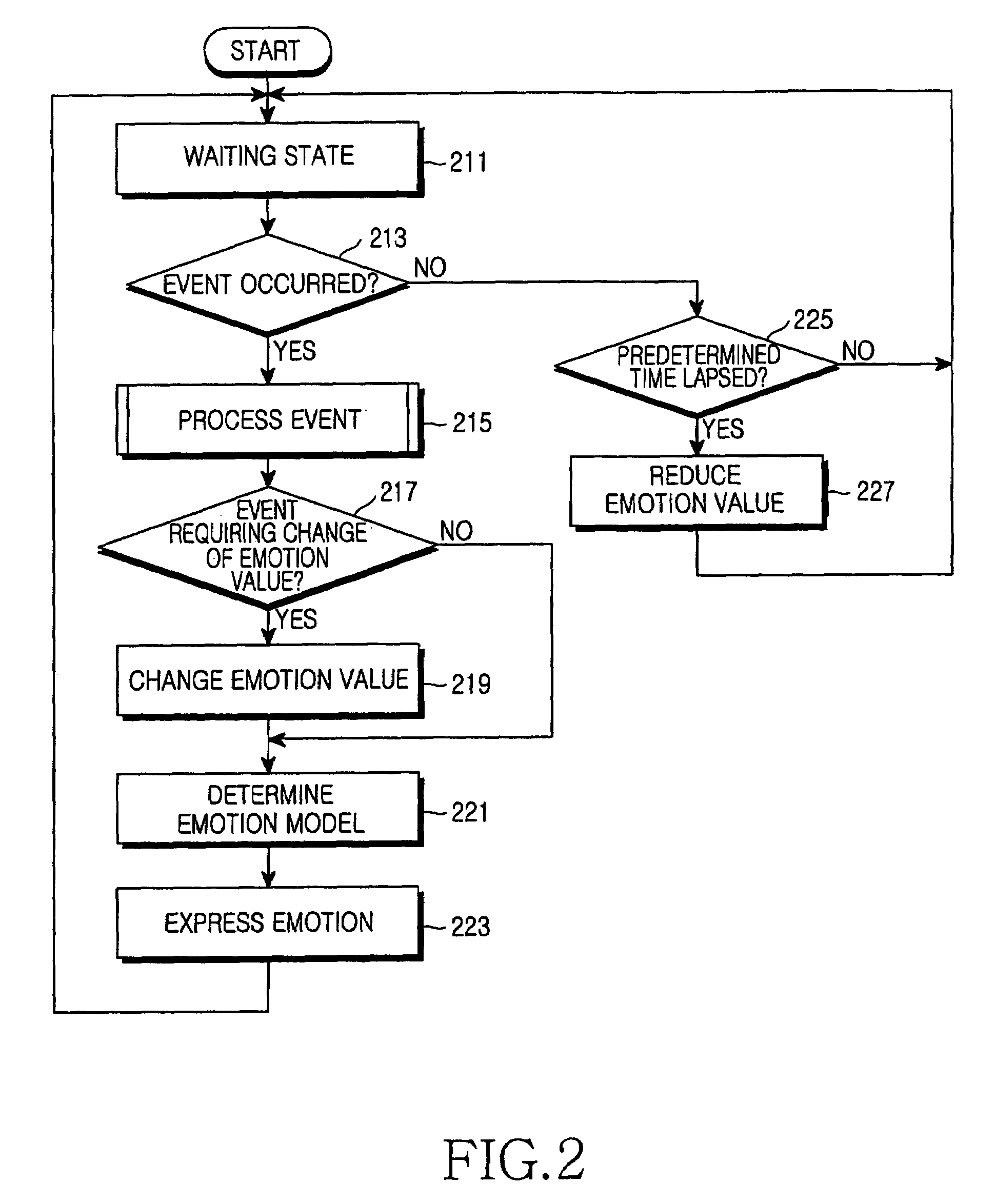

Device and method for displaying a status of a portable terminal by using a character image

Owner:SAMSUNG ELECTRONICS CO LTD

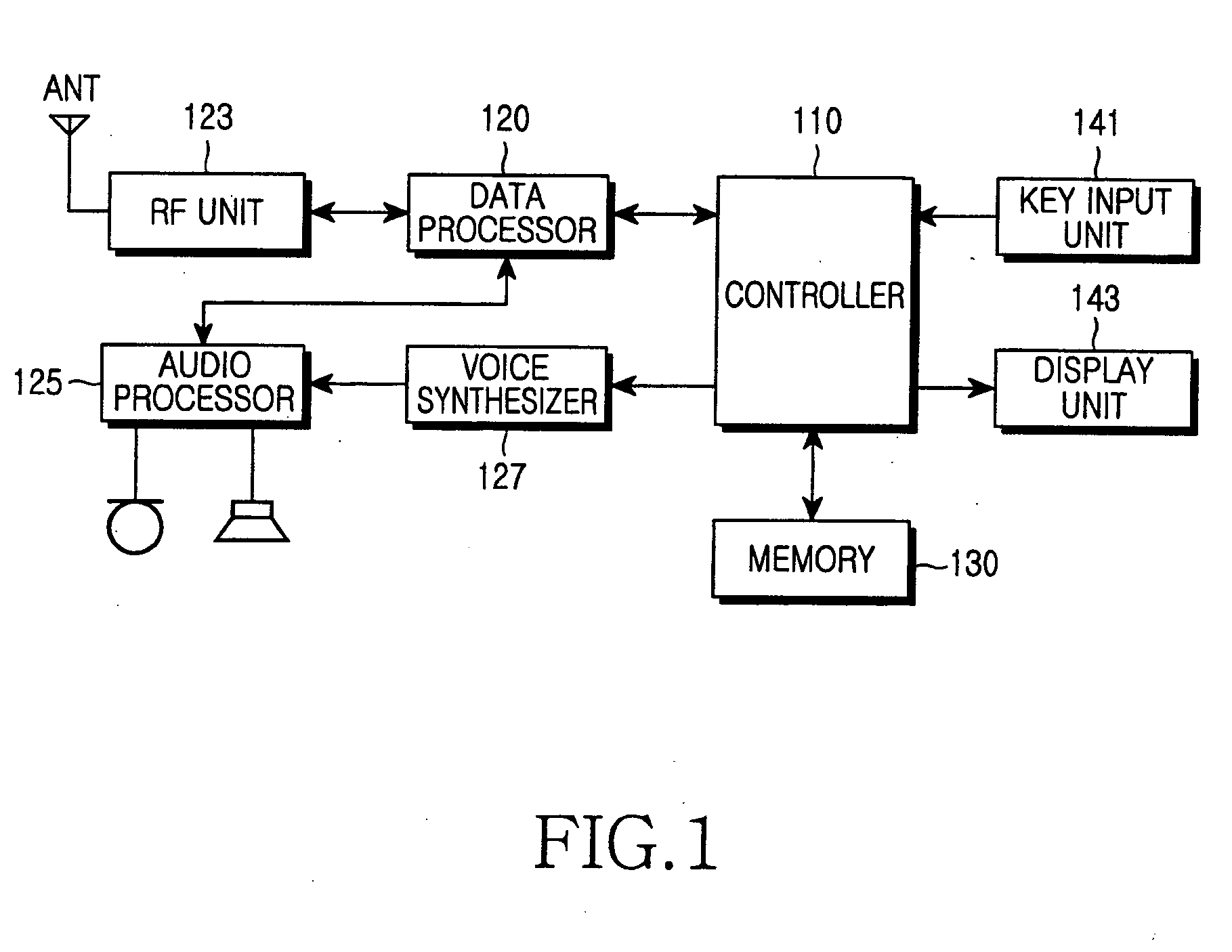

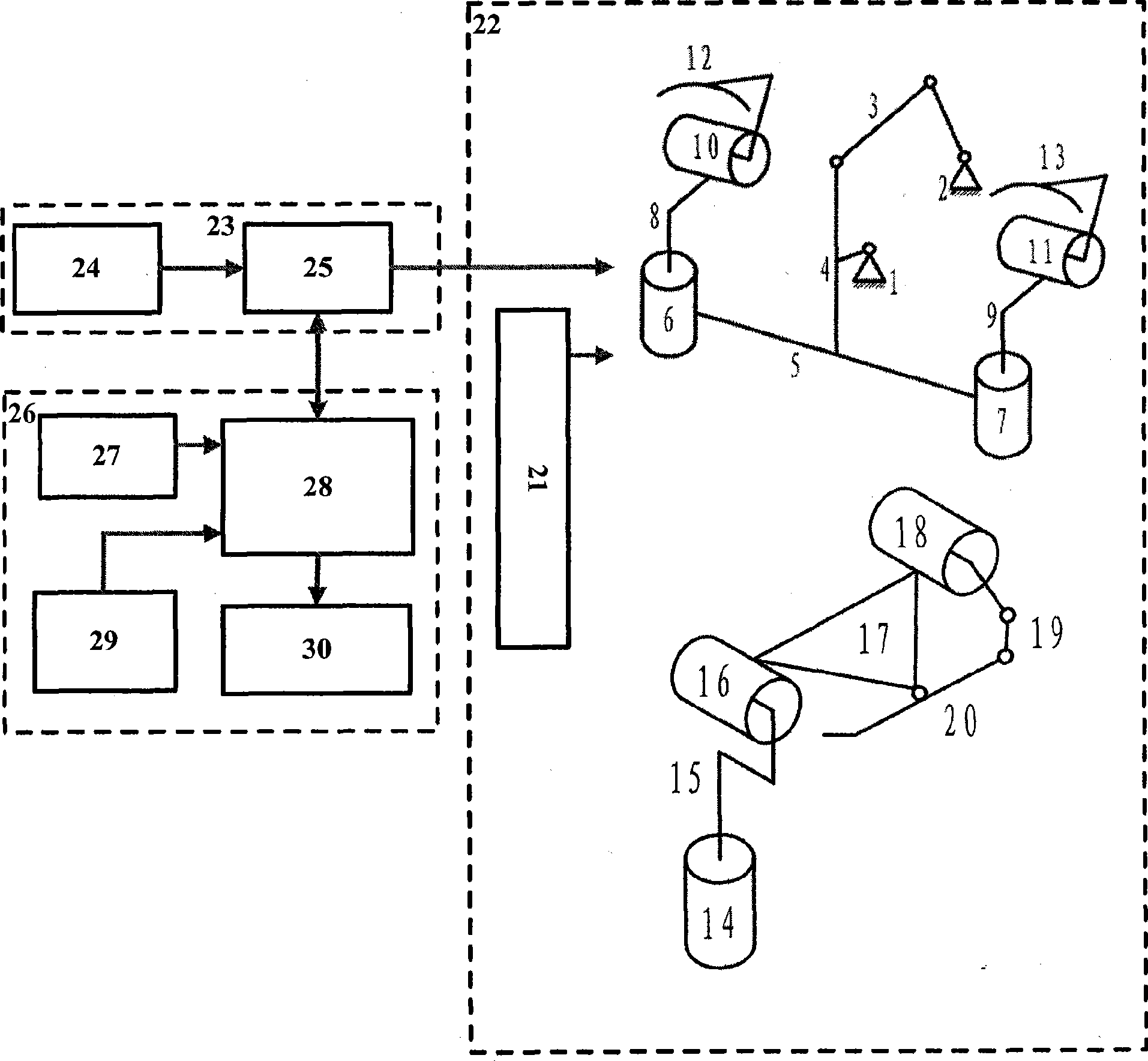

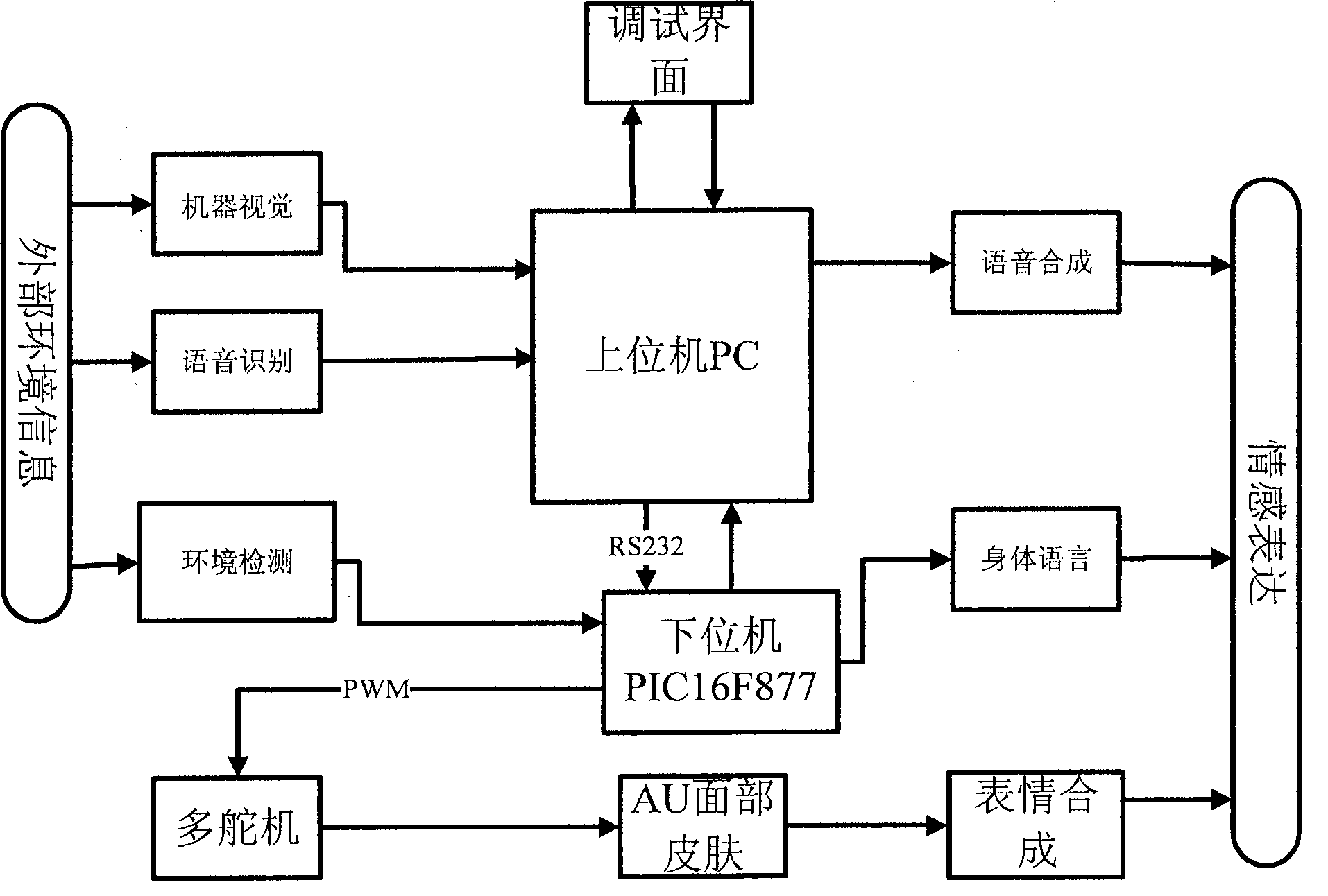

Emotional robot system

The invention relates to an emotional robot system, in particular a robot that can generate human-like facial expressions and interact with people. The system consists of a robot head capable of six facial expressions and a software platform with a PC as its control center. The robot senses its external environment through devices such as an infrared sensor, a microphone, and a camera. The PC extracts emotional features from the collected information, analyzes the emotion in speech, and detects human facial expressions, and then determines the emotion the robot should express. The robot expresses emotion through voice output, facial expressions, and body language. The PC sends instructions to a single-chip microcontroller over a serial port; on receiving them, the microcontroller drives motors to produce the robot's facial expressions and body language. The system can be used for domestic service robots, greeting robots, guide robots, and as a human-machine interaction research platform.

Owner:UNIV OF SCI & TECH BEIJING

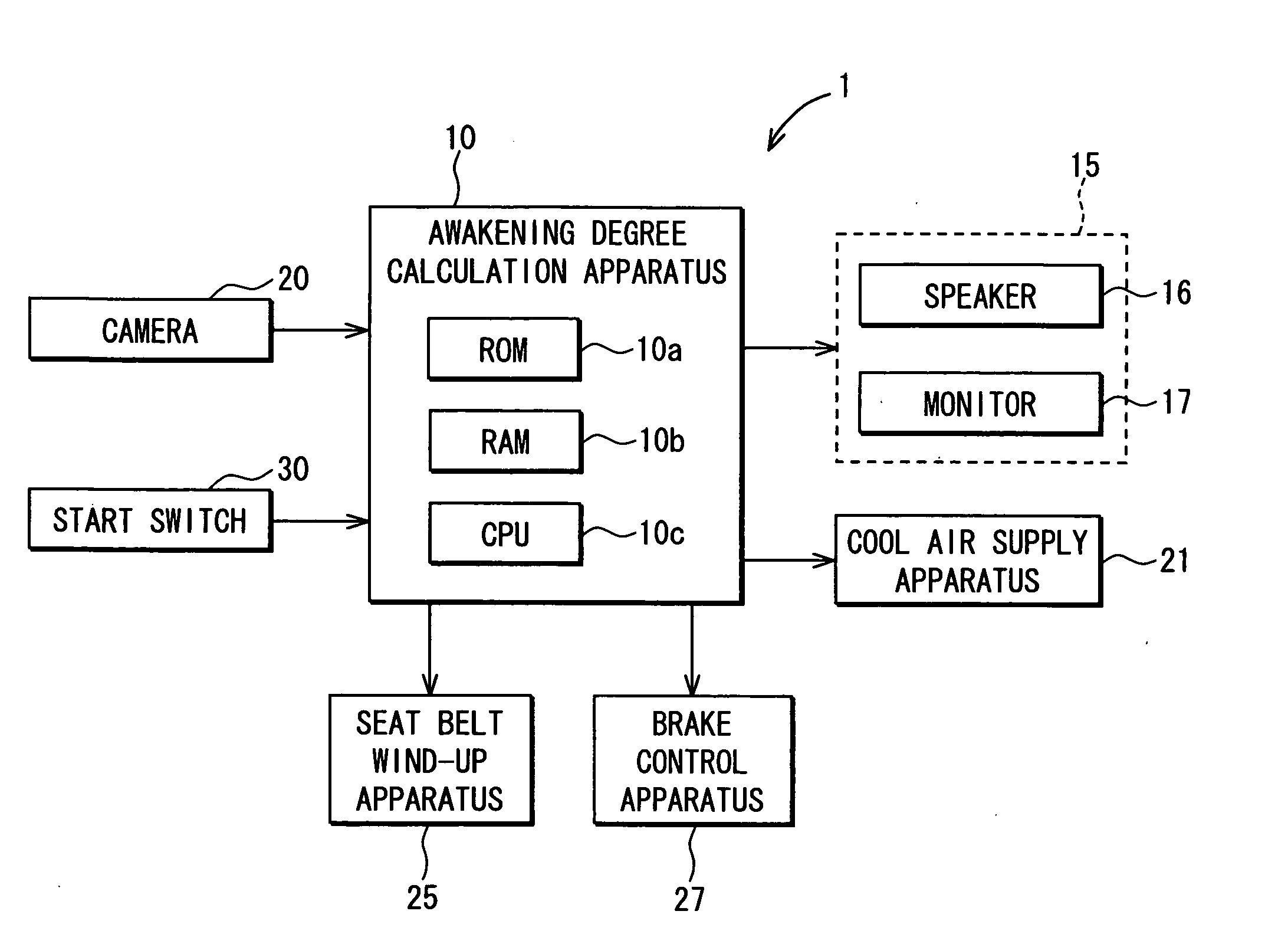

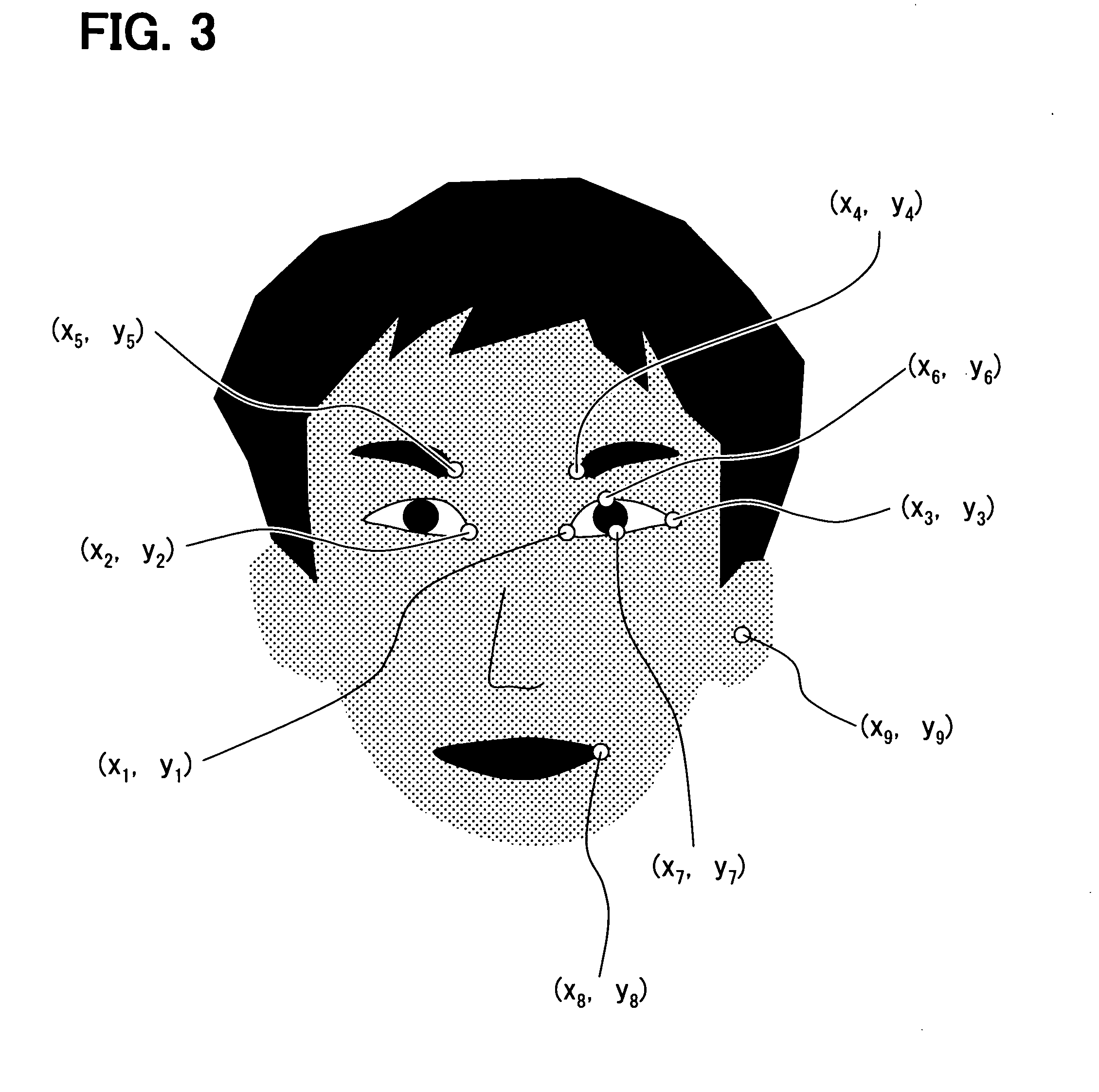

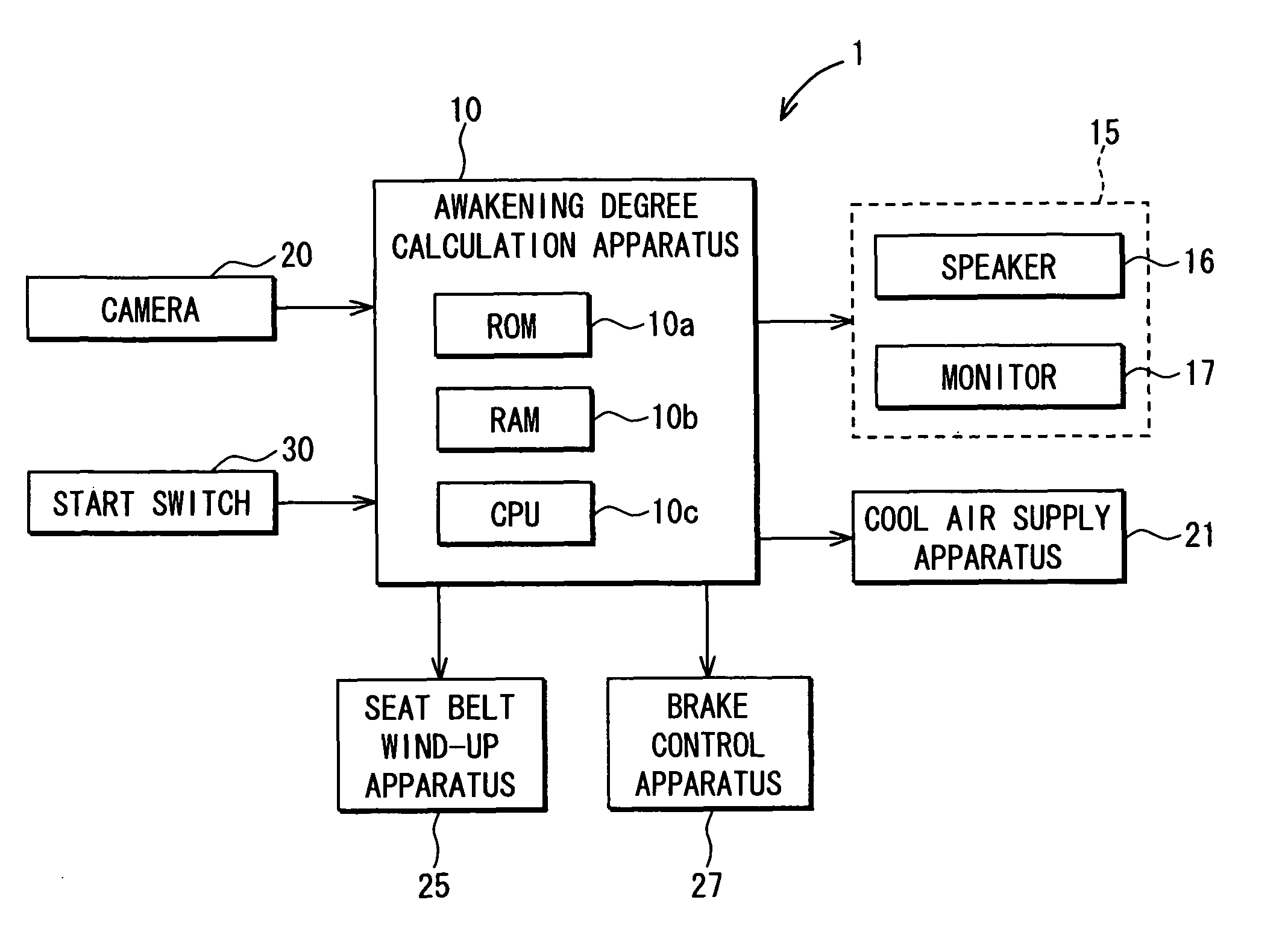

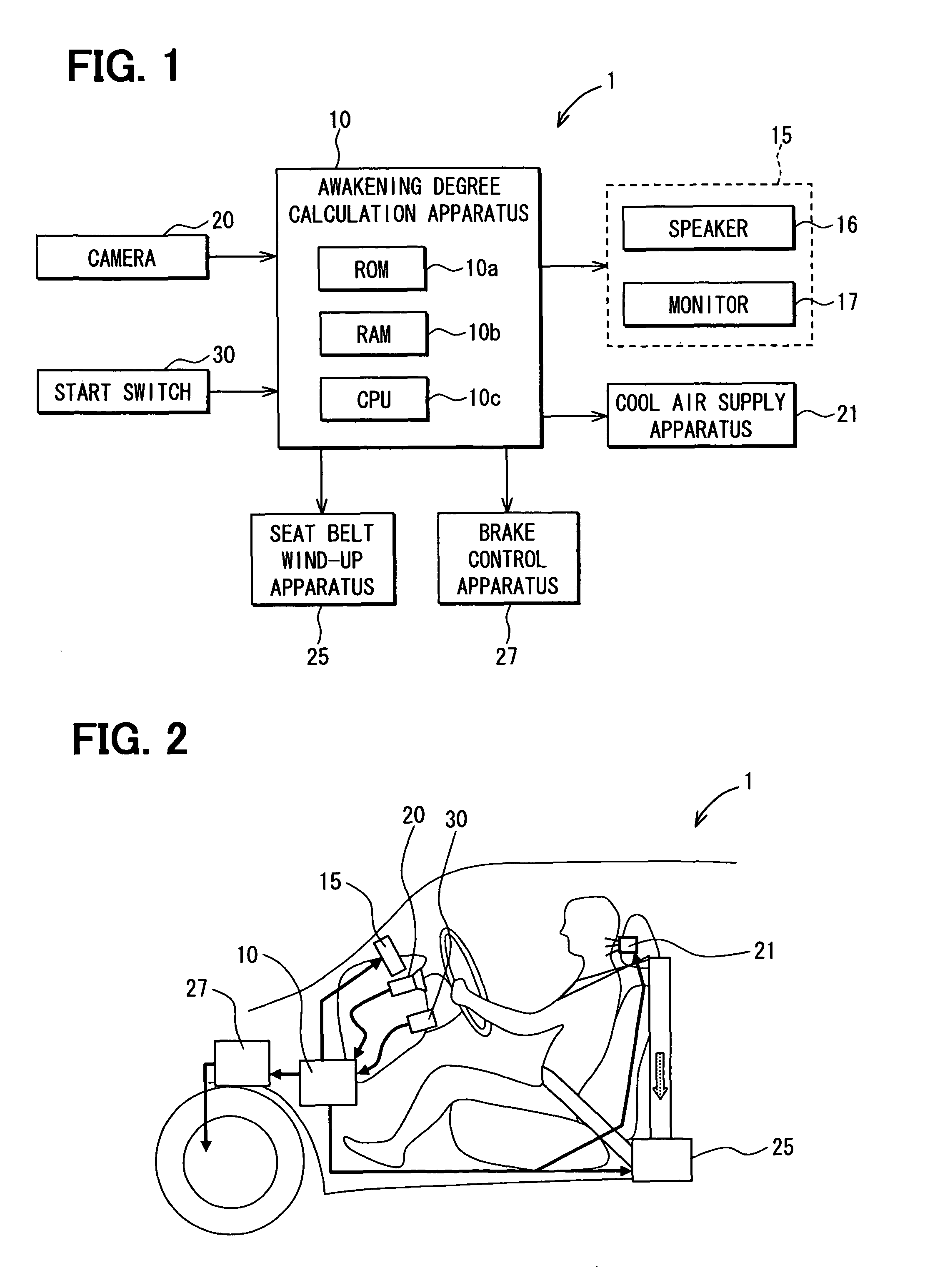

Drowsiness alarm apparatus and program

Status: Active | Publication: US20080238694A1 | Tags: Reduce the possibility, Avoid performance, Character and pattern recognition, Diagnostic recording/measuring, Physical medicine and rehabilitation, Driver/operator

A drowsiness alarm apparatus and a program for decreasing the possibility of incorrectly issuing an alarm due to an expressed emotion is provided. A wakefulness degree calculation apparatus performs a wakeful face feature collection process to collect a wakefulness degree criterion and an average representative feature distance. A doze alarm process collects a characteristic opening degree value and a characteristic feature distance. The wakefulness degree criterion is compared with the characteristic opening degree value to estimate a wakefulness degree. The average representative feature distance is compared with the characteristic feature distance to determine whether the face of the driver expresses a specific emotion. If the face expresses the emotion, alarm output is inhibited. When the face does not express the emotion, an alarm is output in accordance with the degree of decreased wakefulness so as to provide an increased alarm degree for the driver if necessary.

Owner:DENSO CORP
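The alarm decision described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not DENSO's implementation: the ratio used as the wakefulness degree, the 15% emotion tolerance, the 0 to 3 alarm scale, and the function names `wakefulness_degree`, `expresses_emotion`, and `alarm_level` are all hypothetical.

```python
def wakefulness_degree(criterion_opening: float, current_opening: float) -> float:
    """Ratio of the current eye-opening value to the awake-state criterion
    (hypothetical measure), capped at 1.0."""
    if criterion_opening <= 0:
        raise ValueError("criterion_opening must be positive")
    return min(current_opening / criterion_opening, 1.0)


def expresses_emotion(avg_distance: float, current_distance: float,
                      tolerance: float = 0.15) -> bool:
    """Treat a large relative change in the facial feature distance, compared
    with the awake-state average, as an expressed emotion (hypothetical rule)."""
    return abs(current_distance - avg_distance) / avg_distance > tolerance


def alarm_level(criterion_opening: float, current_opening: float,
                avg_distance: float, current_distance: float) -> int:
    """Return 0 (no alarm) up to 3 (strongest alarm)."""
    if expresses_emotion(avg_distance, current_distance):
        return 0  # face shows an emotion: inhibit the alarm output
    degree = wakefulness_degree(criterion_opening, current_opening)
    for threshold, level in ((0.8, 0), (0.6, 1), (0.4, 2)):
        if degree >= threshold:
            return level
    return 3  # lowest wakefulness: strongest alarm
```

For example, `alarm_level(10, 3, 50, 50)` returns 3, while the same eye opening combined with a strongly changed feature distance, `alarm_level(10, 3, 50, 70)`, returns 0 because the change is attributed to an expressed emotion.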

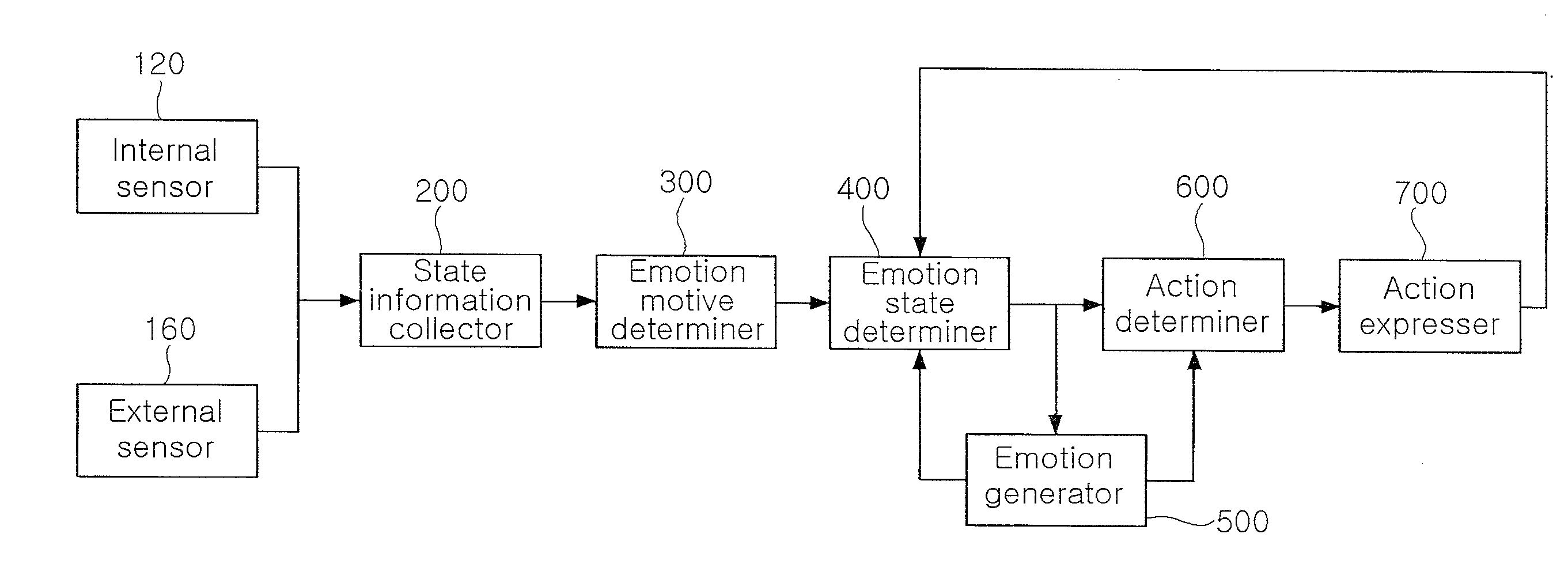

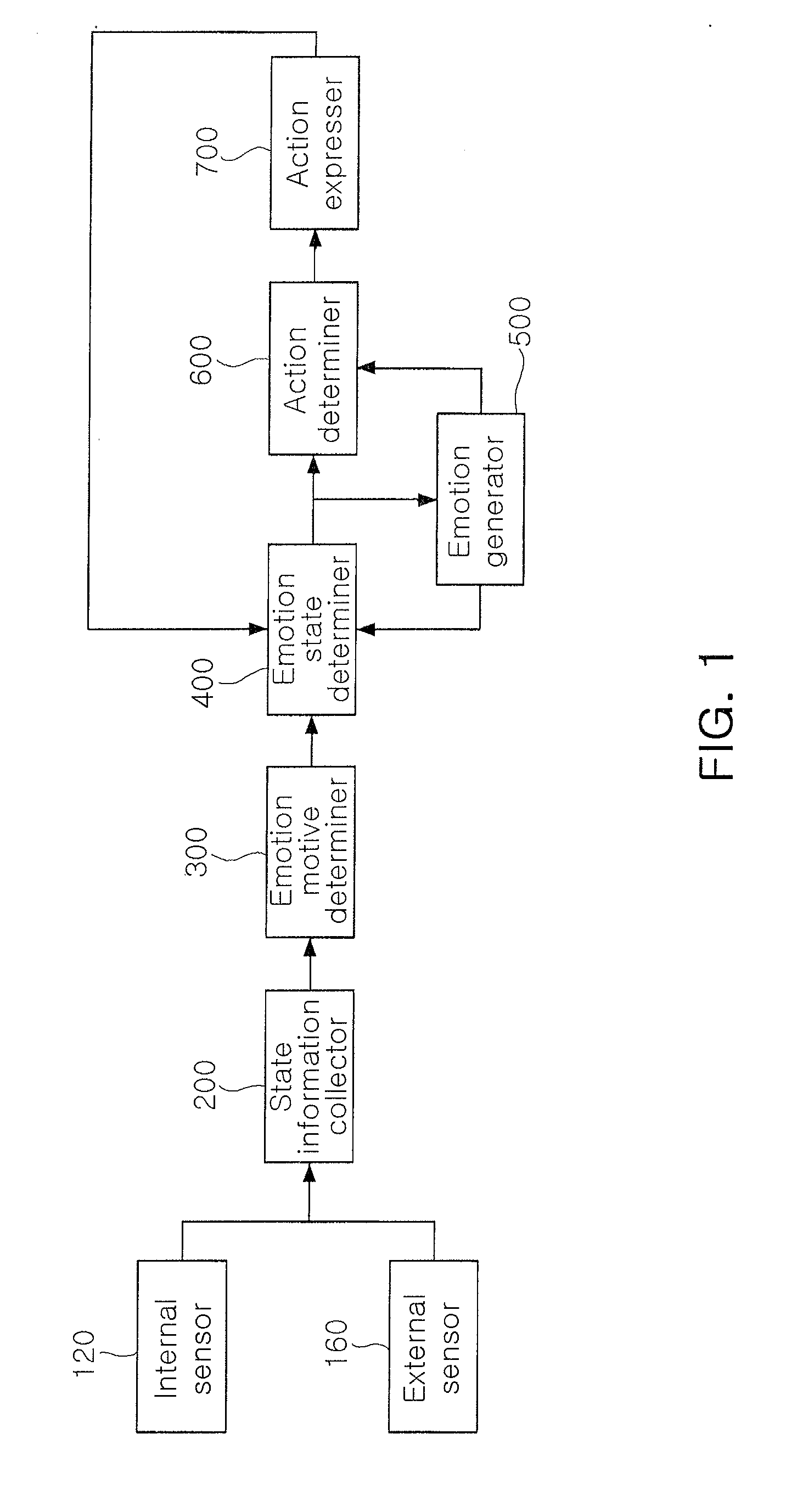

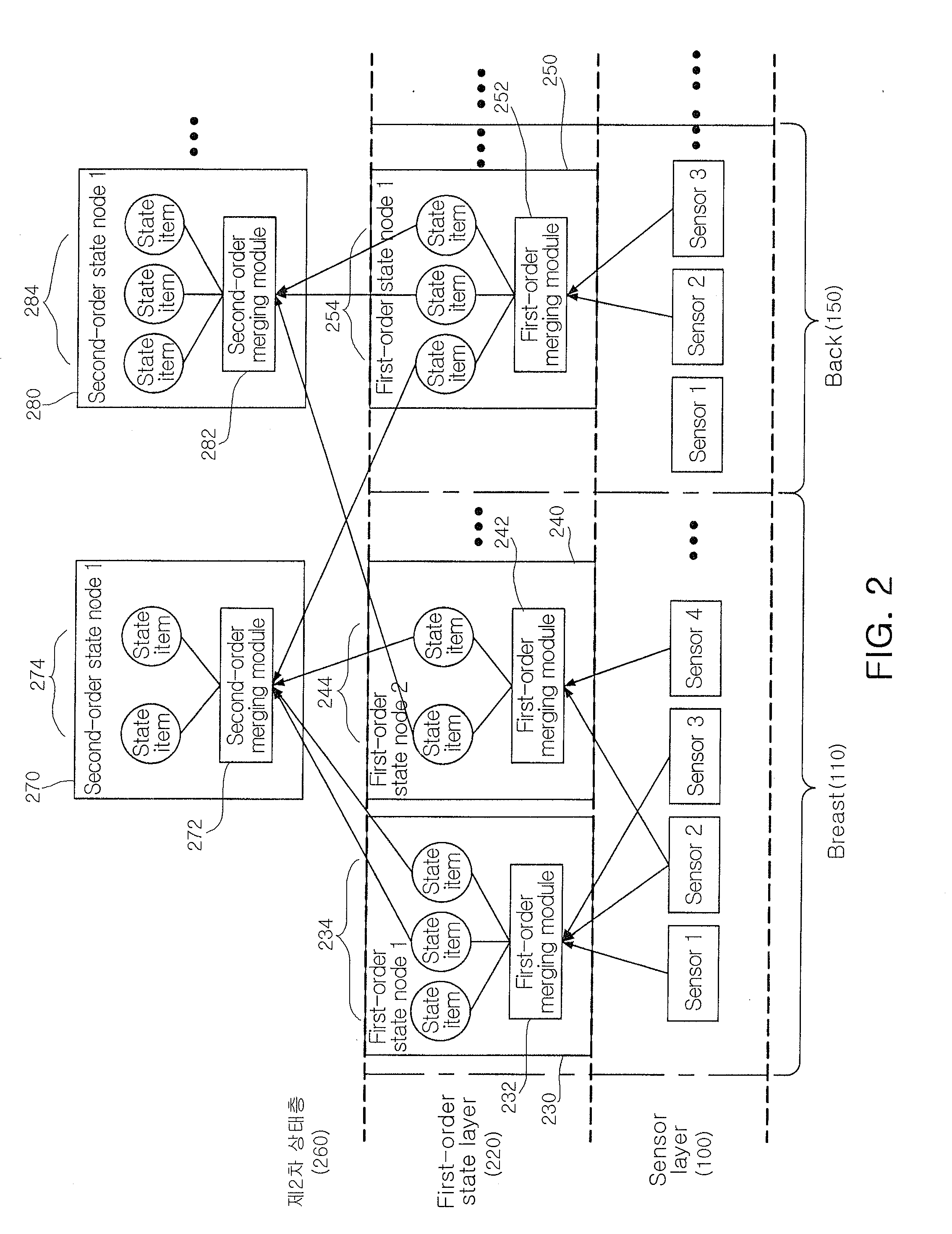

Apparatus and method for expressing emotions in intelligent robot by using state information

Status: Inactive | Publication: US20080077277A1 | Tags: Accurate expression, Meet actual needs, Programme-controlled manipulator, Artificial life, Pattern recognition, Information Harvesting

An apparatus and method for expressing emotions in an intelligent robot. In the apparatus, a plurality of different sensors sense information about internal/external stimuli. A state information collector processes the detected information about the internal/external stimuli in a hierarchical structure to collect external state information. An emotion motive determiner determines an emotion need motive on the basis of the external state information and the degree of change in an emotion need parameter corresponding to internal state information. An emotion state manager extracts information about the means available to satisfy the generated emotion need motive, together with the state information corresponding to those means. An emotion generator generates emotion information on the basis of a feature value of the extracted state information. An action determiner determines action information for satisfying the emotion need motive on the basis of the extracted state information and the generated emotion information. An action expresser then expresses the corresponding action through the appropriate actuator according to the determined action information.

Owner:ELECTRONICS & TELECOMM RES INST

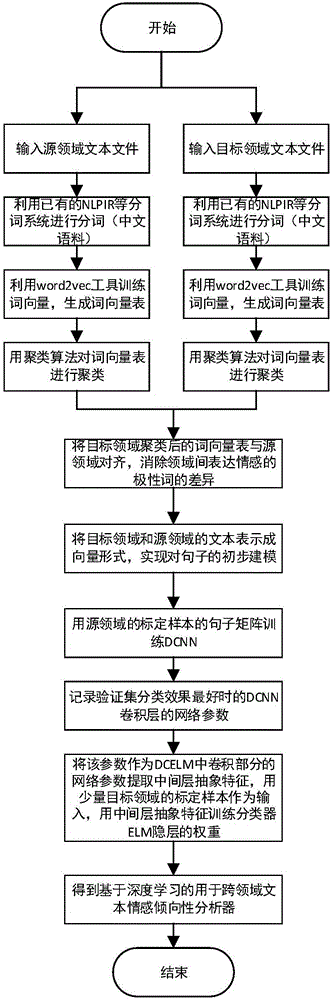

Method for establishing large-scale cross-field text emotion orientation analysis framework

Status: Active | Publication: CN106096004A | Tags: Improve robustness, Solve the problem of being stuck in a local optimum, Data mining, Special data processing applications, Local optimum, Algorithm

The invention discloses a method for establishing a large-scale cross-domain text sentiment orientation analysis framework. Precise word segmentation is performed on sample documents from the source domain and from the target domain to form two word-vector tables; the word vectors are clustered and the domains are aligned. Preliminary sentence models are built from the word vectors for the labeled samples in the source domain and serve as input to the DCELM, and inter-layer abstract features of the text vectors are extracted by convolution. The convolution-layer parameters that give the best classification result on a validation set are recorded and used as the parameters of the DCELM convolution layer. Finally, inter-layer abstract features extracted by the DCNN from a small number of labeled target-domain samples are used to train the hidden-layer parameters of an ELM classifier, completing the large-scale cross-domain framework. The scheme eliminates, at the sample level, the differences between domains in the words used to express sentiment polarity, overcomes the tendency of a fully connected layer to fall into local optima and generalize poorly, and improves the model's resistance to interference.

Owner:河北广潮科技有限公司 (Hebei Guangchao Technology Co., Ltd.)
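The final step trains an extreme learning machine (ELM) on features extracted by the convolutional network. The sketch below is a generic ELM classifier in NumPy, not the patent's DCELM: in a standard ELM the input-to-hidden weights stay random and only the hidden-to-output weights are solved in closed form by regularized least squares. The class name `ELM` and the parameters `n_hidden`, `reg`, and `seed` are assumptions, and the input `X` merely stands in for the DCNN features.

```python
import numpy as np


class ELM:
    """Minimal extreme learning machine classifier (generic, illustrative)."""

    def __init__(self, n_hidden: int = 64, reg: float = 1e-3, seed: int = 0):
        self.n_hidden = n_hidden
        self.reg = reg
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X: np.ndarray) -> np.ndarray:
        # Random, never-trained projection followed by a sigmoid activation.
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))

    def fit(self, X: np.ndarray, y: np.ndarray) -> "ELM":
        self.classes_ = np.unique(y)
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))
        self.b = self.rng.normal(size=self.n_hidden)
        H = self._hidden(X)
        T = (y[:, None] == self.classes_[None, :]).astype(float)  # one-hot targets
        # Only the output weights are learned, via ridge-regularized least squares.
        A = H.T @ H + self.reg * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ T)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[np.argmax(self._hidden(X) @ self.beta, axis=1)]
```

Because training reduces to one linear solve, an ELM avoids the iterative gradient descent of a fully connected layer, which is the property the abstract relies on to sidestep local optima.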

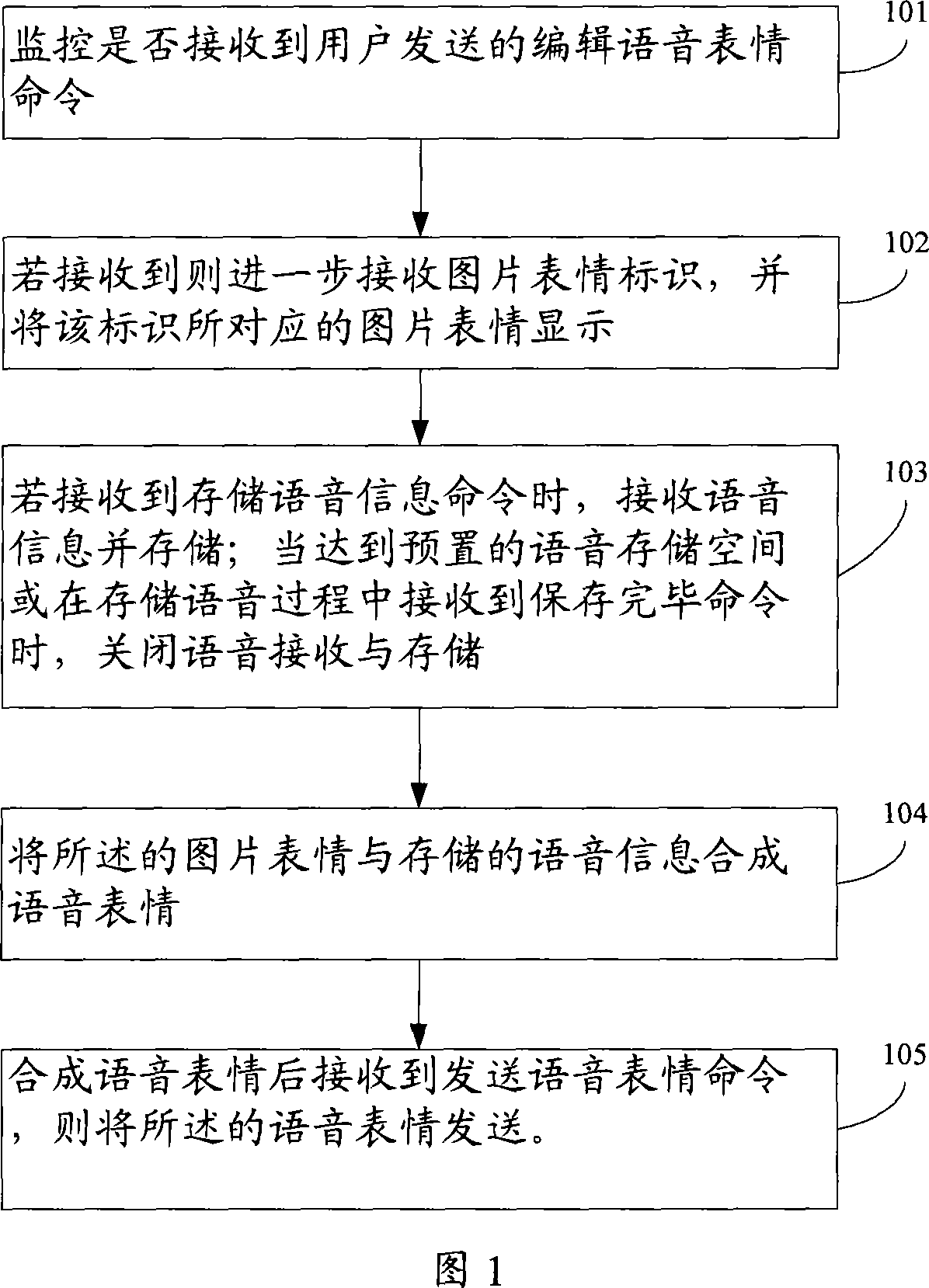

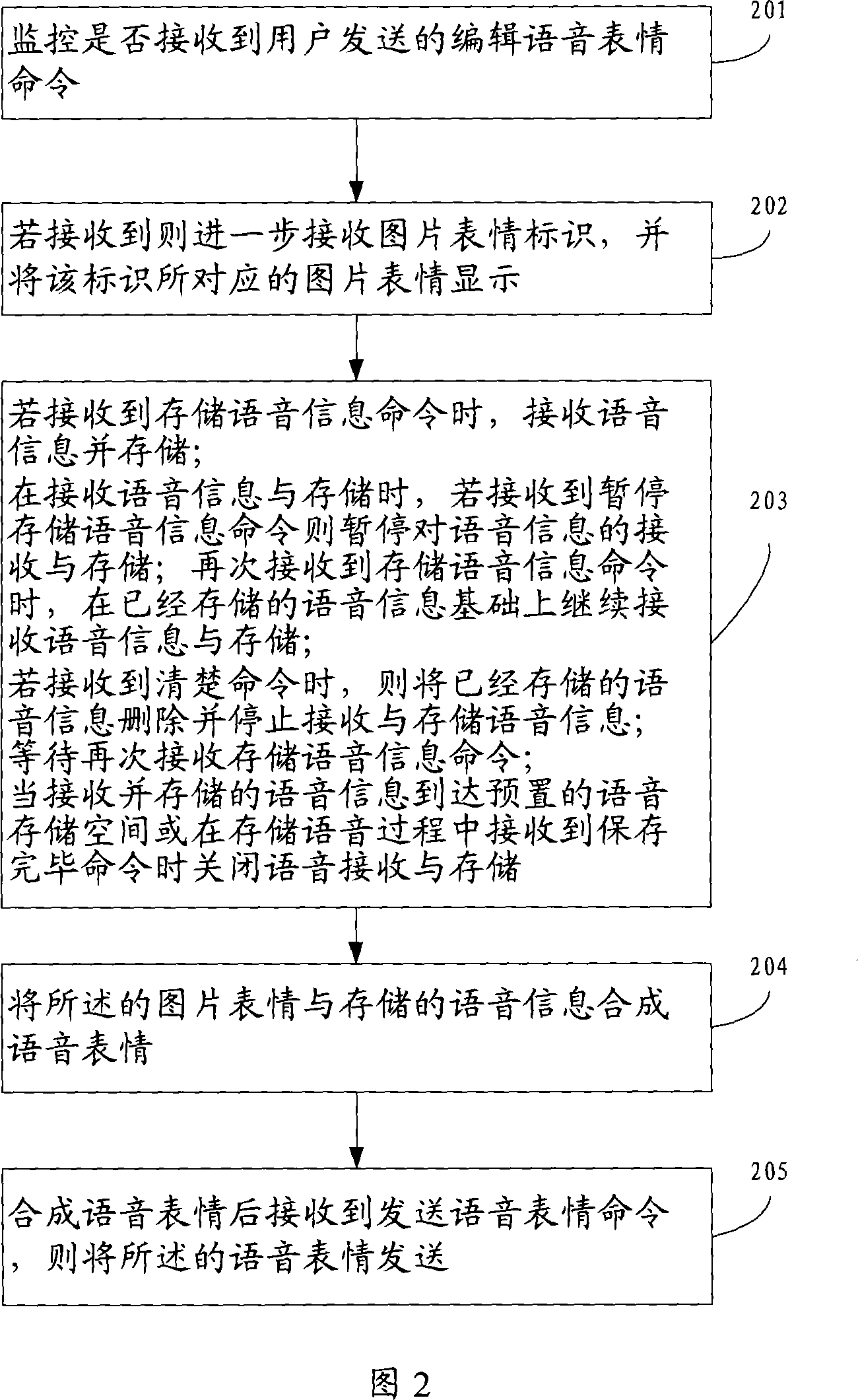

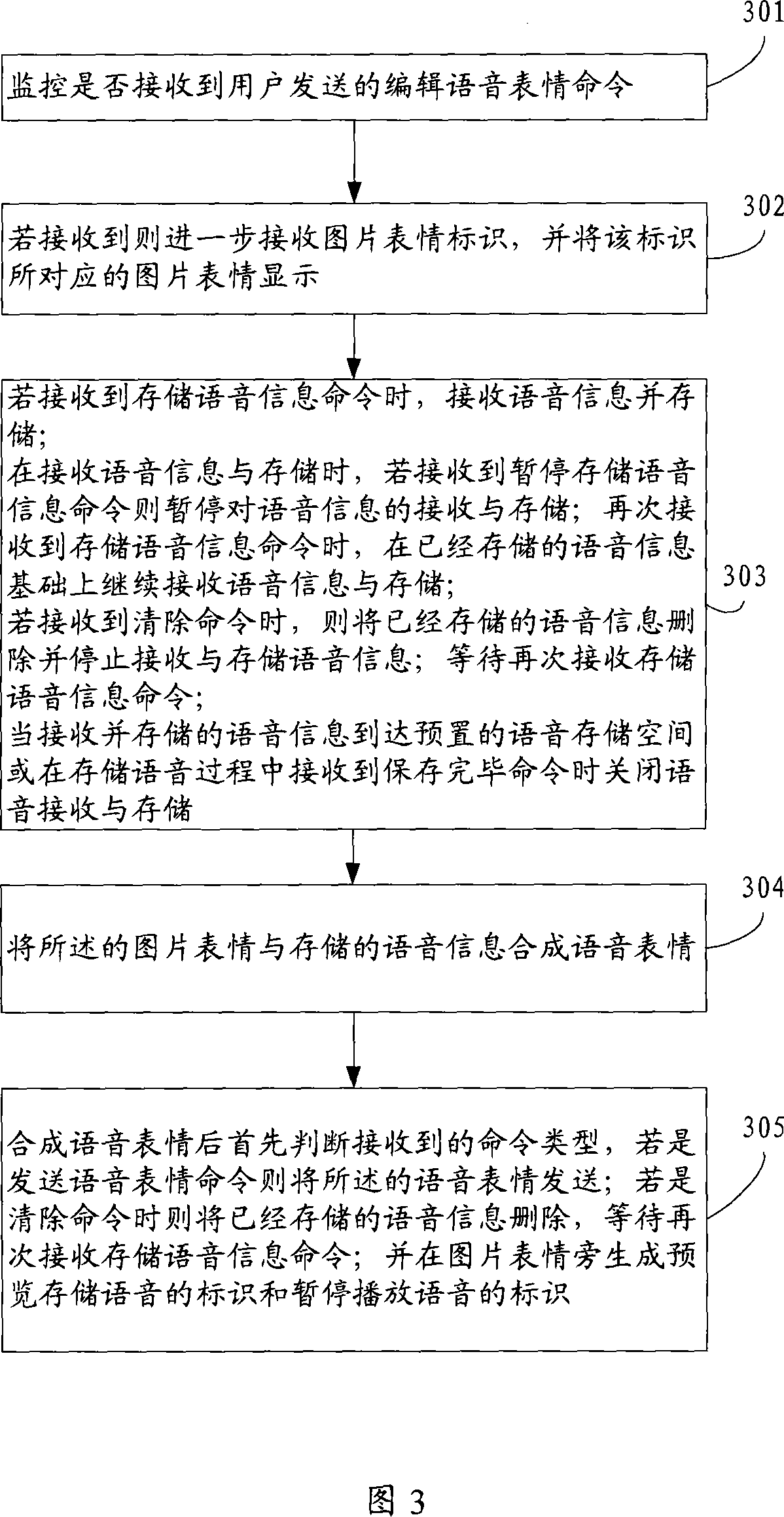

Exchange method for instant messaging tool and instant messaging tool

Status: Active | Publication: CN101072207A | Tags: Improve satisfaction, Rich communication methods, Data switching networks, Expressed emotion, Speech sound

The method includes the following steps: monitoring whether a command to edit a voice expression has been received; if so, receiving the ID of a photo expression (PE) and displaying the photo expression corresponding to that ID; on receiving a command to store voice information (VI), receiving and storing the voice information; and when the stored voice reaches the preset voice storage space, or a command to finish saving is received while storing, stopping voice reception and storage and synthesizing a voice expression from the PE and the stored VI. Correspondingly, the invention also discloses an instant messaging tool comprising a receiving unit, a storage unit, a display unit, a first control unit, and a transmission unit. By recording what the user wants to say as a voice expression and combining it with a photo expression, the invention expresses emotion and meaning vividly and visually, enriches the modes of communication, and increases user satisfaction.

Owner:TENCENT TECH (SHENZHEN) CO LTD
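The two stop conditions for recording (storage space reached, or an explicit finish command) can be modeled as a small buffer object. The class `VoiceExpressionRecorder` and its byte-based capacity are hypothetical; the sketch only pairs the stored audio with the photo expression ID and performs no actual audio synthesis.

```python
from typing import Optional


class VoiceExpressionRecorder:
    """Hypothetical model of the record-then-combine flow in the abstract."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes   # the preset voice storage space
        self.buffer = bytearray()
        self.recording = False
        self.photo_id: Optional[str] = None

    def start(self, photo_id: str) -> None:
        """Begin recording for the selected photo expression (PE)."""
        self.photo_id = photo_id
        self.buffer = bytearray()
        self.recording = True

    def feed(self, chunk: bytes) -> bool:
        """Store an audio chunk; return whether recording is still active."""
        if not self.recording:
            return False
        room = self.capacity - len(self.buffer)
        self.buffer += chunk[:room]          # never exceed the storage space
        if len(self.buffer) >= self.capacity:
            self.recording = False           # stop condition 1: space is full
        return self.recording

    def finish(self) -> dict:
        """Stop condition 2 (finish command); pair the stored voice with the PE."""
        self.recording = False
        return {"photo_expression": self.photo_id, "voice": bytes(self.buffer)}
```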

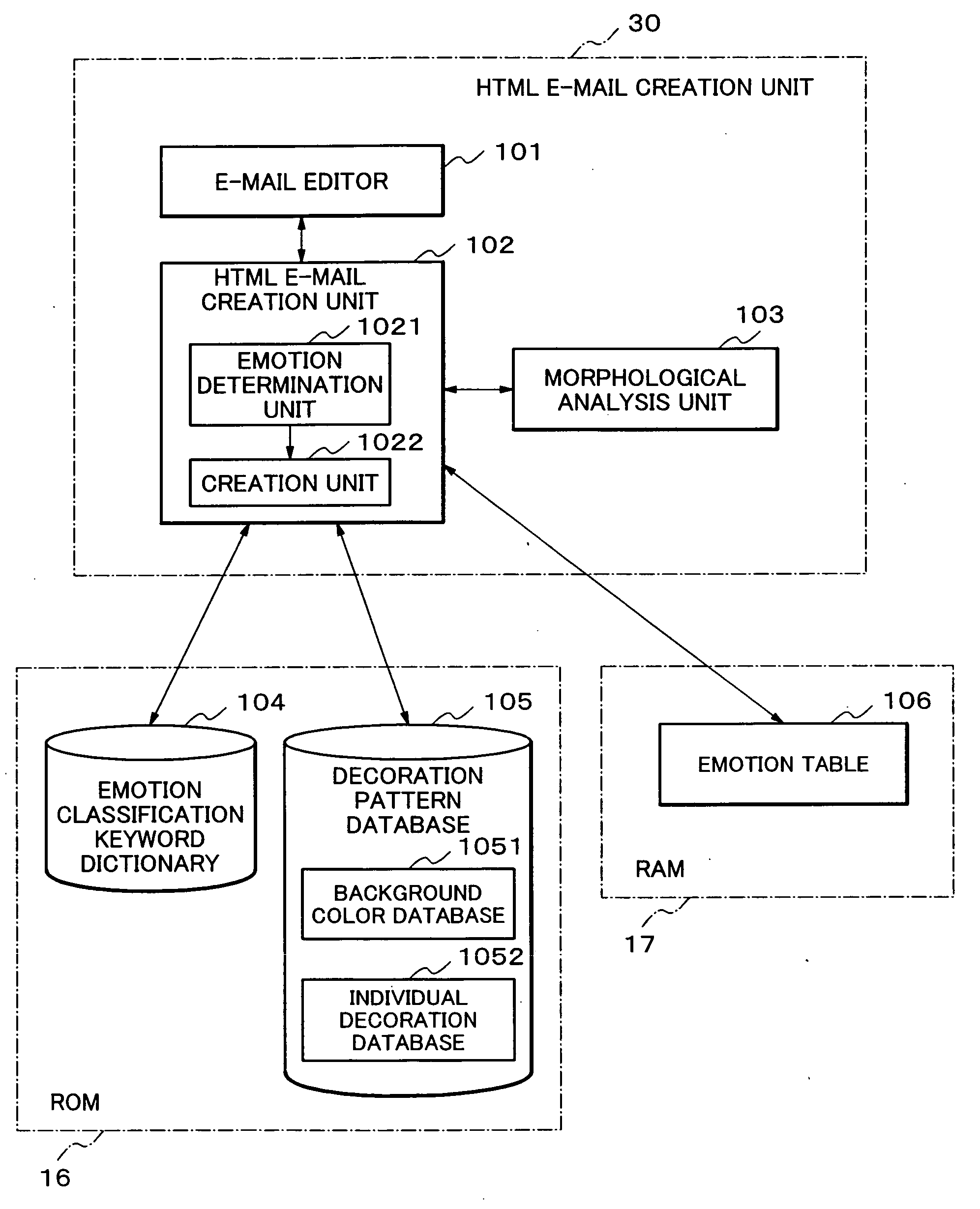

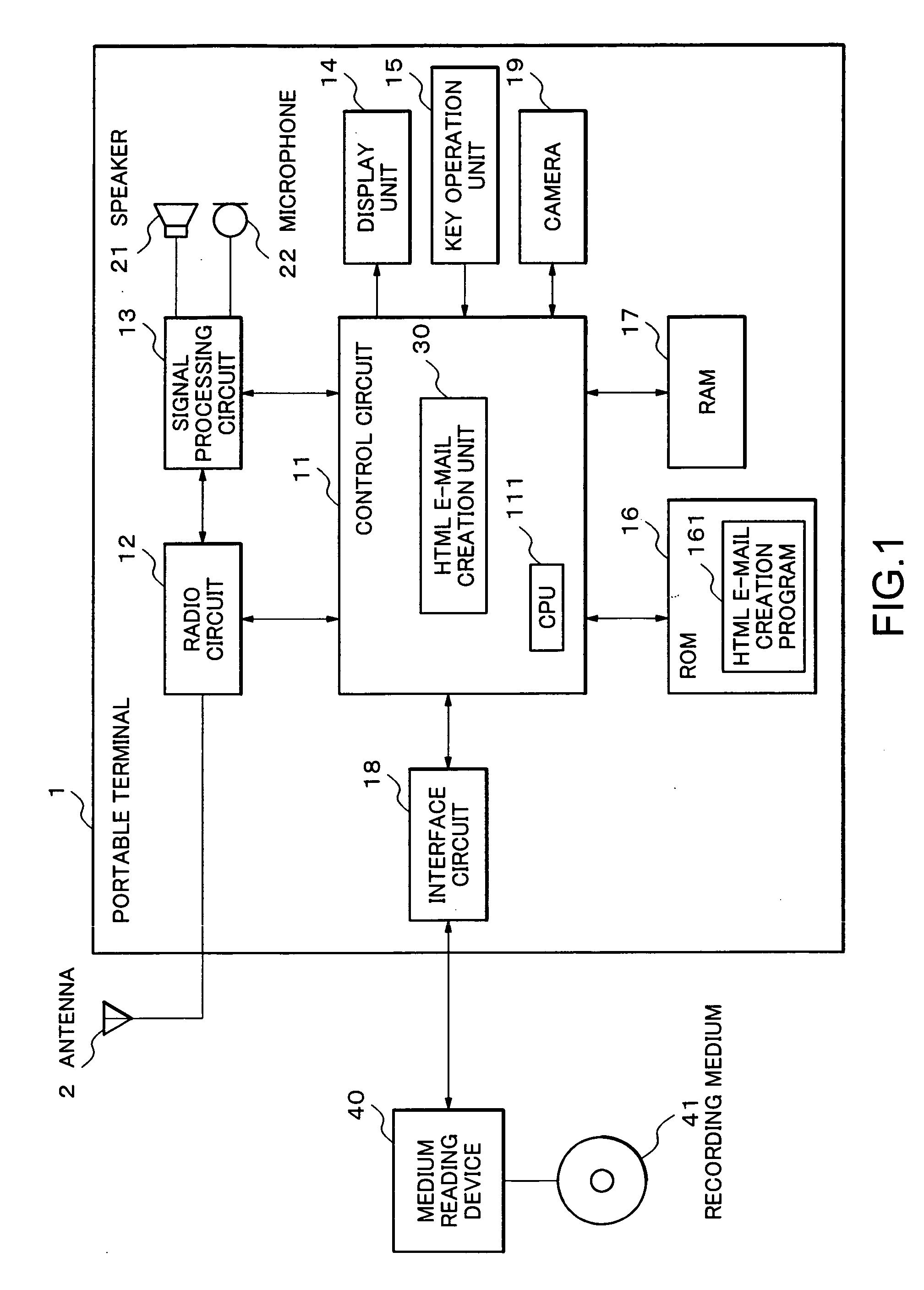

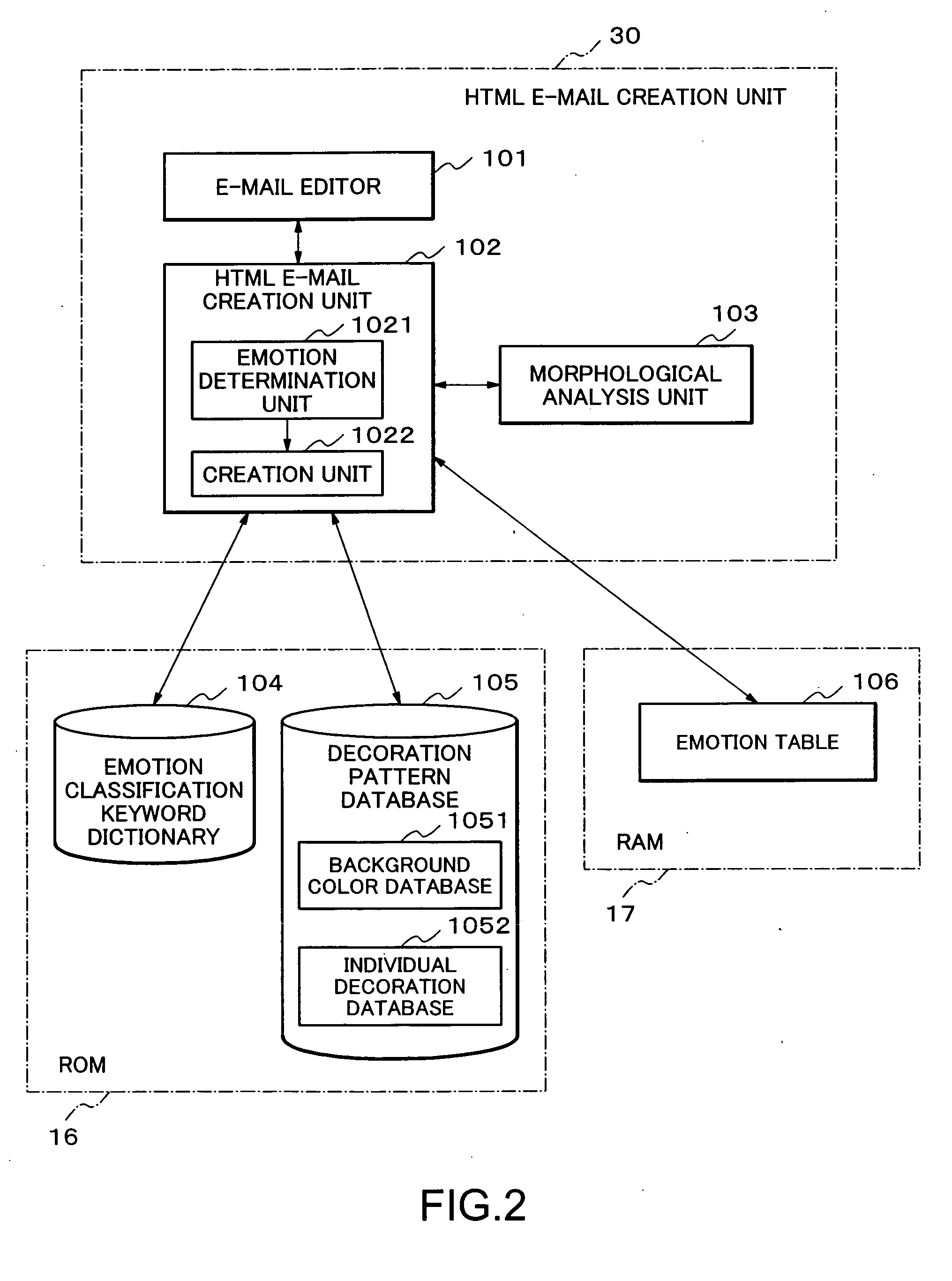

HTML e-mail creation system, communication apparatus, HTML e-mail creation method, and recording medium

Status: Inactive | Publication: US20060129927A1 | Tags: Digital computer details, Natural language data processing, Paper document, Document preparation

An HTML e-mail creation system for creating an HTML e-mail message includes a display unit and a control circuit. The control circuit extracts one or more character strings expressing emotions from text-formatted document information; determines an overall emotion of the e-mail document information based on those character strings; creates HTML-formatted document information in which decoration corresponding to the determined overall emotion is applied to the character strings; and controls the display unit to display the created HTML-formatted document information.

Owner:NEC CORP
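A minimal sketch of the extract, decide, and decorate steps follows. The emotion lexicon, the CSS styles, the majority vote used to pick the overall emotion, and the function `to_html_mail` are illustrative assumptions, not NEC's method.

```python
import html
import re
from collections import Counter

# Hypothetical lexicon: emotional word -> emotion.
EMOTION_WORDS = {
    "happy": "joy", "great": "joy", "thanks": "joy",
    "sad": "sadness", "sorry": "sadness",
    "angry": "anger", "annoyed": "anger",
}
# Hypothetical decoration applied for each overall emotion.
DECORATION = {
    "joy": "color:#e67e22;font-weight:bold",
    "sadness": "color:#2e86c1;font-style:italic",
    "anger": "color:#c0392b;font-weight:bold",
}


def to_html_mail(text: str) -> str:
    tokens = re.findall(r"\w+|\W+", text)  # word and non-word runs cover all text
    found = [EMOTION_WORDS[t.lower()] for t in tokens if t.lower() in EMOTION_WORDS]
    if not found:
        return f"<html><body><p>{html.escape(text)}</p></body></html>"
    overall = Counter(found).most_common(1)[0][0]  # majority emotion wins
    style = DECORATION[overall]
    parts = []
    for t in tokens:
        escaped = html.escape(t)
        if t.lower() in EMOTION_WORDS:
            # Decorate every emotional string with the overall emotion's style.
            parts.append(f'<span style="{style}">{escaped}</span>')
        else:
            parts.append(escaped)
    return f"<html><body><p>{''.join(parts)}</p></body></html>"
```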

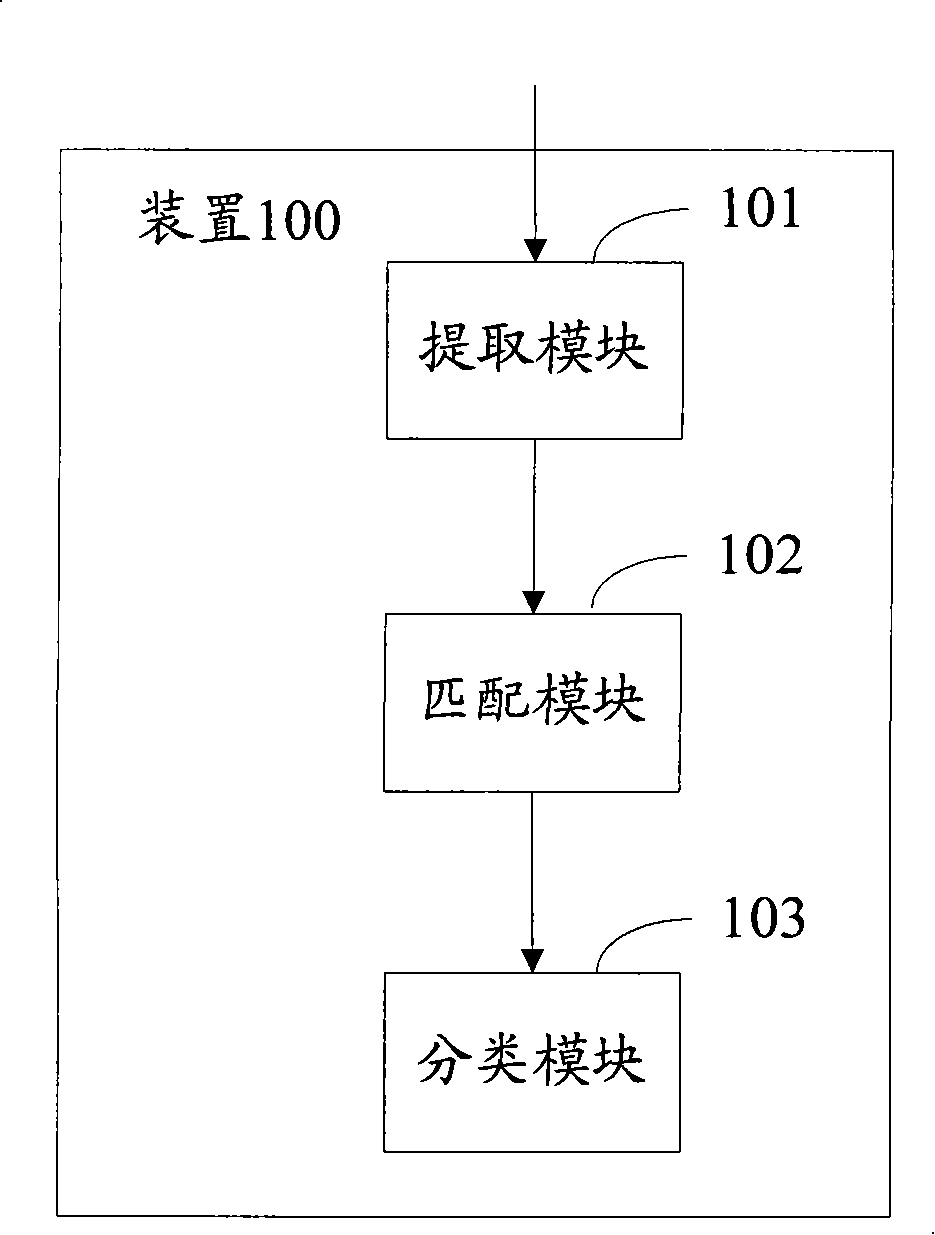

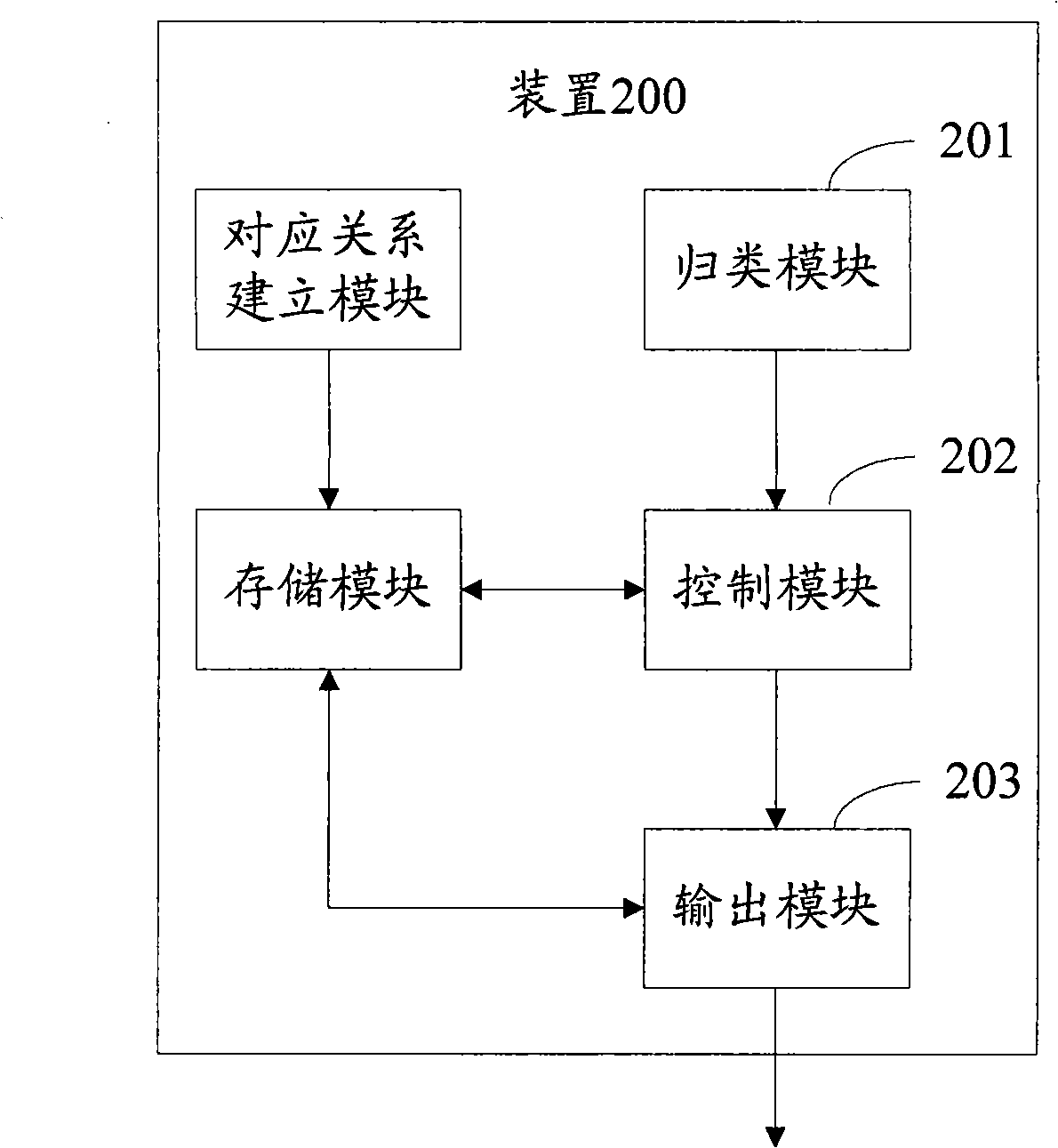

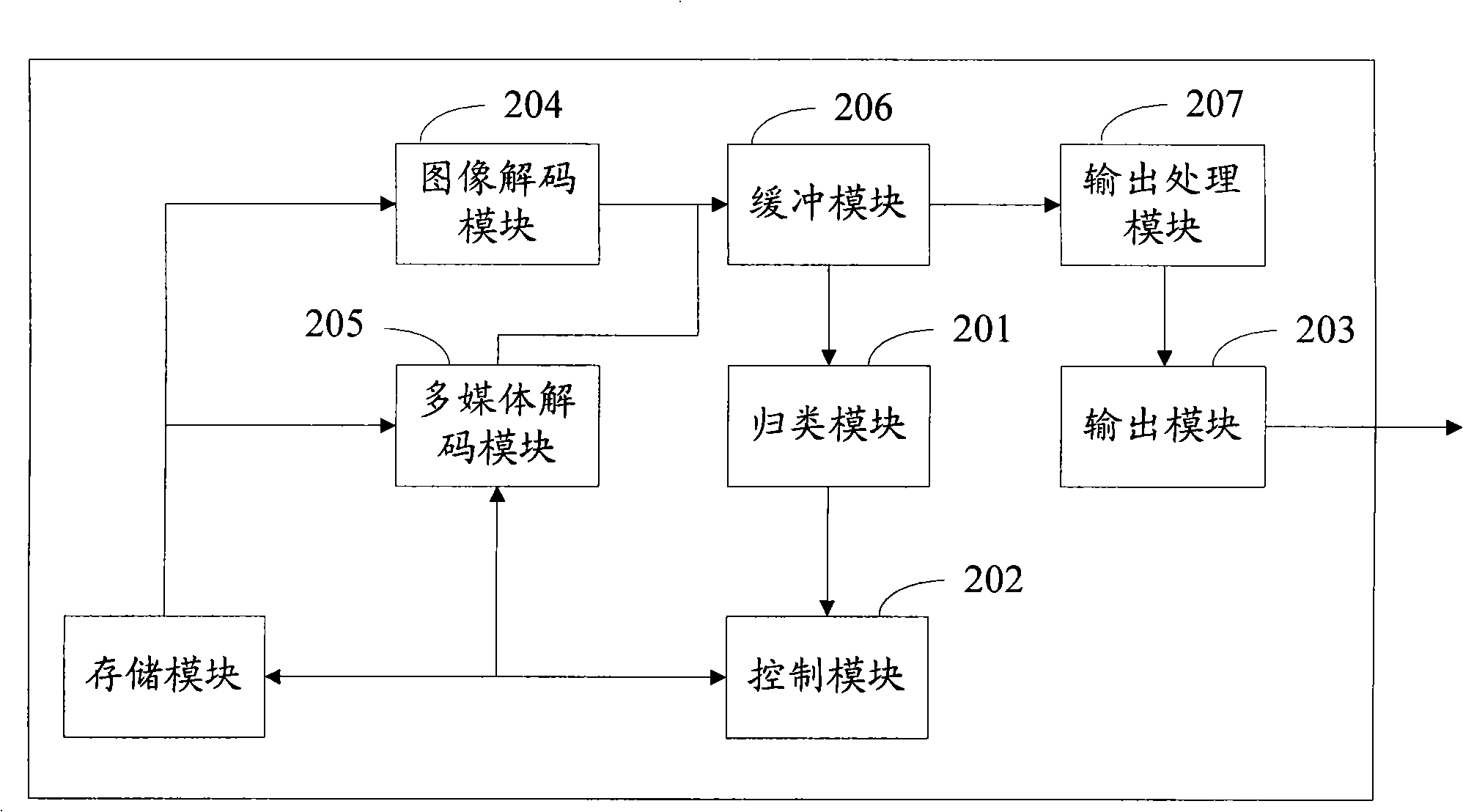

Method and device for outputting image

Status: Active | Publication: CN101271528A | Tags: Improve experience, Character and pattern recognition, Special data processing applications, Pattern recognition, Expressed emotion

The invention discloses an image output method that, when an image is output, plays multimedia content related to the emotion the image expresses, in order to improve the user experience. The content of the image is analyzed to determine the emotion category corresponding to the image; the required multimedia content is determined from that emotion category and a predefined correspondence between emotion categories and multimedia content; and the determined multimedia content is output together with the image. A device corresponding to the image output method is also disclosed.

Owner:北京中星天视科技有限公司 (Beijing Zhongxing Tianshi Technology Co., Ltd.)

Drowsiness alarm apparatus and program

Status: Active | Publication: US7821409B2 | Tags: Reduce the possibility, Avoid performance, Character and pattern recognition, Diagnostic recording/measuring, Physical medicine and rehabilitation, Driver/operator

A drowsiness alarm apparatus and a program for decreasing the possibility of incorrectly issuing an alarm due to an expressed emotion is provided. A wakefulness degree calculation apparatus performs a wakeful face feature collection process to collect a wakefulness degree criterion and an average representative feature distance. A doze alarm process collects a characteristic opening degree value and a characteristic feature distance. The wakefulness degree criterion is compared with the characteristic opening degree value to estimate a wakefulness degree. The average representative feature distance is compared with the characteristic feature distance to determine whether the face of the driver expresses a specific emotion. If the face expresses the emotion, alarm output is inhibited. When the face does not express the emotion, an alarm is output in accordance with the degree of decreased wakefulness so as to provide an increased alarm degree for the driver if necessary.

Owner:DENSO CORP

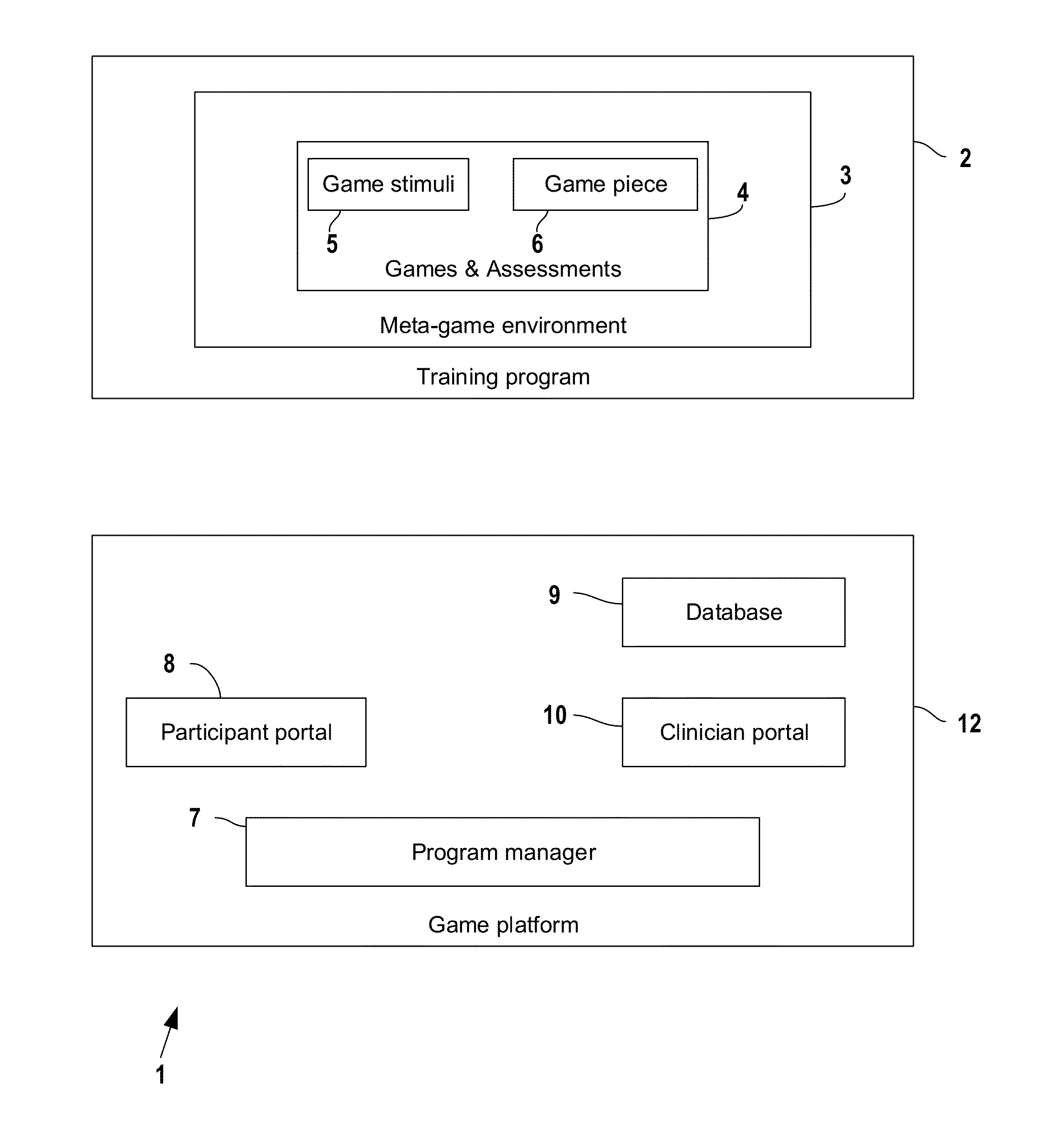

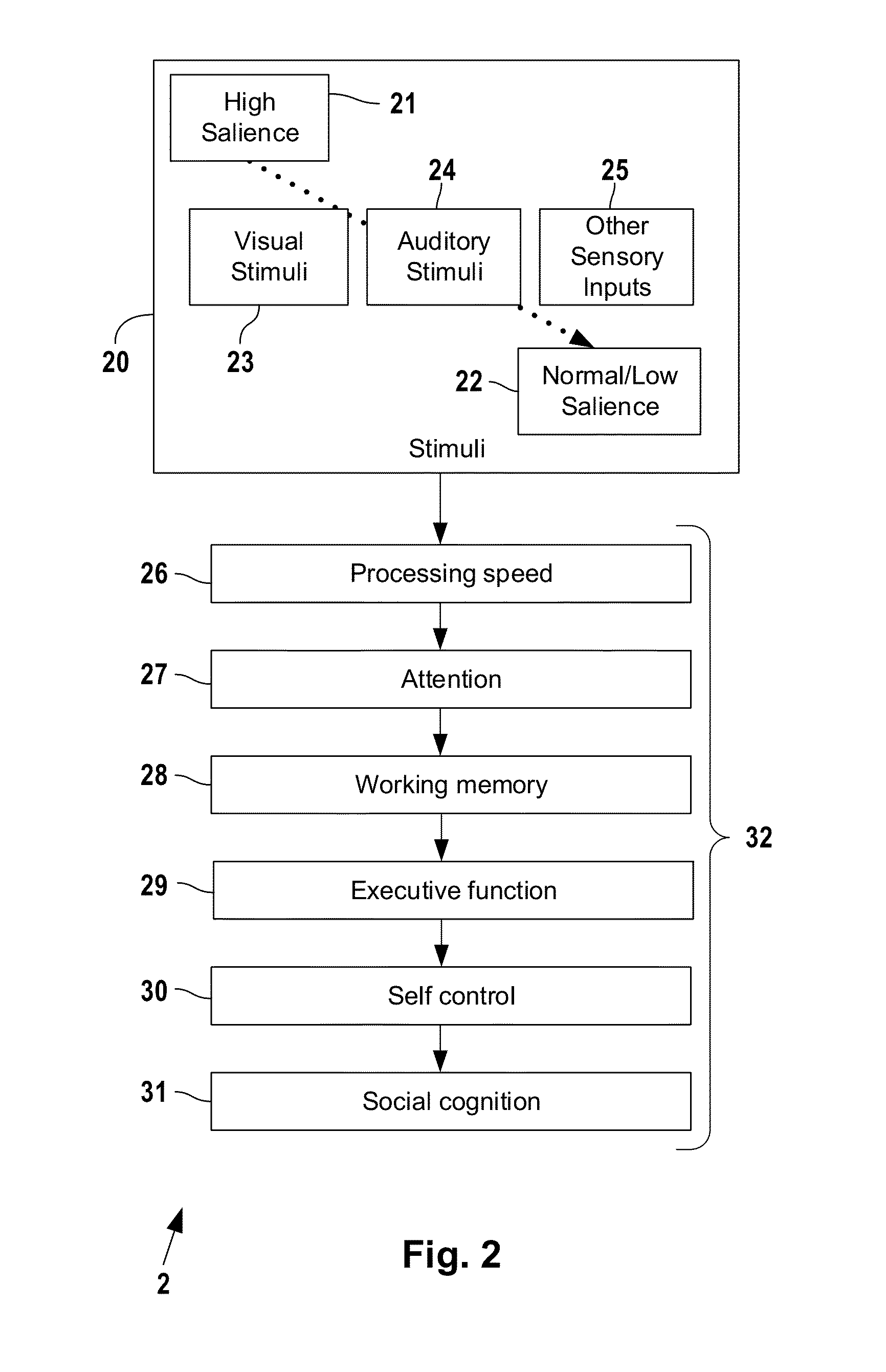

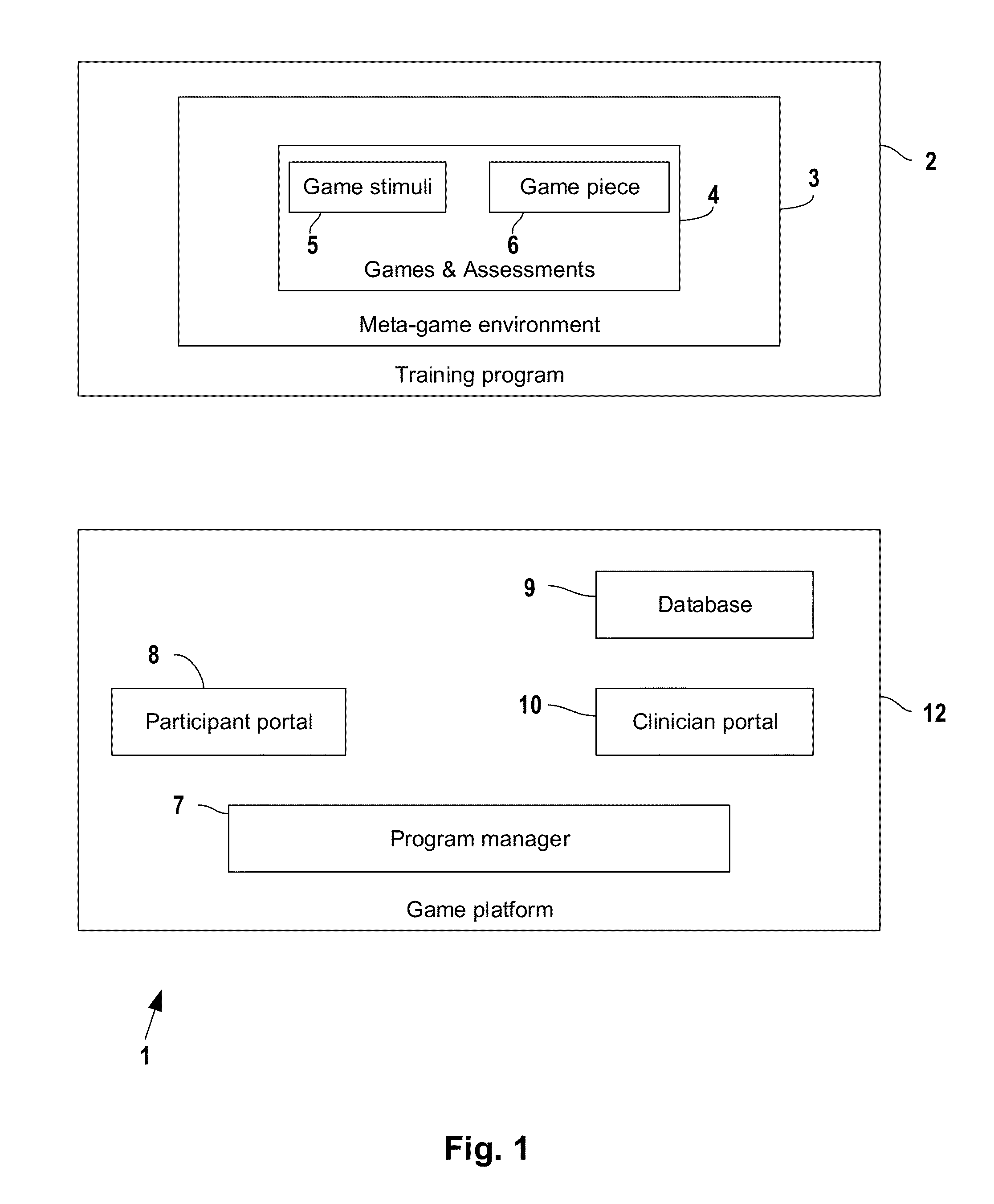

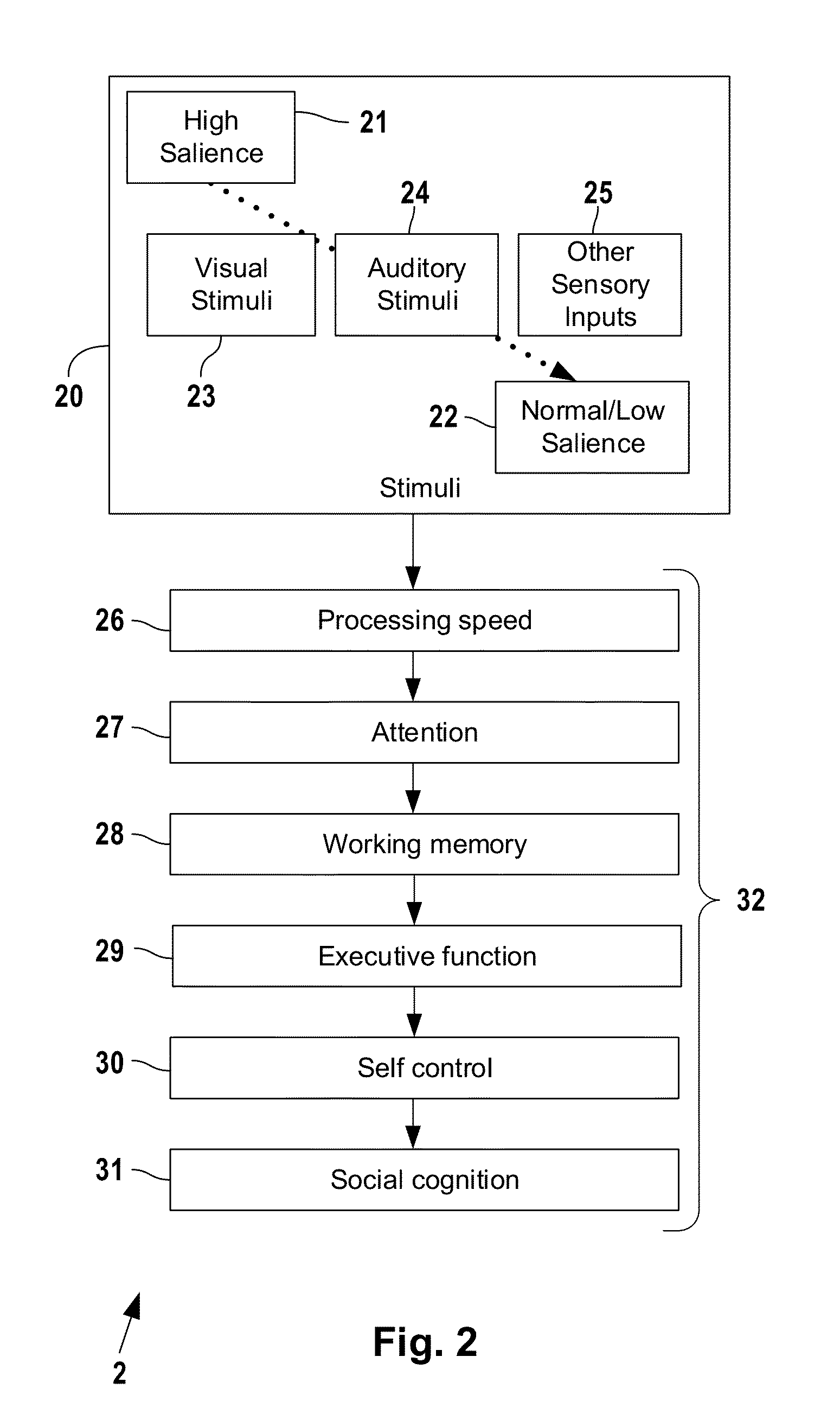

Neuroplasticity games for social cognition disorders

A training program is configured to systematically drive neurological changes to overcome social cognitive deficits. Various games challenge the participant to observe gaze directions in facial images, match faces from different angles, reconstruct face sequences, memorize social details about sequentially presented faces, identify smiling faces, find faces whose expression matches the target, identify emotions implicitly expressed by facial expressions, match pairs of similar facial expressions, match pairs of emotion clips and emotion labels, reconstruct sequences of emotion clips, identify emotional prosodies of progressively shorter sentences, match sentences with tags that identify the emotional prosodies with which they are expressed, identify social scenarios that best explain emotions expressed in video clips, answer questions about social interactions in multi-segmented stories, choose expressions and associated prosodies that best describe how a person would sound in given social scenarios, and/or understand and interpret gradually more complex social scenes.

Owner:POSIT SCI CORP

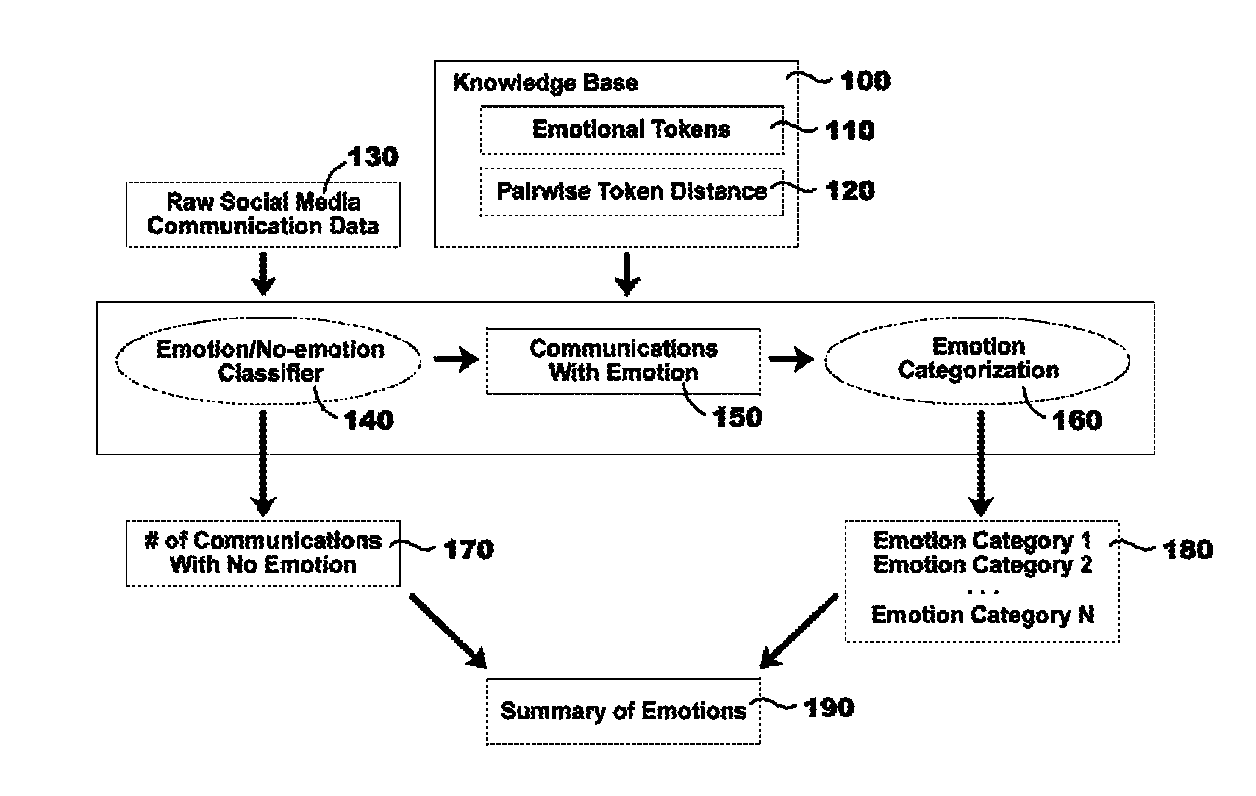

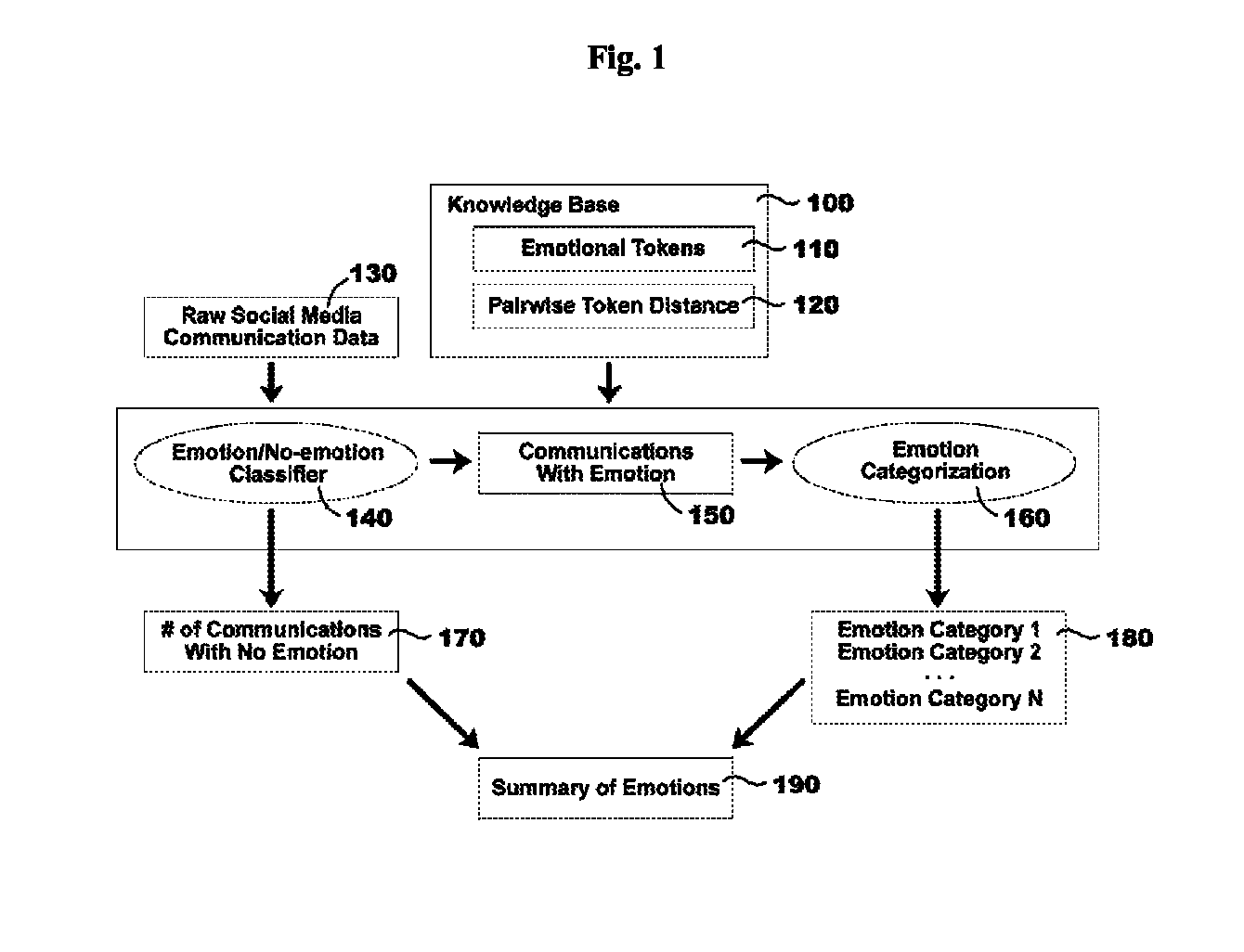

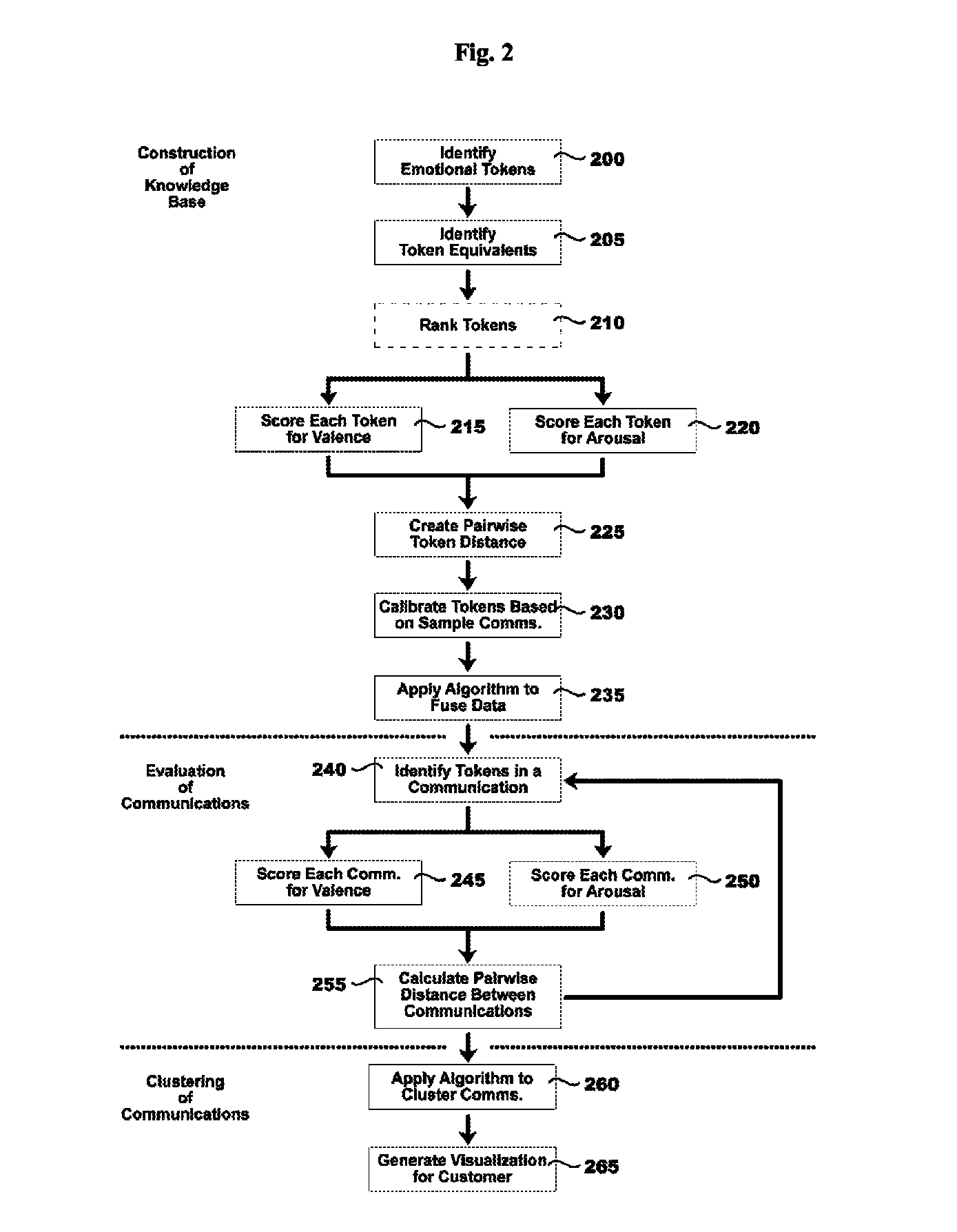

Automated emotional clustering of social media conversations

Status: Active | Publication: US9430738B1 | Tags: Eliminate costly, Eliminate time-consuming work, Knowledge representation, Social media, Machine learning

Disclosed are platforms, systems, media, and methods for implementing a methodological framework for automatically categorizing and summarizing emotions expressed in social chatter by using a “knowledge base” of emotional words / phrases as an input to define a distance metric between conversations and conducting hierarchical clustering based on the distance metric.

Owner:MASHWORK INC
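The idea of using a knowledge base of emotional words to define a distance between conversations, and then clustering hierarchically on that distance, can be sketched in plain Python. The tiny `KNOWLEDGE_BASE`, the count-vector representation, cosine distance, and average linkage are assumptions chosen for illustration; the abstract does not specify them.

```python
import math
from itertools import combinations
from typing import List

# Hypothetical "knowledge base": emotional word -> emotion category.
KNOWLEDGE_BASE = {
    "love": "joy", "awesome": "joy", "lol": "joy",
    "hate": "anger", "furious": "anger",
    "scared": "fear", "worried": "fear",
}
CATEGORIES = sorted(set(KNOWLEDGE_BASE.values()))


def emotion_vector(conversation: str) -> List[float]:
    """Count knowledge-base hits per emotion category."""
    counts = dict.fromkeys(CATEGORIES, 0.0)
    for word in conversation.lower().split():
        category = KNOWLEDGE_BASE.get(word.strip(".,!?"))
        if category:
            counts[category] += 1
    return [counts[c] for c in CATEGORIES]


def cosine_distance(u: List[float], v: List[float]) -> float:
    nu, nv = math.hypot(*u), math.hypot(*v)
    if nu == 0 or nv == 0:
        return 1.0  # no emotional words: treat as maximally distant
    return 1.0 - sum(a * b for a, b in zip(u, v)) / (nu * nv)


def cluster(conversations: List[str], k: int) -> List[List[int]]:
    """Average-linkage agglomerative clustering down to k clusters."""
    vecs = [emotion_vector(c) for c in conversations]
    clusters = [[i] for i in range(len(vecs))]

    def linkage(a: List[int], b: List[int]) -> float:
        return sum(cosine_distance(vecs[i], vecs[j]) for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > k:
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda p: linkage(clusters[p[0]], clusters[p[1]]))
        clusters[i] += clusters[j]   # merge the closest pair (i < j)
        del clusters[j]
    return clusters
```

With four short posts, two joyful and two angry, `cluster(posts, 2)` groups the joyful pair and the angry pair together.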

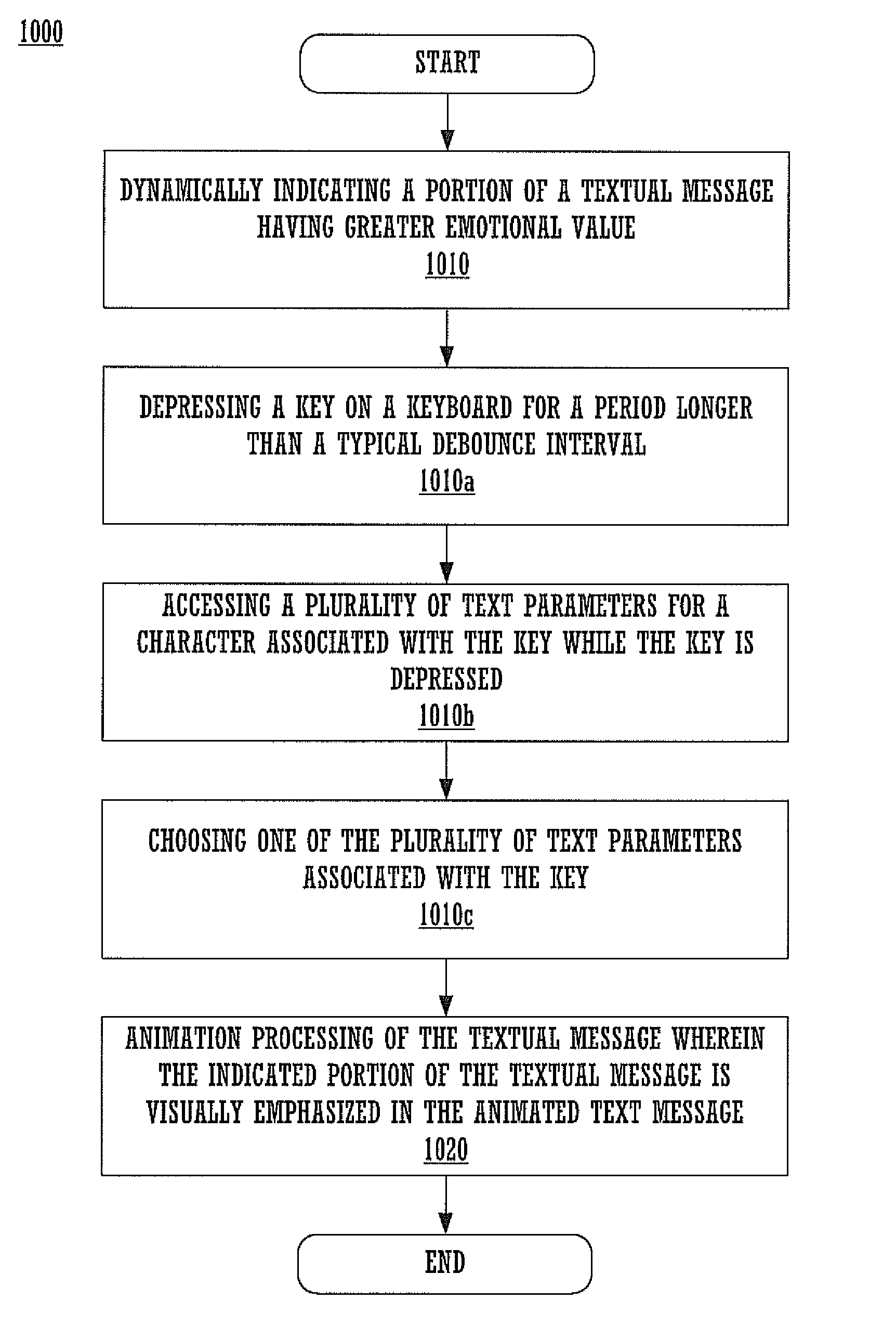

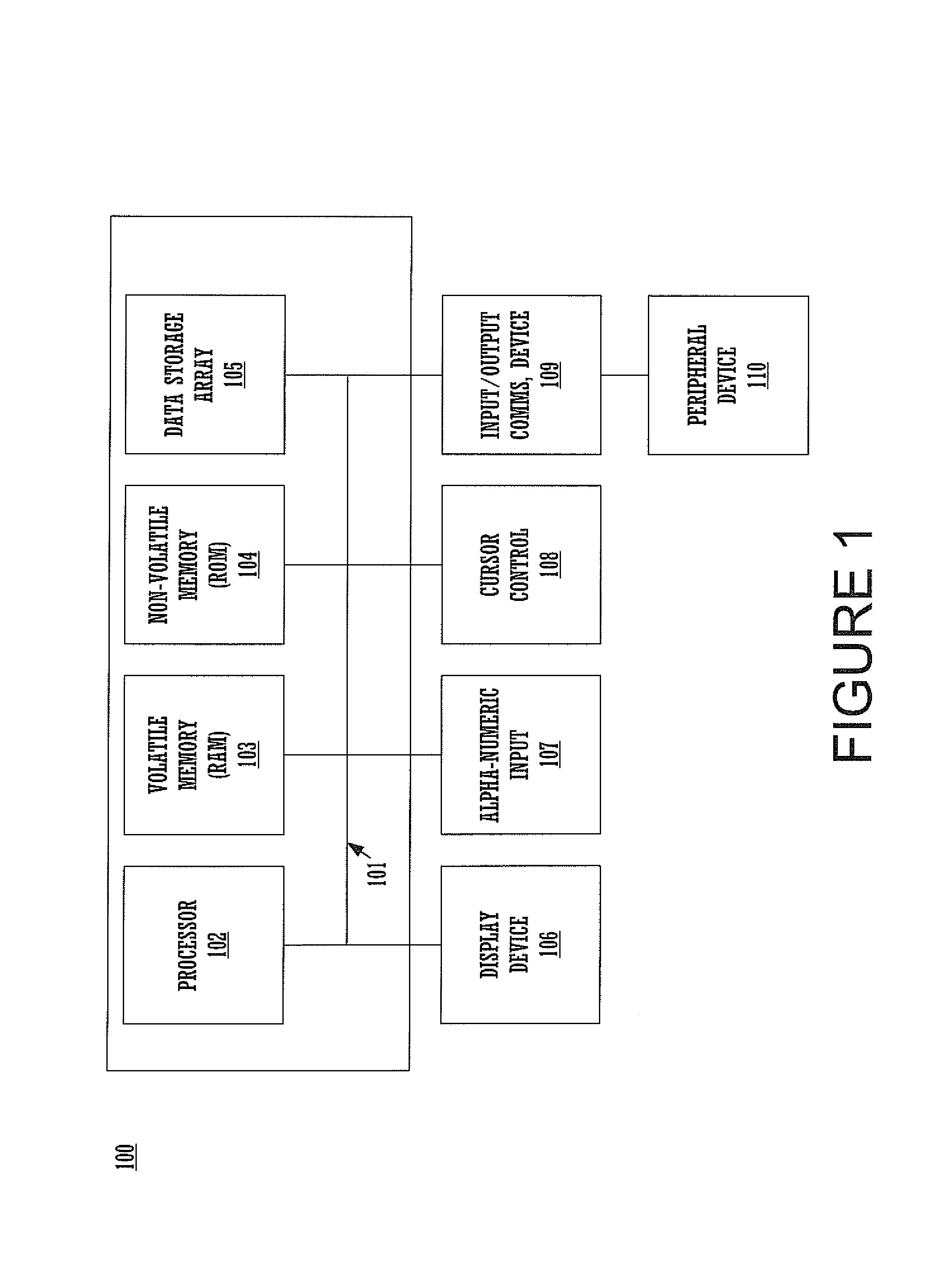

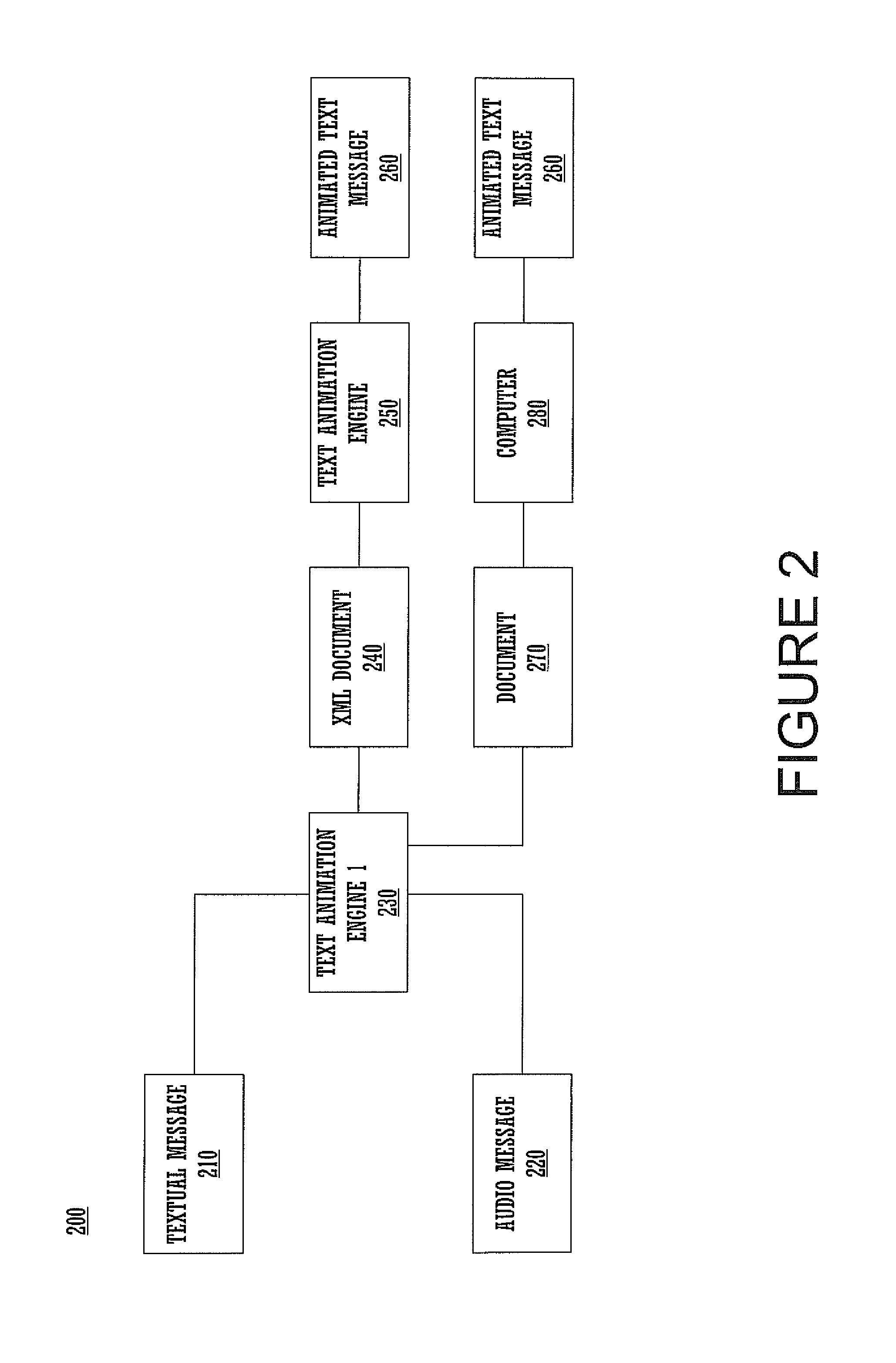

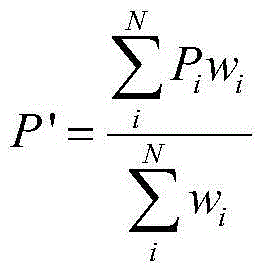

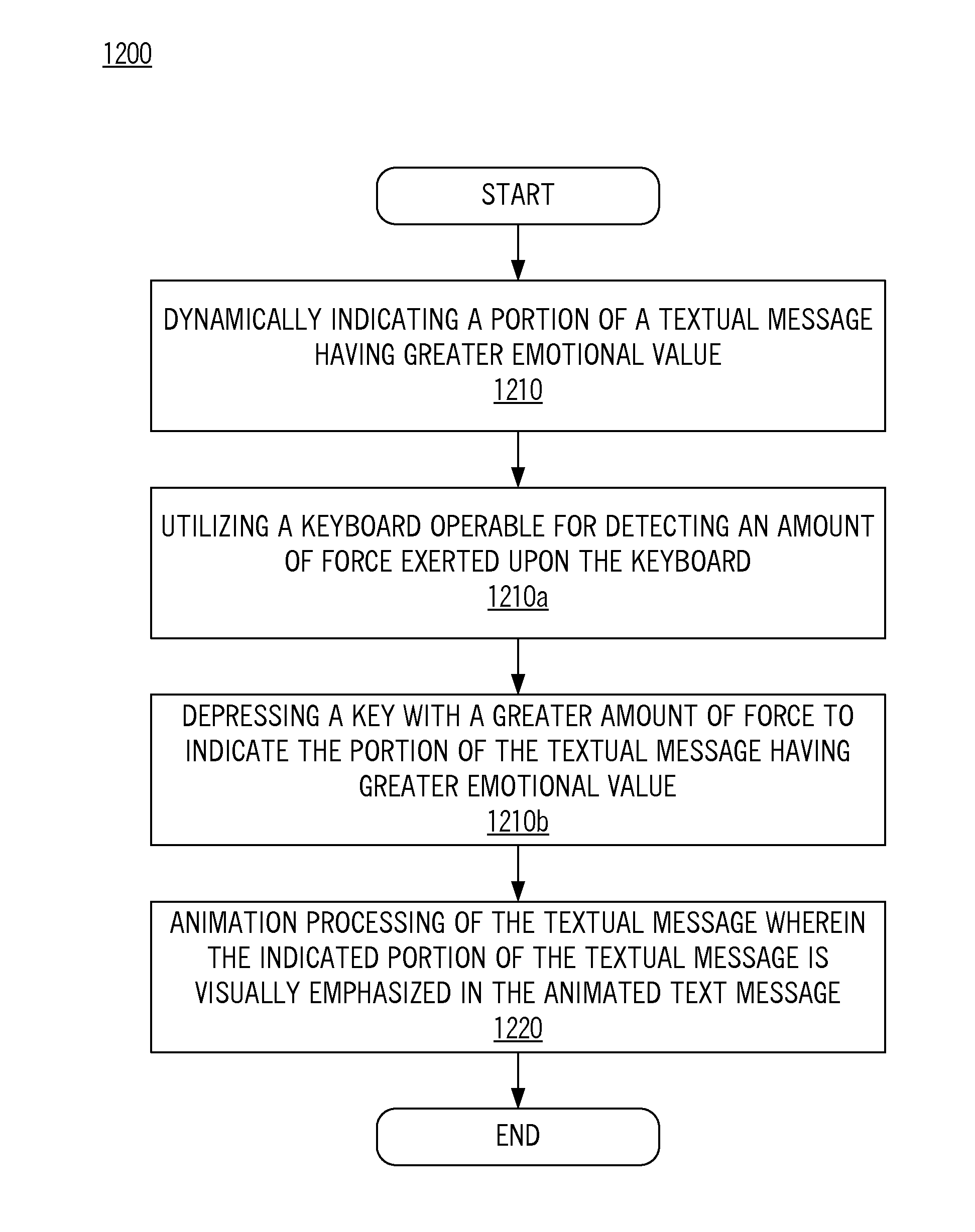

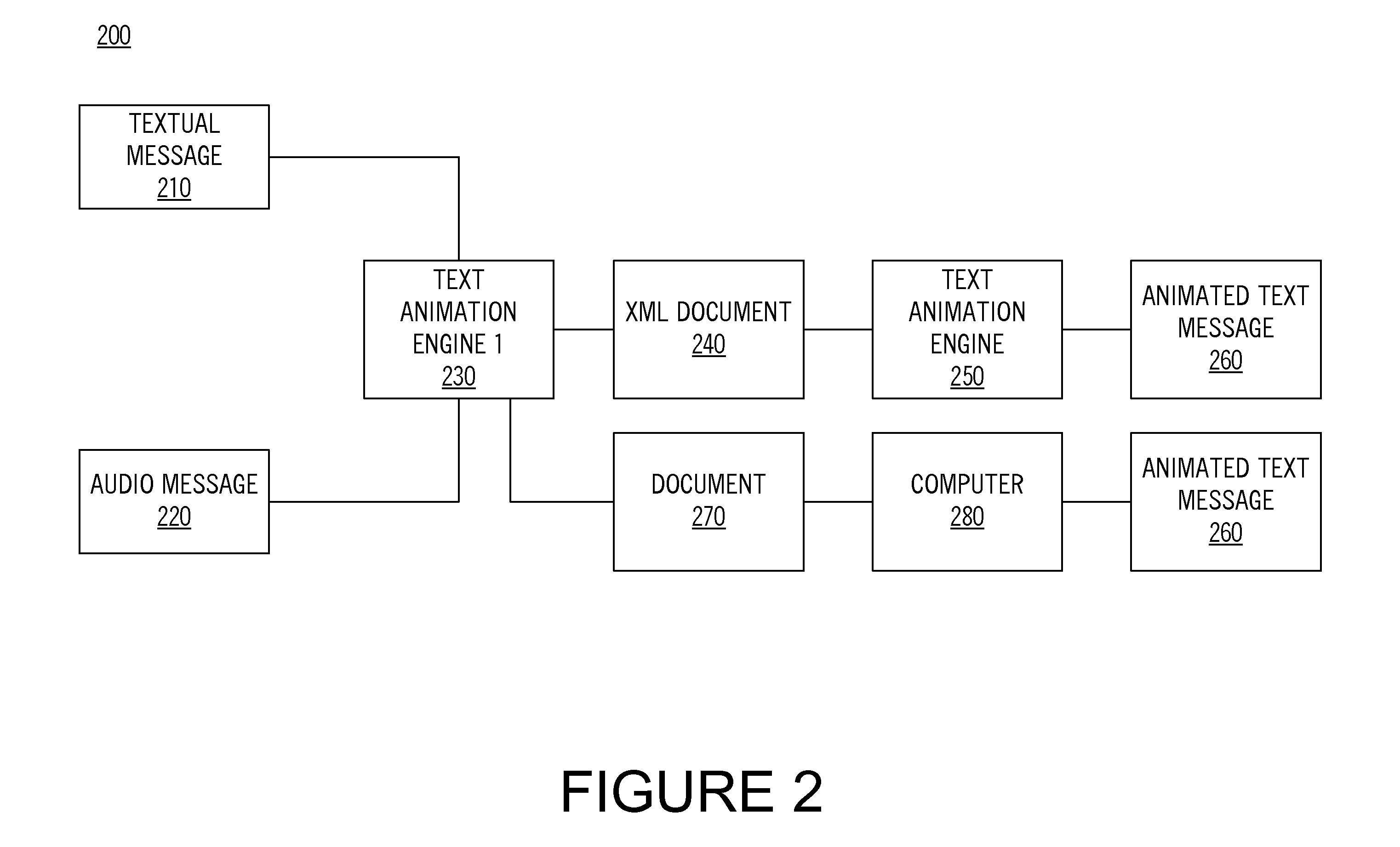

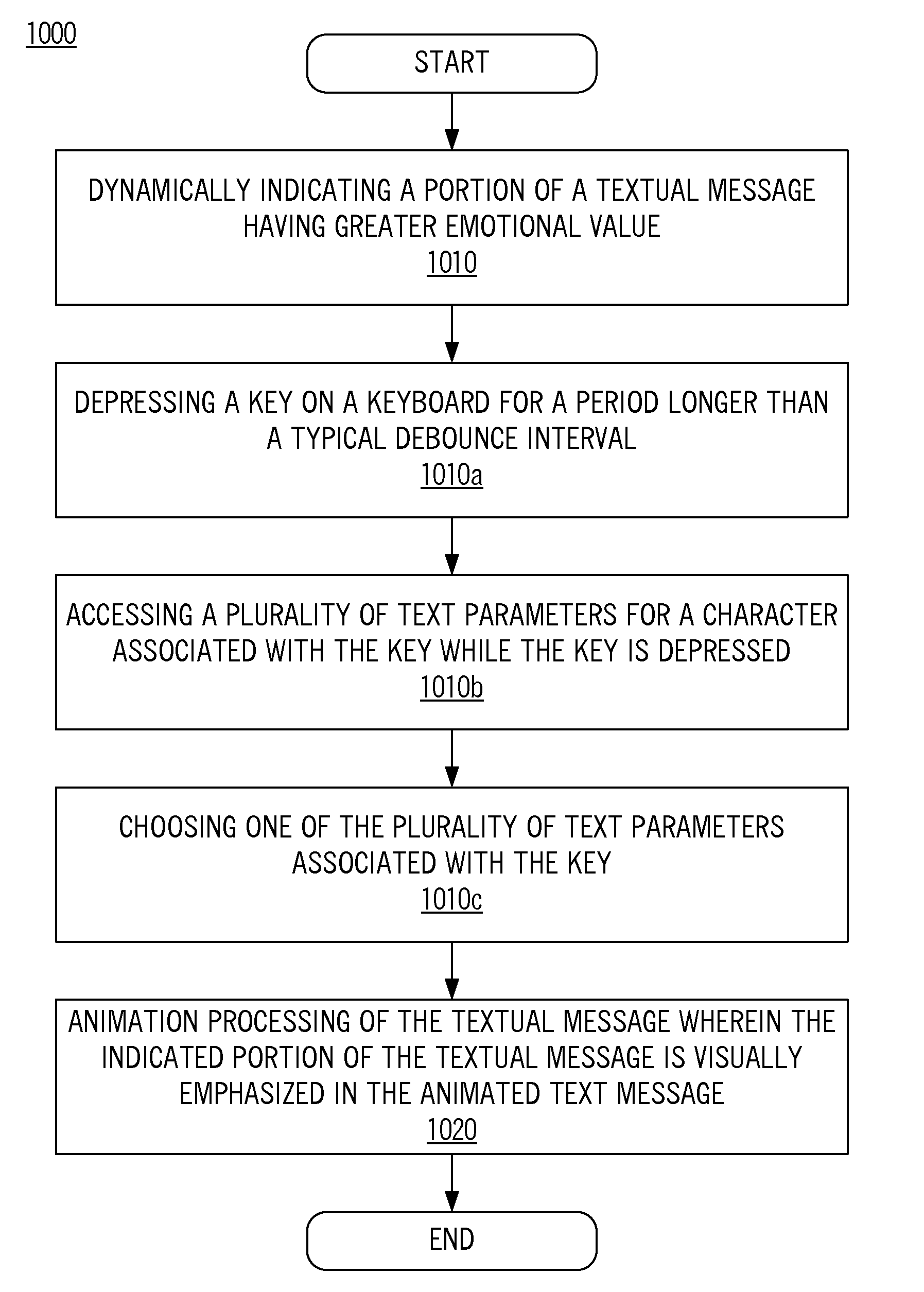

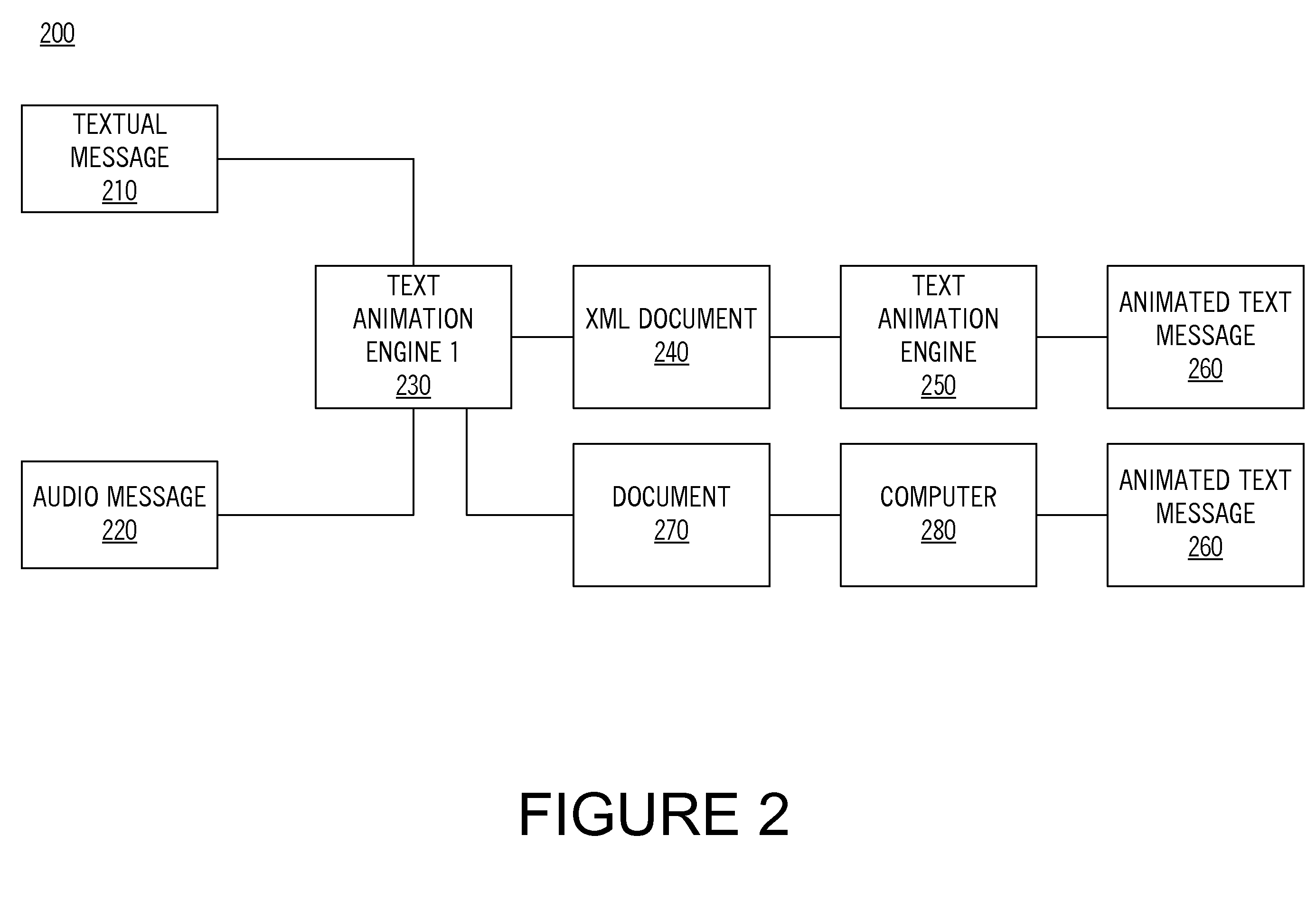

Method for expressing emotion in a text message

Status: Inactive | Publication: US7853863B2 | Tags: Facilitate dynamically indicating emotion, Heightened emotional value, Image analysis, Biological models, Animation, Expressed emotion

In one embodiment of the present invention, while composing a textual message, a portion of the textual message is dynamically indicated as having heightened emotional value. In one embodiment, this is indicated by depressing a key on a keyboard for a period longer than a typical debounce interval. While the key remains depressed, a plurality of text parameters for the character associated with the depressed key are accessed and one of the text parameters is chosen. Animation processing is then performed upon the textual message and the indicated portion of the textual message is visually emphasized in the animated text message.

Owner:SONY CORP +1
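The key-hold gesture can be sketched as a function over key events that carry press durations. The 30 ms debounce interval, the list of text parameters, the 250 ms cycling step, and the names `KeyEvent` and `annotate` are hypothetical; the sketch stops before the animation processing that the abstract mentions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

DEBOUNCE_MS = 30        # hypothetical debounce interval
CYCLE_MS = 250          # hypothetical time spent on each text parameter
TEXT_PARAMETERS = ["bold", "large", "shake", "pulse"]  # hypothetical options


@dataclass
class KeyEvent:
    char: str
    held_ms: int        # how long the key stayed depressed


def annotate(events: List[KeyEvent]) -> List[Tuple[str, Optional[str]]]:
    """Pair each typed character with an emphasis parameter, or None."""
    out = []
    for ev in events:
        if ev.held_ms <= DEBOUNCE_MS:
            out.append((ev.char, None))   # ordinary keystroke
        else:
            # Holding longer cycles through the available text parameters.
            step = (ev.held_ms - DEBOUNCE_MS) // CYCLE_MS
            out.append((ev.char, TEXT_PARAMETERS[step % len(TEXT_PARAMETERS)]))
    return out
```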

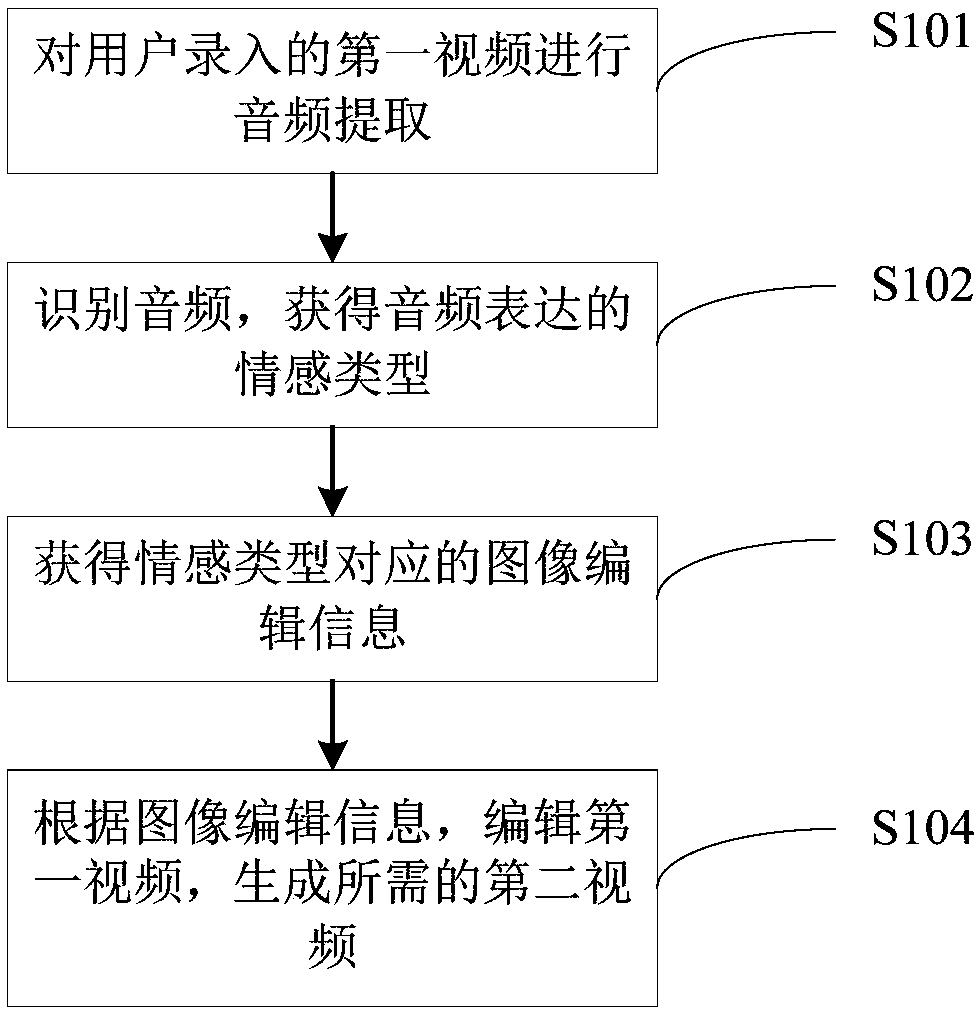

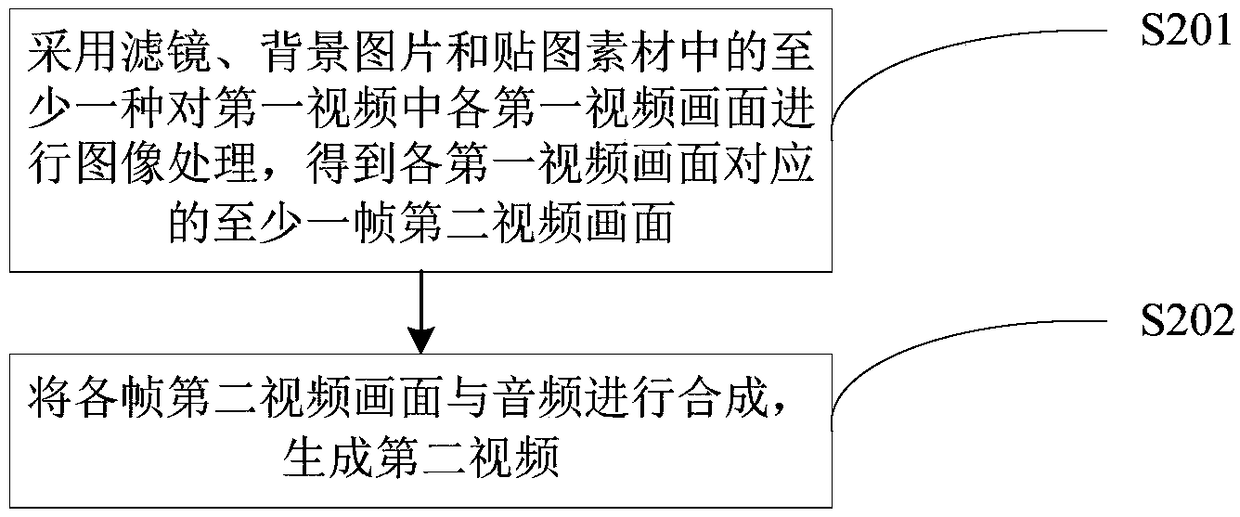

Video generating method and apparatus thereof, electronic device, and storage medium

Status: Inactive | Publication: CN109120992A | Tags: Reduce labor costs, Improve editing efficiency, Television system details, Color television details, Video record, Expressed emotion

The invention provides a video generating method comprising: extracting the audio from a first video recorded by a user; recognizing the audio data to obtain the type of emotion the audio expresses; acquiring image editing information corresponding to that emotion type; and editing the first video based on the image editing information to generate the desired second video. The video images are thus edited and processed automatically according to the emotion expressed by the video's background sound, which addresses the complicated editing operations and high labor costs of the prior art. Embodiments of the invention also provide a video generating apparatus, an electronic device, and a computer-readable storage medium.

Owner:北京乐蜜科技有限责任公司 (Beijing Lemi Technology Co., Ltd.)

Method and device for adding punctuation in voice recognition, computer device and storage medium

Status: Pending | Publication: CN108831481A | Tags: Real-time output, Accurate tone type, Speech recognition, Expressed emotion, Speech sound

The invention discloses a method and device for adding punctuation in speech recognition, a computer device, and a storage medium. The method comprises: performing speech recognition on acquired speech while simultaneously detecting silent segments in it, and determining whether the duration of a silent segment exceeds a first duration; if so, outputting the text sequence preceding the silent segment and inserting a comma or period at the corresponding position according to the silent segment's duration; then performing speech recognition on the speech following the silent segment and correcting the inserted comma or period according to a preset discrimination model. The method and device improve the output efficiency and accuracy of punctuation in speech recognition, thereby improving recognition efficiency, breaking sentences accurately, and conveying emotion accurately.

Owner:PING AN TECH (SHENZHEN) CO LTD
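The duration-based part of the method, before any correction by the discrimination model, might look like the following. The segment format, the 300 ms and 700 ms thresholds, and the name `punctuate` are assumptions; the comma threshold plays the role of the "first duration".

```python
from typing import List, Tuple


def punctuate(segments: List[Tuple[str, int]],
              comma_ms: int = 300, period_ms: int = 700) -> str:
    """segments: (recognized_text, silence_after_ms) pairs in order.
    A silence shorter than comma_ms adds no mark; a longer one adds a comma,
    and one of at least period_ms adds a period."""
    out = []
    for text, silence_ms in segments:
        if silence_ms >= period_ms:
            out.append(text + ".")
        elif silence_ms >= comma_ms:
            out.append(text + ",")
        else:
            out.append(text)
    return " ".join(out)
```

For example, `punctuate([("hello there", 400), ("how are you", 900)])` yields `"hello there, how are you."`.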

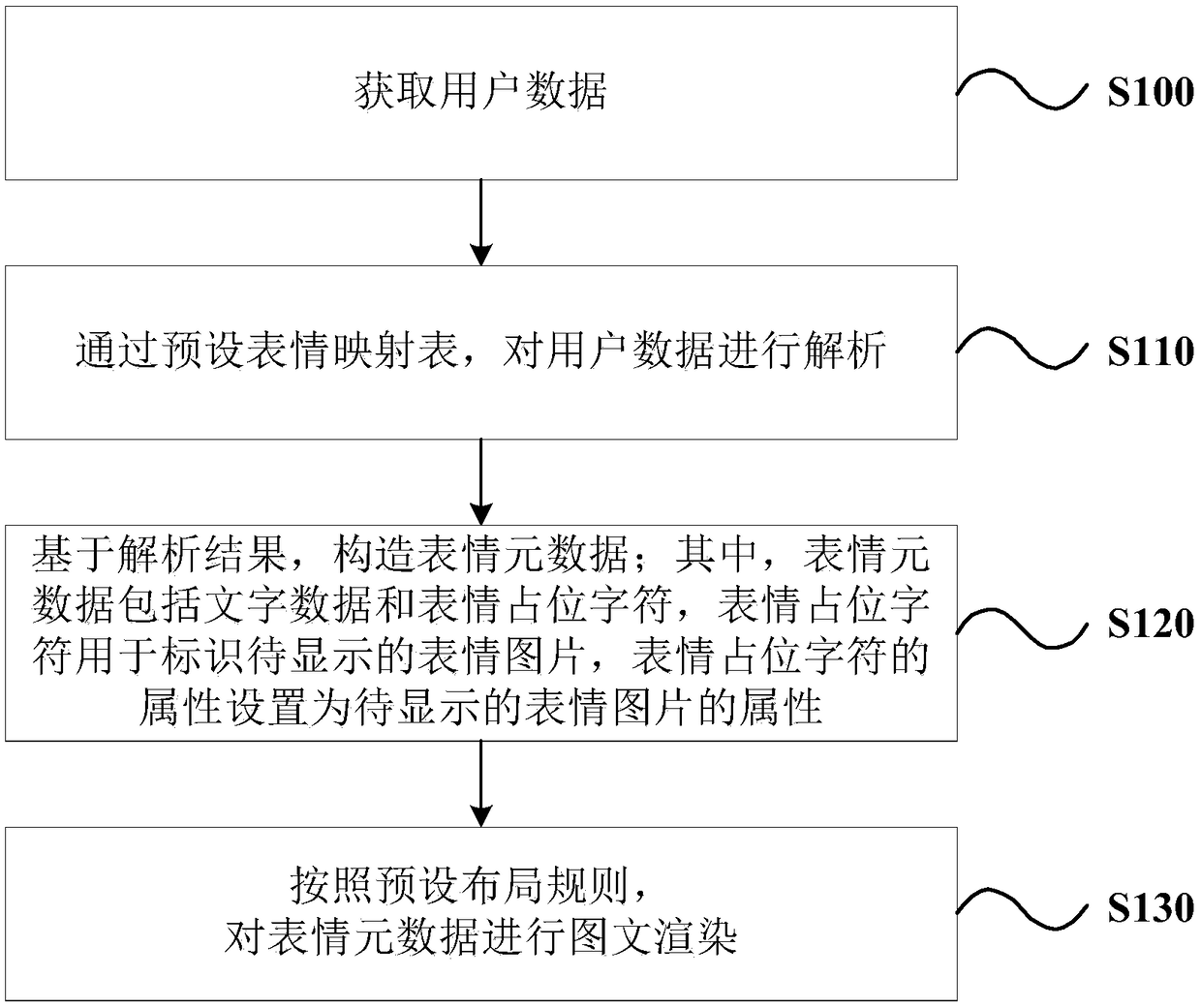

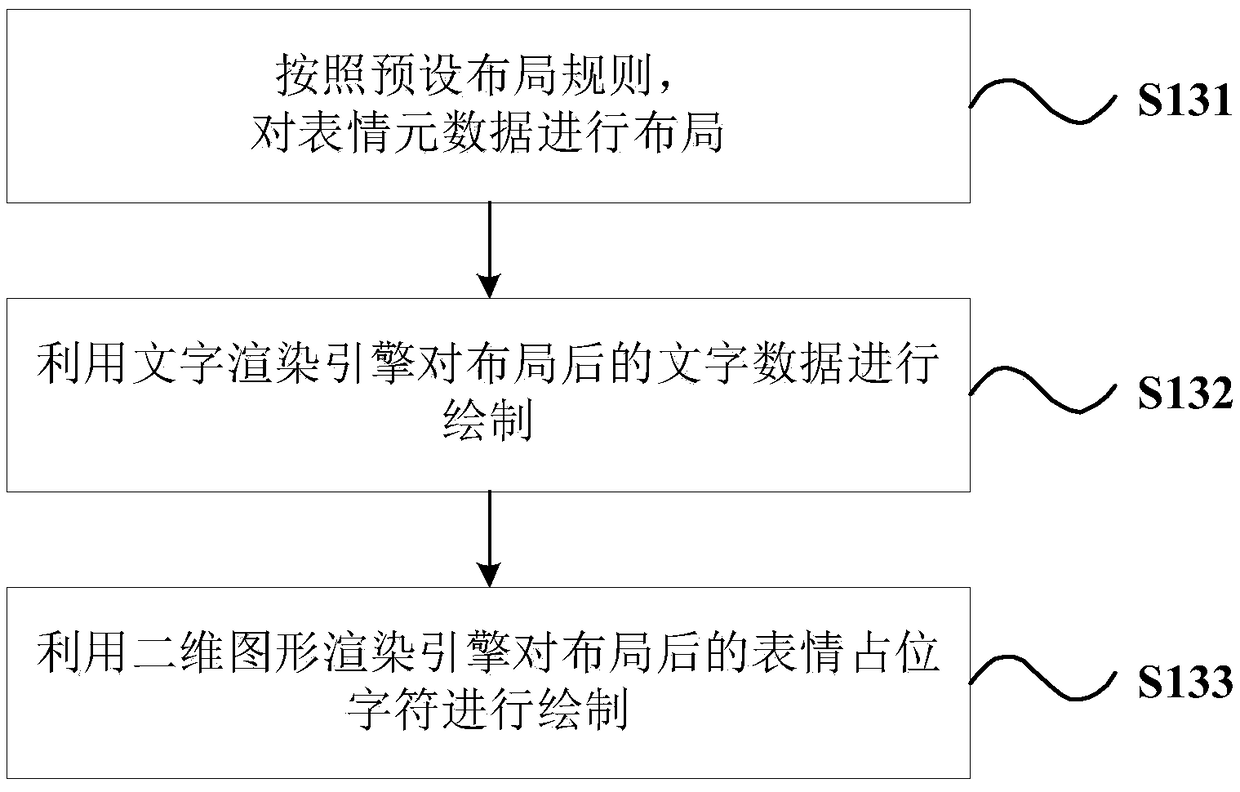

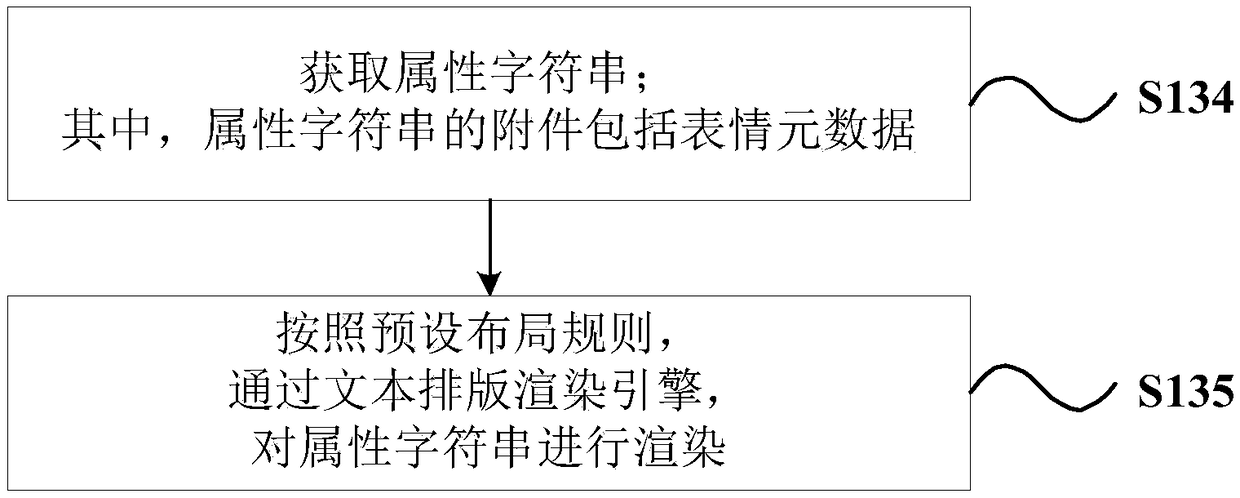

Graph-text mixed typesetting method and device, computer readable storage medium and terminal

Status: Pending | Publication: CN108805960A | Tags: Rich context, In line with the habit of expressing emotion, Editing/combining figures or text, Ambiguity, Computer terminal

The invention discloses a graph-text mixed typesetting method and device, a computer-readable storage medium, and a terminal. The method comprises: acquiring user data; parsing the user data through a preset expression mapping table; constructing expression metadata from the parsing result, the expression metadata comprising character data and an expression placeholder character, where the placeholder identifies an expression picture to be displayed and its attributes are set to those of that picture; and rendering the expression metadata as mixed graphics and text according to a preset layout rule. The method addresses the problem of improving the user's visual experience: it enriches the context of user comments, makes them easier to read than plain text, helps avoid ambiguity, and can determine the expression picture corresponding to an emotional element. It also matches users' habits of expressing emotion and increases user interaction and activity in the comment area.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
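Parsing user text into character data plus placeholder characters could be sketched as below. The bracketed tokens in `EXPRESSION_MAP`, the use of U+FFFC as the placeholder, the fixed 20x20 size, and the `Expression` record are assumptions for illustration; rendering is left out.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical expression mapping table: text token -> picture resource.
EXPRESSION_MAP = {"[smile]": "smile.png", "[cry]": "cry.png"}
PLACEHOLDER = "\ufffc"  # U+FFFC OBJECT REPLACEMENT CHARACTER


@dataclass
class Expression:
    offset: int          # position of the placeholder in the character data
    picture: str
    width: int = 20      # placeholder adopts the picture's size (assumed, px)
    height: int = 20


def parse(user_text: str) -> Tuple[str, List[Expression]]:
    """Replace mapped tokens with placeholders and record their metadata."""
    pattern = "|".join(re.escape(token) for token in EXPRESSION_MAP)
    pieces: List[str] = []
    expressions: List[Expression] = []
    length = 0           # length of the character data built so far
    pos = 0
    for m in re.finditer(pattern, user_text):
        plain = user_text[pos:m.start()]
        pieces.append(plain)
        length += len(plain)
        expressions.append(Expression(length, EXPRESSION_MAP[m.group()]))
        pieces.append(PLACEHOLDER)
        length += 1
        pos = m.end()
    pieces.append(user_text[pos:])
    return "".join(pieces), expressions
```

A renderer would then lay out the character data and draw each picture at its placeholder's offset with the recorded size.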

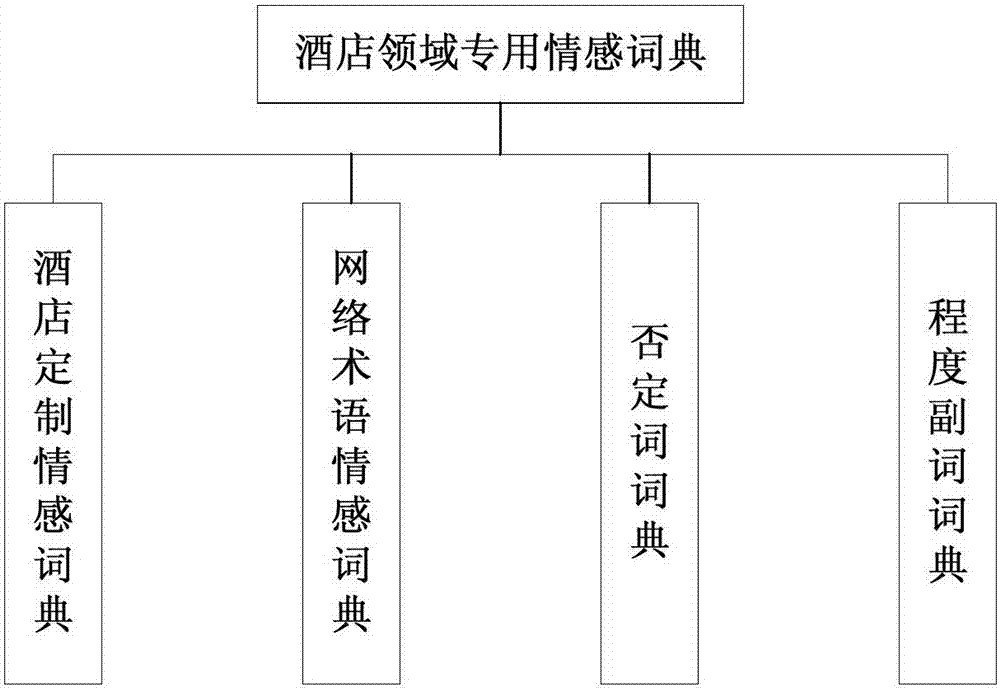

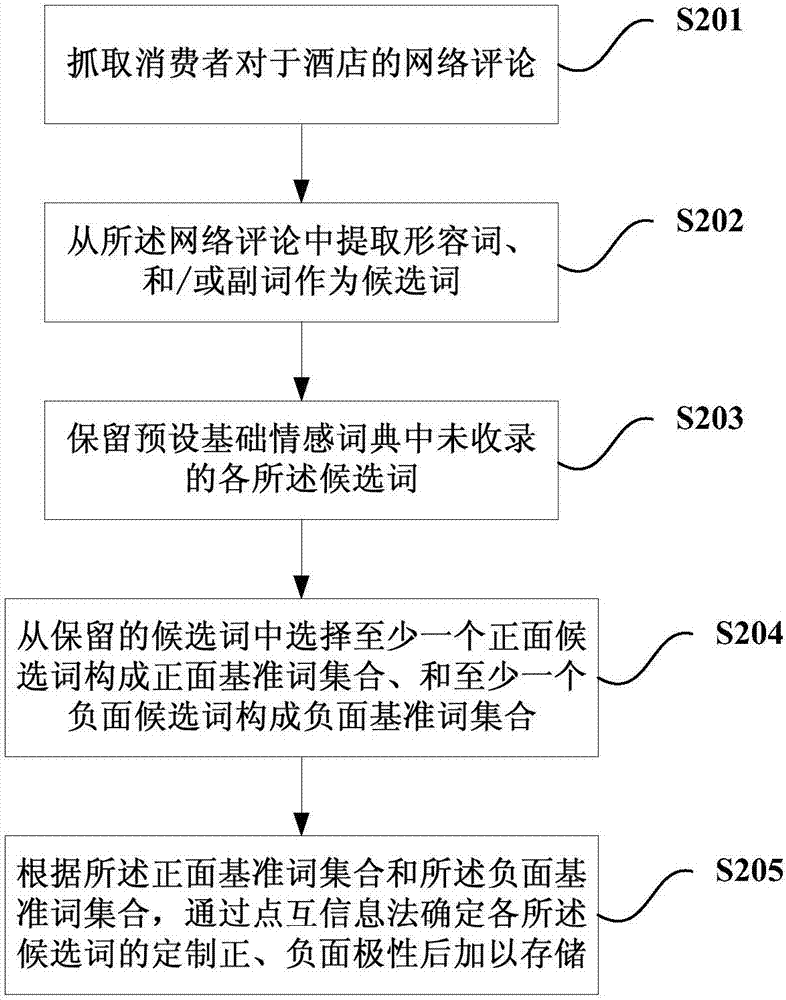

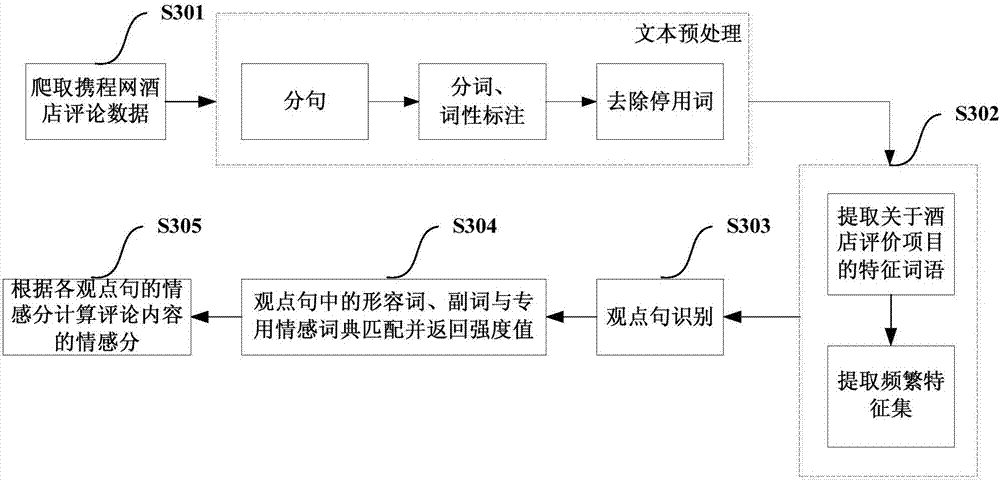

Hotel emotion dictionary establishment method, comment emotion analysis method and system

Status: Inactive | Publication: CN107203520A | Tags: Overcoming the inadequacy of only knowing whether reviews are generally positive or negative, Semantic analysis, Special data processing applications, Pattern recognition, Expressed emotion

The invention provides a hotel emotion dictionary establishment method and a comment emotion analysis method and system. They comprise building a hotel-specific emotion dictionary, an internet slang emotion dictionary, a negation word dictionary, and a degree adverb dictionary. The hotel-specific emotion dictionary is built by crawling customers' online reviews of a hotel, extracting adjectives and/or adverbs from them as candidate words, keeping the candidates not already in a preset basic emotion dictionary, selecting from those at least one positive candidate to form a positive benchmark word set and at least one negative candidate to form a negative benchmark word set, and then determining and storing the positive or negative polarity of each candidate word with reference to the two benchmark sets. The internet slang emotion dictionary collects and stores positive and negative popular internet words that express emotion but are not in the preset basic emotion dictionary. The negation word dictionary collects and stores negation words, and the degree adverb dictionary collects and stores adverbs of degree. Together they provide strong technical support for emotion analysis of online hotel reviews.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI
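Once the four dictionaries exist, a common way to score a review is to sum word polarities, scaling by degree adverbs and flipping the sign after negation words. The sketch below does that with tiny hypothetical dictionaries; the scoring rule and the names `score` and `label` are assumptions, not the analysis method claimed in the patent.

```python
# Hypothetical miniature versions of the four dictionaries.
EMOTION_DICT = {"clean": 1, "friendly": 1, "quiet": 1, "dirty": -1, "noisy": -1, "rude": -1}
NETWORK_TERMS = {"awesome": 1, "meh": -1}
NEGATIONS = {"not", "never", "no"}
DEGREE_ADVERBS = {"very": 1.5, "extremely": 2.0, "slightly": 0.5}


def score(comment: str) -> float:
    """Sum polarities; negations flip and degree adverbs scale the next sentiment word."""
    words = comment.lower().replace(",", " ").replace(".", " ").split()
    total, weight = 0.0, 1.0
    for w in words:
        if w in NEGATIONS:
            weight = -weight
        elif w in DEGREE_ADVERBS:
            weight *= DEGREE_ADVERBS[w]
        else:
            polarity = EMOTION_DICT.get(w, NETWORK_TERMS.get(w))
            if polarity is not None:
                total += polarity * weight
                weight = 1.0   # modifiers apply only to the next sentiment word
    return total


def label(comment: str) -> str:
    s = score(comment)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"
```

For example, "Very clean room, friendly staff" scores 2.5, while "not clean and very noisy" scores -2.5.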

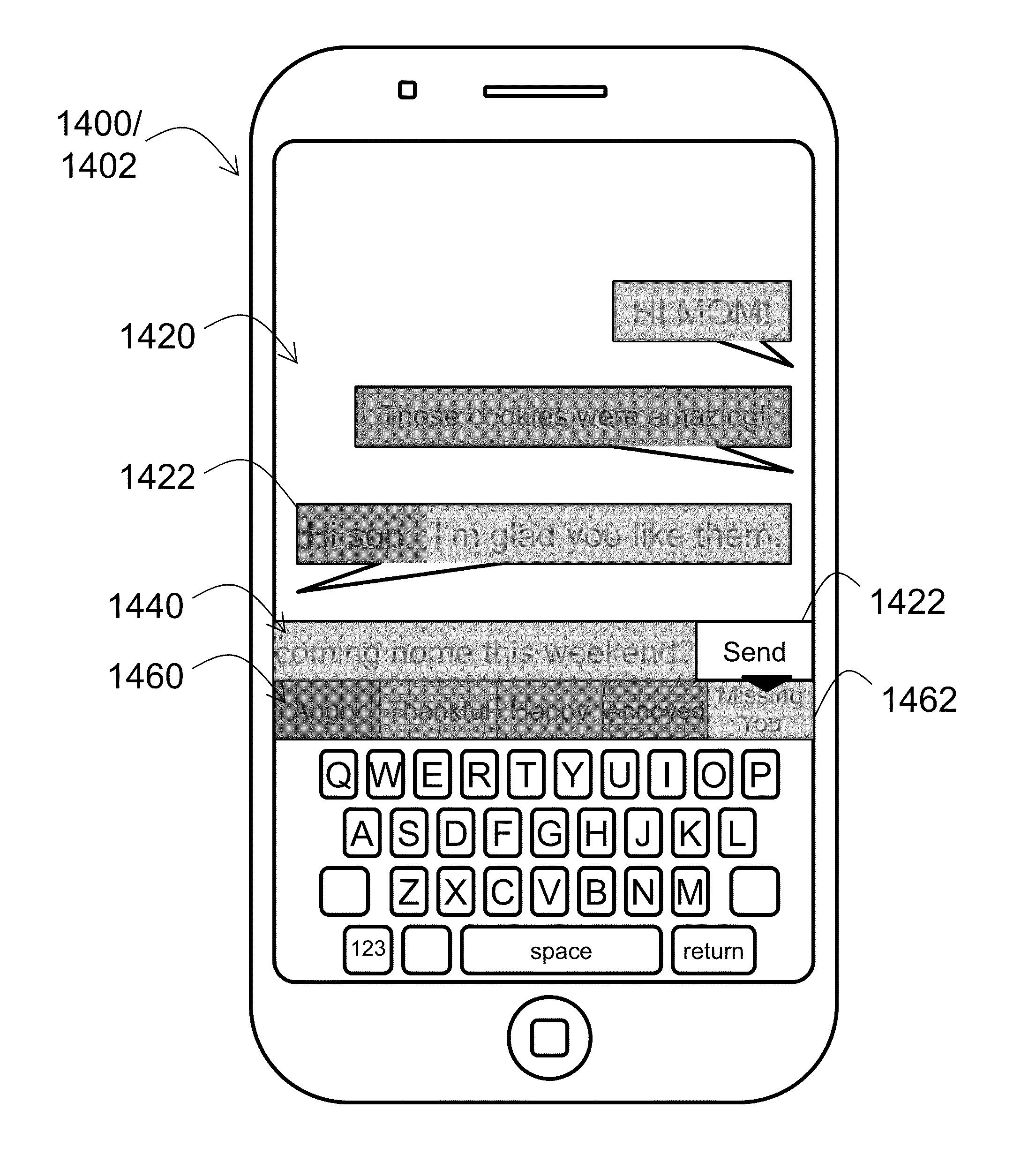

Emotive Text Messaging System

Status: Inactive | Publication: US20140195619A1 | Tags: Quickly and accurately convey, Facilitates creating text messages, Data switching networks, User input, Display device

A system for expressing emotion in users' text messages, the system comprising: for each user, a text generation engine that generates emotive text messages from the text the user enters and the emotional value the user selects for that text; for each user, a user interface that permits the user to enter the text and select the associated emotional value used to generate the emotive text message; and for each user, a display that shows the emotive text; wherein the emotive text is displayed as the written text against a background whose color corresponds to the emotional value associated with the written text.

Owner:HODJAT FARHANG RAY

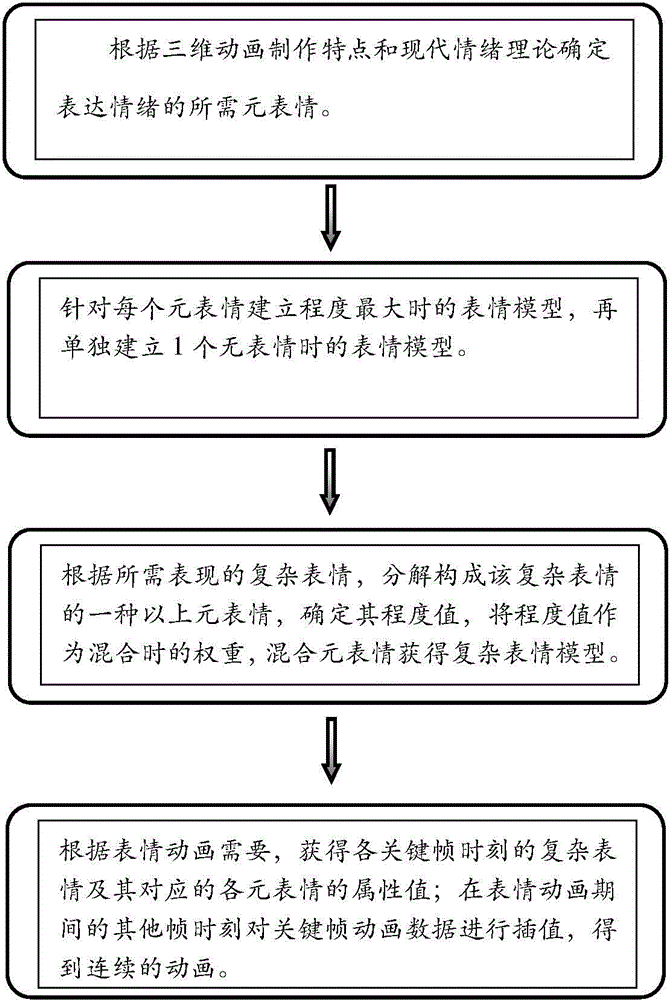

Expression generation method for three-dimensional cartoon character based on element expression

Status: Inactive | Publication: CN104599309A | Tags: Close to consistent emotional meaning, Consistent emotional meaning, Animation, Algorithm

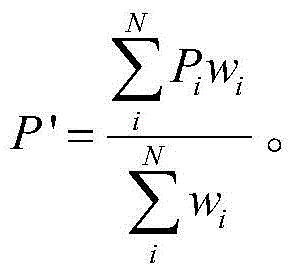

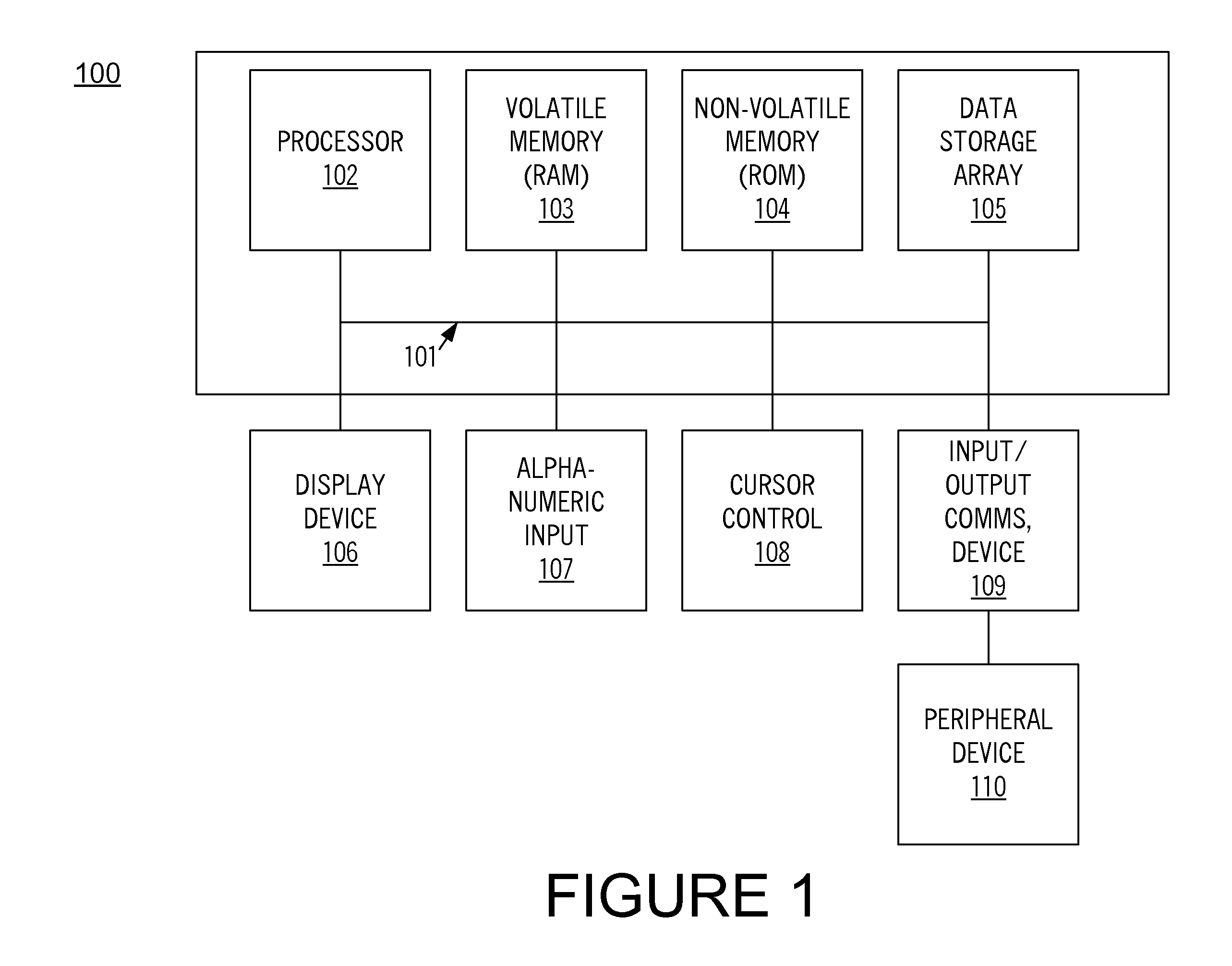

The invention discloses a method for generating expressions for a three-dimensional cartoon character based on element expressions. The method comprises: step 1, determining several element expressions for expressing emotions according to the characteristics of 3D cartoon production and modern emotion theory; step 2, building an expression model for each element expression at its maximum degree, plus a separate neutral (expressionless) model; step 3, decomposing a complex expression into one or more element expressions, determining the degree value of each, and using those degree values as weights to blend the element expressions into an expression model of the complex expression; and step 4, setting keyframe animation on the element-expression degrees to obtain the expression animation. The method generates character expression animation quickly and consistently, and the animation can be reused across different characters.

Owner:北京春天影视科技有限公司 (Beijing Chuntian Film and Television Technology Co., Ltd.)
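Step 3 is essentially a weighted blend-shape mix. Assuming every model is a list of vertex positions sharing one topology, a complex expression can be written as V = V0 + sum_i w_i (V_i - V0), where V0 is the neutral model, V_i is element expression i at maximum degree, and w_i is its degree value; step 4 then animates the weights over keyframes. The functions `blend` and `weights_at` and the linear interpolation between keyframes are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend(neutral: List[Vertex], elements: Dict[str, List[Vertex]],
          weights: Dict[str, float]) -> List[Vertex]:
    """V = V0 + sum_i w_i * (V_i - V0), applied vertex by vertex."""
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        for r, e, n in zip(result, elements[name], neutral):
            for k in range(3):
                r[k] += w * (e[k] - n[k])
    return [tuple(r) for r in result]


def weights_at(t: float, keyframes: List[Tuple[float, Dict[str, float]]]) -> Dict[str, float]:
    """Linearly interpolate element-expression degrees between sorted keyframes."""
    if t <= keyframes[0][0]:
        return dict(keyframes[0][1])
    for (t0, w0), (t1, w1) in zip(keyframes, keyframes[1:]):
        if t <= t1:
            a = (t - t0) / (t1 - t0)
            names = set(w0) | set(w1)
            return {n: w0.get(n, 0.0) + a * (w1.get(n, 0.0) - w0.get(n, 0.0)) for n in names}
    return dict(keyframes[-1][1])
```

Because only the weights are keyframed, the same weight curves can drive any character that provides its own neutral and element models, which matches the reuse claim in the abstract.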

Method for expressing emotion in a text message

Status: Inactive | Publication: US20110055675A1 | Tags: Facilitate dynamically indicating emotions, Image analysis, Biological models, Animation, Expressed emotion

In one embodiment of the present invention, while composing a textual message, a portion of the textual message is dynamically indicated as having heightened emotional value. In one embodiment, this is indicated by depressing a key on a keyboard for a period longer than a typical debounce interval. While the key remains depressed, a plurality of text parameters for the character associated with the depressed key are accessed and one of the text parameters is chosen. Animation processing is then performed upon the textual message and the indicated portion of the textual message is visually emphasized in the animated text message.

Owner:SONY CORP +1

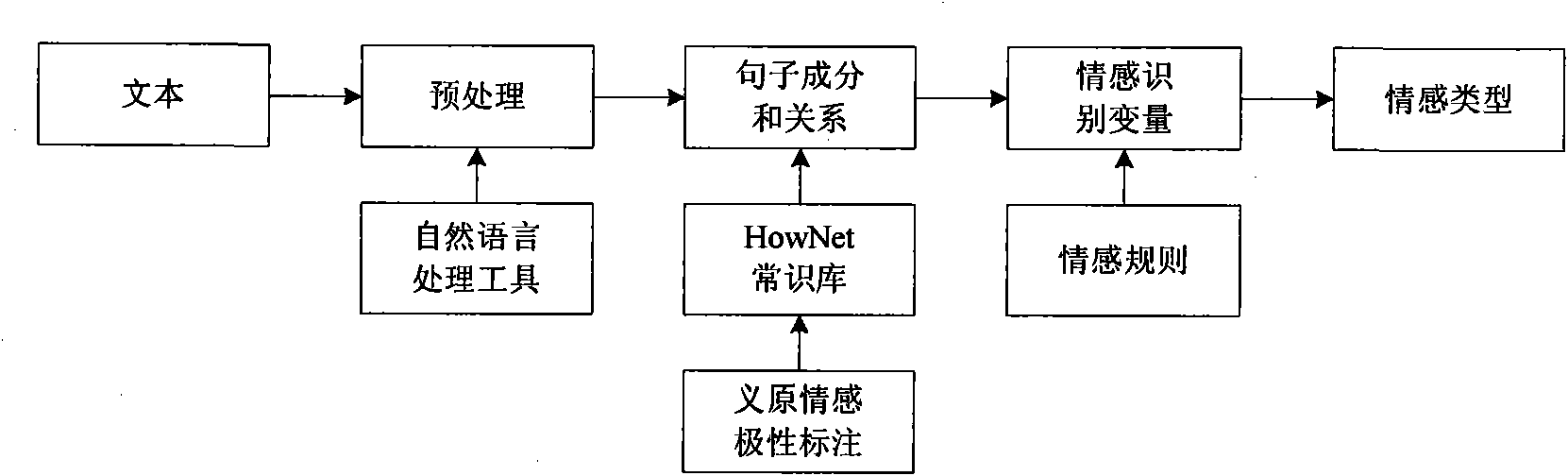

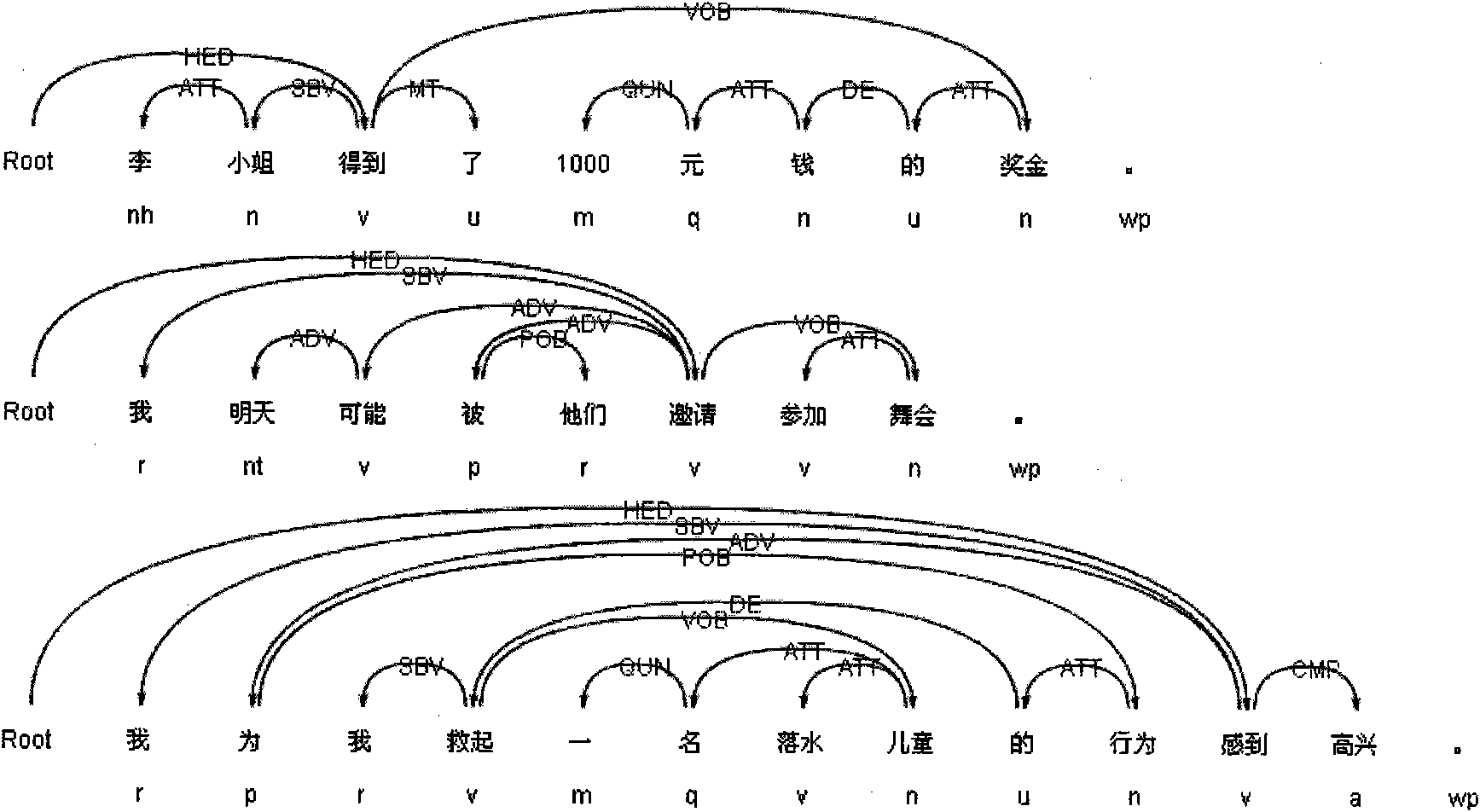

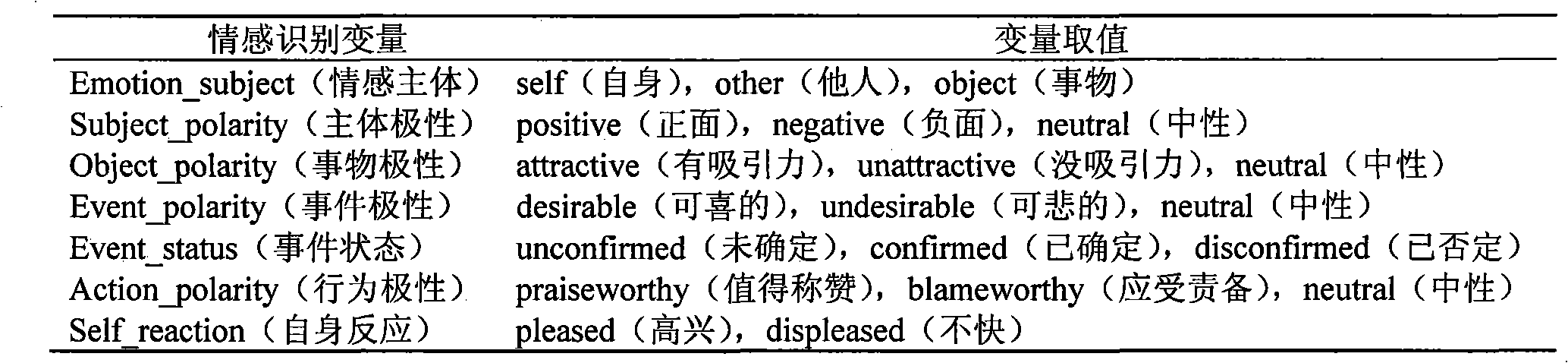

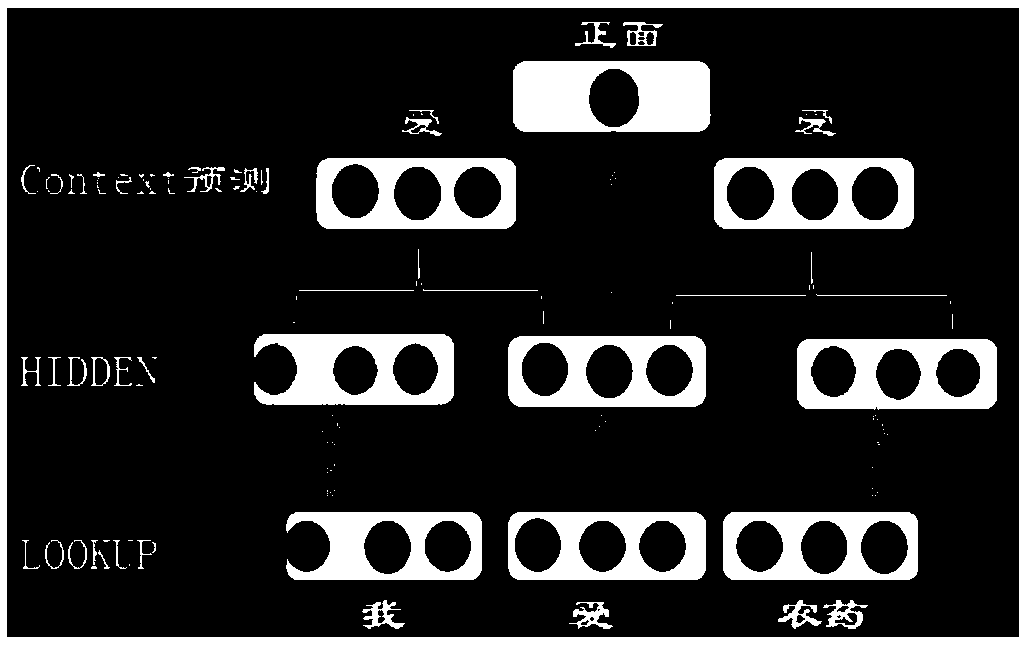

Cognitive evaluation theory-based Chinese text emotion recognition method

Status: Inactive | Publication: CN101901212A | Tags: Solve the problems of few categories of emotion recognition and low recognition accuracy, Improve accuracy, Special data processing applications, Expressed emotion, Evaluation theory

The invention provides a Chinese text emotion recognition method based on cognitive evaluation theory, comprising the following steps: 1) preprocessing the Chinese text with a natural language processing tool to obtain the dependency relations between sentence constituents; 2) determining the emotion recognition variables contained in a sentence according to a method for determining them, and assigning sentence constituents to those variables; 3) determining the values of the emotion recognition variables according to a method for assigning them; and 4) determining the type of emotion expressed by the sentence according to emotion rules. The method recognizes Chinese text emotion with higher accuracy and can distinguish 22 emotion types.

Owner:BEIHANG UNIV

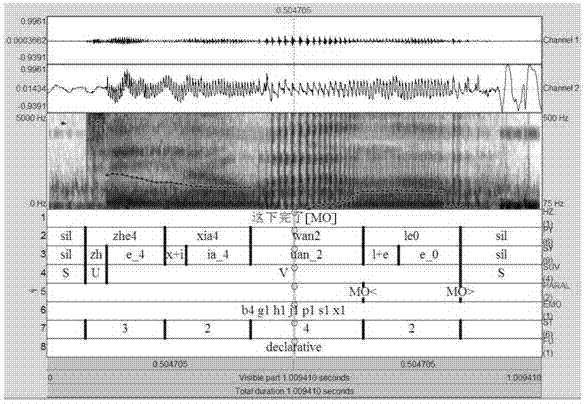

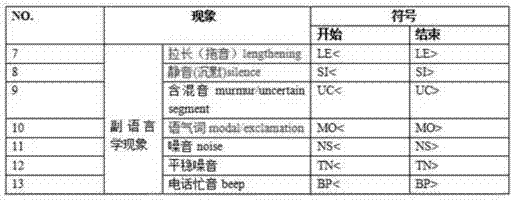

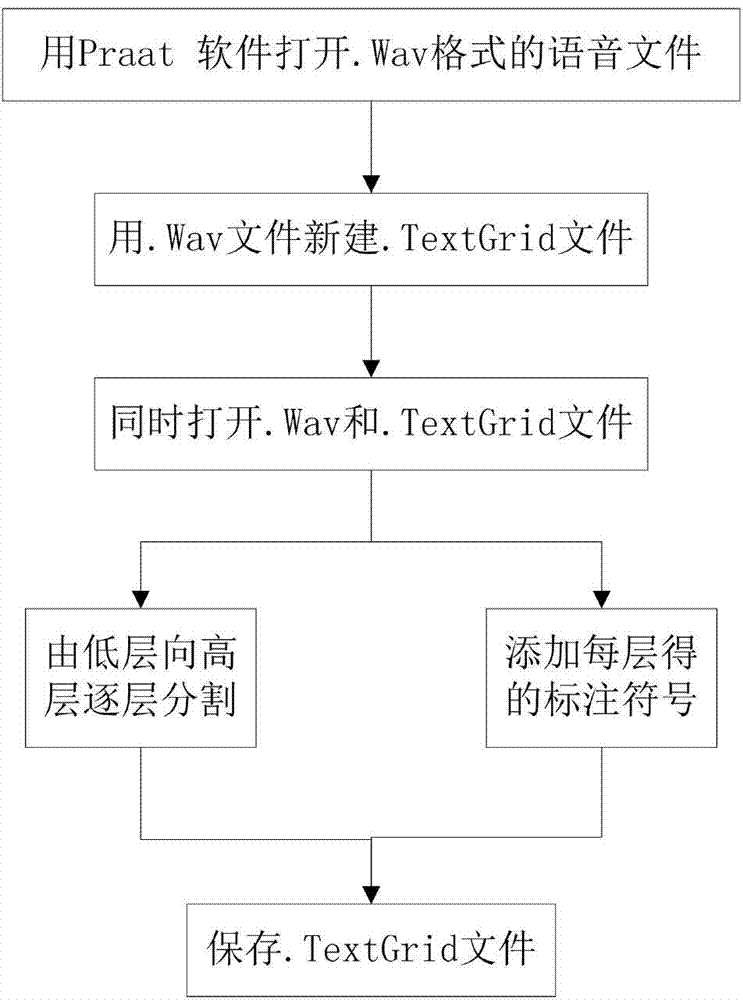

Voice annotation method for Chinese speech emotion database combined with electroglottography

Status: Inactive | Publication: CN104732981A | Tags: Avoid noise disturbance, Accurate segmentation, Speech analysis, Information layer, Syllable

The invention provides a voice annotation method for a Chinese speech emotion database that uses electroglottography (EGG). Each utterance is annotated simultaneously with eight layers of information: the first, a text transcription layer, records the speaker's words and the accompanying paralinguistic information; the second, a syllable layer, annotates the standard spelling and tone of each syllable; the third, an initial/final layer, annotates the initials and finals of each syllable separately, together with tone information; the fourth, an unvoiced/voiced/silence layer, segments the unvoiced sounds, voiced sounds, and silences of each utterance with the help of the EGG signal; the fifth, a paralinguistic layer, annotates the paralinguistic information in each utterance; the sixth, an emotion layer, annotates each utterance with the emotional state the speaker expresses, covering seven emotion categories and the degree to which each is expressed; the seventh, a stress index layer, annotates the intensity of pronunciation in each utterance; and the eighth, a sentence function layer, annotates the type of each sentence.

Owner:BEIHANG UNIV

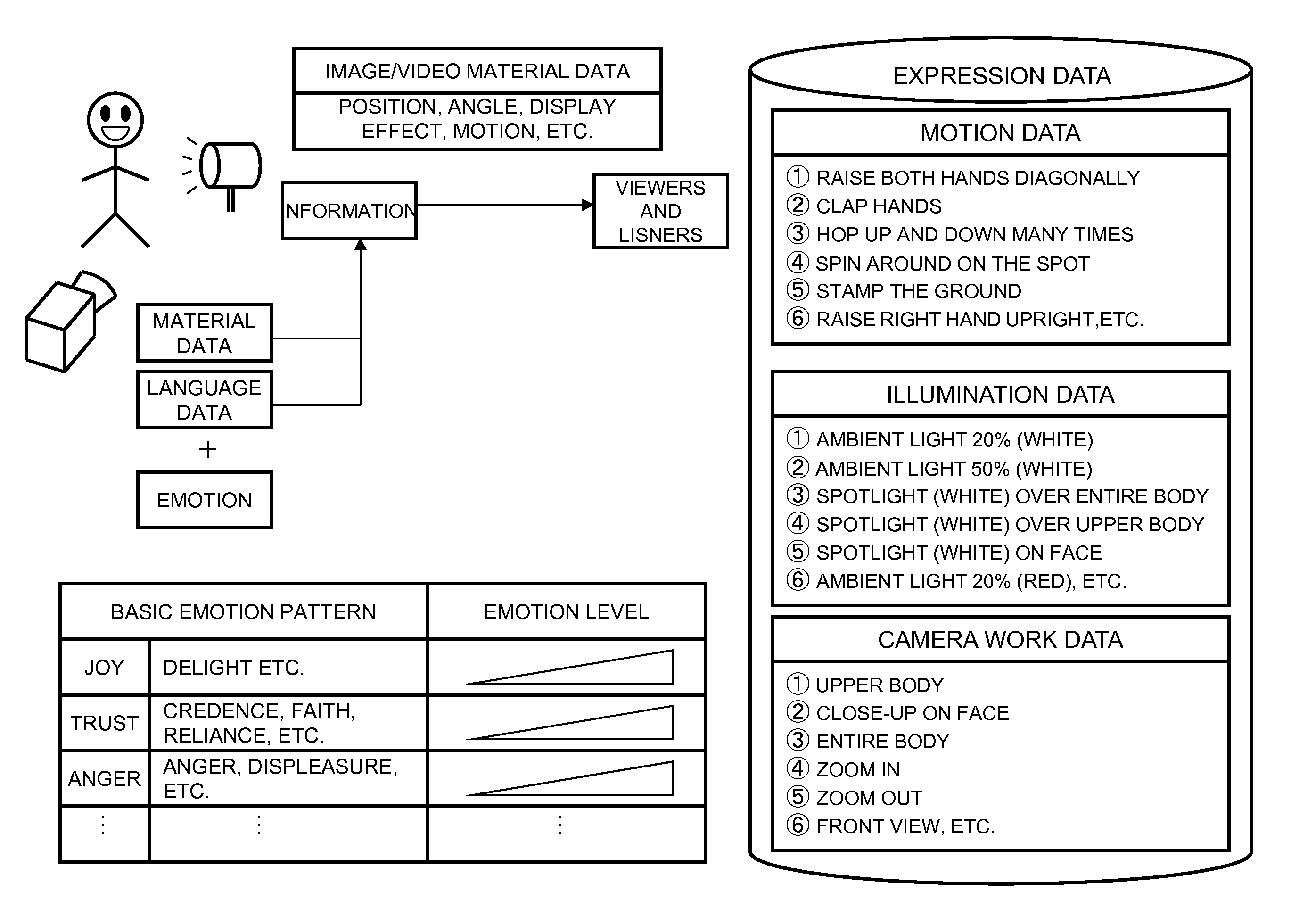

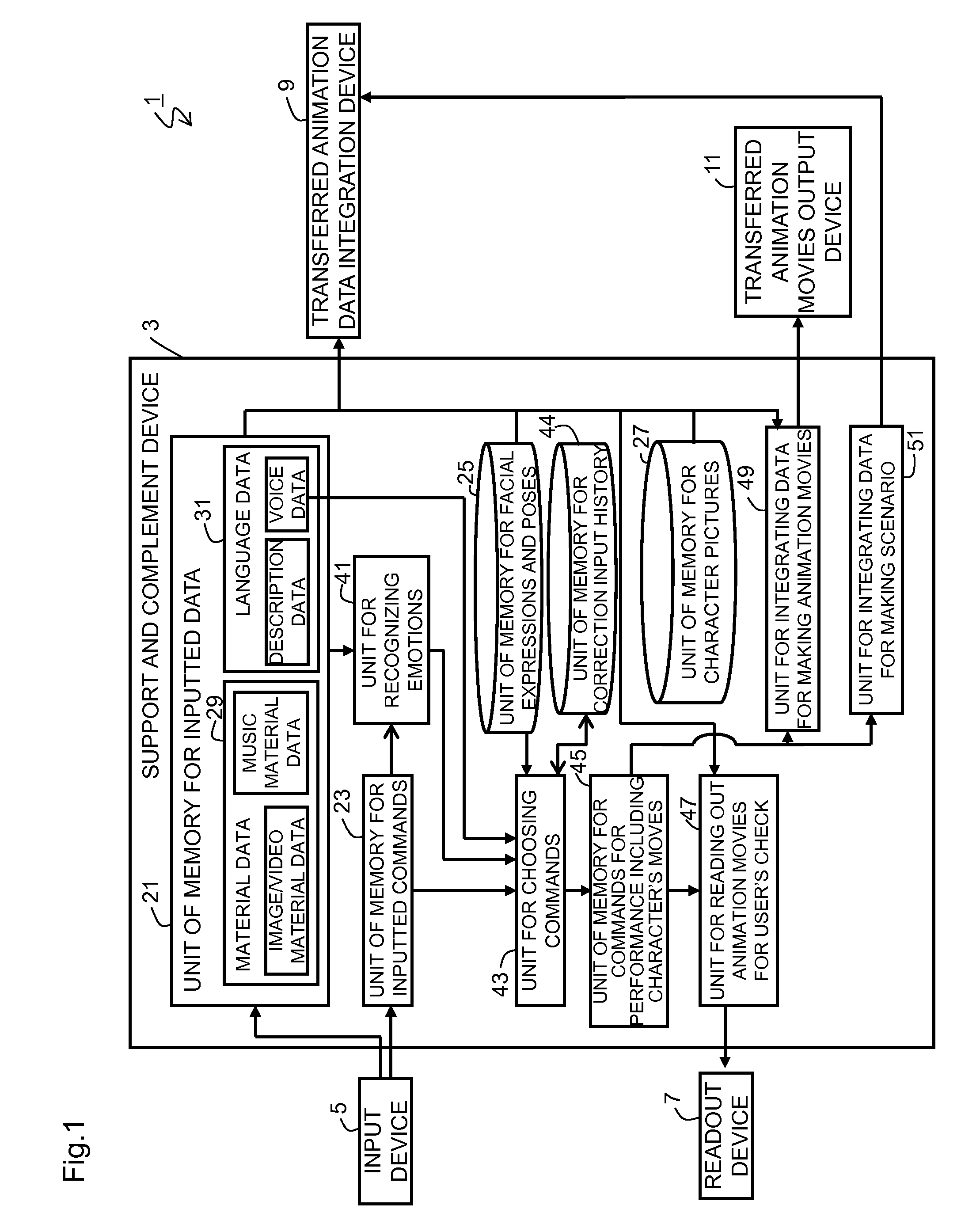

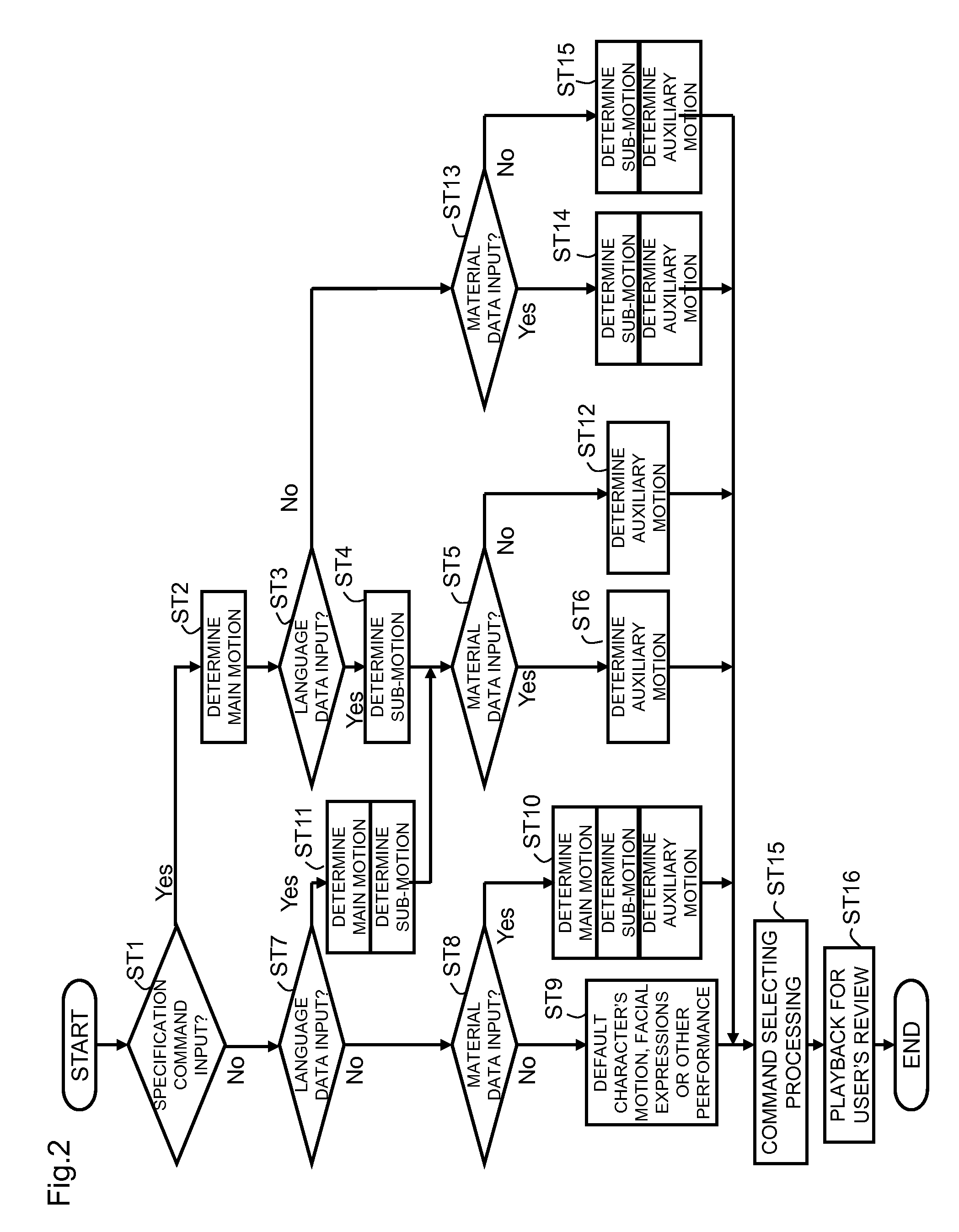

Support and complement device, support and complement method, and recording medium

Status: Active | Publication: US20140002464A1 | Tags: Reduce motion errors, Avoid error introduction, Speech analysis, Animation, User input

A support and complement device and related method and medium are provided that generate, simply and appropriately, a character's motion for introducing content. The user enters a command on an input device specifying the character's motion used to introduce the content, and the support and complement device complements this command input. The content includes material data and language data; the language data includes the voice data the character is to speak. An emotion recognition unit analyzes the material data and the language data and infers the emotion pattern the character should express. A command selection unit determines the character's motion from the inferred emotion pattern, the command, and the voice data, and generates a motion command. A preview unit instructs a readout device to display the proposed animation generated by the command selection unit so that the user can review it.

Owner:BOND

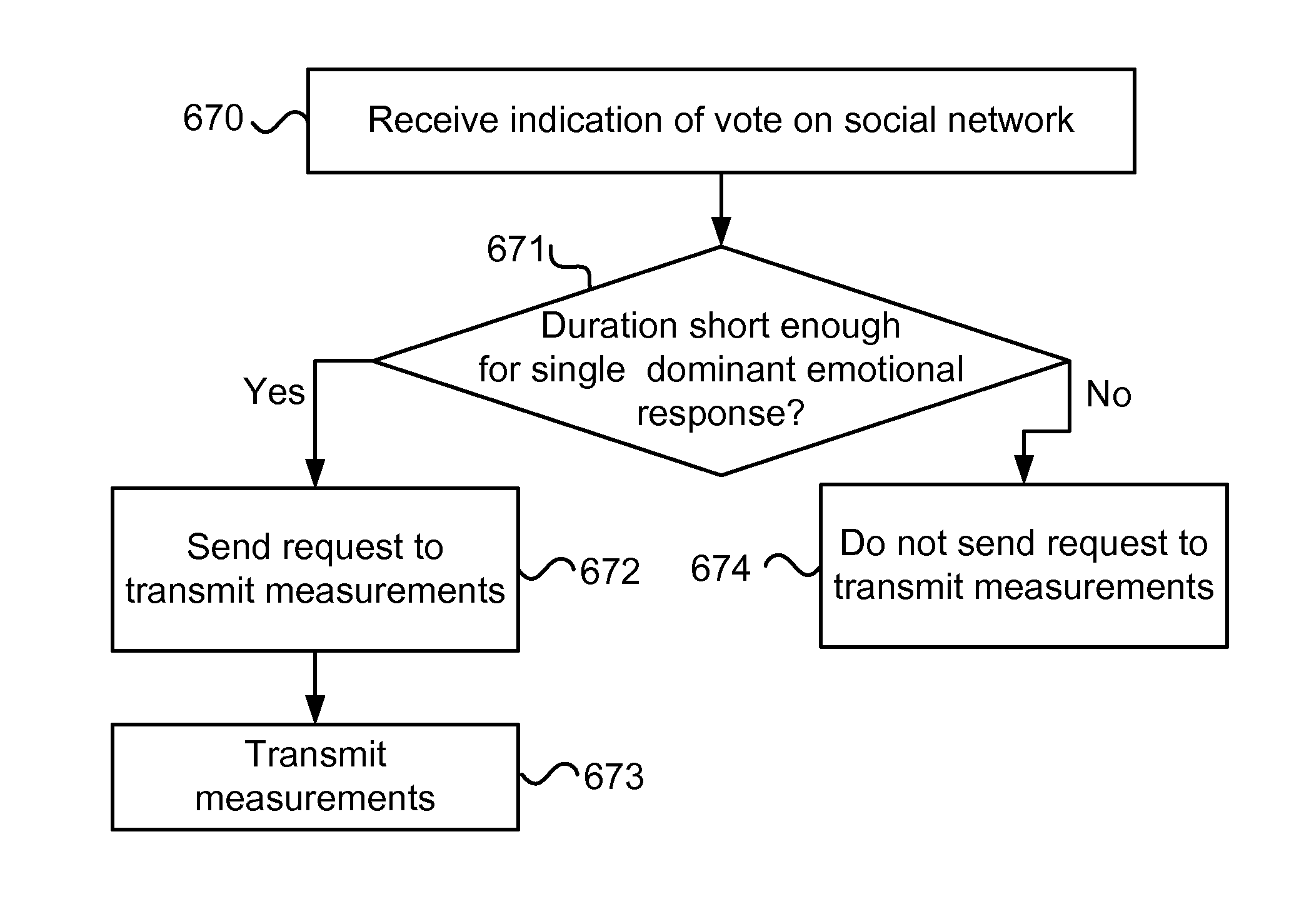

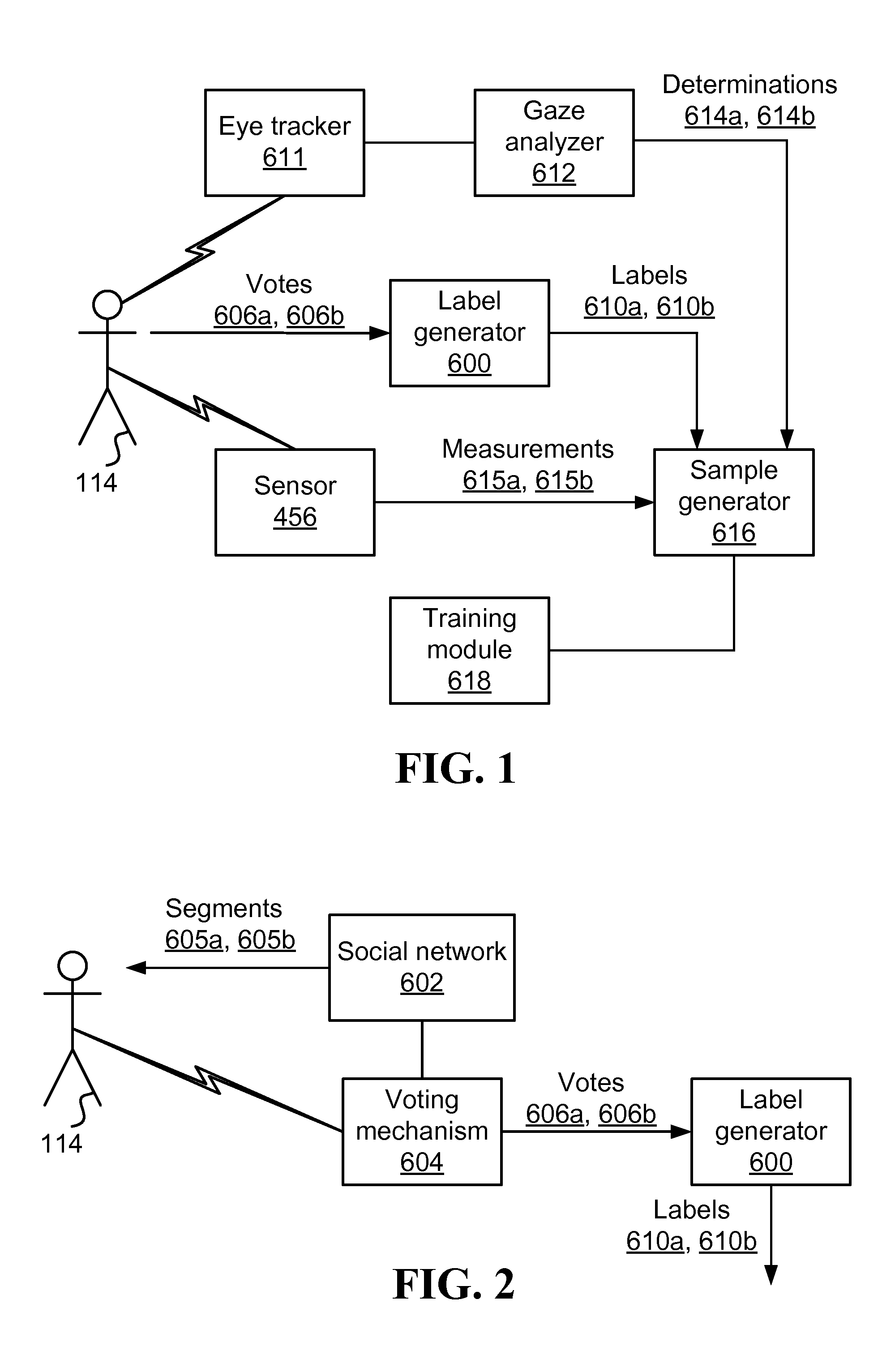

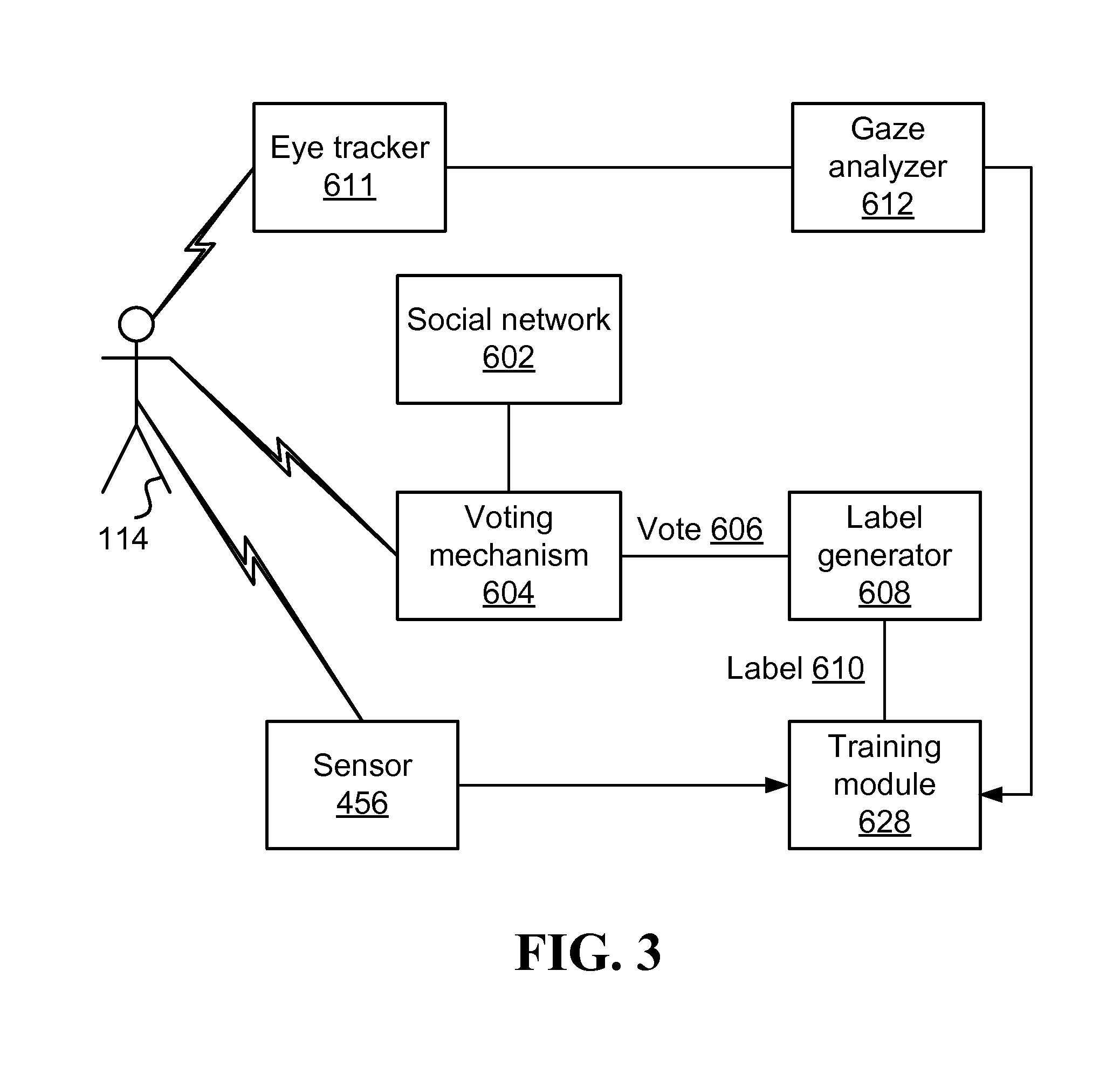

Collecting naturally expressed affective responses for training an emotional response predictor utilizing voting on a social network

Status: Active | Publication: US20150039405A1 | Tags: Affecting response, Voting apparatus, Data processing applications, Social network, Expressed emotion

Collecting naturally expressed affective responses for training an emotional response predictor. In one embodiment, a label generator is configured to receive a vote of a user on a segment of content consumed by the user on a social network. The label generator determines whether the user consumed the segment during a duration shorter than a predetermined threshold, and uses the vote to generate a label related to an emotional response to the segment. The predetermined threshold is selected such that, when consuming the segment in less time than the threshold, the user is likely to have a single dominant emotional response to it. A training module receives the label and a measurement of the user's affective response taken during that duration, and trains an emotional response predictor with the measurement and the label.

Owner:AFFECTOMATICS
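The label generator's filtering rule can be sketched as follows. The 10-second threshold, the mapping from votes to labels, and the names `Sample` and `make_sample` are hypothetical; training the emotional response predictor itself is out of scope here.

```python
from dataclasses import dataclass
from typing import List, Optional

THRESHOLD_S = 10.0   # hypothetical "predetermined threshold", in seconds
VOTE_LABELS = {"like": "positive", "dislike": "negative"}   # hypothetical mapping


@dataclass
class Sample:
    measurement: List[float]   # affective-response readings taken during consumption
    label: str


def make_sample(vote: str, consumed_s: float, measurement: List[float]) -> Optional[Sample]:
    """Turn a vote into a training label only when the segment was consumed
    quickly enough that a single dominant emotional response is likely."""
    if consumed_s >= THRESHOLD_S:
        return None
    label = VOTE_LABELS.get(vote)
    if label is None:
        return None
    return Sample(measurement, label)
```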

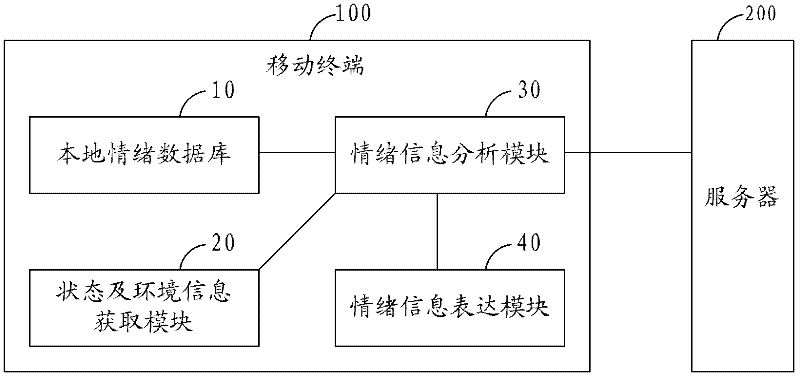

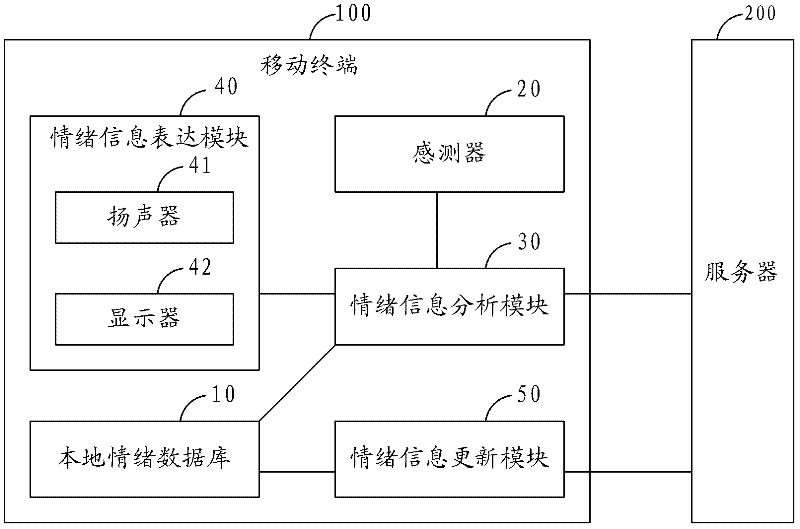

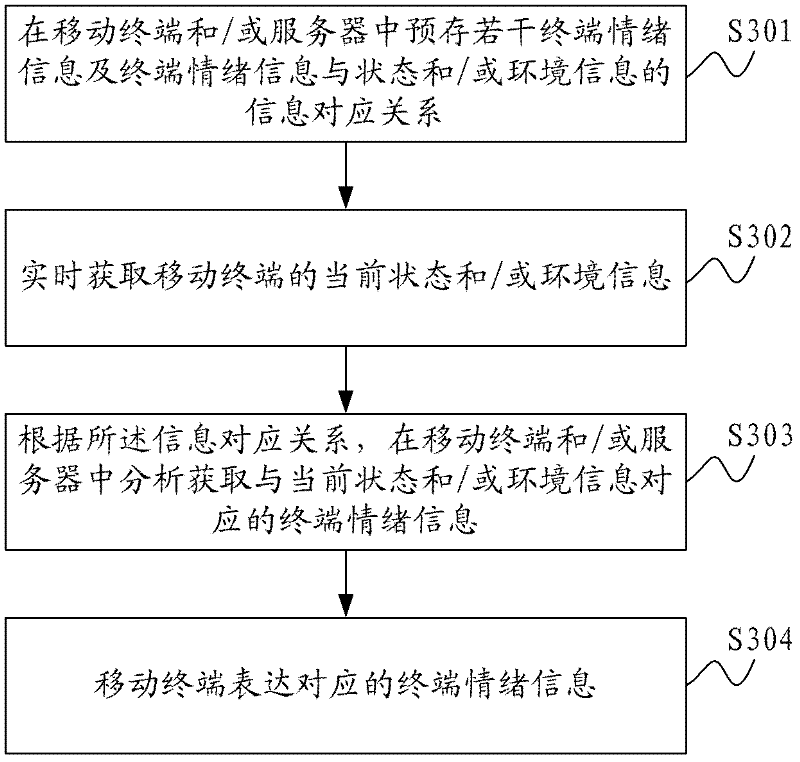

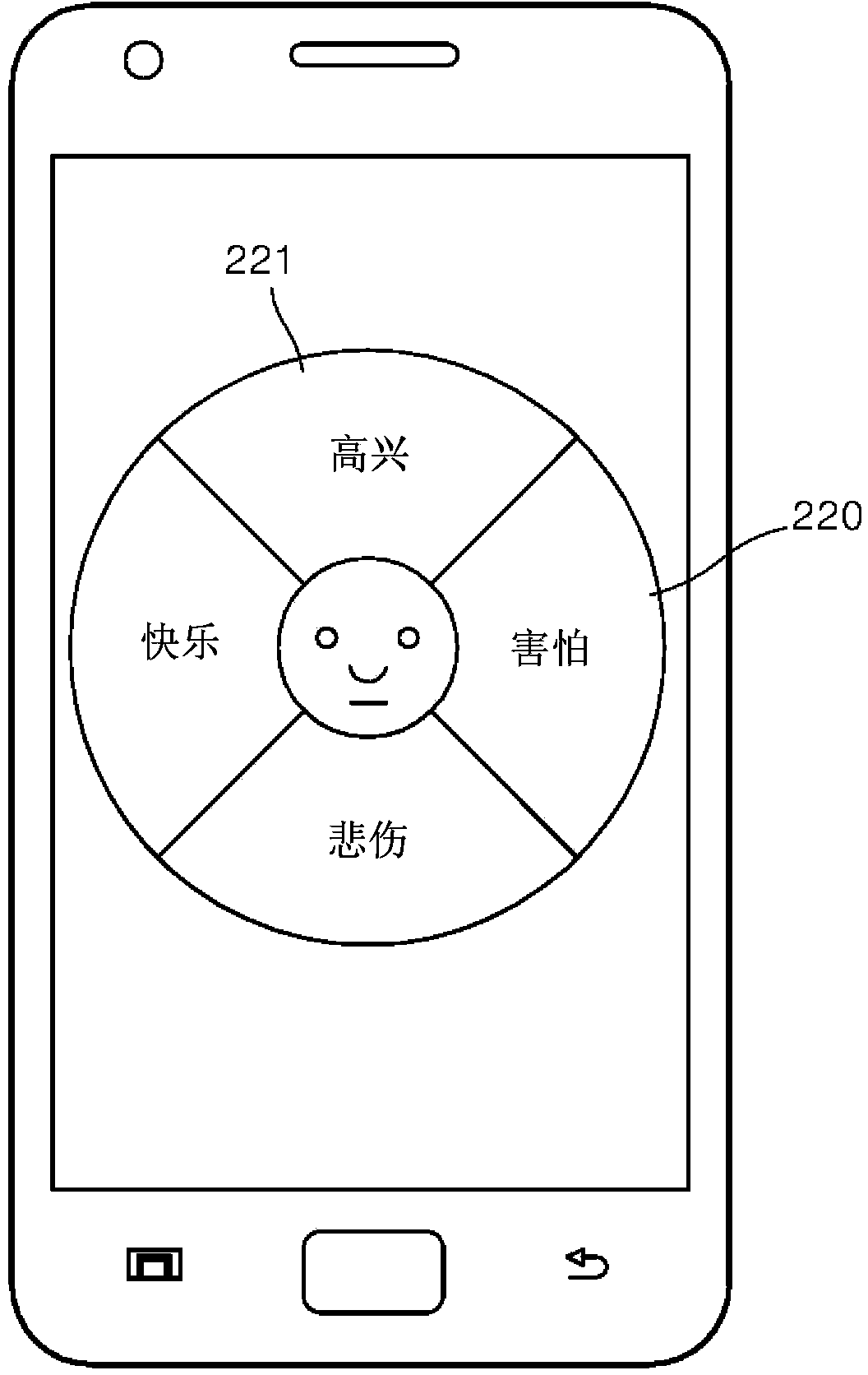

Emotion expression method and system of mobile terminal and mobile terminal

Status: Inactive | Publication: CN102438064A | Tags: Solve limited storage space, Improve experience, Substation equipment, Wireless communication services, Expressed emotion, Computer science

The invention belongs to the field of communication technology and provides an emotion expression method for a mobile terminal, comprising the steps of: pre-storing, in the mobile terminal and/or a server, multiple pieces of terminal emotion information and the correspondence between them and terminal state and/or environment information; obtaining the mobile terminal's current state and/or environment information in real time; determining, in the mobile terminal and/or server and according to that correspondence, the terminal emotion information that matches the current state and/or environment information; and having the mobile terminal express that emotion information. Correspondingly, the invention also provides an emotion expression system for a mobile terminal, and a mobile terminal. The invention can thus express emotion by sensing changes in the terminal's state and environment, and the expressed emotional content is rich.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD

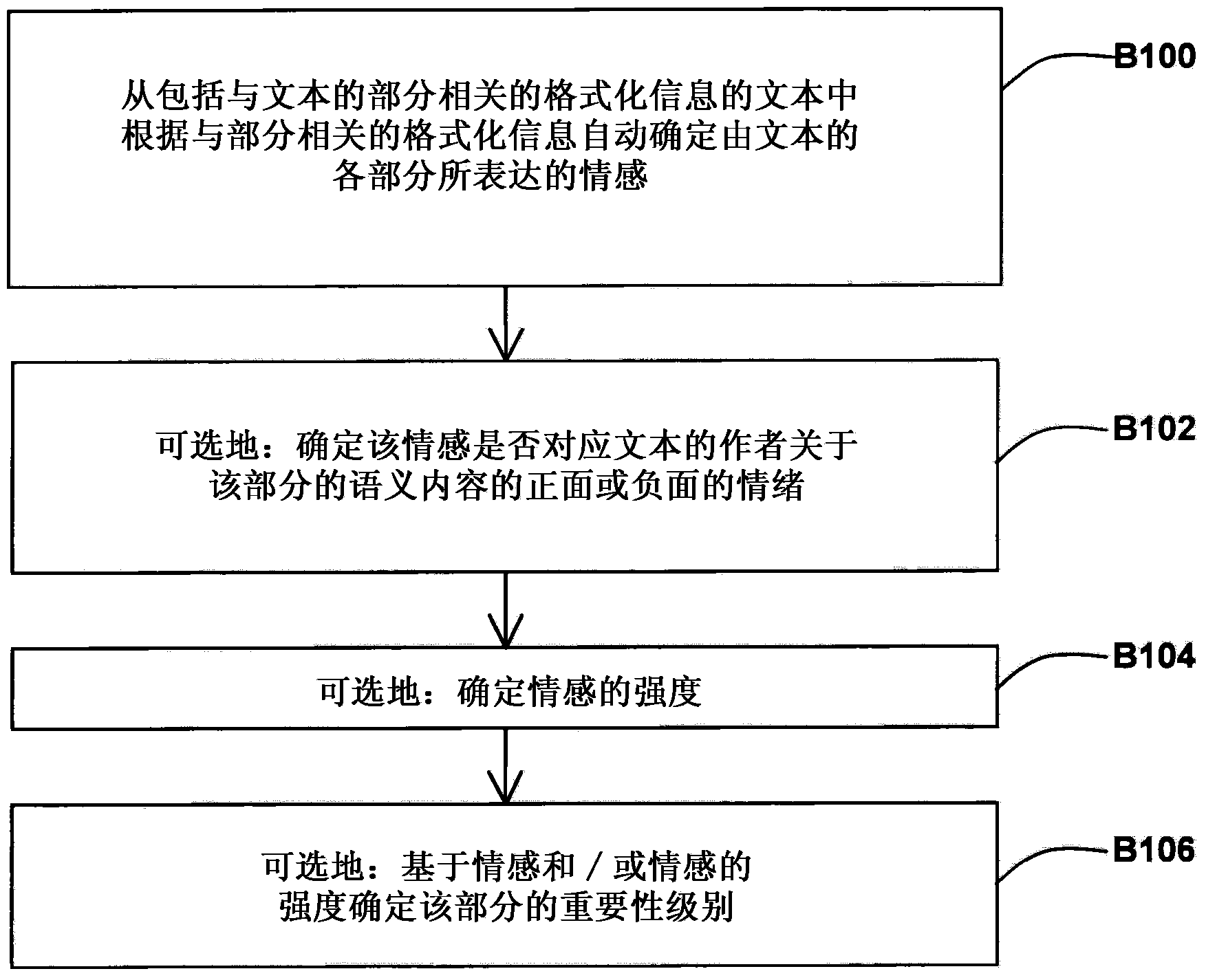

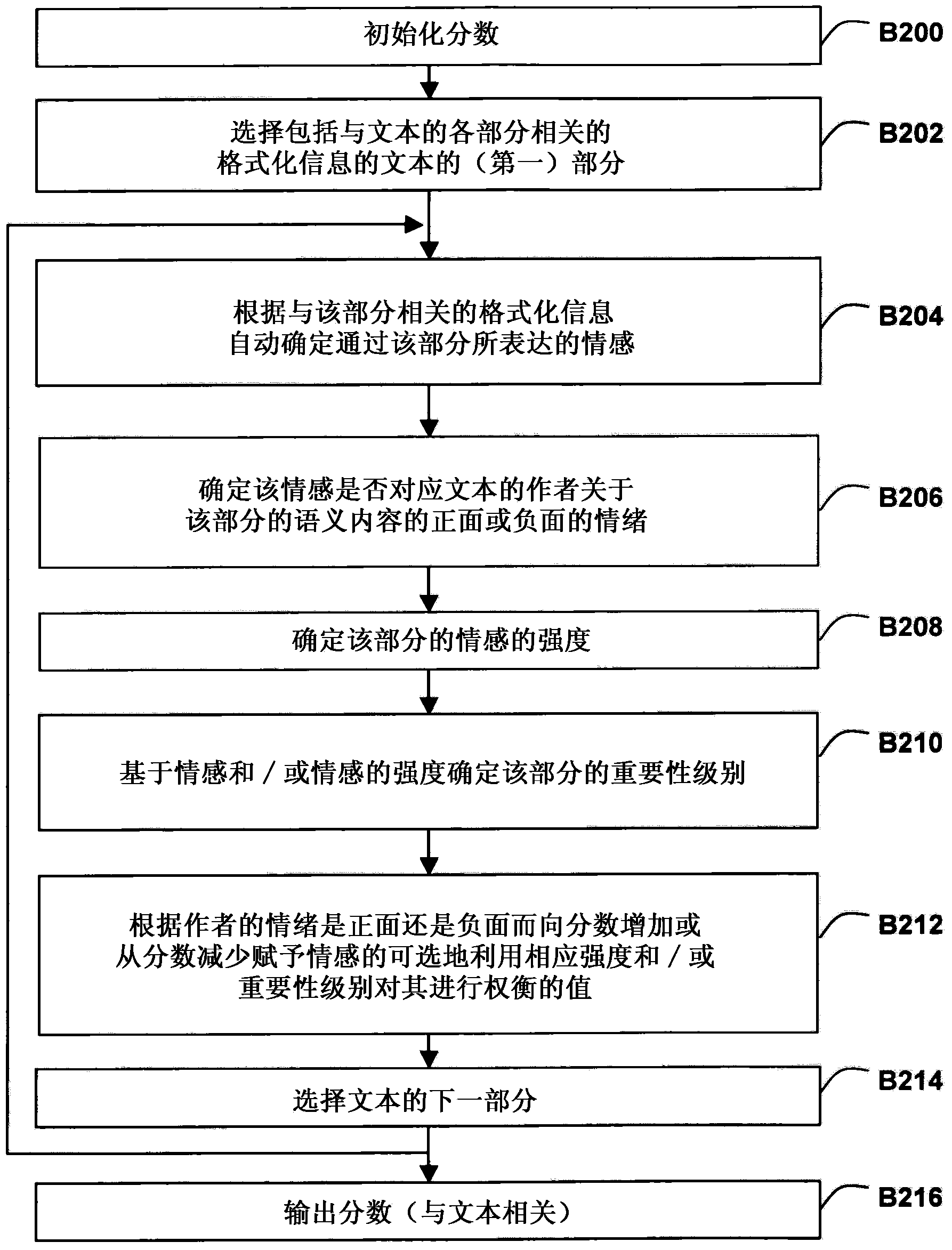

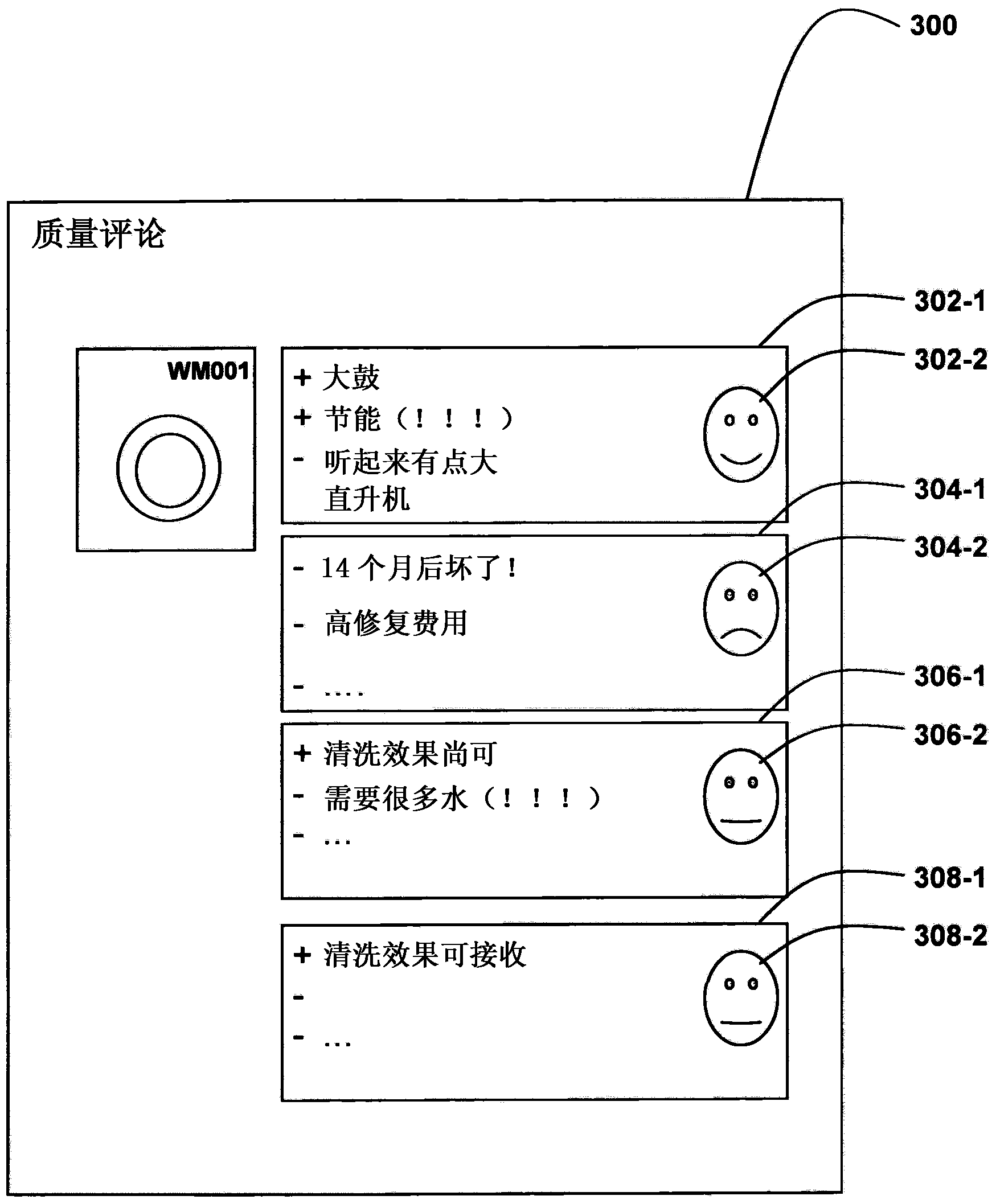

A method for determining a sentiment from a text

Method for determining a sentiment, including determining, from a text including formatting information related to parts of the text, a sentiment expressed by at least one of the parts, wherein the sentiment is determined automatically using a microprocessor and depends on the formatting information related to the at least one of the parts.

Owner:SONY CORP
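One way to make a sentiment depend on formatting is to let the formatting scale the intensity of a word-level score. This is an assumption for illustration only, since the abstract does not say how the formatting information is used; `POLARITY`, `EMPHASIS`, `Part`, and `part_sentiment` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set

POLARITY = {"love": 1, "great": 1, "hate": -1, "awful": -1}   # hypothetical lexicon
EMPHASIS = {"bold": 1.5, "italic": 1.2, "caps": 2.0}          # hypothetical factors


@dataclass
class Part:
    text: str
    formatting: Set[str] = field(default_factory=set)


def part_sentiment(part: Part) -> float:
    """Word-level polarity of a text part, scaled by the part's formatting."""
    base = sum(POLARITY.get(w.strip(".,!?").lower(), 0) for w in part.text.split())
    factor = 1.0
    for fmt in part.formatting:
        factor *= EMPHASIS.get(fmt, 1.0)
    return base * factor
```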

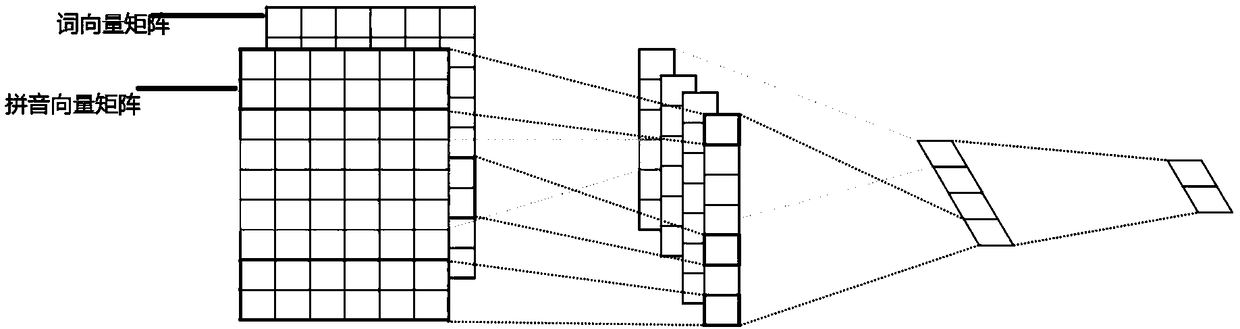

Language text processing method and device and storage medium

Status: Active | Publication: CN109271493A | Tags: Improve accuracy, Prevent deviation, Text database querying, Expressed emotion, Text processing

The invention discloses a language text processing method and device and a storage medium for improving the accuracy of analysis of the emotion polarity expressed by a language text. The method comprises: obtaining the language text to be processed; performing word segmentation on it to obtain first word segmentation objects, each including a segmented word and its corresponding phonetic alphabet representation; converting, according to the segmentation result, the first word segmentation objects into first word segmentation object vectors using a vector conversion model, which was trained on the first word segmentation objects in first sample data according to the distance between those objects and their emotion polarity labels; and predicting, from the first word segmentation object vectors, the emotion polarity type of the language text using an emotion polarity prediction model trained on second sample data that carries emotion polarity labels.

Owner:TENCENT TECH (SHENZHEN) CO LTD
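The pipeline in the abstract can be sketched as four steps: segment the text, attach pronunciation, vectorize, and predict. Everything concrete in this sketch is an assumption. The input is taken as already space-separated, so no real segmenter is used. The pinyin table and the 2-D vectors are hand-made stand-ins for the trained vector conversion model. A nearest-centroid classifier stands in for the unspecified emotion polarity prediction model:

```python
import math

# Hypothetical romanization table standing in for a segmenter's phonetic output.
PINYIN = {"很": "hen", "好": "hao", "差": "cha", "喜欢": "xihuan", "讨厌": "taoyan", "电影": "dianying"}

# Toy 2-D vectors for (word, pinyin) objects. A trained vector conversion model would
# place objects sharing a polarity label close together; these values are hand-made.
EMBED = {
    ("好", "hao"): (1.0, 0.2), ("喜欢", "xihuan"): (0.9, 0.1),
    ("差", "cha"): (-1.0, 0.1), ("讨厌", "taoyan"): (-0.9, 0.2),
    ("很", "hen"): (0.0, 0.5), ("电影", "dianying"): (0.0, 0.0),
}


def segment(text):
    """Word segmentation (assumed pre-split on spaces), pairing each word with its pinyin."""
    return [(w, PINYIN.get(w, "")) for w in text.split()]


def vectorize(objects):
    """Text vector = mean of its object vectors; unknown objects count as (0, 0)."""
    vecs = [EMBED.get(o, (0.0, 0.0)) for o in objects] or [(0.0, 0.0)]
    return tuple(sum(v[i] for v in vecs) / len(vecs) for i in range(2))


def train_centroids(samples):
    """Fit a nearest-centroid polarity model on (text, label) pairs."""
    grouped = {}
    for text, label in samples:
        grouped.setdefault(label, []).append(vectorize(segment(text)))
    return {
        label: tuple(sum(v[i] for v in vecs) / len(vecs) for i in range(2))
        for label, vecs in grouped.items()
    }


def predict(text, centroids):
    """Return the label whose centroid is closest to the text vector."""
    v = vectorize(segment(text))
    return min(centroids, key=lambda label: math.dist(v, centroids[label]))
```

Training on four labelled sentences such as `("电影 很 好", "positive")` and `("讨厌 电影", "negative")` is enough for this toy model to label "很 喜欢" as positive.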

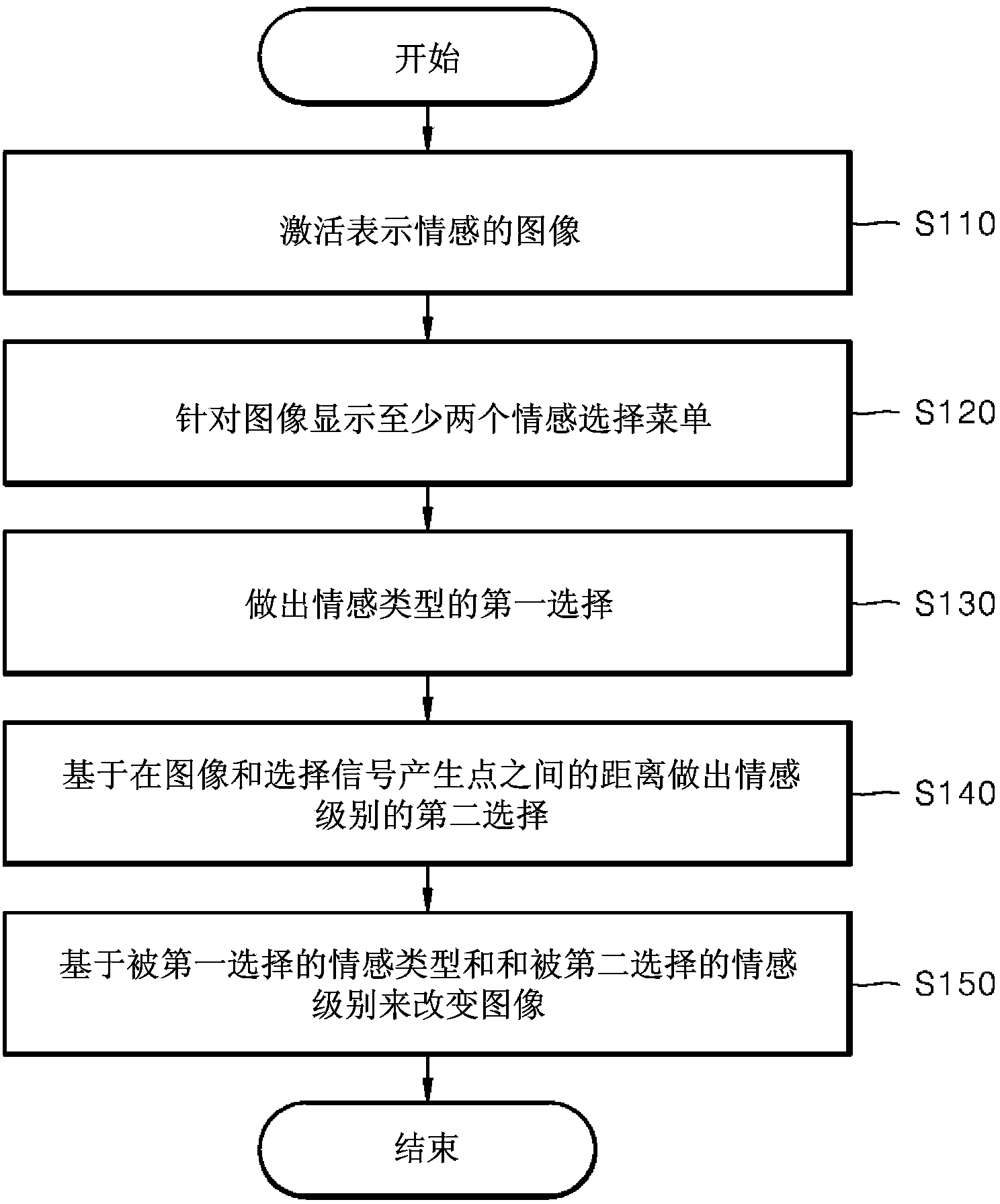

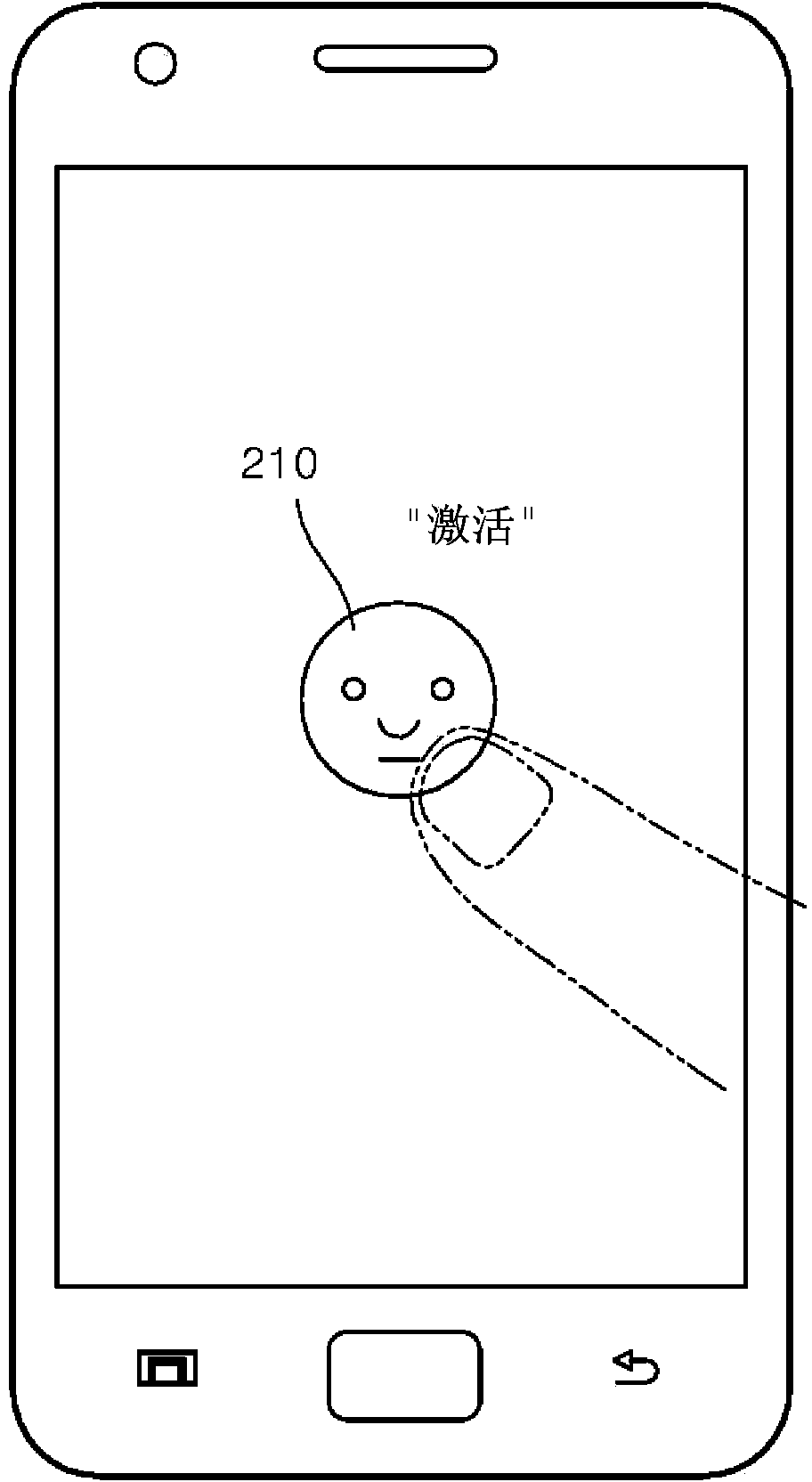

Method and system for expressing emotion during game play

Active | CN104252291A | Effective and fast emotional communication, Quick response, Video games, Input/output processes for data processing, Pattern recognition, Display device

The present disclosure provides a method for expressing an emotion during game play, and a control apparatus therefor, which may determine and transmit a user's emotion effectively and respond promptly to an opponent's emotion expression in a fast-paced game. The method comprises: displaying an emotion image received from an opponent terminal on the display of the terminal on which the game is being played; displaying at least two emotion menus with respect to the displayed emotion image; making a first selection of an emotion type based on a user selection signal for one of the displayed emotion menus; making a second selection of an emotional scale based on the distance between the display location where the user selection signal is generated and the emotion image; changing the emotion image based on the selected emotion type and emotional scale; and generating the changed emotion image as a response emotion image and transmitting it to the opponent terminal.

Owner:笑门信息科技有限公司 (Xiaomen Information Technology Co., Ltd.)
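The two-step selection might look like the sketch below. The emotion type comes from the chosen menu, and the emotional scale comes from the distance between the touch point and the emotion image. The abstract does not say whether a larger distance means a stronger or a weaker emotion. This sketch assumes that farther means stronger. It also assumes a hypothetical 300-pixel range split into five levels. Transmission to the opponent terminal is omitted:

```python
import math
from dataclasses import dataclass

MAX_DISTANCE = 300.0  # assumed touch range in pixels
LEVELS = 5  # assumed number of emotional-scale levels


@dataclass
class EmotionImage:
    emotion: str  # emotion type, e.g. "happy"
    scale: int  # emotional scale, 1..LEVELS
    center: tuple  # (x, y) position of the image on the display


def select_scale(touch, image_center):
    """Second selection: map touch-to-image distance to a scale (farther = stronger, assumed)."""
    fraction = min(math.dist(touch, image_center) / MAX_DISTANCE, 1.0)
    return max(1, math.ceil(fraction * LEVELS))


def respond(received, menus, selected_menu, touch):
    """Build the response emotion image from the user's menu choice and touch point."""
    emotion_type = menus[selected_menu]  # first selection: emotion type
    scale = select_scale(touch, received.center)
    return EmotionImage(emotion_type, scale, received.center)
```

Under these assumptions, touching the "happy" menu 150 pixels from the received image gives scale 3. The sketch reduces the opponent's image to a position, because only its location matters for the distance calculation.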

Neuroplasticity games for social cognition disorders

A training program is configured to systematically drive neurological changes that overcome social cognitive deficits. Various games challenge the participant to observe gaze directions in facial images, match faces seen from different angles, reconstruct face sequences, memorize social details about sequentially presented faces, identify smiling faces, find faces whose expression matches the target, identify emotions implicitly expressed by facial expressions, match pairs of similar facial expressions, match pairs of emotion clips and emotion labels, reconstruct sequences of emotion clips, identify the emotional prosody of progressively shorter sentences, match sentences with tags identifying the emotional prosody with which they are spoken, identify the social scenarios that best explain emotions expressed in video clips, answer questions about social interactions in multi-segment stories, choose the expressions and associated prosodies that best describe how a person would sound in given social scenarios, and/or understand and interpret progressively more complex social scenes.

Owner:POSIT SCI CORP

Method for expressing emotion in a text message

Inactive | US20110055440A1 | Facilitate dynamically indicating emotions, Image analysis, Natural language data processing, Animation, Expressed emotion

In one embodiment of the present invention, while composing a textual message, a portion of the textual message is dynamically indicated as having heightened emotional value. In one embodiment, this is indicated by depressing a key on a keyboard for a period longer than a typical debounce interval. While the key remains depressed, a plurality of text parameters for the character associated with the depressed key are accessed and one of the text parameters is chosen. Animation processing is then performed upon the textual message and the indicated portion of the textual message is visually emphasized in the animated text message.

Owner:SONY CORP +1
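The long-press mechanism can be sketched as follows, under stated assumptions. The abstract says only that the key is held "longer than a typical debounce interval". The 500 ms threshold, the list of text parameters, and the rule that the offered parameter advances every 400 ms of holding are all hypothetical. The animation step is reduced to tagging each character with its chosen parameter:

```python
from dataclasses import dataclass

HOLD_THRESHOLD_MS = 500  # assumed stand-in for "longer than a typical debounce interval"
CYCLE_MS = 400  # assumed: the offered parameter advances every 400 ms while held
TEXT_PARAMS = ["bold", "shake", "grow"]  # hypothetical text parameters


@dataclass
class KeyPress:
    char: str
    down_ms: int  # time the key was depressed
    up_ms: int  # time the key was released


def choose_param(held_ms):
    """Return the text parameter for a long press, or None for a normal keystroke."""
    if held_ms <= HOLD_THRESHOLD_MS:
        return None
    step = (held_ms - HOLD_THRESHOLD_MS) // CYCLE_MS
    return TEXT_PARAMS[step % len(TEXT_PARAMS)]


def compose(presses):
    """Pair each typed character with its emphasis parameter (None = not emphasized)."""
    return [(p.char, choose_param(p.up_ms - p.down_ms)) for p in presses]


def render(message):
    """Stand-in for animation processing: wrap emphasized characters in tags."""
    return "".join(f"<{param}>{ch}</{param}>" if param else ch for ch, param in message)
```

In this sketch, a quick tap on "h", a 600 ms hold on "i" and a 1000 ms hold on "!" render as `h<bold>i</bold><shake>!</shake>`.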


© 2025 PatSnap. All rights reserved.