Voice annotation method for a Chinese speech emotion database combined with electroglottography

An electroglottography and voice annotation technology, applied in speech analysis and related instruments, that addresses the lack of unified annotation standards and the complexity of emotions in emotional speech databases, and achieves comprehensive, detailed annotation information and accurate segmentation.


Embodiment Construction

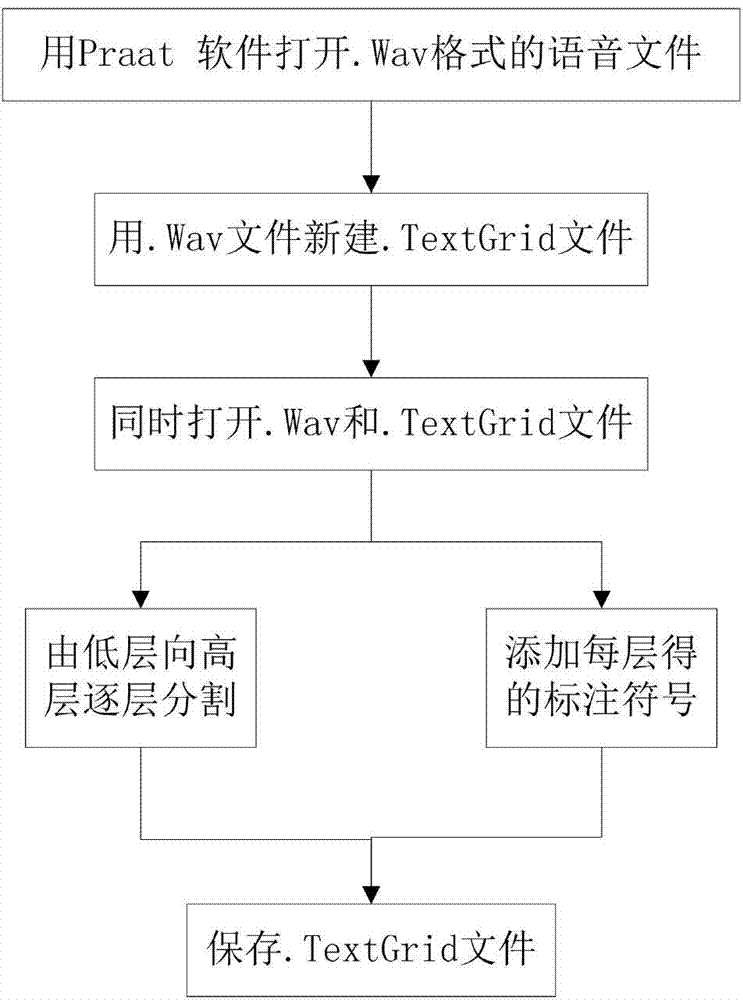

[0023] The technical solution of the present invention will be further described below in conjunction with the accompanying drawings.

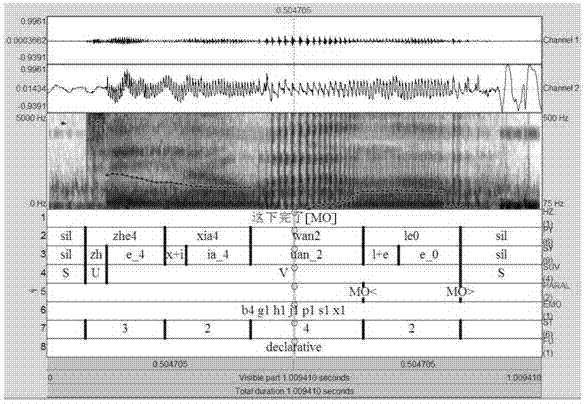

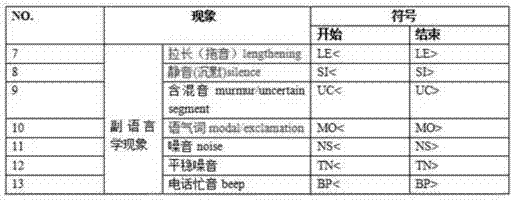

[0024] The present invention proposes a speech annotation method for a Chinese speech emotion database combined with electroglottography. In addition to the speech signal, the electroglottogram (EGG) signal is collected simultaneously. Because the electroglottogram directly reflects vocal-fold vibration, it avoids the noise interference introduced by vocal-tract modulation and sound propagation, thereby improving the accuracy of speech annotation. The main content of this annotation method is to mark eight layers of information for each utterance in parallel: the first layer, the text transcription layer, records the speaker's speech content and the corresponding paralinguistic information; the second layer, the syllable layer, marks the standard pinyin and tone of each syllable; the third layer, the consonant...
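The paragraph states that the EGG directly reflects vocal-fold vibration but does not say how it is turned into segment boundaries. A common way to exploit it is to locate glottal closure instants (GCIs) as peaks of the differentiated EGG (DEGG), then derive F0 and voiced regions from the GCI spacing. The sketch below shows that technique only; it is not the patent's own procedure. It assumes the usual polarity (a larger EGG value means more vocal-fold contact), and the function names and thresholds (`rel_threshold`, `min_period_s`, `max_period_s`) are hypothetical.

```python
from typing import List, Sequence, Tuple


def glottal_closure_instants(egg: Sequence[float], fs: float,
                             rel_threshold: float = 0.3,
                             min_period_s: float = 0.002) -> List[int]:
    """Return sample indices of candidate GCIs (hypothetical helper)."""
    # Differentiated EGG (DEGG) via first difference. With the assumed polarity,
    # glottal closure is a rapid rise in contact, i.e. a sharp positive DEGG peak.
    degg = [egg[i + 1] - egg[i] for i in range(len(egg) - 1)]
    if len(degg) < 3:
        return []
    threshold = rel_threshold * max(degg)
    if threshold <= 0:
        return []  # no rising edge at all: treat the span as unvoiced
    min_gap = max(1, round(min_period_s * fs))
    gcis: List[int] = []
    for i in range(1, len(degg) - 1):
        is_local_max = degg[i] > degg[i - 1] and degg[i] >= degg[i + 1]
        if degg[i] >= threshold and is_local_max:
            # Enforce a minimum spacing, i.e. a maximum plausible F0 of 1 / min_period_s.
            if not gcis or i - gcis[-1] >= min_gap:
                gcis.append(i)
    return gcis


def f0_from_gcis(gcis: Sequence[int], fs: float) -> List[float]:
    """Instantaneous F0 in Hz from successive GCI intervals."""
    return [fs / (b - a) for a, b in zip(gcis, gcis[1:])]


def voiced_segments(gcis: Sequence[int], fs: float,
                    max_period_s: float = 0.02) -> List[Tuple[float, float]]:
    """Group GCIs into voiced segments (start_s, end_s).

    A gap longer than max_period_s between consecutive GCIs ends a segment.
    """
    max_gap = max_period_s * fs
    segments: List[Tuple[float, float]] = []
    start = prev = None
    for g in gcis:
        if start is None:
            start = prev = g
        elif g - prev > max_gap:
            segments.append((start / fs, prev / fs))
            start = prev = g
        else:
            prev = g
    if start is not None:
        segments.append((start / fs, prev / fs))
    return segments
```

On a synthetic 100 Hz pulse train (`fs = 8000`, one sharp rise every 80 samples) the helpers return GCIs 80 samples apart, an F0 of 100 Hz and a single voiced segment. Real EGG recordings usually also need high-pass filtering and a polarity check before this step.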
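The eight layers are annotated in parallel on the same utterance, which maps naturally onto interval tiers sharing one time axis (as in a Praat TextGrid). Below is a minimal sketch under that assumption. The tier names `"text"` and `"syllable"` and the classes `Interval`, `Utterance` and `parse_syllable` are hypothetical. Only the layers the paragraph names are illustrated; the remaining layers are truncated in the source and are not modeled. The syllable parser assumes tone-numbered pinyin with 1 to 4 for the four tones and 5 for the neutral tone, and `v` as a stand-in for `ü`, which is a common convention rather than one the source specifies.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Interval:
    start: float  # seconds
    end: float    # seconds, exclusive
    label: str


@dataclass
class Utterance:
    """Hypothetical multi-layer record: each tier is a list of time intervals."""
    tiers: Dict[str, List[Interval]] = field(default_factory=dict)

    def add(self, tier: str, start: float, end: float, label: str) -> None:
        if end <= start:
            raise ValueError("interval end must be after its start")
        self.tiers.setdefault(tier, []).append(Interval(start, end, label))

    def at(self, t: float) -> Dict[str, Optional[str]]:
        """Label of every tier at time t (None where a tier has no interval)."""
        return {
            name: next((iv.label for iv in ivs if iv.start <= t < iv.end), None)
            for name, ivs in self.tiers.items()
        }


# Assumed syllable-label format: pinyin letters followed by a tone digit 1-5.
_SYLLABLE = re.compile(r"^([a-zü]+)([1-5])$")


def parse_syllable(label: str) -> Tuple[str, int]:
    """Split a tone-numbered pinyin label such as 'hao3' into ('hao', 3)."""
    m = _SYLLABLE.match(label.strip().lower())
    if m is None:
        raise ValueError(f"not a tone-numbered pinyin syllable: {label!r}")
    return m.group(1), int(m.group(2))
```

For example, after adding `("text", 0.0, 0.6, "你好")`, `("syllable", 0.0, 0.3, "ni3")` and `("syllable", 0.3, 0.6, "hao3")`, querying `at(0.4)` returns the transcription and the syllable `hao3` together. Keeping every layer on one time axis is what lets boundaries found in one layer (for example from the EGG) be checked against the others.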


© 2025 PatSnap. All rights reserved.