Aerial photography data target positioning method and system based on deep learning

A target positioning and deep learning technology, applied in the field of image processing and target detection for aviation systems, that addresses the problems of low accuracy and a low automatic detection rate, and achieves high accuracy, high target positioning accuracy, and good robustness.

Examples

Embodiment 1

[0048] As shown in Figure 1, a deep learning-based aerial photography data target positioning method includes the following steps:

[0049] S1. Obtain aerial photography data, and preprocess the aerial photography data;

[0050] S2. Input the aerial photography data into a pre-trained neural network, which outputs the target type and target position;

[0051] S3. Acquire the body orientation information, the image capture information, and the target position; calculate the target positioning information from the body orientation information, the image capture information, and the target position.
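Steps S1 to S3 can be read as a three-stage pipeline. The following is a minimal sketch of that flow only, not the patent's implementation: the names `Detection`, `preprocess`, and `locate_targets` are hypothetical, the normalization in `preprocess` is an assumed form of S1, and the detector (S2) and locator (S3) are passed in as plain callables so that any trained network or positioning formula could be substituted.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Pixel = Tuple[float, float]


@dataclass
class Detection:
    target_type: str   # S2 output: class label
    center_px: Pixel   # S2 output: target position in the image (pixels)


def preprocess(image: Sequence[Sequence[int]]) -> List[List[float]]:
    """S1 (assumed form): scale 8-bit pixel values to the range [0, 1]."""
    return [[value / 255.0 for value in row] for row in image]


def locate_targets(
    image: Sequence[Sequence[int]],
    detector: Callable[[List[List[float]]], List[Detection]],
    locator: Callable[[dict, dict, Pixel], Tuple[float, float]],
    body_info: dict,
    capture_info: dict,
) -> List[Tuple[str, Tuple[float, float]]]:
    """Run S1 -> S2 -> S3 and return (target type, position) pairs."""
    data = preprocess(image)                       # S1: preprocess
    detections = detector(data)                    # S2: type + pixel position
    return [                                       # S3: pixel -> position
        (d.target_type, locator(body_info, capture_info, d.center_px))
        for d in detections
    ]
```

Keeping the detector and locator as injected callables mirrors the separation in the steps above: S2 depends only on the image data, while S3 depends only on the orientation information, the capture information, and the detected pixel position.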

[0052] Further, the aerial photography data may be picture data and/or video data.

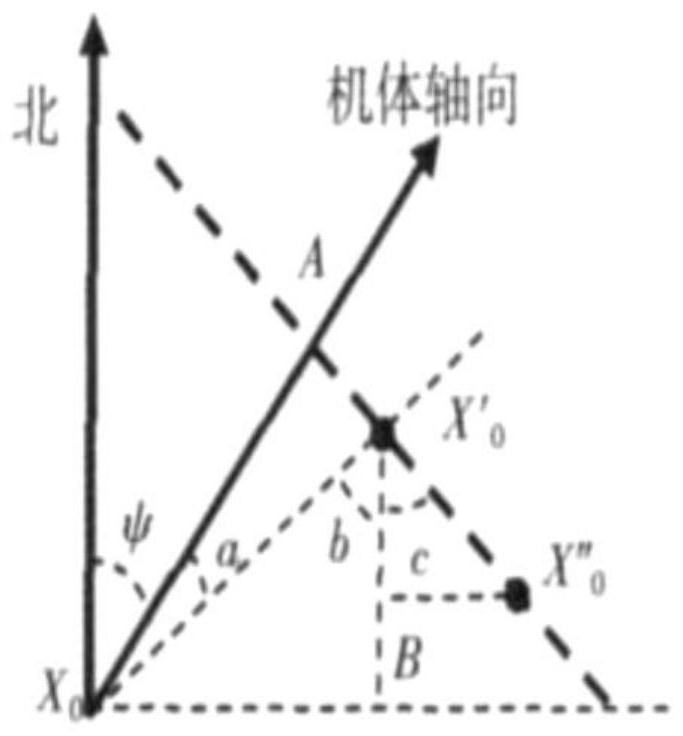

[0053] The body orientation information represents the attitude and position of the UAV body at the moment the current aerial data is captured, and can be obtained directly from the body's inertial navigation system; the image capture information represents the attitude and position of the shooting equipment...
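To illustrate how attitude, position, and a pixel location could be combined, here is a minimal sketch under strong assumptions that are not taken from the patent (whose formulas are elided in this excerpt): a pinhole camera fixed rigidly to the body and pointing straight down when pitch and roll are zero, the top of the image pointing forward, flat ground, and the standard aerospace yaw-pitch-roll rotation order. The names `Pose`, `pixel_to_ground_offset`, and the parameters `cx`, `cy`, `focal_px` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    yaw_deg: float     # heading, clockwise from north
    pitch_deg: float   # nose-up positive
    roll_deg: float    # right-wing-down positive
    altitude_m: float  # height above the (assumed flat) ground


def pixel_to_ground_offset(
    pose: Pose, u: float, v: float, cx: float, cy: float, focal_px: float
) -> Tuple[float, float]:
    """Return (north_m, east_m) of the ground point seen at pixel (u, v)."""
    # Viewing ray in the body frame (forward, right, down); assumes a
    # downward-looking camera whose image top points forward.
    x, y, z = -(v - cy) / focal_px, (u - cx) / focal_px, 1.0
    r, p, w = (math.radians(a) for a in (pose.roll_deg, pose.pitch_deg, pose.yaw_deg))
    # Rotate body -> north-east-down: roll, then pitch, then yaw.
    y, z = y * math.cos(r) - z * math.sin(r), y * math.sin(r) + z * math.cos(r)
    x, z = x * math.cos(p) + z * math.sin(p), -x * math.sin(p) + z * math.cos(p)
    x, y = x * math.cos(w) - y * math.sin(w), x * math.sin(w) + y * math.cos(w)
    if z <= 0:
        raise ValueError("viewing ray does not intersect the ground")
    scale = pose.altitude_m / z  # stretch the ray until it reaches the ground
    return x * scale, y * scale
```

With zero attitude angles the image center maps to the point directly below the body; a nose-up pitch moves that point forward by the altitude times the tangent of the pitch angle. A gimballed camera would need its own attitude composed with the body attitude, which this sketch omits.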

Embodiment 2

[0086] This embodiment provides a deep learning-based aerial photography positioning system, which may include:

- Raw data management module: collects and manages the aerial photography data, such as images and videos, used for model training; provides storage, query, access, and extraction functions; and can support files in .jpg, .png, .tif, .avi, .mp4, .tfw, .xml and other formats.
- Data enhancement and expansion module: augments and expands aerial data such as images and videos.
- Data processing and feature extraction module: performs data analysis, preprocessing, and auxiliary network training to achieve feature extraction.
- Feature data management module: stores and manages the feature model data used for model training.
- Model training module: builds the deep learning network layers and performs training optimization.
- Target recognition and detection output module: uses the feature data and the deep convolutional neural network to complete target recognition, po...
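As a small illustration of the raw data management module's file handling, the sketch below sorts incoming files by the extensions named above. The grouping into image, video, and sidecar files is an assumption made here for illustration (.tfw is a TIFF world file and .xml commonly carries annotations or metadata); the names `SUPPORTED` and `classify_file` are hypothetical, and the source notes that other formats may also be supported.

```python
from pathlib import PurePath
from typing import Optional

# Extensions listed in the embodiment; the category labels are assumed.
SUPPORTED = {
    ".jpg": "image", ".png": "image", ".tif": "image",
    ".avi": "video", ".mp4": "video",
    ".tfw": "sidecar", ".xml": "sidecar",
}


def classify_file(path: str) -> Optional[str]:
    """Return the assumed category for a file, or None if it is not listed."""
    return SUPPORTED.get(PurePath(path).suffix.lower())
```

Matching on the lower-cased suffix means files such as `IMG_001.JPG` are accepted, while unlisted formats return `None` and can be rejected or logged by the caller.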

Embodiment 3

[0088] To test the practical effect of the present invention, a test was carried out with Google Maps simulation software. The attitude of the drone is shown in Figure 4, and the parameter information of the UAV is shown in Figure 5. The drone was set to latitude 30.63080924 and longitude 104.08204021, with a heading of 10° east of north, a pitch angle of 5° from vertical, a roll angle of 0°, and a cruising altitude of 3000 meters. A university track and field stadium was selected as the target to be detected, and a tool was used to export the aerial image together with the body orientation information and image capture information. The exported aerial image is 1280*720 pixels, the target center pixel is (649, 463), and the target width and height are 44*71 pixels. Using the method proposed by the present invention, the calculated latitude and longitude of the image center point are: latitude 30.63013788, longitude 104.08251741. ...
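The figures quoted in this test can be checked with simple arithmetic. The sketch below does not reproduce the patent's positioning method; it only converts the reported drone position and the reported image center point into a north/east displacement in metres, and computes the target's pixel offset from the image center. It assumes a spherical Earth with a mean radius of 6,371 km and a small-distance (equirectangular) approximation; `offset_m` is a hypothetical helper name.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def offset_m(lat0: float, lon0: float, lat1: float, lon1: float):
    """North/east displacement in metres from point 0 to point 1.

    Equirectangular approximation, adequate for offsets of a few kilometres.
    """
    north = math.radians(lat1 - lat0) * EARTH_RADIUS_M
    mean_lat = math.radians((lat0 + lat1) / 2)
    east = math.radians(lon1 - lon0) * EARTH_RADIUS_M * math.cos(mean_lat)
    return north, east


# Values quoted in the embodiment.
drone = (30.63080924, 104.08204021)
image_center = (30.63013788, 104.08251741)

north, east = offset_m(*drone, *image_center)
distance = math.hypot(north, east)

# Pixel offset of the target center from the center of the 1280*720 image.
width, height = 1280, 720
target_px = (649, 463)
dx = target_px[0] - width / 2   # positive: right of center
dy = target_px[1] - height / 2  # positive: below center

print(f"image center vs drone: north={north:.1f} m, east={east:.1f} m, "
      f"distance={distance:.1f} m")
print(f"target vs image center: dx={dx:.0f} px, dy={dy:.0f} px")
```

Under these approximations the reported image center lies roughly 75 m south and 46 m east of the drone position, and the target center sits 9 pixels to the right of and 103 pixels below the image center.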
