Multi-machine multi-card hybrid parallel asynchronous training method for convolutional neural network

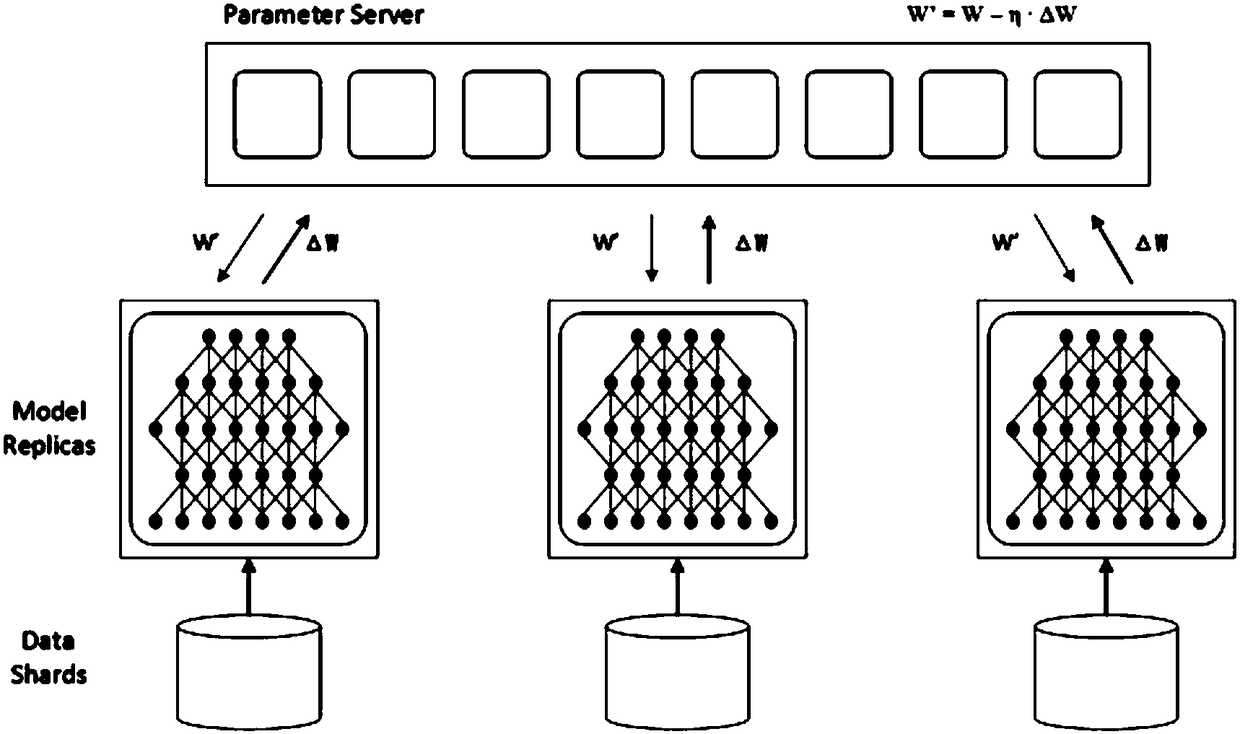

A multi-machine multi-card technology for convolutional neural networks, applied in neural learning methods, biological neural network models, neural architectures, etc. It addresses the problems of long synchronization waiting times and low GPU utilization, reducing waiting time, improving training efficiency, and allowing the number of GPUs to be increased.


Embodiment

[0047] The multi-machine multi-card hybrid parallel asynchronous training method for convolutional neural networks proposed in this embodiment comprises the following steps:

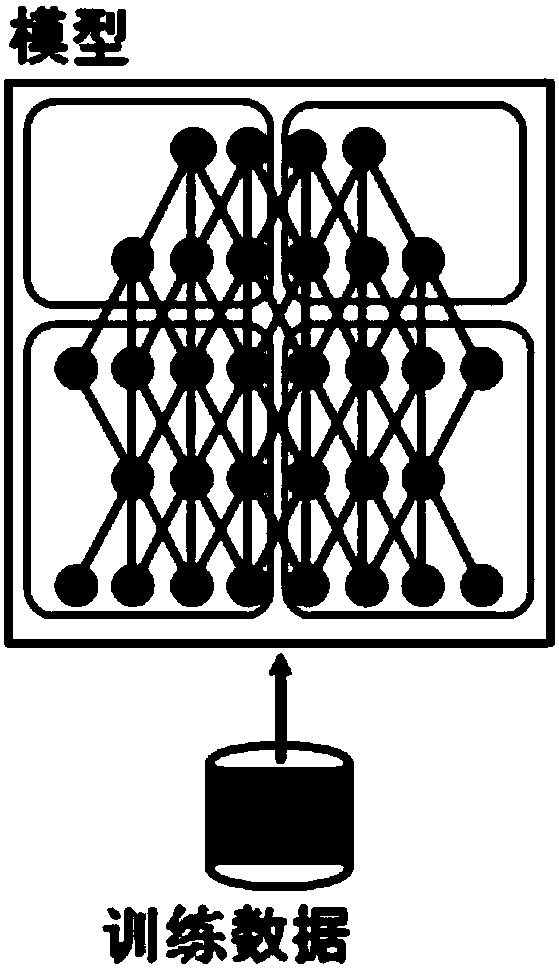

[0048] Step S1: Build a CNN model, set the training parameters, and verify that every machine and every GPU runs normally and that network communication is free of faults.
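Step S1 does not list the training parameters. The sketch below, in which every field name and default value is hypothetical, shows one way to bundle them and run a quick consistency check before launch. It does not probe machines, GPUs, or network links, which would need framework- or cluster-specific tooling. The 2 machines × 2 GPUs layout is an assumption chosen only to match the 4 GPUs used in Step S2, and the even-split rule is an assumption of this sketch, not a requirement stated in the source.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrainingConfig:
    # All field names and default values are hypothetical placeholders.
    num_machines: int = 2
    gpus_per_machine: int = 2
    batch_size_per_gpu: int = 32
    learning_rate: float = 0.01
    num_classes: int = 1000  # output width of the last (model-parallel) layer

    @property
    def total_gpus(self) -> int:
        """Total number of GPUs across all machines."""
        return self.num_machines * self.gpus_per_machine

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the config looks consistent."""
        problems = []
        if self.total_gpus < 1:
            problems.append("at least one GPU is required")
        if self.batch_size_per_gpu <= 0:
            problems.append("batch_size_per_gpu must be positive")
        if self.learning_rate <= 0:
            problems.append("learning_rate must be positive")
        # Assumption of this sketch: the last layer is split into equal slices, one per GPU.
        if self.total_gpus >= 1 and self.num_classes % self.total_gpus != 0:
            problems.append("num_classes must divide evenly across GPUs")
        return problems


config = TrainingConfig()
print(config.total_gpus)  # 4
print(config.validate())  # []
```

Collecting the parameters in one object makes it easy to reject an inconsistent setup (for example, an output width that cannot be sliced evenly) before any machine starts training.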

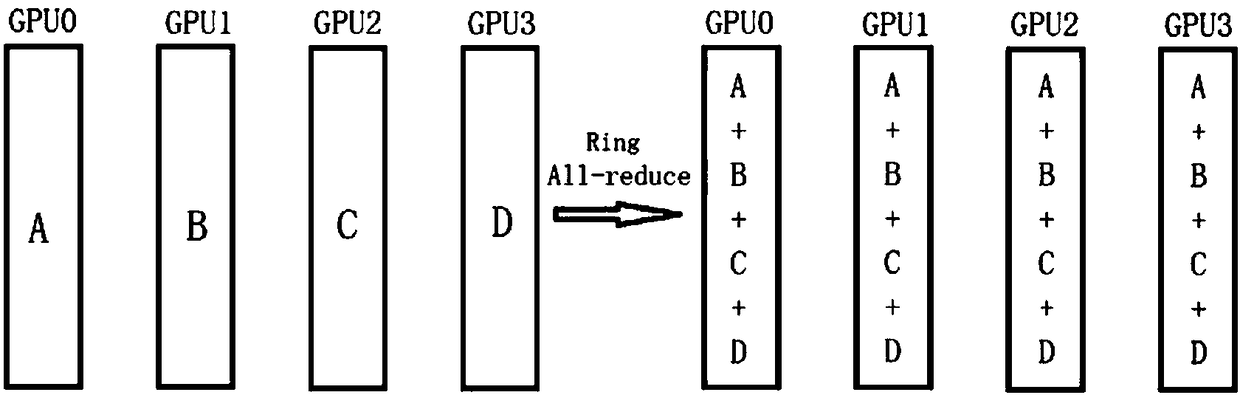

[0049] Step S2: Switch the last layer to model parallelism. The complete model of this layer is divided into 4 slices, each computed on one of 4 GPUs, so the parameters of this layer no longer need to be communicated between GPUs. In traditional GPU parallel training the last layer is also data parallel; the present invention instead uses the GPU All-gather algorithm so that every GPU obtains all the data information (in this embodiment, all image features). Each GPU then trains its own model slice on the complete data, ensuring that every part of the model learns from all the data.
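The idea behind Step S2 can be checked numerically. The NumPy sketch below simulates the 4 GPUs in a single process: each simulated GPU holds a shard of the batch's features, an All-gather gives every GPU the full feature matrix, and each GPU multiplies it by its own column slice of the last layer's weight. The helper names (`all_gather`, `split_last_layer`, `model_parallel_forward`) and all sizes are hypothetical, and a real system would use a collective-communication library rather than in-process copies.

```python
import numpy as np

NUM_GPUS = 4  # the embodiment splits the last layer across 4 GPUs


def all_gather(local_features):
    """Simulated All-gather: every 'GPU' receives the concatenation of all shards."""
    gathered = np.concatenate(local_features, axis=0)
    return [gathered.copy() for _ in local_features]


def split_last_layer(weight, num_slices):
    """Split the last (fully connected) layer's weight column-wise into equal slices."""
    return np.split(weight, num_slices, axis=1)


def model_parallel_forward(local_features, weight_slices):
    """Each simulated GPU multiplies the gathered features by its own weight slice."""
    gathered_per_gpu = all_gather(local_features)
    return [feats @ w for feats, w in zip(gathered_per_gpu, weight_slices)]


rng = np.random.default_rng(0)
batch_per_gpu, feat_dim, num_classes = 8, 16, 12  # hypothetical sizes
local_features = [rng.standard_normal((batch_per_gpu, feat_dim)) for _ in range(NUM_GPUS)]
full_weight = rng.standard_normal((feat_dim, num_classes))

weight_slices = split_last_layer(full_weight, NUM_GPUS)
logit_slices = model_parallel_forward(local_features, weight_slices)

# Reassembling the per-GPU output slices should match the unsplit layer's output.
full_logits = np.concatenate(local_features, axis=0) @ full_weight
reassembled = np.concatenate(logit_slices, axis=1)
print(reassembled.shape)  # (32, 12)
print(np.allclose(reassembled, full_logits))  # True
```

Concatenating the per-GPU outputs reproduces the unsplit layer up to floating-point rounding, which illustrates why each slice can be trained on all the data without exchanging that layer's parameters. The sketch covers only the forward pass of a linear layer; a softmax loss over the split class dimension would additionally need cross-GPU reductions, and the backward pass is omitted.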

[0050] The further implemen...

