Design method for Cache control unit of protocol processor

A design method for the Cache control unit of a protocol processor, applied in the computer field. It addresses reduced protocol-processing efficiency, data that cannot be kept synchronized, and increased system latency, with the aim of fully pipelined processing, higher throughput, and less blocking.

Embodiment Construction

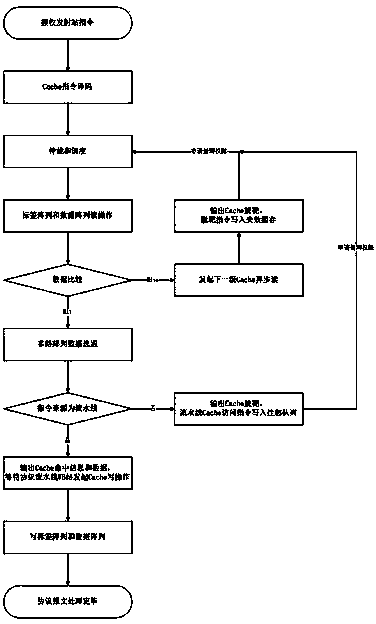

[0031] The method of the present invention is described in detail below with reference to the accompanying drawings.

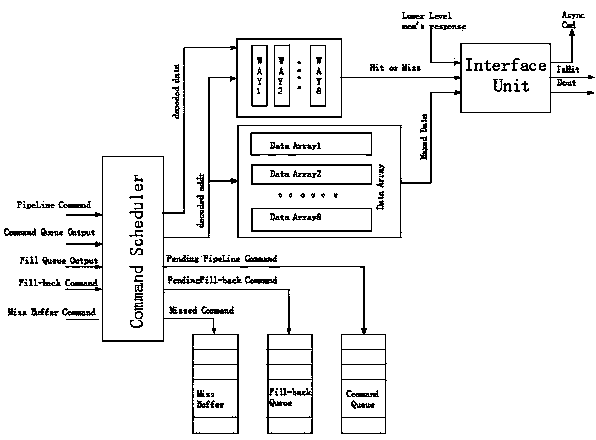

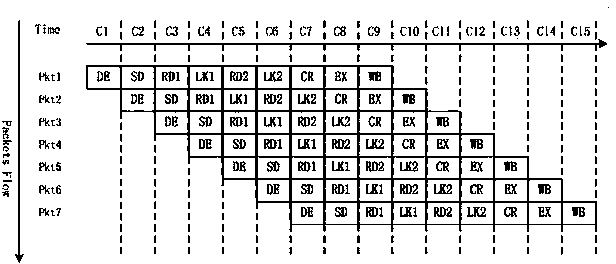

[0032] Figure 1 shows the functional module division of the Cache control unit for the protocol processing pipeline. The scheduling module (Command Scheduler) receives Cache access instructions from five different sources (pipeline access command, pipeline command pending queue, backfill command, backfill command pending queue, miss buffer command) and arbitrates and schedules them. The miss buffer module (Miss Buffer) buffers instructions that miss in the Cache; they wait there until the refill reactivates them. The fill-back queue (Fill-back Queue) stores backfill commands that have not yet been granted processing rights; they wait until arbitration succeeds and are then reprocessed. The tag array (Tag Array) performs the lookup for the multi-way set-associative Cache and computes the hit information and the way-select signal of the ...
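The roles of the Command Scheduler and the Tag Array described above can be illustrated with a minimal Python sketch. It is a behavioral model for illustration only, not the patented design: the source names, the fixed-priority order among the five sources, and the `tag_lookup` helper are all assumptions, since the excerpt does not state the arbitration policy or the tag array layout.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical names for the five command sources listed in the text.
# The priority order (first entry wins) is an assumption; the excerpt
# does not say how the scheduler ranks its sources.
SOURCES = (
    "fill_back",          # backfill command
    "fill_back_pending",  # backfill command pending queue
    "miss_buffer",        # miss buffer command reactivated by a refill
    "pipeline_pending",   # pipeline command pending queue
    "pipeline",           # new pipeline access command
)


@dataclass
class CommandScheduler:
    """Toy arbiter: one FIFO per source, one grant per call."""
    queues: dict = field(default_factory=lambda: {s: deque() for s in SOURCES})

    def push(self, source, command):
        self.queues[source].append(command)

    def arbitrate(self):
        """Grant the oldest command of the highest-priority non-empty source.

        Returns (source, command), or None when every queue is empty.
        """
        for source in SOURCES:
            if self.queues[source]:
                return source, self.queues[source].popleft()
        return None


def tag_lookup(tag_array, set_index, tag):
    """Compare a tag against every way of one set.

    tag_array[set_index] is a list of (valid, stored_tag) pairs, one per way.
    Returns (hit, way_select), where way_select is a one-hot list of booleans.
    """
    way_select = [valid and stored == tag for valid, stored in tag_array[set_index]]
    return any(way_select), way_select
```

For example, if a pipeline access and a backfill command are both waiting, `arbitrate()` grants the backfill command first under the assumed order; a real design could just as well use round-robin or age-based arbitration.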
