Video frame insertion method based on spatio-temporal joint attention

A spatio-temporal joint attention technique for video frame insertion, applied in the fields of video processing, slow-motion generation, and video post-processing. It addresses problems such as motion-edge artifacts and inaccurate frame insertion results, improving accuracy and frame insertion speed while keeping the number of network parameters low.


Embodiment Construction

[0029] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

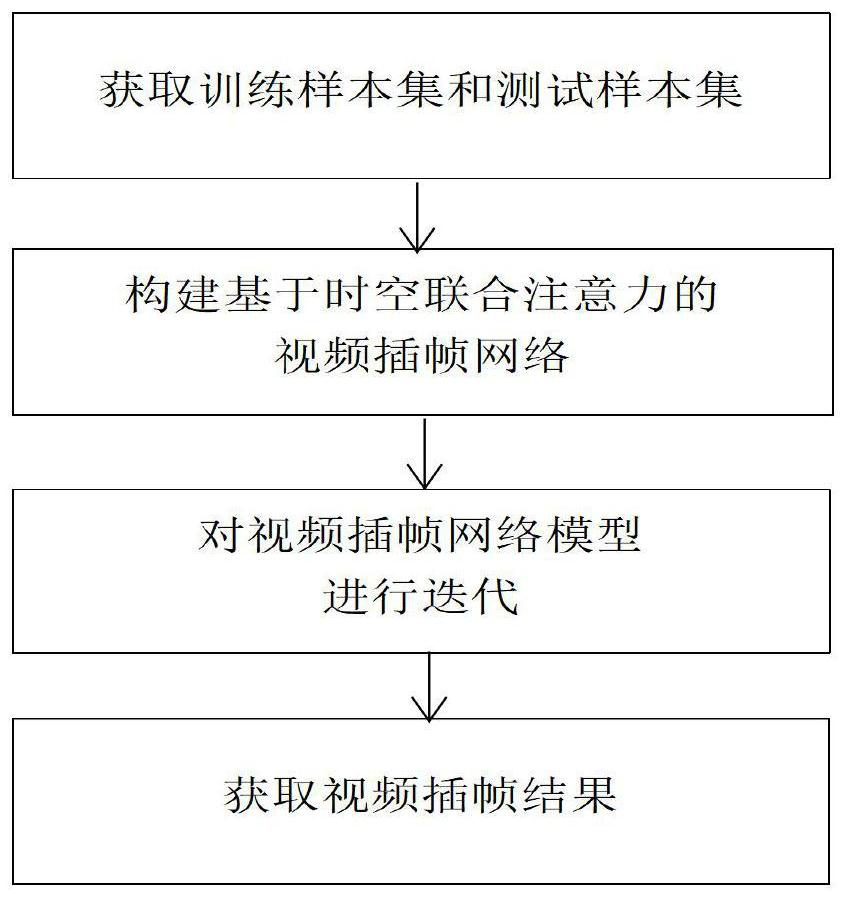

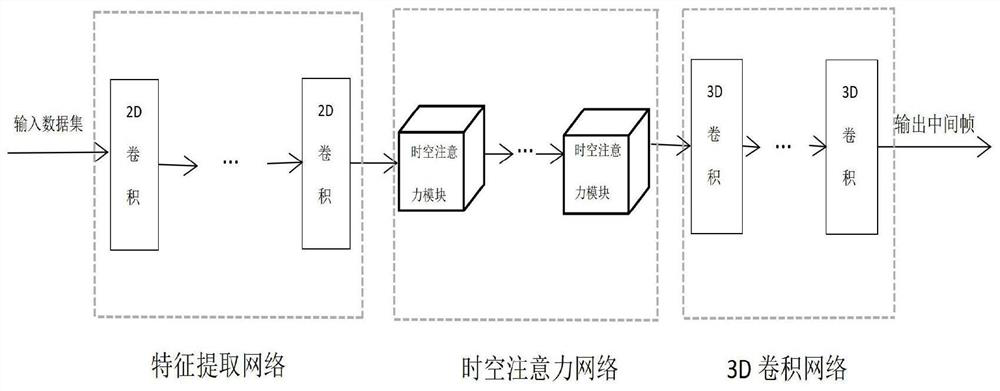

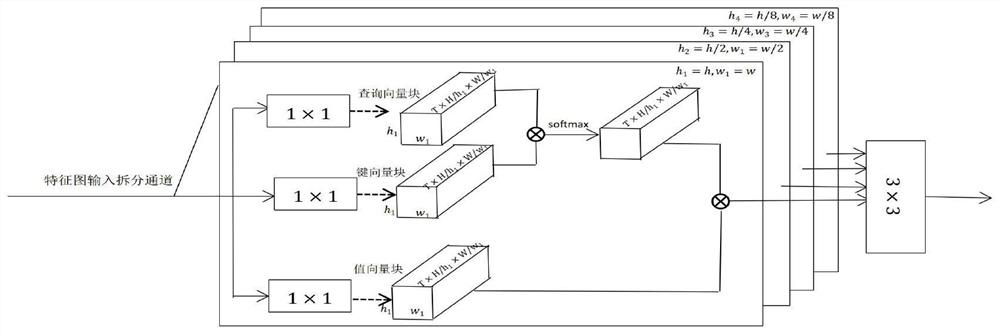

[0030] Referring to Figure 1, the present invention comprises the following steps:

[0031] Step 1) Obtain a training sample set and a test sample set:

[0032] V original videos are selected, each comprising L image frames. Every frame is cropped through a cropping window of size H×W to obtain the preprocessed video frame sequence corresponding to each original video, where H=448 and W=256 denote the length and width of the cropping window, respectively. The odd-numbered image frames and the even-numbered image frames in each preprocessed video frame sequence are then labeled separately. R of the labeled video frame sequences, $V_1=\{V_1^r \mid 1\le r\le R\}$, are taken as the training sample set, and the remaining S labeled video frame sequences are taken as the test sample set, where L=7, V=7564, R=3782,...
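The preprocessing in Step 1 can be sketched roughly as follows. This is a minimal illustration under several assumptions that the text does not state: each video is held as a NumPy array of shape (L, height, width, channels), the cropping window is centered, and the first R sequences form the training set. The function names `crop_frames`, `label_odd_even`, and `build_sample_sets` are hypothetical and are not taken from the patent.

```python
import numpy as np


def crop_frames(video, crop_h, crop_w):
    """Crop every frame of a (L, height, width, channels) array.

    Assumption: the window is centered; the source does not say where
    the cropping window is placed.
    """
    _, height, width, _ = video.shape
    if crop_h > height or crop_w > width:
        raise ValueError("cropping window is larger than the frame")
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2
    return video[:, top:top + crop_h, left:left + crop_w, :]


def label_odd_even(sequence):
    """Separate odd-numbered and even-numbered frames (1-based numbering)."""
    odd = sequence[0::2]   # frames 1, 3, 5, ...
    even = sequence[1::2]  # frames 2, 4, 6, ...
    return odd, even


def build_sample_sets(videos, crop_h, crop_w, num_train):
    """Crop and label every video, then split into training and test sets.

    Assumption: the first `num_train` sequences are used for training and
    the remainder for testing; the source only gives the set sizes.
    """
    labeled = [label_odd_even(crop_frames(v, crop_h, crop_w)) for v in videos]
    return labeled[:num_train], labeled[num_train:]
```

With L=7 as in the text, each labeled sequence would hold four odd-numbered frames and three even-numbered frames. Which array axis corresponds to H=448 and which to W=256 depends on how frames are stored, so `crop_h` and `crop_w` are left as parameters here.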
