Text processing method and system based on parallel zero-redundancy long short-term memory network

A text processing technology based on long short-term memory networks, applied in unstructured text data retrieval, neural learning methods, electrical digital data processing, and related fields. It addresses problems such as a non-fixed number of cycles, one-way propagation, and lack of support for parallel computing, and achieves high abstraction, improved accuracy, and improved efficiency.

Embodiment 1

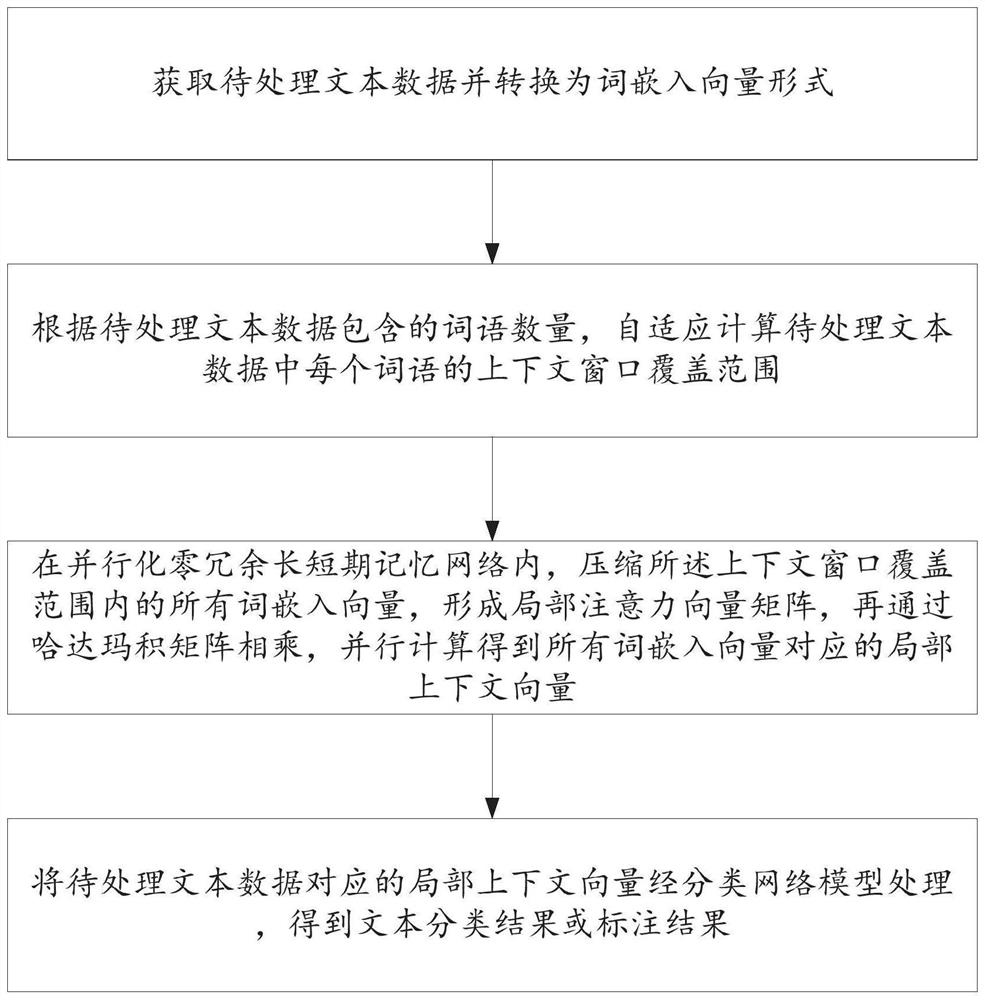

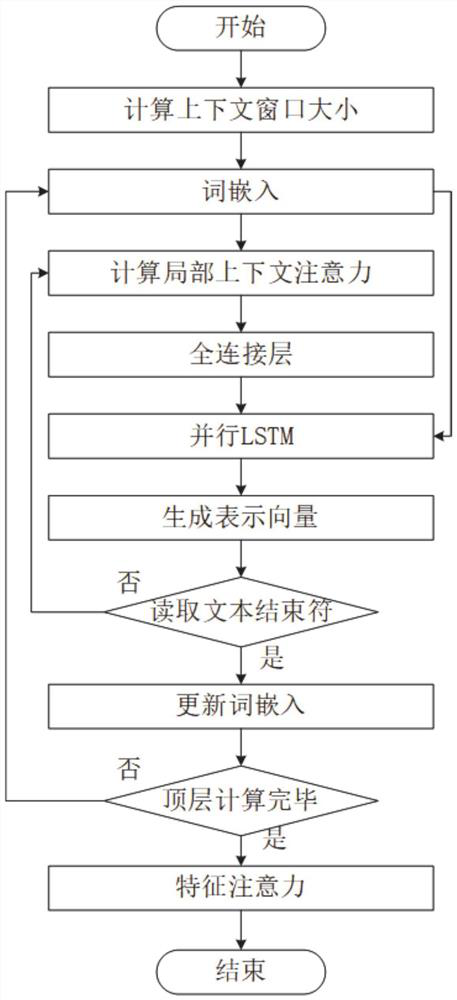

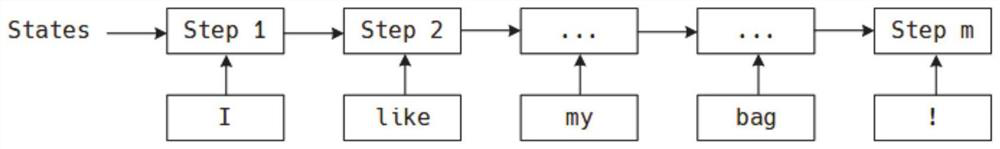

[0075] As shown in Figure 1, this embodiment provides a text processing method based on a parallelized zero-redundancy long-short-term memory network, which specifically includes the following steps:

[0076] Step 1: Obtain the text data to be processed and convert it into word embedding vector form.
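Step 1 can be illustrated with a minimal sketch. The source does not say which tokenizer or embedding model is used, so everything here is an assumption made for illustration: the whitespace tokenizer, the hypothetical helper names `build_embedding_table` and `embed_text`, the pseudo-random vectors standing in for trained embeddings, and the zero vector for out-of-vocabulary words.

```python
import random


def build_embedding_table(vocab, dim, seed=0):
    """Give each vocabulary word a fixed pseudo-random vector.

    ASSUMPTION: these vectors stand in for trained word embeddings,
    which the source does not specify.
    """
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for word in vocab}


def embed_text(text, table, dim):
    """Split text on whitespace and look up one vector per word.

    ASSUMPTION: unknown words map to a zero vector of length `dim`.
    """
    tokens = text.lower().split()
    vectors = [table.get(tok, [0.0] * dim) for tok in tokens]
    return tokens, vectors


vocab = ["the", "network", "processes", "text"]
table = build_embedding_table(vocab, dim=4)
tokens, vectors = embed_text("The network processes text quickly", table, dim=4)
print(len(tokens), len(vectors[0]))
```

The result is one fixed-length vector per word, which is the "word embedding vector form" that the later steps operate on.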

[0077] Step 2: Adaptively calculate the context window coverage of each word in the text data to be processed according to the number of words contained in the text data to be processed.

[0078] In this embodiment, the context window coverage is calculated as follows:

[0079] The number of words contained in the text data to be processed is combined with the number of layers of the parallelized zero-redundancy long-short-term memory network, and the result is rounded up to obtain the context window coverage of each word in the text data to be processed.
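The text names two inputs (word count and layer count) and a final rounding-up step, but it does not state the operation that combines them. The sketch below assumes, purely for illustration, that the word count is divided by the layer count before rounding up; the function name `context_window_coverage` is likewise hypothetical.

```python
def context_window_coverage(num_words, num_layers):
    """Return an adaptive context window size for a text of `num_words` words.

    ASSUMPTION: the two quantities are combined by division
    (num_words / num_layers) before rounding up. The source only says
    the result is "rounded up" and does not name the operation.
    """
    if num_words < 0:
        raise ValueError("num_words must be non-negative")
    if num_layers <= 0:
        raise ValueError("num_layers must be positive")
    # Integer ceiling division avoids floating-point rounding issues.
    return (num_words + num_layers - 1) // num_layers


print(context_window_coverage(10, 3))
```

Under this assumption the window grows with the length of the text and shrinks as layers are added, which matches the "adaptive" behaviour described in Step 2.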

[0080] Wherein, the parallelized zero-redundancy long-short-term memory network is pre-trained, and the number ...

Embodiment 2

[0136] This embodiment provides a text processing system based on a parallelized zero-redundancy long-short-term memory network, which specifically includes the following modules:

[0137] (1) A word embedding vector conversion module, which is used to obtain text data to be processed and convert it into a word embedding vector form.

[0138] (2) A context window determination module, which is used to adaptively calculate the context window coverage of each word in the text data to be processed according to the number of words contained in the text data to be processed.

[0139] The size of the context window coverage determines how many semantic features each word embedding vector corresponds to.

[0140] In the context window determination module, the context window coverage is calculated as follows:

[0141] According to the number of words contained in the text data to be processed and the number of layers of the parallelized zero-redundancy long-shor...

Embodiment 3

[0146] This embodiment provides a computer-readable storage medium on which a computer program is stored. When the program is executed by a processor, the steps of the text processing method based on the parallelized zero-redundancy long-short-term memory network described in Embodiment 1 are implemented.
