Feature visualization method and system of convolutional neural network model based on sparse attention

Combining convolutional neural network and attention techniques and applied to feature visualization for image classification, the invention addresses two problems: existing approaches cannot locate the image regions that contribute most to a classification result, and they cannot explain that result. It helps reveal the reasons behind classification decisions and improves image classification accuracy.



Examples

Embodiment 1

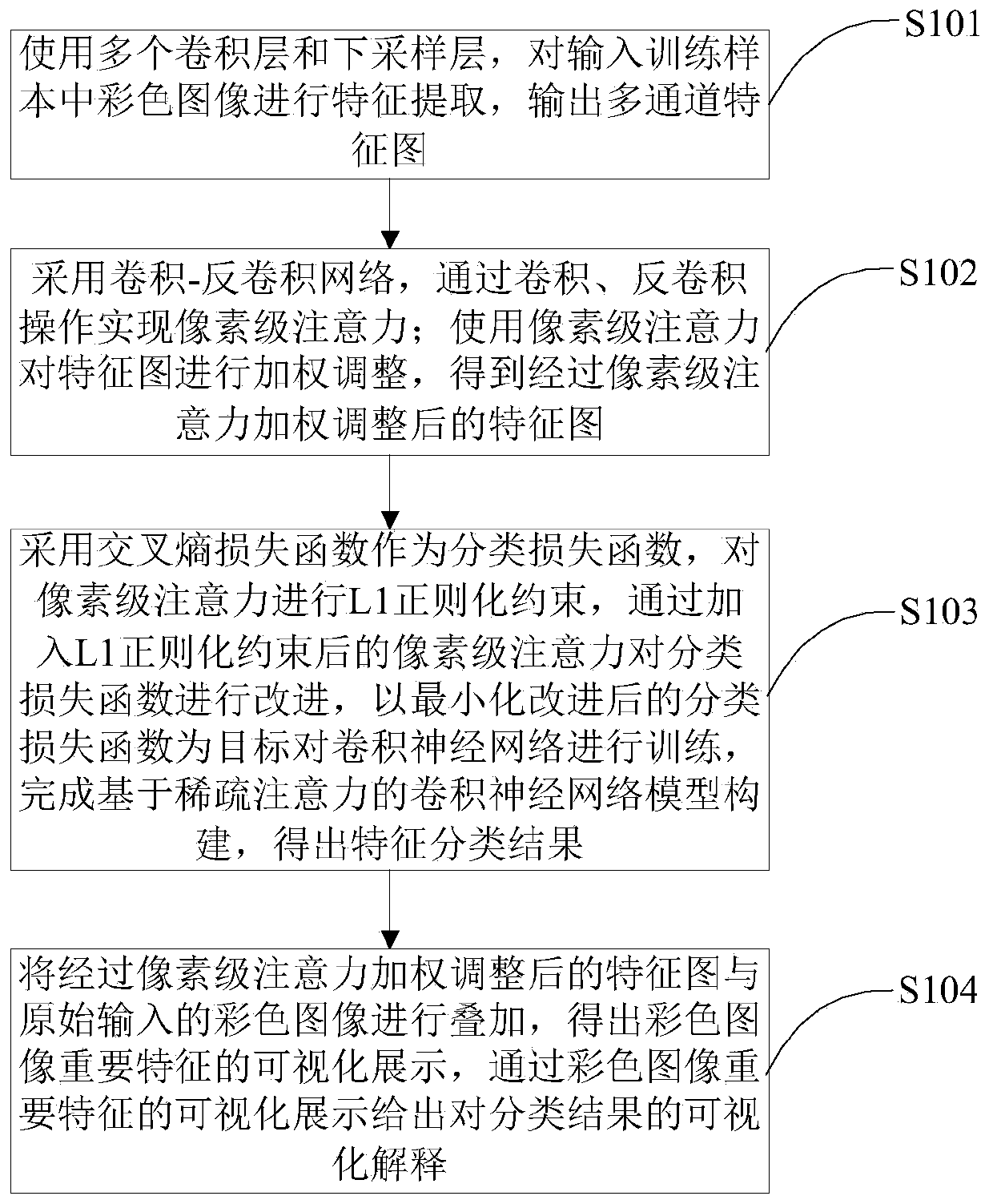

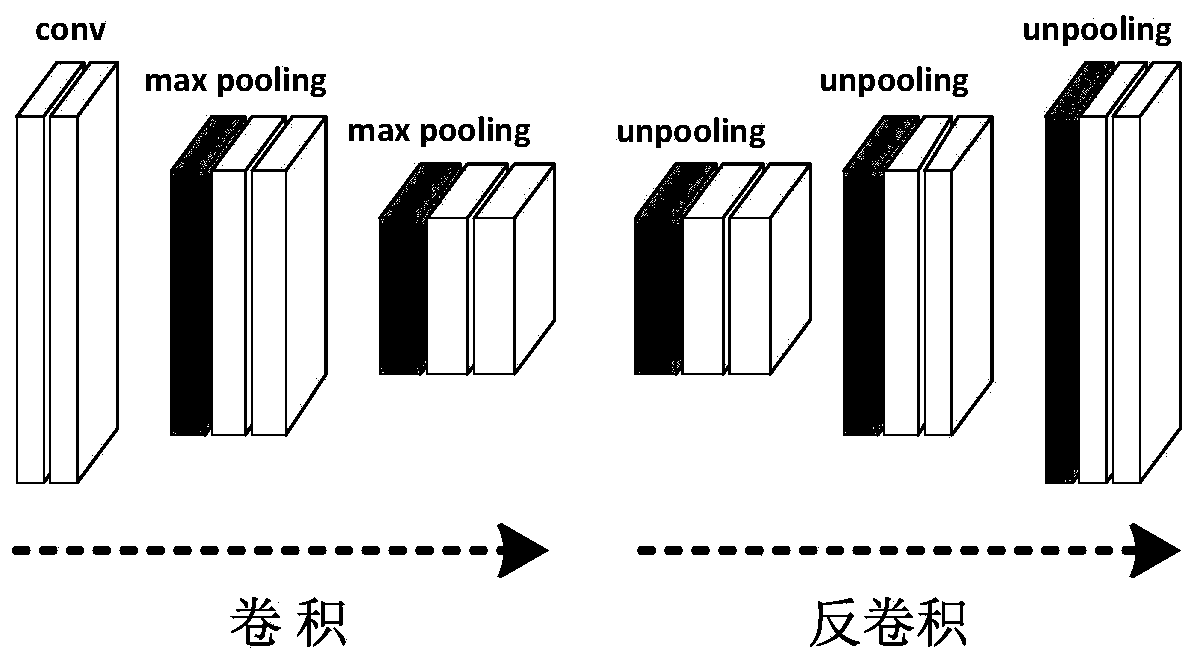

[0060] As shown in Figure 1, a feature visualization method for a convolutional neural network model based on sparse attention includes:

[0061] Step S101: extract features from the color images in the input training samples using multiple convolutional layers and downsampling layers, and output multi-channel feature maps; the training samples consist of multiple color images and their corresponding category labels;

[0062] Specifically, the convolutional layers can be designed to meet the requirements of the task, or the feature extraction part of a commonly used convolutional neural network, such as AlexNet, VGGNet, ResNet, or one of their variants, can be reused.

[0063] For the input training set $\{(x_i, y_i)\}_{i=1}^{N}$, where $N$ is the number of samples, the feature extraction process can be formalized as follows:

[0064] $F = \mathrm{CONV}(x; \theta)$

[0065] where $x_i$ denotes the $i$-th color image, $y_i$ denotes the category label corresponding to $x_i$, and the feature map $F$ ...
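The formalization $F = \mathrm{CONV}(x; \theta)$ can be illustrated with a minimal, dependency-free Python sketch. It assumes one possible configuration that the text does not fix: valid (unpadded) stride-1 convolutions with ReLU, each followed by 2x2 max pooling as the downsampling layer, with $\theta$ represented as a list of (kernels, biases) pairs, one per layer. The function names `conv2d_relu`, `max_pool2x2`, and `extract_features` are hypothetical; in practice the layers would come from a deep-learning framework or from a backbone such as AlexNet, VGGNet, or ResNet.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]   # one channel, H x W
Tensor = List[Matrix]        # C x H x W


def conv2d_relu(x: Tensor, kernels: Sequence[Tensor], biases: Sequence[float]) -> Tensor:
    """Valid (unpadded), stride-1 multi-channel convolution followed by ReLU.

    x: C_in x H x W input; kernels: C_out kernels, each C_in x k x k.
    Returns a C_out x (H - k + 1) x (W - k + 1) feature map.
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(kernels[0][0])
    out: Tensor = []
    for kernel, b in zip(kernels, biases):
        fmap = []
        for i in range(h - k + 1):
            row = []
            for j in range(w - k + 1):
                s = b
                for c in range(c_in):
                    for u in range(k):
                        for v in range(k):
                            s += kernel[c][u][v] * x[c][i + u][j + v]
                row.append(max(0.0, s))  # ReLU
            fmap.append(row)
        out.append(fmap)
    return out


def max_pool2x2(x: Tensor) -> Tensor:
    """Downsampling layer: 2x2, stride-2 max pooling per channel (odd edges dropped)."""
    return [
        [
            [max(ch[2 * i][2 * j], ch[2 * i][2 * j + 1],
                 ch[2 * i + 1][2 * j], ch[2 * i + 1][2 * j + 1])
             for j in range(len(ch[0]) // 2)]
            for i in range(len(ch) // 2)
        ]
        for ch in x
    ]


def extract_features(x: Tensor,
                     theta: Sequence[Tuple[Sequence[Tensor], Sequence[float]]]) -> Tensor:
    """F = CONV(x; theta): alternate convolution (+ReLU) and downsampling layers.

    theta holds one (kernels, biases) pair per convolutional layer.
    """
    f = x
    for kernels, biases in theta:
        f = max_pool2x2(conv2d_relu(f, kernels, biases))
    return f
```

For example, passing a 3x6x6 image of ones through a single layer whose two 3x3x3 kernels are all ones and all minus ones yields a 2x2x2 multi-channel feature map: the first channel is 27 everywhere, and the second is zeroed by the ReLU.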

Embodiment 2

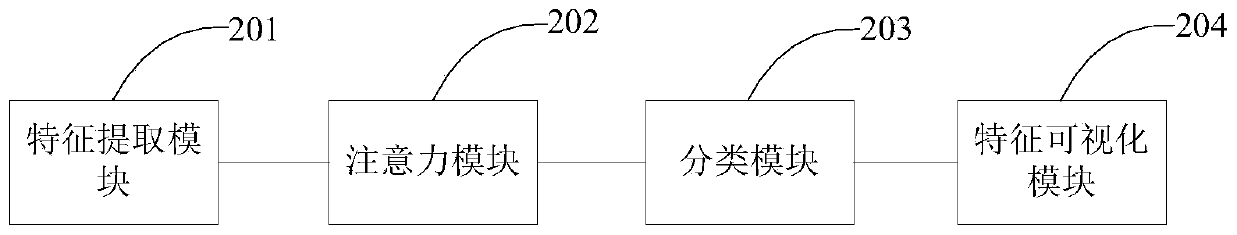

[0094] As shown in Figure 3, a feature visualization system for a convolutional neural network model based on sparse attention includes:

[0095] The feature extraction module 201 is configured to extract features from the color images in the input training samples using multiple convolutional layers and downsampling layers, and to output multi-channel feature maps; the training samples consist of multiple color images and their corresponding category labels;

[0096] The attention module 202 is configured to implement pixel-level attention with a convolution-deconvolution network through convolution and deconvolution operations, and to use the pixel-level attention to apply weighted adjustments to the feature maps, obtaining the attention-weighted feature maps;

[0097] The classification module 203 is configured to use the cross-entropy loss function as the classification loss function and to impose an L1 regularization constraint on the pixel-level atte...
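The attention module 202 and the training objective of the classification module 203 can be sketched in plain Python as follows. This is an illustration under assumptions the text does not state: the convolution-deconvolution network is reduced to a single 2x2, stride-2 convolution followed by a single 2x2, stride-2 transposed convolution (so H and W must be even), a sigmoid maps the result to a pixel-level attention map A, the weighted adjustment is element-wise multiplication of every channel of F by A, and the L1 term is scaled by a hypothetical coefficient `lam` and averaged over the batch. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]   # H x W
Tensor = List[Matrix]        # C x H x W


def conv_down(f: Tensor, w: Tensor, b: float) -> Matrix:
    """Convolution half: 2x2, stride-2 conv mapping C x H x W to H/2 x W/2."""
    return [
        [b + sum(w[c][u][v] * f[c][2 * i + u][2 * j + v]
                 for c in range(len(f)) for u in range(2) for v in range(2))
         for j in range(len(f[0][0]) // 2)]
        for i in range(len(f[0]) // 2)
    ]


def deconv_up(z: Matrix, w: Matrix, b: float) -> Matrix:
    """Deconvolution half: 2x2, stride-2 transposed conv mapping H/2 x W/2 to H x W."""
    out = [[b] * (2 * len(z[0])) for _ in range(2 * len(z))]
    for i, row in enumerate(z):
        for j, val in enumerate(row):
            for u in range(2):
                for v in range(2):
                    out[2 * i + u][2 * j + v] += val * w[u][v]
    return out


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def pixel_attention(f: Tensor, conv_w: Tensor, conv_b: float,
                    deconv_w: Matrix, deconv_b: float) -> Matrix:
    """Pixel-level attention map A in (0, 1)^(H x W); the sigmoid is an assumption."""
    logits = deconv_up(conv_down(f, conv_w, conv_b), deconv_w, deconv_b)
    return [[sigmoid(s) for s in row] for row in logits]


def apply_attention(f: Tensor, a: Matrix) -> Tensor:
    """Weighted adjustment: multiply every channel of F element-wise by A."""
    return [[[fv * av for fv, av in zip(f_row, a_row)] for f_row, a_row in zip(ch, a)]
            for ch in f]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """-log softmax(logits)[label], computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def sparse_attention_loss(batch: Sequence[Tuple[Sequence[float], int, Matrix]],
                          lam: float = 1e-3) -> float:
    """Batch mean of CE(logits, y) + lam * ||A||_1 (lam is a hypothetical weight)."""
    total = 0.0
    for logits, label, a in batch:
        l1 = sum(abs(v) for row in a for v in row)
        total += cross_entropy(logits, label) + lam * l1
    return total / len(batch)
```

Because the L1 term penalizes every nonzero attention weight, training under an objective of this form tends to leave only a few high-attention pixels, which is presumably what makes the attention map usable for locating the regions that contribute most to a classification.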
