Data processing method and device based on AI chip

A data processing and chip technology in the field of AI chip-based data processing methods and devices. It addresses two problems: wasted computing resources and low data processing efficiency in AI chips. Its stated effects are improved efficiency, parallel computation, and reduced mutual waiting time between processors.


Examples

Embodiment 1

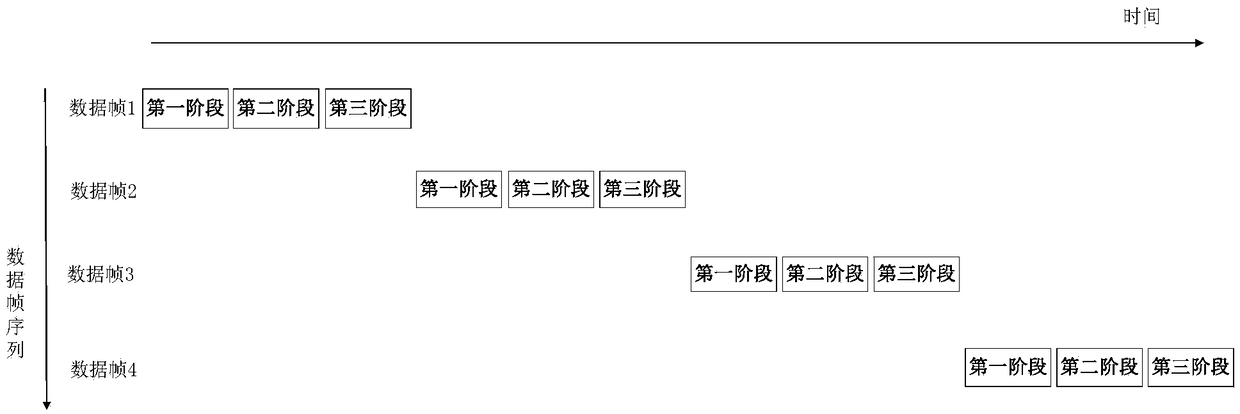

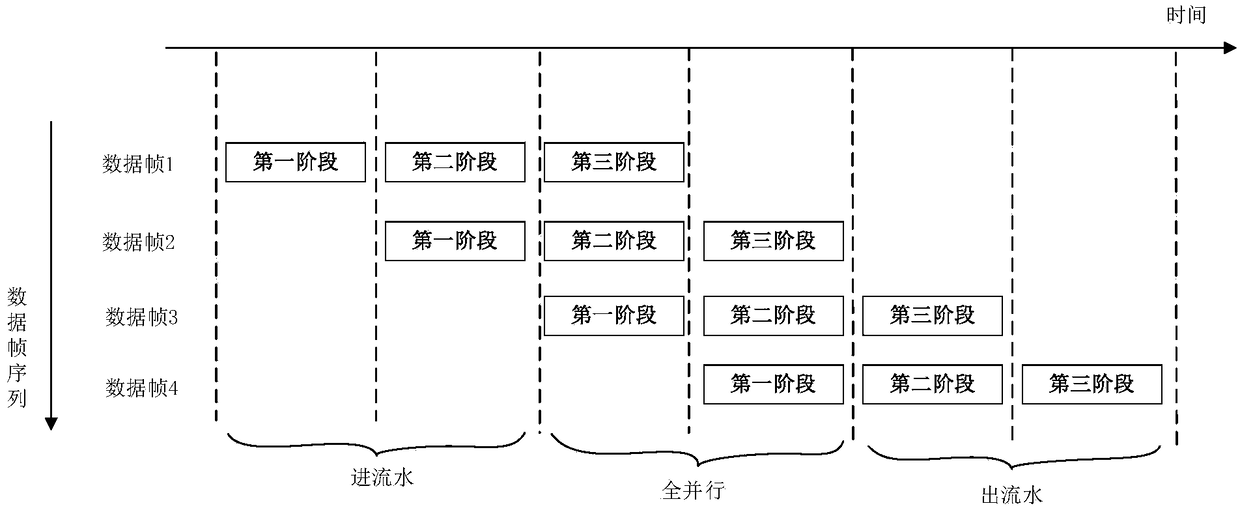

[0034] FIG. 1 is a schematic diagram of data processing on an existing AI chip according to Embodiment 1 of the present invention, and FIG. 2 is a schematic diagram of the pipeline structure of AI chip data processing according to Embodiment 1 of the present invention. This embodiment provides an AI chip-based data processing method that addresses two problems in the prior art: the CPU and the NPU wait for each other, which wastes computing resources, and the AI chip processes data inefficiently. The method in this embodiment is applied to an AI chip that includes at least a first processor, a second processor, and a third processor. The third processor performs the neural network model calculations and includes multiple cores.
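To make the waiting-time argument concrete, the Python sketch below compares the total time to process N frames when the three stages run strictly one after another (each processor idles while another works) with the time when the stages overlap as a pipeline. The function names and stage times are hypothetical, and the model assumes fixed per-stage times and enough queue space between stages, neither of which the source specifies. Under those assumptions, an ideal pipeline pays the full per-frame latency once and then emits one frame per period of its slowest stage.

```python
def serial_time(n_frames, stage_times):
    """Total time when each frame passes through every stage before the next frame starts."""
    return n_frames * sum(stage_times)


def pipelined_time(n_frames, stage_times):
    """Total time for an idealized pipeline with fixed stage times and sufficient buffering.

    The first frame pays the full latency (sum of all stages); every later frame
    finishes one bottleneck-stage period after the previous one.
    """
    if n_frames == 0:
        return 0
    return sum(stage_times) + (n_frames - 1) * max(stage_times)


# Hypothetical per-frame times (ms): preprocessing, NN inference, postprocessing.
stages = [2, 5, 3]
print(serial_time(100, stages))     # 1000
print(pipelined_time(100, stages))  # 505
```

With these illustrative numbers, the pipeline roughly halves the total time, and the speedup is bounded by the slowest stage (here, the neural network computation).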

[0035] Here, the third processor is the processor that performs the neural network model calculations, and it includes multiple cores. For example, the third processor ma...

Embodiment 2

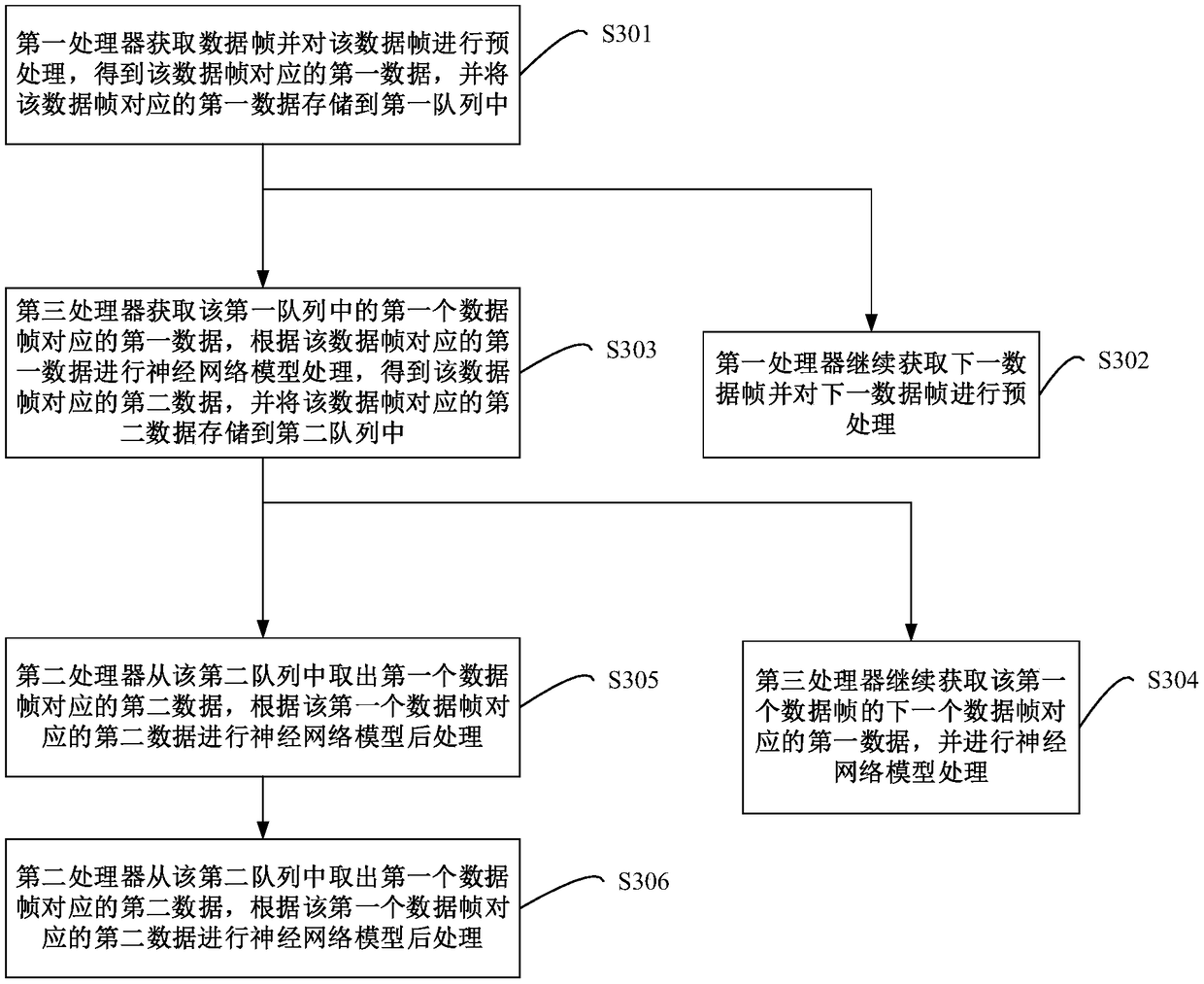

[0047] FIG. 3 is a flow chart of the AI chip-based data processing method according to Embodiment 2 of the present invention. Building on Embodiment 1, this embodiment describes in detail how the first processor, the second processor, and the third processor perform the three stages of processing simultaneously. As shown in FIG. 3, the method includes the following steps:

[0048] Step S301: the first processor acquires a data frame, preprocesses it to obtain the first data corresponding to that data frame, and stores the first data in a first queue.
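Step S301 can be sketched in Python, assuming the first processor is modeled as a thread and the first queue as a thread-safe `queue.Queue`. The `preprocess` body (scaling pixel values to [0, 1]), the frame format, the queue size, and the `None` end-of-stream sentinel are illustrative assumptions, not details from the source. Each entry carries the frame index so later stages can match results back to their frames.

```python
import queue
import threading


def preprocess(frame):
    # Hypothetical preprocessing: scale raw 8-bit pixel values into [0, 1].
    return [pixel / 255.0 for pixel in frame]


def first_processor_stage(frames, first_queue):
    """Stage 1: acquire each data frame, preprocess it, and enqueue (frame_id, first_data)."""
    for frame_id, frame in enumerate(frames):
        first_data = preprocess(frame)
        first_queue.put((frame_id, first_data))
    first_queue.put(None)  # sentinel: no more frames


# Bounded queue: stage 1 blocks instead of running arbitrarily far ahead of stage 2.
first_queue = queue.Queue(maxsize=8)
frames = [[0, 255], [51, 102]]

worker = threading.Thread(target=first_processor_stage, args=(frames, first_queue))
worker.start()

# Stand-in consumer for the next stage: drain the queue until the sentinel arrives.
results = []
while (item := first_queue.get()) is not None:
    results.append(item)
worker.join()

print(results)  # [(0, [0.0, 1.0]), (1, [0.2, 0.4])]
```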

[0049] In this embodiment, the first processor preprocesses the data frame to obtain the first data corresponding to that data frame; this first data is the result of the first-stage processing of the data frame. The first data correspo...

Embodiment 3

[0080] FIG. 4 is a schematic structural diagram of the AI chip according to Embodiment 3 of the present invention. The AI chip in this embodiment can execute the processing flow of the AI chip-based data processing method embodiments. As shown in FIG. 4, the AI chip 40 includes a first processor 401, a second processor 402, a third processor 403, a memory 404, and a computer program stored in the memory.

[0081] The AI chip data processing pipeline is divided into three stages: data acquisition and preprocessing, neural network model processing, and neural network model postprocessing. The three stages run in parallel as a pipeline.
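The three-stage parallel pipeline can be sketched in Python with one thread per stage and FIFO queues between stages, so that each stage works on a new frame while the next stage handles the previous one. This is a sketch under stated assumptions: the stage functions are placeholders, the queue sizes and sentinel are hypothetical, and the mapping of postprocessing to the second processor is inferred from the stage list rather than stated in the visible text. Python threads only model the concurrency structure here; on the chip, each stage would run on its own processor.

```python
import queue
import threading


def run_stage(work, in_queue, out_queue):
    """Generic pipeline stage: take (frame_id, data) items, process them, pass results on."""
    while True:
        item = in_queue.get()
        if item is None:            # end-of-stream sentinel: forward it and stop
            out_queue.put(None)
            return
        frame_id, data = item
        out_queue.put((frame_id, work(data)))


# Hypothetical placeholders for the work done by each processor.
def preprocess(frame):              # first processor: acquisition and preprocessing
    return [x / 255.0 for x in frame]


def model_inference(data):          # third processor: neural network model computation
    return sum(data)                # placeholder for a real model


def postprocess(score):             # assumed second processor: model postprocessing
    return "positive" if score > 0.5 else "negative"


def run_pipeline(frames):
    source = queue.Queue()                  # unbounded: raw frames awaiting stage 1
    first_queue = queue.Queue(maxsize=4)    # stage 1 -> stage 2
    second_queue = queue.Queue(maxsize=4)   # stage 2 -> stage 3 (hypothetical name)
    results = queue.Queue()                 # unbounded: final outputs
    workers = [
        threading.Thread(target=run_stage, args=(preprocess, source, first_queue)),
        threading.Thread(target=run_stage, args=(model_inference, first_queue, second_queue)),
        threading.Thread(target=run_stage, args=(postprocess, second_queue, results)),
    ]
    for w in workers:
        w.start()
    for frame_id, frame in enumerate(frames):
        source.put((frame_id, frame))
    source.put(None)
    outputs = []
    while (item := results.get()) is not None:
        outputs.append(item)
    for w in workers:
        w.join()
    return outputs


print(run_pipeline([[0, 255], [51, 51], [255, 255]]))
# [(0, 'positive'), (1, 'negative'), (2, 'positive')]
```

Because each stage has a single worker and every queue is FIFO, outputs stay in frame order. The bounded inter-stage queues make a fast stage wait only when the next stage falls behind, rather than after every frame.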

[0082] When the first processor 401, the second processor 402, and the third processor 403 run the computer program, the AI chip-based data processing method provided in any one of the above method embodiments is implem...
