GPU core program reorganization and optimization method based on memory access divergence

A GPU kernel program reorganization and optimization technology, applied in the fields of multi-program devices, program control devices, resource allocation, etc., which can solve the problem of low execution efficiency of GPU Kernels.

Embodiment Construction

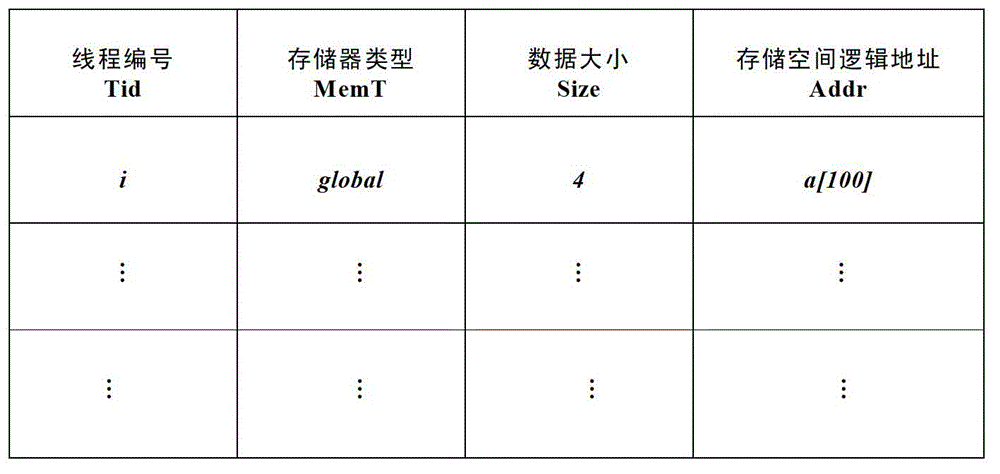

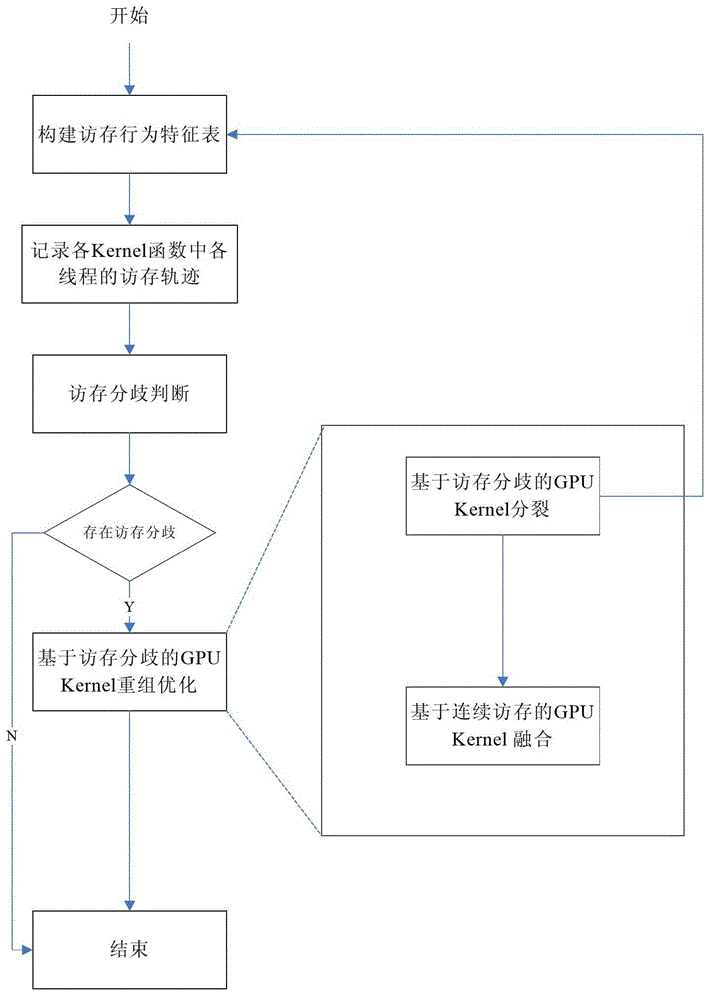

[0069] Figure 1 shows the structure of the memory access behavior feature table. The feature table is established as follows:

[0070] A memory access behavior feature table is created for each Kernel function of the GPU program. The table contains four fields: the thread number Tid, the memory type MemT accessed by the thread, the data size Size accessed by the thread, and the logical address Addr of the storage space accessed by the thread. The thread number Tid is the unique number of the thread within the Kernel function domain. The memory type MemT indicates which type of memory the thread accesses: global memory Global, shared memory SharedMemory, texture memory TextureMemory, or constant memory ConstantMemory. The data size Size is the number of bytes of storage space occupied by the data accessed by the thread. The logical address Addr of the storage space accessed by the thread indicates the address spac...
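The four-field table can be pictured as a simple per-kernel data structure. The following is a minimal sketch in Python, assuming one row per recorded thread access. The class names `FeatureTable` and `AccessRecord` are hypothetical. The `segments_per_warp` helper, with its warp size of 32 and 128-byte segment granularity, is also a hypothetical illustration of how memory access divergence *might* be quantified from the table. It is not the patent's own criterion, which lies in the truncated part of the text.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class MemT(Enum):
    """Memory types named in the feature table description."""
    GLOBAL = "Global"
    SHARED = "SharedMemory"
    TEXTURE = "TextureMemory"
    CONSTANT = "ConstantMemory"


@dataclass(frozen=True)
class AccessRecord:
    """One row of the memory access behavior feature table."""
    tid: int      # unique thread number within the Kernel function domain
    mem_t: MemT   # memory type accessed by the thread
    size: int     # bytes of storage occupied by the accessed data
    addr: int     # logical address of the accessed storage space


class FeatureTable:
    """Hypothetical per-kernel table: one instance per Kernel function."""

    def __init__(self, kernel_name: str) -> None:
        self.kernel_name = kernel_name
        self.rows: list[AccessRecord] = []

    def record(self, tid: int, mem_t: MemT, size: int, addr: int) -> None:
        """Append one access row (Tid, MemT, Size, Addr)."""
        self.rows.append(AccessRecord(tid, mem_t, size, addr))

    def segments_per_warp(self, warp_size: int = 32,
                          segment_bytes: int = 128) -> dict[int, int]:
        """Hypothetical divergence metric (assumption, not from the patent):
        for global-memory rows only, count the distinct aligned segments of
        segment_bytes touched by each warp. More segments suggest more
        divergent (less coalesced) access."""
        segs: dict[int, set[int]] = defaultdict(set)
        for r in self.rows:
            if r.mem_t is not MemT.GLOBAL:
                continue  # other memory types are ignored in this sketch
            first = r.addr // segment_bytes
            last = (r.addr + r.size - 1) // segment_bytes
            segs[r.tid // warp_size].update(range(first, last + 1))
        return {warp: len(s) for warp, s in segs.items()}
```

As an example, suppose 64 threads each read a 4-byte value at `0x1000 + 4 * tid`. Each warp then stays inside a single 128-byte segment, and `segments_per_warp()` returns `{0: 1, 1: 1}`. With a 128-byte stride (`addr = 128 * tid`), every thread in warp 0 touches its own segment, and the result is `{0: 32}`.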
