Multi-target detection method and device based on multi-modal information fusion

A multi-target detection method and device in the field of target detection technology, applicable to neural learning methods, character and pattern recognition, biological neural network models, and related areas. It addresses the inability of existing approaches to make full use of the correlation between multi-modal data, their complex network structures, and their sensitivity to data alignment. The stated effects are high algorithm efficiency and a lower computing cost.

Examples

Embodiment 1

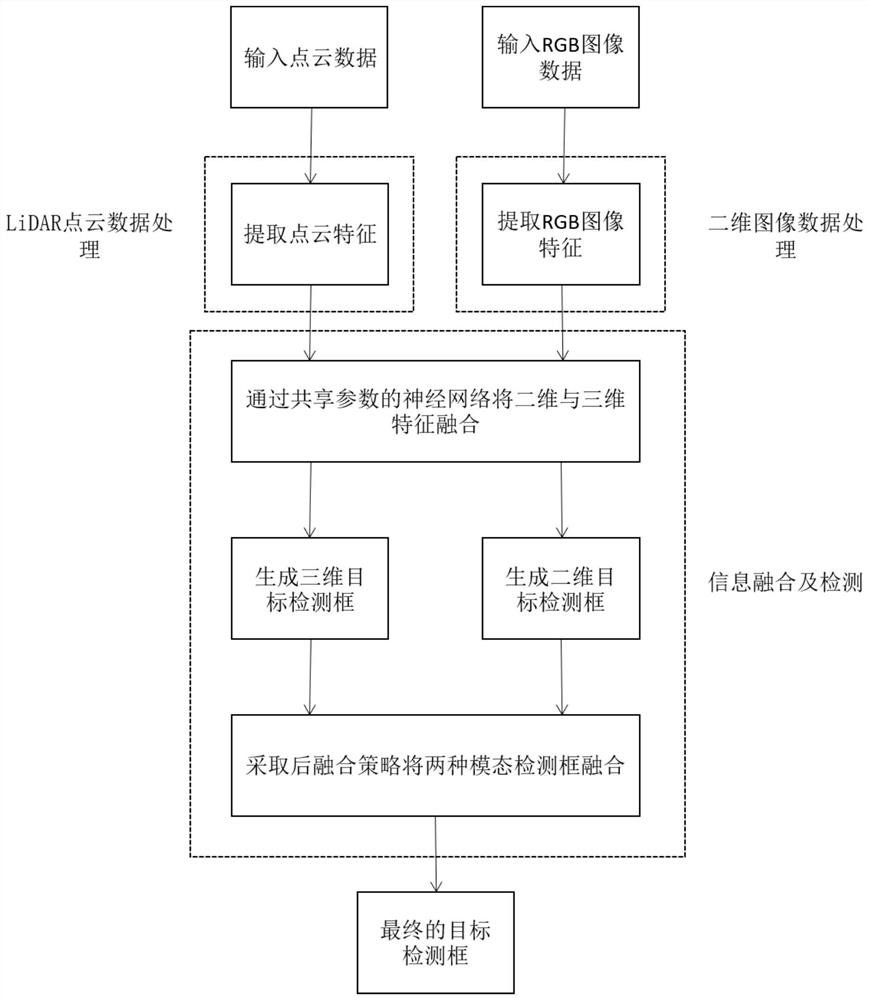

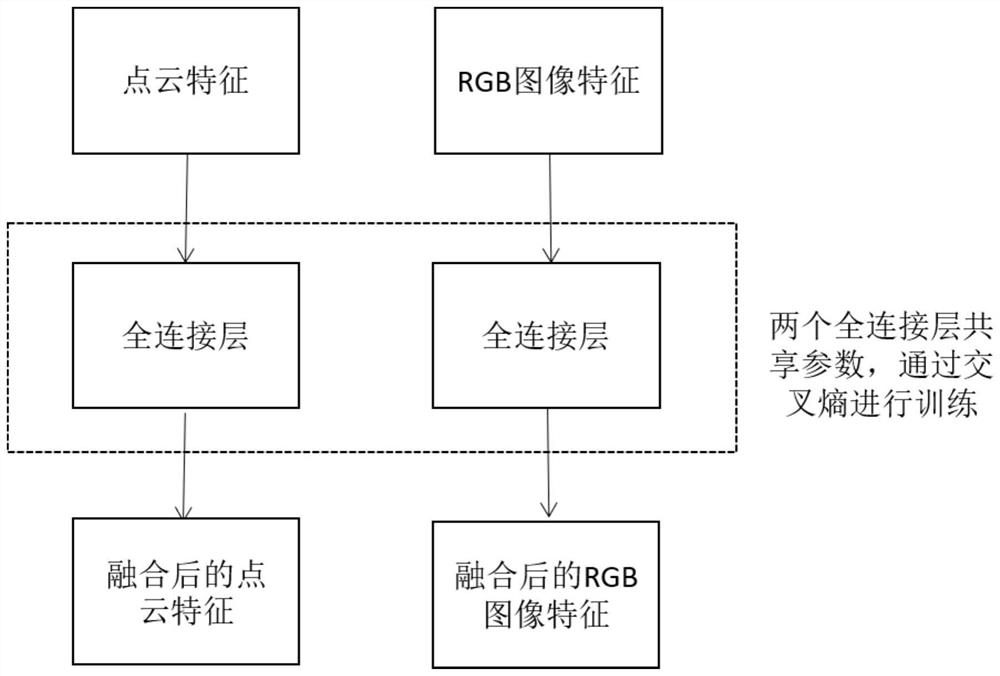

[0045] A multi-target detection method based on multi-modal information fusion is shown in Figure 1. The method includes the following steps:

[0046] 101: Process LiDAR point cloud data, and extract LiDAR point cloud features, that is, three-dimensional feature maps;

[0047] Because LiDAR data is sparse, the embodiment of the present invention resamples the point cloud and adds sampling points. This increases the density of the data to a certain extent, which improves the quality of the three-dimensional feature map and the effectiveness of detection.
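The text does not specify how the sampling points are added. As a minimal sketch only, the following assumes midpoint interpolation between nearest neighbours as a stand-in for the unspecified resampling method. The function name `densify_by_midpoints` and the `max_dist` threshold are hypothetical and do not come from the source.

```python
import math


def densify_by_midpoints(points, max_dist=1.0):
    """Return the original points plus midpoints between close neighbours.

    Assumption: midpoint interpolation stands in for the resampling step,
    which the text does not describe in detail.
    """
    added = []
    seen_pairs = set()
    for i, p in enumerate(points):
        # Brute-force nearest-neighbour search; adequate for a small demo.
        best_j, best_d = None, math.inf
        for j, q in enumerate(points):
            if i == j:
                continue
            d = math.dist(p, q)
            if d < best_d:
                best_j, best_d = j, d
        # Skip isolated points so no sample is invented across a large gap.
        if best_j is None or best_d > max_dist:
            continue
        pair = (min(i, best_j), max(i, best_j))
        if pair in seen_pairs:
            continue  # this midpoint was already added from the other side
        seen_pairs.add(pair)
        q = points[best_j]
        added.append(tuple((a + b) / 2.0 for a, b in zip(p, q)))
    return list(points) + added


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
dense = densify_by_midpoints(cloud, max_dist=2.0)
```

In this toy cloud only the first two points are close enough to receive a new sample between them; the distant third point is left untouched. A production pipeline would replace the brute-force search with a spatial index.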

[0048] 102: Perform two-dimensional image data processing on the RGB image, and output RGB image features through a feature extraction network, that is, a two-dimensional feature map;

[0049] Since two-dimensional images naturally lack three-dimensional information, in the detection stage after feature extraction, it is necessary to correlate with three-dimensional information based on spatial position and pixel info...

Embodiment 2

[0055] The scheme in Embodiment 1 is described in more detail below, with specific examples and calculation formulas:

[0056] 201: Process LiDAR point cloud data, and output LiDAR point cloud features, that is, three-dimensional feature maps;

[0057] Specifically, the point cloud is evenly grouped into voxels, which converts the sparse and uneven point cloud into a dense tensor structure. A list of voxel features is obtained by stacking voxel feature encoding layers. The voxel features are then aggregated over an enlarged receptive field, and the LiDAR point cloud features, that is, the three-dimensional feature map, are output.
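The grouping step can be illustrated with a short sketch. It only covers assigning points to voxels; the learned voxel feature encoding layers are replaced here by a per-voxel centroid, which is an assumption made for illustration. The names `group_into_voxels` and `voxel_centroids`, the voxel size, and the `max_points` cap are all hypothetical.

```python
import math
from collections import defaultdict


def group_into_voxels(points, voxel_size, max_points=35):
    """Group (x, y, z) points by the index of the voxel that contains them.

    max_points caps how many points a voxel keeps; the value 35 is an
    illustrative assumption, not a number taken from the text.
    """
    voxels = defaultdict(list)
    for point in points:
        # Integer voxel index per axis; floor handles negative coordinates.
        key = tuple(math.floor(c / s) for c, s in zip(point, voxel_size))
        if len(voxels[key]) < max_points:
            voxels[key].append(point)
    return dict(voxels)


def voxel_centroids(voxels):
    """Crude stand-in for learned voxel features: one centroid per voxel."""
    return {
        key: tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for key, pts in voxels.items()
    }


points = [
    (0.1, 0.1, 0.1),
    (0.3, 0.2, 0.1),
    (1.2, 0.1, 0.1),
    (-0.1, 0.0, 0.0),
]
voxels = group_into_voxels(points, voxel_size=(0.5, 0.5, 0.5))
features = voxel_centroids(voxels)
```

Keying a dictionary by voxel index stores only the non-empty voxels. Scattering the per-voxel features into a fixed grid afterwards would give the dense tensor that the text refers to.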

[0058] 202: Perform two-dimensional image data processing on the RGB image, and output RGB image features through a feature extraction network, that is, a two-dimensional feature map;

[0059] Specifically, a uniform grouping operation is performed on the two-dimensional RGB image, and the w...

Embodiment 3

[0093] The feasibility of the schemes in Embodiments 1 and 2 is verified below with a specific example:

[0094] The KITTI dataset is used to evaluate the performance of the algorithm. KITTI is currently the largest algorithm evaluation dataset in the world for autonomous driving scenarios. It includes 7481 point clouds and images for training and 7518 point clouds and images for testing, covering three categories: cars, pedestrians, and cyclists. For each category, the detection results are evaluated at three difficulty levels (easy, moderate, and hard), which are determined by target size, occlusion state, and truncation level. To evaluate the algorithm comprehensively, the training data is subdivided into a training set and a validation set, yielding 3712 samples for training and 3769 samples for validation. After splitting...
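As an illustration of how the three difficulty levels depend on target size, occlusion, and truncation, the sketch below uses the thresholds published on the KITTI object detection benchmark page (minimum bounding-box height in pixels, maximum occlusion level, maximum truncation). These thresholds are not stated in this document, and the function name `difficulty` is hypothetical. KITTI names the levels easy, moderate, and hard.

```python
from typing import Optional

# (level, min bbox height in px, max occlusion level, max truncation)
# Thresholds follow the public KITTI benchmark description; they are an
# assumption here because the text above does not list them.
KITTI_LEVELS = [
    ("easy", 40.0, 0, 0.15),
    ("moderate", 25.0, 1, 0.30),
    ("hard", 25.0, 2, 0.50),
]

# Split sizes quoted in the text.
NUM_TRAINVAL, NUM_TRAIN, NUM_VAL = 7481, 3712, 3769


def difficulty(bbox_height_px: float, occlusion: int, truncation: float) -> Optional[str]:
    """Return the easiest level whose limits the object satisfies, else None."""
    for name, min_height, max_occlusion, max_truncation in KITTI_LEVELS:
        if (
            bbox_height_px >= min_height
            and occlusion <= max_occlusion
            and truncation <= max_truncation
        ):
            return name
    return None  # too small, too occluded, or too truncated to be scored


levels = [
    difficulty(45.0, 0, 0.10),
    difficulty(30.0, 1, 0.20),
    difficulty(30.0, 2, 0.45),
    difficulty(20.0, 0, 0.00),
]
```

The two split sizes quoted above add up to the 7481 training samples, which the constants make easy to check.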
