Action recognition method based on event camera

An event-camera-based action recognition method, applied in the field of computer vision, that addresses the problems of difficult network training, large volumes of input data, and inoperability, and achieves strong robustness, low redundancy, and strong real-time performance.

Embodiment Construction

[0037] The method of the present invention is further described below with reference to the accompanying drawings and embodiments:

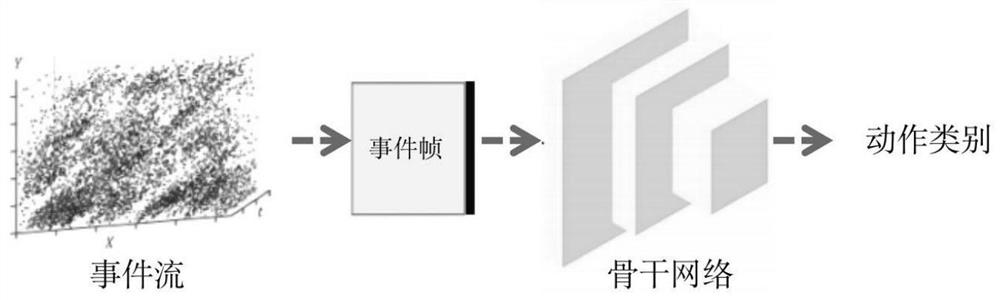

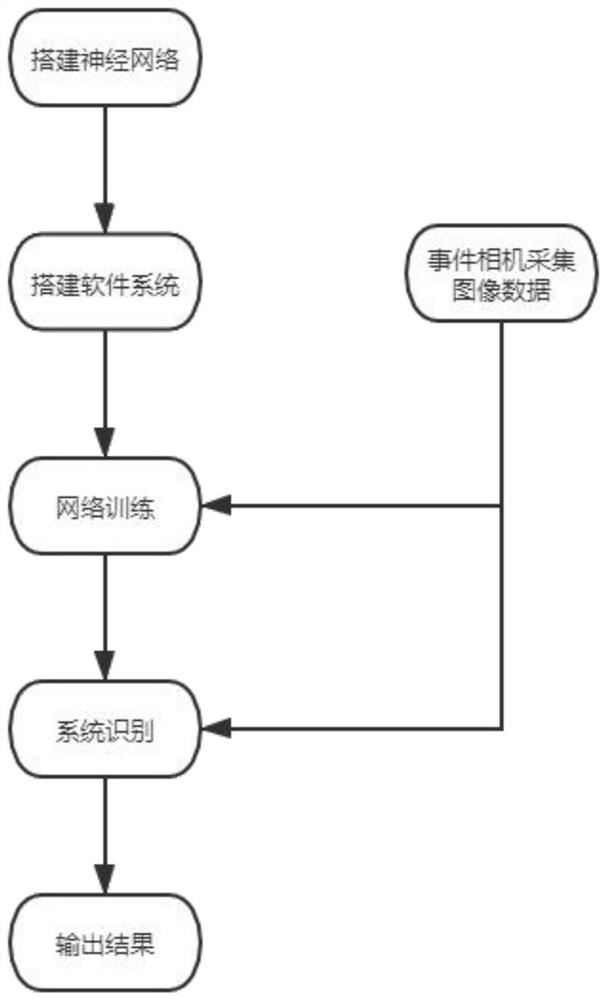

[0038] As shown in Figure 1 and Figure 2, an action recognition method based on an event camera comprises the following steps:

[0039] Step 1: Set up the acquisition hardware.

[0040] This patent uses a DAVIS346 event camera as the acquisition device. The camera is fixed on a tripod in an indoor scene and connected to a computer through a USB interface, and the data are collected with the DV platform. Each action is recorded for two seconds. Each action can be captured separately under three lighting conditions (overexposed, normal, and underexposed) to verify the event camera's insensitivity to light intensity. Each type of action should be performed multiple times by different people in different scenes.
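The acquisition protocol in [0040] can be sketched as a recording plan plus a two-second clipping helper. This is only an illustrative sketch, not the patent's implementation: the `Recording` class, the `build_plan` and `clip_events` functions, the file-naming scheme, the `.aedat4` extension, and the `(timestamp_us, x, y, polarity)` event layout are all assumptions introduced here. Only the two-second duration and the three lighting conditions come from the text.

```python
from dataclasses import dataclass
from itertools import product

CLIP_SECONDS = 2.0  # from the text: each action is recorded for two seconds
LIGHTING = ("overexposed", "normal", "underexposed")  # from the text


@dataclass(frozen=True)
class Recording:
    """One planned take (hypothetical structure, not from the patent)."""
    action: str
    lighting: str
    subject: str
    scene: str
    take: int
    duration_s: float = CLIP_SECONDS

    @property
    def filename(self) -> str:
        # Assumed naming scheme and file extension, for illustration only.
        return (f"{self.action}_{self.lighting}_{self.subject}_"
                f"{self.scene}_take{self.take}.aedat4")


def build_plan(actions, subjects, scenes, takes):
    """Enumerate every action x lighting x subject x scene x take combination."""
    return [
        Recording(action, lighting, subject, scene, take)
        for action, lighting, subject, scene, take in product(
            actions, LIGHTING, subjects, scenes, range(1, takes + 1)
        )
    ]


def clip_events(events, start_us, duration_s=CLIP_SECONDS):
    """Keep events whose timestamp lies in [start_us, start_us + duration).

    Each event is assumed to be a tuple (timestamp_us, x, y, polarity).
    """
    end_us = start_us + int(duration_s * 1_000_000)
    return [event for event in events if start_us <= event[0] < end_us]


# Example with placeholder action, subject, and scene names.
plan = build_plan(["wave", "jump"], ["p1", "p2"], ["room_a"], takes=2)
events = [(0, 1, 1, 1), (1_999_999, 2, 2, 0), (2_000_000, 3, 3, 1)]
clip = clip_events(events, start_us=0)
```

Enumerating the plan up front makes it easy to check that every action is covered under all three lighting conditions by every subject before recording begins.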

[0041] The captured actions fall into C categories, and specific constraints are made accordi...
