Compression method and device of neural network model

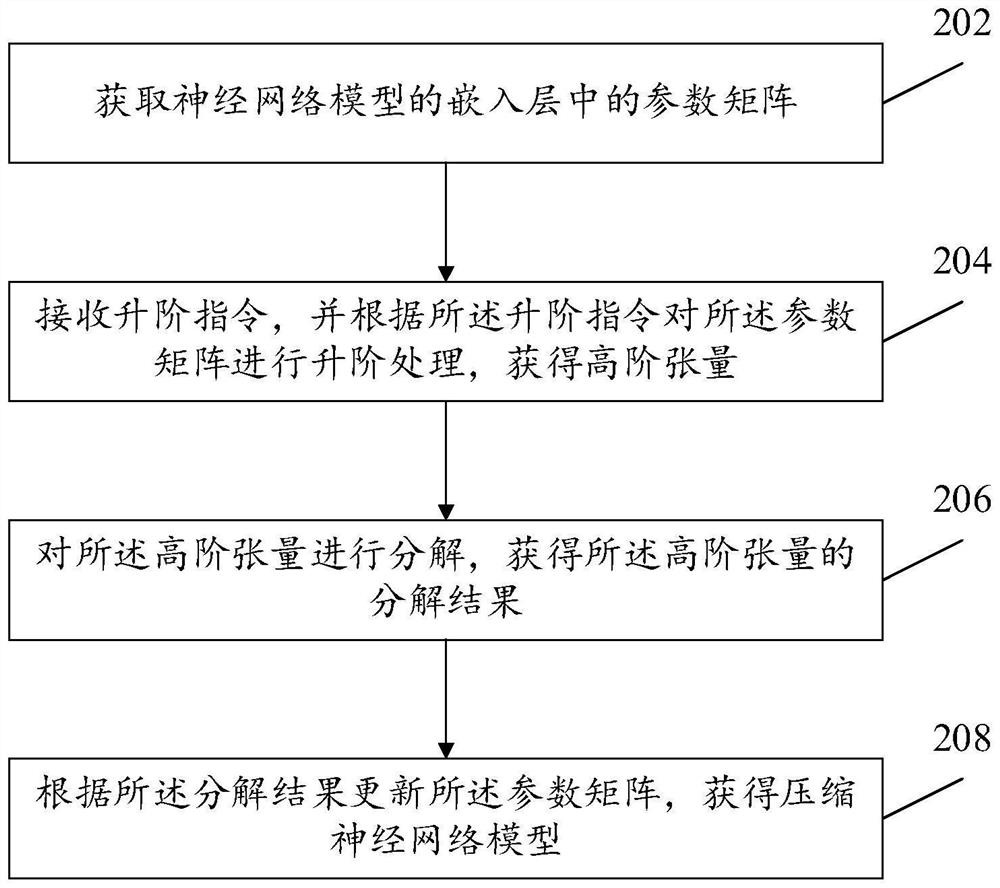

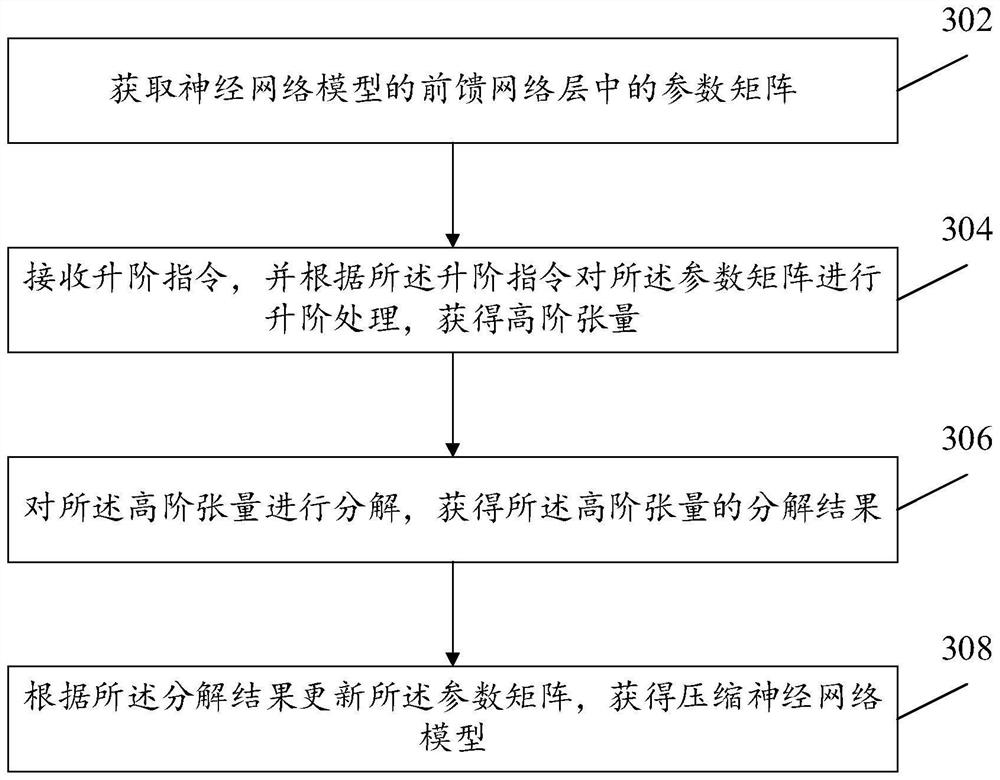

A compression method and device for neural network models, applied in the field of neural network model compression. It addresses the large parameter count, redundancy, and large size of translation models, and achieves the effect of reducing model size and parameter count.

Embodiment Construction

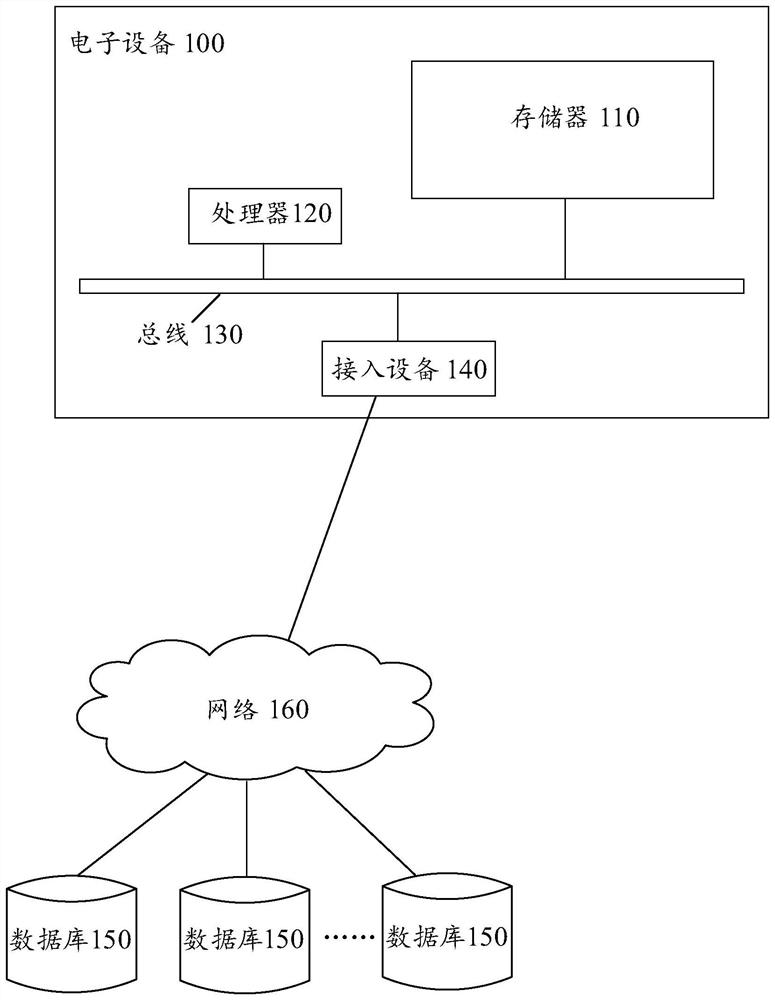

[0095] In the following description, numerous specific details are set forth in order to provide a thorough understanding of the present application. However, the present application can be implemented in many ways other than those described here, and those skilled in the art can make similar generalizations without departing from the spirit of the present application. Therefore, the present application is not limited by the specific implementations disclosed below.

[0096] Terms used in one or more embodiments of the present application are for the purpose of describing specific embodiments only, and are not intended to limit the one or more embodiments of the present application. As used in one or more embodiments of this application and the appended claims, the singular forms "a", "an", and "the" are also intended to include the plural forms unless the context clearly dictates otherwise. It should also be understood that the term "and/or" used in one or more embodiments of th...
