Systems and methods for two-dimensional fluorescence wave propagation onto surfaces using deep learning

A fluorescence and propagation-matrix technology, applied in fields such as neural learning methods, fluorescence/phosphorescence, and scientific instruments, that addresses problems such as aberrations, reduced photon efficiency of fluorescent signals, and increased cost and complexity of optical setups.

Embodiment Construction

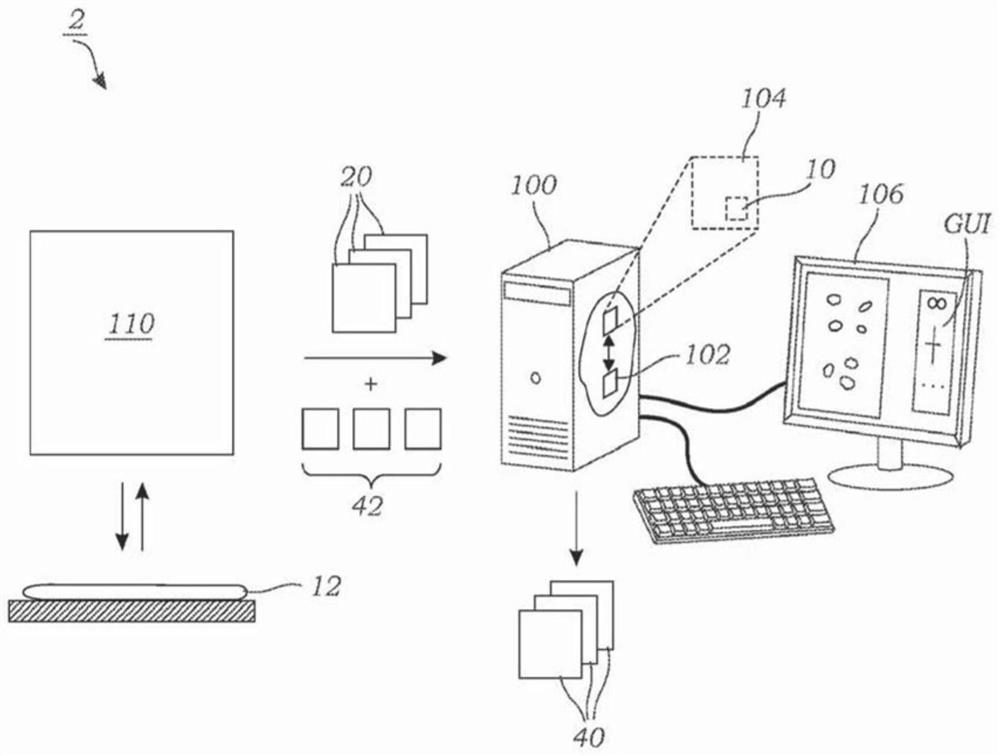

[0066] Figure 1 shows one embodiment of system 2, which uses trained deep neural network 10 to generate one or more fluorescence output images 40 of sample 12 (or objects in sample 12) that are digitally propagated to one or more user-defined or automatically generated surfaces. System 2 includes computing device 100, which includes one or more processors 102 and image processing software 104 that incorporates trained deep neural network 10. As explained herein, computing device 100 may include a personal computer, laptop computer, tablet PC, remote server, application-specific integrated circuit (ASIC), etc., although other computing devices (e.g., devices including one or more graphics processing units (GPUs)) may be used.
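The data flow described above can be illustrated with a minimal Python sketch. It assumes, for illustration only, that each target surface is encoded as a per-pixel map of axial distances (in arbitrary units) with the same shape as the input image, and that the trained network accepts the image and that map together. The names `uniform_surface`, `tilted_surface`, `make_network_input`, and `propagate` are hypothetical, and the `network` argument is a stand-in callable, not the trained deep neural network 10 itself.

```python
from typing import Callable, List

Image = List[List[float]]  # row-major 2D grid of pixel values


def uniform_surface(rows: int, cols: int, z: float) -> Image:
    """Flat target surface: every pixel maps to the same axial distance z."""
    return [[z for _ in range(cols)] for _ in range(rows)]


def tilted_surface(rows: int, cols: int, z0: float, slope_x: float) -> Image:
    """Tilted target plane: axial distance grows linearly with the column index."""
    return [[z0 + slope_x * c for c in range(cols)] for _ in range(rows)]


def make_network_input(image: Image, surface: Image) -> List[Image]:
    """Pair the input image with its target surface (assumed two-channel input)."""
    same_rows = len(image) == len(surface)
    same_cols = all(len(a) == len(b) for a, b in zip(image, surface))
    if not (same_rows and same_cols):
        raise ValueError("image and surface must have the same shape")
    return [image, surface]


def propagate(
    network: Callable[[List[Image]], Image],
    image: Image,
    surfaces: List[Image],
) -> List[Image]:
    """Call the (hypothetical) network once per requested surface."""
    return [network(make_network_input(image, s)) for s in surfaces]
```

With a stand-in such as `lambda channels: channels[0]` passed as `network`, `propagate` returns one output image per surface, mirroring the "one or more output images 40" for "one or more surfaces" in the description. A real deployment would substitute the trained model's inference call.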

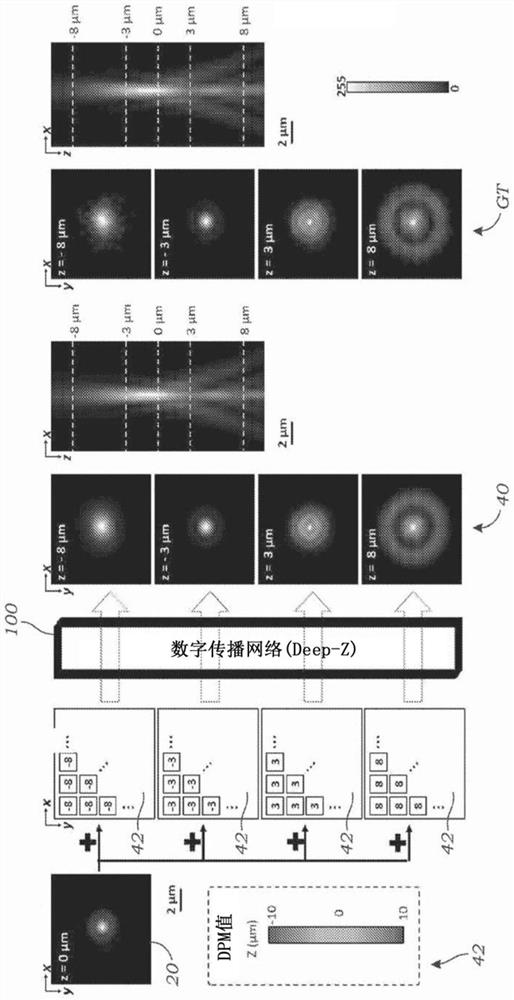

[0067] In some embodiments, a series or time series of output images 40 is generated, e.g., a time-lapse video clip or movie of sample 12 or objects therein. The trained deep neural network 10 receives one or more fluorescence microscope input images 20 of t...
