Real-time image semantic segmentation method based on lightweight convolutional neural network

The method applies convolutional neural network and semantic segmentation technology to real-time image semantic segmentation. It addresses the low inference efficiency that hinders practical application, and it reduces model parameters, enhances discrimination ability, and meets real-time processing requirements.

Embodiment

[0046] A real-time image semantic segmentation method based on a lightweight convolutional neural network, comprising the following steps:

[0047] S1. Construct a lightweight convolutional neural network, including the following steps:

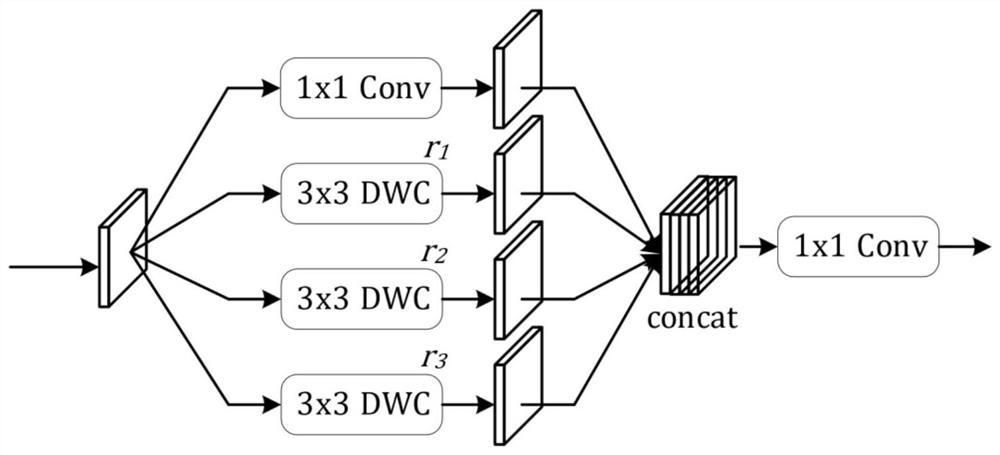

[0048] S1.1. Build a multi-scale processing unit for obtaining multi-scale features of pixels;

[0049] As shown in Figure 1, the multi-scale processing unit includes 4 parallel convolutional branches: one standard 1×1 convolution and 3 dilated convolutions with dilation rates {r_1, r_2, r_3}. The dilated convolutions are also depth-wise convolutions. The unit concatenates the outputs of the 4 parallel branches along the channel dimension and maps the result through a standard 1×1 convolution to obtain its output. The multi-scale processing unit therefore has a total depth of 2 convolutional layers.
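To make the parameter saving of the unit in [0049] concrete, the following is a minimal sketch that counts weights and computes the padding each dilated branch would need. It is not the patent's implementation. Several values are assumptions because the text does not state them: the dilated kernels are taken to be 3×3, the 1×1 branch is taken to keep the input channel count, biases and normalization layers are ignored, and the dilation rates (1, 2, 4) and all function names are hypothetical.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span of a dilated kernel along one axis: k + (k - 1) * (r - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def same_padding(kernel_size: int, dilation: int) -> int:
    """Padding per side that preserves spatial size at stride 1 (odd kernels)."""
    return (effective_kernel_size(kernel_size, dilation) - 1) // 2


def multi_scale_unit_params(c_in: int, c_out: int,
                            kernel_size: int = 3, n_dilated: int = 3) -> int:
    """Weight count of the unit under the assumptions stated above."""
    # Branch 1: standard 1x1 convolution, assumed to map c_in -> c_in channels.
    pointwise_branch = c_in * c_in
    # Branches 2..4: depth-wise dilated convolutions, one k x k filter per channel.
    # The dilation rate spreads the taps apart but adds no weights.
    depthwise_branches = n_dilated * kernel_size ** 2 * c_in
    # Concatenating the 4 branches gives (1 + n_dilated) * c_in channels,
    # which the final standard 1x1 convolution maps to c_out.
    fusion = (1 + n_dilated) * c_in * c_out
    return pointwise_branch + depthwise_branches + fusion


def standard_conv_params(c_in: int, c_out: int, kernel_size: int = 3) -> int:
    """Weight count of a standard k x k convolution, for comparison."""
    return kernel_size ** 2 * c_in * c_out


if __name__ == "__main__":
    rates = (1, 2, 4)  # hypothetical values for r_1, r_2, r_3
    for r in rates:
        print(f"rate {r}: span {effective_kernel_size(3, r)}, "
              f"padding {same_padding(3, r)}")
    print("multi-scale unit:", multi_scale_unit_params(64, 64))
    print("standard 3x3:    ", standard_conv_params(64, 64))
```

Under these assumptions, with 64 input and 64 output channels, the unit has 22,208 weights against 36,864 for a standard 3×3 convolution, while its largest branch spans a 9×9 neighbourhood. The exact ratio depends on the channel widths and kernel size actually used in the patent's figures.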

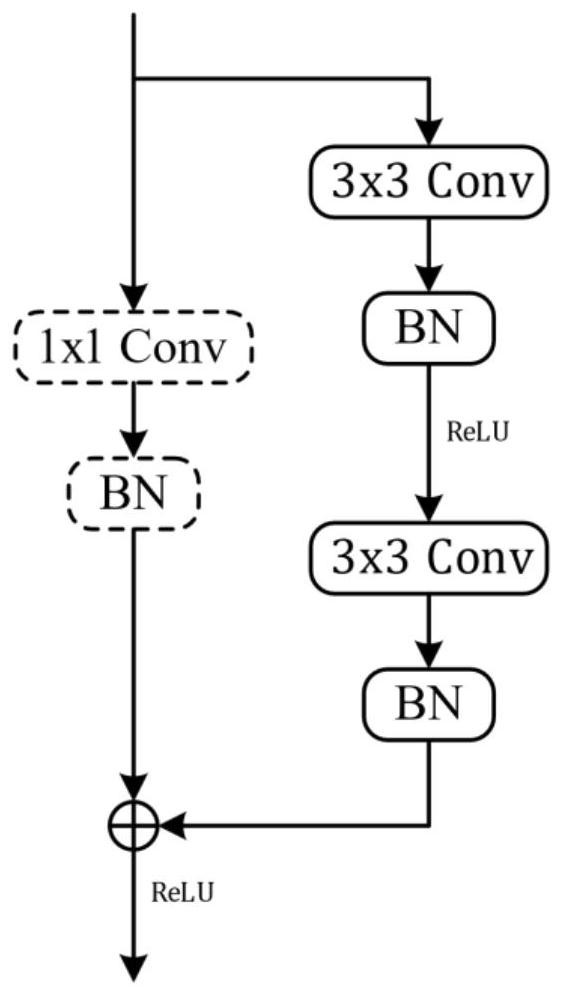

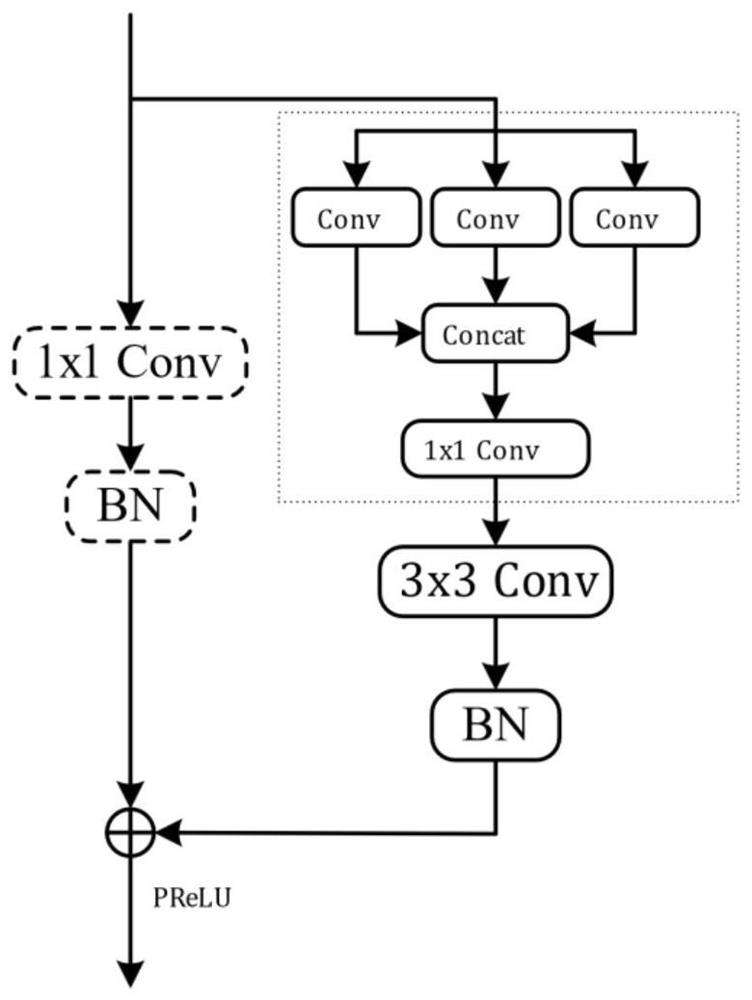

[0050] S1.2. Use the built multi-scale processing unit to replace the first standard 3×3 convolution of the b...
