Security defense method for data manipulation attacks in federated learning

A security defense technology for data manipulation attacks, applied in the fields of neural network learning methods, machine learning, and digital data protection. It addresses problems such as attack methods that are difficult to detect and trace, hidden security risks, and a lack of defenses against such threats. It achieves low computational overhead while securing both the training data and the neural network model, giving it broad application prospects and research value.


Embodiment Construction

[0042] The present invention will be further explained below in conjunction with the accompanying drawings and specific embodiments.

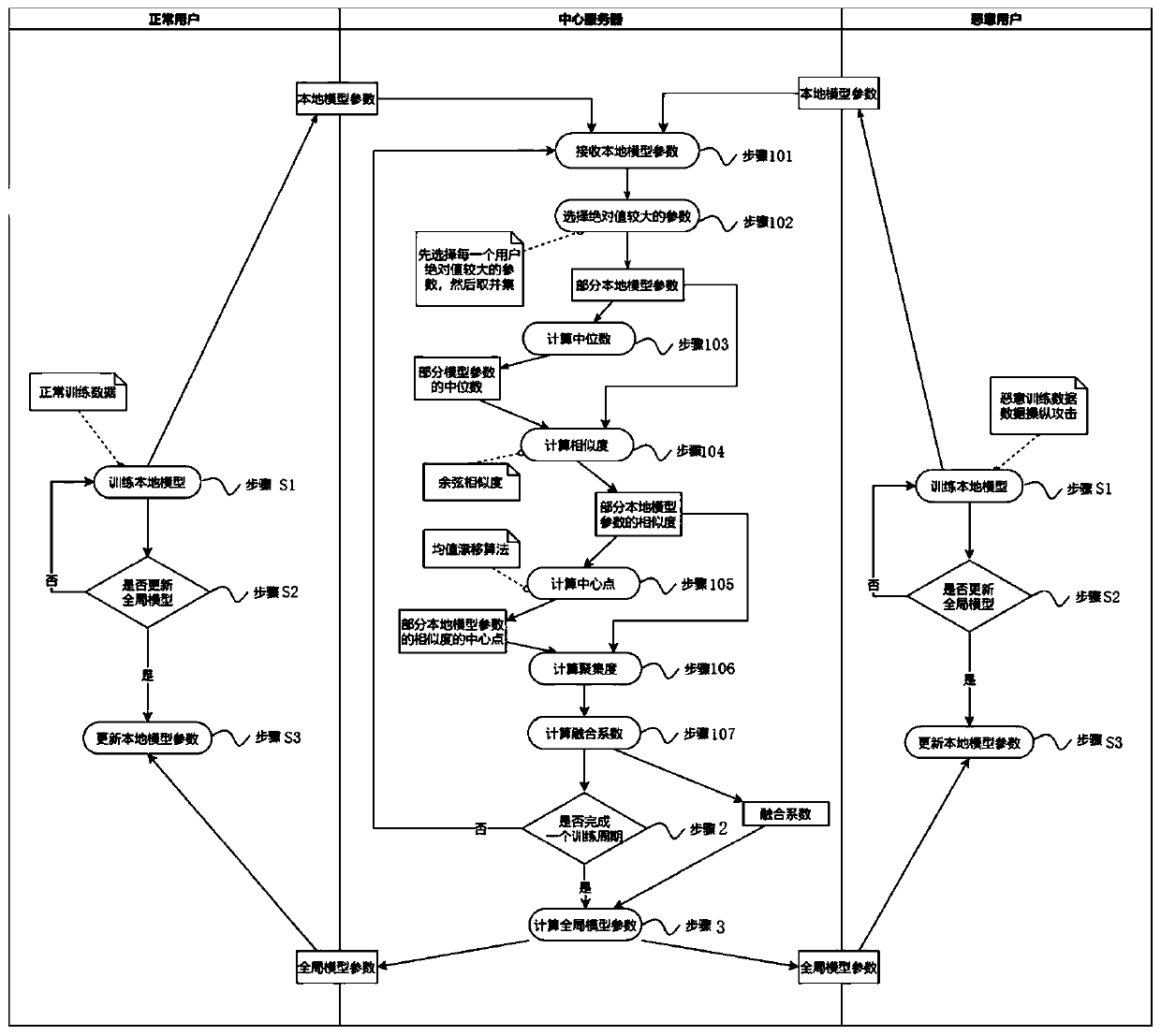

[0043] This embodiment of the invention discloses a method for implementing a secure training framework that defends against data manipulation attacks in federated learning.

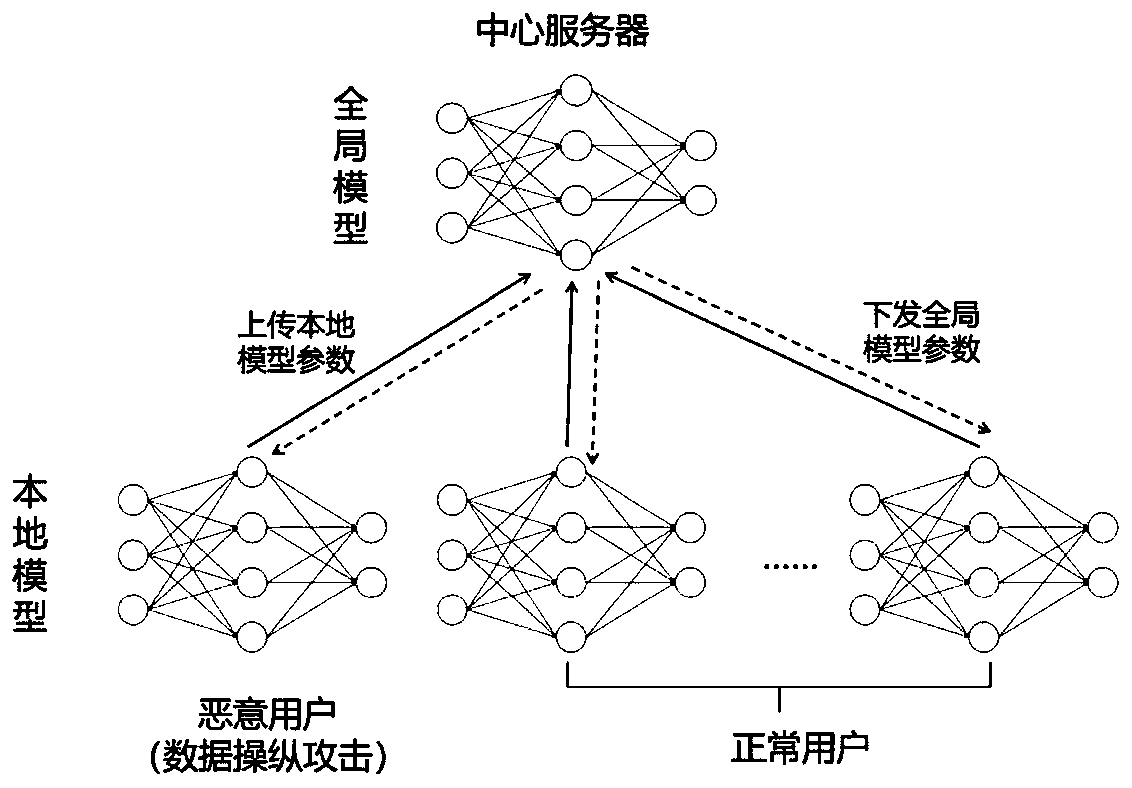

[0044] The federated learning data manipulation attack scenario provided by this embodiment of the present invention is shown in Figure 1. It involves three kinds of participants: several normal users, one malicious user, and a central server. Together they perform federated learning to complete a specified image classification task. Each normal user holds normal training data and uses it to train a normal local model. The malicious user holds some normal training data plus some malicious training data crafted for the data manipulation attack, and uses both to train a malicious local model. Each user's training data is private and is not disclosed to other parties. ...
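The threat setting in [0044] can be simulated in a few lines. The text does not say how the malicious training data is produced or how the central server aggregates local models, so this is only a minimal sketch under stated assumptions: the data manipulation is modeled as label flipping, aggregation is plain federated averaging (FedAvg), and a toy 2-D logistic-regression task stands in for the image classifier. All function names (`make_data`, `flip_labels`, `local_train`, `fed_avg`, `train`, `accuracy`) are hypothetical. The sketch reproduces only the attack scenario, not the patented defense.

```python
import math
import random
from typing import List, Tuple

# A sample is (feature vector, binary label). A toy 2-D task stands in for
# the image classification task (assumption, for brevity).
Sample = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def make_data(n: int, rng: random.Random) -> List[Sample]:
    """Hypothetical normal data: label is 1 when x0 + x1 > 0; last feature is a bias term."""
    data = []
    for _ in range(n):
        x0, x1 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(([x0, x1, 1.0], 1 if x0 + x1 > 0 else 0))
    return data


def flip_labels(data: List[Sample], fraction: float, rng: random.Random) -> List[Sample]:
    """Assumed data manipulation: flip the labels of a random fraction of samples."""
    chosen = set(rng.sample(range(len(data)), int(len(data) * fraction)))
    return [(x, 1 - y) if i in chosen else (x, y) for i, (x, y) in enumerate(data)]


def local_train(w: List[float], data: List[Sample], lr: float = 0.1, epochs: int = 5) -> List[float]:
    """User side: plain SGD on logistic loss, starting from the current global model."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def fed_avg(models: List[List[float]], sizes: List[int]) -> List[float]:
    """Server side (assumed FedAvg): average local models weighted by local dataset size."""
    total = sum(sizes)
    return [sum(m[d] * s for m, s in zip(models, sizes)) / total for d in range(len(models[0]))]


def train(clients: List[List[Sample]], rounds: int = 10) -> List[float]:
    """Run several federated rounds: broadcast, local training, aggregation."""
    w = [0.0, 0.0, 0.0]
    for _ in range(rounds):
        local_models = [local_train(w, data) for data in clients]
        w = fed_avg(local_models, [len(data) for data in clients])
    return w


def accuracy(w: List[float], data: List[Sample]) -> float:
    """Fraction of samples whose predicted class matches the label."""
    hits = sum((sum(wi * xi for wi, xi in zip(w, x)) > 0) == (y == 1) for x, y in data)
    return hits / len(data)


if __name__ == "__main__":
    rng = random.Random(0)
    normal_users = [make_data(50, rng) for _ in range(3)]
    # The malicious user mixes normal data with manipulated (label-flipped) data.
    malicious_user = make_data(25, rng) + flip_labels(make_data(25, rng), 1.0, rng)
    test_set = make_data(200, rng)
    print("normal users only:", accuracy(train(normal_users), test_set))
    print("with attacker    :", accuracy(train(normal_users + [malicious_user]), test_set))
```

Because the malicious user's local model is averaged in with the same weight rule as everyone else's, plain FedAvg has no way to tell it apart; that gap is what a defense framework of the kind described here would need to close.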
