Deep neural network multi-path inference acceleration method for edge intelligence applications

A deep neural network multi-path technique, applied in the field of deep learning model inference optimization and acceleration. It addresses problems such as failure to meet low-latency requirements, computing-resource load being left out of consideration, and loss of model inference accuracy, so as to reduce the impact of network load, adapt to real-time performance requirements, and avoid redundant calculations.

Examples

Embodiment 1

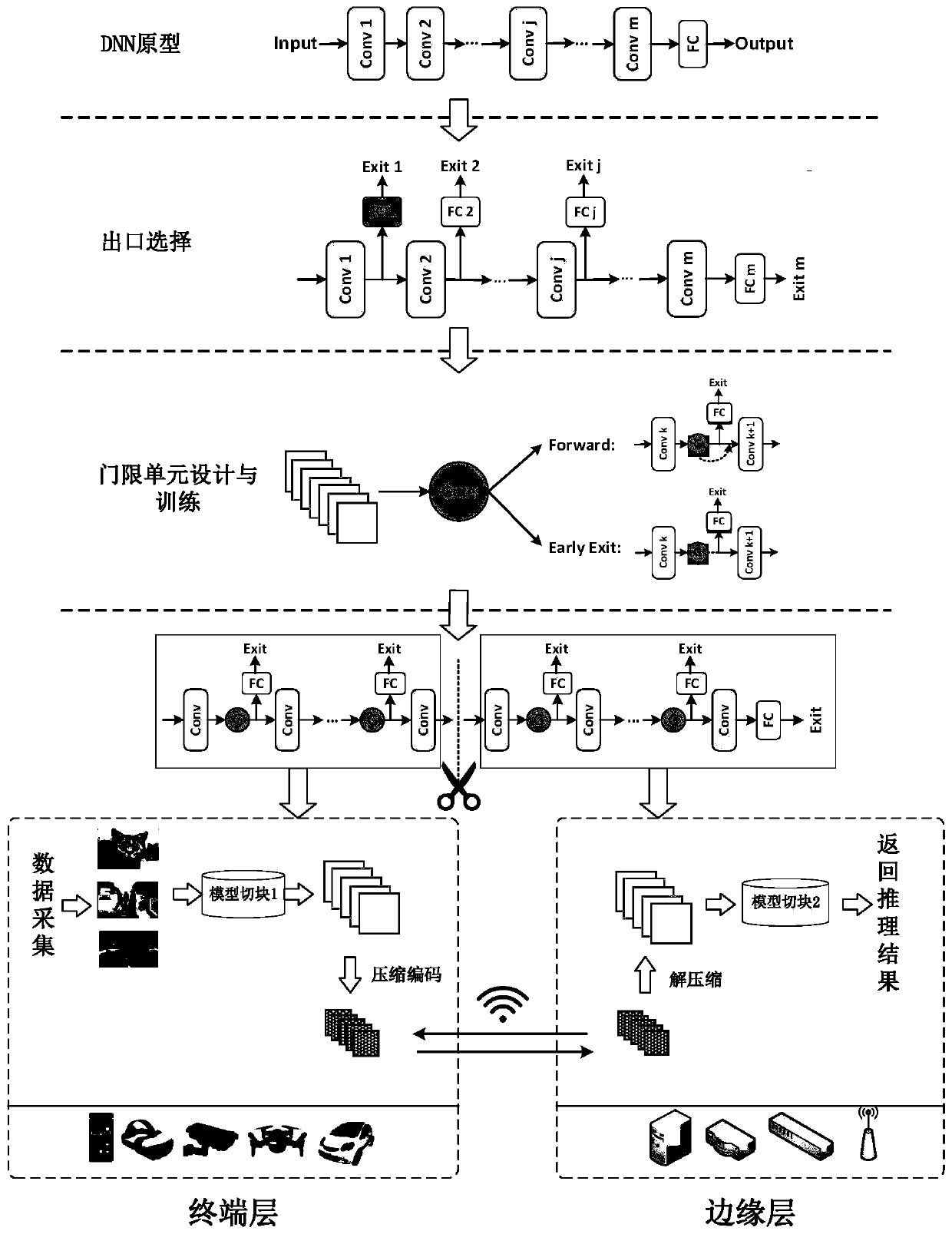

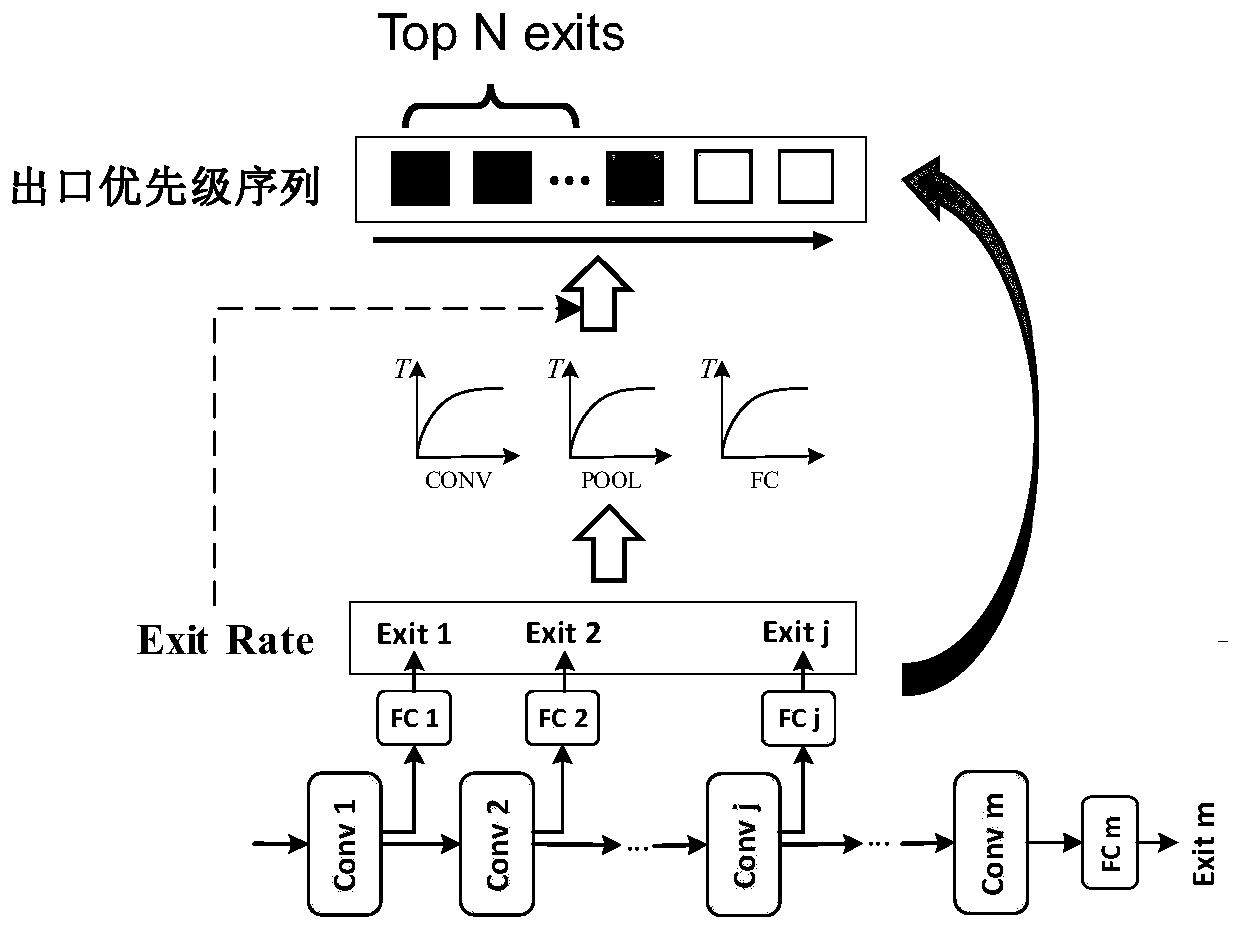

[0086] Embodiment 1: As shown in Figure 2, the exit selection step analyzes, on the basis of the deep neural network prototype, the inference benefit of adding an exit after each convolutional layer. Convolutional layers are the key feature-extraction layers in a deep neural network and account for a large share of the computation. To calculate the inference benefit of each layer's exit, the model backbone and the classifier (fully connected layer) of each layer's exit are trained on the existing training data set with the same training intensity. To reduce coupling between the exits and the model backbone, each exit classifier is trained with a separate loss function. Cross-validation then yields the accuracy achievable at each layer's exit. In general, accuracy increases with the depth of the exit, and the amount of calculation will increase at the...
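The excerpt is cut off before it defines how the inference benefit is computed, so the following is only a minimal sketch under a stated assumption: the benefit of a candidate exit is taken to be the accuracy gained over the previous candidate divided by the extra computation needed to reach it. The function names `exit_benefits` and `select_exits`, and the `num_exits` parameter, are hypothetical and do not come from the patent.

```python
def exit_benefits(accuracy, cost):
    """Score each candidate exit (one per convolutional layer).

    accuracy[i]: cross-validated accuracy of the exit after layer i.
    cost[i]: cumulative computation needed to reach that exit;
             must be strictly increasing with depth.
    ASSUMPTION: benefit = marginal accuracy gain / marginal computation.
    The source text is truncated before it states its own definition.
    """
    benefits = []
    prev_acc, prev_cost = 0.0, 0.0
    for acc, c in zip(accuracy, cost):
        benefits.append((acc - prev_acc) / (c - prev_cost))
        prev_acc, prev_cost = acc, c
    return benefits


def select_exits(accuracy, cost, num_exits):
    """Return the layer indices of the num_exits highest-benefit exits."""
    benefits = exit_benefits(accuracy, cost)
    ranked = sorted(range(len(benefits)),
                    key=lambda i: benefits[i], reverse=True)
    return sorted(ranked[:num_exits])


# Toy numbers: four candidate exits, cost as a fraction of the full model.
accuracy = [0.5, 0.75, 0.8, 0.9]
cost = [0.25, 0.5, 0.75, 1.0]
chosen = select_exits(accuracy, cost, num_exits=2)
```

With these toy numbers the two shallowest exits are chosen, because the deeper ones add little accuracy for the same extra computation.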

Embodiment 2

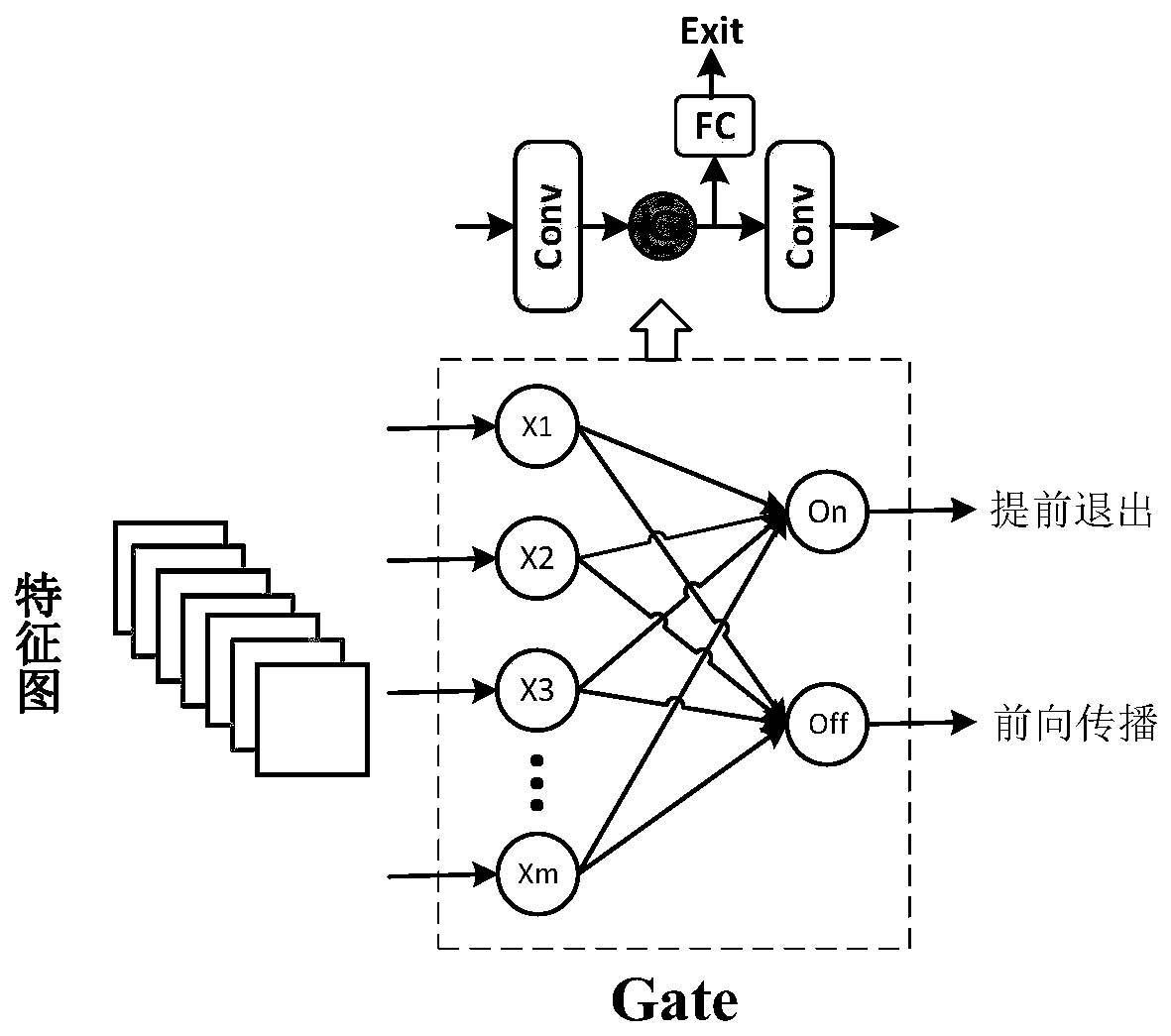

[0096] Embodiment 2: As shown in Figure 3, based on the multi-path model constructed by the above algorithm, the present invention places a threshold unit between the model backbone and each exit classifier to make multi-path inference decisions, thereby eliminating a large amount of redundant computation in the DNN and shortening the inference delay. Training the threshold unit requires building a training set for it, which involves the following three steps:

[0097] Step 1: The original training set is input into the multi-path model, and the classification results are obtained through each inference path.

[0098] Step 2: For each sample, extract the intermediate feature map x generated just before the exit on each path; these feature maps form the training set Gate_Dateset of the gate (threshold) unit.

[0099] Step 3: The label is initialized as an all-zero sequence R. According to the classification results, if the index of the shortest path that classifies the sample correctly is k, that is, ...
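The three steps above can be sketched roughly as follows. The excerpt is truncated before it says how R is updated once k is known, so the sketch assumes that position k of R is set to 1 and every other position stays 0. The function `build_gate_dataset` and the representation of each path as a callable returning `(feature_map, predicted_label)` are hypothetical choices made for illustration.

```python
def build_gate_dataset(samples, paths):
    """Build (features, label) pairs for training the gate unit.

    samples: list of (input, true_label) pairs from the original training set.
    paths: inference paths ordered from shortest to longest; each is a
           callable returning (intermediate_feature_map, predicted_label).
    """
    dataset = []
    for x, y in samples:
        # Step 1: run the sample through every inference path.
        outputs = [path(x) for path in paths]
        # Step 2: keep the feature map produced just before each exit.
        features = [feature for feature, _ in outputs]
        # Step 3: label starts as an all-zero sequence R.
        label = [0] * len(paths)
        for k, (_, predicted) in enumerate(outputs):
            if predicted == y:
                # ASSUMPTION: mark the shortest correct path k with a 1.
                # The source is cut off before stating this rule.
                label[k] = 1
                break
        dataset.append((features, label))
    return dataset


# Toy stand-ins for two inference paths (not real networks).
short_path = lambda x: ([x], x % 2)
long_path = lambda x: ([x, x], x % 3)
gate_dataset = build_gate_dataset([(4, 0), (5, 2), (7, 5)],
                                  [short_path, long_path])
```

A sample that no path classifies correctly keeps the all-zero label under this assumption; how the patent treats that case is not visible in the excerpt.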

Embodiment 3

[0109] Embodiment 3: As shown in Figure 4, the intermediate feature data is compressed and encoded before being transmitted to the edge layer, which reduces the transmission overhead. For data-intensive applications, this step can effectively ease the constraint that the transmission stage places on the overall computation when the network load is heavy. Whether lossless or lossy compression is used depends on the sparsity ratio of the feature maps of each layer.

[0110] The present invention uses lossless compression for feature maps with relatively high sparsity and lossy compression for feature maps with relatively low sparsity. Calculating the information entropy of each layer's feature map gives a direct indication of how the sparsity ratio changes from layer to layer. An entropy threshold is set, and the feature maps of each layer are divided into two categories: the feature maps whose entropy is lower than or equal to the threshold are clas...
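As an illustration of the entropy test, the sketch below quantizes a flattened feature map into a fixed number of bins, computes its Shannon entropy, and compares it with a threshold. The excerpt is truncated before naming the two categories, so mapping "entropy at or below the threshold" to lossless compression is an assumption, based on the observation that sparse maps (mostly zeros) have low entropy. The names `feature_map_entropy` and `choose_compression`, the 256-level quantization, and the threshold value are all hypothetical.

```python
import math
from collections import Counter


def feature_map_entropy(values, levels=256):
    """Shannon entropy (bits) of a flattened feature map after quantization.

    values: non-empty list of floats.
    levels: number of quantization bins (an illustrative choice).
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant map carries no information
    span = hi - lo
    bins = Counter(
        min(int((v - lo) / span * levels), levels - 1) for v in values
    )
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in bins.values())


def choose_compression(values, threshold):
    """ASSUMPTION: low entropy (sparse map) -> lossless, otherwise lossy."""
    if feature_map_entropy(values) <= threshold:
        return "lossless"
    return "lossy"


sparse_map = [0.0] * 15 + [1.0]            # mostly zeros: low entropy
dense_map = [float(i) for i in range(16)]  # all values distinct: high entropy
decisions = (choose_compression(sparse_map, threshold=1.0),
             choose_compression(dense_map, threshold=1.0))
```

In practice the threshold would have to be tuned per model and per layer; the value 1.0 here is only for the toy data.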
