Data buffering system with load balancing function

A data caching and load balancing technology, applied in digital data processing, special-purpose data processing applications, and memory address allocation/relocation. It addresses problems such as unquantifiable program execution efficiency and wasted memory space, and has the effects of improving memory addressing speed, reducing the number of I/O operations, and achieving dynamic load balance.


Detailed Description of Embodiments

[0034] Specific embodiments of the present invention are described below with reference to the accompanying drawings.

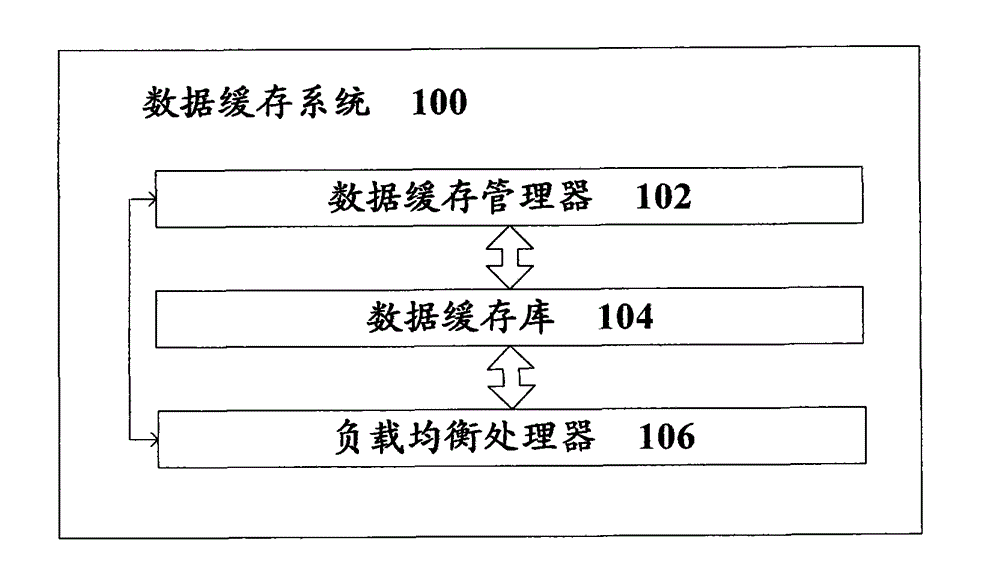

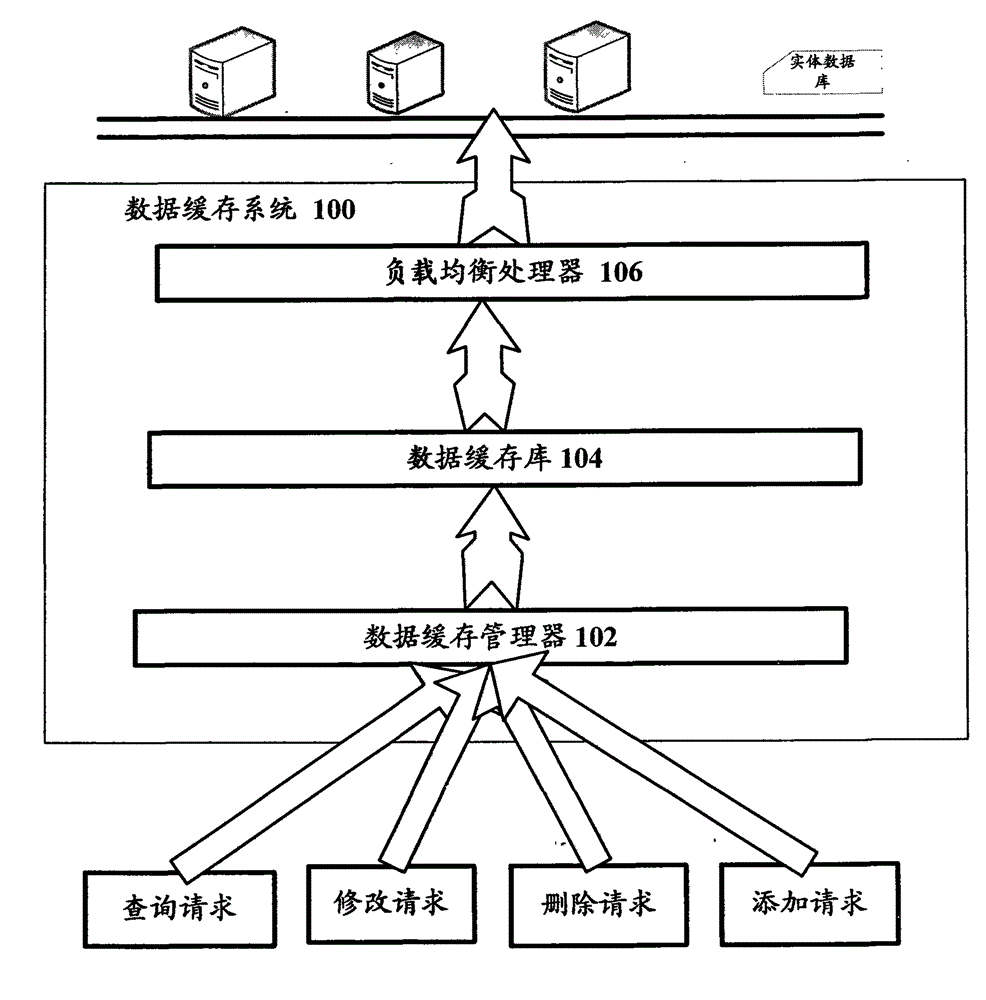

[0035] Figure 1 shows a logical block diagram of the data caching system according to the present invention.

[0036] The data cache system 100 with a load balancing function according to the present invention includes a data cache manager 102, a data cache library 104, and a load balancing processor 106. The data cache manager 102 responds to an external data acquisition request by sending a data acquisition instruction to the data cache library 104 and judging whether the data cache library 104 stores the corresponding data. If it does, the data cache manager 102 retrieves the corresponding data from the data cache library 104; if it does not, the data cache manager 102 sends a request to the load balancing processor 106 to obtain a database server. In response to the data storag...
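The read path described in [0036] can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation. All class and method names are hypothetical. The load balancing processor is assumed to pick the database server that has been dispatched the fewest requests so far, because the source does not specify a selection policy. Writing fetched data back into the cache library is also an assumption, since the paragraph describing data storage is truncated.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DatabaseServer:
    """Hypothetical backend database server holding key/value records."""
    name: str
    records: Dict[str, str] = field(default_factory=dict)
    served: int = 0  # assumed load metric: requests dispatched to this server

    def fetch(self, key: str) -> Optional[str]:
        self.served += 1
        return self.records.get(key)


class DataCacheLibrary:
    """Stands in for data cache library 104: an in-memory key/value store."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def contains(self, key: str) -> bool:
        return key in self._store

    def get(self, key: str) -> str:
        return self._store[key]

    def put(self, key: str, value: str) -> None:
        self._store[key] = value


class LoadBalancingProcessor:
    """Stands in for load balancing processor 106.

    Assumption: selects the server with the fewest dispatched requests;
    ties go to the earliest server in the list.
    """

    def __init__(self, servers: List[DatabaseServer]) -> None:
        self.servers = list(servers)

    def select_server(self) -> DatabaseServer:
        return min(self.servers, key=lambda s: s.served)


class DataCacheManager:
    """Stands in for data cache manager 102."""

    def __init__(self, cache: DataCacheLibrary, balancer: LoadBalancingProcessor) -> None:
        self.cache = cache
        self.balancer = balancer

    def handle_request(self, key: str) -> Optional[str]:
        # Cache hit: retrieve the data directly from the cache library.
        if self.cache.contains(key):
            return self.cache.get(key)
        # Cache miss: ask the load balancing processor for a database server.
        server = self.balancer.select_server()
        value = server.fetch(key)
        # Assumption: store the fetched data so later requests hit the cache.
        if value is not None:
            self.cache.put(key, value)
        return value


servers = [DatabaseServer("db-a", {"k1": "v1"}), DatabaseServer("db-b", {"k2": "v2"})]
manager = DataCacheManager(DataCacheLibrary(), LoadBalancingProcessor(servers))
print(manager.handle_request("k1"))
```

Only cache misses reach a database server, so repeated requests for the same key are served from memory. Under the assumed policy, the first miss goes to the first server and later misses spread across servers as their request counts grow.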
