Bimodal fusion emotion recognition method based on video image and voice

An emotion recognition and video image technology, applied in the field of emotion recognition. It addresses the difficulty of acquiring physiological parameters, the difficulty of improving recognition accuracy, and the reliance on manual feature extraction. Its effects are an optimized nonlinear processing capability, real-time emotion recognition, and an increased recognition accuracy rate.

Embodiment Construction

[0014] The technical solutions in the embodiments of the present application are described below with reference to the accompanying drawings.

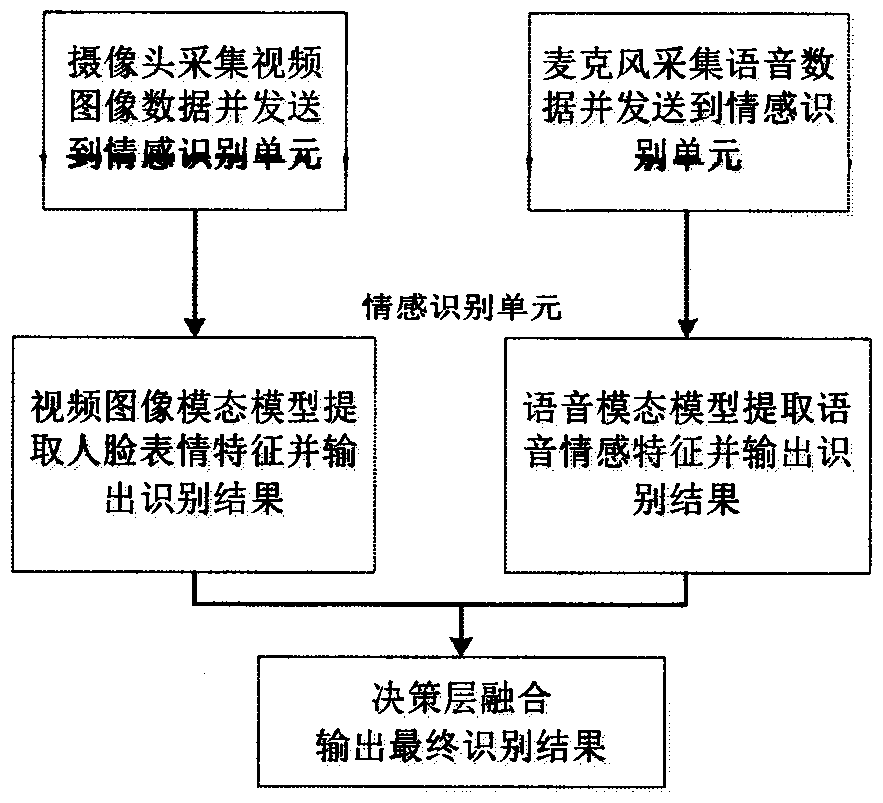

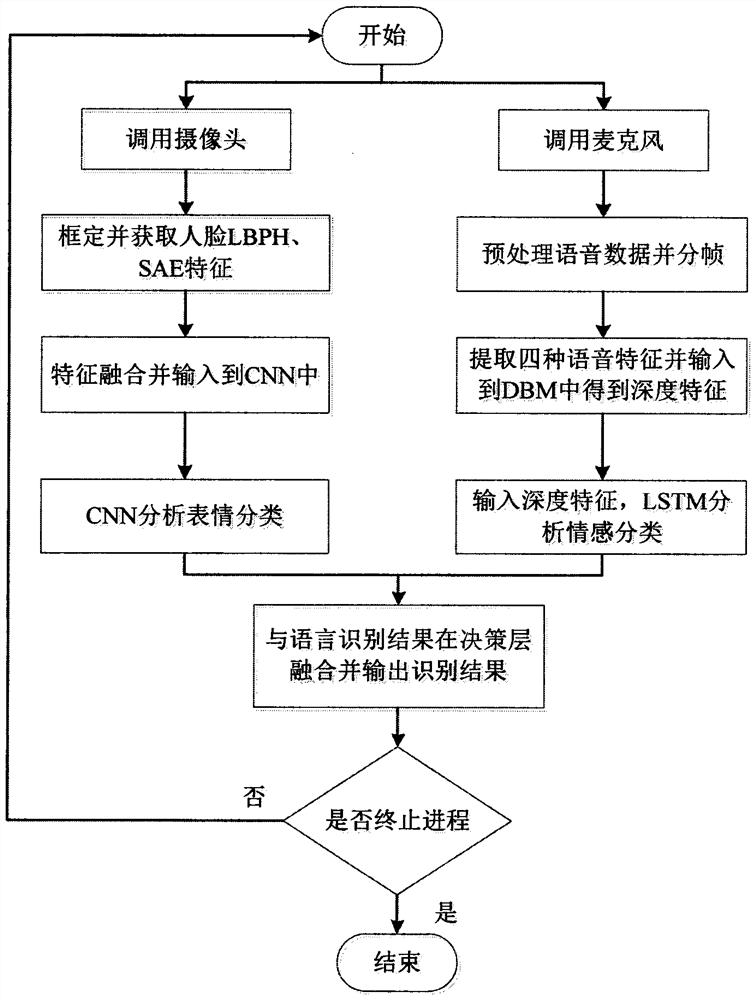

[0015] The invention provides a bimodal fusion emotion recognition method based on video images and voice. The method is as follows: the image training data set is input into an improved convolutional neural network model for training to obtain the video image modality model; the voice training data set is input into an improved long short-term memory (LSTM) neural network model for training to obtain the voice modality model; real-time video images and voice information are collected from the camera and the microphone and sent to the emotion recognition unit; the emotion recognition unit comprises a video image modality and a voice modality, which yield their recognition results separately, with recognition performed by the trained neural network models; the recognition results are...
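The per-modality recognition and fusion steps described above can be sketched as decision-level (late) fusion. This is a minimal sketch under stated assumptions, not the patented method: the four emotion labels, the helpers `softmax`, `fuse_decisions`, and `predict_emotion`, and the weighted-average fusion rule are all hypothetical, because the text is cut off before it states how the two recognition results are combined. The hard-coded scores stand in for outputs of the trained CNN (video image) and LSTM (voice) models.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical emotion label set; the excerpt does not list the patent's classes.
EMOTIONS: List[str] = ["angry", "happy", "neutral", "sad"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw model scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_decisions(
    image_probs: Sequence[float],
    voice_probs: Sequence[float],
    image_weight: float = 0.5,
) -> Dict[str, float]:
    """Decision-level fusion: weighted average of per-modality class probabilities.

    ASSUMPTION: the weighted-average rule is illustrative only; the source text
    is truncated before it describes how the two modality results are fused.
    """
    if len(image_probs) != len(EMOTIONS) or len(voice_probs) != len(EMOTIONS):
        raise ValueError("both modalities must score the same emotion classes")
    if not 0.0 <= image_weight <= 1.0:
        raise ValueError("image_weight must lie in [0, 1]")
    voice_weight = 1.0 - image_weight
    return {
        label: image_weight * p_img + voice_weight * p_voice
        for label, p_img, p_voice in zip(EMOTIONS, image_probs, voice_probs)
    }


def predict_emotion(fused: Dict[str, float]) -> str:
    """Return the emotion label with the highest fused probability."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Stand-ins for the outputs of the trained CNN (video frame) and LSTM (speech) models.
    image_probs = softmax([2.0, 0.5, 0.1, -1.0])
    voice_probs = softmax([0.3, 1.8, 0.2, -0.5])
    fused = fuse_decisions(image_probs, voice_probs, image_weight=0.6)
    print(predict_emotion(fused))
```

A weighted average lets one modality dominate when it is known to be more reliable (for example, when the audio is noisy); with `image_weight=0.0` the prediction falls back entirely to the voice model.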
