Lane line classification method and system adopting cascade network

A lane line classification technique built on a cascade network, applied in the field of computer vision and lane line detection. It targets the problems of poor real-time performance, long processing time, and high memory use.

Examples

Embodiment 1

[0048] A method for classifying lane lines using a cascaded network, the method comprising:

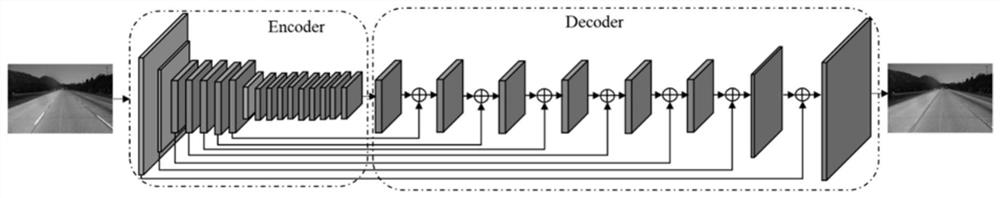

[0049] Step S1: Preprocess the ERFNet network to obtain the UERFNet network, train the UERFNet network, and use the trained UERFNet network as the lane line positioning network. ERFNet is selected as the baseline network. It has 23 layers: layers 1-16 form the encoder and layers 17-23 form the decoder. To bring the extracted features back to the size of the original image, the decoder stage of ERFNet applies upsampling directly. Preprocessing the ERFNet network includes:

[0050] The output of the 16th layer of the ERFNet network is upsampled and the result is input to the 17th layer; the output of the first layer is input to the 23rd layer; and the output of the second layer of the ERFNet network is input ...
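The routing described in [0050], where a deep feature map is upsampled and early-layer outputs are fed forward to late decoder layers, resembles a U-Net-style skip connection. The following is a minimal sketch of that idea only, not the patent's implementation. The function names `upsample_nearest` and `fuse_skip` are hypothetical, nearest-neighbour upsampling is an assumption, and so is fusion by element-wise addition (the source does not say how the forwarded outputs are combined; concatenation is an equally plausible reading).

```python
def upsample_nearest(feature_map, factor=2):
    """Nearest-neighbour upsampling of a 2-D feature map (list of rows)."""
    upsampled = []
    for row in feature_map:
        # Repeat every value `factor` times along the width.
        wide_row = [value for value in row for _ in range(factor)]
        # Repeat the widened row `factor` times along the height (copied rows).
        upsampled.extend(list(wide_row) for _ in range(factor))
    return upsampled


def fuse_skip(decoder_input, encoder_output):
    """Fuse a skip connection by element-wise addition (an assumption)."""
    if len(decoder_input) != len(encoder_output) or any(
        len(a) != len(b) for a, b in zip(decoder_input, encoder_output)
    ):
        raise ValueError("skip connection requires matching spatial sizes")
    return [
        [a + b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(decoder_input, encoder_output)
    ]


# Toy stand-ins: a low-resolution deep map and a high-resolution early map.
deep = [[1, 2], [3, 4]]
shallow = [[10, 10, 10, 10] for _ in range(4)]
fused = fuse_skip(upsample_nearest(deep), shallow)
```

In a real network these would be multi-channel tensors, and the upsampling would typically be a learned or interpolated layer; the toy lists here only show how the spatial sizes must line up before an early-layer output can be merged into a late decoder layer.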

Embodiment 2

[0080] Corresponding to Embodiment 1, Embodiment 2 of the present invention also provides a lane line classification system using a cascade network, and the system includes:

[0081] The lane line positioning network construction module is used to preprocess the ERFNet network to obtain the UERFNet network, train the UERFNet network, and use the trained UERFNet network as the lane line positioning network;

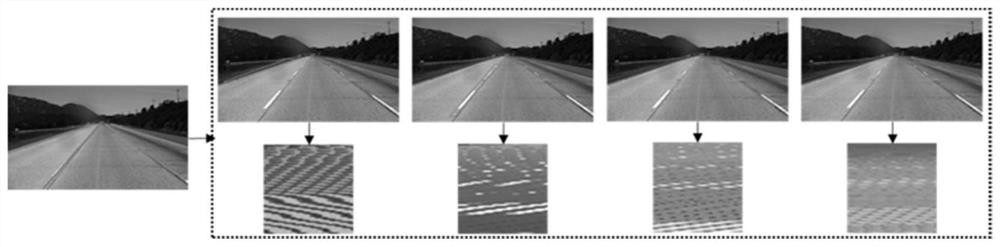

[0082] The position point acquisition module is used to input the image of the lane lines to be classified into the lane line positioning network to obtain the position points of each lane line;

[0083] The feature extraction module is used to extract, from the original image, the pixel values corresponding to the position points of each lane line to form a feature map for each lane line;

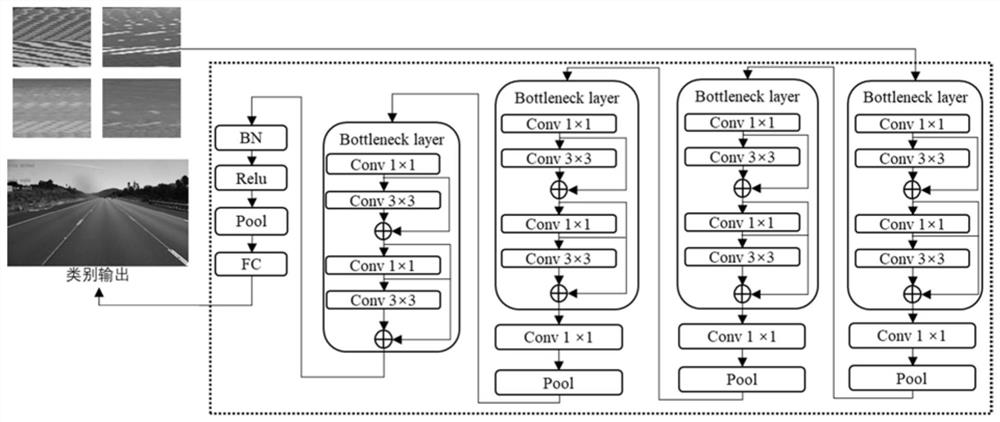

[0084] The lane classification network construction module is used to cascade multiple bottleneck layers and fully connected layers to build a lane classification network;

[0085] The lane category acq...
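The feature extraction step in [0083] can be illustrated with a short sketch. It assumes the positioning network returns, for each lane line, a list of `(row, col)` position points, and that the image is indexable as `image[row][col]`. The function name `extract_lane_features`, the dictionary layout, and the policy of skipping out-of-bounds points are all hypothetical choices made for illustration; the source does not specify them.

```python
def extract_lane_features(image, lane_points):
    """Collect the original-image pixel values at each lane's position points.

    image: 2-D list of pixel values indexed as image[row][col].
    lane_points: dict mapping a lane id to a list of (row, col) points.
    Returns a dict mapping each lane id to its list of pixel values.
    """
    height, width = len(image), len(image[0])
    features = {}
    for lane_id, points in lane_points.items():
        values = []
        for row, col in points:
            # Skip points that fall outside the image (assumed policy).
            if 0 <= row < height and 0 <= col < width:
                values.append(image[row][col])
        features[lane_id] = values
    return features


# Toy 3x3 "image" and two lanes; the last point of "right" is out of bounds.
image = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
lane_points = {
    "left": [(0, 0), (1, 0), (2, 0)],
    "right": [(0, 2), (1, 2), (5, 5)],
}
features = extract_lane_features(image, lane_points)
```

Each per-lane list of pixel values would then be arranged into the feature map that is passed to the classification network; how that arrangement is done is not shown in the text available here.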
