Method for guiding video coding by utilizing scene semantic segmentation result

A semantic segmentation and video coding technology, applied in neural learning methods, digital video signal modification, image analysis, and related fields. It addresses the problems of decreased prediction accuracy, limited coding efficiency, and high computational complexity, and it improves the compression ratio.


Embodiment Construction

[0038] As mentioned above, the present application proposes a video encoding method guided by scene semantic segmentation results. Specific embodiments of the present application are described below with reference to the accompanying drawings.

[0039] The video encoding method semantically segments video scenes and applies the segmentation results to video encoding. It comprises three steps: 1. image semantic segmentation; 2. video semantic segmentation; 3. adaptive video encoding guided by the video semantic segmentation results. Each step is described separately below.
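The three-step structure can be illustrated with a minimal Python/NumPy sketch. Everything concrete in it is hypothetical and is not the application's method: the intensity-threshold `segment_image` stands in for the segmentation network, the sliding-window majority vote in `segment_video` is one assumed way to make per-frame labels temporally consistent, and the per-class `QP_OFFSET` table used by `block_qp_map` is an illustrative assumption about how segmentation results might steer the encoder. The actual details of steps 2 and 3 are not given in this excerpt.

```python
import numpy as np

# Hypothetical per-class QP offsets (assumption, not from the source).
# Negative offset = finer quantization for semantically important regions.
QP_OFFSET = {0: 4, 1: -2, 2: 0}  # illustrative labels: 0=background, 1=object A, 2=object B


def segment_image(frame: np.ndarray) -> np.ndarray:
    """Step 1 stand-in: per-pixel class labels for one frame.
    Placeholder intensity thresholding; a real system would run the segmentation network."""
    return np.digitize(frame, bins=[85, 170])  # labels 0, 1, 2


def segment_video(frames, window: int = 3) -> np.ndarray:
    """Step 2 stand-in: per-frame labels smoothed by a per-pixel majority vote
    over a temporal window centred on each frame (assumed technique)."""
    labels = np.stack([segment_image(f) for f in frames])  # shape (T, H, W)
    n_classes = int(labels.max()) + 1
    smoothed = np.empty_like(labels)
    for t in range(len(frames)):
        lo = max(0, t - window // 2)
        hi = min(len(frames), t + window // 2 + 1)
        # Count votes for each class at every pixel within the window.
        counts = np.stack([(labels[lo:hi] == c).sum(axis=0) for c in range(n_classes)])
        smoothed[t] = counts.argmax(axis=0)  # ties resolve to the lower class index
    return smoothed


def block_qp_map(label_map: np.ndarray, base_qp: int = 32, block: int = 16) -> np.ndarray:
    """Step 3 stand-in: one QP per block, offset by the block's dominant class
    and clipped to the 0..51 QP range of H.264/AVC and HEVC."""
    h, w = label_map.shape
    rows, cols = -(-h // block), -(-w // block)  # ceiling division covers partial edge blocks
    qp = np.empty((rows, cols), dtype=int)
    for i in range(rows):
        for j in range(cols):
            blk = label_map[i * block:(i + 1) * block, j * block:(j + 1) * block]
            dominant = int(np.bincount(blk.ravel()).argmax())
            qp[i, j] = np.clip(base_qp + QP_OFFSET.get(dominant, 0), 0, 51)
    return qp


if __name__ == "__main__":
    # Middle frame "flickers" to a different class; the temporal vote corrects it.
    frames = [np.full((32, 32), v, dtype=np.uint8) for v in (100, 200, 100)]
    labels = segment_video(frames)
    print(block_qp_map(labels[1]))
```

Giving semantically important classes a lower QP (finer quantization) and background a higher QP is a common region-of-interest coding strategy; the sketch only shows where a segmentation map could plug into such a scheme.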

[0040] 1. Image Semantic Segmentation

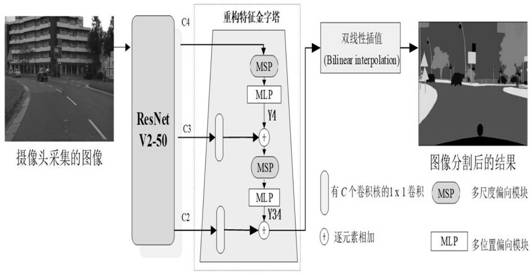

[0041] (1) Multi-scale and multi-position biased image semantic segmentation network

[0042] Figure 1 shows the overall architecture of the multi-scale, multi-position biased image semantic segmentation network model used in this application. With ResNetV2-50 as the backbone network, the semantic segmentation results of images ar...
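Because the description above is truncated, the following is only one plausible reading of "multi-scale and multi-position biased": predictions are computed at several input scales and several spatial offsets, mapped back to the original grid, and their logits averaged. In this NumPy sketch, `toy_model` is a hypothetical pointwise stand-in for the ResNetV2-50-based network, and the `scales` and `offsets` values are illustrative assumptions, not parameters from the application.

```python
import numpy as np


def resize_nearest(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize over the last two axes."""
    h, w = x.shape[-2:]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return x[..., rows[:, None], cols[None, :]]


def toy_model(img: np.ndarray, n_classes: int = 3) -> np.ndarray:
    """Hypothetical stand-in for the segmentation network: returns per-class
    logits of shape (C, H, W) that peak at evenly spaced intensity centres."""
    centers = np.linspace(0, 255, n_classes)[:, None, None]
    return -np.abs(img[None].astype(float) - centers)


def fused_logits(img, model, scales=(0.5, 1.0, 1.5), offsets=((0, 0), (0, 4), (4, 0))):
    """Average logits over every (scale, offset) pair (assumed fusion rule)."""
    h, w = img.shape
    acc, n = None, 0
    for s in scales:
        sh, sw = max(1, round(h * s)), max(1, round(w * s))
        scaled = resize_nearest(img, sh, sw)
        for dy, dx in offsets:
            # Shift the input, predict, then undo the shift on the logits.
            shifted = np.roll(scaled, (dy, dx), axis=(0, 1))
            logits = np.roll(model(shifted), (-dy, -dx), axis=(1, 2))
            logits = resize_nearest(logits, h, w)  # back to the original resolution
            acc = logits if acc is None else acc + logits
            n += 1
    return acc / n


def segment(img, model, **kwargs) -> np.ndarray:
    """Per-pixel class labels from the fused logits."""
    return fused_logits(img, model, **kwargs).argmax(axis=0)


if __name__ == "__main__":
    img = np.zeros((16, 16), dtype=np.uint8)
    img[:, 8:] = 255  # left half dark, right half bright
    print(segment(img, toy_model))
```

Averaging logits rather than hard labels lets confident predictions at one scale or offset outweigh uncertain ones at another; with a real convolutional network, the offsets also reduce sensitivity to where object boundaries fall relative to the network's stride grid.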
