Memory allocation method of neural network

A memory allocation technique for neural networks, applied in the fields of computing and artificial intelligence. It addresses problems such as unsuitability for use and the labor time required, and achieves full automation, reduced memory size, and convenient use.

Embodiment Construction

[0042] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments. It should be noted that the drawings are only used for illustration and should not be construed as limiting the patent. Meanwhile, the present invention can be implemented in various forms and should not be limited by the embodiments set forth herein. The following embodiments are provided to make the present invention easier to understand and more completely presented to those skilled in the art.

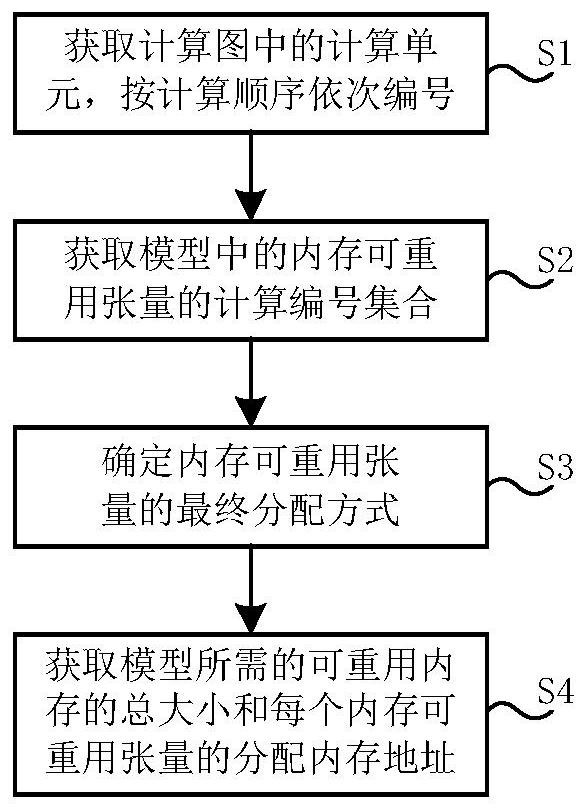

[0043] As shown in Figure 1, a neural network memory allocation method proceeds as follows:

[0044] S1. Obtain the calculation units in the calculation graph, and number each calculation unit in turn according to the calculation order; the details are as follows:

[0045] S11. Traverse the neural network calculation graph and remove each operation unit for which the in-memory data storage of the input tensor and the output tensor is ex...
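The numbering in step S1 can be illustrated with a minimal sketch. It assumes the calculation graph is given as a dictionary mapping each calculation unit to its successors, that "calculation order" means a topological order of that graph, and that numbering starts at 0; none of these details are stated in the text above. The function name `number_units` and the `skip` predicate are hypothetical. Because the removal criterion in S11 is truncated in the source, `skip` is left as a caller-supplied placeholder rather than an implementation of that criterion.

```python
from collections import deque


def number_units(successors, skip=lambda unit: False):
    """Number calculation units in a topological (calculation) order.

    successors: dict mapping a unit name to a list of successor unit names
                (an assumed representation of the calculation graph).
    skip:       hypothetical predicate; units for which it returns True are
                traversed but receive no number (placeholder for S11).
    """
    # Collect every unit, including ones that appear only as successors.
    units = list(successors)
    for succs in successors.values():
        for v in succs:
            if v not in successors and v not in units:
                units.append(v)

    # Count incoming edges for each unit.
    indegree = {u: 0 for u in units}
    for succs in successors.values():
        for v in succs:
            indegree[v] += 1

    # Kahn's algorithm: repeatedly take a unit whose inputs are all computed.
    ready = deque(u for u in units if indegree[u] == 0)
    numbering = {}
    next_id = 0  # assumption: numbering starts at 0
    visited = 0
    while ready:
        u = ready.popleft()
        visited += 1
        if not skip(u):
            numbering[u] = next_id
            next_id += 1
        for v in successors.get(u, ()):
            indegree[v] -= 1
            if indegree[v] == 0:
                ready.append(v)

    if visited != len(units):
        raise ValueError("calculation graph contains a cycle")
    return numbering


# Usage with a small hypothetical graph: a feeds b and c, which both feed d.
graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": []}
order = number_units(graph)
```

A queue-based traversal is used here so that units become eligible for numbering only after all of their inputs have been numbered or skipped; any other valid topological order would serve equally well for this step.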
