Method and device for reducing first-layer convolution calculation delay of CNN accelerator

An accelerator and first-layer technology, applied to the parallel design of convolutional neural network accelerators and to the field of hardware-accelerated convolutional neural networks. The stated effects concern utilization rate, reduced calculation delay, and an obvious acceleration effect.

Embodiment Construction

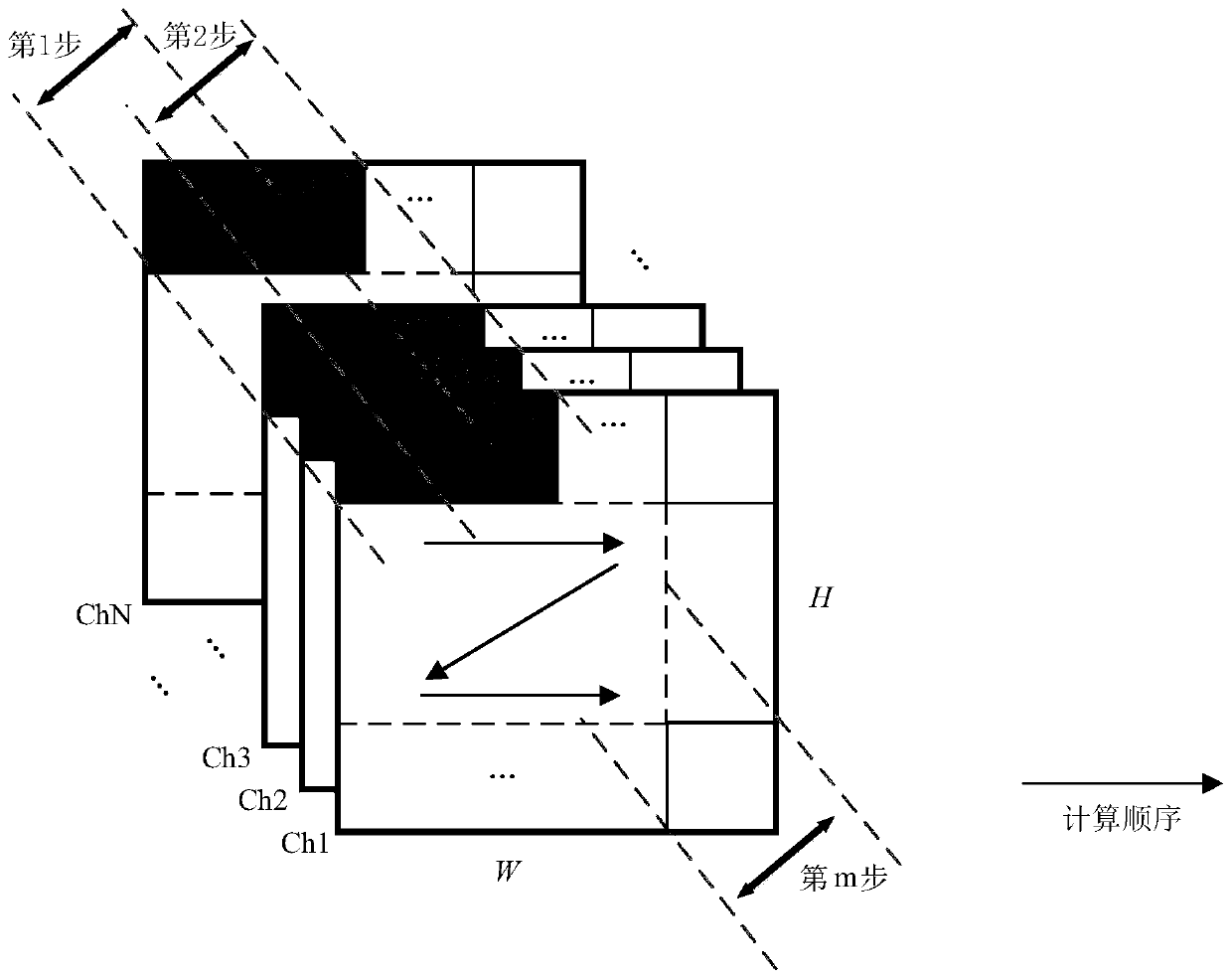

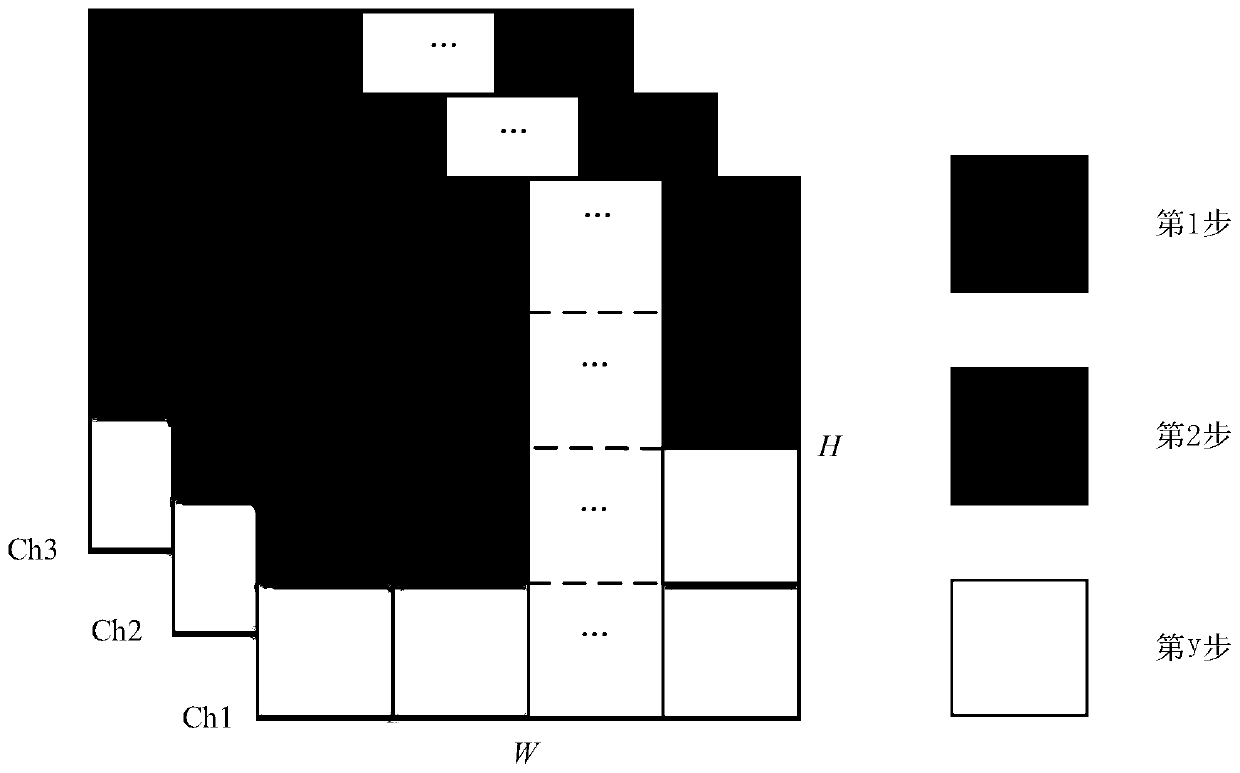

[0028] The present invention mainly addresses the large first-layer convolution calculation delay in current single-computing-engine architectures. It raises calculation parallelism by optimizing the calculation mode of the first-layer convolution, thereby effectively reducing that layer's calculation delay.

[0029] To this end, the technical solution adopted by the present invention is to increase the computational parallelism of the first-layer convolution. When the first layer is computed, calculations run in parallel not only across different channels but also across different feature blocks within the same channel; for the other convolutional layers, calculations run in parallel across channels only. The corresponding hardware architecture is as follows: every 3 convolutions form a group, followed by a first-level addition tree, a judgmen...
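The effect of adding feature-block parallelism can be illustrated with a rough pass-count model. This is a minimal sketch, not the patented hardware: it assumes a first layer with 3 input channels, an engine with 12 convolution units, and a feature map split into 16 blocks, none of which are stated in the text. The function names are hypothetical, and the parts of the architecture that follow the first-level addition tree are not modelled.

```python
from math import ceil

GROUP_SIZE = 3  # from the text: every 3 convolutions form a group


def passes_channel_parallel(num_units, in_channels, num_blocks):
    """Passes needed when only channels are spread across units.

    Feature blocks are processed one after another (assumed model).
    """
    return num_blocks * ceil(in_channels / num_units)


def passes_block_parallel(num_units, in_channels, num_blocks):
    """Passes needed when each group of units takes a different block.

    Within a group, each unit handles one channel of that block
    (assumed model of the first-layer mode).
    """
    groups = num_units // GROUP_SIZE
    if groups == 0:
        raise ValueError("need at least GROUP_SIZE convolution units")
    channel_rounds = ceil(in_channels / GROUP_SIZE)
    return ceil(num_blocks / groups) * channel_rounds


def utilization(num_units, in_channels, num_blocks, passes):
    """Fraction of (unit, pass) slots that do useful work."""
    return (in_channels * num_blocks) / (num_units * passes)


if __name__ == "__main__":
    # Illustrative numbers only; all three are assumptions.
    units, channels, blocks = 12, 3, 16
    p_chan = passes_channel_parallel(units, channels, blocks)
    p_block = passes_block_parallel(units, channels, blocks)
    print("channel-only passes:", p_chan,
          "utilization:", utilization(units, channels, blocks, p_chan))
    print("channel+block passes:", p_block,
          "utilization:", utilization(units, channels, blocks, p_block))
```

Under these assumed numbers, channel-only parallelism keeps 3 of the 12 units busy, while grouping the units in threes lets four feature blocks proceed at once. For deeper layers with many channels, the channel direction alone would already fill the units in this model, which is consistent with the text restricting block parallelism to the first layer.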
