Compression method of neural language model based on tensor decomposition technology

A language model and tensor decomposition technique, applied in the field of neural language model compression, that can solve problems such as a lack of model parameters and too many model parameters, and achieves the effect of increasing the scale.

Embodiment Construction

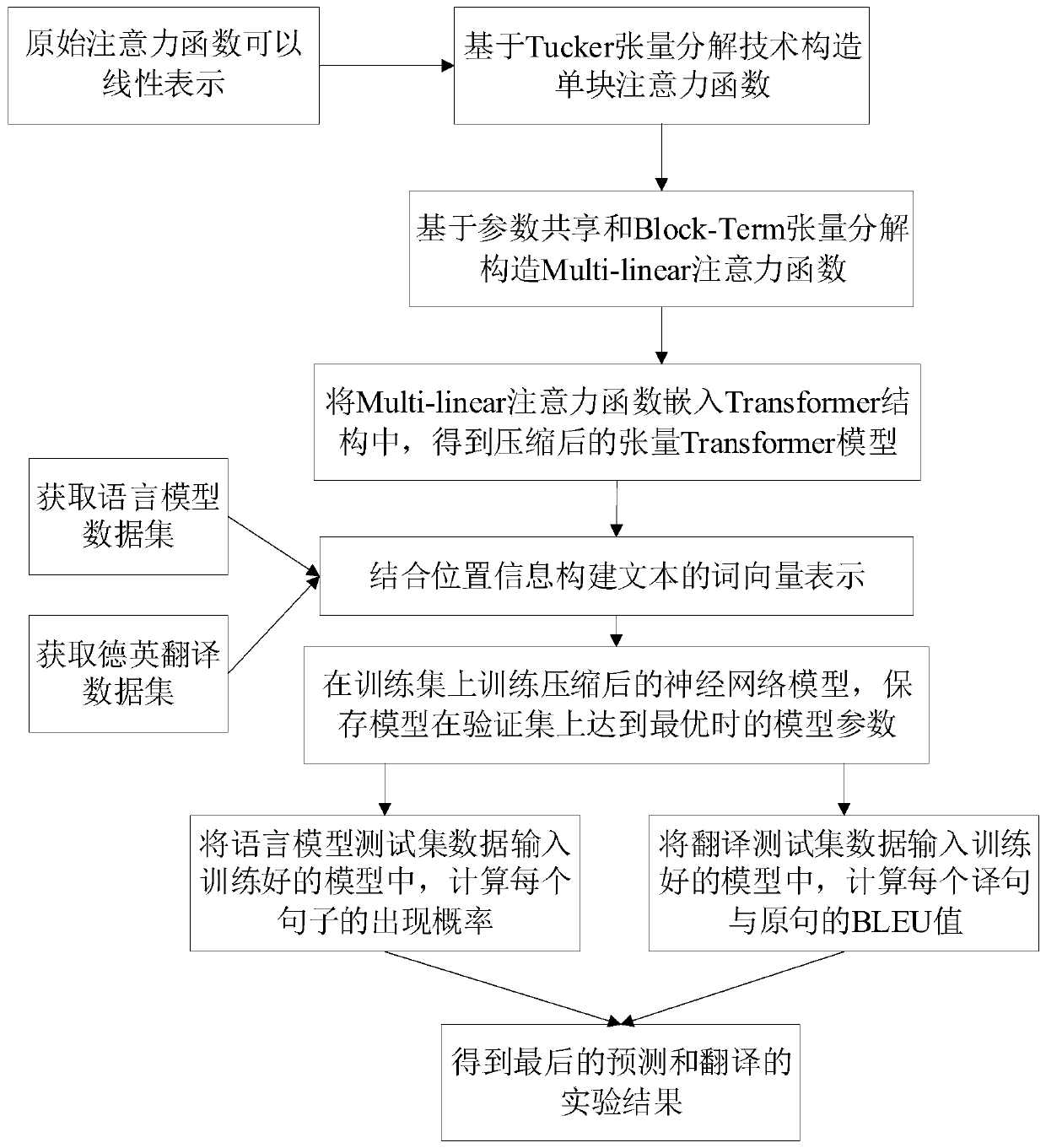

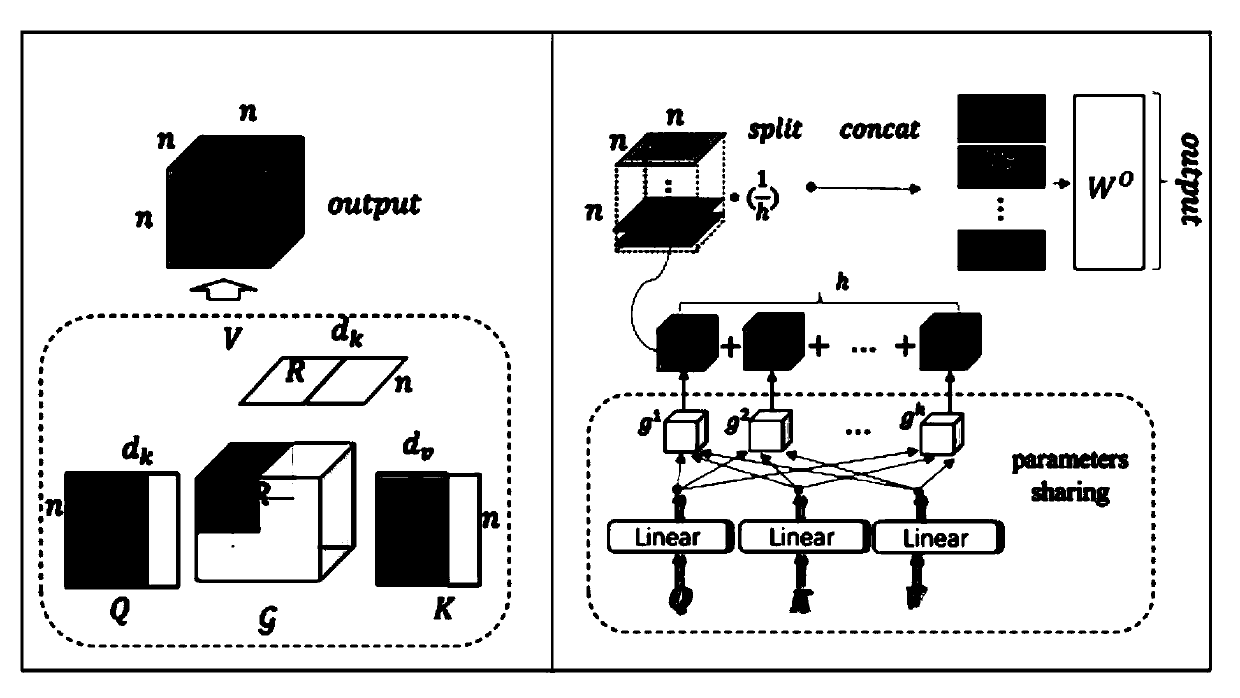

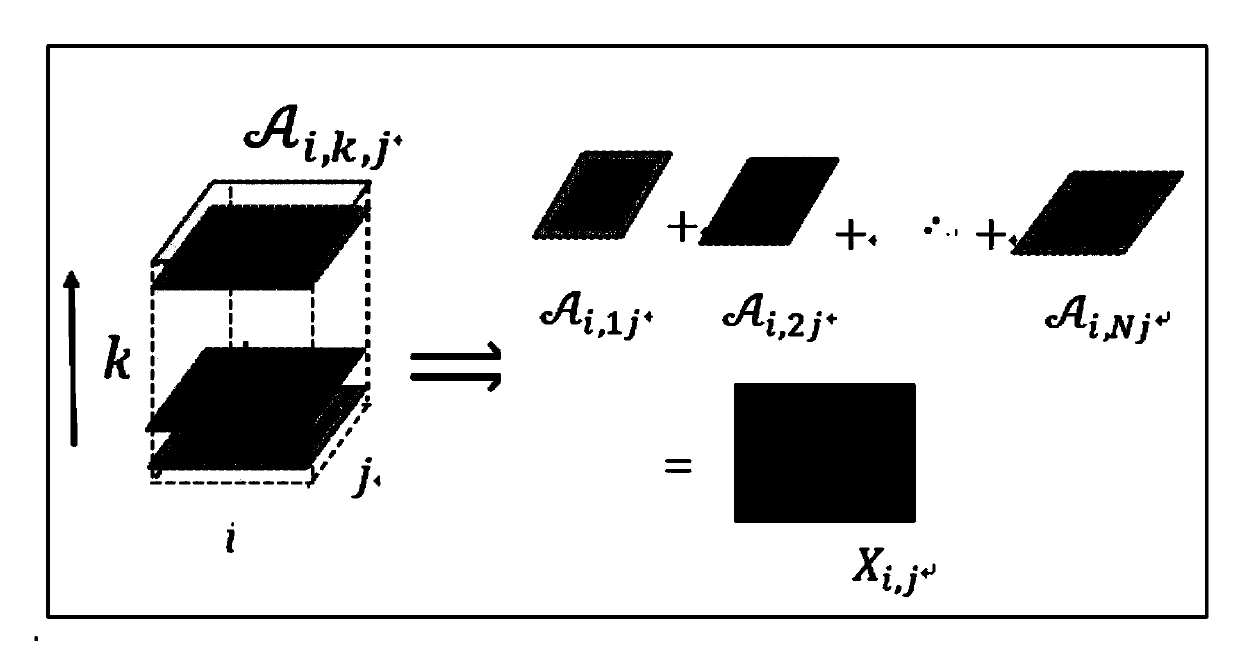

[0036] The technical scheme of the present invention is described in further detail below with reference to the accompanying drawings, but the protection scope of the present invention is not limited to the following description. Figure 1 shows the flow chart of the analysis method for the compressed model proposed here; Figure 2 shows the neural network model designed by the present invention; Figure 3 is a schematic diagram of tensor representations that can reconstruct the original attention.

[0037] The invention discloses a method for compressing neural language model parameters based on tensor decomposition. Because language models built on the self-attention mechanism perform so well on natural language processing tasks, pre-trained language models with an encoder-decoder structure based on the self-attention mechanism have become a research hotspot in the field of natural language processing...
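To illustrate in general terms why a tensor decomposition can shrink a parameter set, the following minimal sketch rebuilds a third-order tensor from three small factor matrices and compares the number of stored values. It assumes a CP (canonical polyadic) factorization purely as a stand-in; the excerpt above does not state which decomposition the invention uses. The function names, the 64 x 64 x 64 shape, and the rank of 8 are hypothetical choices made for this example.

```python
def cp_reconstruct(A, B, C):
    """Rebuild T[i][j][k] = sum_r A[i][r] * B[j][r] * C[k][r].

    A, B, C are factor matrices (lists of rows) that share the same
    number of columns, which is the CP rank. Illustrative helper only.
    """
    rank = len(A[0])
    return [
        [
            [sum(A[i][r] * B[j][r] * C[k][r] for r in range(rank))
             for k in range(len(C))]
            for j in range(len(B))
        ]
        for i in range(len(A))
    ]


def dense_params(i, j, k):
    """Number of values stored by a dense i x j x k tensor."""
    return i * j * k


def cp_params(i, j, k, rank):
    """Number of values stored by the three CP factor matrices."""
    return rank * (i + j + k)


# Tiny rank-2 example: a 2 x 3 x 2 tensor stored as three factor matrices.
A = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
C = [[1.0, 1.0], [2.0, 0.5]]
T = cp_reconstruct(A, B, C)

# Hypothetical sizes, chosen only to show the scale of the saving.
full = dense_params(64, 64, 64)
compact = cp_params(64, 64, 64, rank=8)
print(f"dense: {full} values, CP rank 8: {compact} values")
```

With these assumed sizes, the factor matrices hold 1,536 values in place of the dense tensor's 262,144. The saving is exact only when the tensor really has low rank; otherwise the factors give an approximation whose error depends on the chosen rank.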
