Semantic similarity feature extraction method based on double selection gates

A semantic similarity feature extraction technology, applied in semantic analysis, natural language data processing, and related fields. It addresses the problems that users cannot quickly find keyword-related information, that search result quality is unsatisfactory, and that information is mismatched. Its stated effects are addressing vanishing and exploding gradients in the network, avoiding interference with semantic similarity judgment, and improving matching efficiency.

Examples

Embodiment 1

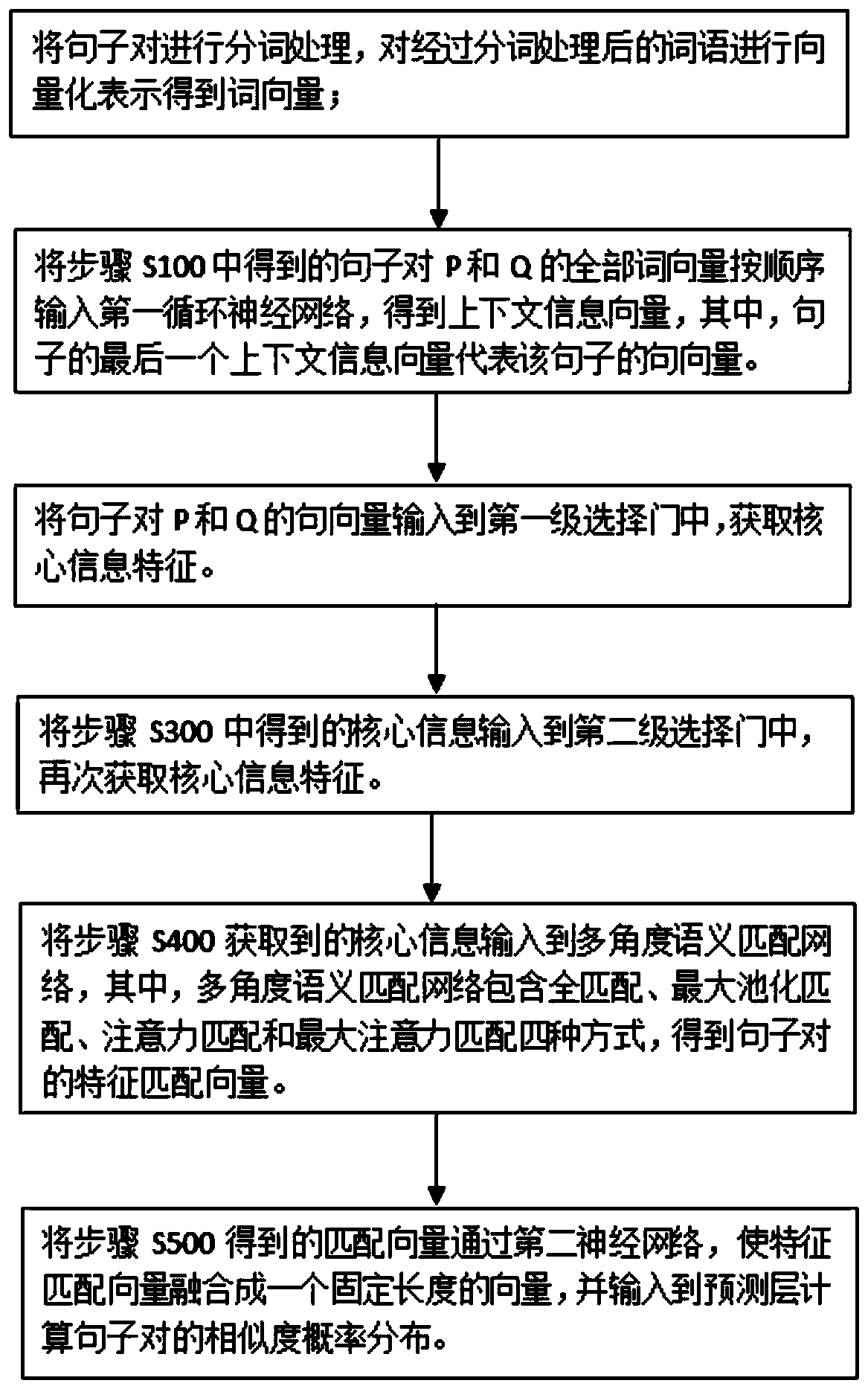

[0049] Referring to Figure 1, the present invention provides a method for extracting semantic similarity features based on double selection gates, comprising:

[0050] S100. Perform word segmentation on the sentences P and Q to be processed, and represent the segmented words as vectors to obtain word vectors.

[0051] Word segmentation in step S100 is the process of dividing a sentence into a reasonable sequence of words that conforms to the contextual meaning. It is one of the key technologies and difficulties in natural language understanding and text information processing, and it is also an important processing step in the semantic similarity model. Word segmentation in Chinese is more complicated because there is no explicit delimiter between words, and word usage is flexible, varied, and semantically rich, which easily leads to ambiguity. According to research, the main di...
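Step S100 can be illustrated with a minimal sketch. The text above does not name a segmentation algorithm or an embedding model, so everything here is an assumption made for illustration: forward maximum matching stands in for the segmenter, and `VOCAB`, `TABLE`, `segment`, and `vectorize` are hypothetical names with toy values.

```python
from typing import Dict, List, Set


def segment(sentence: str, vocab: Set[str], max_len: int = 4) -> List[str]:
    """Forward maximum matching (an assumed stand-in segmenter):
    at each position, take the longest substring found in the dictionary,
    falling back to a single character when nothing matches."""
    words: List[str] = []
    i = 0
    while i < len(sentence):
        for size in range(min(max_len, len(sentence) - i), 0, -1):
            piece = sentence[i:i + size]
            if size == 1 or piece in vocab:
                words.append(piece)
                i += size
                break
    return words


def vectorize(words: List[str], table: Dict[str, List[float]], dim: int) -> List[List[float]]:
    """Look up one vector per word; unknown words map to a zero vector."""
    unknown = [0.0] * dim
    return [table.get(w, unknown) for w in words]


# Toy dictionary and embedding table (hypothetical values).
VOCAB = {"语义", "相似度", "计算"}
TABLE = {"语义": [0.1, 0.3], "相似度": [0.7, 0.2], "计算": [0.4, 0.9]}

words_p = segment("语义相似度计算", VOCAB)
vectors_p = vectorize(words_p, TABLE, dim=2)
```

The same two calls would be applied to sentence Q. In practice the embedding table would come from a pretrained word-vector model rather than hand-written values.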

Embodiment 2

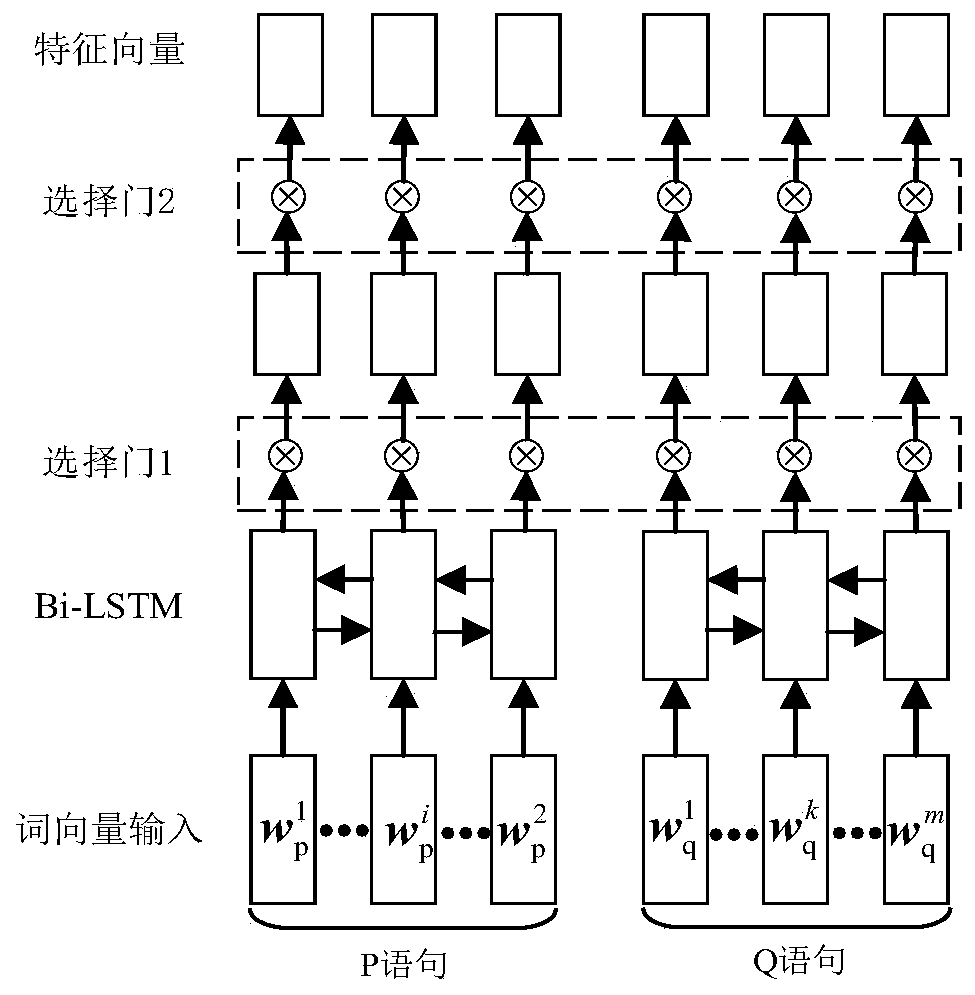

[0074] On the basis of Embodiment 1, the first recurrent neural network consists of one unidirectional LSTM layer and one bidirectional LSTM layer. Each layer includes a plurality of connected LSTM cell modules, and each LSTM cell module processes the current input and the output of the previous time step through its input gate, forget gate, update gate, and filter output gate. The first layer of the first recurrent neural network includes a plurality of connected unidirectional LSTM cell modules, which obtain the state vector of each word. The second layer of the first recurrent neural network includes a plurality of connected bidirectional LSTM cell modules, which obtain the sentence context information vectors.
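The two-layer structure described above can be sketched roughly as follows. This is an illustration under assumptions, not the patented implementation: it uses the standard LSTM equations, maps the "update gate" to the usual candidate (tanh) branch and the "filter output gate" to the usual output gate, and uses small random weights. The names `LSTMCell`, `run`, and `encode` are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(W: List[Vector], x: Vector, b: Vector) -> Vector:
    """Compute W x + b for a row-major matrix W."""
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]


class LSTMCell:
    """Standard LSTM cell with gates i (input), f (forget), g (candidate), o (output)."""

    def __init__(self, in_dim: int, hid_dim: int, rng: random.Random) -> None:
        cols = in_dim + hid_dim
        self.hid_dim = hid_dim
        self.W = {k: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                      for _ in range(hid_dim)] for k in "ifgo"}
        self.b = {k: [0.0] * hid_dim for k in "ifgo"}

    def step(self, x: Vector, h: Vector, c: Vector):
        z = x + h  # concatenate current input with previous output
        i = [sigmoid(v) for v in affine(self.W["i"], z, self.b["i"])]
        f = [sigmoid(v) for v in affine(self.W["f"], z, self.b["f"])]
        g = [math.tanh(v) for v in affine(self.W["g"], z, self.b["g"])]
        o = [sigmoid(v) for v in affine(self.W["o"], z, self.b["o"])]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new


def run(cell: LSTMCell, xs: List[Vector], reverse: bool = False) -> List[Vector]:
    """Run a cell over a sequence; outputs are returned in original word order."""
    h, c = [0.0] * cell.hid_dim, [0.0] * cell.hid_dim
    outputs = []
    for x in (reversed(xs) if reverse else xs):
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs[::-1] if reverse else outputs


def encode(xs: List[Vector], uni: LSTMCell, fwd: LSTMCell, bwd: LSTMCell) -> List[Vector]:
    """Layer 1: unidirectional word states. Layer 2: bidirectional context vectors."""
    states = run(uni, xs)
    forward = run(fwd, states)
    backward = run(bwd, states, reverse=True)
    return [hf + hb for hf, hb in zip(forward, backward)]


rng = random.Random(0)
uni, fwd, bwd = LSTMCell(2, 3, rng), LSTMCell(3, 3, rng), LSTMCell(3, 3, rng)
context = encode([[0.1, 0.3], [0.7, 0.2], [0.4, 0.9]], uni, fwd, bwd)
```

Each entry of `context` concatenates the forward and backward hidden states for one word, so its length is twice the hidden size. A trained model would learn the weights rather than sample them.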

[0075] In this method, first, the words and context information of the sentence are modeled through the first recurrent neural network, and the state vector at the corresponding time of each word of the sentence and the context...

Embodiment 3

[0085] On the basis of Embodiment 1 or 2, the double selection gate includes two selection gate structures, and the two selection gates have different structures and parameters. Using different selection gates helps filter out redundant information in sentences and obtain the core information more accurately. The calculation formula of the first-layer selection gate is as follows:

[0086] $s = h_n$

[0087] $\mathrm{sGate}_i = \sigma(W_s h_i + U_s s + b)$

[0088]

[0089] In the above formulas, the sentence vector is constructed from the sentence context hidden vectors: the hidden state $h_n$ of the sentence is taken as the sentence vector $s$. $\mathrm{sGate}_i$ is the gate vector, $W_s$ and $U_s$ are weight matrices, $b$ is the bias vector, $\sigma$ is the sigmoid activation function, and $\odot$ denotes the element-wise product.
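The first-layer selection gate can be sketched as below. The formula in [0088] is not available in this text, so the final step, which multiplies each hidden state element-wise by its gate vector, is an assumption inferred from the mention of an element-wise product. The function name `first_selection_gate` and the toy weights are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def first_selection_gate(hidden: List[Vector], W_s: Matrix, U_s: Matrix, b: Vector) -> List[Vector]:
    """Apply sGate_i = sigmoid(W_s h_i + U_s s + b) with s = h_n.

    ASSUMPTION: the gated state is h_i multiplied element-wise by sGate_i;
    the source text does not show this formula."""
    s = hidden[-1]              # s = h_n, the last hidden state
    us = matvec(U_s, s)         # U_s s is shared by every position
    gated = []
    for h_i in hidden:
        wh = matvec(W_s, h_i)
        gate = [sigmoid(a + c + d) for a, c, d in zip(wh, us, b)]
        gated.append([h * g for h, g in zip(h_i, gate)])
    return gated


# With all-zero weights every gate value is sigmoid(0) = 0.5,
# so each hidden state is simply halved.
zeros = [[0.0, 0.0], [0.0, 0.0]]
hidden_states = [[0.2, -0.4], [0.6, 0.8]]
gated_states = first_selection_gate(hidden_states, zeros, zeros, [0.0, 0.0])
```

Because the gate values lie strictly between 0 and 1, each component of a hidden state is scaled down rather than dropped outright, which matches the stated goal of filtering redundant information.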

[0090] The second layer selection gate calculates the context vector at time t, using the sentence vector at the previous time and the hidden layer state h′ of the select...
