Automatic text abstract generation method based on dynamic word vectors

An automatic text summarization technology based on word vectors, applied in neural learning methods, unstructured text data retrieval, text database browsing/visualization, etc. It addresses problems such as long text length, low quality and efficiency of text summary generation, and time-consuming training, and achieves high accuracy and fluency.


Embodiment Construction

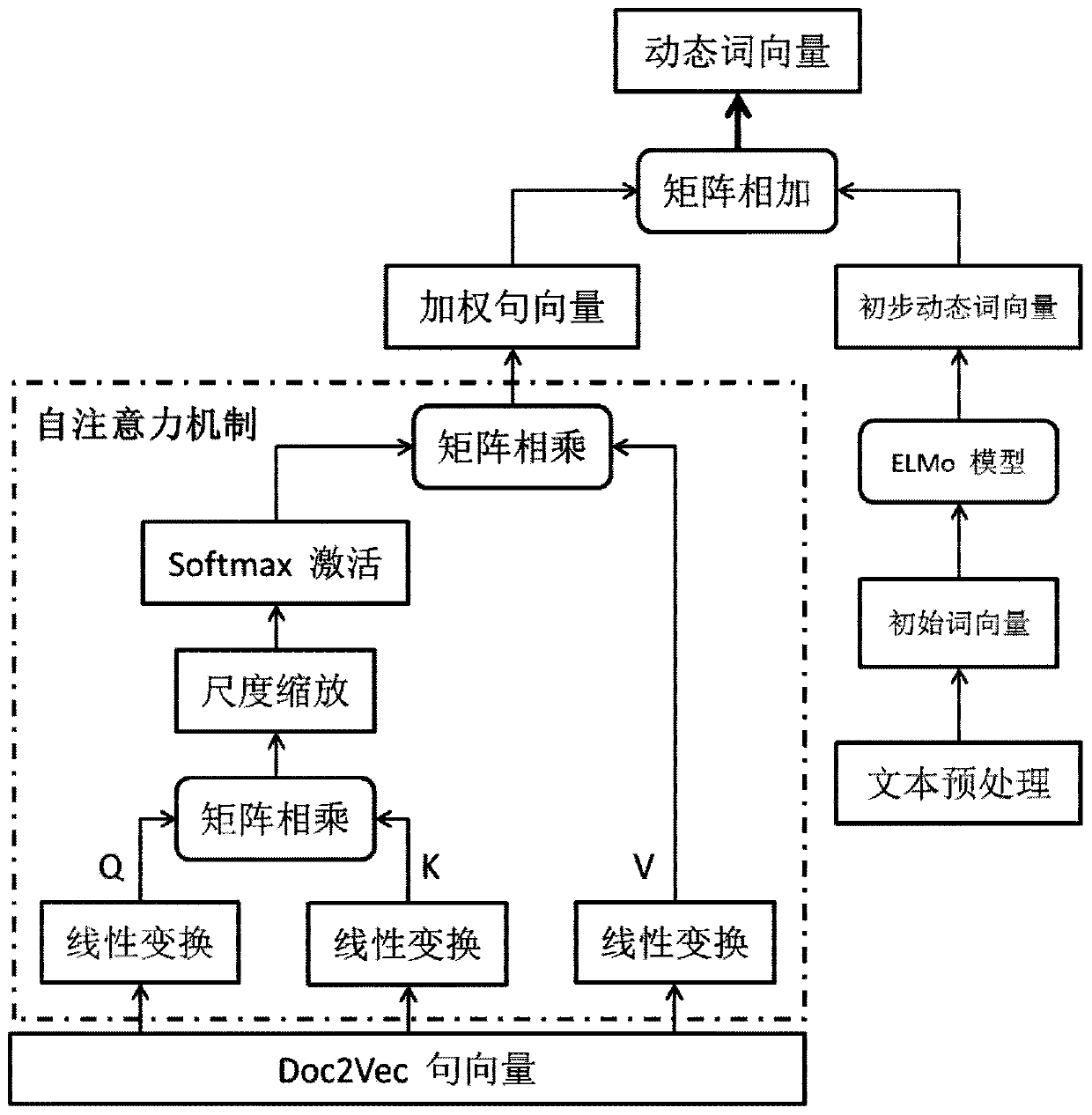

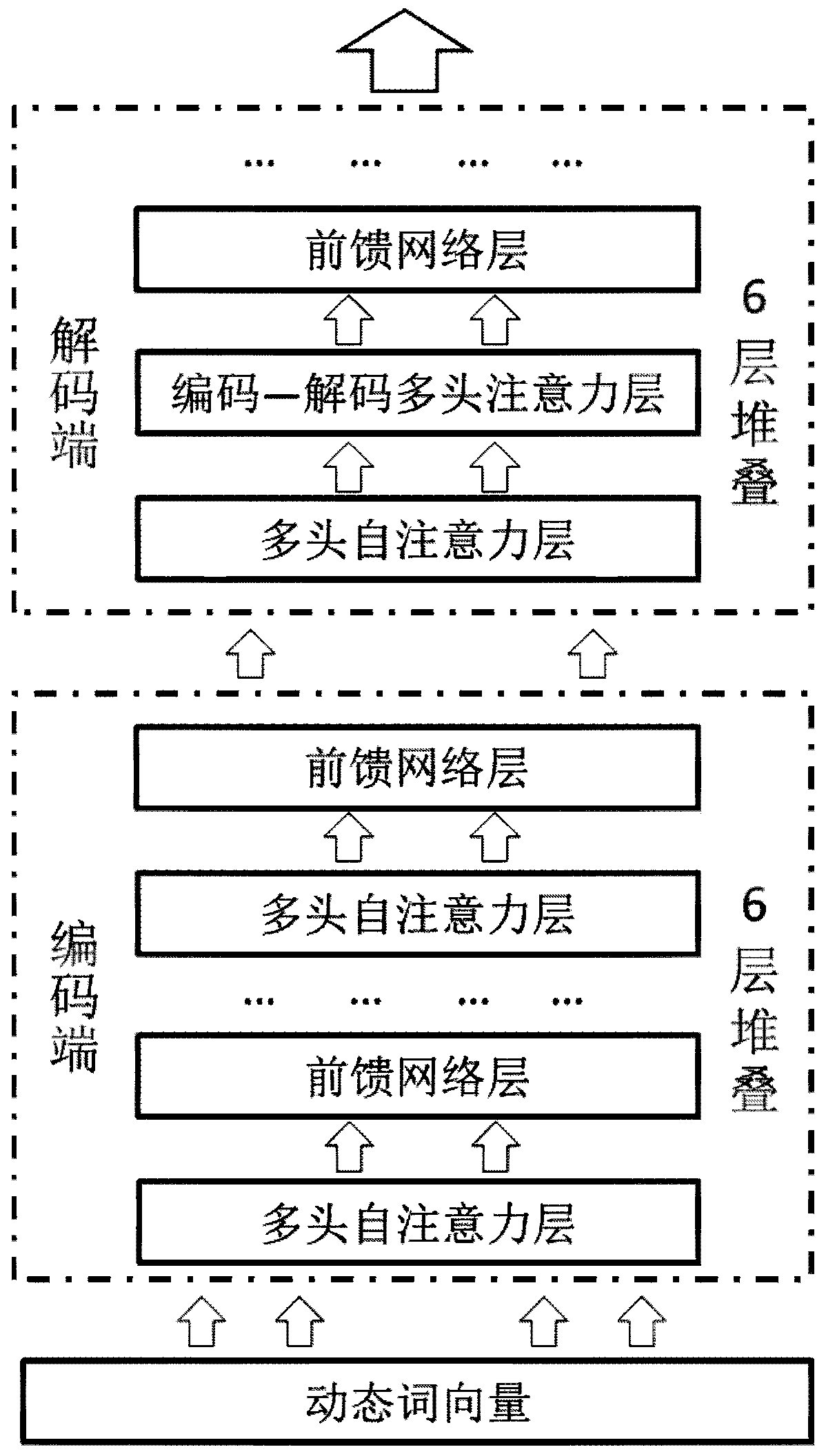

[0013] Refer to Figure 1. According to the present invention, the text is first preprocessed by the text preprocessing module, which performs word segmentation, high-frequency word filtering, and part-of-speech tagging, and the processed text is converted into initial word vectors. The initial word vectors are then input into the ELMo model module to generate preliminary dynamic word vectors. At the same time, the text is input into the Doc2Vec sentence vector module to obtain a sentence vector for each sentence, and the sentence vectors are input into the self-attention mechanism module, which calculates the importance weight of each sentence to the summary result and generates weighted sentence vectors. The weighted sentence vector is used as the environmental feature vector of each word. This environmental feature vector is then added to the preliminary dynamic word vector to obtain the final dynamic word vector, and this dynamic word vector is input into the Transformer neural network model t...
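The fusion step described above (sentence vectors weighted by self-attention, then added to each word's preliminary dynamic vector) can be sketched roughly as follows. This is a minimal illustration, not the patented implementation. It assumes plain scaled dot-product self-attention with no learned projections, that sentence vectors and word vectors share the same dimension, and that each word receives the weighted vector of the sentence it belongs to. The function names `self_attend` and `final_word_vectors` are hypothetical, and the ELMo and Doc2Vec outputs are replaced by small hand-written lists.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attend(sentence_vecs):
    """Return one attention-weighted vector per sentence.

    Assumption: scaled dot-product attention where queries, keys and
    values are the sentence vectors themselves (no learned weights).
    """
    d = len(sentence_vecs[0])
    weighted = []
    for query in sentence_vecs:
        weights = softmax([dot(query, key) / math.sqrt(d) for key in sentence_vecs])
        weighted.append(
            [sum(w * v[i] for w, v in zip(weights, sentence_vecs)) for i in range(d)]
        )
    return weighted


def final_word_vectors(word_vecs_by_sentence, sentence_vecs):
    """Add each sentence's weighted vector to every word vector in that sentence."""
    env_vecs = self_attend(sentence_vecs)
    return [
        [[w + e for w, e in zip(word, env)] for word in words]
        for words, env in zip(word_vecs_by_sentence, env_vecs)
    ]


# Stand-ins for Doc2Vec sentence vectors (two sentences, dimension 2).
sentence_vecs = [[1.0, 0.0], [0.0, 1.0]]
# Stand-ins for preliminary dynamic word vectors, grouped by sentence.
word_vecs = [[[0.5, 0.5], [0.1, 0.2]], [[0.3, 0.3]]]

result = final_word_vectors(word_vecs, sentence_vecs)
```

In this sketch `result` keeps the same nesting as `word_vecs` (sentences, then words, then vector components), so it could be flattened into a sequence and passed to a downstream encoder. A real system would likely use learned query, key and value projections and would need to reconcile differing ELMo and Doc2Vec dimensions, which the source text does not specify.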
