Motion recognition system based on machine learning and radar combination

An action recognition technology that combines machine learning with radar, applied in the field of communications. It addresses two problems: low recognition accuracy and the inability of an action recognition system to update itself.



Examples

Embodiment 1

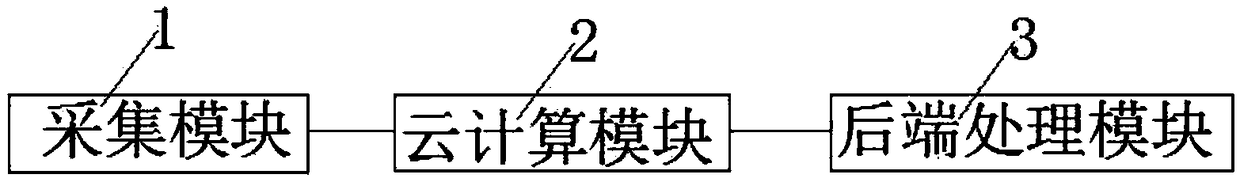

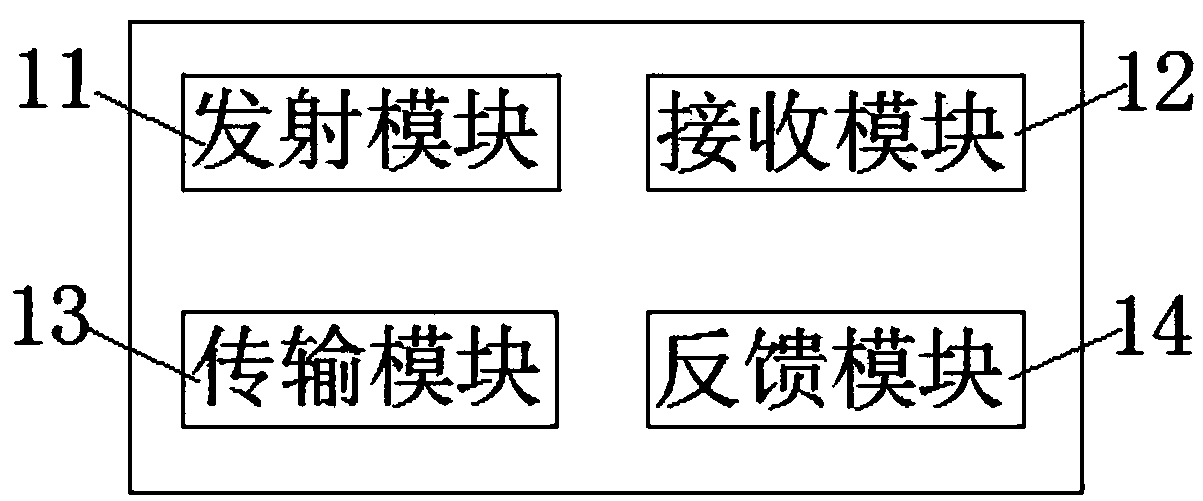

[0037] The action recognition system based on the combination of machine learning and radar in this embodiment includes three modules. The acquisition module 1 sends and receives radar signals, processes the received signals, and sends the processed information signals to the cloud computing module 2. The cloud computing module 2 processes the signal received from the acquisition module 1 according to a data processing algorithm to obtain an action recognition result, hardware parameters, and algorithm-related parameters; it sends the action recognition result and the hardware parameters to the back-end processing module 3 and stores the algorithm-related parameters locally. The back-end processing module 3 sends an adjustment command to the acquisition module 1 according to the action recognition result and the hardware parameters, so that the signal processing unit of the acquisition module is adjusted adaptively, and sends a control command to the external device accordi...
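The data flow among the three modules can be sketched as follows. This is only an illustration under stated assumptions, not the patent's implementation: the class names, the `gain_db` setting, the threshold, and the action labels are all hypothetical, and the "algorithm" is a placeholder for whatever data processing the cloud actually runs.

```python
from dataclasses import dataclass, field


@dataclass
class CloudResult:
    action: str              # action recognition result
    hardware_params: dict    # settings suggested for the acquisition hardware
    algorithm_params: dict   # kept by the cloud module, not sent onward


@dataclass
class AcquisitionModule:     # module 1
    gain_db: float = 20.0    # hypothetical adjustable hardware setting

    def acquire(self):
        # Stand-in for transmitting, receiving, and pre-processing radar signals.
        return [0.0, 0.5, 1.0, 0.5]

    def adjust(self, hardware_params):
        # Apply an adjustment command from the back-end module.
        self.gain_db = hardware_params.get("gain_db", self.gain_db)


@dataclass
class CloudComputingModule:  # module 2
    stored_algorithm_params: dict = field(default_factory=dict)

    def compute(self, signal):
        # Placeholder algorithm: a peak threshold instead of a trained model.
        peak = max(signal)
        result = CloudResult(
            action="wave" if peak > 0.8 else "none",
            hardware_params={"gain_db": 20.0 if peak > 0.8 else 26.0},
            algorithm_params={"last_peak": peak},
        )
        # Algorithm-related parameters stay with the cloud module.
        self.stored_algorithm_params = result.algorithm_params
        return result


class BackEndModule:         # module 3
    def handle(self, result, acquisition):
        # Feed the hardware parameters back, then build a device command
        # (assumed here to depend only on the recognized action).
        acquisition.adjust(result.hardware_params)
        return "device_command:" + result.action


acquisition = AcquisitionModule()
cloud = CloudComputingModule()
back_end = BackEndModule()
command = back_end.handle(cloud.compute(acquisition.acquire()), acquisition)
```

The point of the sketch is the loop: the result of each recognition pass both drives the external device and feeds back into the acquisition hardware.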

Embodiment 2

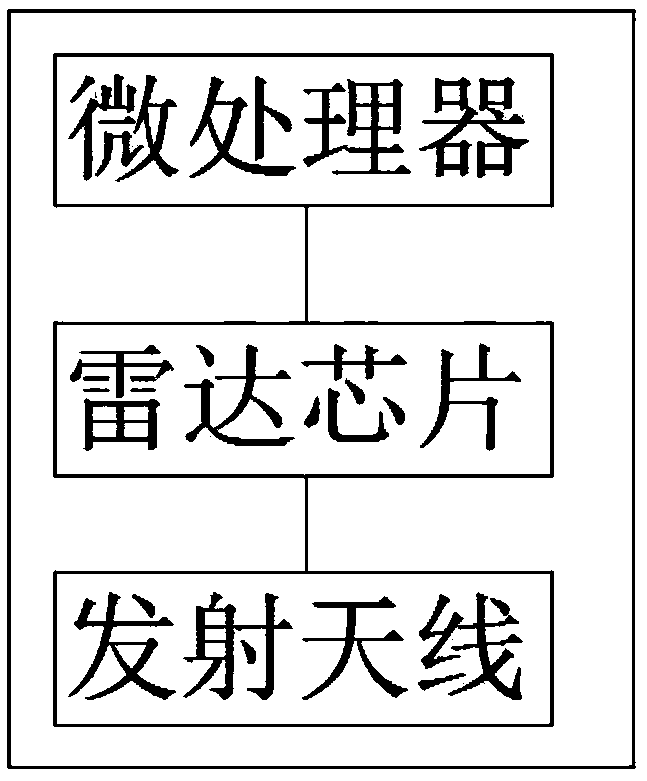

[0047] The radar transmission signal 110 is radiated into space by the transmitting antenna or antenna array as a frequency-modulated continuous wave with a set frequency and a specific waveform. The frequency can optionally be 24 GHz, and other frequencies can be used depending on the application scenario. The waveform can optionally be a sawtooth wave or a triangular wave. For the received echo signal 111, the microwaves radiated into space as the radar transmission signal 110 are reflected by objects in the space and received by the receiving antenna or antenna array, which outputs the input-channel and output-channel signals to the back end. The signal filtering and amplifying module 112 is composed of a programmable filter and a programmable amplifier. The signal obtained as the received echo signal 111 is first band-pass filtered by the filter and then amplified with low noise by the amplifier to obtain an intermediate frequency signal with more...
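To make the waveform description concrete, the sketch below computes the instantaneous transmit frequency of a sawtooth and a triangular sweep, and the intermediate (beat) frequency that a stationary reflector would produce after mixing. The 250 MHz bandwidth, 1 ms sweep time, and 3 m range are illustrative values chosen here, not figures from the patent; only the 24 GHz start frequency comes from the text.

```python
C = 299_792_458.0  # speed of light in m/s


def sawtooth_frequency(t, f0, bandwidth, sweep_time):
    """Transmit frequency at time t for a sawtooth sweep (ramp up, then reset)."""
    return f0 + bandwidth * ((t % sweep_time) / sweep_time)


def triangular_frequency(t, f0, bandwidth, sweep_time):
    """Transmit frequency at time t for a triangular sweep (ramp up, ramp down)."""
    phase = (t % (2 * sweep_time)) / sweep_time   # runs from 0 to 2
    fraction = phase if phase <= 1.0 else 2.0 - phase
    return f0 + bandwidth * fraction


def beat_frequency(target_range, bandwidth, sweep_time):
    """Beat frequency for a stationary target: sweep slope times round-trip delay."""
    round_trip_delay = 2.0 * target_range / C
    return (bandwidth / sweep_time) * round_trip_delay


# Illustrative parameters (assumed, not from the source except for 24 GHz).
F0 = 24e9          # start frequency in Hz
BANDWIDTH = 250e6  # swept bandwidth in Hz
SWEEP_TIME = 1e-3  # duration of one ramp in s

if_hz = beat_frequency(3.0, BANDWIDTH, SWEEP_TIME)
```

With these assumed values the beat frequency for a target a few metres away falls in the kilohertz range, which is why the band-pass filter and amplifier in module 112 can operate on a low-frequency intermediate signal rather than at 24 GHz.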

Embodiment 3

[0049] When the cloud receives the data and caches it 210, the data is moved to the data storage area of the cloud server for data storage 211. After storage is completed, the historical data 212 in the data storage area is read for data processing. After the data has been read, a fast Fourier transform 213 is performed on it, converting the time-domain signals of the input channel and the output channel into two-dimensional frequency-domain signals. The output of step 213 is fed into the convolutional neural network for training 214. After step 214 is completed, the recognition result 215 for this action and better hardware parameters 216 are obtained. The action recognition result 215 and the better hardware parameters 216 are then sent to the local side 217 via wireless transmission.
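One possible reading of step 213 is sketched below: the two channels are combined into complex samples, arranged as one row per sweep, and transformed along both axes to give a two-dimensional magnitude map that could serve as the network input. This is an assumption about what "two-dimensional signals based on the frequency domain" means; the patent does not spell out the arrangement, and the function names are hypothetical. A plain discrete Fourier transform is used instead of an optimized FFT so that the example needs only the standard library; it computes the same values.

```python
import cmath
import math


def dft(samples):
    """Discrete Fourier transform of a sequence (same result as an FFT)."""
    n = len(samples)
    return [
        sum(samples[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n))
        for m in range(n)
    ]


def spectrum_map(channel_a, channel_b):
    """Turn two time-domain channels into a 2D frequency-domain magnitude map.

    Each channel is a list of rows, one row of samples per sweep.
    Assumption: the two channels form the real and imaginary parts of one
    complex signal.
    """
    frames = [
        [complex(a, b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(channel_a, channel_b)
    ]
    within_sweep = [dft(row) for row in frames]               # along each sweep
    across_sweeps = [dft(list(col)) for col in zip(*within_sweep)]
    # Transpose back so the map is indexed [sweep-axis bin][sample-axis bin].
    return [[abs(v) for v in row] for row in zip(*across_sweeps)]


# Synthetic input: 4 sweeps of 8 samples, a tone at bin 2 that does not
# change from sweep to sweep.
N_SWEEPS, N_SAMPLES, TONE_BIN = 4, 8, 2
channel_a = [[math.cos(2 * math.pi * TONE_BIN * k / N_SAMPLES)
              for k in range(N_SAMPLES)] for _ in range(N_SWEEPS)]
channel_b = [[math.sin(2 * math.pi * TONE_BIN * k / N_SAMPLES)
              for k in range(N_SAMPLES)] for _ in range(N_SWEEPS)]

magnitude = spectrum_map(channel_a, channel_b)
peak = max(
    ((r, c) for r in range(N_SWEEPS) for c in range(N_SAMPLES)),
    key=lambda rc: magnitude[rc[0]][rc[1]],
)
```

For the synthetic tone, the energy concentrates in a single cell of the map, which is the kind of compact two-dimensional pattern a convolutional network can learn from.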
