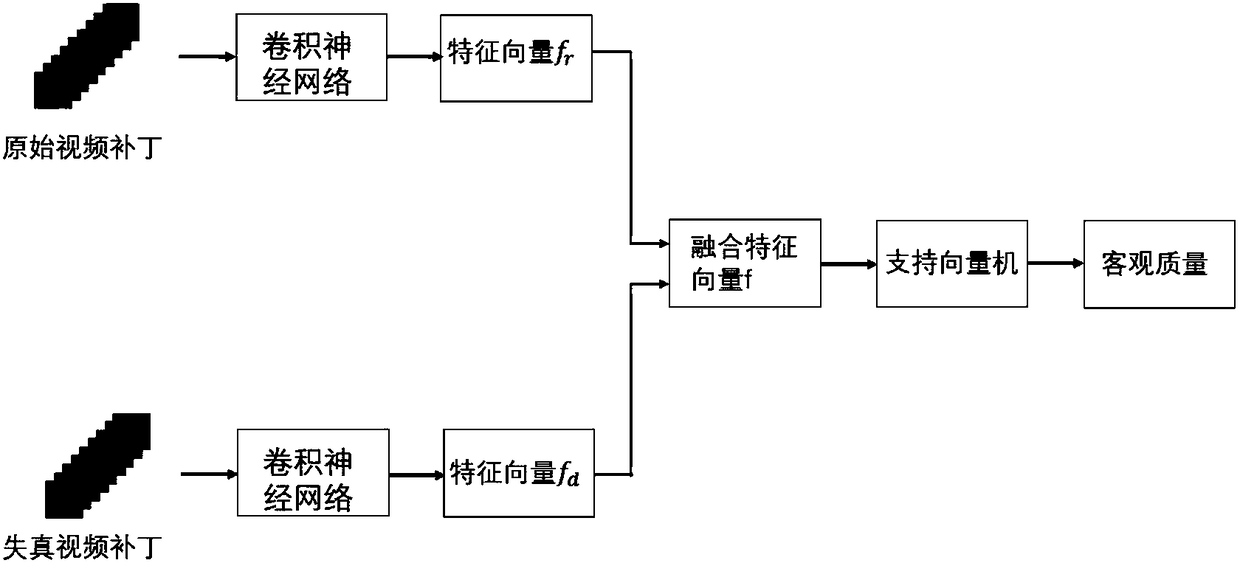

Full-reference virtual-reality video quality evaluation method based on convolutional neural networks

The method applies convolutional neural networks to virtual reality (VR) video quality evaluation. It addresses the lack of a standard, objective evaluation system for VR video, and its stated effects are simple video preprocessing, easy operation, and accurate reflection

Image

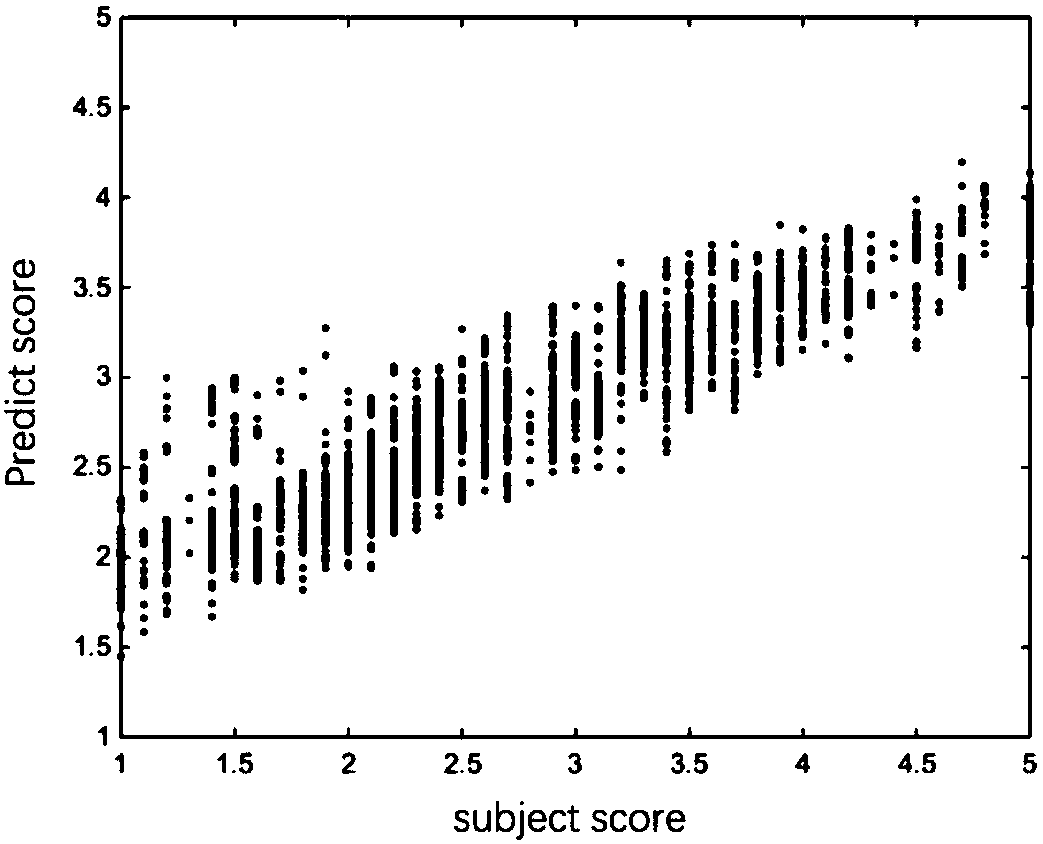

Examples

Detailed Description of the Embodiments

[0017] To make the technical solution of the present invention clearer, specific embodiments are described below. The invention is implemented according to the following steps:

[0018] Step 1: Construct the difference video $V_d$ according to the principle of stereo perception. First convert each frame of the original VR video and the distorted VR video to grayscale, then obtain the required difference video from the left video $V_l$ and the right video $V_r$. The value of the difference video $V_d$ at video position $(x, y, z)$ is given by formula (1):

[0019] $V_d(x, y, z) = \left| V_l(x, y, z) - V_r(x, y, z) \right|$ (1)
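Formula (1) can be illustrated with a minimal NumPy sketch. Several details here are assumptions that the text does not state: videos are stored as arrays of shape (frames, height, width, 3), the third coordinate of the position is taken to be the frame index, and grayscale conversion uses the common BT.601 luma weights. The function names `to_gray` and `difference_video` are hypothetical.

```python
import numpy as np

# Assumed grayscale weights (BT.601 luma); the source does not name a formula.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_gray(video_rgb: np.ndarray) -> np.ndarray:
    """Convert an RGB video (frames, height, width, 3) to grayscale.

    The cast to float64 avoids wrap-around when uint8 frames are
    subtracted later.
    """
    return video_rgb.astype(np.float64) @ LUMA_WEIGHTS


def difference_video(left_rgb: np.ndarray, right_rgb: np.ndarray) -> np.ndarray:
    """Absolute per-pixel difference of the grayscale left and right views."""
    if left_rgb.shape != right_rgb.shape:
        raise ValueError("left and right videos must have the same shape")
    return np.abs(to_gray(left_rgb) - to_gray(right_rgb))
```

Under these assumptions the same function would be applied once to the original VR video and once to the distorted one, giving one difference video for each.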

[0020] Step 2: Divide the VR difference videos into blocks to form video patches, thereby enlarging the dataset. Specifically, one frame is extracted every eight frames from each VR difference video, for a total of N frames. A square image block with a size of 32×32 pixe...
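The frame sampling and block division in Step 2 might look like the sketch below. The source text is cut off before it specifies how the 32×32 blocks are positioned, so the `stride` parameter, its non-overlapping default, and the choice to start sampling at frame 0 are assumptions made for illustration only. `sample_frames` and `extract_patches` are hypothetical names.

```python
import numpy as np


def sample_frames(video: np.ndarray, step: int = 8) -> np.ndarray:
    """Keep one frame out of every `step` frames, starting at frame 0 (assumed)."""
    return video[::step]


def extract_patches(frame: np.ndarray, size: int = 32, stride: int = 32) -> np.ndarray:
    """Cut a 2-D grayscale frame into size x size blocks.

    `stride` is an assumed parameter: the source does not say whether
    blocks overlap. Partial blocks at the right and bottom edges are dropped.
    """
    height, width = frame.shape
    patches = [
        frame[r:r + size, c:c + size]
        for r in range(0, height - size + 1, stride)
        for c in range(0, width - size + 1, stride)
    ]
    if not patches:
        return np.empty((0, size, size), dtype=frame.dtype)
    return np.stack(patches)
```

For example, a 20-frame difference video with 64×96 frames would yield 3 sampled frames and, with the assumed non-overlapping stride, 6 patches per frame.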
