RealSense-based facial expression animation driving method

A facial expression animation driving technology, applicable to animation production, image data processing, instruments, and related fields. It addresses problems such as low efficiency, complex processing, and poor precision, improving robustness and real-time performance and cutting down the grayscale-image conversion process.

Examples

Embodiment 1

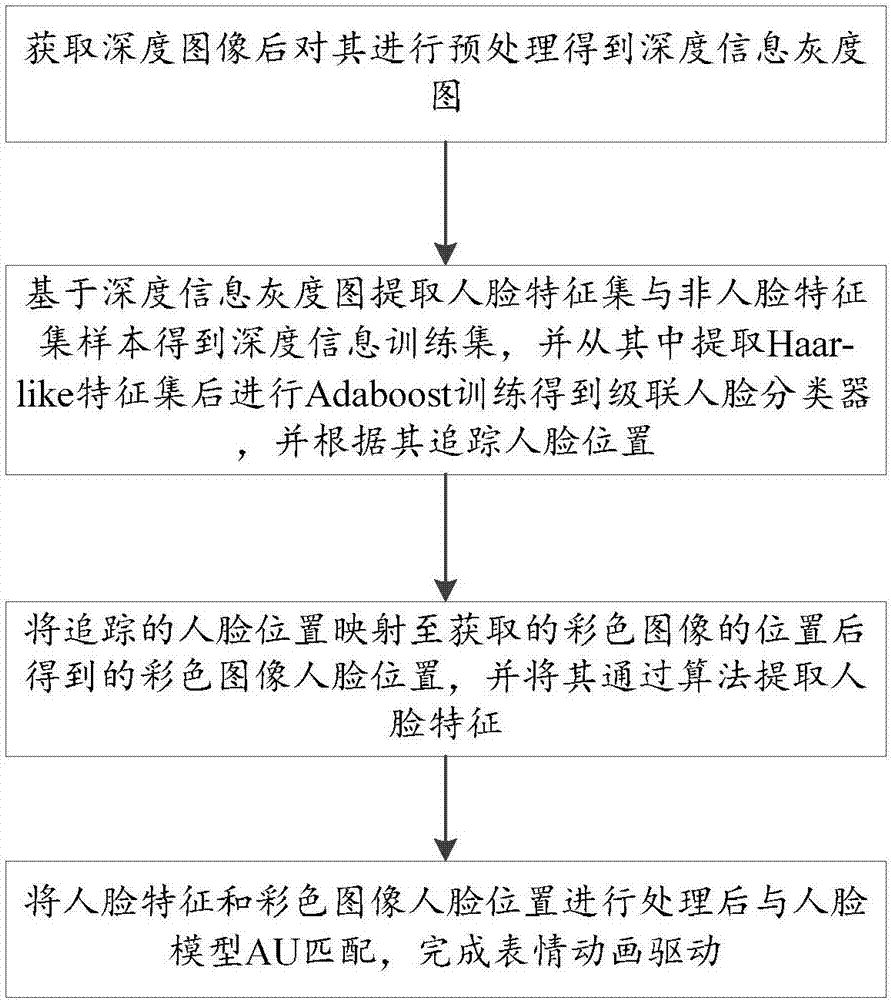

[0041] A method for driving facial expression animation based on RealSense, comprising the steps of:

[0042] Step 1: Obtain the depth image and preprocess it to obtain the depth information grayscale image;

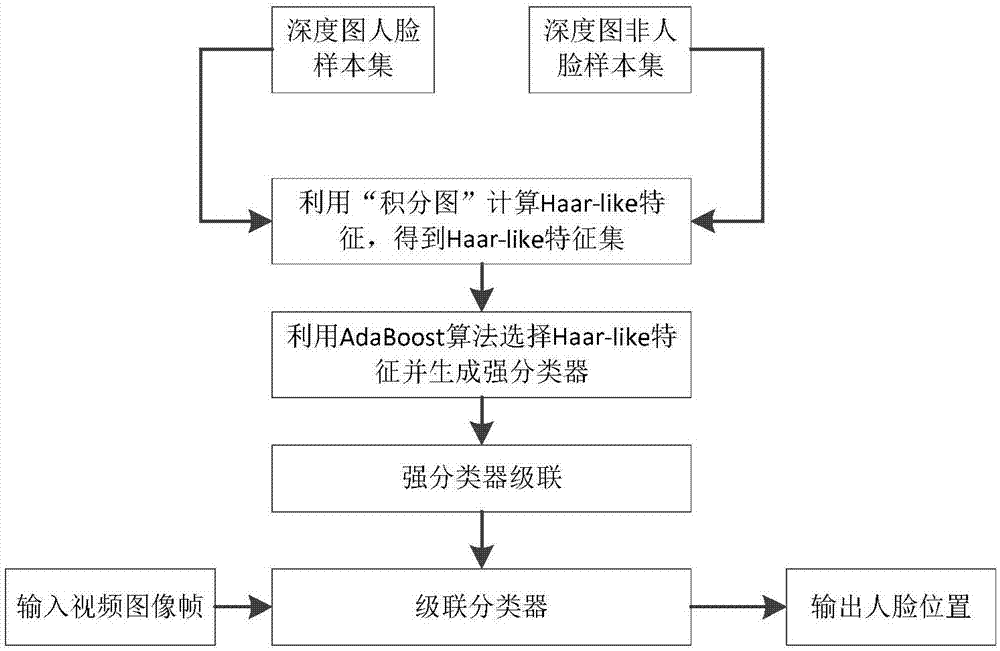

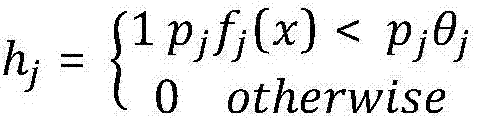

[0043] Step 2: Extract face and non-face feature set samples from the depth information grayscale image to build the depth information training set, extract the Haar-like feature set from it, and perform Adaboost training to obtain a cascaded face classifier, which is then used to track the position of the face;
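Step 2 can be illustrated in miniature. The sketch below computes one two-rectangle Haar-like feature from an integral image and then runs discrete AdaBoost over threshold stumps on such feature values. It is an illustration in Python with NumPy under stated assumptions, not the patent's implementation: the function names, the choice of a single left-minus-right feature, and the stump weak learner are all hypothetical, and chaining several boosted stages into a cascade is not shown.

```python
import numpy as np

def integral_image(gray):
    """Summed-area table with a zero row and column prepended."""
    g = np.asarray(gray, dtype=np.int64)
    ii = np.zeros((g.shape[0] + 1, g.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = g.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, x, y, w, h):
    """Pixel sum of the rectangle with top-left (x, y), width w, height h."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])

def haar_left_minus_right(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)

def train_adaboost(X, y, rounds=5):
    """Discrete AdaBoost over single-feature threshold stumps (hypothetical helper).

    X: (n_samples, n_features) feature values; y: labels in {-1, +1}.
    Returns a list of (feature, threshold, polarity, alpha) tuples.
    """
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    n, m = X.shape
    w = np.full(n, 1.0 / n)                      # uniform sample weights
    stumps = []
    for _ in range(rounds):
        best = None
        for j in range(m):                       # exhaustive stump search
            for thr in np.unique(X[:, j]):
                for pol in (1.0, -1.0):
                    pred = np.where(pol * (X[:, j] - thr) >= 0, 1.0, -1.0)
                    err = float(w[pred != y].sum())
                    if best is None or err < best[0]:
                        best = (err, j, thr, pol, pred)
        err, j, thr, pol, pred = best
        err = min(max(err, 1e-10), 1 - 1e-10)    # keep the logarithm finite
        alpha = 0.5 * np.log((1 - err) / err)
        w *= np.exp(-alpha * y * pred)           # up-weight misclassified samples
        w /= w.sum()
        stumps.append((j, float(thr), pol, float(alpha)))
    return stumps

def predict_adaboost(stumps, X):
    """Sign of the alpha-weighted vote of all stumps."""
    X = np.asarray(X, dtype=np.float64)
    score = np.zeros(X.shape[0])
    for j, thr, pol, alpha in stumps:
        score += alpha * np.where(pol * (X[:, j] - thr) >= 0, 1.0, -1.0)
    return np.where(score >= 0, 1, -1)
```

In a pipeline of this kind, each row of `X` would hold Haar-like feature values computed on one face or non-face window of the depth grayscale image, and `y` would mark face windows with +1 and non-face windows with -1.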

[0044] Step 3: Map the tracked face position onto the corresponding face position in the acquired color image, and extract face features through an algorithm;
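As a rough illustration of the mapping in Step 3, the sketch below scales a face rectangle from depth-image coordinates to color-image coordinates and crops the color frame. It assumes the two frames are already registered to the same field of view, so the mapping reduces to a per-axis scale. On a real device the mapping would rely on the camera's calibration, which is not shown here. The names `map_rect` and `crop` are hypothetical.

```python
import numpy as np

def map_rect(rect, src_size, dst_size):
    """Scale (x, y, w, h) from a src (width, height) image to a dst one.

    Assumption: both images cover the same field of view.
    """
    x, y, w, h = rect
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    return (int(round(x * sx)), int(round(y * sy)),
            int(round(w * sx)), int(round(h * sy)))

def crop(image, rect):
    """Return the sub-image covered by rect; image is indexed [row, column]."""
    x, y, w, h = rect
    return image[y:y + h, x:x + w]
```

When the depth and color frames share one resolution, the scale factors are 1 and the rectangle carries over unchanged.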

[0045] Step 4: After processing the face features and the face position in the color image, match them to the action units (AUs) of the face model to drive the expression animation.
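The text does not specify how features are matched to AUs. One common way to picture it is to turn a facial measurement into a normalized AU activation between 0 and 1. The sketch below is purely hypothetical: the lip-gap measurement, the neutral and full-activation calibration values, and both function names are assumptions introduced for illustration.

```python
import math

def mouth_open_measure(upper_lip, lower_lip, face_height):
    """Lip gap divided by face-rectangle height, so the value is scale-invariant."""
    return math.dist(upper_lip, lower_lip) / face_height

def au_weight(measure, neutral, full):
    """Map a scalar measurement to an AU activation in [0, 1].

    neutral: measurement on the neutral face (activation 0).
    full: measurement at full activation (activation 1).
    """
    if full == neutral:
        return 0.0
    t = (measure - neutral) / (full - neutral)
    return min(1.0, max(0.0, t))
```

Dividing by the face height is one way to use the tracked face position so that the same expression gives a similar activation at different distances from the camera.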

Embodiment 2

[0047] Step 1: Obtain the depth image and preprocess it to obtain the depth information grayscale image;

[0048] Initialize the RealSense parameters and start the device to acquire 640*480 color images and depth information images. The depth value d of the depth image ranges from 0.2 meters to 2 meters. Set a reasonable depth threshold range according to the depth information, remove background noise, and output a rectangular target area I of pixels to be detected, where the upper left vertex of the rectangle is (x0, y0), its horizontal extent is recorded as width0, and its vertical extent is recorded as height0. The depth image is then converted into a depth grayscale image: each pixel p is computed as p = 255 - 0.255 * (d - 200), with d evidently in millimeters (0.2 meters = 200), and the grayscale image is normalized;
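The preprocessing in this step can be sketched as follows. This is a minimal illustration in Python with NumPy, not the patent's code: it assumes the depth map is given in millimeters, uses the stated 0.2 m to 2 m range as the threshold, and clips the result to the 8-bit range, which is an added assumption because the formula as written drops below zero past 1200 mm. The function name `depth_to_gray` is hypothetical, and the final normalization is not shown.

```python
import numpy as np

def depth_to_gray(depth_mm, d_min=200, d_max=2000):
    """Threshold a depth map (millimeters, assumed) and convert it to 8-bit gray.

    Returns (gray, rect), where rect = (x0, y0, width0, height0) bounds the
    pixels that fall inside [d_min, d_max], or None if no pixel does.
    """
    depth = np.asarray(depth_mm, dtype=np.float64)
    mask = (depth >= d_min) & (depth <= d_max)   # pixels kept as foreground

    # Formula from the text: p = 255 - 0.255 * (d - 200).
    gray = 255.0 - 0.255 * (depth - 200.0)
    gray = np.clip(gray, 0.0, 255.0)             # assumption: clip to 8-bit range
    gray[~mask] = 0.0                            # suppress background pixels
    gray = gray.astype(np.uint8)

    if not mask.any():
        return gray, None
    ys, xs = np.nonzero(mask)
    x0, y0 = int(xs.min()), int(ys.min())
    rect = (x0, y0, int(xs.max()) - x0 + 1, int(ys.max()) - y0 + 1)
    return gray, rect
```

Nearer surfaces come out brighter, since the formula maps d = 200 to 255 and decreases as d grows.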

[0049] Step 2: Extract the face feature set and non-face feature set samples based on the depth information grayscale image to obtain the depth information training set, and extrac...
