Block convolution optimization method and device for convolutional neural networks

A block convolution optimization method for convolutional neural networks, applied in the fields of neural learning methods, biological neural network models, and neural architectures. It addresses processing bottlenecks, with the stated effects of improving efficiency, alleviating resource constraints, and reducing latency.


Detailed Description of the Embodiments

[0049] Preferred embodiments of the present invention are described below with reference to the accompanying drawings. Those skilled in the art should understand that these embodiments are only used to explain the technical principles of the present invention, and are not intended to limit the protection scope of the present invention.

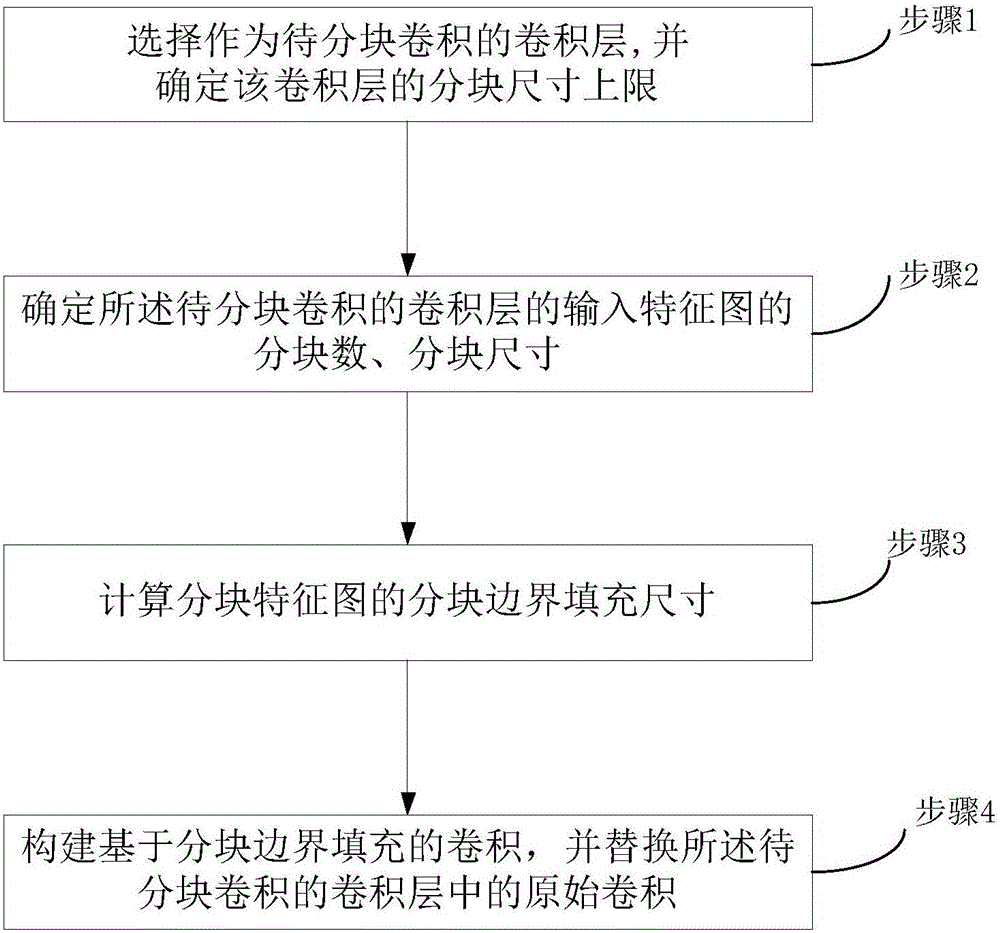

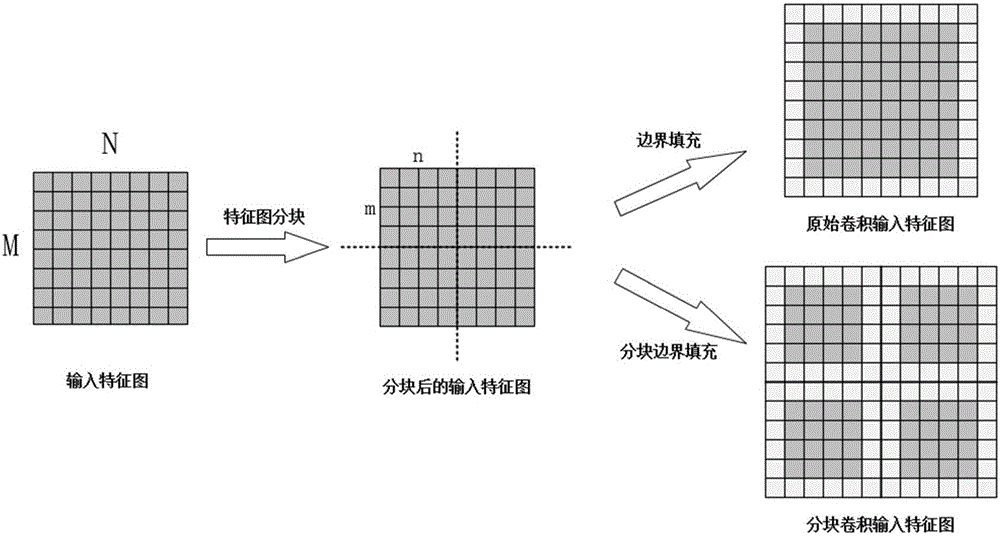

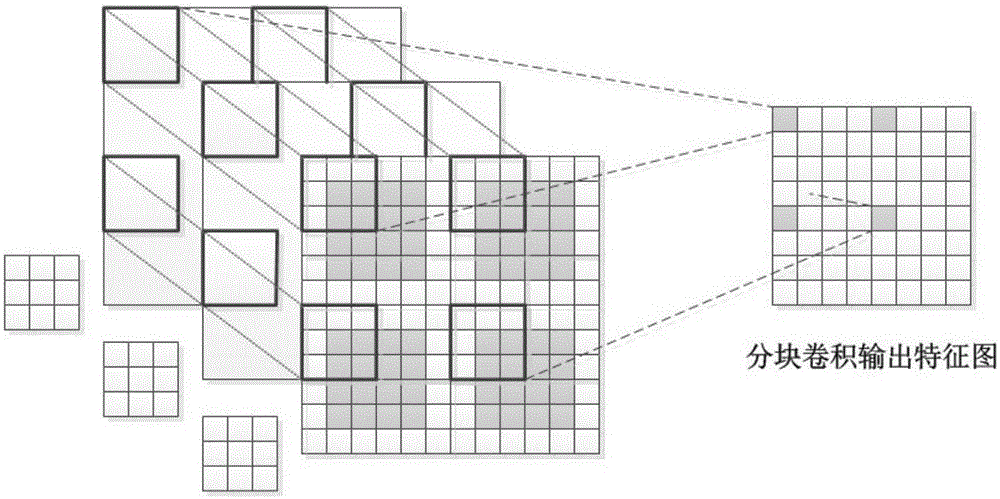

[0050] As shown in Figure 1, the block convolution optimization method for a convolutional neural network according to an embodiment of the present invention includes:

[0051] Step 1: based on a preset convolutional neural network model, select the convolutional layer to be partitioned into blocks, and determine the upper limit of the block size for that layer;

[0052] Step 2: according to the size of the input feature map and the block-size upper limit obtained in Step 1, determine the number of blocks and the block size for the input feature map of the convolutional layer to be block-convolved;

[0053] Step 3: based on the number o...
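The text available here does not state how Step 2 derives the block count and block size, and Step 3 is truncated, so the following is only a minimal sketch under stated assumptions, not the patented procedure. It assumes (1) the block-size upper limit from Step 1 is simply given per spatial dimension, (2) the number of blocks is the smallest count that keeps every block within that limit, i.e. ceil(size / limit), with block sizes balanced to differ by at most one, and (3) each block is then convolved independently with its own zero padding, which is one common way to realize block convolution because it removes data dependencies between neighbouring blocks. The helper names `partition_1d`, `conv2d_same`, and `block_conv2d` are hypothetical, and the example uses a single channel, stride 1, and an odd-sized kernel for brevity.

```python
import math


def partition_1d(size: int, max_block: int) -> list[int]:
    """Split `size` into the fewest blocks no larger than `max_block`.

    Hypothetical policy: n = ceil(size / max_block), sizes balanced so they
    differ by at most 1 (the larger blocks come first).
    """
    if size <= 0 or max_block <= 0:
        raise ValueError("size and max_block must be positive")
    n = math.ceil(size / max_block)
    base, extra = divmod(size, n)
    return [base + 1 if i < extra else base for i in range(n)]


def conv2d_same(x: list[list[float]], k: list[list[float]]) -> list[list[float]]:
    """Single-channel 2D cross-correlation, stride 1, zero 'same' padding (odd kernel)."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, z = i + a - ph, j + b - pw
                    # Positions outside the (block-local) input count as zero padding.
                    if 0 <= y < h and 0 <= z < w:
                        acc += x[y][z] * k[a][b]
            out[i][j] = acc
    return out


def block_conv2d(x, k, max_block_h: int, max_block_w: int) -> list[list[float]]:
    """Tile `x` into blocks and convolve each block independently (assumed scheme)."""
    h, w = len(x), len(x[0])
    rows = partition_1d(h, max_block_h)
    cols = partition_1d(w, max_block_w)
    out = [[0.0] * w for _ in range(h)]
    r0 = 0
    for bh in rows:
        c0 = 0
        for bw in cols:
            # Extract the block; no halo rows/columns are borrowed from neighbours.
            tile = [row[c0:c0 + bw] for row in x[r0:r0 + bh]]
            y = conv2d_same(tile, k)
            for i in range(bh):
                out[r0 + i][c0:c0 + bw] = y[i]
            c0 += bw
        r0 += bh
    return out
```

For example, `partition_1d(224, 64)` gives four blocks of 56, and `partition_1d(10, 4)` gives `[4, 3, 3]`. Convolving a 4×4 map of ones with a 3×3 kernel of ones in 2×2 blocks yields 4.0 everywhere, whereas the unblocked convolution yields 9.0 at the interior positions. This illustrates the trade-off of the assumed scheme: each block needs only its own data (smaller buffers, no inter-block communication), but outputs near block boundaries differ from an ordinary full-map convolution.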

