Method and system for breath sound identification based on machine learning

A machine learning breath sound technology, applied in neural learning methods, instruments, stethoscopes and related fields, that supports intelligent disease analysis and identification and facilitates clinical research.

Examples

Embodiment approach 1

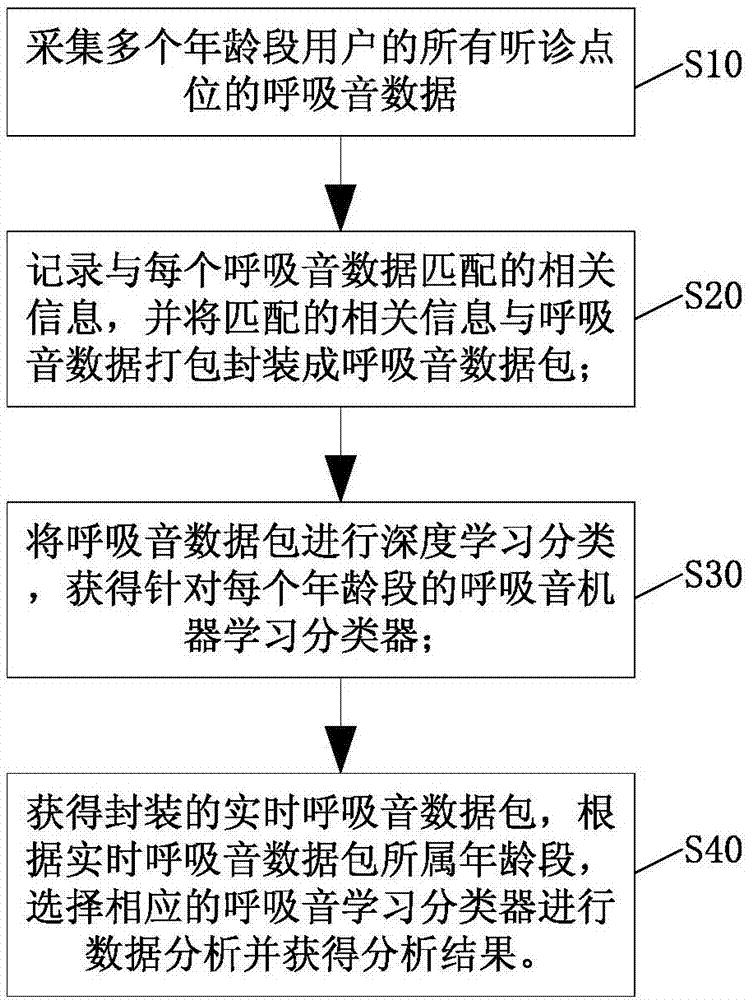

[0047] As shown in Figure 1, the present invention provides a breath sound identification method based on machine learning, comprising the following steps:

[0048] S10, collecting breath sound data of all auscultation points of users of multiple age groups;

[0049] S20, recording relevant information matched with each breath sound data, and packaging the matched relevant information and breath sound data into a breath sound data packet;

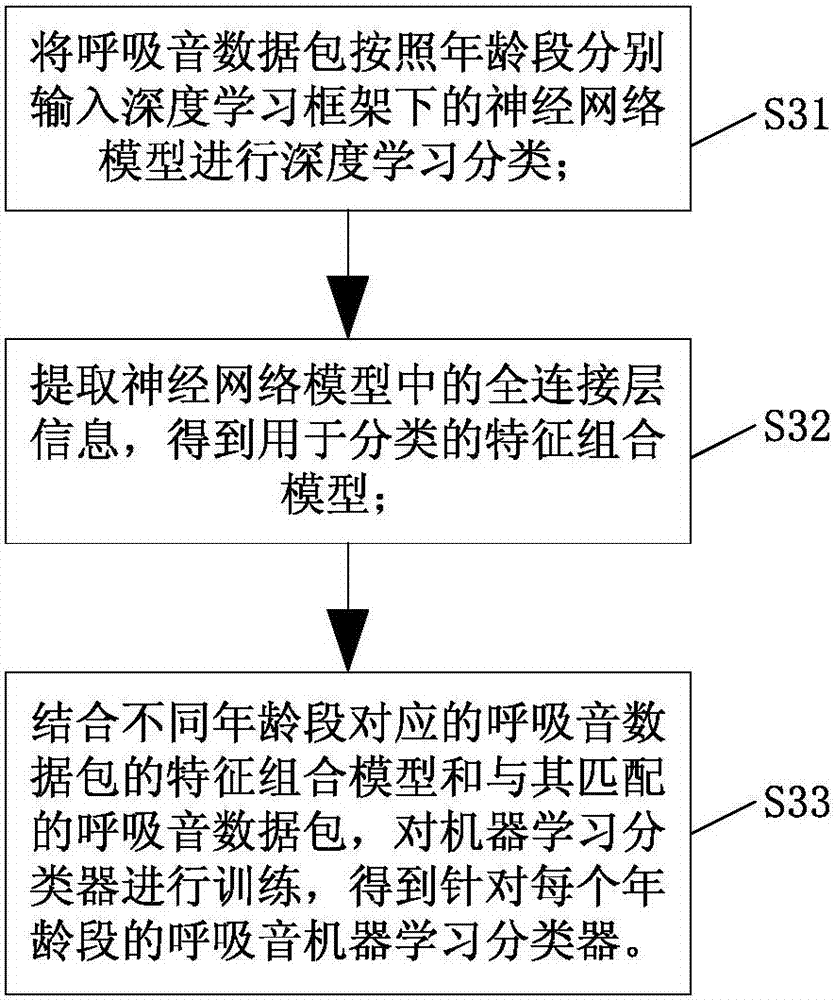

[0050] S30, performing deep learning classification on the breath sound data packet, and obtaining a breath sound machine learning classifier for each age group;

[0051] S40, obtaining an encapsulated real-time breath sound data packet, selecting the corresponding breath sound machine learning classifier according to the age group to which the real-time packet belongs, performing data analysis with it, and obtaining an analysis result.
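Steps S20 to S40 can be illustrated with a minimal Python sketch. It is not the patented implementation: the age-group boundaries, the `BreathSoundPacket` fields, and all function names are hypothetical, and a simple nearest-centroid classifier stands in for the deep learning classifier of S30, operating on feature vectors that are assumed to have been extracted from the audio beforehand.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical age-group boundaries in years; the source does not state them.
AGE_GROUPS: List[Tuple[str, int, int]] = [
    ("child", 0, 12),
    ("adult", 13, 59),
    ("senior", 60, 150),
]


def age_group_of(age: int) -> str:
    """Map an age to the name of its (assumed) age group."""
    for name, low, high in AGE_GROUPS:
        if low <= age <= high:
            return name
    raise ValueError(f"age out of range: {age}")


@dataclass
class BreathSoundPacket:
    """S20: breath sound data packaged with its matched information."""
    features: List[float]        # assumed pre-extracted feature vector
    age: int
    auscultation_point: str
    label: str = ""              # set for training packets, empty for real-time ones


class CentroidClassifier:
    """Stand-in for the S30 classifier: nearest centroid, not deep learning."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, packets: List[BreathSoundPacket]) -> "CentroidClassifier":
        grouped: Dict[str, List[List[float]]] = defaultdict(list)
        for p in packets:
            grouped[p.label].append(p.features)
        for label, rows in grouped.items():
            # Mean of each feature column over the packets with this label.
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, packet: BreathSoundPacket) -> str:
        def sq_dist(centroid: List[float]) -> float:
            return sum((a - b) ** 2 for a, b in zip(packet.features, centroid))
        return min(self.centroids, key=lambda lbl: sq_dist(self.centroids[lbl]))


def train_per_age_group(
    packets: List[BreathSoundPacket],
) -> Dict[str, CentroidClassifier]:
    """S30: train one classifier per age group."""
    by_group: Dict[str, List[BreathSoundPacket]] = defaultdict(list)
    for p in packets:
        by_group[age_group_of(p.age)].append(p)
    return {g: CentroidClassifier().fit(ps) for g, ps in by_group.items()}


def analyze(
    packet: BreathSoundPacket, classifiers: Dict[str, CentroidClassifier]
) -> str:
    """S40: pick the classifier for the packet's age group and run it."""
    return classifiers[age_group_of(packet.age)].predict(packet)
```

In this sketch the same feature vector can receive a different result depending on the age group, because `analyze` only consults the classifier trained on packets from that group.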

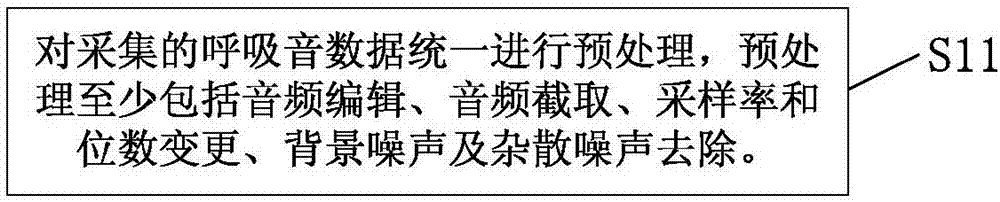

[0052] In the above embodiment, in step S10, considering that the probability of o...

Embodiment approach 2

[0065] On the basis of Embodiment 1, this embodiment of the present invention provides a breath sound identification system based on machine learning. As shown in Figure 5, it includes an electronic stethoscope 10, a handheld operating terminal 20, a data analysis server 30 and a database 40.

[0066] The electronic stethoscope 10 is used to collect the breath sound data of all auscultation points of users in multiple age groups, as described in step S10, and may also record the relevant information matched with the collected breath sound data, as described in step S20. For example, while collecting breath sounds at all auscultation points, the doctor uses the electronic stethoscope 10 to select the user's personal information, including at least age, sex, height, weight, and auscultation point; detected health status information, including at least blood pressure, blood sugar, heart rate, blood oxygen, disease history, and smoking history; as well as other inf...

Embodiment 1

[0076] The electronic stethoscope 10 is provided with related information such as the user's age, gender, auscultation point, and auscultation time, and transmits the breath sound data together with this related information to the user's mobile phone (i.e., the user's handheld operating terminal 21). The electronic stethoscope 10 communicates with the mobile phone over Bluetooth, with two-way data communication. A mobile app installed on the phone accesses the Internet wirelessly and communicates with the data service server 50. The data analysis server 30, the database 40 and the data service server 50 are all integrated into the cloud server 60.
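One way to picture the packet that travels from the stethoscope through the app to the cloud server is the Python sketch below. The source does not specify a wire format, so everything here is an assumption: 16-bit PCM samples, base64 encoding inside a JSON message, and the field and function names `info`, `audio_b64`, `encode_packet` and `decode_packet`. The Bluetooth and Internet transports themselves are not modeled.

```python
import base64
import json
import struct
from typing import Any, Dict, List, Tuple


def encode_packet(samples: List[int], info: Dict[str, Any]) -> str:
    """Bundle audio samples and related information into one JSON message.

    Assumes signed 16-bit little-endian PCM; the source does not specify this.
    """
    raw = struct.pack(f"<{len(samples)}h", *samples)
    return json.dumps({
        "info": info,                                    # age, gender, point, time
        "audio_b64": base64.b64encode(raw).decode("ascii"),
    })


def decode_packet(message: str) -> Tuple[List[int], Dict[str, Any]]:
    """Server-side inverse of encode_packet: recover samples and information."""
    doc = json.loads(message)
    raw = base64.b64decode(doc["audio_b64"])
    samples = list(struct.unpack(f"<{len(raw) // 2}h", raw))
    return samples, doc["info"]
```

Keeping the related information next to the audio in a single message means the server can read the age from `info` and choose the matching classifier without a second lookup.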

[0077] The electronic stethoscope 10 collects the breath sound data of the user's auscultation points and first transmits it to the app on the user's mobile phone; the app on the user's mobile phone then, through the data service server 50, with the doctor's mobile phone at the other end (medical hand-held operating ter...
