Time-dimension video super-resolution method based on deep learning

Deep learning and super-resolution technology, applied in the field of image processing, addresses the unsatisfactory stability and accuracy of super-resolved video images and the insufficient use of structural similarity, with the effects of reducing computational complexity, improving stability, and improving accuracy.


Embodiment Construction

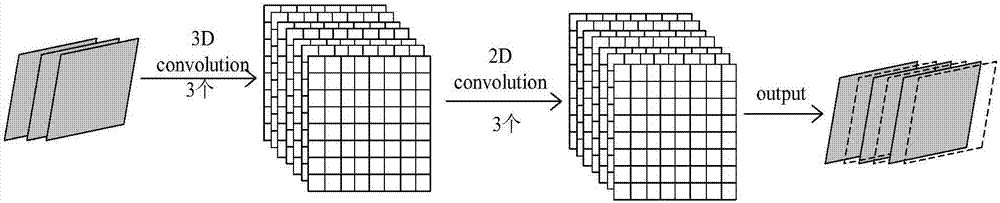

[0028] Embodiments and effects of the present invention will be further described in detail below in conjunction with the accompanying drawings.

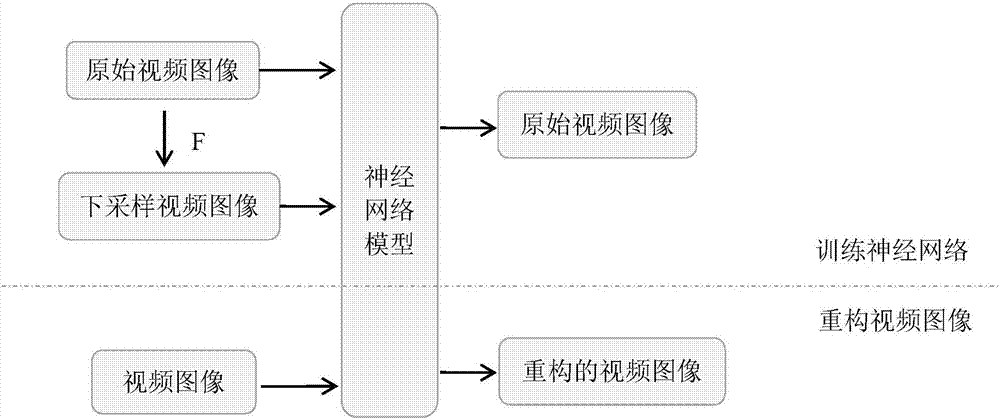

[0029] Referring to Figure 1, the time-dimension video super-resolution method based on deep learning of the present invention is implemented in the following steps:

[0030] Step 1: obtain the color video image set S.

[0031] (1a) From a given database, select a color video image set $S=\{S_1, S_2, \ldots, S_i, \ldots, S_{464814}\}$ containing 464814 samples, and convert $S$ to a grayscale video image set, which serves as the original video image set $X=\{X_1, X_2, \ldots, X_i, \ldots, X_{464814}\}$, where $X_i$ denotes the $i$-th original video image sample, $1 \le i \le 464814$; $M$ denotes the size of an original video image block, $M=576$; and $L_h$ denotes the number of image blocks in each sample of the original video image set, $L_h=6$;
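Step (1a) can be illustrated with a minimal sketch for a single sample. The text does not say how the color-to-grayscale conversion is done or how a block is laid out, so the following are assumptions made only for illustration: each block is a 24×24 patch (24·24 = 576 = M), the conversion uses the common BT.601 luma weights, and a sample is stored as an M × L_h matrix with one flattened block per column. The names `to_gray_sample` and `BLOCK_SIDE` are hypothetical.

```python
import numpy as np

M = 576          # size of one image block (from the text)
L_H = 6          # number of image blocks per sample (from the text)
BLOCK_SIDE = 24  # assumption: a block is a 24x24 patch, since 24 * 24 = 576

def to_gray_sample(color_sample):
    """Convert one color sample of shape (L_H, BLOCK_SIDE, BLOCK_SIDE, 3)
    into a grayscale matrix of shape (M, L_H), one flattened block per column.

    Assumption: BT.601 luma weights; the source does not name the conversion.
    """
    weights = np.array([0.299, 0.587, 0.114])
    # Weighted sum over the RGB axis gives an array of shape (L_H, 24, 24).
    gray = color_sample.astype(np.float64) @ weights
    # Flatten each block to length M, then put blocks in columns.
    return gray.reshape(L_H, M).T

# Synthetic stand-in for one color sample S_i (not real data).
rng = np.random.default_rng(0)
S_i = rng.integers(0, 256, size=(L_H, BLOCK_SIDE, BLOCK_SIDE, 3))
X_i = to_gray_sample(S_i)
print(X_i.shape)
```

Applying the same function to every sample in S would yield the set X; whether the original method stores samples in this column layout is not confirmed by the text.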

[0032] (1b) Use the downsampling matrix F to directly downsample the original video image set X to obtain the downsampled video ...
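The description of step (1b) is truncated in this excerpt, so the form of F is not given here. As a rough sketch only, and assuming that "direct" downsampling in the time dimension means keeping every second block of a sample without filtering, F can be written as a 0/1 selection matrix applied on the right of an M × L_h sample. The factor of 2, the column layout, and the helper name `temporal_downsampling_matrix` are all assumptions, not details taken from the source.

```python
import numpy as np

def temporal_downsampling_matrix(num_blocks, factor):
    """Build a (num_blocks x kept) 0/1 matrix that selects every
    `factor`-th block (column) of a sample. Hypothetical form of F."""
    kept = np.arange(0, num_blocks, factor)
    F = np.zeros((num_blocks, kept.size))
    F[kept, np.arange(kept.size)] = 1.0
    return F

# Synthetic stand-in for one grayscale sample X_i with M = 576, L_h = 6.
X_i = np.arange(576 * 6, dtype=np.float64).reshape(576, 6)

F = temporal_downsampling_matrix(num_blocks=6, factor=2)  # assumed factor
Y_i = X_i @ F  # downsampled sample: blocks 0, 2, 4 are kept
print(Y_i.shape)
```

Writing the operation as a matrix product keeps it linear and easy to pair with a learned reconstruction step; the same result could be obtained by slicing `X_i[:, ::2]`.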
