Object volume calculating method based on Kinect

A Kinect-based object volume calculation method, applied in the field of computer vision, addresses the high cost and long measurement time of volume measurement. It supports automated measurement, reduces labor intensity, and improves measurement accuracy.

Examples

Embodiment 1

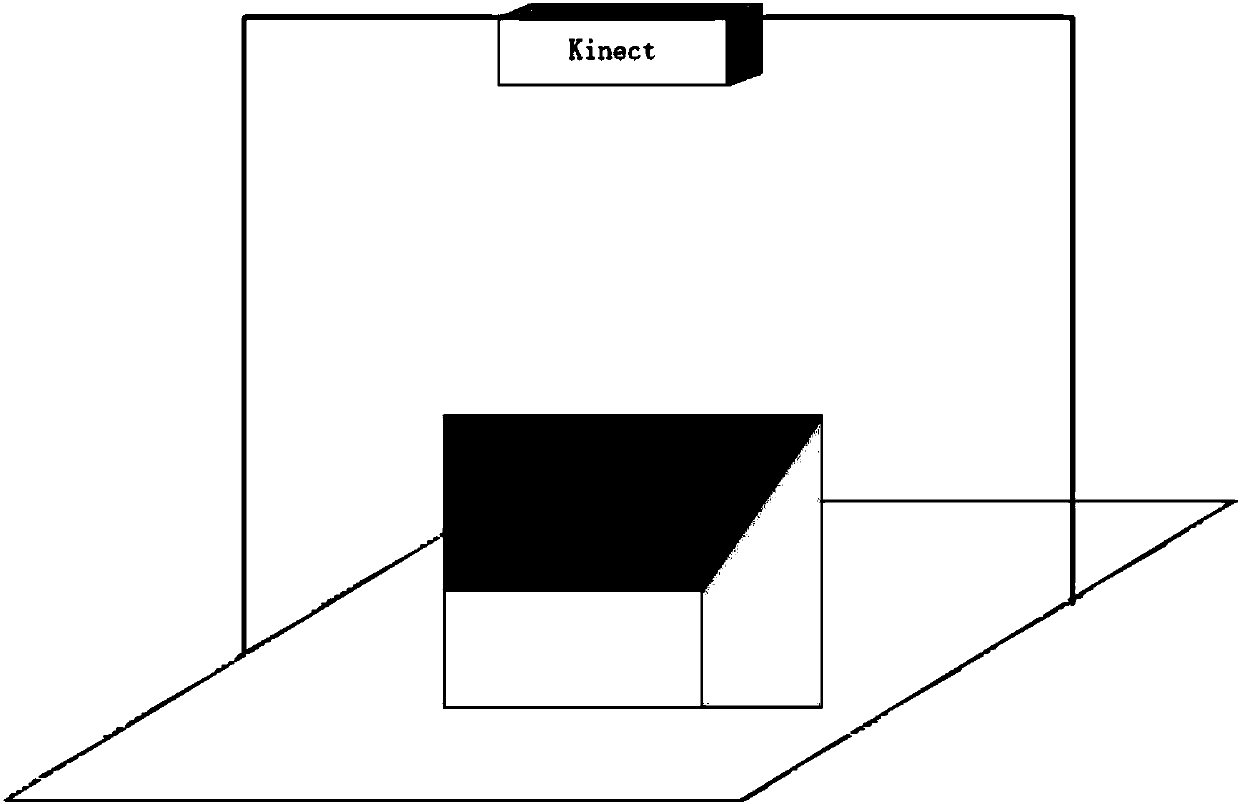

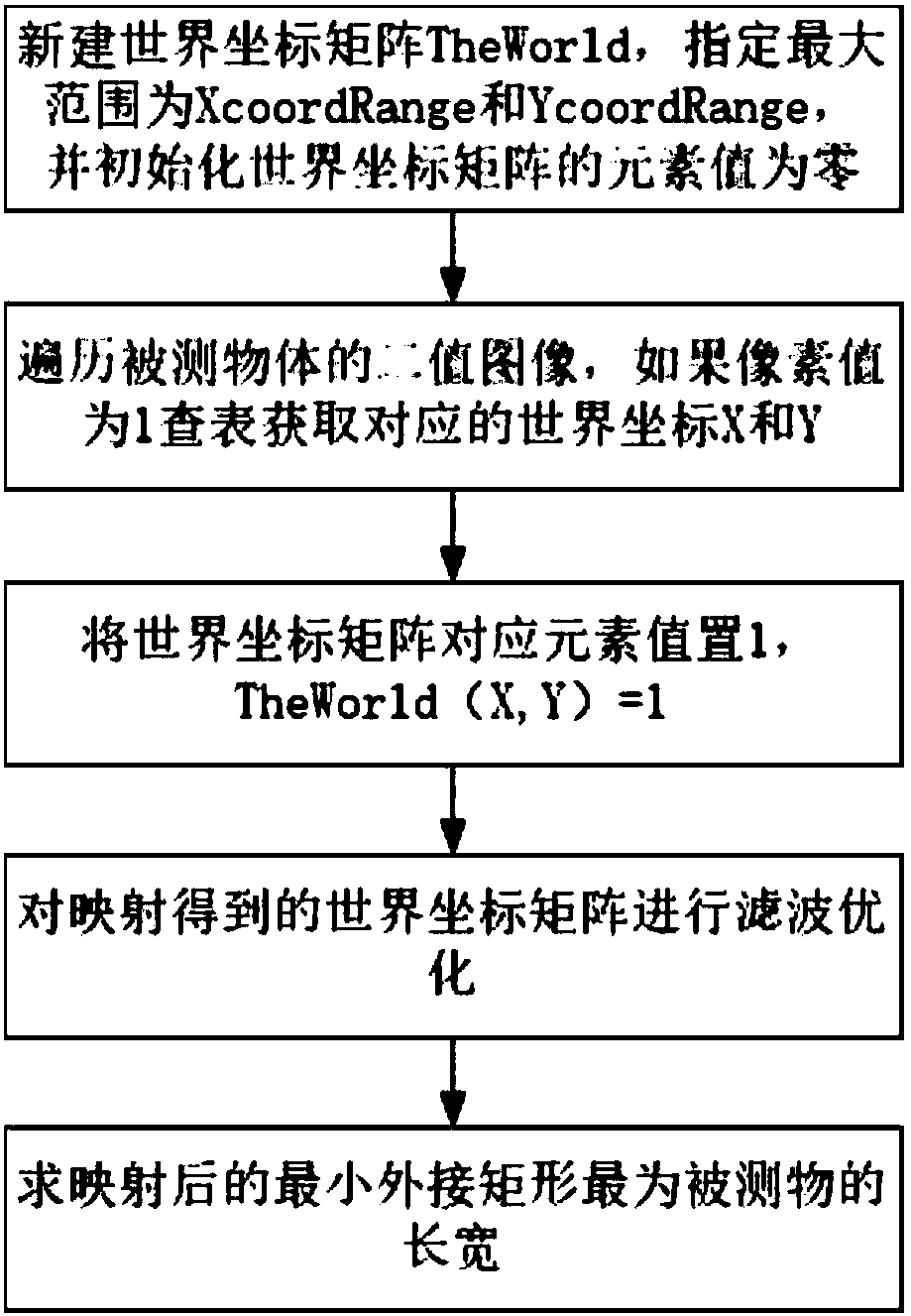

[0062] (1a) Use the Kinect to capture a foreground depth image and a foreground color image that contain the measured object, together with a background depth image and a background color image of the measuring platform without the measured object;

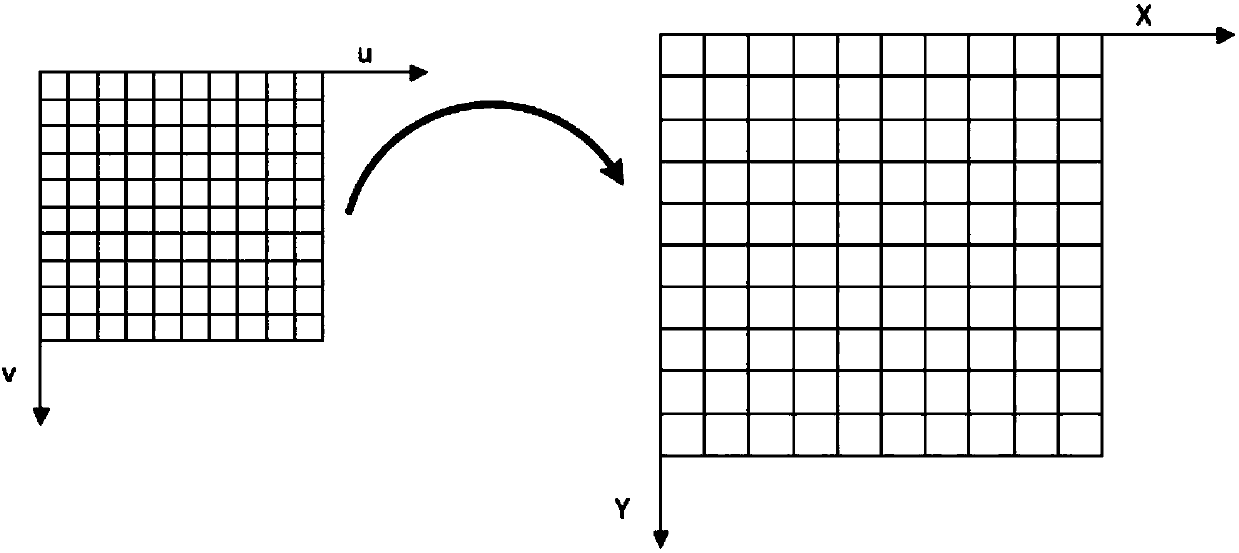

[0063] (1b) Perform checkerboard calibration on the color camera of the Kinect, and calculate the internal parameters and external parameters of the camera from the relationship between the image coordinate system and the world coordinate system. The general camera model can be expressed as shown in formula (I):

[0064]
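Assuming formula (I) is the standard pinhole projection model in homogeneous coordinates, it can be written as below. The scale factor $s$ is an assumption introduced here and is not named in the surviving text; the other symbols follow the definitions given for formula (I).

$$
s \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
= K \begin{bmatrix} R & t \end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
\tag{I}
$$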

[0065] In formula (I), K depends only on the internal structure of the camera and is called the internal parameters of the camera; R and t depend only on the position and orientation of the camera relative to the world coordinate system and are called the external parameters of the camera, where R is the rotation matrix and t is the translation vector; [x y] is the coordinate of an image pixel, and [X Y Z] is the coordin...
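As a minimal sketch of how the internal and external parameters map a world point to a pixel, the following Python snippet applies a standard pinhole projection. It assumes that formula (I) has this standard form. The function names `mat_vec` and `project_point` are hypothetical, and the numeric values of K, R and t are illustrative placeholders, not calibration results from the patent.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def project_point(K, R, t, world_point):
    """Project a world point [X, Y, Z] to pixel coordinates (x, y).

    Assumes the standard pinhole model: s * [x, y, 1]^T = K (R X + t).
    """
    # World coordinates -> camera coordinates using the external parameters.
    cam = [c + ti for c, ti in zip(mat_vec(R, world_point), t)]
    if cam[2] <= 0:
        raise ValueError("point is not in front of the camera")
    # Camera coordinates -> homogeneous pixel coordinates using K.
    hom = mat_vec(K, cam)
    s = hom[2]  # scale factor (equals the depth when K's last row is [0, 0, 1])
    return hom[0] / s, hom[1] / s


# Illustrative (hypothetical) parameters, not values from the patent.
K = [[525.0, 0.0, 320.0],
     [0.0, 525.0, 240.0],
     [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]      # camera axes aligned with the world axes
t = [0.0, 0.0, 1.0]        # world origin lies 1 unit in front of the camera

x, y = project_point(K, R, t, [0.1, -0.05, 0.0])
print(x, y)
```

In practice K, R and t would come from the checkerboard calibration in step (1b) rather than being written by hand.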
