A sparse matrix storage method on a SIMD many-core processor with multi-level cache

A sparse matrix storage technology for many-core processors, applied in the field of parallel programming. It addresses problems such as cache misses, high memory-access latency, low reuse of x-vector data, and low SIMD utilization. Its effects are improved utilization, high density, and improved computing efficiency.


Embodiment Construction

[0027] This section applies the present invention to a typical sparse matrix-vector multiplication (SpMV) on a SIMD many-core processor with a multi-level cache, in order to further describe the object, advantages, and key technical features of the invention. This implementation is only a typical example of the solution; any technical solution formed by replacement or equivalent transformation falls within the scope of protection claimed by the present invention.
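For orientation, the operation being optimized can be sketched with the common CSR (compressed sparse row) layout. This is a minimal baseline, not the storage format of the invention: the function name `spmv_csr` and the small 3x4 test matrix are hypothetical and chosen only for illustration.

```python
def spmv_csr(row_ptr, col_idx, values, x):
    """Compute y = A @ x for a matrix A stored in CSR form.

    row_ptr[i]:row_ptr[i+1] delimits the non-zeros of row i;
    col_idx and values hold their column indices and values.
    """
    n_rows = len(row_ptr) - 1
    y = [0.0] * n_rows
    for i in range(n_rows):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            # Indirect access into x: the access pattern depends on col_idx,
            # which is what makes x-vector reuse and SIMD loads difficult.
            acc += values[k] * x[col_idx[k]]
        y[i] = acc
    return y


# Hypothetical 3x4 matrix (illustration only, not the matrix A of the patent):
# [[1, 0, 2, 0],
#  [0, 0, 3, 0],
#  [4, 0, 0, 5]]
row_ptr = [0, 2, 3, 5]
col_idx = [0, 2, 2, 0, 3]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
x = [1.0, 2.0, 3.0, 4.0]

y = spmv_csr(row_ptr, col_idx, values, x)
```

The inner loop reads `x` at scattered positions, which is the kind of access that the problems listed in the summary (cache misses, low x-vector reuse, low SIMD utilization) refer to.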

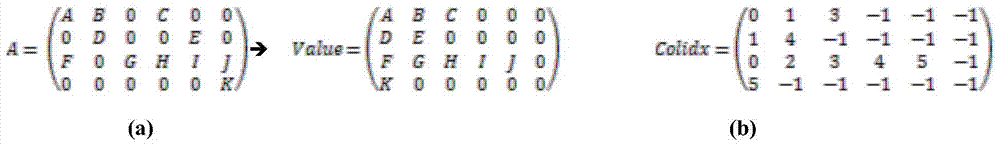

[0028] For a sparse matrix A to be computed:

[0029]

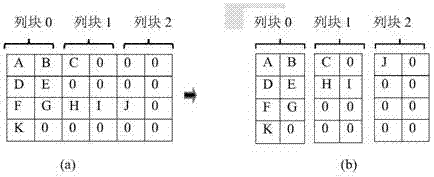

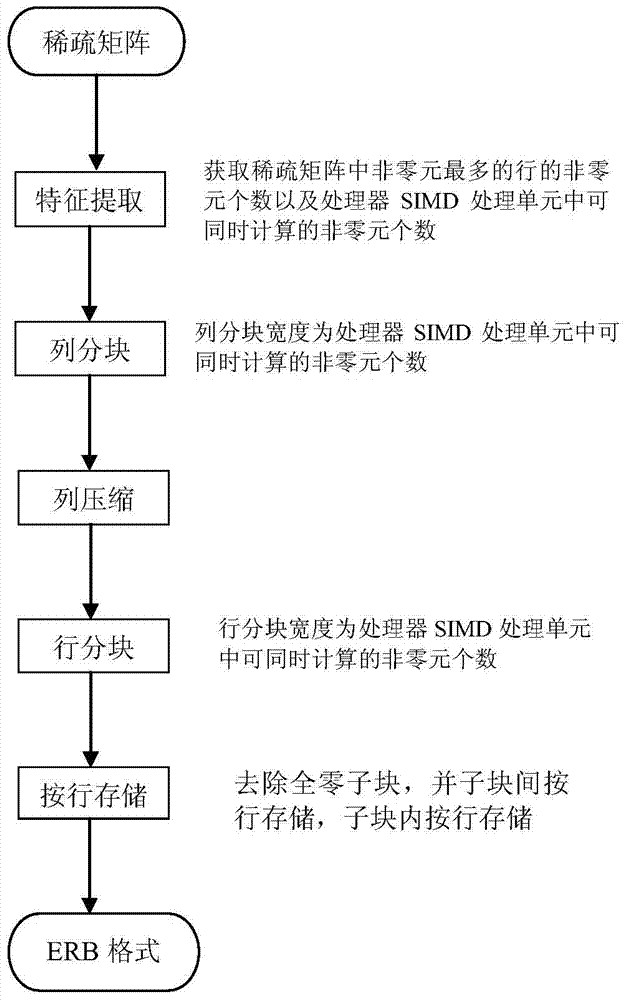

[0030] First, matrix A is processed according to the optimization method proposed by the present invention: feature extraction, matrix scanning, column blocking, column compression, row blocking, and row-by-row storage. This converts it to the ERB storage format shown in Figure 5. Alternatively, the sparse matrix to be calculated is stored directly in the Figure 5 storage format and saved to a file.
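The ERB layout itself is defined by Figure 5, which is not reproduced in this text, so the following is only a rough sketch of three of the generic ideas named above: column blocking, column compression, and row blocking with row-by-row storage. It does not model feature extraction or matrix scanning. The function names, the dictionary layout, the block sizes, and the test matrix are all assumptions made for illustration.

```python
from collections import defaultdict


def block_and_compress(entries, n_cols, col_block, row_block):
    """Group COO entries (row, col, value) into column blocks.

    Inside each column block, columns without non-zeros are dropped
    (column compression) and the remaining entries are grouped into
    row blocks, stored row by row.
    """
    blocks = []
    for c0 in range(0, n_cols, col_block):
        in_block = [(r, c, v) for (r, c, v) in entries if c0 <= c < c0 + col_block]
        if not in_block:
            continue  # skip column blocks with no non-zeros
        # Column compression: keep only the columns that actually occur.
        x_cols = sorted({c for _, c, _ in in_block})
        local = {c: j for j, c in enumerate(x_cols)}
        row_blocks = defaultdict(list)
        for r, c, v in sorted(in_block):  # sorted by row, then column
            row_blocks[r // row_block].append((r, local[c], v))
        blocks.append({"x_cols": x_cols, "row_blocks": dict(row_blocks)})
    return blocks


def spmv_blocked(blocks, x, n_rows):
    """Compute y = A @ x from the blocked representation."""
    y = [0.0] * n_rows
    for blk in blocks:
        # The needed slice of x is gathered once per column block and
        # then reused by every row block in that column block.
        x_local = [x[c] for c in blk["x_cols"]]
        for rows in blk["row_blocks"].values():
            for r, j, v in rows:
                y[r] += v * x_local[j]
    return y


# Hypothetical 3x4 matrix in COO form (illustration only).
entries = [(0, 0, 1.0), (0, 2, 2.0), (1, 2, 3.0), (2, 0, 4.0), (2, 3, 5.0)]
x = [1.0, 2.0, 3.0, 4.0]

blocks = block_and_compress(entries, n_cols=4, col_block=2, row_block=2)
y = spmv_blocked(blocks, x, n_rows=3)
```

In this sketch the first column block keeps only column 0, since column 1 holds no non-zeros, so its gathered slice of `x` is denser than the original. How the actual method chooses block sizes and lays out data for SIMD lanes is determined by the patent's figures and claims, not by this example.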

[0031] At computation time, read from the file the Figure 5 sparse matr...
