91 results about "Language speech" patented technology

Speech is the verbal expression of language and includes articulation (the way sounds and words are formed). Language is the entire system of giving and getting information in a meaningful way. It's understanding and being understood through communication — verbal, nonverbal, and written.

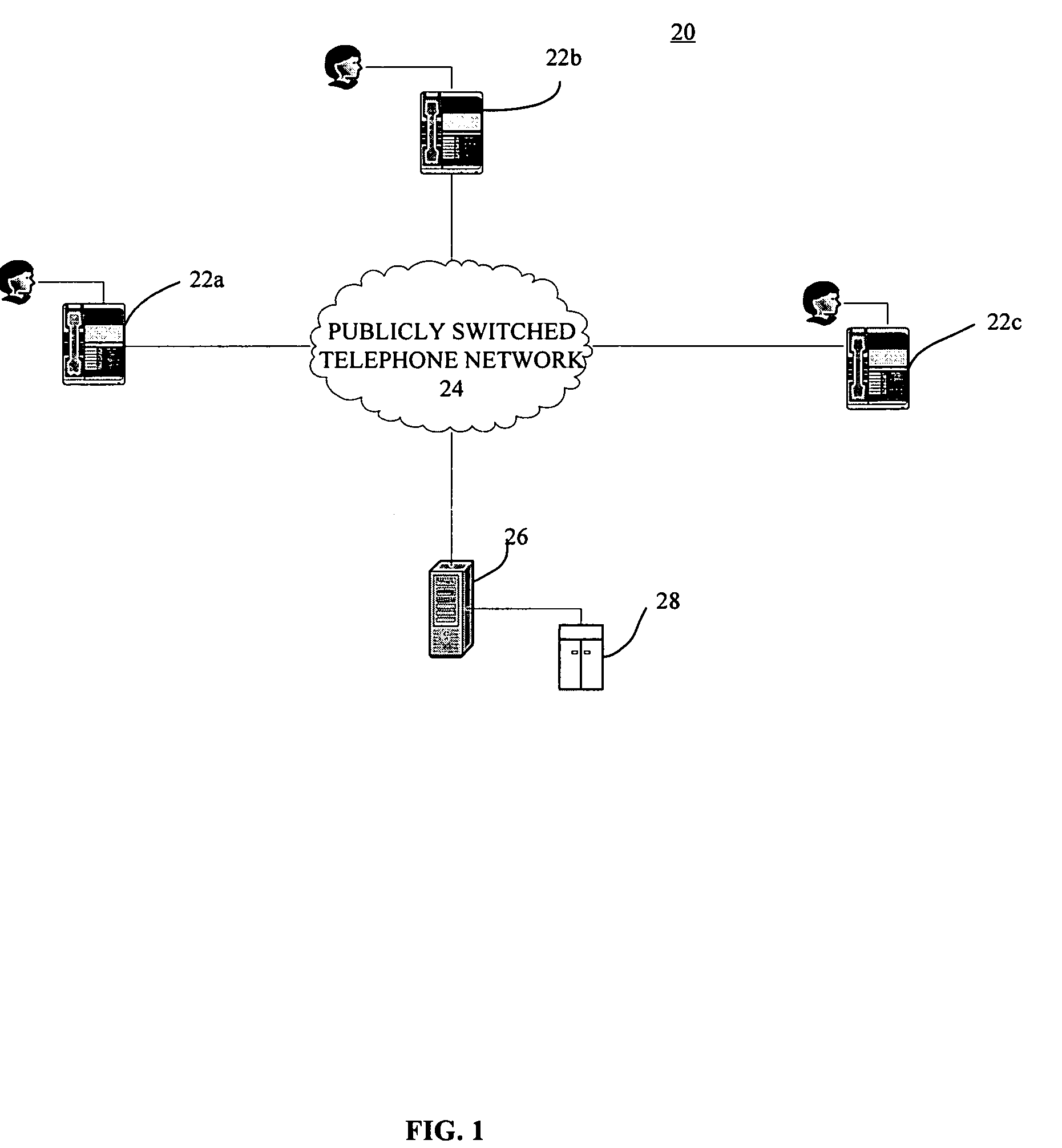

Multi-language speech recognition system

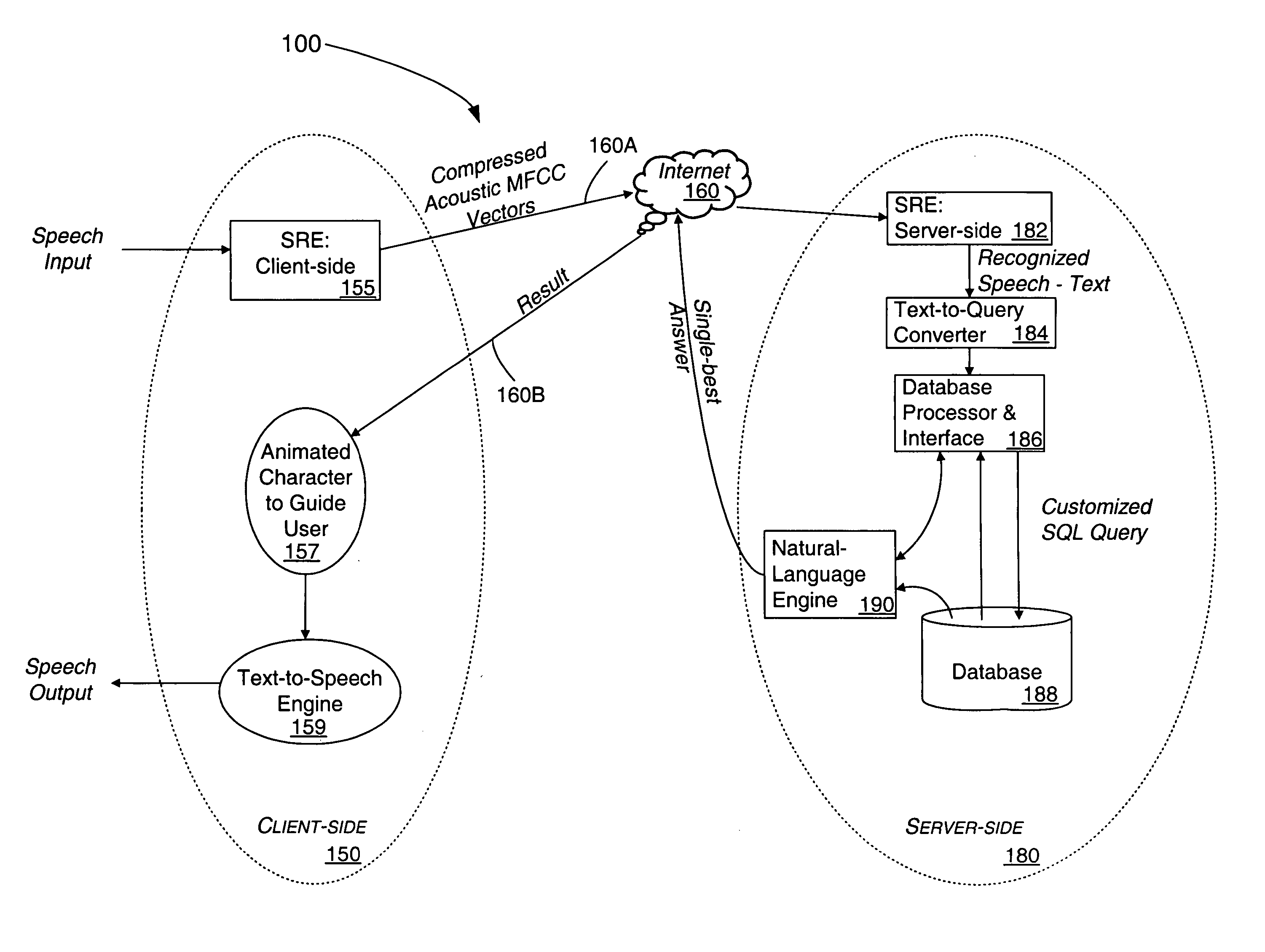

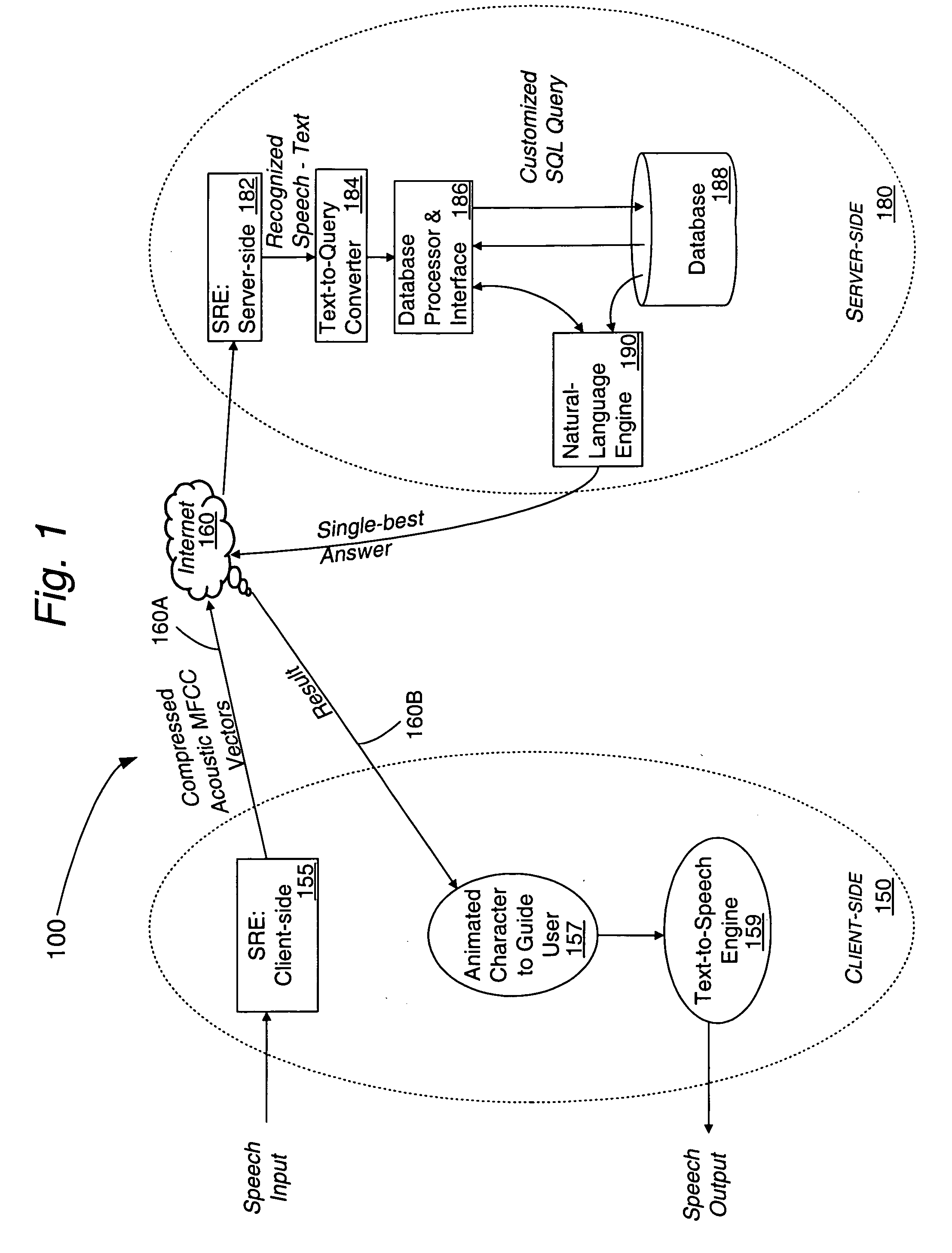

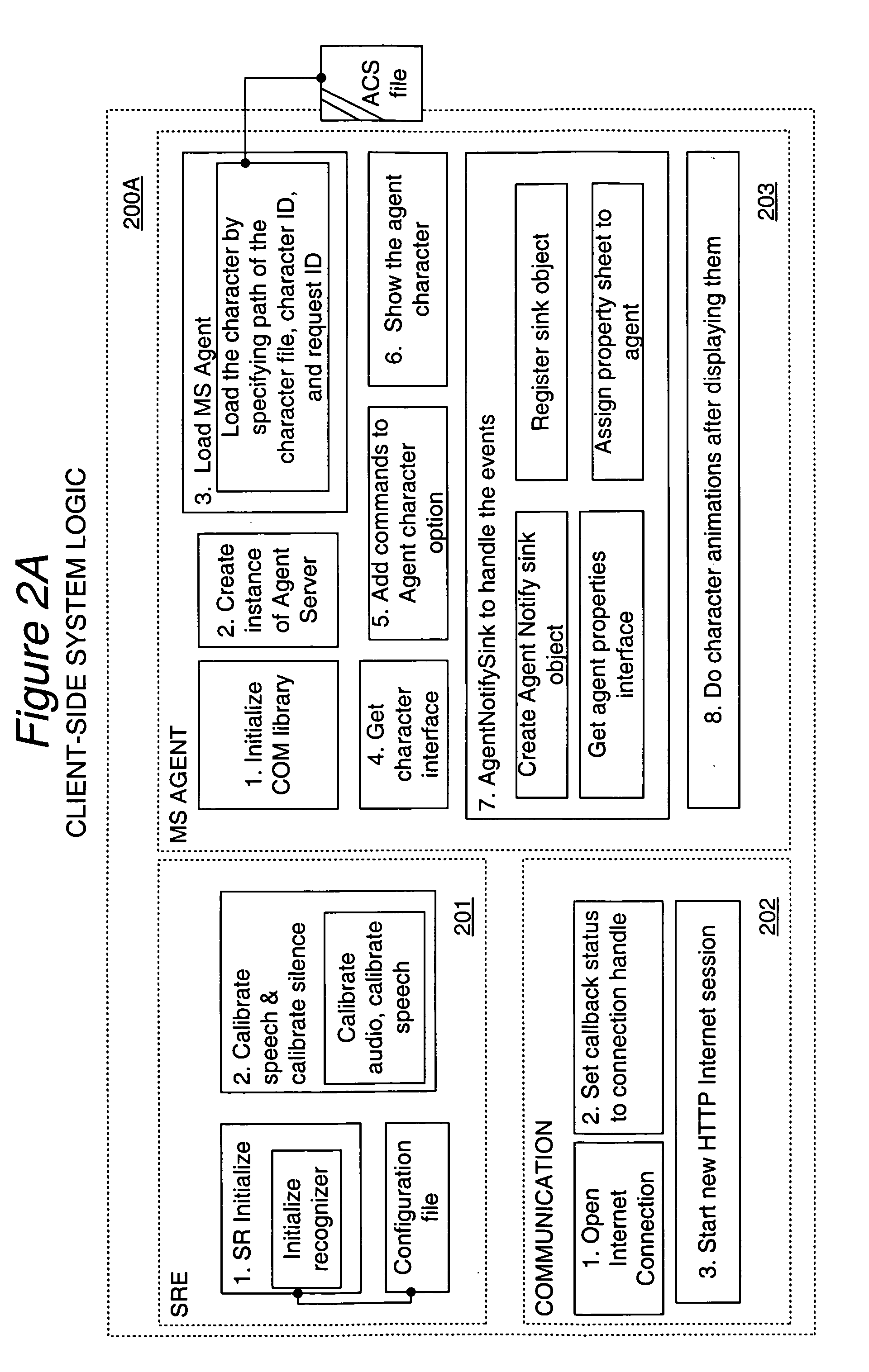

Inactive | US20050119897A1 | Flexibly and optimally distributed | Improve accuracy | Natural language translation | Digital data information retrieval | Multi language | Client-side

A speech recognition system includes distributed processing across a client and server for recognizing a spoken query by a user. A number of different speech models for different natural languages are used to support and detect a natural language spoken by a user. In some implementations an interactive electronic agent responds in the user's native language to facilitate a real-time, human-like dialogue.

Owner:NUANCE COMM INC

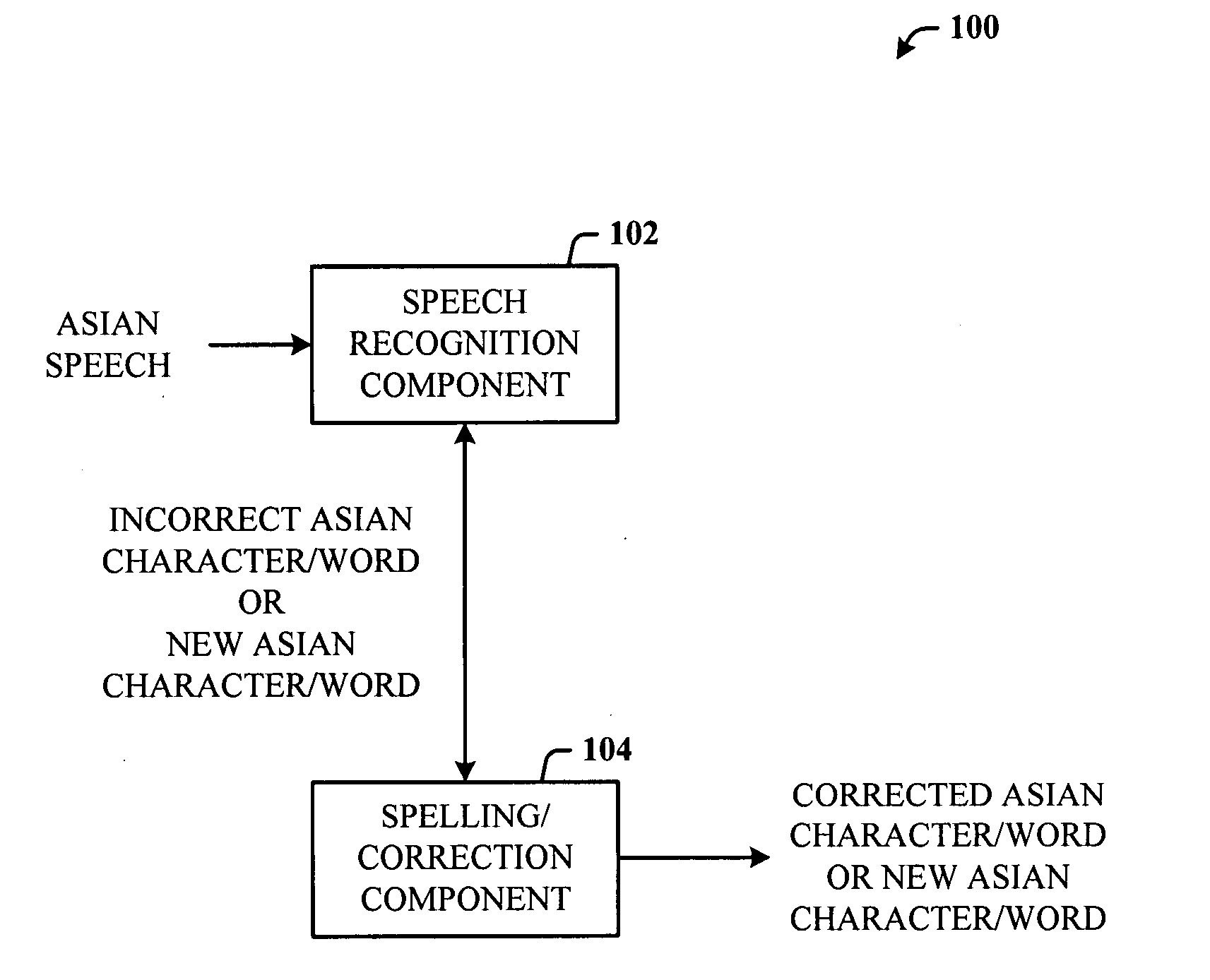

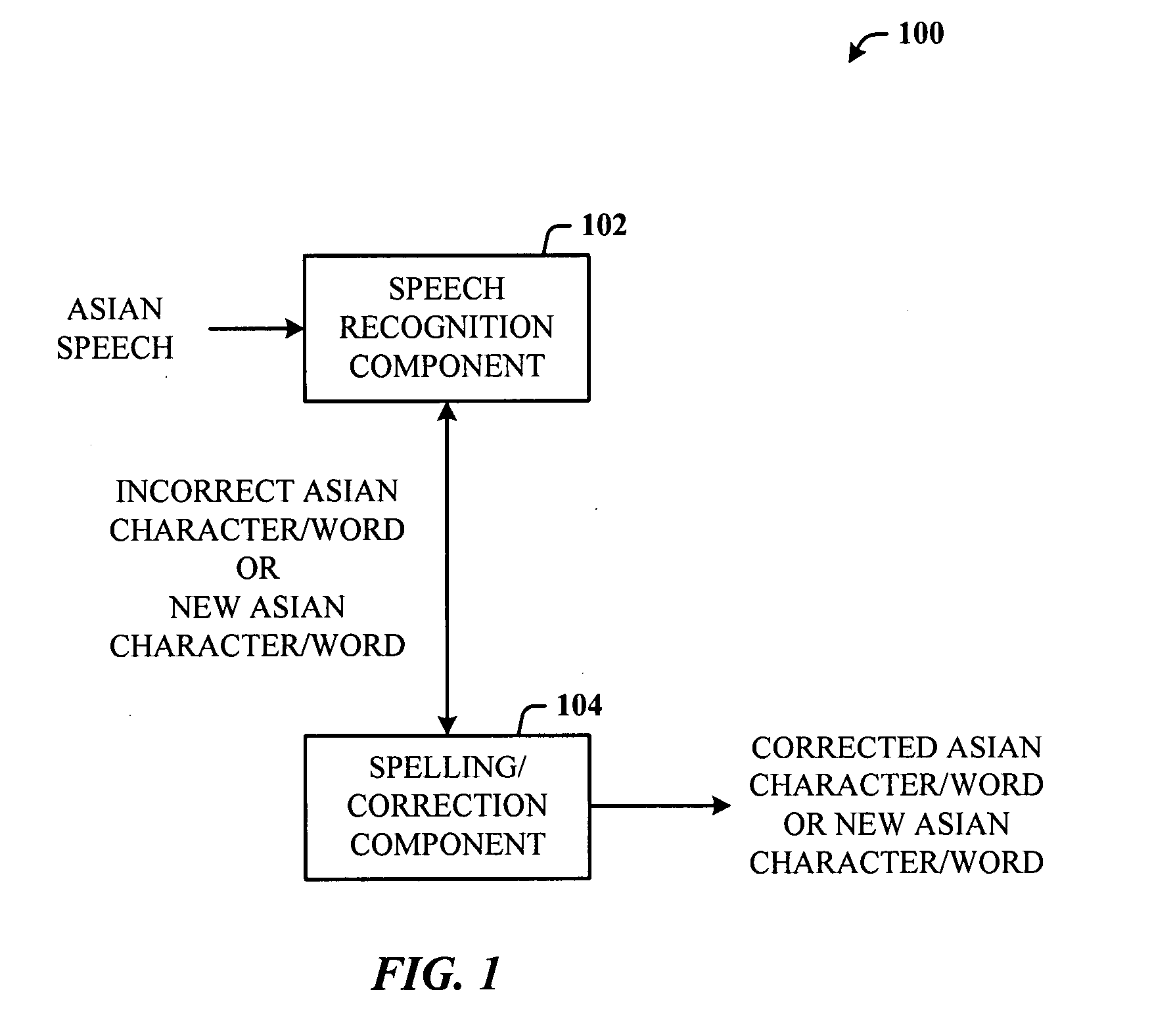

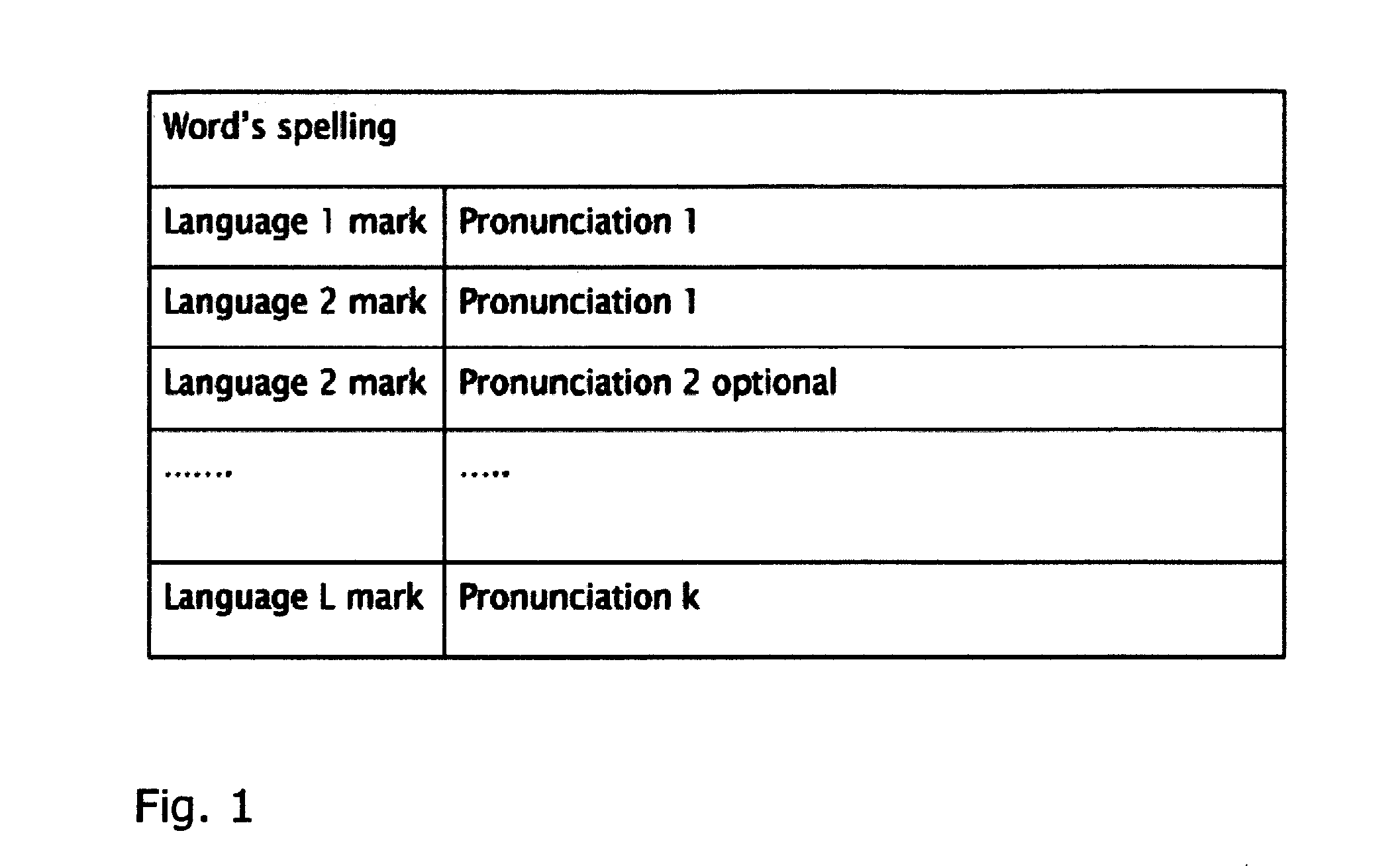

Recognition architecture for generating Asian characters

Active | US20080270118A1 | Easy to determine | Easy input | Natural language data processing | Speech recognition | Speech input | Language speech

Architecture for correcting incorrect recognition results in an Asian language speech recognition system. A spelling mode can be launched in response to receiving speech input, the spelling mode for correcting incorrect spelling of the recognition results or generating new words. Correction can be obtained using speech and/or manual selection and entry. The architecture facilitates correction in a single pass, rather than multiple times as in conventional systems. Words corrected using the spelling mode are corrected as a unit and treated as a word. The spelling mode applies to languages of at least the Asian continent, such as Simplified Chinese, Traditional Chinese, and/or other Asian languages such as Japanese.

Owner:MICROSOFT TECH LICENSING LLC

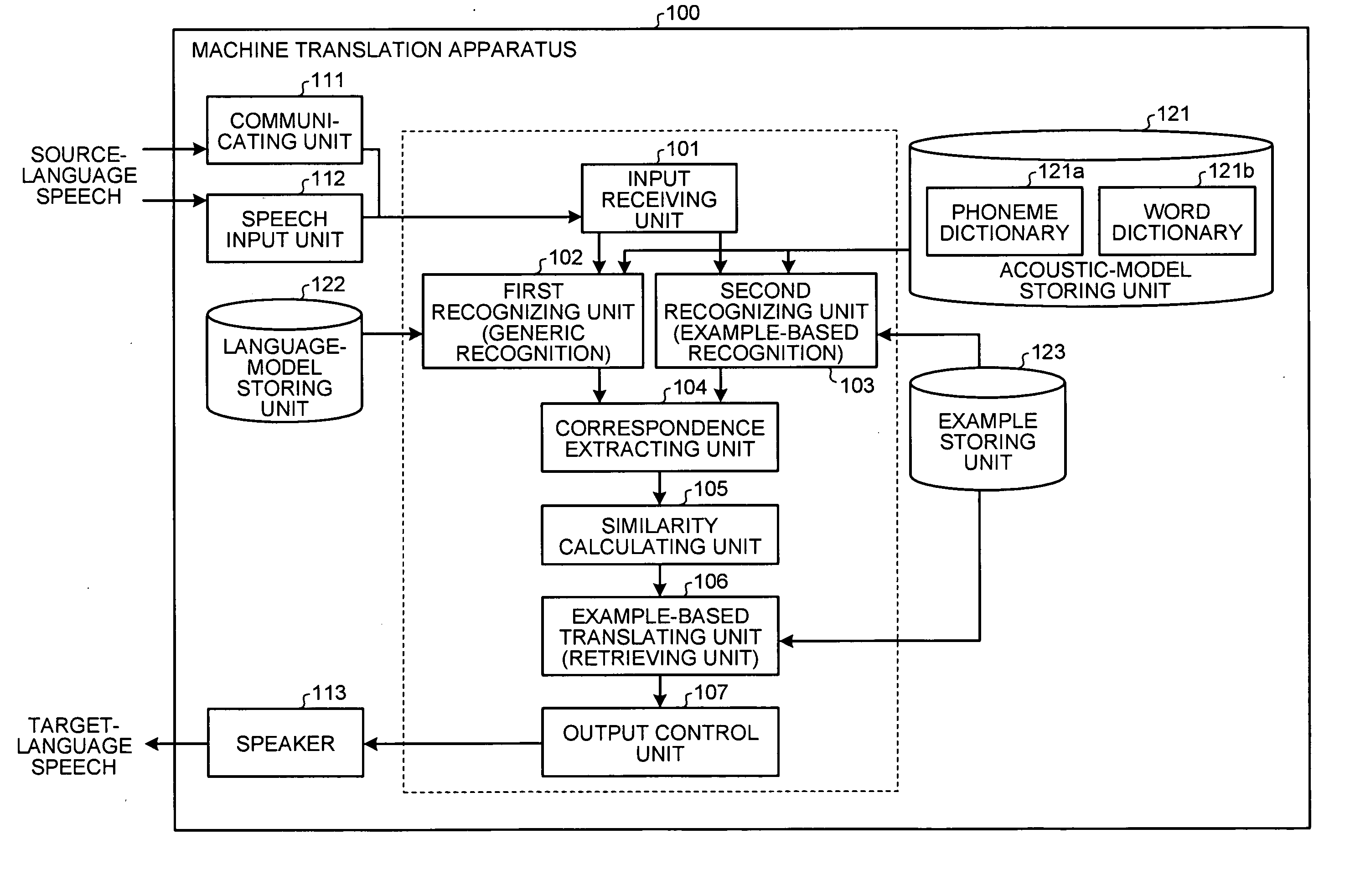

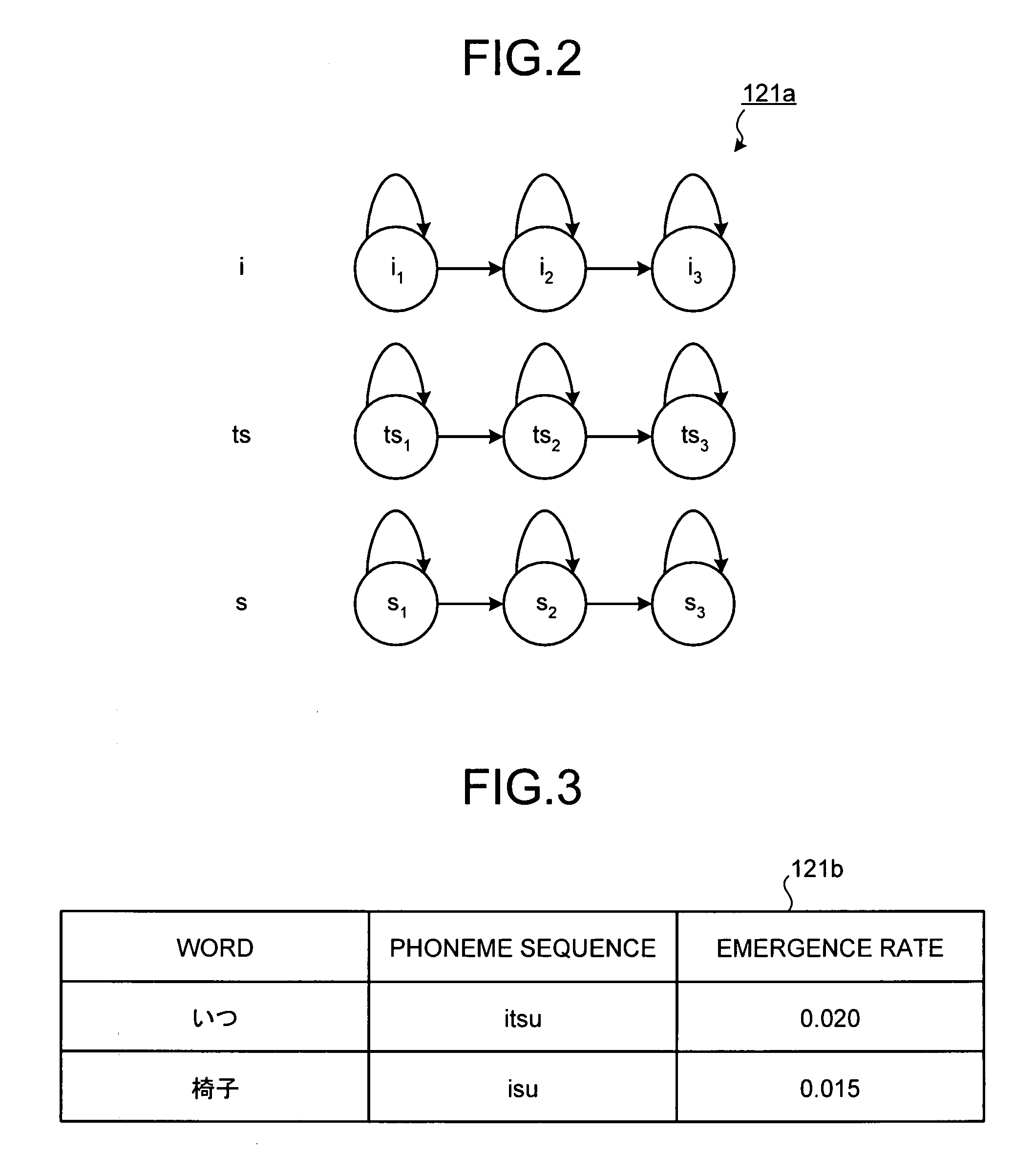

Method, apparatus, and computer program product for machine translation

Inactive | US20080077391A1 | Natural language translation | Speech recognition | Language speech | Machine translation

A first recognizing unit recognizes a first-language speech as a first-language character string, and outputs a first recognition result. A second recognizing unit recognizes the first-language speech as a most probable first-language example from among first-language examples stored in an example storing unit, and outputs a second recognition result. A retrieving unit retrieves, when a similarity between the first recognition result and the second recognition result exceeds a predetermined threshold, a second-language example corresponding to the second recognition result from the example storing unit.

Owner:TOSHIBA DIGITAL SOLUTIONS CORP
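The threshold check in the abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the example store, the character-level similarity measure from `difflib`, the threshold value, and the function names are all assumptions made for illustration, and the second recognizer is approximated by snapping the first recognition result to the closest stored example rather than by recognizing audio.

```python
from difflib import SequenceMatcher

# Hypothetical example store: first-language example -> second-language example.
EXAMPLES = {
    "where is the station": "eki wa doko desu ka",
    "how much is this": "kore wa ikura desu ka",
}

def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for the patent's measure."""
    return SequenceMatcher(None, a, b).ratio()

def best_example(text: str) -> str:
    """Approximate the second recognizer: pick the most similar stored example."""
    return max(EXAMPLES, key=lambda example: similarity(text, example))

def retrieve_translation(first_result: str, threshold: float = 0.8):
    """Return the stored second-language example only when both results agree closely."""
    second_result = best_example(first_result)
    if similarity(first_result, second_result) > threshold:
        return EXAMPLES[second_result]
    return None  # below the threshold: no example is retrieved

print(retrieve_translation("where is the station"))
```

When the two results diverge, the function returns `None`; what the system does in that case is not covered by the abstract.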

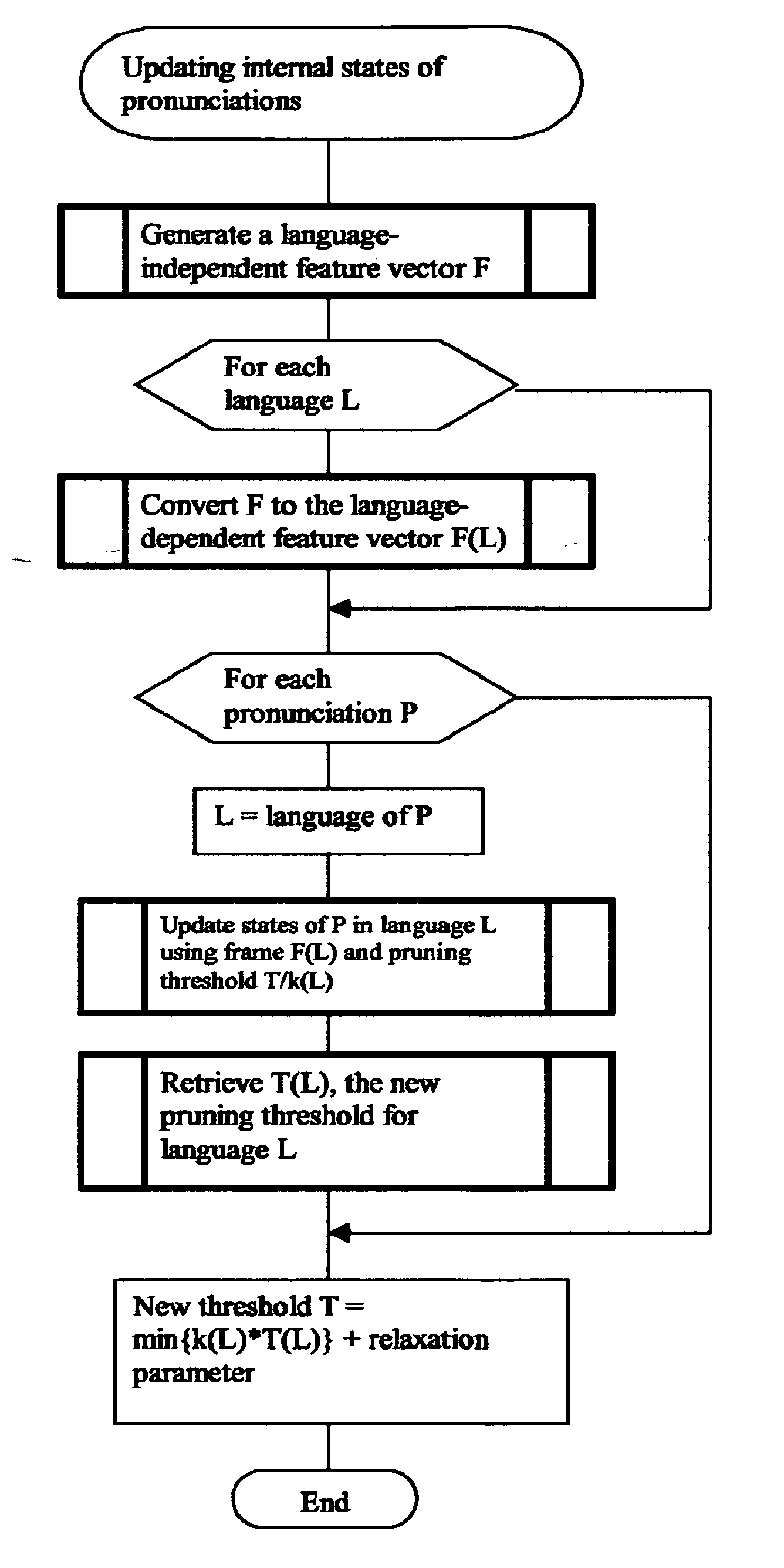

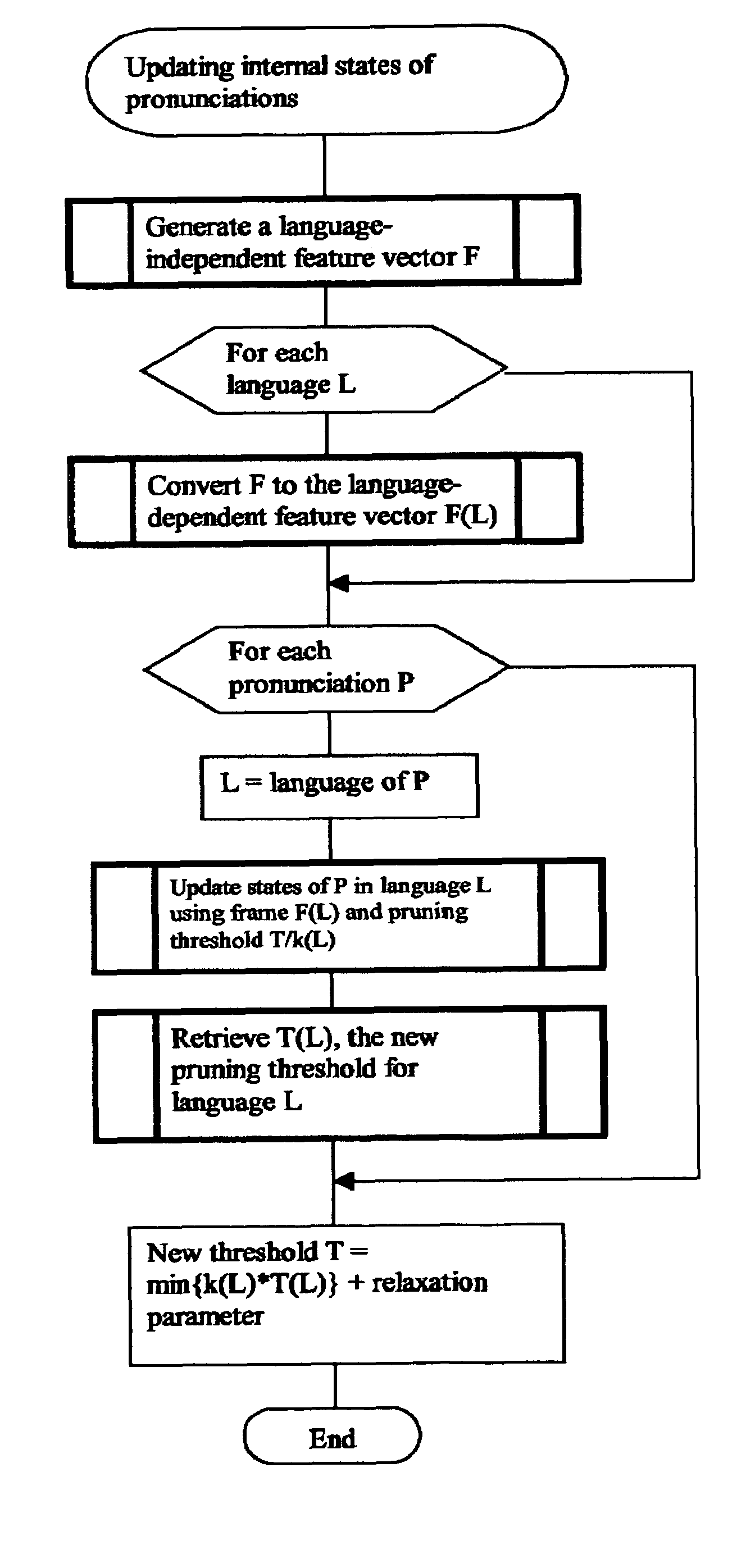

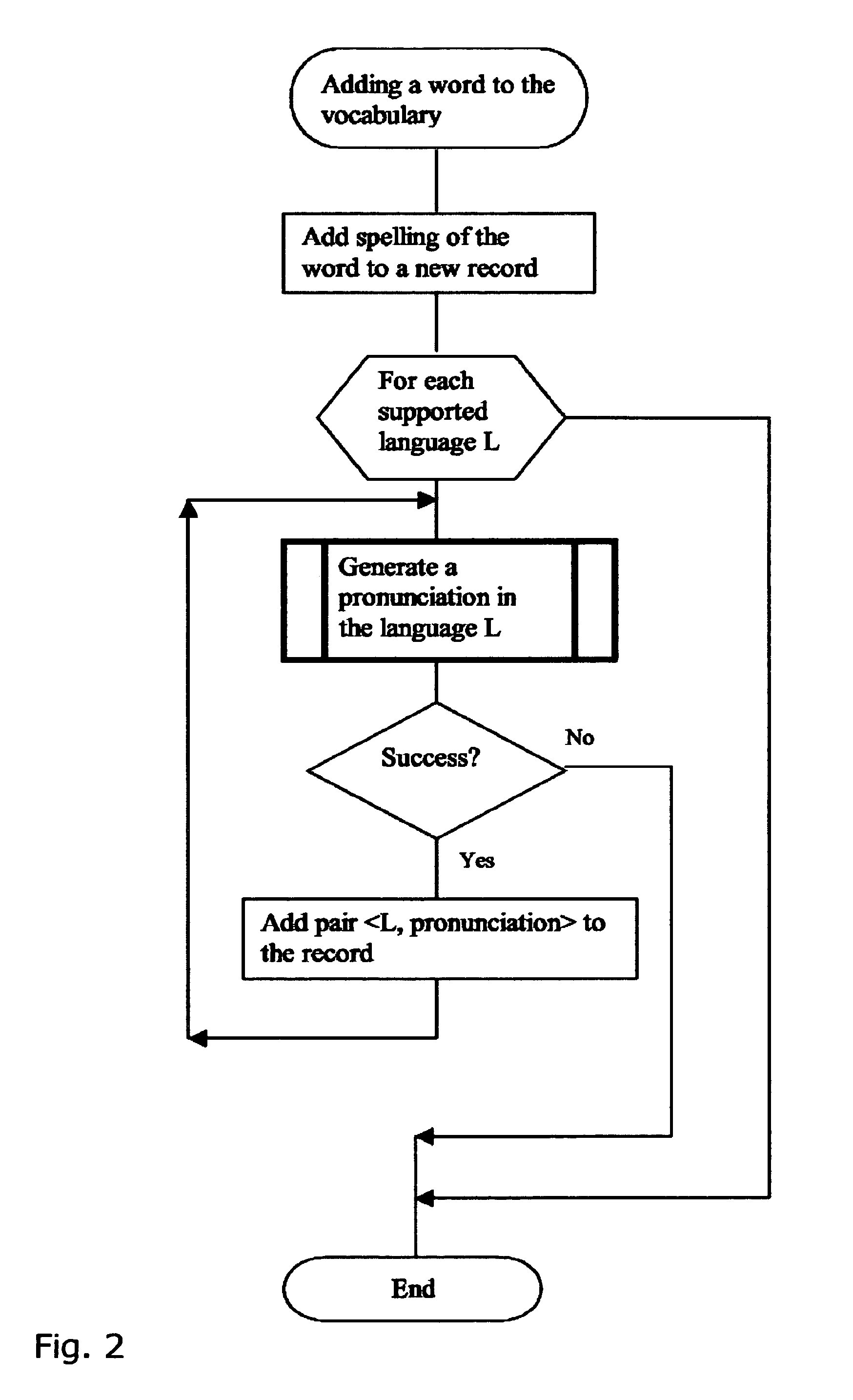

Method of Multilingual Speech Recognition by Reduction to Single-Language Recognizer Engine Components

Inactive | US20050187758A1 | Natural language data processing | Speech recognition | Computation complexity | Language speech

In some speech recognition applications, not only is the language of the utterance not known in advance, but a single utterance may also contain words in more than one language. At the same time, it is impractical to build speech recognizers for all expected combinations of languages. Moreover, business needs may require a new combination of languages to be supported in a short period of time. The invention addresses this issue by a novel way of combining and controlling the components of the single-language speech recognizers to produce multilingual speech recognition functionality capable of recognizing multilingual utterances at a modest increase of computational complexity.

Owner:KHASIN ARKADY

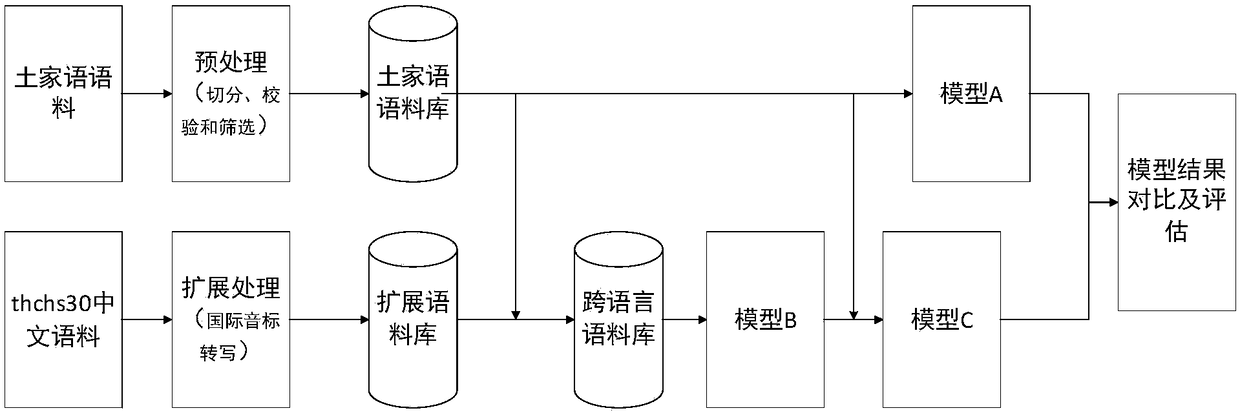

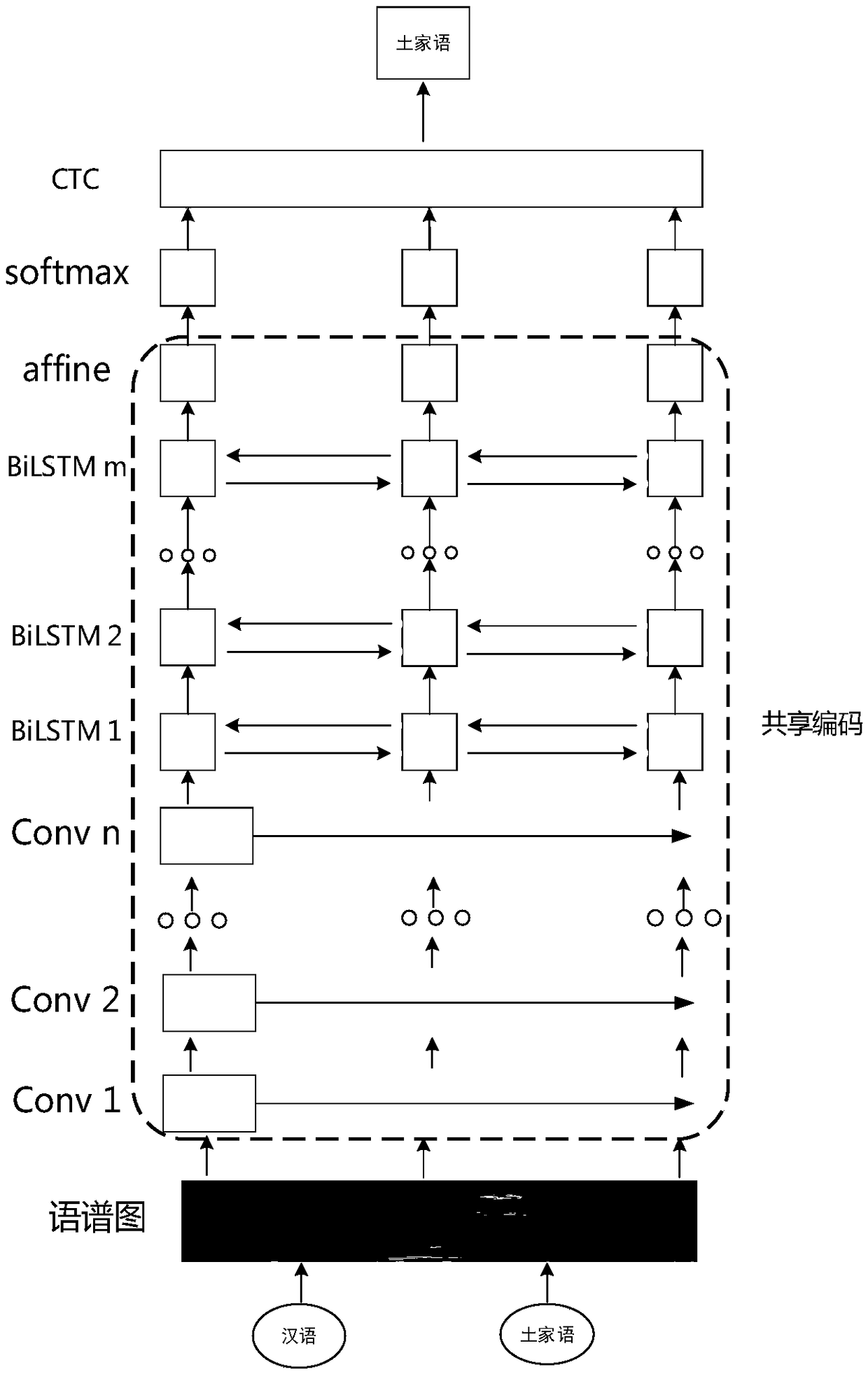

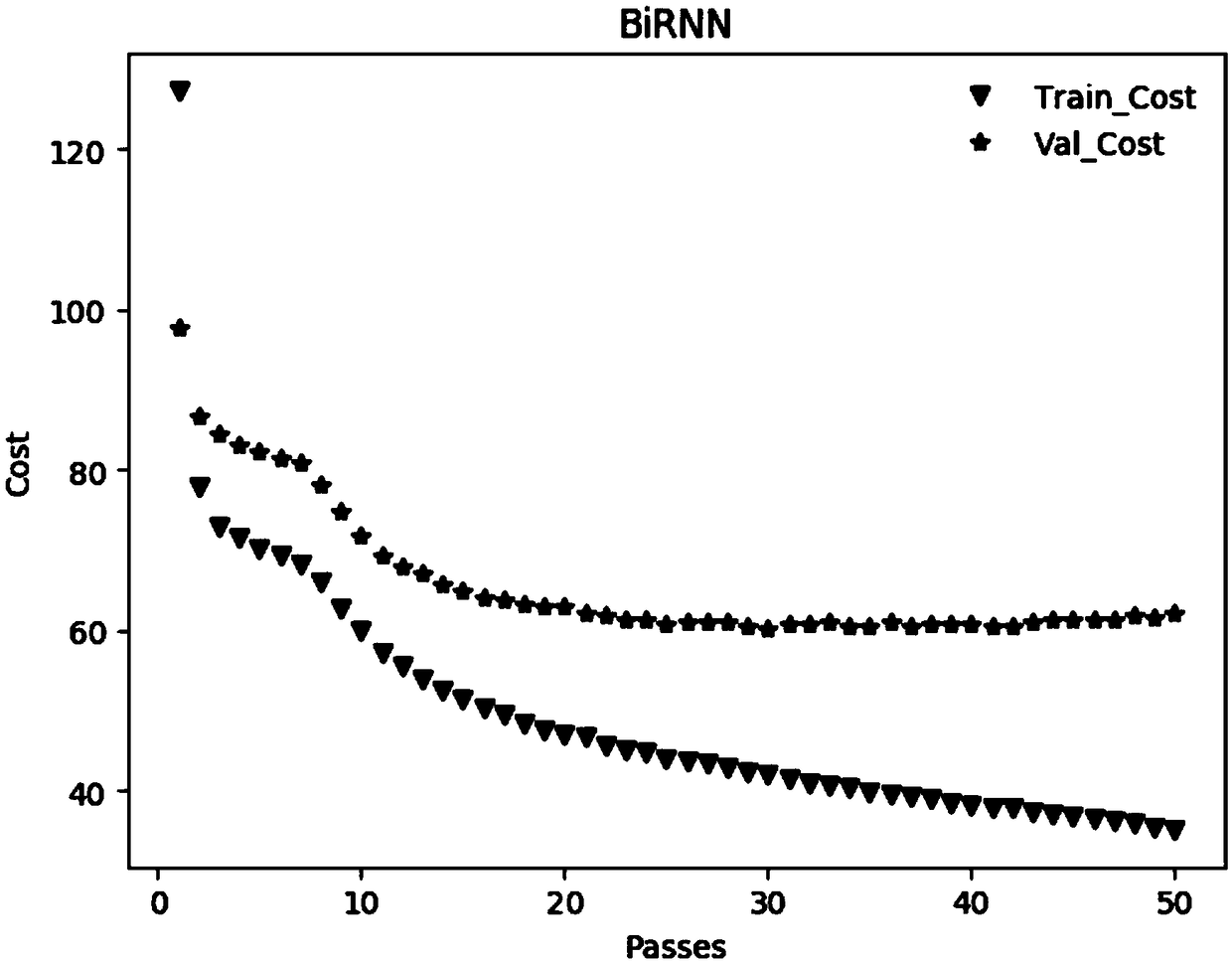

Cross-language end-to-end speech recognition method for low resource Tujia language

Inactive | CN109003601A | Improve recognition rate | Improve generalization ability | Speech recognition | Speech identification | Language speech

The invention discloses a cross-language end-to-end speech recognition method for the low resource Tujia language. The method comprises the following steps: preprocessing the Tujia language data; constructing a cross-language Tujia language corpus; establishing a unified coding dictionary of Chinese international phonetic alphabets and national international phonetic alphabets; establishing a cross-language end-to-end Tujia speech recognition model; and using a connectionist temporal classification (CTC) model and performing decoding under the action of the coding dictionary so as to obtain the recognition result. The recognition model with higher generalization is constructed by taking advantage of sufficient major language data and combining the idea of transfer learning so as to improve the accuracy of Tujia language speech recognition.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY
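The decoding step in the abstract above can be sketched as greedy decoding, assuming the temporal classification model it mentions is connectionist temporal classification (CTC). The sketch is not taken from the patent: the per-frame label ids, the blank index, and the small id-to-symbol dictionary (standing in for the unified coding dictionary) are hypothetical.

```python
def ctc_greedy_decode(frame_ids, id_to_symbol, blank=0):
    """Collapse repeated frame labels, then drop blanks (standard greedy CTC rule)."""
    decoded, previous = [], None
    for label in frame_ids:
        # Emit a symbol only when the label changes and is not the blank.
        if label != previous and label != blank:
            decoded.append(id_to_symbol[label])
        previous = label
    return decoded

# Hypothetical coding dictionary mapping label ids to phonetic symbols.
coding_dictionary = {1: "a", 2: "b"}
print(ctc_greedy_decode([0, 1, 1, 0, 1, 2, 2, 0], coding_dictionary))
```

The blank between the two runs of label 1 is what lets the decoder output the same symbol twice in a row.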

Intelligent cross-language speech recognition and conversion method

Active | CN107945805A | Solve efficiency problems | Solve balance problems | Speech recognition | Speech identification | Language speech

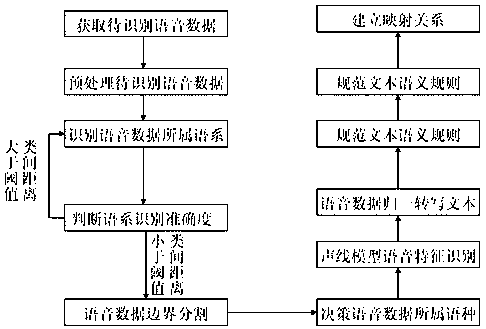

An intelligent cross-language speech recognition and conversion method claimed by the present invention classifies speech data based on the language family, establishes inter-language distances, initially determines the language family to which the to-be-recognized speech data belongs, and then performs refined language identification within that family. If the first language identification fails, neighboring language families can be found based on the established inter-language distances, and the language family is established. After the language is identified, the speech is converted into standardized text, which then undergoes word segmentation, word frequency statistics and other processing to establish a mapping relationship that facilitates subsequent voice queries. On the one hand, the method addresses the difficulty of balancing efficiency and speed in current speech recognition. On the other hand, the speech-to-text conversion is handled more reasonably, and the established mapping relationship makes recognition and conversion more accurate.

Owner:北京烽火万家科技有限公司
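One way to read the family-first search with neighbor fallback described in the abstract above is sketched below. Every detail is an assumption made for illustration: the abstract speaks of inter-language distances, while this sketch uses a hypothetical distance table between families, made-up confidence scores, and an arbitrary acceptance threshold.

```python
def identify_language(family_scores, language_scores, families, family_distance, accept=0.6):
    """Try the best-scoring family first, then its neighbors by increasing distance."""
    first = max(family_scores, key=family_scores.get)
    neighbors = sorted(
        (name for name in families if name != first),
        key=lambda name: family_distance[first][name],
    )
    for family in [first] + neighbors:
        # Refined identification inside the family: keep its best-scoring language.
        best = max(families[family], key=lambda lang: language_scores.get(lang, 0.0))
        if language_scores.get(best, 0.0) >= accept:
            return best
    return None  # no family produced a confident identification

# Hypothetical families, distances and scores.
families = {"A": ["a1", "a2"], "B": ["b1"], "C": ["c1"]}
family_distance = {"A": {"B": 2.0, "C": 1.0}}
family_scores = {"A": 0.5, "B": 0.3, "C": 0.2}
language_scores = {"a1": 0.2, "a2": 0.1, "b1": 0.9, "c1": 0.7}
print(identify_language(family_scores, language_scores, families, family_distance))
```

Here family A is tried first and rejected, so the search moves to C, the nearest neighbor, before it would consider B.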

Method of multilingual speech recognition by reduction to single-language recognizer engine components

Inactive | US7689404B2 | Natural language data processing | Speech recognition | Computation complexity | Language speech

In some speech recognition applications, not only is the language of the utterance not known in advance, but a single utterance may also contain words in more than one language. At the same time, it is impractical to build speech recognizers for all expected combinations of languages. Moreover, business needs may require a new combination of languages to be supported in a short period of time. The invention addresses this issue by a novel way of combining and controlling the components of the single-language speech recognizers to produce multilingual speech recognition functionality capable of recognizing multilingual utterances at a modest increase of computational complexity.

Owner:KHASIN ARKADY

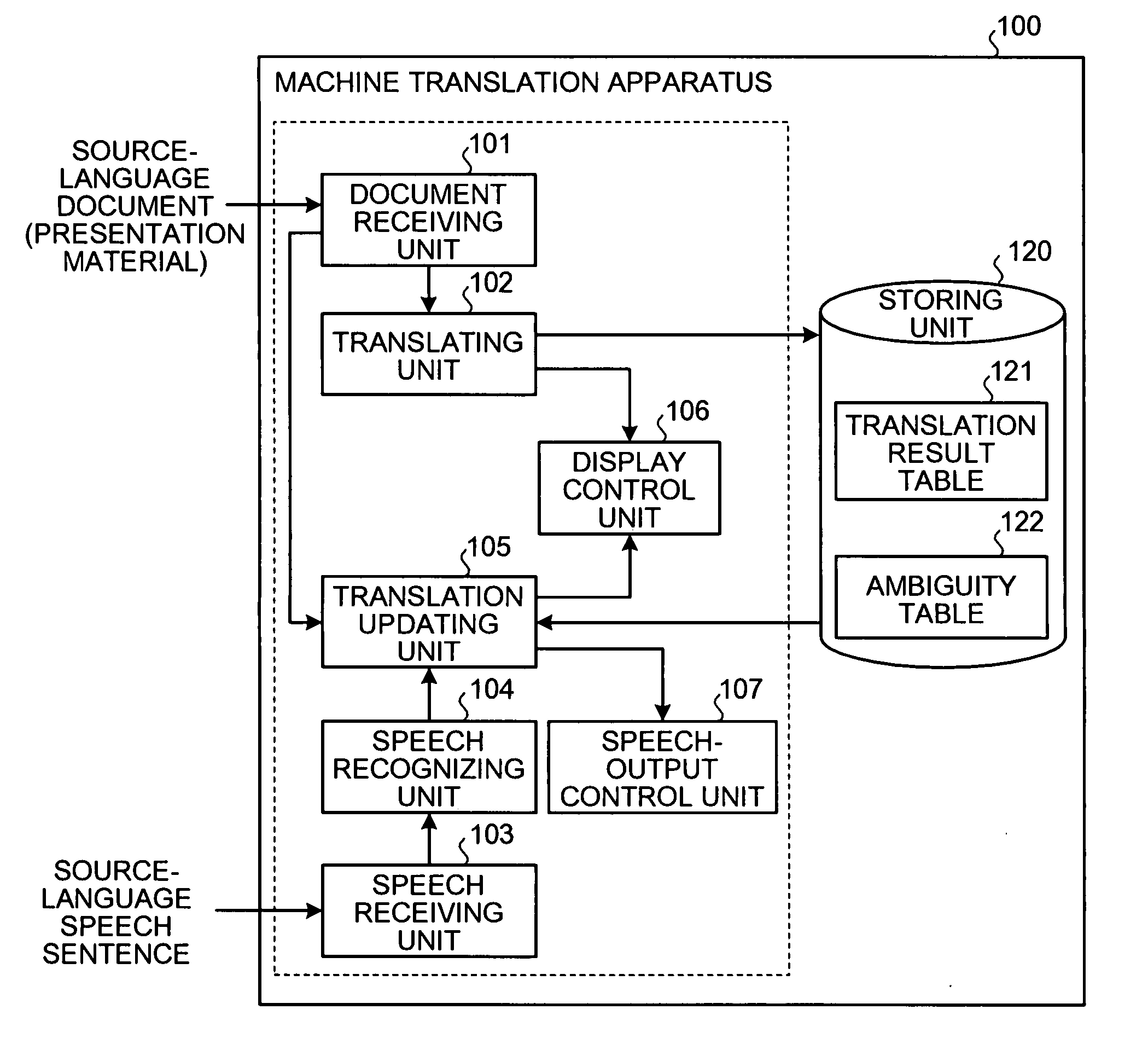

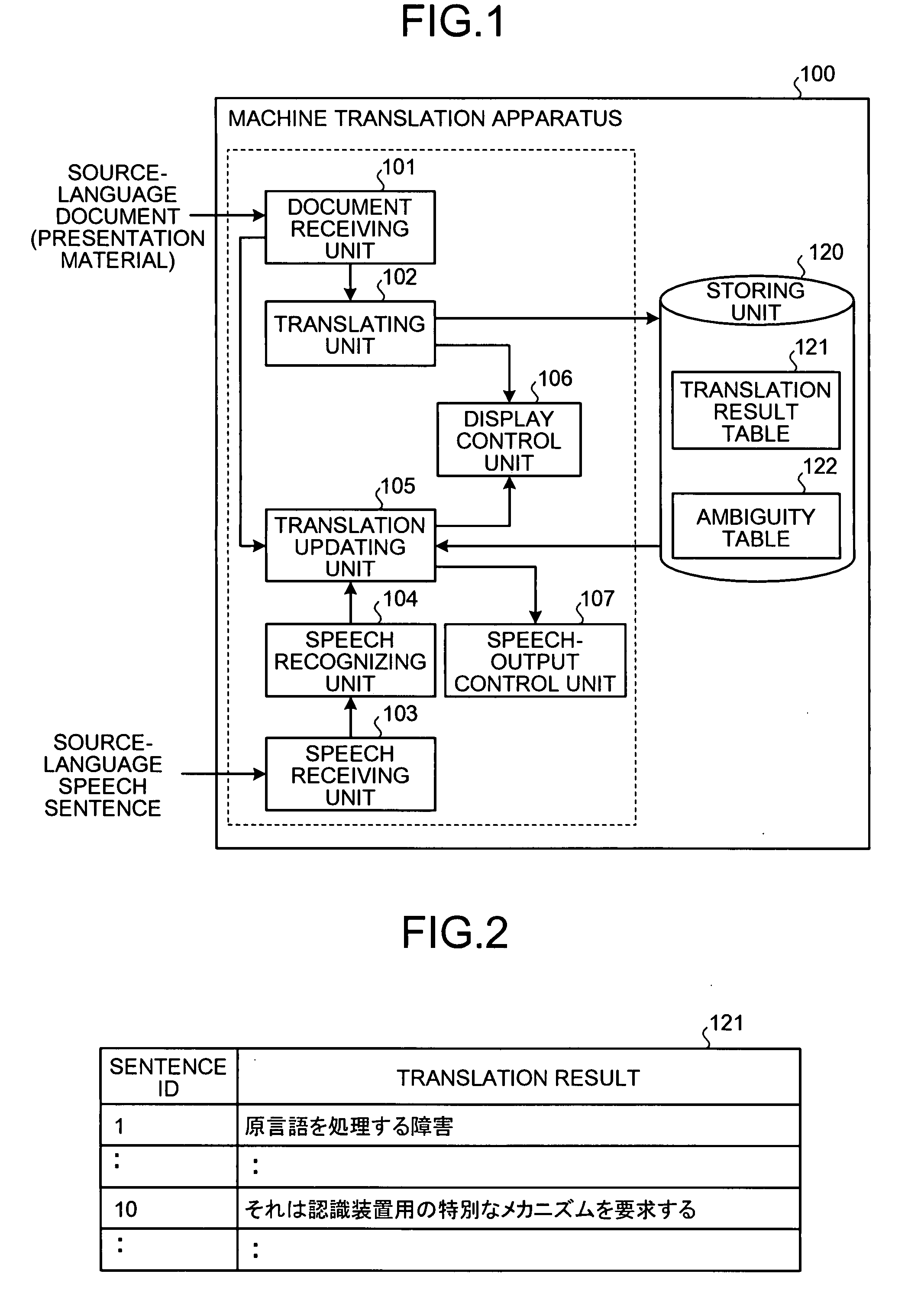

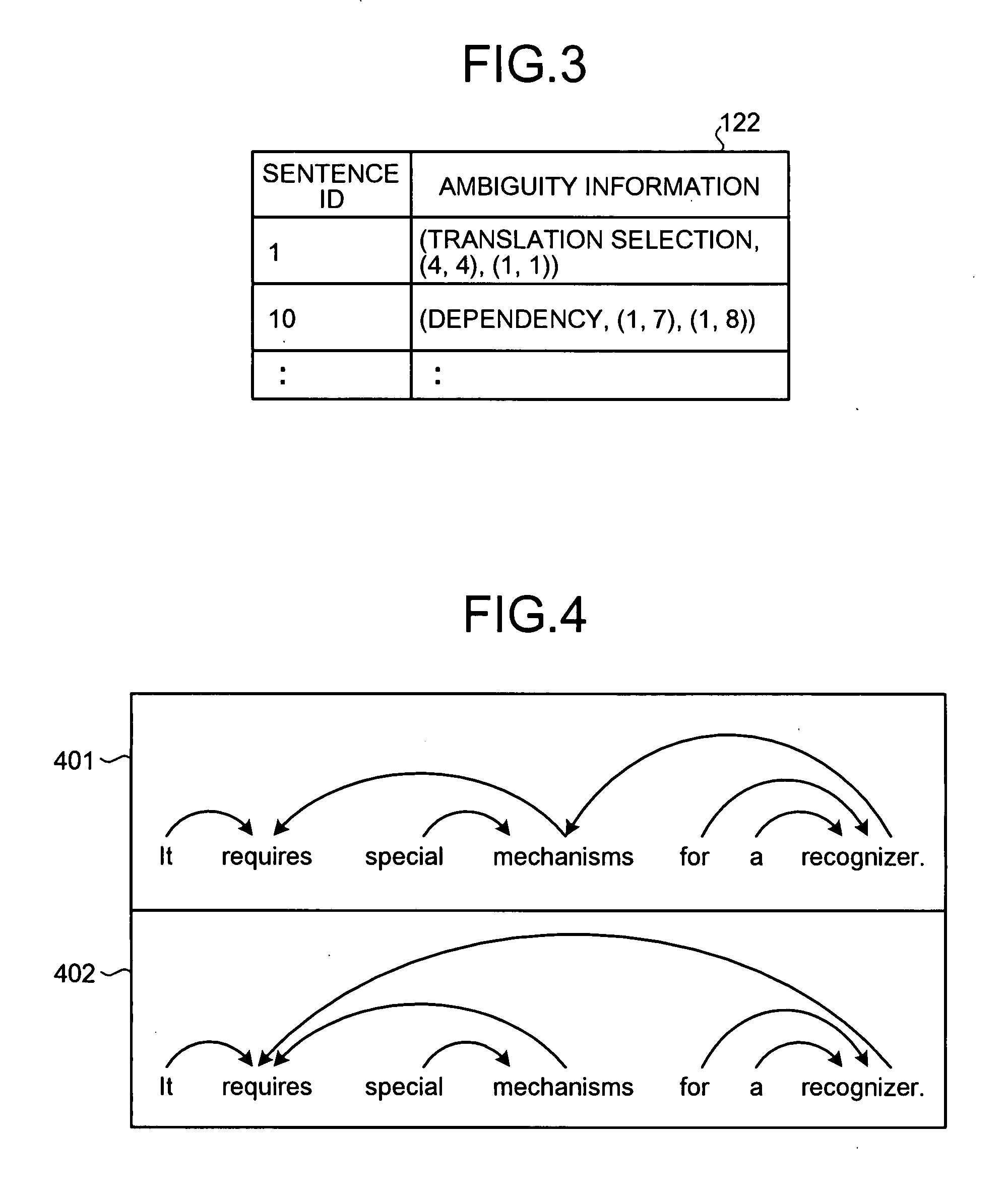

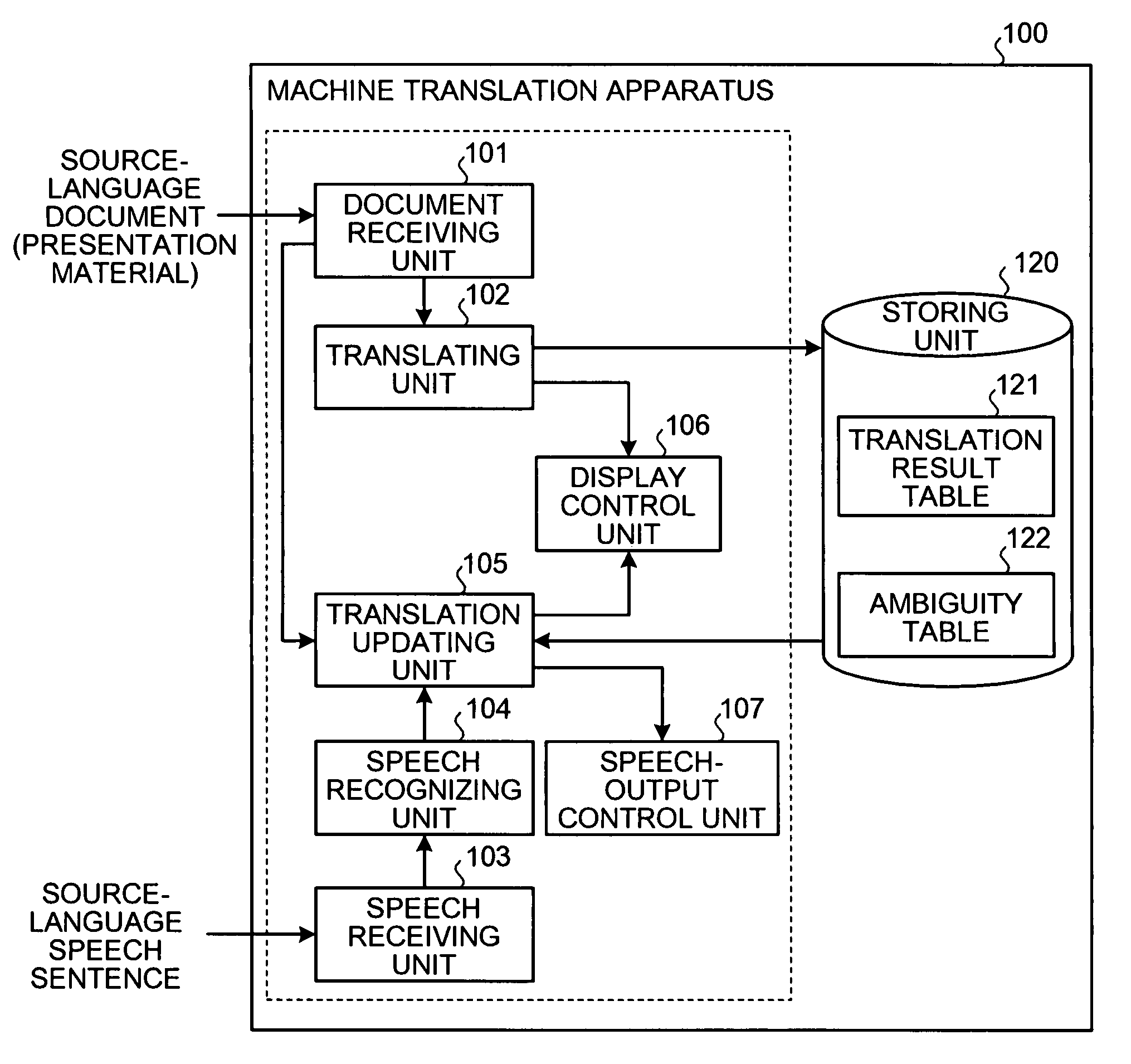

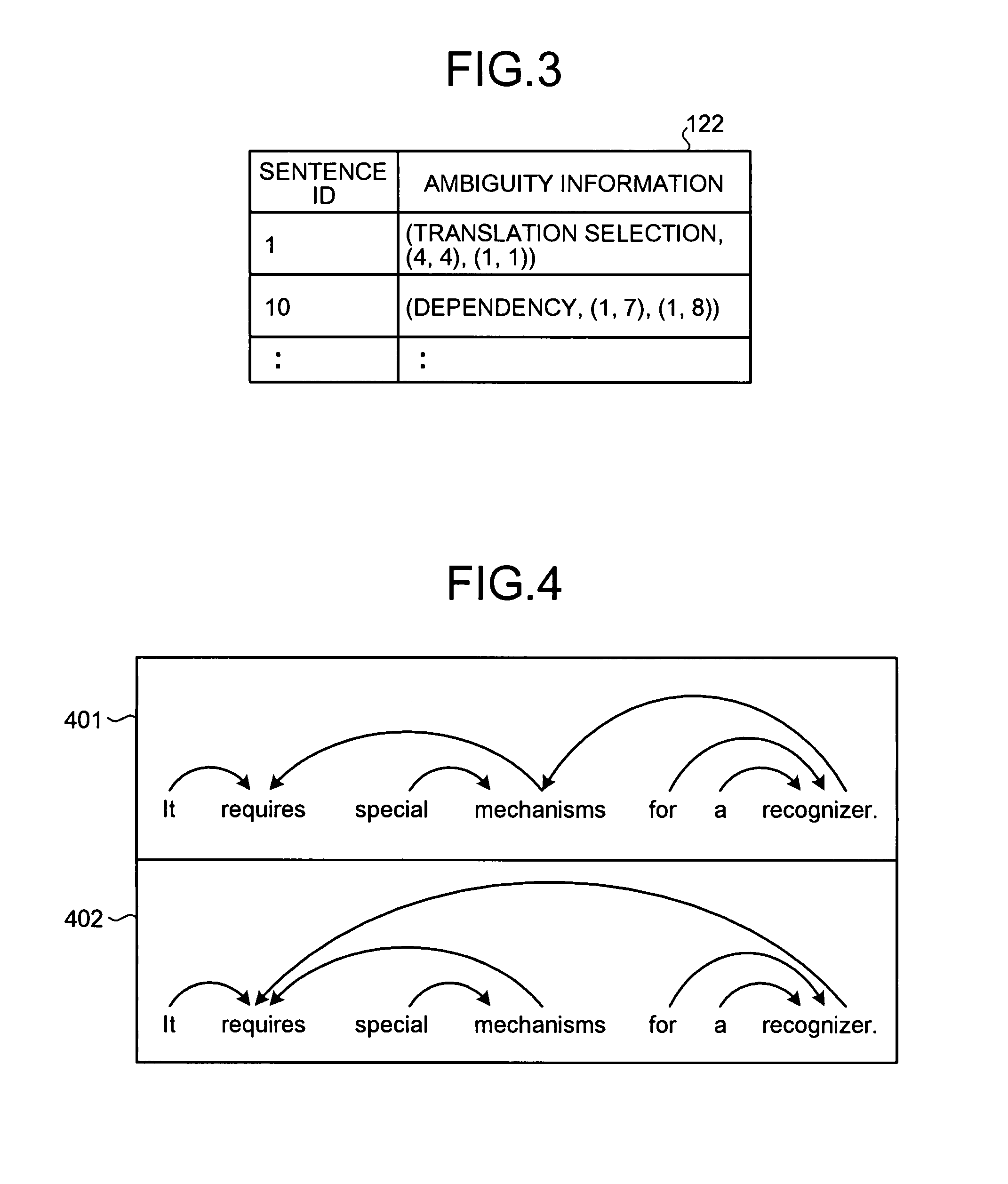

Method, apparatus, system, and computer program product for machine translation

A machine translation apparatus includes a translating unit that translates a source language document into a translated document described in a target language, and creates an ambiguous portion that is a word or a sentence having an ambiguity occurred during translation; a storing unit that stores the translated document and the ambiguous portion; a speech receiving unit that receives a speech in the source language; a recognition unit that recognizes the speech received and creates a source language speech sentence as a recognition result; a translation updating unit that updates the translated language document by retranslating a part of a speech content of the source language speech sentence to which the ambiguous portion corresponds, when the source language document includes the ambiguous portion; and a display control unit that displays the updated translated document on a display unit.

Owner:TOSHIBA DIGITAL SOLUTIONS CORP

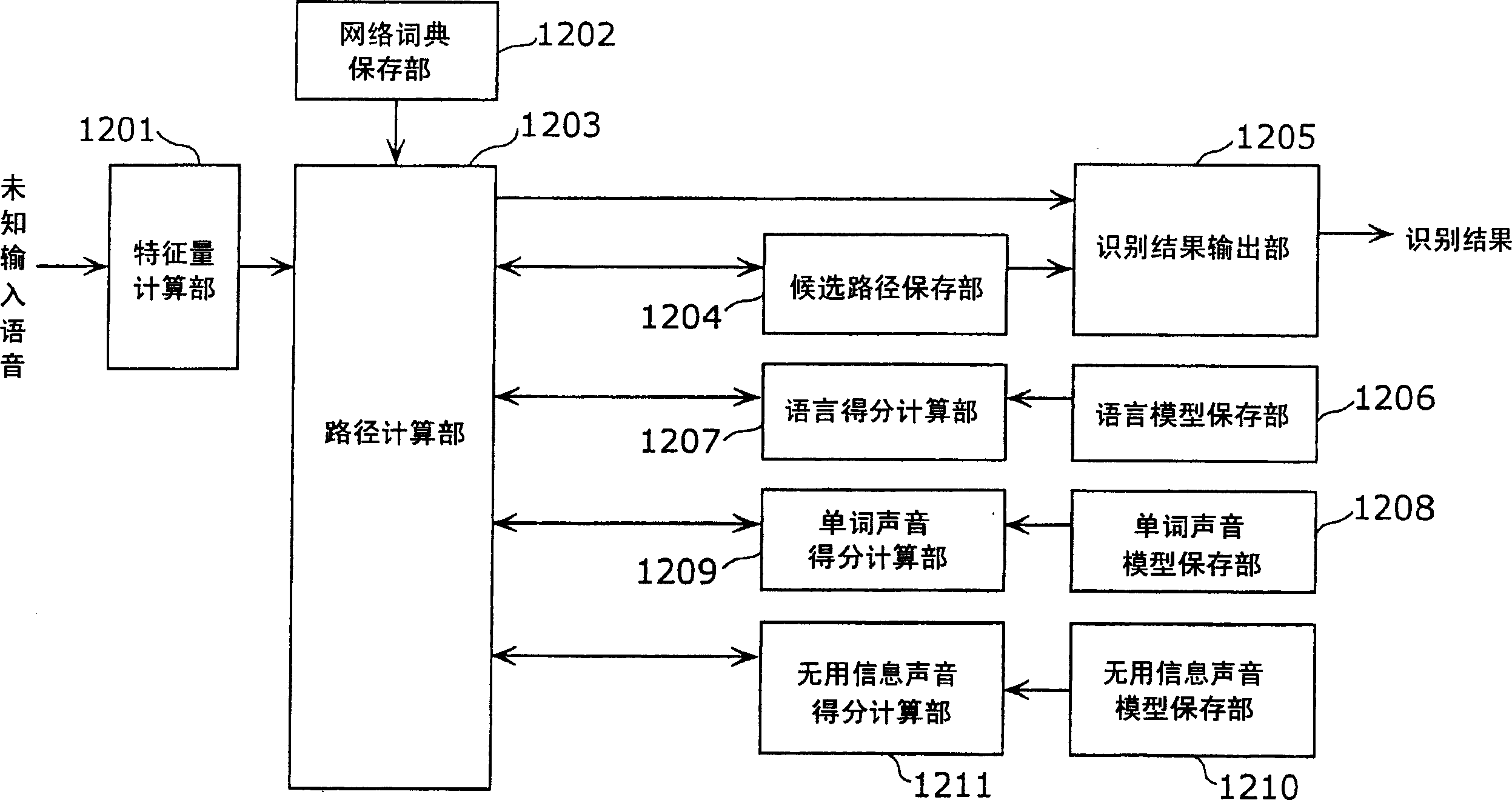

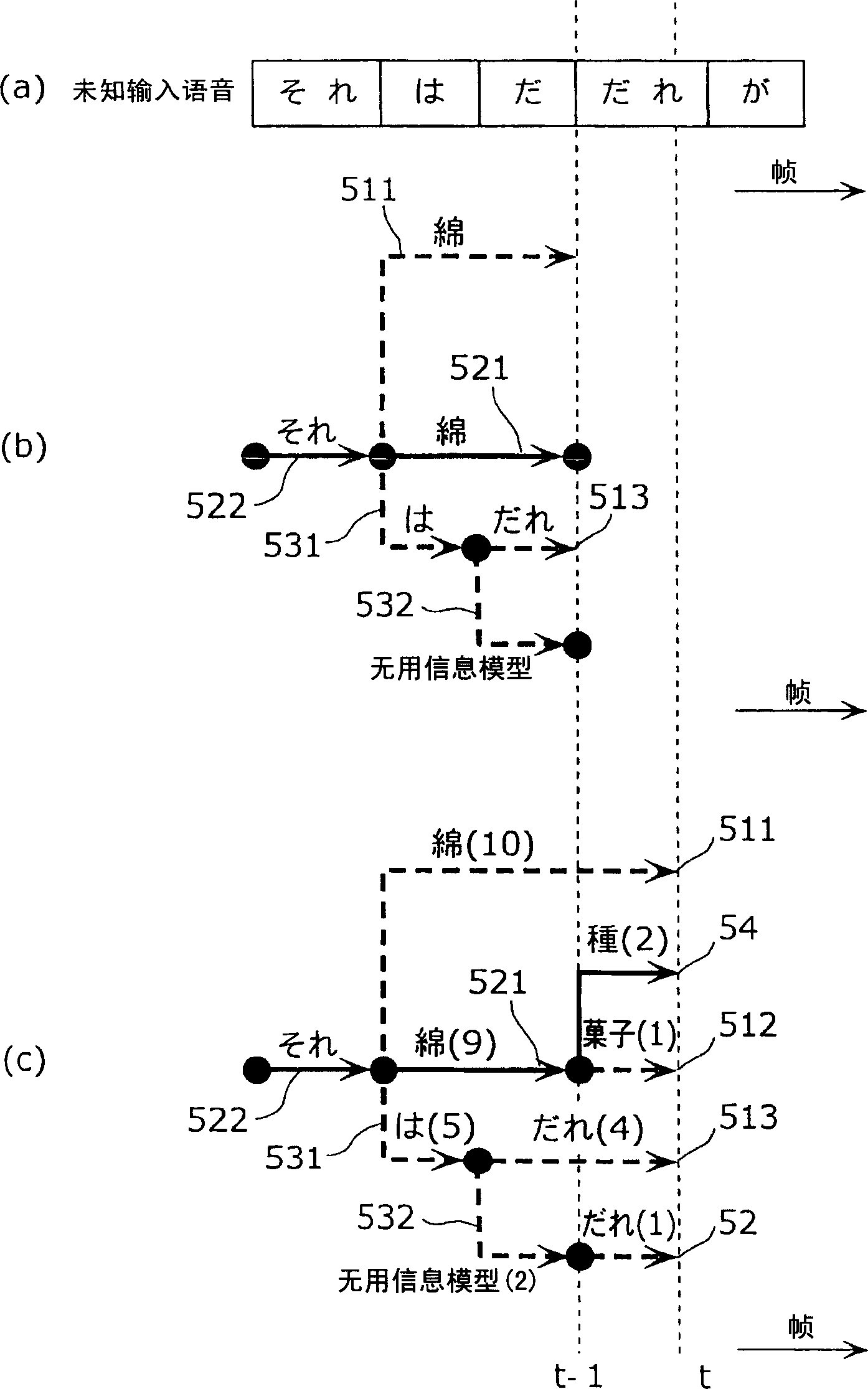

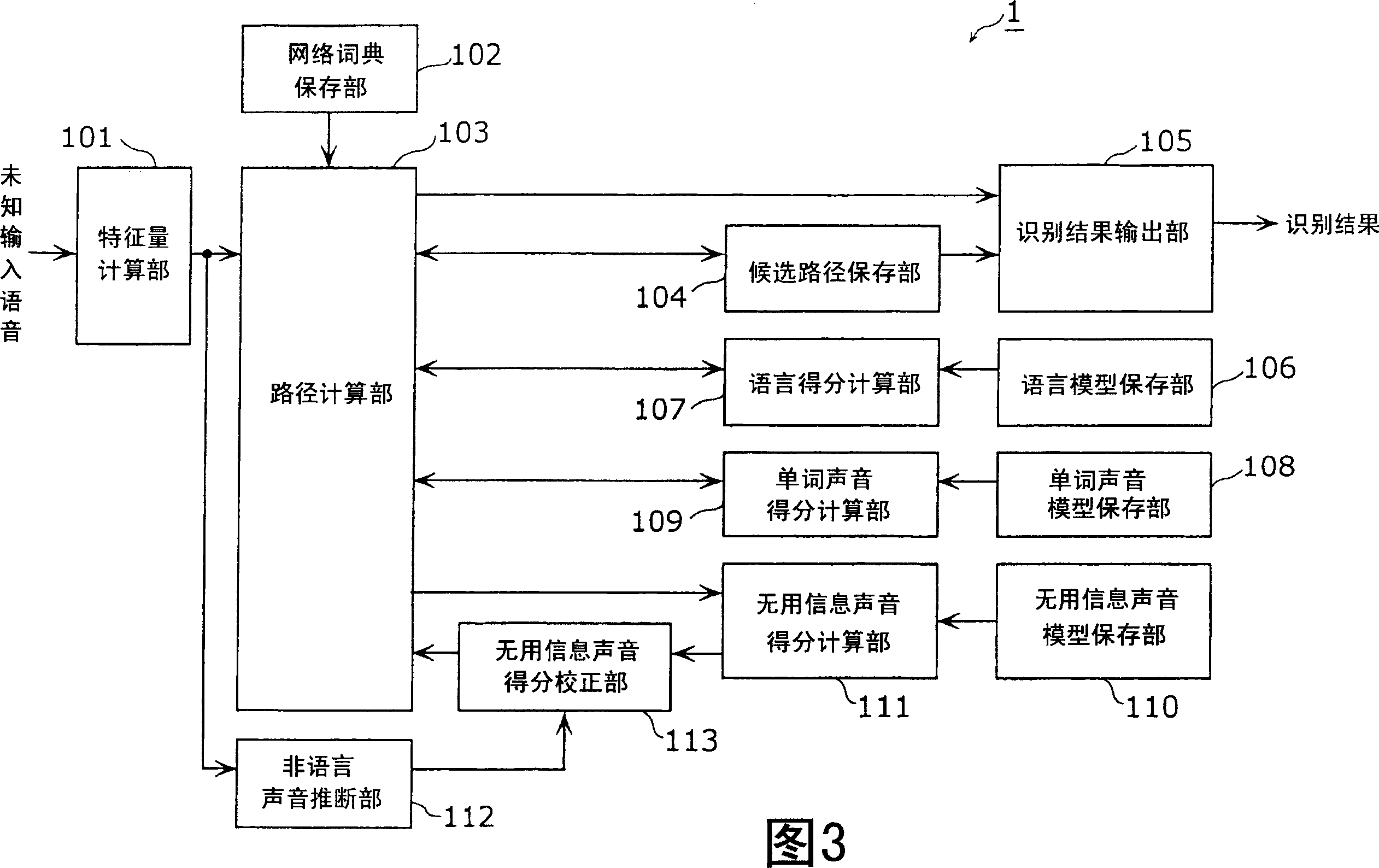

Speech recognition device and speech recognition method

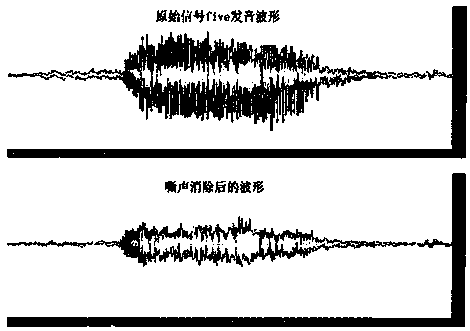

Inactive | CN1698097A | Speech recognition is accurate | High utility value | Speech recognition | Language speech | Speech identification

The speech recognition apparatus (1) is equipped with the garbage acoustic model storage unit (110) storing the garbage acoustic model which learned the collection of the unnecessary words; the feature value calculation unit (101) which calculates the feature parameter necessary for recognition by acoustically analyzing the unidentified input speech including the non-language speech per frame which is a unit for speech analysis; the garbage acoustic score calculation unit (111) which calculates the garbage acoustic score by comparing the feature parameter and the garbage acoustic model; the garbage acoustic score correction unit (113) which corrects the garbage acoustic score calculated by the garbage acoustic score calculation unit (111) so as to raise it in the frame where the non-language speech is inputted; and the recognition result output unit (105) which outputs, as the recognition result of the unidentified input speech, the word string with the highest cumulative score of the language score, the word acoustic score, and the garbage acoustic score which is corrected by the garbage acoustic score correcting means.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA
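The score correction in the abstract above can be pictured with a small sketch. It assumes log-domain per-frame scores, a per-frame flag marking non-language speech, and a fixed additive boost; none of these specifics come from the patent, and the function name is hypothetical.

```python
def correct_garbage_scores(garbage_scores, is_non_language, boost=2.0):
    """Raise the garbage acoustic score in frames flagged as non-language speech."""
    return [
        score + boost if flagged else score
        for score, flagged in zip(garbage_scores, is_non_language)
    ]

# Hypothetical per-frame garbage scores and non-language flags.
scores = [-5.0, -4.0, -6.0]
flags = [False, True, False]
print(correct_garbage_scores(scores, flags))
```

Raising the garbage score in flagged frames makes a word string that routes those frames through the garbage model more competitive in the cumulative score.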

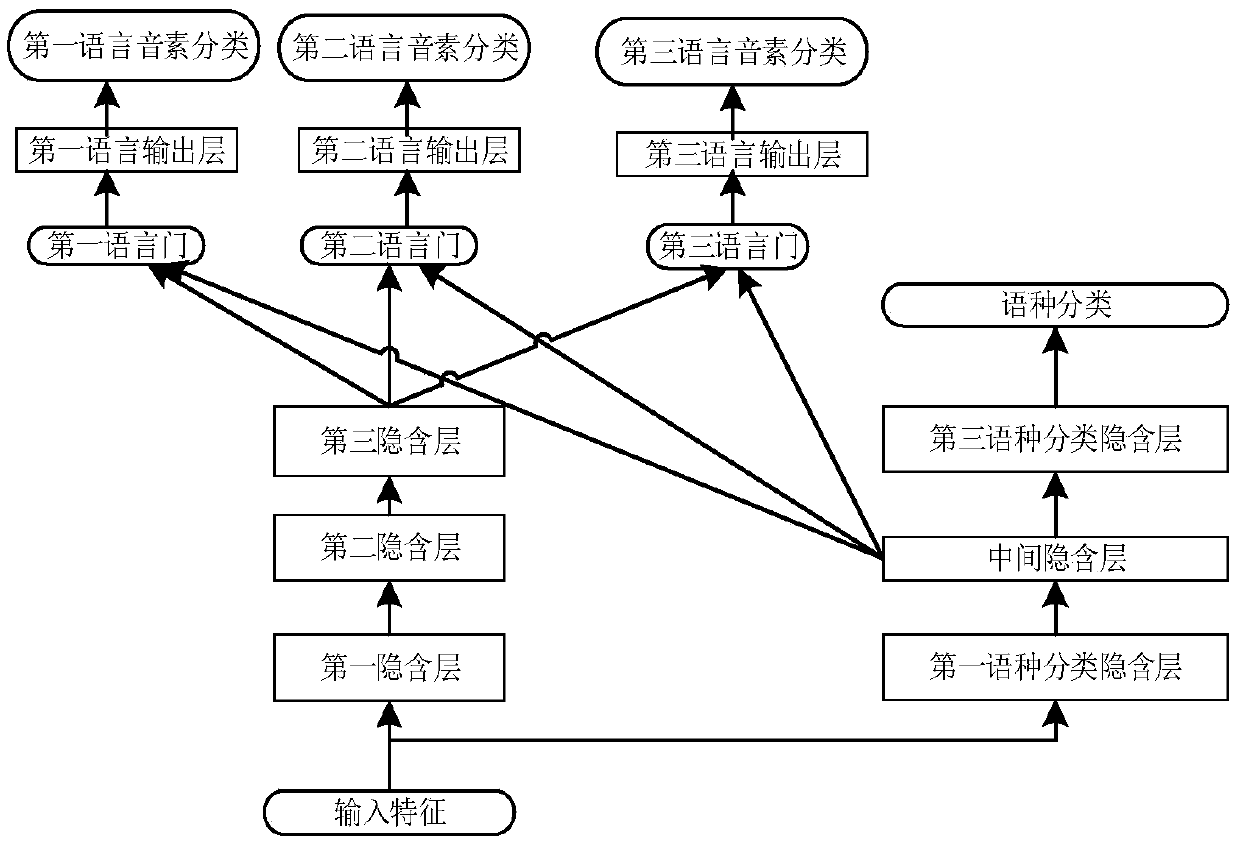

Multi-language speech recognition method based on language type and speech content collaborative classification

Active | CN110895932A | Solving Adaptive Problems | Realize collaborative identification | Speech recognition | Acoustic model | Posteriori probability

The invention discloses a multi-language speech recognition method based on language type and speech content collaborative classification. The method comprises the following steps: 1) establishing and training a language type and speech content collaborative classification acoustic model; wherein the acoustic model is fused with a language feature vector containing language related information, and model adaptive optimization can be performed on a phoneme classification layer of a specific language by utilizing the language feature vector in a multi-language recognition process; 2) inputting a speech feature sequence to be recognized into the trained language type and speech content collaborative classification acoustic model, and outputting phoneme posteriori probability distribution corresponding to the feature sequence; the decoder generating a plurality of candidate word sequences and acoustic model scores corresponding to the candidate word sequences in combination with the sequence phoneme posteriori probability distribution of the features; and 3) combining the acoustic model scores and the language model scores of the candidate word sequences to serve as an overall score, and taking the candidate word sequence with the highest overall score as a recognition result of the voice content of the specific language.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1
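Step 3 of the abstract above, combining the two scores and keeping the best candidate, can be sketched as follows. The log-domain scores, the language-model weight, and the candidate strings are assumptions; the abstract does not say how the scores are weighted.

```python
def pick_best(candidates, lm_weight=1.0):
    """candidates: (word_sequence, acoustic_score, language_model_score) tuples."""
    best = max(candidates, key=lambda c: c[1] + lm_weight * c[2])
    return best[0]

# Hypothetical candidates with log-domain scores.
candidates = [
    ("recognize speech", -12.0, -3.0),    # overall -15.0
    ("wreck a nice beach", -11.5, -6.0),  # overall -17.5
]
print(pick_best(candidates))
```

The second candidate has the better acoustic score, but the language model score tips the overall score toward the first.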

Speech recognition interface unit and speech recognition method thereof

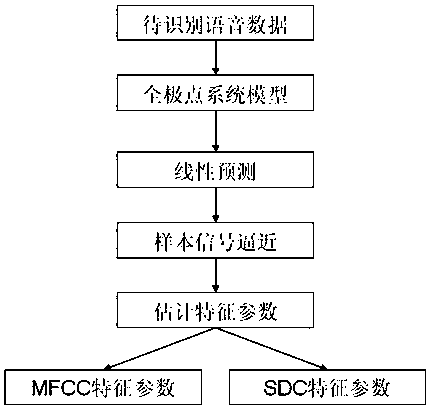

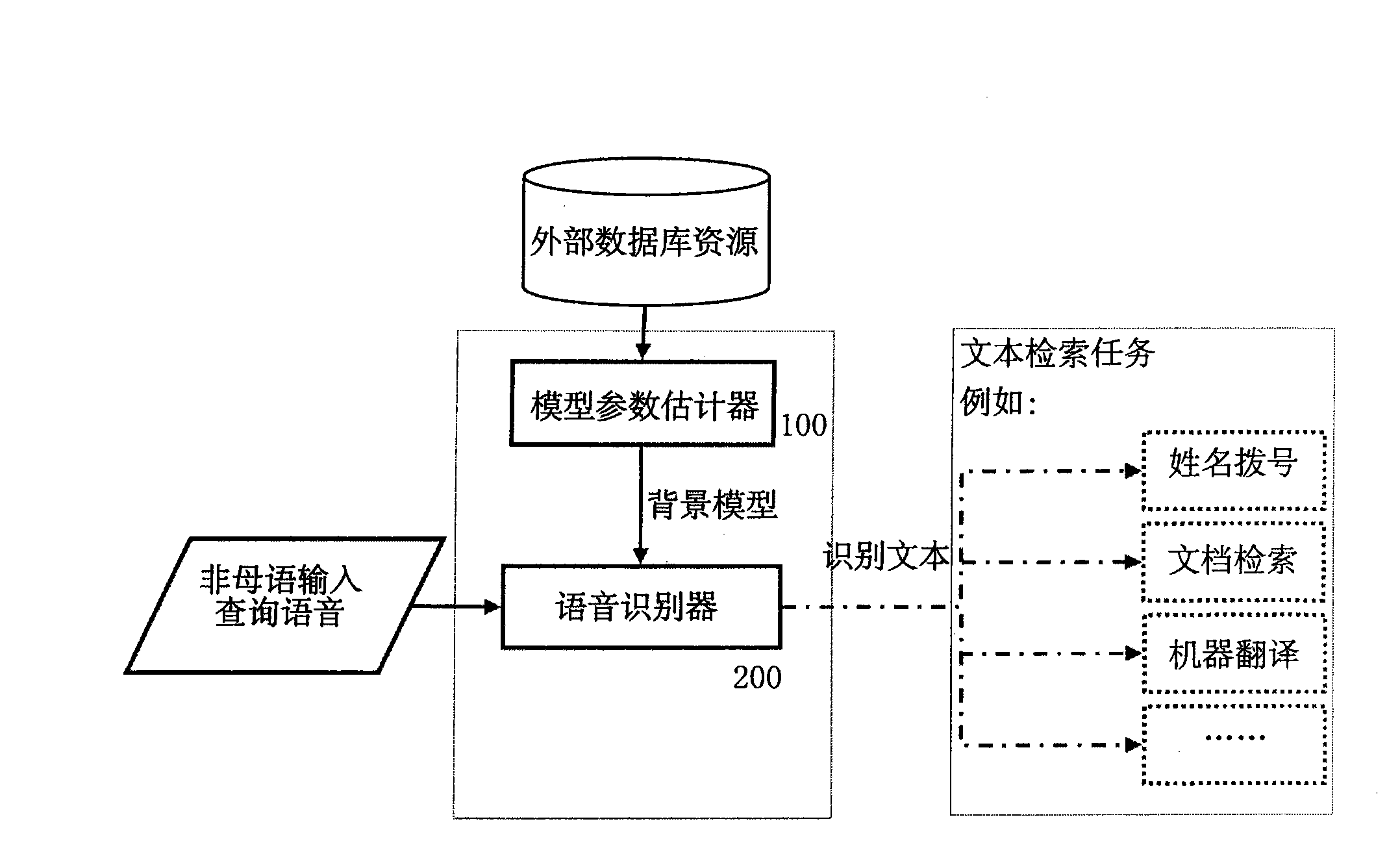

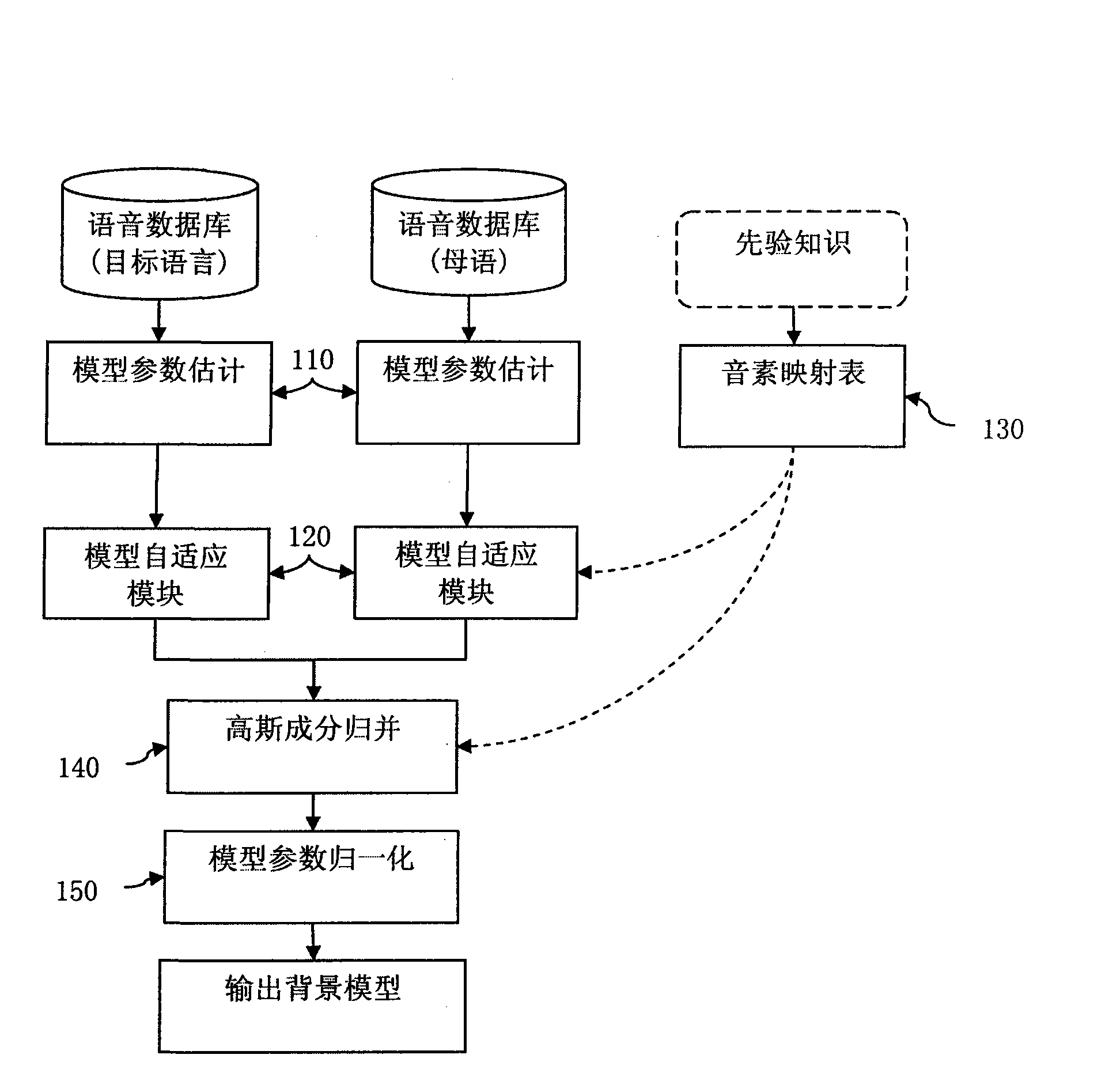

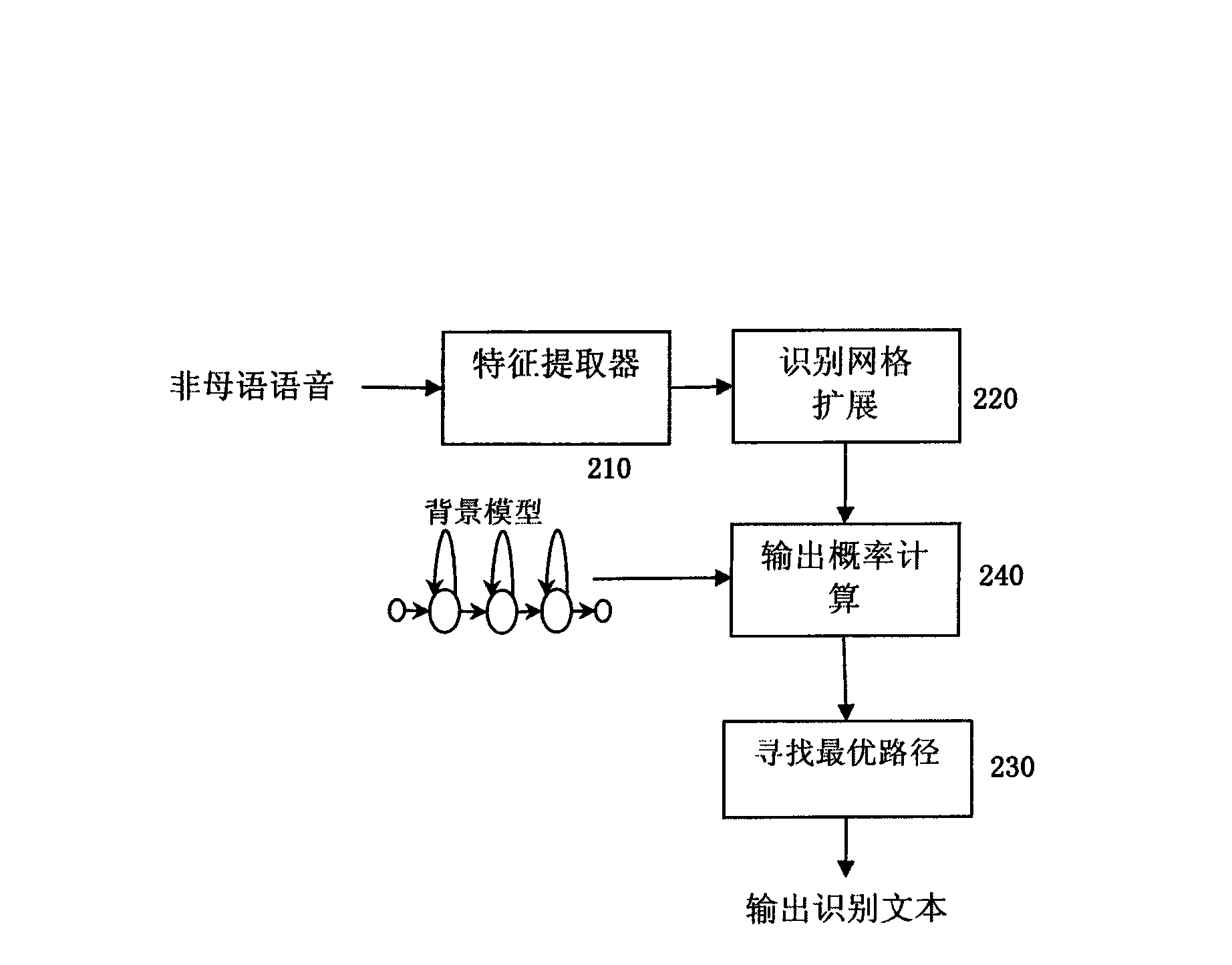

Inactive | CN101515456A | Improve the accuracy of non-native language speech recognition | Speech recognition | Speech identification | Acoustic model

The invention provides a speech recognition interface unit and a speech recognition method thereof. The speech recognition interface unit comprises a model parameter estimator and a speech recognizer. The model parameter estimator extracts the acoustic information of a target language and a native language from an external speech database and acquires an acoustic model of the target language and an acoustic model of the native language through respective training; the model parameter estimator then applies model self-adaptive technology to each of the two acoustic models and obtains a background model through the application of Gaussian component consolidation technology. The speech recognizer receives the background model from the model parameter estimator and externally inputted non-native language speech, and recognizes the inputted non-native language speech based on the background model. The speech recognition interface unit and the speech recognition method can improve the recognition accuracy of the non-native language speech.

Owner:SAMSUNG ELECTRONICS CO LTD +1

Multi-to-multi speaker conversion method based on STARGAN and x vector

Active | CN109671442A | Improve voice quality | Overcoming the smoothing problem | Speech synthesis | Speech translation | Language speech

The invention discloses a multi-to-multi speaker conversion method based on STARGAN and an x vector, which comprises a training stage and a conversion stage. A speech conversion system is achieved by combining the STARGAN and the x vector, so the personality similarity and quality of the converted speech can be greatly improved. In particular, for short-time utterances, the x vector has better characterization performance and better speech conversion quality can be achieved; meanwhile, the problem of over-smoothing in C-VAE can be overcome, and a high-quality speech conversion method is achieved. In addition, the method can achieve speech conversion under the condition of non-parallel text, and the training process does not need any alignment process, which improves the universality and practicability of the speech conversion system. The method can also integrate the conversion system for multiple source-target speaker pairs in a single conversion model, namely, multi-speaker-to-multi-speaker conversion is achieved, and the system has a better application prospect in the fields of cross-language speech conversion, film dubbing, speech translation and the like.

Owner:NANJING UNIV OF POSTS & TELECOMM

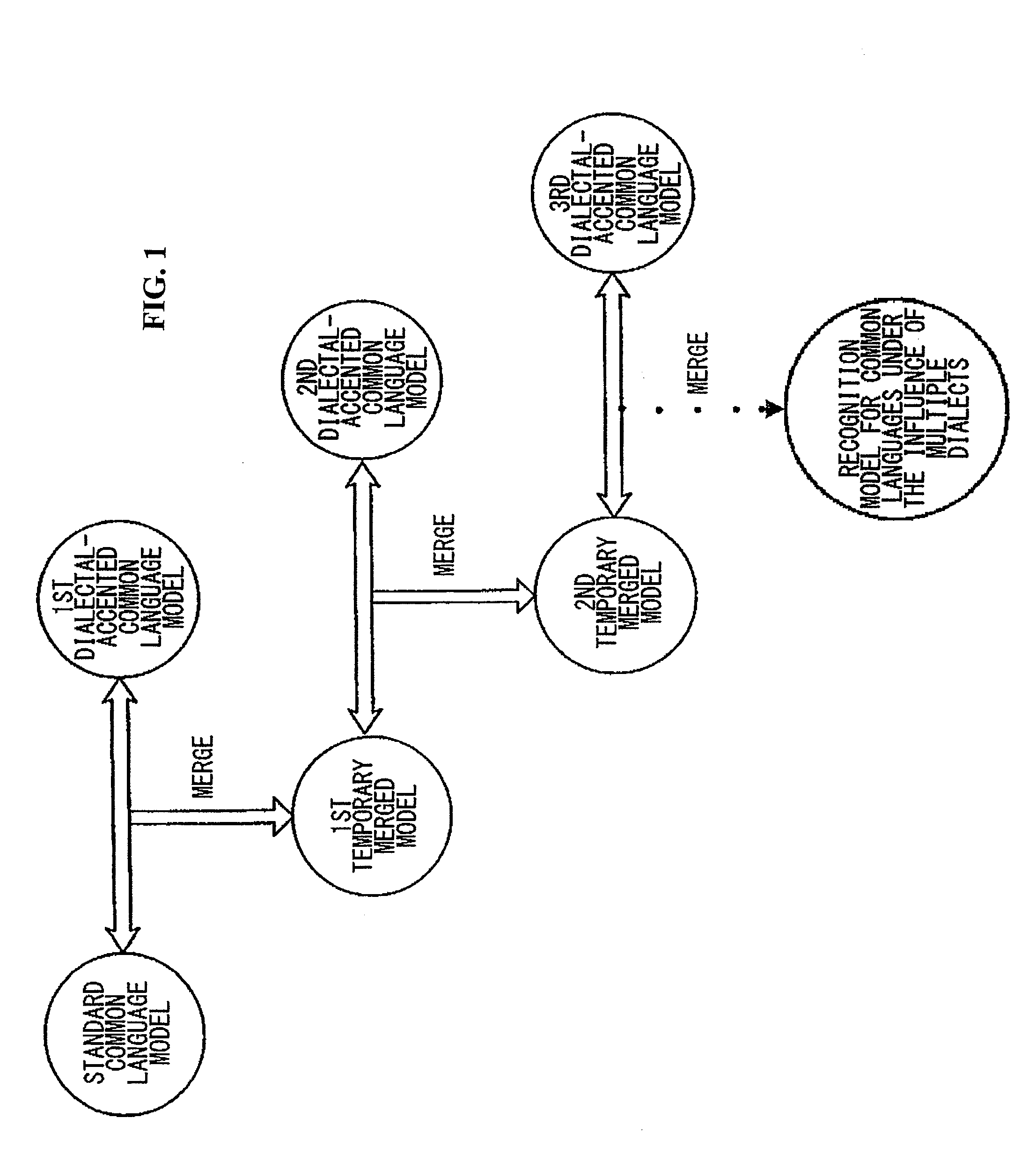

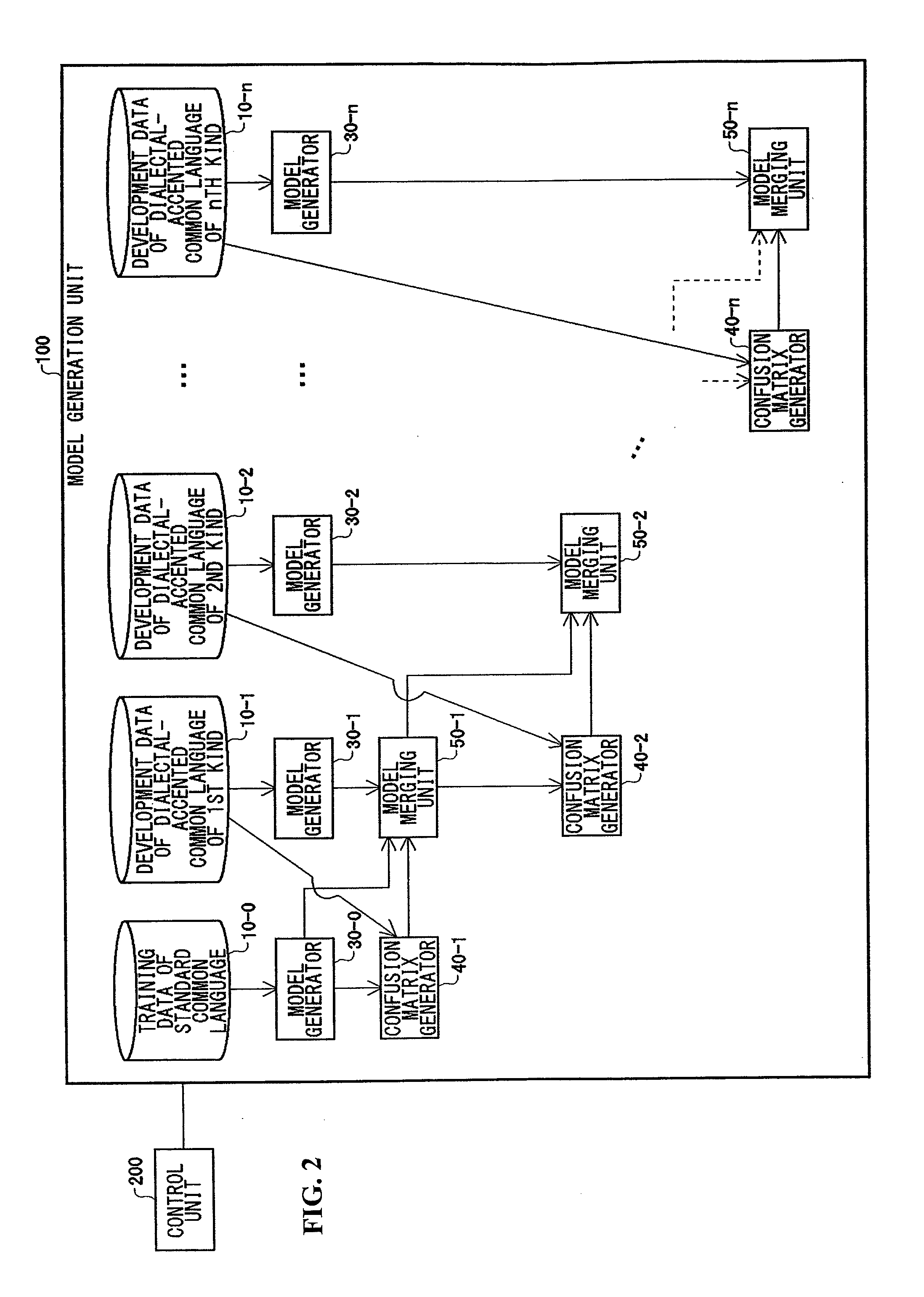

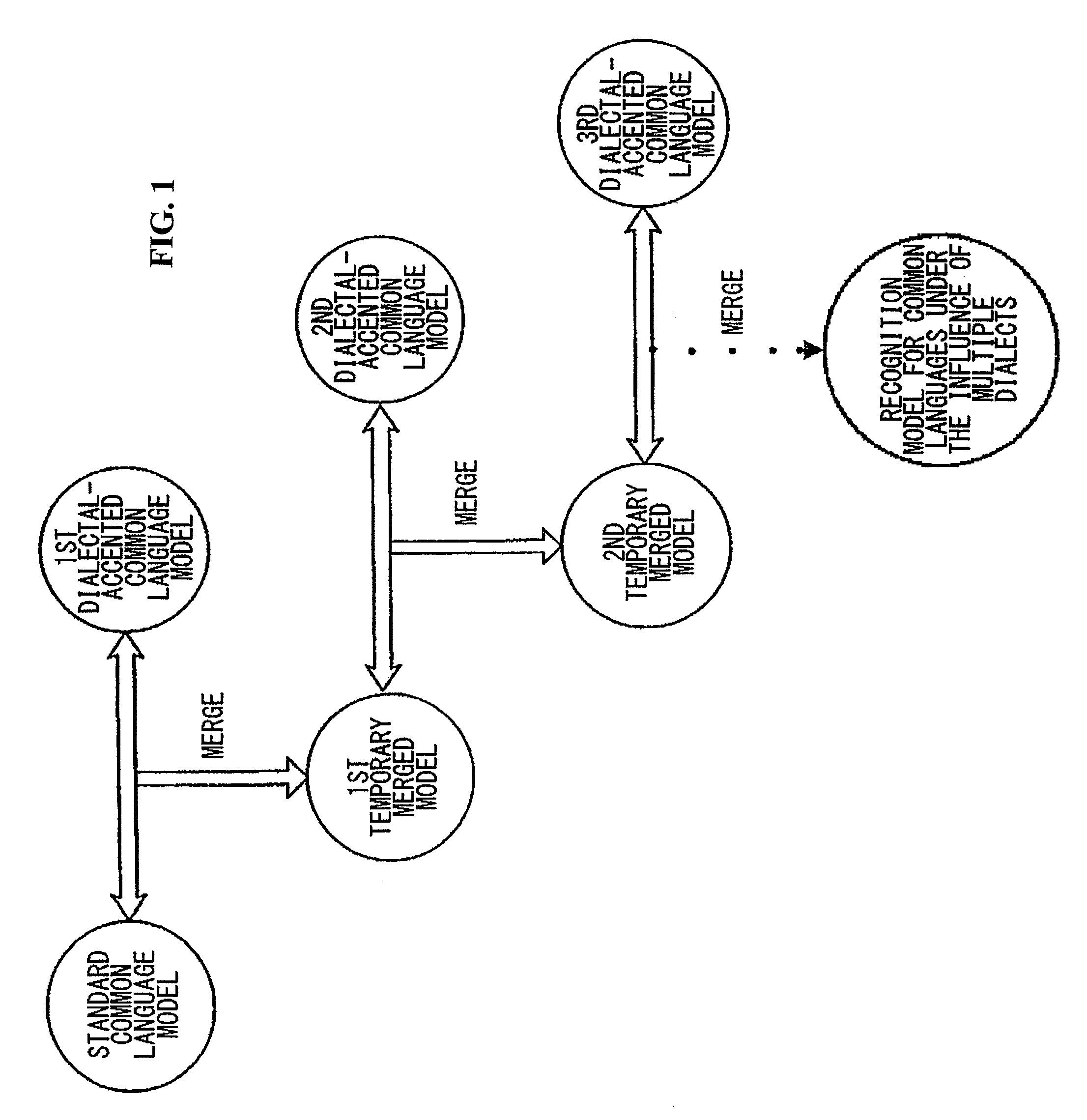

Method and system for modeling a common-language speech recognition, by a computer, under the influence of a plurality of dialects

Active | US20100121640A1 | Increase ratings | Improve work efficiency | Speech recognition | Algorithm | Speech identification

The present invention relates to a method for modeling a common-language speech recognition, by a computer, under the influence of multiple dialects and concerns a technical field of speech recognition by a computer. In this method, a triphone standard common-language model is first generated based on training data of standard common language, and first and second monophone dialectal-accented common-language models are based on development data of dialectal-accented common languages of first kind and second kind, respectively. Then a temporary merged model is obtained in a manner that the first dialectal-accented common-language model is merged into the standard common-language model according to a first confusion matrix obtained by recognizing the development data of first dialectal-accented common language using the standard common-language model. Finally, a recognition model is obtained in a manner that the second dialectal-accented common-language model is merged into the temporary merged model according to a second confusion matrix generated by recognizing the development data of second dialectal-accented common language by the temporary merged model. This method effectively enhances the operating efficiency and admittedly raises the recognition rate for the dialectal-accented common language. The recognition rate for the standard common language is also raised.

Owner:SONY COMPUTER ENTERTAINMENT INC +1

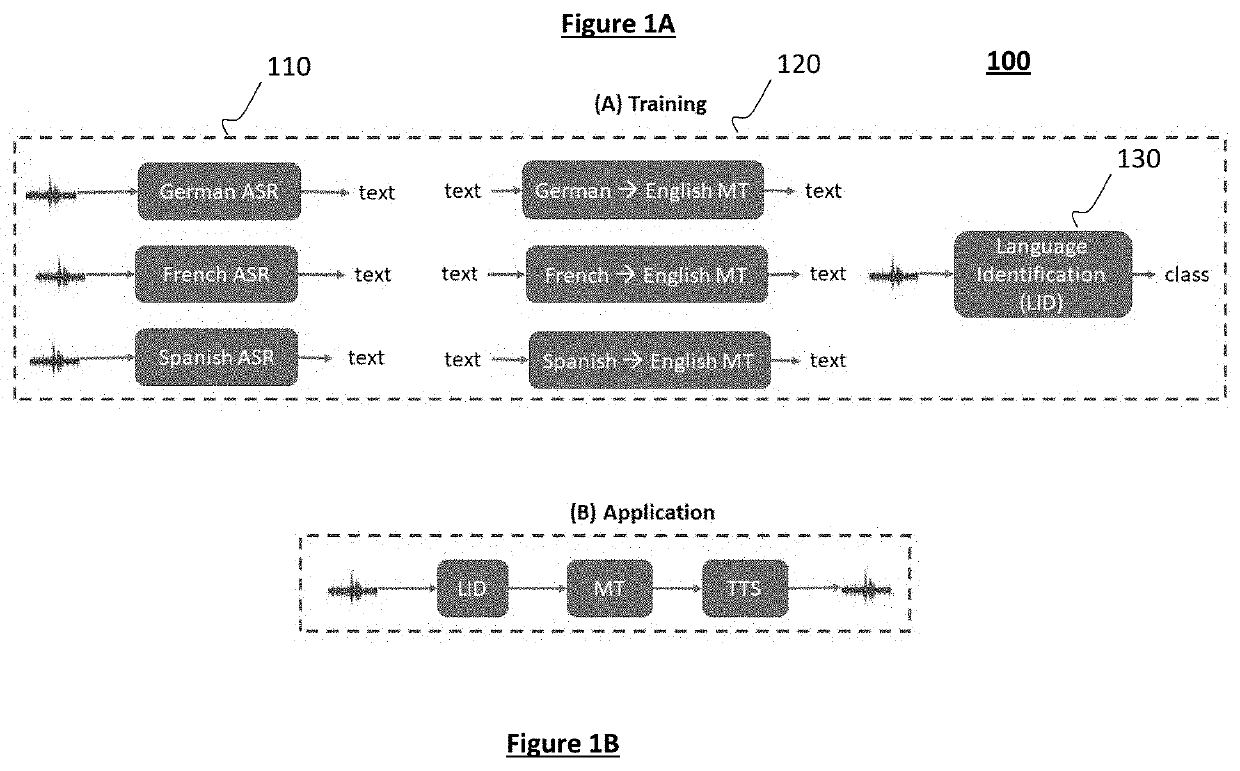

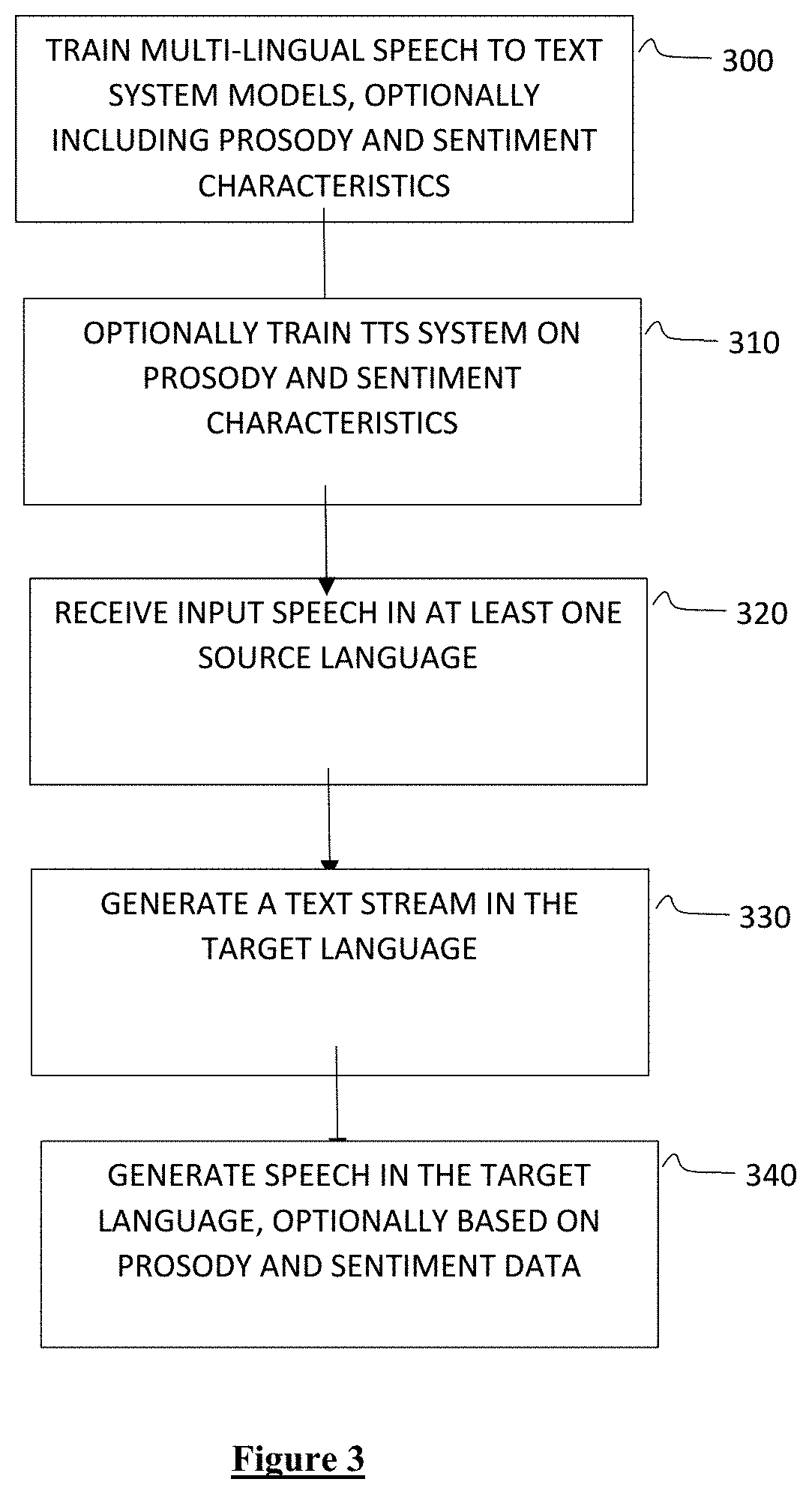

System and method for direct speech translation system

Pending | US20200226327A1 | Simplifies speech recognition | Simplifies translation | Natural language translation | Mathematical models | Encoder decoder | Speech translation

A system for translating speech from at least two source languages into another target language provides direct speech to target language translation. The target text is converted to speech in the target language through a TTS system. The system simplifies speech recognition and translation process by providing direct translation, includes mechanisms described herein that facilitate mixed language source speech translation, and punctuating output text streams in the target language. It also in some embodiments allows translation of speech into the target language to reflect the voice of the speaker of the source speech based on characteristics of the source language speech and speaker's voice and to produce subtitled data in the target language corresponding to the source speech. The system uses models having been trained using (i) encoder-decoder architectures with attention mechanisms and training data using TTS and (ii) parallel text training data in more than two different languages.

Owner:APPL TECH APPTEK

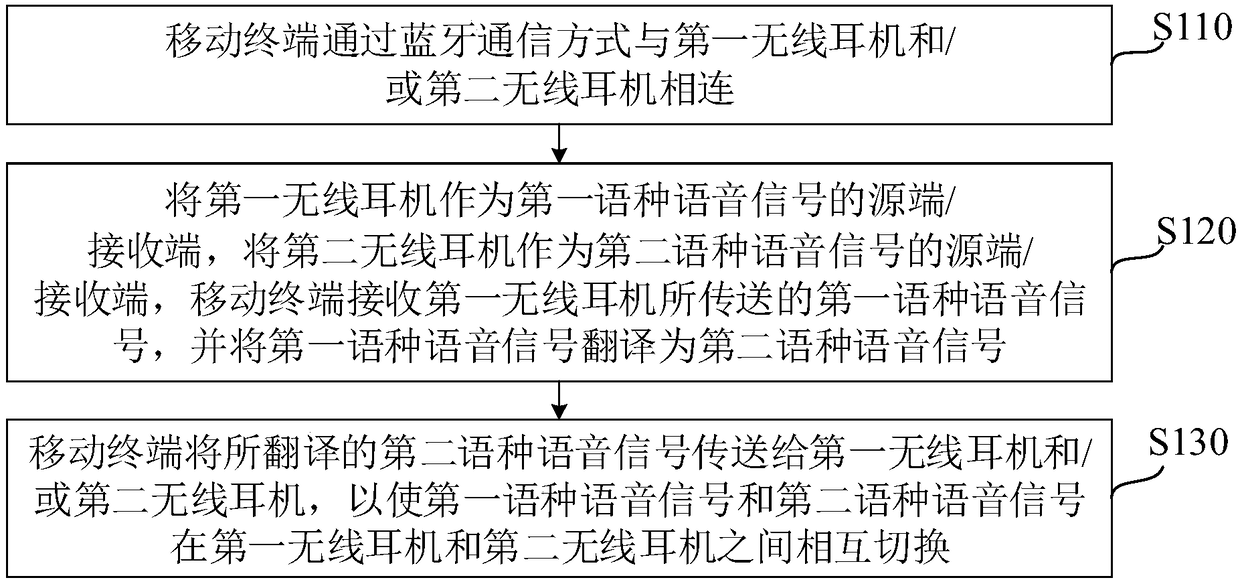

Speech real-time translation method and system based on mobile terminal and double-ear wireless headset

Inactive | CN108345591A | Various forms of application | Improve user experience | Natural language translation | Short range communication service | Computer terminal | Headphones

The invention provides a speech real-time translation method based on a mobile terminal and a double-ear wireless headset. The method comprises the steps that the mobile terminal is connected with a first wireless earphone and/or a second wireless earphone through a Bluetooth communication mode; the first wireless earphone is used as a source end/receiving end of a first language speech signal, the second wireless earphone is used as a source end/receiving end of a second language speech signal, and the mobile terminal receives the first language speech signal transmitted by the first wireless earphone and translates the first language speech signal into the second language speech signal; and the mobile terminal transmits the second language speech signal obtained through translation to the first wireless earphone and/or the second wireless earphone, so that the first language speech signal and the second language speech signal are mutually switched between the first wireless earphone and the second wireless earphone. Through the method, two types of speech signals can be mutually switched between the two earphones of the double-ear wireless headset, application forms of the double-ear wireless headset are richer, and the usage experience of a user is improved.

Owner:GOERTEK INC

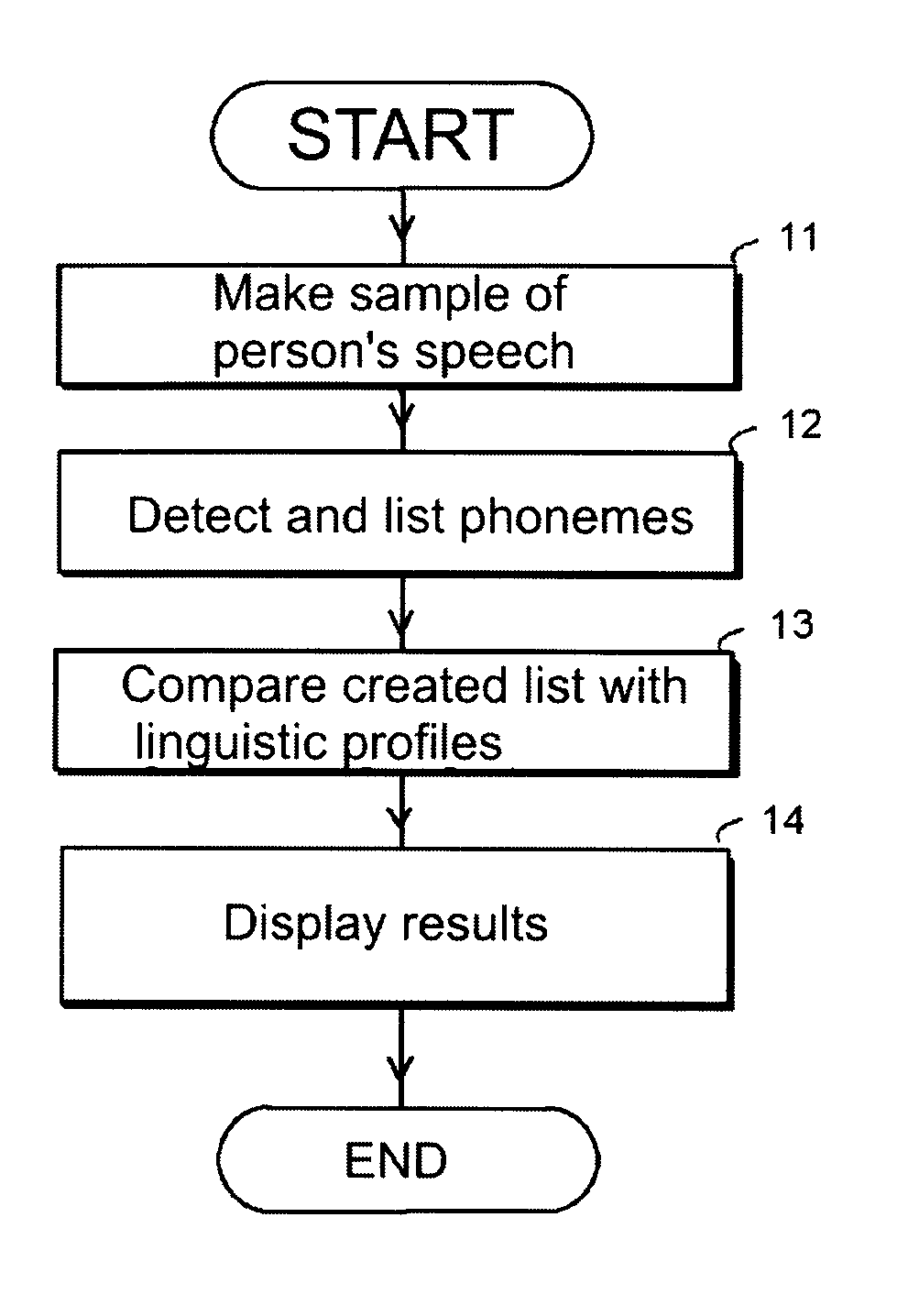

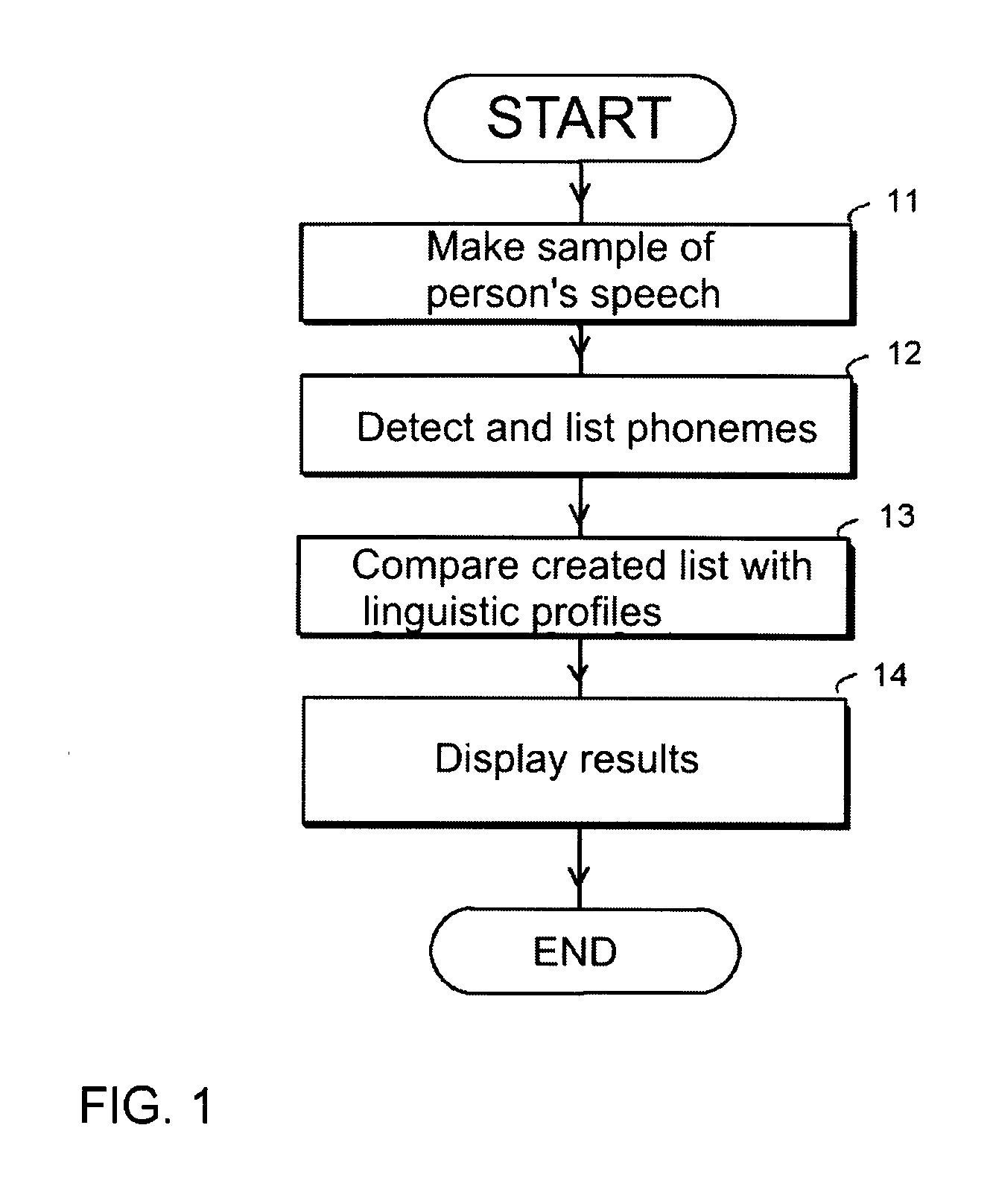

Method of linguistic profiling

Inactive | US20130189652A1 | Learning speed | Easy to learn | Speech analysis | Electrical appliances | Language speech | Speech sound

In order to define or measure the language proficiency of a person, particularly the degree of flawlessness in the pronunciation, and/or to find out the linguistic background and identity of a person, the person's speech is compared with a selected reference language. This is achieved by applying autocorrelation and/or pattern recognition and/or signal processing and/or other corresponding methods for identifying and registering such sound elements and features that are typical of the reference language and occur repeatedly in the reference language speech sample. On the basis of the obtained linguistic profile of the reference language, corresponding sound elements and features are searched for in the speech of the person, the method calculates how many of the sound elements and features of the reference language linguistic profile the person substitutes with sound elements or features that deviate from the reference language, and the substitute sound elements and features are defined.

Owner:PRONOUNCER EURO
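The counting step at the end of the abstract above can be sketched under a strong simplifying assumption: the sound elements of the reference profile and of the person's speech are already available as aligned label sequences of equal length. The signal processing that would produce those labels is not shown, and the labels and function names are hypothetical.

```python
def substitution_profile(reference, spoken):
    """Count each (reference element, substitute element) pair that deviates."""
    substitutions = {}
    for expected, produced in zip(reference, spoken):
        if expected != produced:
            pair = (expected, produced)
            substitutions[pair] = substitutions.get(pair, 0) + 1
    return substitutions

def deviation_rate(reference, spoken):
    """Share of reference sound elements that the speaker substitutes."""
    return sum(substitution_profile(reference, spoken).values()) / len(reference)

# Hypothetical aligned sound-element labels.
reference = ["th", "i", "s", "th", "a", "t"]
spoken = ["z", "i", "s", "z", "a", "t"]
print(substitution_profile(reference, spoken), deviation_rate(reference, spoken))
```

The profile records which substitute elements occur, and the rate gives one possible proficiency measure.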

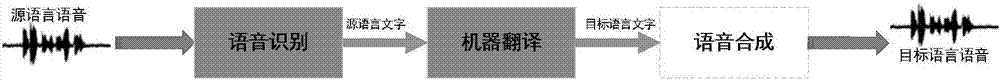

Anthropomorphic oral translation method and system with man-machine communication function

Inactive | CN107315742A | With man-machine dialogue function | Improve accuracy | Natural language translation | Speech recognition | User needs | Spoken language

The invention provides an anthropomorphic oral translation method with a man-machine communication function. The method comprises the following steps: conducting intelligent speech recognition of source language speech to obtain source language text; processing the source language text and a communication scene, and conducting anthropomorphic man-machine communication; and conducting machine translation to obtain a translation result. The invention further provides an anthropomorphic oral translation system with the man-machine communication function. With the system, man-machine communication with the user is conducted when the translation task requires it, information that clearly improves the user's translation experience in a complex application scene is obtained accurately, and the accuracy of translation semantics is improved.

Owner:BEIJING ZIDONG COGNITIVE TECH CO LTD

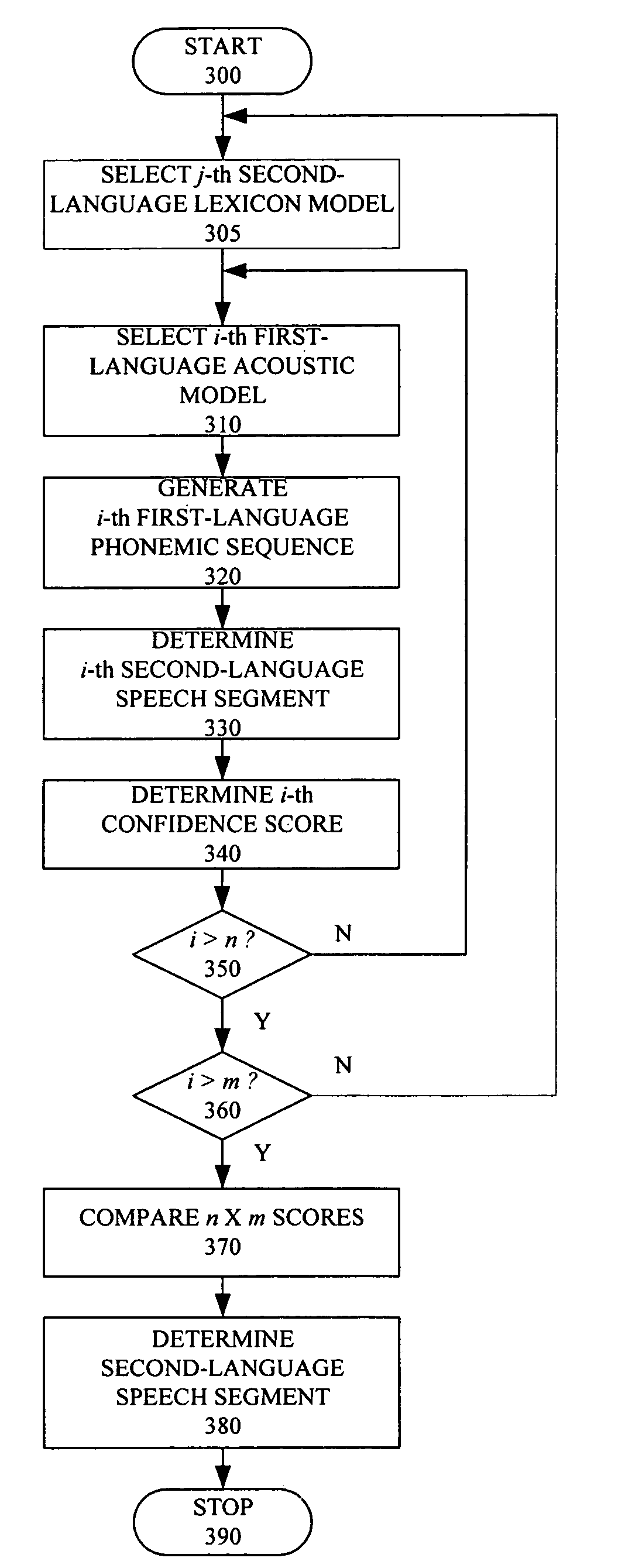

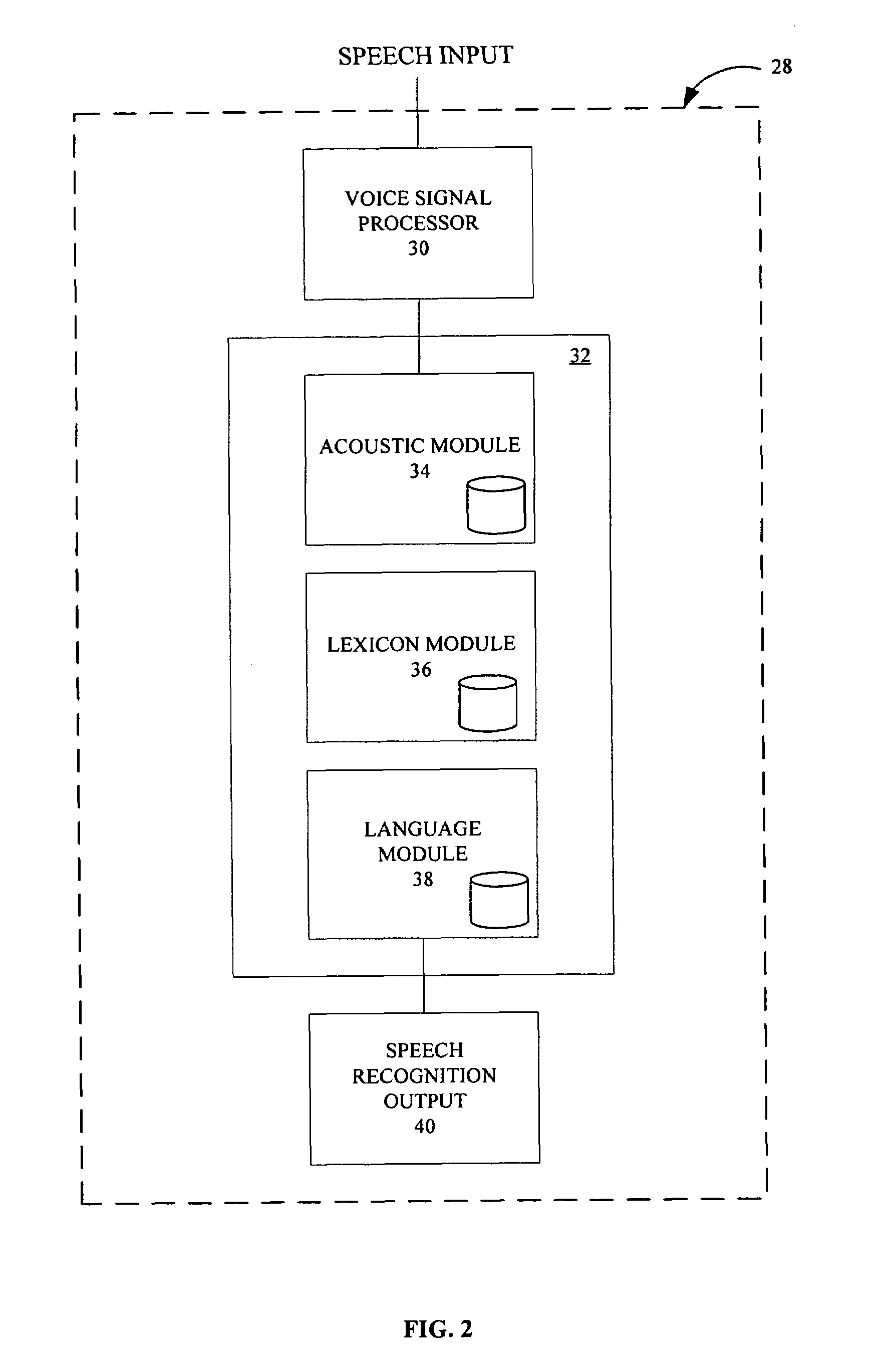

System and method of speech recognition for non-native speakers of a language

Active | US7640159B2 | Reduces and eliminates adverse effect | Reduce eliminate | Automatic call-answering/message-recording/conversation-recording | Natural language data processing | Feature vector | Speech identification

An accent compensative speech recognition system and related methods for use with a signal processor generating one or more feature vectors based upon a voice-induced electrical signal are provided. The system includes a first-language acoustic module that determines a first-language phoneme sequence based upon one or more feature vectors, and a second-language lexicon module that determines a second-language speech segment based upon the first-language phoneme sequence. A method aspect includes the steps of generating a first-language phoneme sequence from at least one feature vector based upon a first-language phoneme model, and determining a second-language speech segment from the first-language phoneme sequence based upon a second-language lexicon model.

Owner:MICROSOFT TECH LICENSING LLC
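The second stage in the abstract above, mapping a first-language phoneme sequence to second-language speech segments through a lexicon, can be pictured with a toy lookup. The lexicon entries, the phoneme labels, and the greedy longest-match strategy are assumptions made for illustration and are not drawn from the patent.

```python
# Hypothetical second-language lexicon keyed by first-language phoneme sequences.
LEXICON = {
    ("z", "i", "s"): "this",
    ("s", "i", "n", "k"): "think",
}

def decode_segments(phonemes, lexicon=LEXICON):
    """Greedy longest-match lookup of first-language phonemes in the lexicon."""
    words, i = [], 0
    longest = max(len(key) for key in lexicon)
    while i < len(phonemes):
        for n in range(min(longest, len(phonemes) - i), 0, -1):
            key = tuple(phonemes[i:i + n])
            if key in lexicon:
                words.append(lexicon[key])
                i += n
                break
        else:
            i += 1  # no entry starts here: skip this phoneme
    return words

print(decode_segments(["z", "i", "s", "s", "i", "n", "k"]))
```

Keying the lexicon by the phonemes a non-native speaker is likely to produce is what lets accented input still resolve to the intended second-language words.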

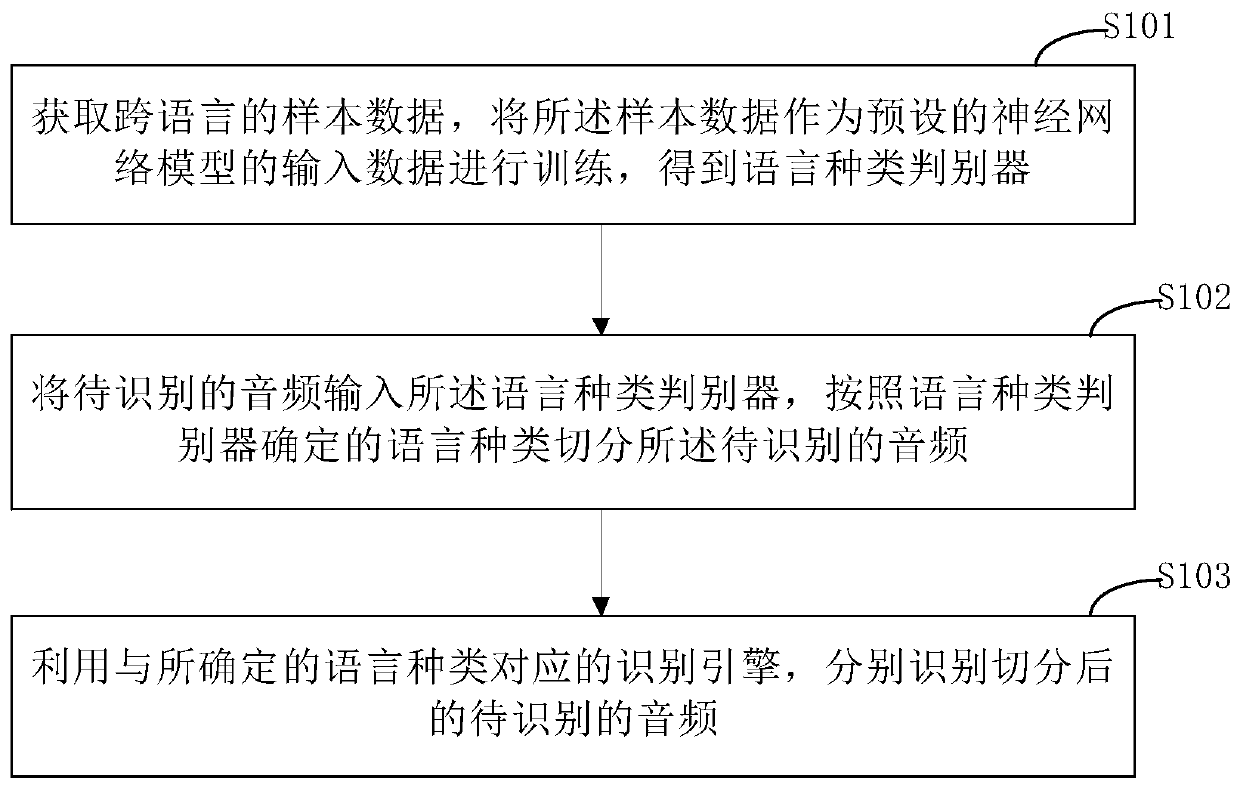

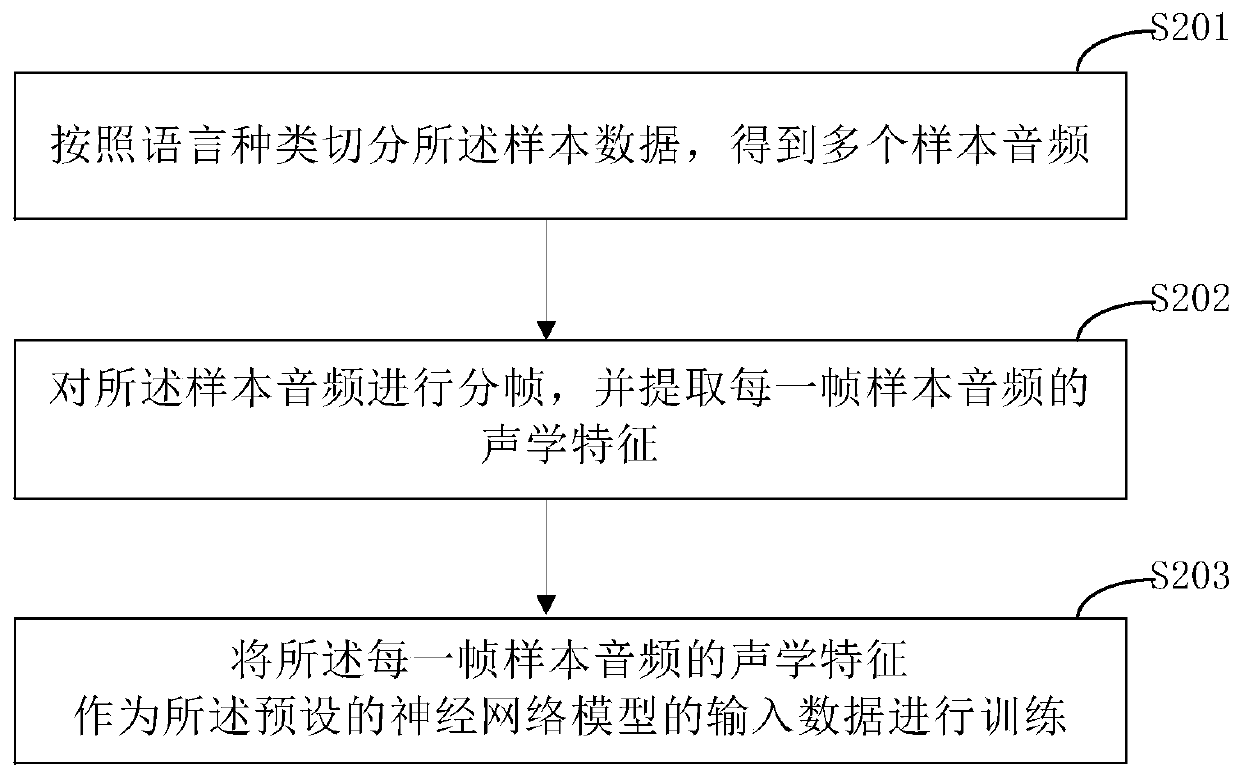

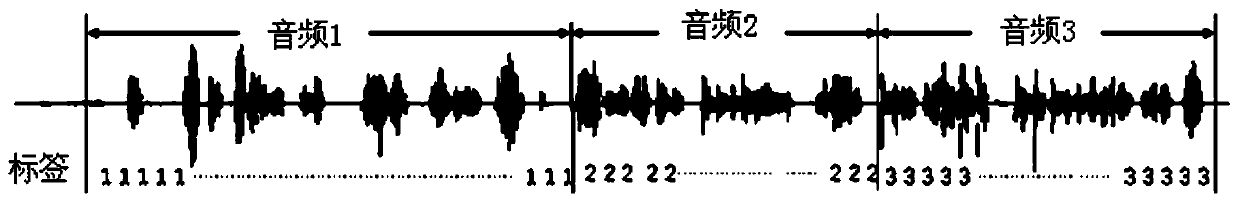

Cross-language speech recognition method and device

Active | CN110349564A | Low cost | Improve recognition rate | Speech recognition | Discriminator | Speech identification

The invention discloses a cross-language speech recognition method and device, and relates to the technical field of speech processing. A concrete implementation mode of the method comprises the steps of acquiring cross-language sample data, training the sample data as input data of a preset neural network model, and obtaining a language category discriminator; inputting audio to be recognized into the language category discriminator, and segmenting the audio to be recognized according to a language category determined by the language category discriminator; and utilizing a recognition engine corresponding to the determined language category for recognizing the segmented audio to be recognized. According to the implementation mode, an existing speech recognition engine has no need to be modified, so that the cost is low, the recognition rate is high, and the accuracy is high.

Owner:AISPEECH CO LTD
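The segment-then-route flow in the abstract above can be sketched as follows. It assumes the discriminator has already produced one language label per audio frame; the per-language engines are stub callables, and all names are hypothetical rather than taken from the patent.

```python
from itertools import groupby

def segment_by_language(frame_labels):
    """Collapse per-frame language labels into (language, start, end) runs; end is exclusive."""
    segments, start = [], 0
    for language, run in groupby(frame_labels):
        length = len(list(run))
        segments.append((language, start, start + length))
        start += length
    return segments

def recognize(frames, frame_labels, engines):
    """Send each segment to the unmodified engine for its language."""
    return [
        engines[language](frames[start:end])
        for language, start, end in segment_by_language(frame_labels)
    ]

# Stub engines standing in for existing single-language recognizers.
engines = {
    "zh": lambda segment: "zh:" + str(len(segment)),
    "en": lambda segment: "en:" + str(len(segment)),
}
labels = ["zh", "zh", "en", "en", "en", "zh"]
print(recognize(list(range(6)), labels, engines))
```

Because routing happens outside the engines, each recognizer only ever sees audio in its own language, which matches the abstract's claim that existing engines need no modification.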

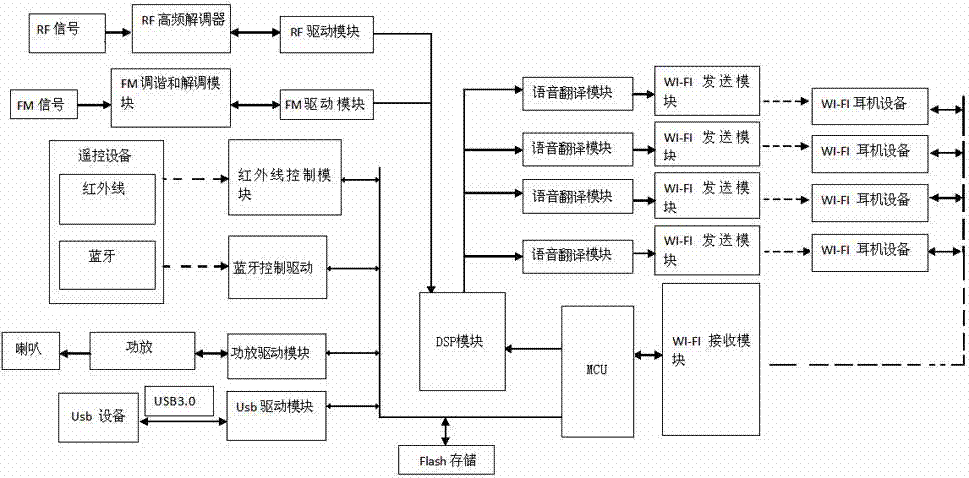

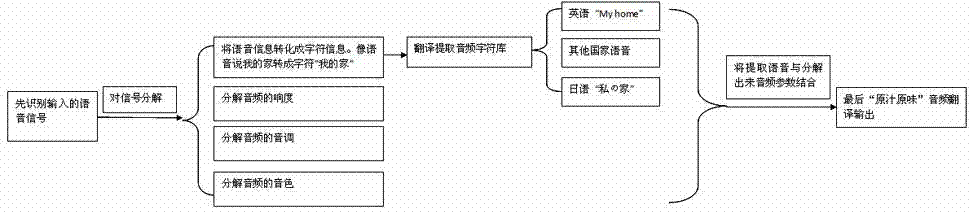

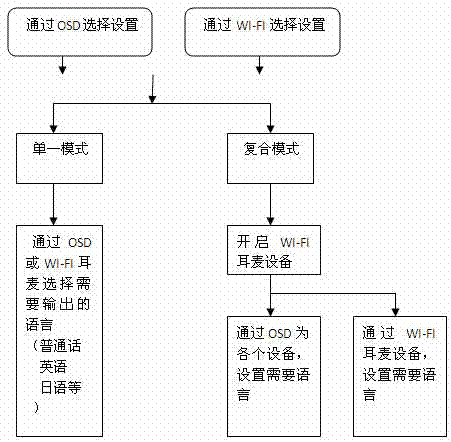

TV (television) system with multi-language speech translation and realization method thereof

Active | CN102821259A | Television system details | Color television details | Wireless transmission | Speech translation

The invention relates to a TV (television) system with multi-language speech translation and a realization method of the TV system. The realization method is characterized by comprising the following steps: encoding, decoding and processing a speech of the TV system by using a DSP (digital signal processor) module in the TV system, so as to obtain character information; then distributing the character information to at least two speech translation modules according to a process set by the system; and sending translated speech data to an earphone assorted with a wireless receiving module through a corresponding wireless transmission module by the speech translation modules. With the adoption of the TV system provided by the invention, the speech in a TV can be translated into various languages simultaneously. The TV system is more suitable for humanized design. With the adoption of the TV system provided by the invention, people can more conveniently know about economical and cultural information in the other nations well. The TV system is more suitable for globalized development, and has better practical values.

Owner:TPV DISPLAY TECH (XIAMEN) CO LTD

Apparatus, system, method, and computer program product for resolving ambiguities in translations

Active | US8214197B2 | Natural language translation | Speech recognition | Translation language | Machine translation

Owner:TOSHIBA DIGITAL SOLUTIONS CORP

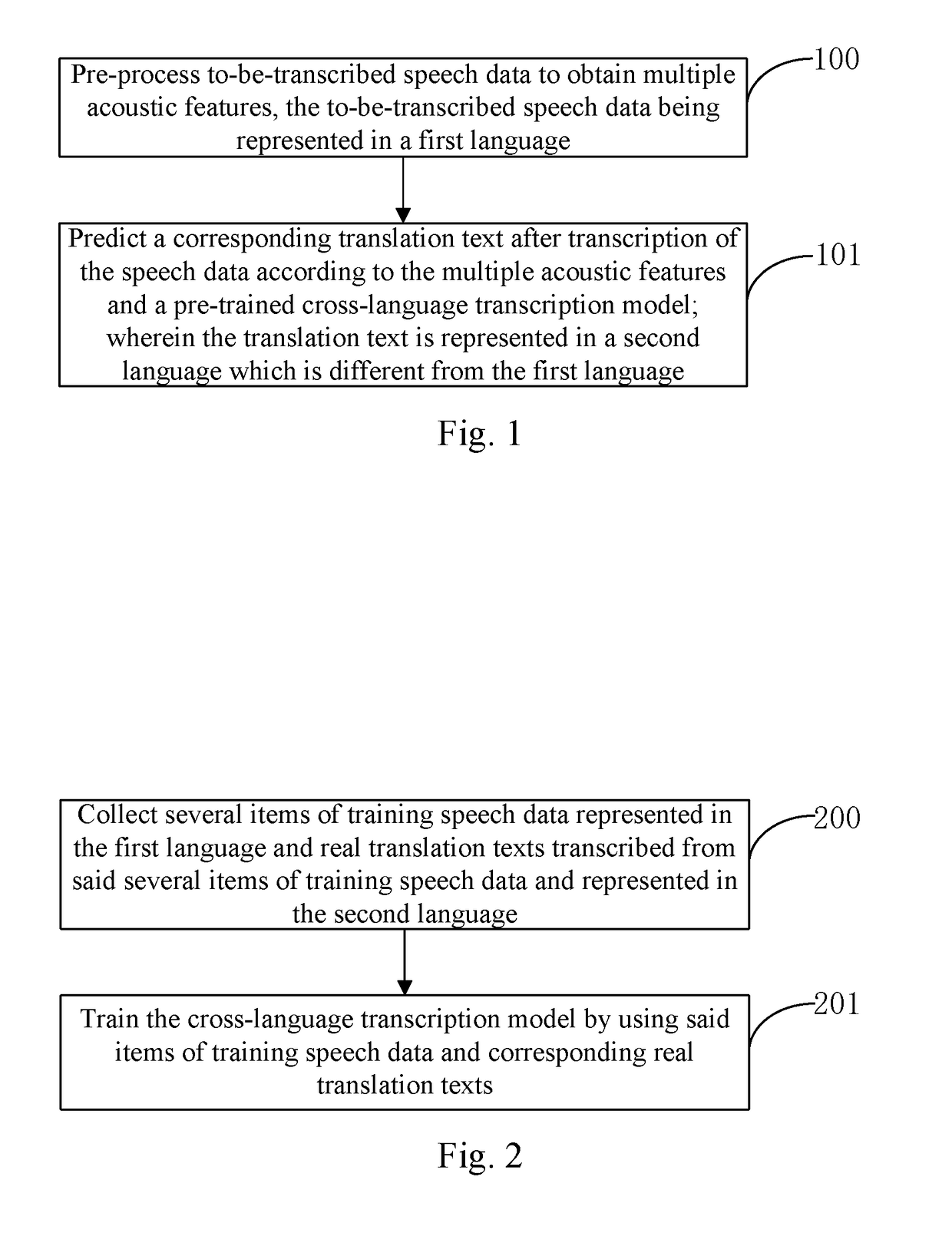

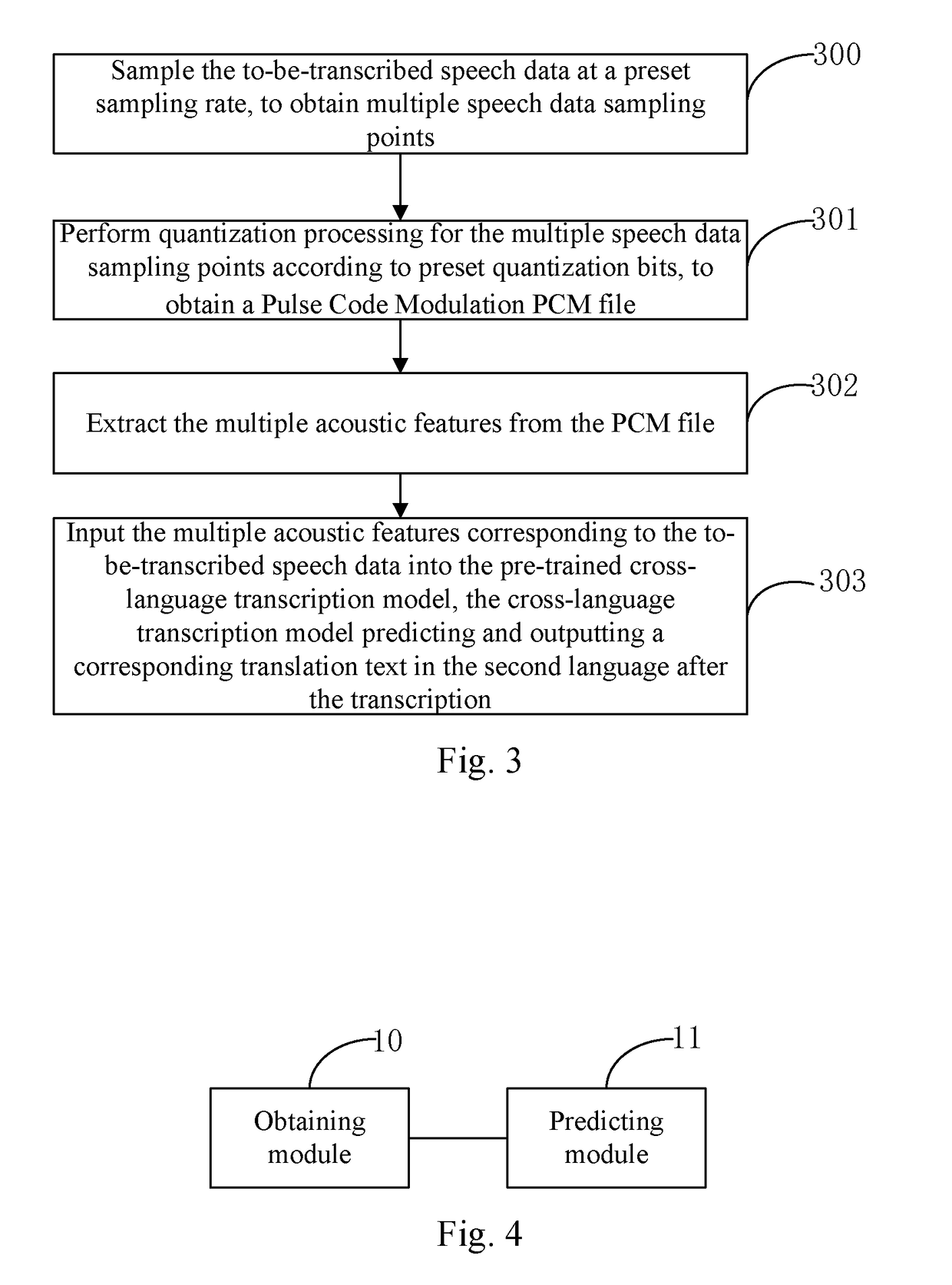

Artificial Intelligence-Based Cross-Language Speech Transcription Method and Apparatus, Device and Readable Medium

Active | US20180336900A1 | Ensure high efficiency and accuracy | Overcome accumulation | Natural language translation | Speech recognition | Speech identification | Language speech

An artificial intelligence-based cross-language speech transcription method and apparatus, a device and a readable medium. The method includes pre-processing to-be-transcribed speech data to obtain multiple acoustic features, the to-be-transcribed speech data being represented in a first language; predicting a corresponding translation text after transcription of the speech data according to the multiple acoustic features and a pre-trained cross-language transcription model; wherein the translation text is represented in a second language which is different from the first language. According to the technical solution, it is unnecessary, upon cross-language speech transcription, to perform speech recognition first and then perform machine translation, but to directly perform cross-language transcription according to the pre-trained cross-language transcription model. The technical solution can overcome the problem of error accumulation in the two-step cross-language transcription manner in the prior art, and can effectively improve accuracy and efficiency of the cross-language speech transcription as compared with the prior art.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

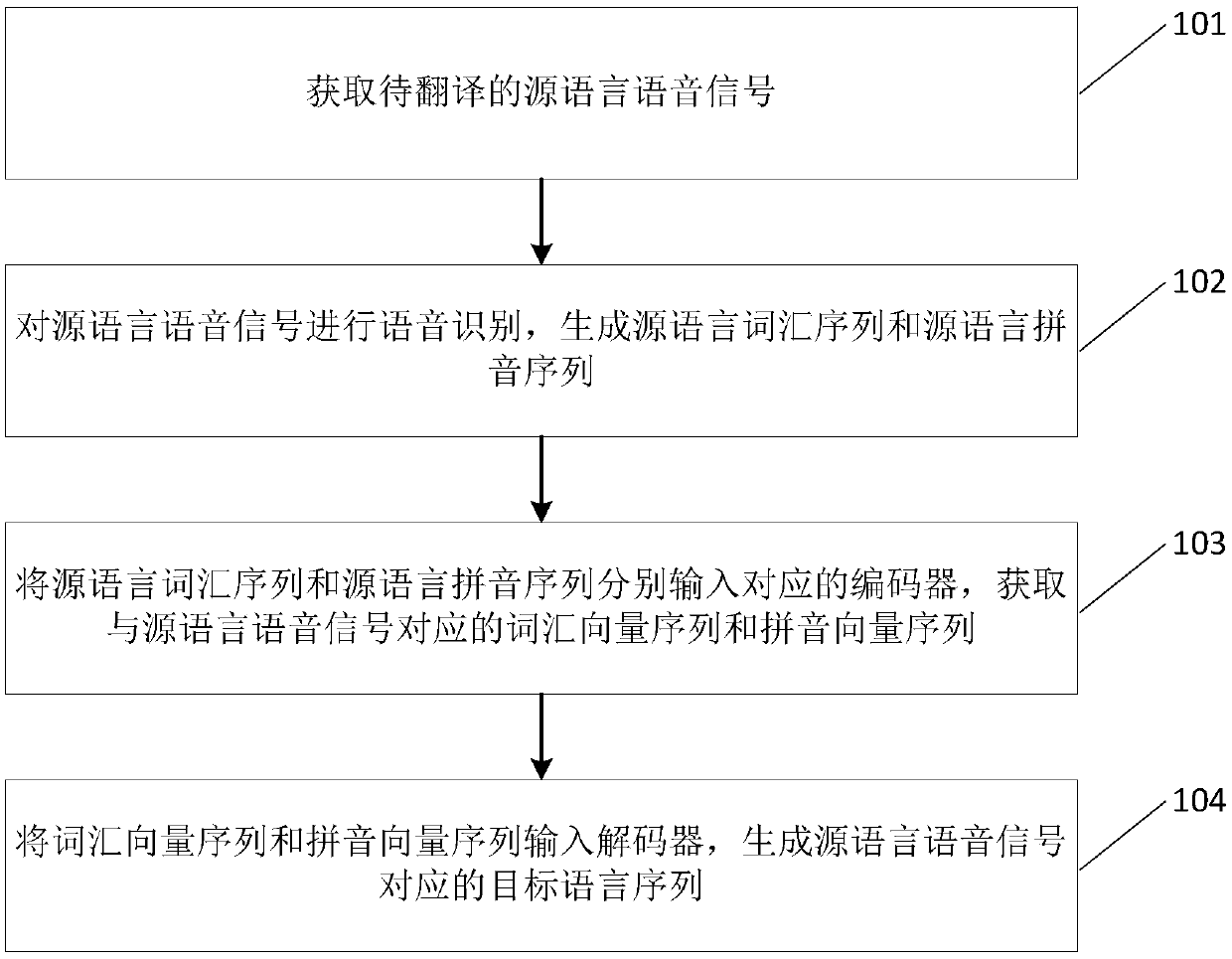

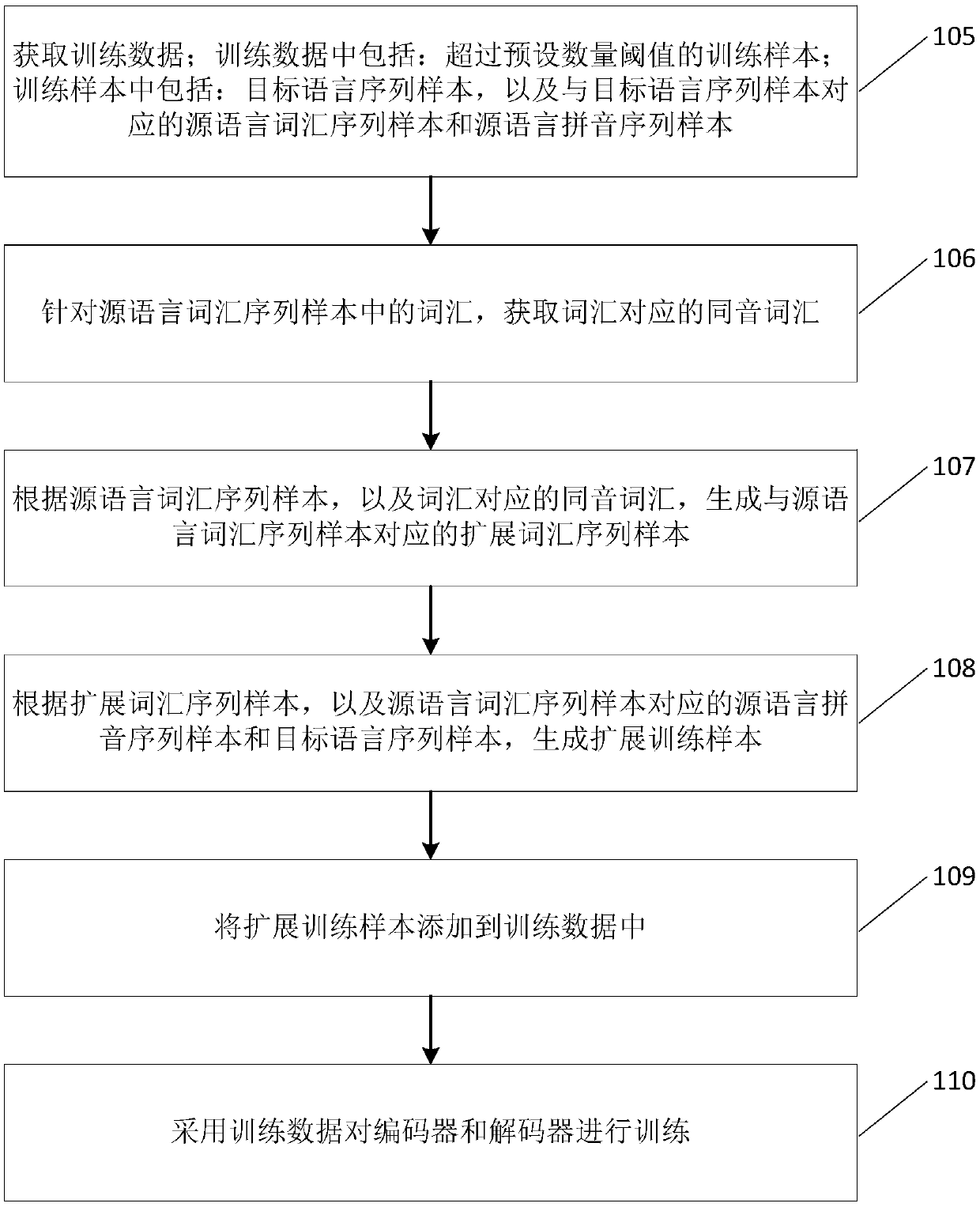

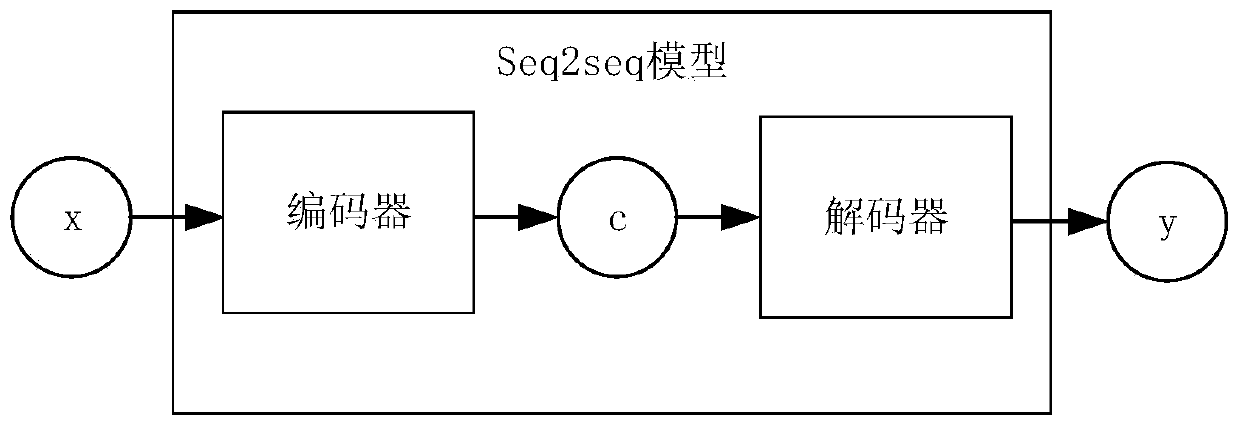

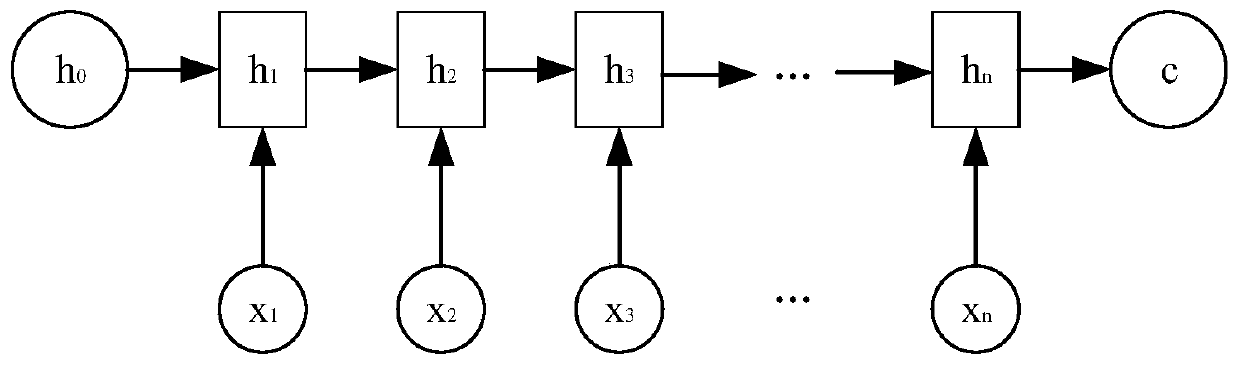

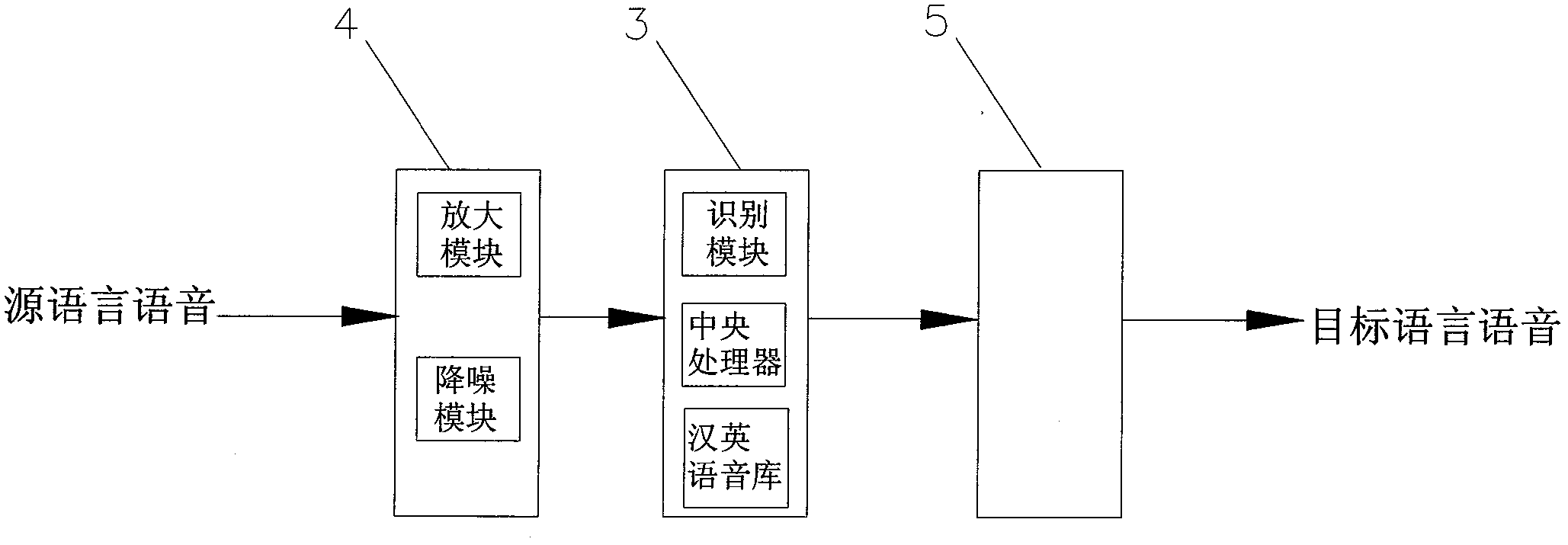

Simultaneous interpretation method and device, and computer equipment

Pending | CN110147554A | Improve simultaneous translation efficiency | Improve fault tolerance | Natural language translation | Special data processing applications | Language speech | Speech sound

The invention provides a simultaneous interpretation method and device, and computer equipment. The simultaneous interpretation method comprises the steps: acquiring a source language voice signal to be translated; carrying out speech recognition on the source language speech signal to generate a source language vocabulary sequence and a source language pinyin sequence; inputting the source language vocabulary sequence and the source language pinyin sequence into corresponding encoders respectively, and obtaining a vocabulary vector sequence and a pinyin vector sequence corresponding to the source language voice signal; inputting the vocabulary vector sequence and the pinyin vector sequence into a decoder; and generating a target language sequence corresponding to the source language voice signal. Because the source language pinyin sequence generally does not contain mistakes, determining the target language sequence by combining the source language pinyin sequence allows some errors in the source language vocabulary sequence to be corrected, which improves both the simultaneous interpretation efficiency and the tolerance of voice recognition errors.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Speech translation method and device, electronic equipment and storage medium

Pending | CN111368559A | Improve efficiency | Improve accuracy | Natural language translation | Speech recognition | Translation language | Speech translation

The embodiment of the invention discloses a speech translation method and device, electronic equipment and a storage medium. The method comprises the steps of: acquiring source voice corresponding to a language to be translated; acquiring a specified target language; inputting the source voice and indication information matched with the target language into a pre-trained voice translation model, wherein the voice translation model is used for translating languages in a first language set into languages in a second language set, the first language set comprises a plurality of languages, the first language set comprises the language to be translated, the second language set comprises a plurality of languages, and the second language set comprises the target language; and obtaining translation voice output by the voice translation model and corresponding to the target language, the language to be translated being different from the target language. According to the embodiment of the invention, multi-language speech translation can be realized, and the efficiency and accuracy of speech translation are improved.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD

Earphone capable of improving English listening comprehension of user

Inactive | CN103179481A | Improve listening skills | In line with the laws of language learning | Earpiece/earphone attachments | Transducer circuits | Output device | Electrophonic hearing

The invention discloses an earphone capable of improving the English listening comprehension of a user, and relates to a study aid. The earphone is characterized in that speech receivers are arranged on an earphone body of the earphone, speech output devices are arranged in earmuffs, the speech receivers and the speech output devices are connected with a Chinese-English simultaneous translator by conducting wires, source-language speech in an environment is received by the speech receivers, is transmitted to the Chinese-English simultaneous translator via the conducting wires, and is converted into target-language speech by the Chinese-English simultaneous translator, and the speech output devices are used for playing the target-language speech which is converted from the source-language speech by the Chinese-English simultaneous translator. The earphone has the advantages that the Chinese speech in the ambient environment can be converted into corresponding English speech in real time, and a natural and real English language environment can be created for the English learner, so that English learning becomes visual, specific and vivid, language learning laws are met, and the listening competence of the English learner is greatly improved.

Owner:DEZHOU UNIV

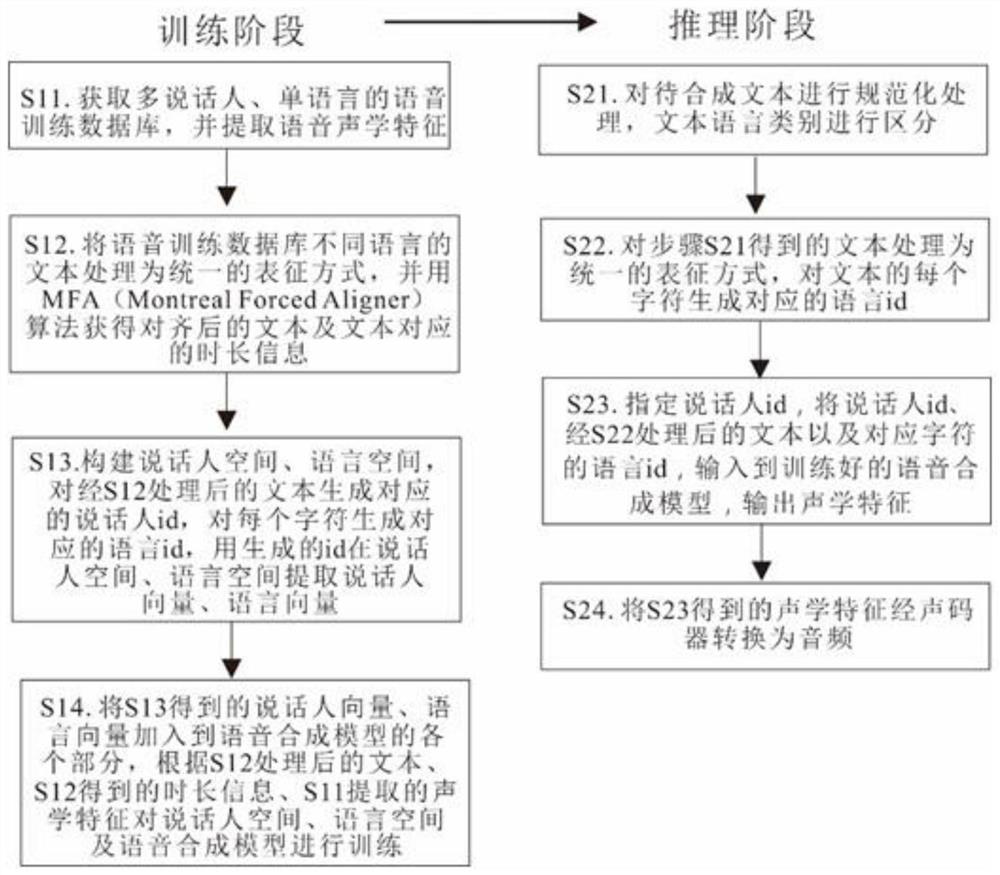

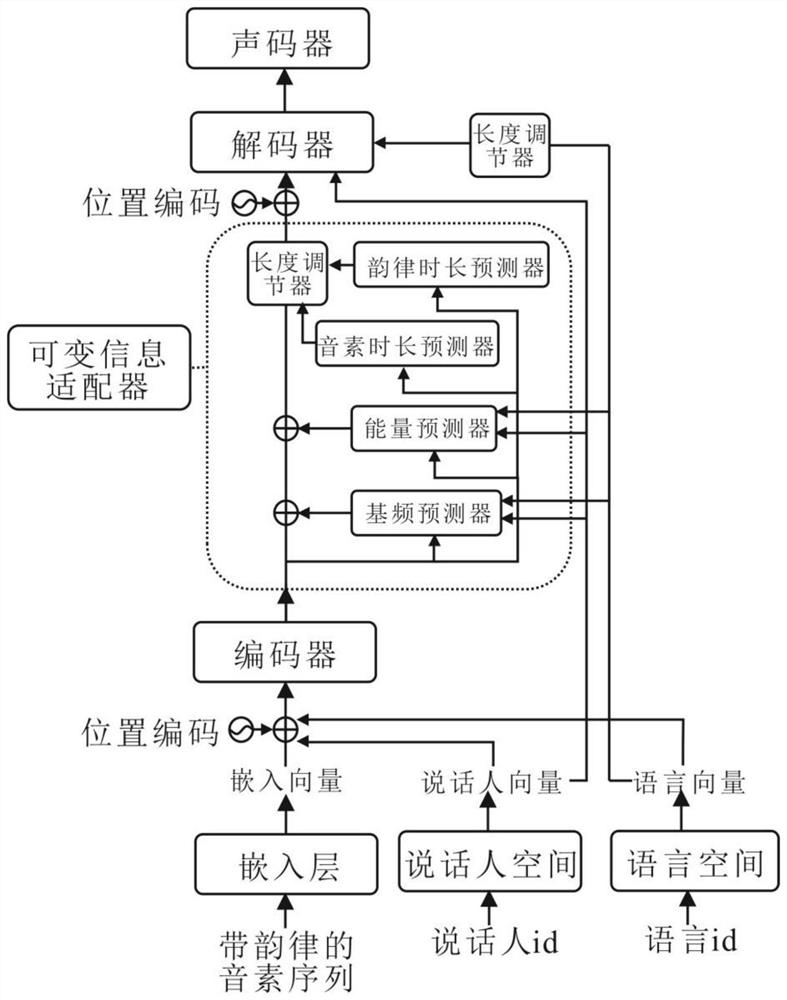

Multi-speaker and multi-language speech synthesis method and system thereof

ActiveCN112435650AKeep Tonal ConsistencyAchieve conversionSpeech synthesisSynthesis methodsSpeech Acoustics

The invention discloses a multi-speaker and multi-language speech synthesis method. The method comprises the following steps: extracting speech acoustic features; processing the texts in different languages into a unified representation, and aligning the audio with the texts to obtain duration information; constructing a speaker space and a language space, generating a speaker id and a language id, extracting a speaker vector and a language vector, adding the speaker vector and the language vector into the initial speech synthesis model, and training the initial speech synthesis model using the aligned text, duration information and speech acoustic features to obtain a speech synthesis model; processing the to-be-synthesized text to generate the speaker id and the language id; and inputting the speaker id, the text and the language id into the speech synthesis model, outputting speech acoustic features and converting the speech acoustic features into audio. A system is also disclosed. According to the method, the speaker characteristics and the language characteristics are disentangled, so that the speaker or the language can be switched simply by changing the id.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD
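
The id-based switching described above can be sketched as two independent lookup tables whose vectors are combined with the text encoding. This is a minimal sketch under stated assumptions: element-wise addition, random (untrained) vectors, and the names `EmbeddingTable` and `condition` are all hypothetical, since the abstract does not say how the vectors are injected into the model.

```python
import random


class EmbeddingTable:
    """Maps an integer id to a fixed-size vector (random here, learned in practice)."""

    def __init__(self, num_ids: int, dim: int, seed: int) -> None:
        rng = random.Random(seed)
        self.rows = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_ids)]

    def lookup(self, idx: int) -> list:
        return self.rows[idx]


def condition(text_encoding: list, speaker_vec: list, language_vec: list) -> list:
    """Add the speaker and language vectors to every frame of the text encoding.

    Element-wise addition is an assumption made for this sketch.
    """
    return [
        [t + s + l for t, s, l in zip(frame, speaker_vec, language_vec)]
        for frame in text_encoding
    ]


speakers = EmbeddingTable(num_ids=2, dim=3, seed=0)
languages = EmbeddingTable(num_ids=2, dim=3, seed=1)
encoding = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]  # two frames of a toy text encoding

# Same speaker, different language: only the language id changes.
same_voice_lang0 = condition(encoding, speakers.lookup(0), languages.lookup(0))
same_voice_lang1 = condition(encoding, speakers.lookup(0), languages.lookup(1))
```

Keeping the two tables separate is what lets one id be swapped without touching the other.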

Method and system for modeling a common-language speech recognition, by a computer, under the influence of a plurality of dialects

ActiveUS8712773B2Reduce stepsGuaranteed to workSpeech recognitionSpecial data processing applicationsAlgorithmLanguage speech

The present invention relates to a method for modeling common-language speech recognition, by a computer, under the influence of multiple dialects, and concerns the technical field of speech recognition by a computer. In this method, a triphone standard common-language model is first generated based on training data of the standard common language, and first and second monophone dialectal-accented common-language models are generated based on development data of dialectal-accented common languages of a first kind and a second kind, respectively. Then a temporary merged model is obtained by merging the first dialectal-accented common-language model into the standard common-language model according to a first confusion matrix, which is obtained by recognizing the development data of the first dialectal-accented common language using the standard common-language model. Finally, a recognition model is obtained by merging the second dialectal-accented common-language model into the temporary merged model according to a second confusion matrix, which is generated by recognizing the development data of the second dialectal-accented common language using the temporary merged model. This method enhances the operating efficiency and raises the recognition rate for the dialectal-accented common language. The recognition rate for the standard common language is also raised.

Owner:SONY COMPUTER ENTERTAINMENT INC +1
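
To make the role of the confusion matrix more concrete, the sketch below counts how often each reference unit is recognized as another unit and lists the pairs confused often enough to be merge candidates. This is only one plausible reading: the threshold, the unit labels, and the function names are assumptions, and the abstract does not describe how the acoustic models themselves are merged.

```python
from collections import Counter, defaultdict


def confusion_matrix(reference: list, recognized: list) -> dict:
    """Count how often each reference unit was recognized as each unit."""
    counts = defaultdict(Counter)
    for ref, hyp in zip(reference, recognized):
        counts[ref][hyp] += 1
    return counts


def merge_candidates(counts: dict, threshold: float) -> list:
    """Return (reference, recognized, rate) for confusions at or above threshold."""
    pairs = []
    for ref, row in counts.items():
        total = sum(row.values())
        for hyp, n in row.items():
            rate = n / total
            if hyp != ref and rate >= threshold:
                pairs.append((ref, hyp, rate))
    return sorted(pairs)


# Toy aligned data: accented speakers often realize "zh" as "z".
reference = ["zh", "zh", "zh", "z", "s"]
recognized = ["z", "z", "zh", "z", "s"]
candidates = merge_candidates(confusion_matrix(reference, recognized), threshold=0.5)
```

In the two-stage scheme of the abstract, a step like this would run twice: once with the standard model on the first dialect's development data, and once with the temporary merged model on the second dialect's data.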

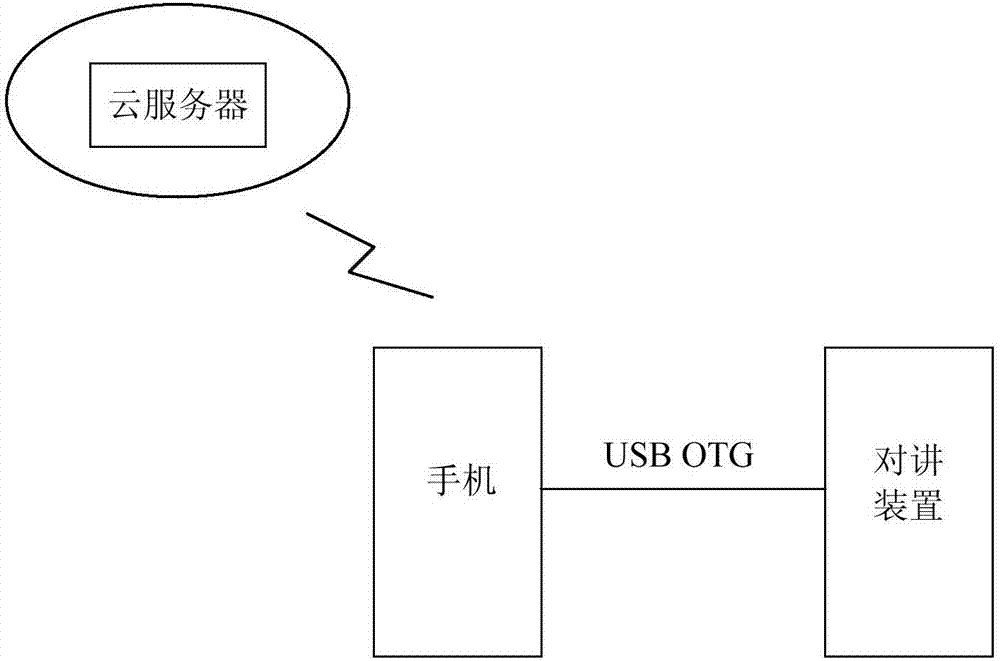

System for realizing real-time speech mutual translation

InactiveCN107979686AFacilitate two-way communicationNatural language translationDevices with voice recognitionLanguage speechSpeech synthesis

The invention discloses a system for realizing real-time speech mutual translation. The system comprises an intercom device and a mobile communication device; the intercom device acquires a speech signal, converts the speech signal into speech data, and sends the speech data to the mobile communication device; the mobile communication device or a cloud server converts the speech data into statements and characters after performing speech recognition on the speech data, translates the statements and characters into a text of a target language, performs speech synthesis processing and generates playable speech data for playing in the mobile communication device; the mobile communication device obtains the speech signal, converts the speech signal into speech data, converts the speech data into the statements and characters after performing speech recognition on the speech data, translates the statements and characters into the text of the target language, performs the speech synthesis processing and generates playable speech data; and the playable speech data are sent to the intercom device for playing. By adoption of the system provided by the invention, speech in one language can be translated into a speech signal in another language in real time, which facilitates two-way communication between people who speak different languages.

Owner:北京分音塔科技有限公司
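
The recognition, translation and synthesis stages described above compose into one pipeline per direction. The sketch below shows that composition with stand-in stages; the stub functions and the `make_pipeline` helper are hypothetical and stand in for whatever recognition, translation and synthesis services an actual system would call.

```python
from typing import Callable


def make_pipeline(
    recognize: Callable[[bytes], str],
    translate: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> Callable[[bytes], bytes]:
    """Chain speech recognition, text translation and speech synthesis."""

    def run(speech_data: bytes) -> bytes:
        text = recognize(speech_data)       # speech data -> source-language text
        translated = translate(text)        # source text -> target-language text
        return synthesize(translated)       # target text -> playable speech data

    return run


# Stand-in stages so the sketch runs without any speech services.
def fake_recognize(speech_data: bytes) -> str:
    return speech_data.decode("utf-8")


def fake_translate_zh_en(text: str) -> str:
    return {"你好": "hello"}.get(text, text)


def fake_translate_en_zh(text: str) -> str:
    return {"hello": "你好"}.get(text, text)


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


# One pipeline per direction gives the two-way ("mutual") translation.
intercom_to_phone = make_pipeline(fake_recognize, fake_translate_zh_en, fake_synthesize)
phone_to_intercom = make_pipeline(fake_recognize, fake_translate_en_zh, fake_synthesize)
```

Passing the stages in as callables leaves open whether each one runs on the mobile device or on a cloud server, which the abstract allows either way.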

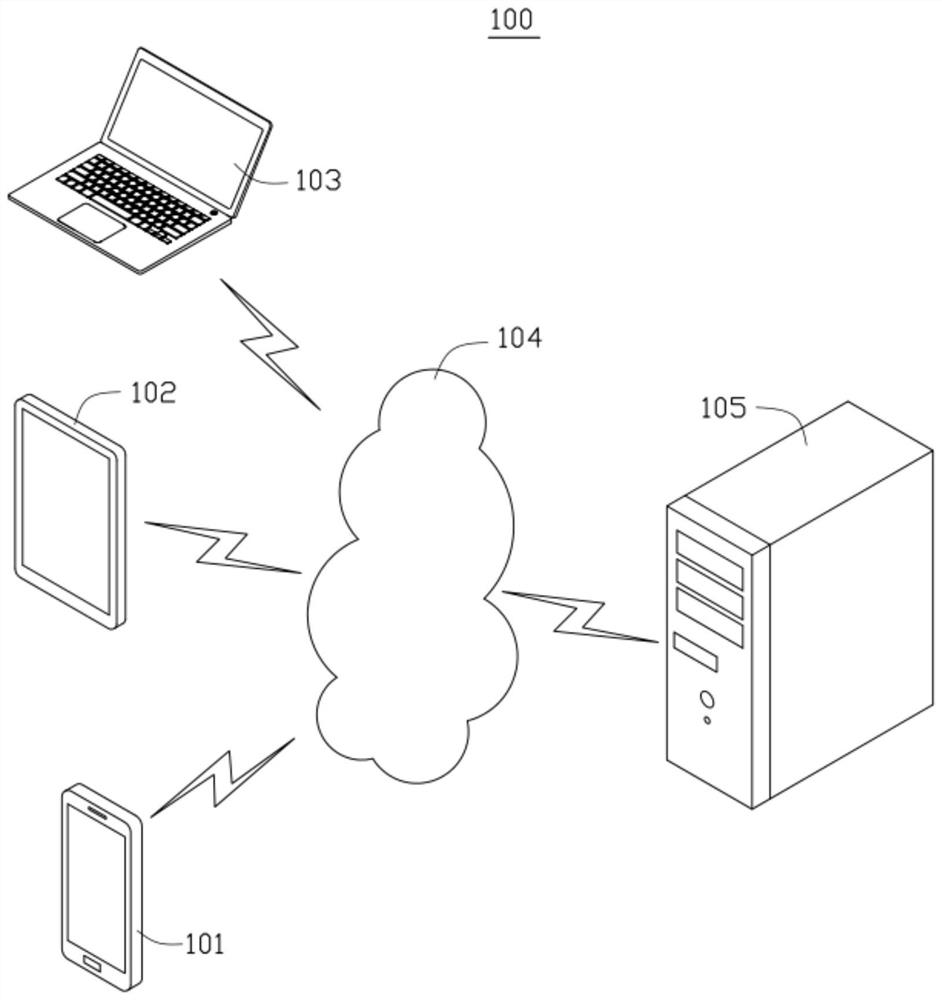

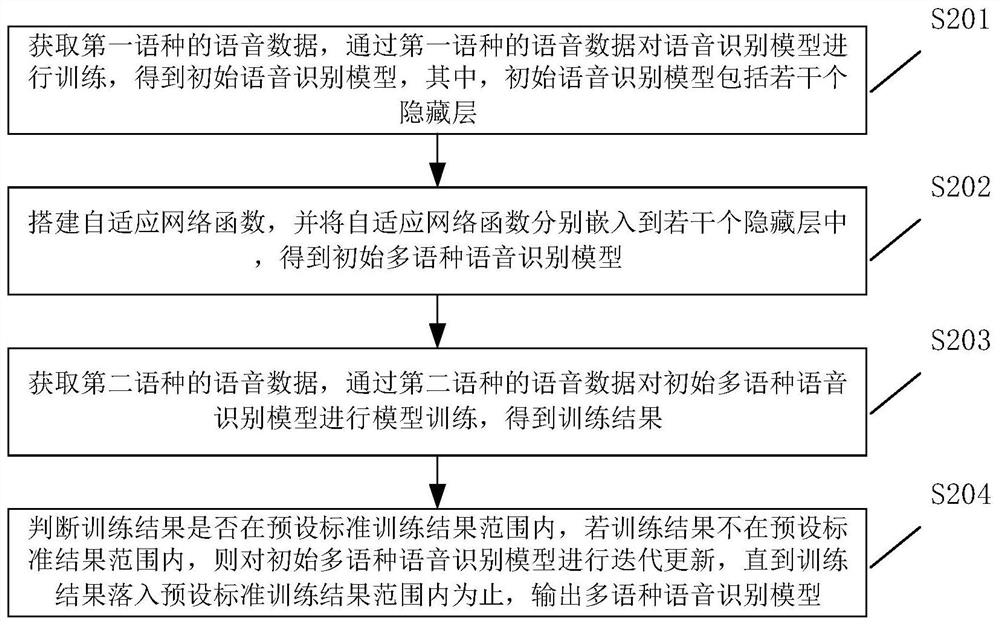

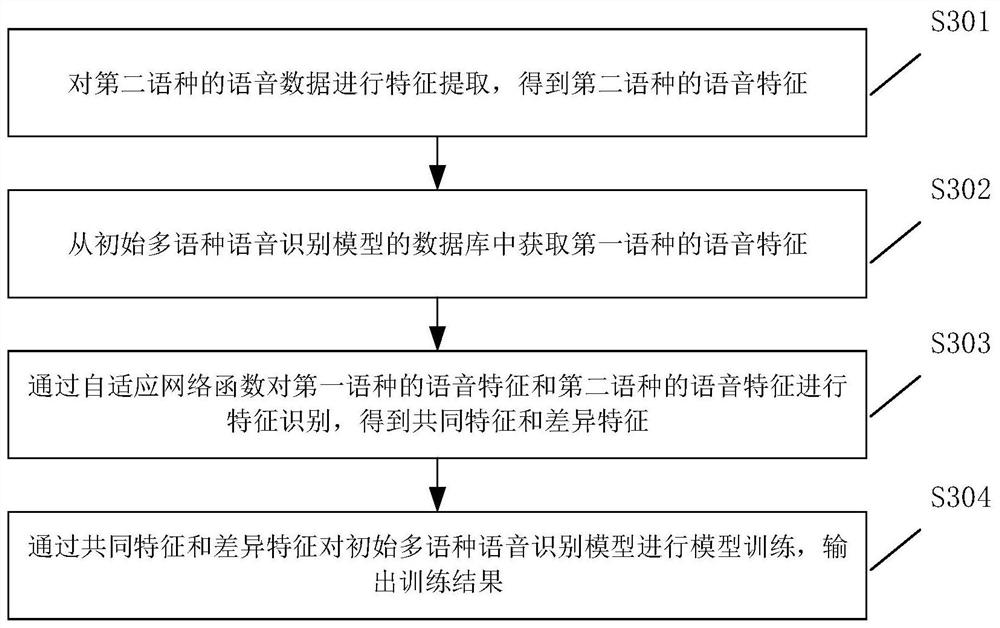

Multilingual speech recognition model training method and device thereof, equipment and storage medium

The invention discloses a multilingual speech recognition model training method and relates to the field of artificial intelligence. The method comprises the steps of: training a speech recognition model on a first language to obtain an initial speech recognition model; building an adaptive network function, and embedding the adaptive network function into a hidden layer of the initial speech recognition model to obtain an initial multilingual speech recognition model; performing model training on the initial multilingual speech recognition model with the speech data of the second language to obtain a training result; and iteratively updating the initial multilingual speech recognition model until the training result falls into a preset standard training result range, and outputting the multilingual speech recognition model. In addition, the invention also relates to blockchain technology, and the voice data of the first language and the voice data of the second language can be stored in the blockchain. According to the invention, the adaptive network function is embedded into the hidden layer of the initial speech recognition model, so that the training efficiency of the multi-language speech recognition model can be improved.

Owner:PING AN TECH (SHENZHEN) CO LTD
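
One common way to embed a small trainable function into a hidden layer is a residual bottleneck adapter. The sketch below assumes that reading: the abstract does not define its "adaptive network function", so the residual form, the ReLU bottleneck, and all names and weights here are illustrative assumptions.

```python
def relu(values: list) -> list:
    return [max(0.0, v) for v in values]


def matvec(matrix: list, vector: list) -> list:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def adapter(hidden: list, w_down: list, w_up: list) -> list:
    """Residual adapter: hidden + w_up @ relu(w_down @ hidden).

    Only w_down and w_up would be trained on the second language;
    the surrounding layers keep their first-language weights.
    """
    bottleneck = relu(matvec(w_down, hidden))
    delta = matvec(w_up, bottleneck)
    return [h + d for h, d in zip(hidden, delta)]


hidden = [1.0, -2.0, 0.5]                     # toy hidden-layer activation
w_down = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # 3 -> 2 bottleneck projection
w_up_zero = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
w_up_trained = [[0.5, 0.0], [0.0, 0.5], [1.0, 1.0]]

unchanged = adapter(hidden, w_down, w_up_zero)    # zero up-projection: identity
adapted = adapter(hidden, w_down, w_up_trained)
```

Starting from a zero up-projection means the adapted model initially reproduces the first-language model, so second-language training begins from known behavior.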

Speech translation apparatus, method and computer readable medium for receiving a spoken language and translating to an equivalent target language

Speech translation apparatus includes first generation unit generating first text representing speech recognition result, and first prosody information, second generation unit generating first para-language information, first association unit associating each first portion of first text with corresponding first portion of first para-language information, translation unit translating first text into second texts, second association unit associating each second portion of first para-language information with corresponding second portion of each second text, third generation unit generating second prosody-information items, fourth generation unit generating second para-language-information items, computation unit computing degree-of-similarity between each first para-language information and corresponding one of second para-language-information items to obtain degrees of similarity, selection unit selecting, from second prosody-information items, maximum-degree-of-similarity prosody information corresponding to maximum degree, fifth generation unit generating prosody pattern of one of second texts which corresponds to maximum-degree-of-similarity prosody information, and output unit outputting one of second texts which corresponds to maximum-degree-of-similarity prosody information.

Owner:KK TOSHIBA
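
The selection step at the end of the abstract amounts to choosing the candidate whose para-language information is most similar to that of the source speech. The sketch below assumes para-language information can be represented as numeric vectors and uses cosine similarity; both are assumptions, as the abstract does not name a representation or a similarity measure.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_translation(source_para: list, candidates: list) -> str:
    """Return the candidate text whose para-language vector best matches the source.

    candidates is a list of (translated_text, para_language_vector) pairs.
    """
    best_text, _ = max(candidates, key=lambda c: cosine_similarity(source_para, c[1]))
    return best_text


# Toy vectors: e.g. [emphasis on first phrase, emphasis on second phrase].
source_para = [1.0, 0.0]
candidates = [
    ("translation A", [0.0, 1.0]),
    ("translation B", [0.9, 0.1]),
]
chosen = select_translation(source_para, candidates)
```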
