Urban remote sensing image vegetation coverage identification method based on deep learning

The method concerns vegetation coverage identification in remote sensing images and belongs to the field of remote sensing recognition. It addresses problems such as the limitations of existing recognition methods and the complexity of urban building facilities and components. Its stated effects are simplified processing content, improved processing efficiency and accuracy, and improved recognition accuracy.

Examples

Embodiment 1

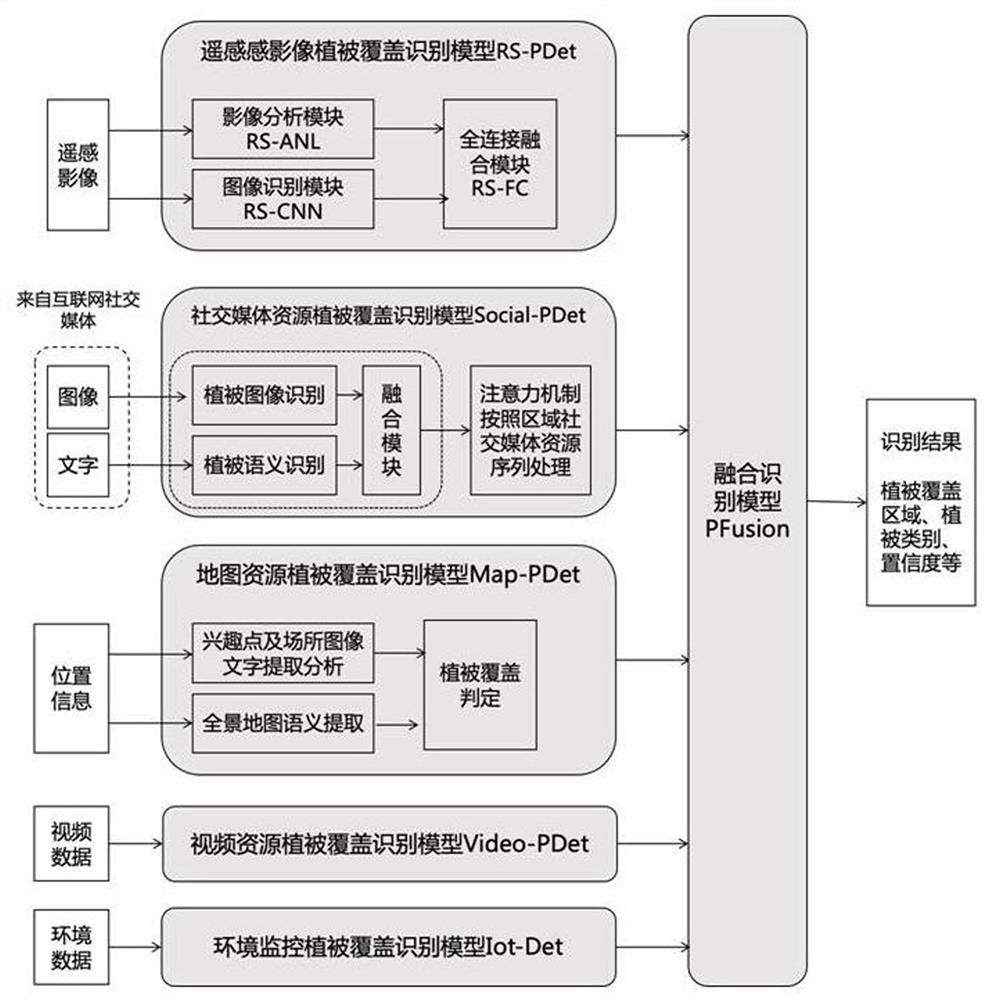

[0053] In step d), the urban vegetation coverage recognition model CPC-Det is composed of the remote sensing image vegetation coverage recognition model RS-PDet, the social media resource vegetation coverage recognition model Social-PDet, the map resource vegetation coverage recognition model Map-PDet, the video resource vegetation coverage recognition model Video-PDet, the environmental monitoring vegetation coverage recognition model Iot-Det, and the fusion recognition model PFusion.
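The composition described in [0053] can be pictured with a minimal sketch. The excerpt does not say how PFusion combines the sub-model outputs, so the weighted average below is only a stand-in assumption, as are the class name `CPCDet`, the `weights` field, and the idea that each sub-model returns a single coverage score in [0, 1].

```python
from dataclasses import dataclass
from typing import Callable, Dict, Mapping

# Assumption: each sub-model maps a source-specific input to a
# vegetation coverage score in [0, 1].
SubModel = Callable[[object], float]


@dataclass
class CPCDet:
    """Hypothetical container for the sub-models named in [0053]."""
    sub_models: Dict[str, SubModel]
    weights: Dict[str, float]  # hypothetical fusion weights, not from the source

    def predict(self, inputs: Mapping[str, object]) -> float:
        """Fuse the scores of every sub-model that has an input available.

        The weighted average stands in for PFusion, whose actual
        mechanism is not given in the excerpt.
        """
        total = 0.0
        weight_sum = 0.0
        for name, model in self.sub_models.items():
            if name not in inputs:
                continue  # skip data sources that are missing for this area
            w = self.weights.get(name, 1.0)
            total += w * model(inputs[name])
            weight_sum += w
        if weight_sum == 0.0:
            raise ValueError("no input matches any registered sub-model")
        return total / weight_sum
```

A caller would register one callable per data source (for example under the keys "RS-PDet" and "Map-PDet") and pass whatever inputs exist for a given area; sources without data are simply left out of the average.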

Embodiment 2

[0055] Establishing the remote sensing image vegetation coverage recognition model RS-PDet includes the following steps:

[0056] 1.1) Collect urban remote sensing image data and mark vegetation coverage areas;

[0057] 1.2) Select the Faster R-CNN, SSD, or YOLO model as the general overhead-view vegetation recognition model; the selected model involves the CNN vegetation image recognition module RS-CNN;

[0058] 1.3) According to the input image size required by the CNN vegetation image recognition module RS-CNN, cut the remote sensing image data into tiles, using road information and river information as markers;

[0059] 1.4) Train the CNN vegetation image recognition module RS-CNN;

[0060] 1.5) Based on the vegetation index NDVI, the vegetation is extracted by fusing spectral and texture features, and the remote sensing image analysis module RS-ANL is obtained to analyze and...
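Step 1.5 relies on NDVI, which is conventionally defined as (NIR - Red) / (NIR + Red) on per-pixel reflectance. The sketch below computes it on small nested lists and thresholds the result into a vegetation mask. The threshold of 0.3 and the function names are illustrative assumptions, and the fusion with texture features mentioned in the step is not reproduced here because the excerpt does not describe it.

```python
from typing import List

Grid = List[List[float]]


def ndvi(nir: Grid, red: Grid) -> Grid:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red)."""
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            denom = n + r
            # Pixels with no signal in either band get NDVI 0 by convention here.
            row.append((n - r) / denom if denom != 0 else 0.0)
        result.append(row)
    return result


def vegetation_mask(ndvi_grid: Grid, threshold: float = 0.3) -> List[List[bool]]:
    """Mark pixels whose NDVI exceeds the (hypothetical) threshold."""
    return [[value > threshold for value in row] for row in ndvi_grid]


def coverage_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as vegetation."""
    cells = [cell for row in mask for cell in row]
    return sum(cells) / len(cells) if cells else 0.0


# Example with a 2x2 tile of reflectance values.
nir_band = [[0.5, 0.2], [0.6, 0.0]]
red_band = [[0.1, 0.2], [0.2, 0.0]]
mask = vegetation_mask(ndvi(nir_band, red_band))
print(coverage_fraction(mask))
```

In practice the threshold depends on sensor and season, so it would normally be tuned against the labeled areas from step 1.1 rather than fixed.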

Embodiment 3

[0064] The establishment of the social media resource vegetation cover identification model Social-PDet includes the following steps:

[0065] 2.1) Collect social media data, and label the semantic text and images related to vegetation coverage;

[0066] 2.2) Using the BERT language model for natural language processing, set the semantic keywords for vegetation cover and train on the collected social media data to obtain a vegetation cover text semantic recognition model;

[0067] 2.3) Train the SSD image object detection model on social media images using gradient descent to obtain a vegetation cover image detection model;

[0068] 2.4) Integrate the text and image of the same social media record and, building on the vegetation cover text semantic recognition model and the vegetation cover image detection model, use gradient descent to obtain the social media record vegetation cover recognition model;

[0069] 2.5) Input several p...
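One way to read step 2.4 is as late fusion: the text model and the image model each produce a score for a record, and a small combiner is fitted on top of the two scores by gradient descent. The sketch below takes that reading as an assumption; the logistic combiner, the learning rate, the epoch count, and the toy scores are all hypothetical and not taken from the source.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_fusion(
    scores: Sequence[Tuple[float, float]],  # (text_score, image_score) per record
    labels: Sequence[int],                  # 1 = vegetation cover, 0 = none
    lr: float = 0.5,
    epochs: int = 2000,
) -> Tuple[float, float, float]:
    """Fit a logistic combiner with batch gradient descent on log loss."""
    w_text, w_img, bias = 0.0, 0.0, 0.0
    n = len(scores)
    for _ in range(epochs):
        g_text = g_img = g_bias = 0.0
        for (t, i), y in zip(scores, labels):
            err = sigmoid(w_text * t + w_img * i + bias) - y
            g_text += err * t
            g_img += err * i
            g_bias += err
        # Step against the mean gradient.
        w_text -= lr * g_text / n
        w_img -= lr * g_img / n
        bias -= lr * g_bias / n
    return w_text, w_img, bias


def predict(params: Tuple[float, float, float], text_score: float, image_score: float) -> float:
    w_text, w_img, bias = params
    return sigmoid(w_text * text_score + w_img * image_score + bias)


# Toy data: two records with vegetation, two without.
train_scores: List[Tuple[float, float]] = [(0.9, 0.8), (0.8, 0.9), (0.1, 0.2), (0.2, 0.1)]
train_labels = [1, 1, 0, 0]
params = train_fusion(train_scores, train_labels)
```

The combiner keeps the two upstream models independent, which makes it easy to retrain either one without touching the other; whether the original method fuses at this level or earlier is not stated in the excerpt.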
