Multi-precision neural network computing device and method based on data stream architecture

A neural network computing device technology in the computer field that addresses problems such as wasted operating bandwidth, wasted power consumption, and lack of instruction support, and achieves the effects of reducing computing delay, avoiding data overflow, and improving user experience.


Embodiment Construction

[0032] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below through specific embodiments in conjunction with the accompanying drawings. It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

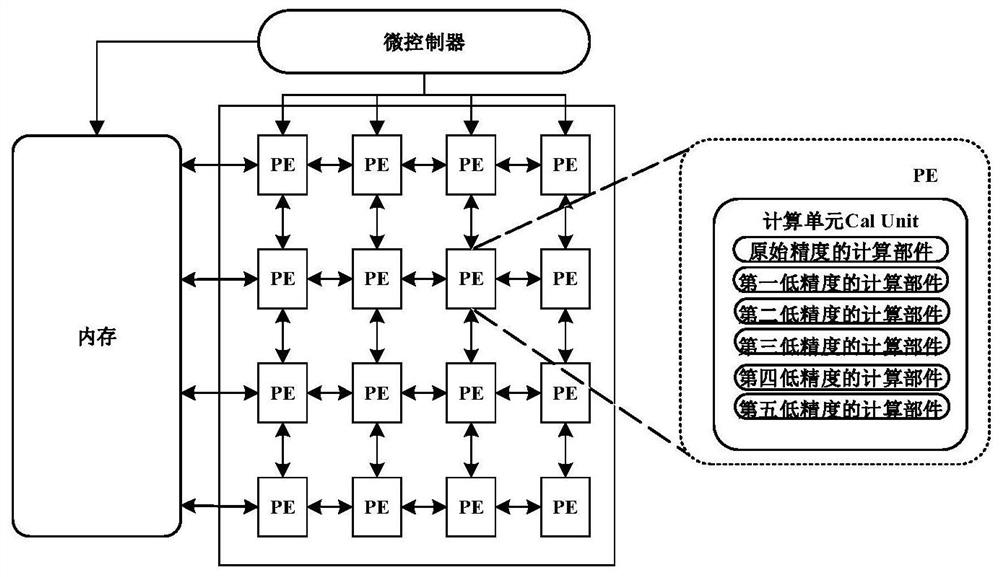

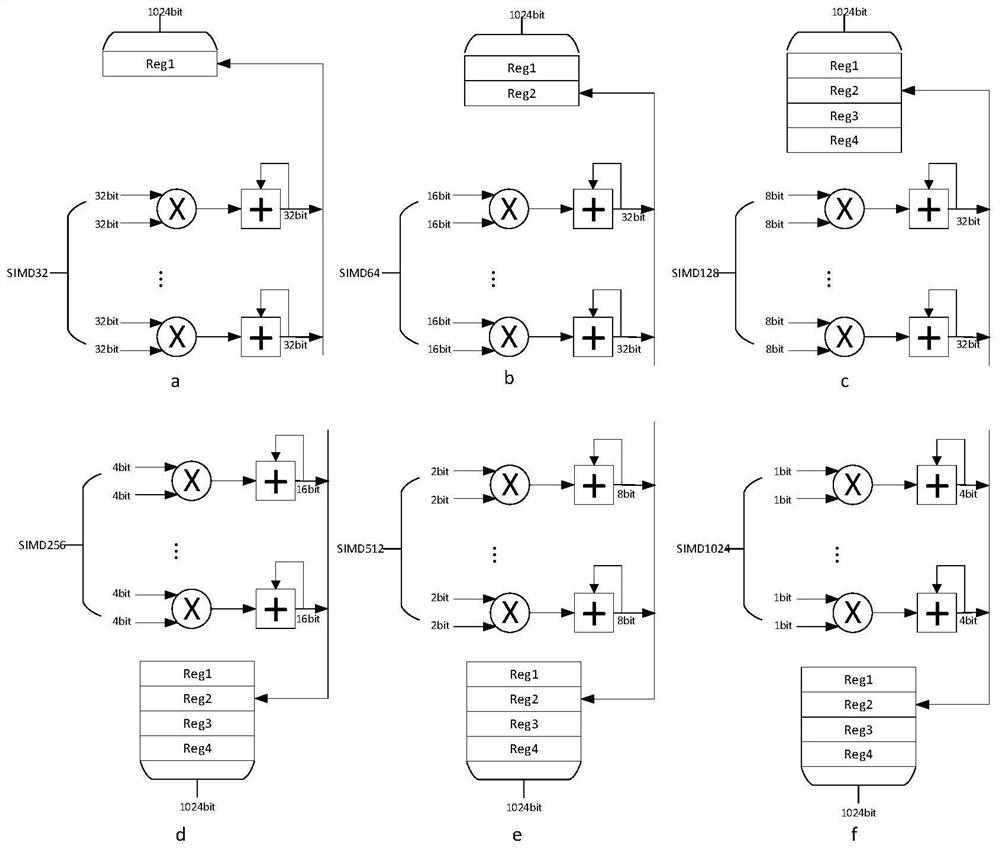

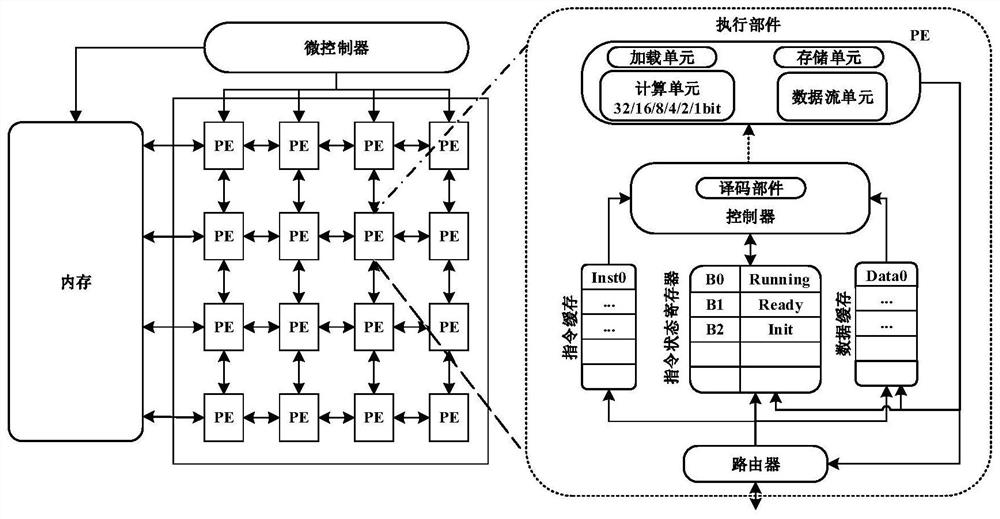

[0033] As mentioned in the background section, SIMD operations in existing computing devices place high demands on data bandwidth (on-chip network bandwidth). When the low-precision components are poorly designed, operating bandwidth is wasted and data overflow can also occur. Therefore, the present application constructs a multi-precision neural network computing device based on a data flow architecture, including a microcontroller and a PE array connected to it, where each PE of the PE array is configured with an original precision and a precision lower than the...
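The bandwidth and overflow trade-off described in [0033] can be illustrated with a minimal Python sketch. It assumes, purely for illustration, a 16-bit word as the original precision and 8-bit lanes as the lower precision. The helper names `pack_lanes`, `unpack_lanes`, `wrap`, and `dot` are hypothetical and do not come from the patent, which does not specify bit widths, packing layout, or accumulator size in this excerpt. Packing two 8-bit values into one word shows how a lower precision lets each transfer carry more operands. The dot-product helper shows how an accumulator that is too narrow silently overflows.

```python
# Hypothetical sketch: bit widths, packing layout, and accumulator sizes are
# illustrative assumptions, not details taken from the patent.

WORD_BITS = 16  # assumed width of one datapath word (the "original precision")
LANE_BITS = 8   # assumed reduced precision; two lanes fit in one word


def pack_lanes(values, lane_bits=LANE_BITS, word_bits=WORD_BITS):
    """Pack signed low-precision values into one word, lane 0 in the low bits."""
    lanes = word_bits // lane_bits
    assert len(values) == lanes, "need exactly one value per lane"
    lo, hi = -(1 << (lane_bits - 1)), (1 << (lane_bits - 1)) - 1
    mask = (1 << lane_bits) - 1
    word = 0
    for i, v in enumerate(values):
        assert lo <= v <= hi, "value does not fit in a lane"
        # Store the two's-complement bit pattern of v in lane i.
        word |= (v & mask) << (i * lane_bits)
    return word


def unpack_lanes(word, lane_bits=LANE_BITS, word_bits=WORD_BITS):
    """Recover the signed lane values from a packed word."""
    lanes = word_bits // lane_bits
    mask = (1 << lane_bits) - 1
    sign_bit = 1 << (lane_bits - 1)
    out = []
    for i in range(lanes):
        raw = (word >> (i * lane_bits)) & mask
        # Sign-extend the lane back to a Python int.
        out.append(raw - (1 << lane_bits) if raw & sign_bit else raw)
    return out


def wrap(value, bits):
    """Two's-complement wrap-around: what a fixed-width register keeps on overflow."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def dot(a, b, acc_bits):
    """Dot product whose running sum is held in an acc_bits-wide register."""
    acc = 0
    for x, y in zip(a, b):
        acc = wrap(acc + x * y, acc_bits)
    return acc


if __name__ == "__main__":
    packed = pack_lanes([-3, 5])
    print(hex(packed), unpack_lanes(packed))
    print(dot([100, 100, 100], [2, 2, 2], acc_bits=8))   # narrow accumulator
    print(dot([100, 100, 100], [2, 2, 2], acc_bits=32))  # wide accumulator
```

For example, `dot([100, 100, 100], [2, 2, 2], acc_bits=8)` wraps around to 88 instead of the exact 600, while a 32-bit accumulator returns 600. Keeping the operands in low precision but accumulating at a wider precision is one common way to avoid this kind of overflow. Whether the claimed device uses exactly this scheme is not stated in the excerpt.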
