Face visible region analysis and segmentation method, face makeup method and mobile terminal

A technology for analyzing and segmenting the visible region of a face, applied in the fields of face makeup, mobile terminals, and computer-readable storage media. It addresses problems such as long processing time, difficulty of running in real time, and insufficient robustness to occlusion, and thereby improves computing efficiency, yields more stable prediction results, and improves real-time performance.

Examples

Example 1

[0049] The first embodiment (face segmentation method)

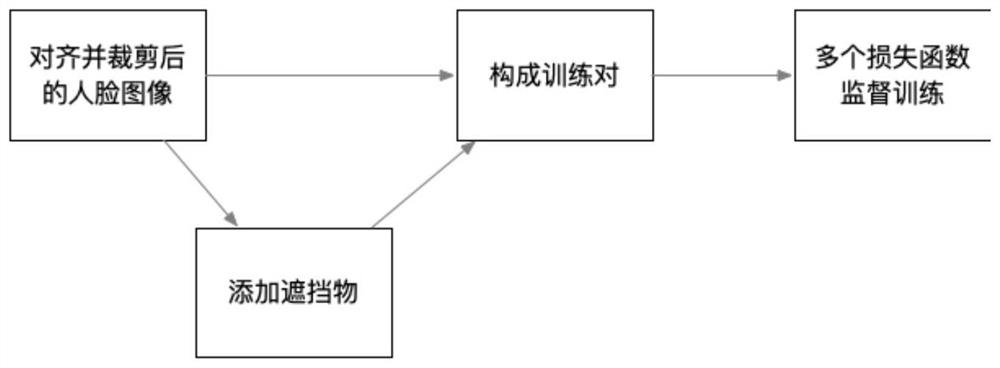

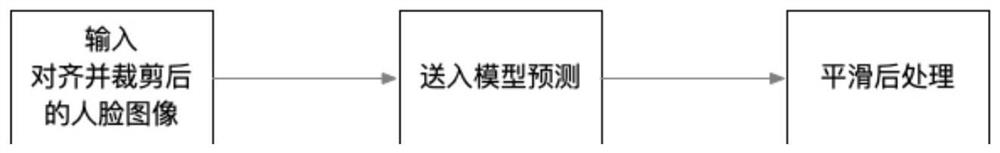

[0050] As shown in Figure 1 and Figure 2, this embodiment provides a face visible region analysis and segmentation method, which includes the following steps:

[0051] A. Obtain the face region image of the sample image to serve as the initial image;

[0052] B. Randomly select more than one occluder from the material library, and add the occluder to a random area of the face region image to obtain an occluded image;

[0053] C. Form an image pair from the initial image and the occluded image (see Figure 3-a and Figure 3-b), and train a U-Net network on each image of the pair to obtain a face parsing model;

[0054] D. Run the face parsing model on the image to be processed (as shown in Figure 2) to obtain the segmentation result map of the image to be processed (see Figure 4-a and Figure 4-b).
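Steps A to C can be sketched as a small data-augmentation routine. This is a minimal sketch under stated assumptions, not the patent's implementation: the function names, the `count` parameter, the rectangular paste without alpha blending, and the visibility mask returned alongside the image pair are all hypothetical. The excerpt only states that the initial and occluded images form a pair, so the mask is included purely as one plausible training target.

```python
import numpy as np


def paste_occluders(face, occluders, rng):
    """Paste each occluder at a random position of a copy of `face`.

    Returns the occluded image and a visibility mask (1.0 = face pixel still
    visible, 0.0 = covered). The mask is an assumed training target.
    """
    height, width = face.shape[:2]
    occluded = face.copy()
    visible = np.ones((height, width), dtype=np.float32)
    for patch in occluders:
        ph, pw = patch.shape[:2]
        if ph > height or pw > width:
            raise ValueError("occluder is larger than the face image")
        # The upper bound of rng.integers is exclusive, so the patch always fits.
        top = int(rng.integers(0, height - ph + 1))
        left = int(rng.integers(0, width - pw + 1))
        occluded[top:top + ph, left:left + pw] = patch
        visible[top:top + ph, left:left + pw] = 0.0
    return occluded, visible


def make_image_pair(face, material_library, count, rng):
    """Steps A to C: pick `count` occluders at random and build one image pair."""
    chosen = rng.choice(len(material_library), size=count, replace=False)
    occluders = [material_library[i] for i in chosen]
    occluded, visible = paste_occluders(face, occluders, rng)
    return face, occluded, visible
```

Calling `make_image_pair(face, library, 2, np.random.default_rng(0))` returns the untouched initial image, the occluded copy, and the mask. A real material library would more likely hold cut-out objects with transparency, which this sketch does not model.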

[0055] In this embodiment, the segmentation r...

Example 2

[0073] Second embodiment (face makeup method)

[0074] Accurate face parsing is very important for face-centered analysis and can be applied in fields such as facial expression analysis, virtual reality, and makeup special effects. Building on the face visible region analysis and segmentation method, this embodiment also provides a face makeup method, which includes the following steps:

[0075] Obtain the segmentation result map (as shown in Figure 10) of the image to be processed (as shown in Figure 8);

[0076] Superimpose the segmentation result map on the image to be processed to obtain the region to which makeup is to be applied (as shown in Figure 11);

[0077] Superimpose the makeup material map (as shown in Figure 9) on the region to which makeup is to be applied to obtain a makeup effect map (as shown in Figure 12).
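The two superimposition steps can be illustrated with a per-pixel blend. This is a minimal sketch, assuming that "superimposed" means alpha blending, that all arrays are floats in the range 0 to 1, and that a higher segmentation value means a more visible (unoccluded) face pixel. The function name `apply_makeup` and the separate `makeup_alpha` coverage map are hypothetical and do not come from the patent text.

```python
import numpy as np


def apply_makeup(image, seg_map, makeup_rgb, makeup_alpha):
    """Blend a makeup layer onto the visible face region.

    image, makeup_rgb: float arrays of shape (H, W, 3) with values in [0, 1].
    seg_map, makeup_alpha: float arrays of shape (H, W) with values in [0, 1].
    """
    # Region to receive makeup: makeup coverage limited to visible face pixels.
    weight = (seg_map * makeup_alpha)[..., None]
    # Occluded pixels (weight near 0) keep the original image colors.
    blended = image * (1.0 - weight) + makeup_rgb * weight
    return np.clip(blended, 0.0, 1.0)
```

Because the weight is the product of the segmentation value and the makeup coverage, makeup is suppressed wherever the face is predicted to be covered, for example by a hand or hair, which is the effect the segmentation step is meant to enable.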

[0078] Wherein, the segmentation result image is a grayscale image, and the range of each pixel point is 0-1, 1 represents the confidence of no...

Example 3

[0080] Third Embodiment (Mobile Terminal with Image Processing Function or Virtual Makeup Function)

[0081] This embodiment also provides a mobile terminal. The mobile terminal includes a memory, a processor, and an image processing program that is stored in the memory and can run on the processor. When the image processing program is executed by the processor, it implements the steps of the face visible region analysis and segmentation method described in any one of the above and/or the steps of the face makeup method described above.

[0082] The mobile terminal may be a terminal with a camera function, such as a mobile phone, a digital camera, or a tablet computer, or a terminal with an image processing function, or a terminal with an image display function. The mobile terminal may include components such as a memory, a processor, an input unit, a display unit, and a power supply.

[0083] Wherein, the memory can be used to store soft...
