Multi-parallel strategy convolutional network accelerator based on FPGA

A convolutional network accelerator technology, applied in the field of network computing, that addresses computational redundancy, high computational complexity, and low implementation efficiency. It achieves high parallel processing efficiency and improves computation speed.

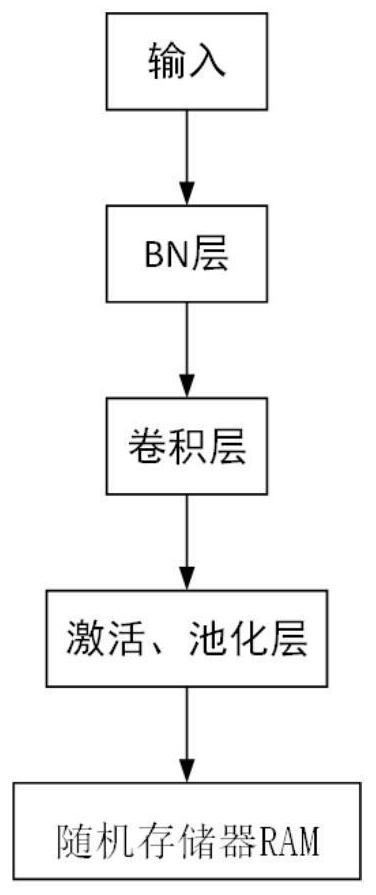

Examples

Embodiment 1

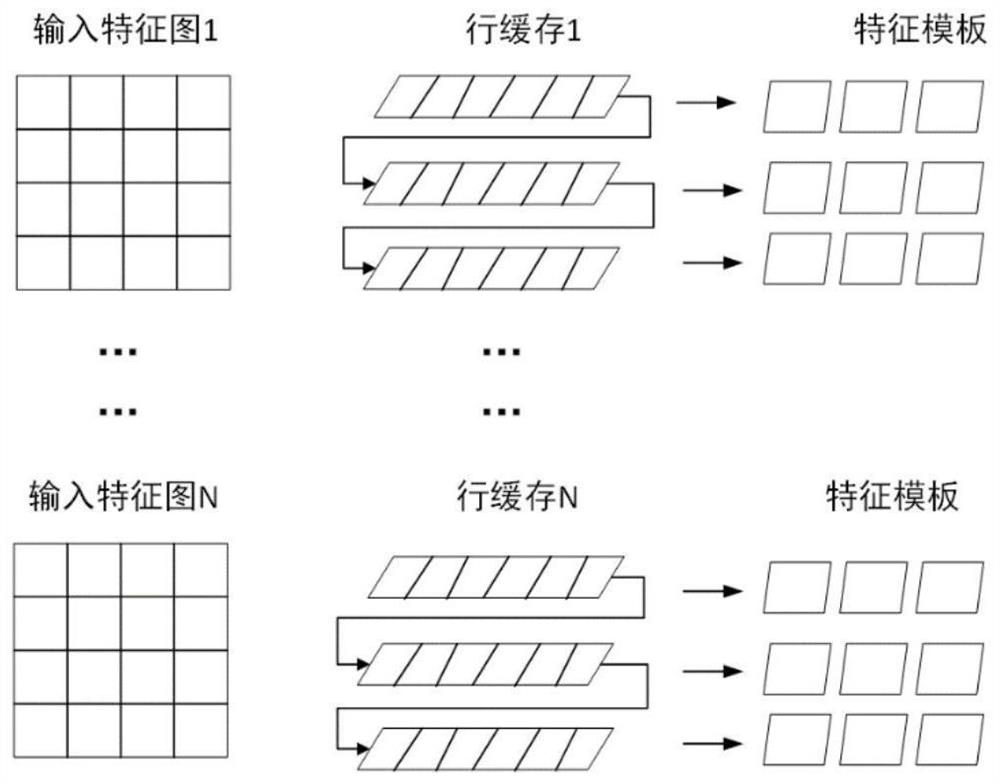

[0042] As shown in Figure 2, input parallelism uses feature templates to process N input feature maps in parallel. The input feature maps enter the line buffers in raster order (row by row, column by column). When a line buffer is full, the data of the previous row is shifted into the next line buffer, and as pixels stream through, a block of data the size of the feature template becomes available at the outputs of the line buffers;
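The line-buffer mechanism described in [0042] can be sketched in software. The following is a minimal behavioral model, not the patent's hardware design: the function name `sliding_windows`, the zero-initialized buffers, and the use of `k - 1` chained buffers for a `k`×`k` template are assumptions made for illustration.

```python
from collections import deque


def sliding_windows(image, k=3):
    """Stream `image` (a list of equal-length rows) in raster order and
    yield (top_row, left_col, window) for each full k x k window.

    Hypothetical model: k - 1 chained line buffers, each one row long.
    """
    height, width = len(image), len(image[0])
    # Each line buffer is a FIFO holding exactly one row of pixels.
    line_buffers = [deque([0] * width, maxlen=width) for _ in range(k - 1)]
    # k x k window registers; each row shifts left as a new column arrives.
    window = [[0] * k for _ in range(k)]

    for r in range(height):
        for c in range(width):
            pixel = image[r][c]
            # The head of buffer i holds the pixel (i + 1) rows above `pixel`
            # in the same column, so the incoming column reads top to bottom:
            column = [line_buffers[i][0] for i in range(k - 2, -1, -1)]
            column.append(pixel)
            # Pass each buffer's oldest pixel into the next buffer
            # (deepest buffer first), then push the new pixel into buffer 0.
            for i in range(k - 2, 0, -1):
                line_buffers[i].append(line_buffers[i - 1][0])
            if k > 1:
                line_buffers[0].append(pixel)
            # Shift the new column into the window registers.
            for i in range(k):
                window[i] = window[i][1:] + [column[i]]
            # The window is valid once k rows and k columns have been seen.
            if r >= k - 1 and c >= k - 1:
                yield r - k + 1, c - k + 1, [row[:] for row in window]
```

Each pixel is read from the input only once; the buffers supply the rows above it, which is what lets a template-sized block appear at the buffer outputs on every cycle.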

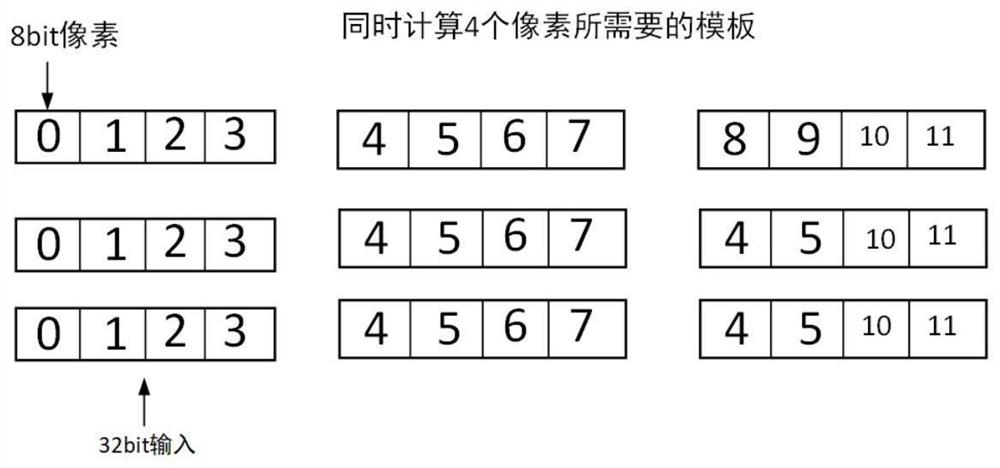

[0043] As shown in Figure 3, pixel parallelism completes the convolution of multiple consecutive pixels at the same time, using 8-bit pixels; the top-level interface takes a 32-bit input, and with a feature template of size 3×3, the input feature map data required to convolve 4 pixels can be stored at the same time.
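As an illustration of the arithmetic in [0043], a 32-bit word carries four 8-bit pixels, and four horizontally adjacent 3×3 windows together span a 3×6 patch of the input. The sketch below assumes stride 1 and least-significant-byte-first packing; neither is stated in the source, and the function names `unpack_pixels` and `conv_four_pixels` are hypothetical.

```python
def unpack_pixels(word):
    """Split a 32-bit word into four 8-bit pixels.

    Assumption: the least significant byte is the first pixel.
    """
    return [(word >> (8 * i)) & 0xFF for i in range(4)]


def conv_four_pixels(patch, kernel):
    """Compute 4 adjacent convolution outputs from one shared patch.

    patch:  3 rows x 6 columns of input pixels (assumes stride 1).
    kernel: 3 x 3 feature template.
    In hardware the four sums would be computed concurrently; this loop
    only models the data each one reads.
    """
    k = len(kernel)
    outputs = []
    for p in range(4):  # one iteration per parallel output pixel
        acc = 0
        for i in range(k):
            for j in range(k):
                acc += patch[i][p + j] * kernel[i][j]
        outputs.append(acc)
    return outputs
```

Because neighboring windows overlap in two of their three columns, the four outputs share most of the patch, so reading 6 columns serves 4 results instead of reading 3 columns per result.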

[0044] As shown in Figure 4, output parallelism can process N input feature maps in parallel, and the same input feature map is convolved with the weights of N groups of output channels...
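The weight-sharing side of [0044], where one input window is reused against N groups of output-channel weights, can be modeled roughly as follows. This is a sketch under assumptions: the function name `output_parallel_mac` is hypothetical, and the sequential loop stands in for N multiply-accumulate units that would run concurrently in hardware.

```python
def output_parallel_mac(window, kernels):
    """Apply N kernels to the same input window.

    window:  k x k block of input pixels.
    kernels: list of N k x k weight templates, one per output channel.
    Returns one accumulated value per output channel.
    """
    results = []
    for kernel in kernels:  # each iteration models one parallel MAC unit
        acc = 0
        for i, kernel_row in enumerate(kernel):
            for j, weight in enumerate(kernel_row):
                acc += window[i][j] * weight
        results.append(acc)
    return results
```

The window is fetched once and broadcast to every output channel, so adding channels increases the multiplier count but not the input bandwidth.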
