Asymmetric GM multi-modal fusion saliency detection method and system based on CWAM

A detection method and detection system, applied in the field of deep-learning-based visual saliency detection, that address the problems of low accuracy, poor-quality saliency prediction maps, and loss of image feature information, and achieve the effect of enhanced expression.

Embodiment 1

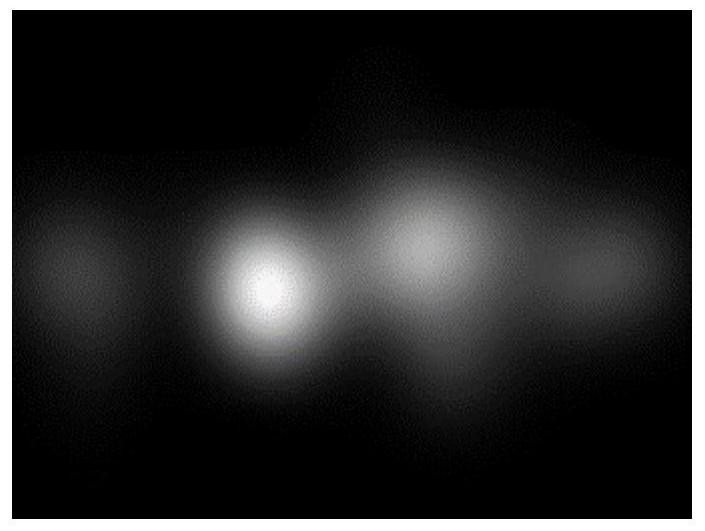

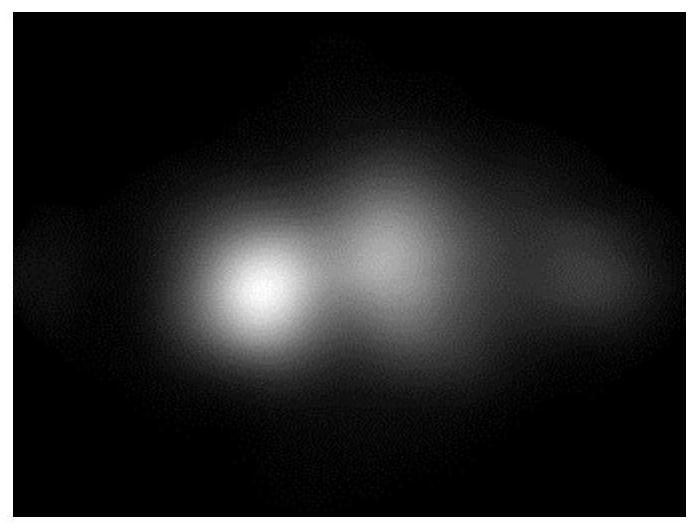

[0037] Image saliency detection reduces the original image to its salient regions and marks them, which provides accurate localization for subsequent processing such as image segmentation, recognition, and scaling. It has a wide range of application prospects in capture and other fields. In recent years, with the rise of big data and deep learning technologies, convolutional neural networks (CNNs) have shown superior performance in detecting salient objects in images. Through classification and regression, convolutional neural networks achieve better localization and capture of the boundary information of salient objects.

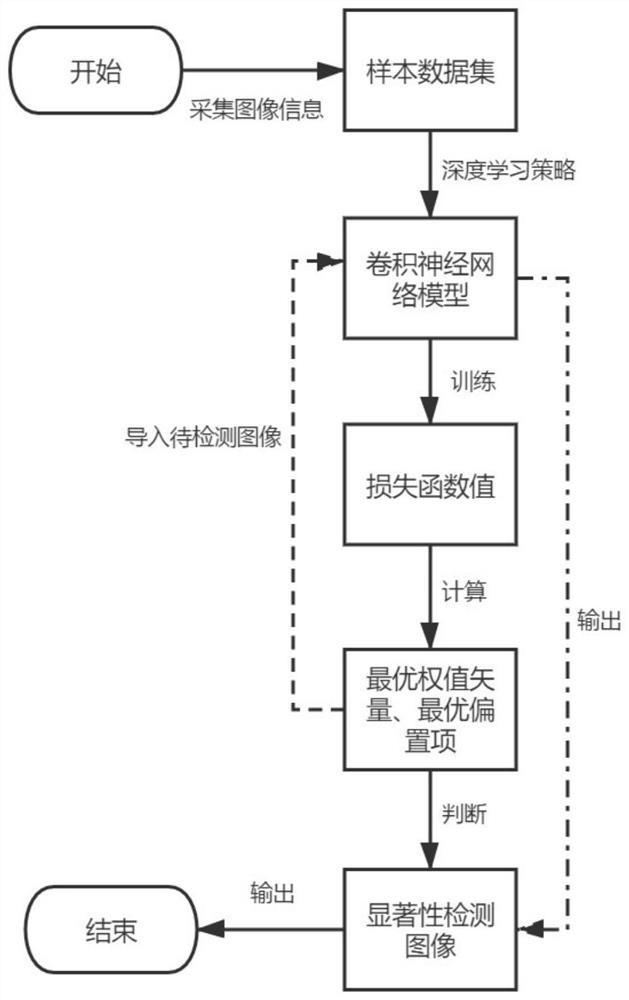

[0038] Referring to Figures 1 to 5, the first embodiment of the present invention provides a CWAM-based asymmetric GM multimodal fusion saliency detection method, including:

[0039] S1: Collect image data and preprocess it to form a sample data set. It should be noted that:

[0040] ...

Embodiment 2

[0066] Referring to Figure 7, the second embodiment of the present invention differs from the first embodiment in that it provides a CWAM-based asymmetric GM multimodal fusion saliency detection system, including:

[0067] The acquisition module 100 acquires the RGB image, the depth image, and the ground-truth human eye annotation image of the original three-dimensional image, and constructs a sample data set.

[0068] The data processing center module 200 receives, computes, stores, and outputs the weight vectors and bias terms to be processed. It includes a computing unit 201, a database 202, and an input/output management unit 203. The computing unit 201 is connected to the acquisition module 100; it receives the image data acquired by the acquisition module 100 and performs preprocessing and weight calculation on it. The database 202 is connected to each module and stores all the data information received, and p...
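As a rough illustration of how the acquisition module 100 might assemble its sample data set, the following minimal sketch pairs each RGB image with its depth image and human eye annotation image and checks that their sizes agree. The names `Sample`, `grid_shape`, and `build_sample_dataset`, as well as the nested-list image representation, are hypothetical and not taken from the patent; the size check is an assumption about what a reasonable pairing step would verify.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical representations: an RGB image is rows of (r, g, b) tuples,
# a single-channel map (depth or annotation) is rows of numbers.
RgbImage = List[List[Tuple[int, int, int]]]
Grid = List[List[float]]


@dataclass(frozen=True)
class Sample:
    """One entry of the sample data set (field names are illustrative)."""
    rgb: RgbImage
    depth: Grid
    annotation: Grid


def grid_shape(grid: Sequence[Sequence[object]]) -> Tuple[int, int]:
    """Return (height, width) of a row-major nested list."""
    return (len(grid), len(grid[0]) if grid else 0)


def build_sample_dataset(
    rgbs: Sequence[RgbImage],
    depths: Sequence[Grid],
    annotations: Sequence[Grid],
) -> List[Sample]:
    """Pair the three image types index by index into samples."""
    if not (len(rgbs) == len(depths) == len(annotations)):
        raise ValueError("each RGB image needs one depth and one annotation image")
    dataset = []
    for rgb, depth, annotation in zip(rgbs, depths, annotations):
        # Assumed sanity check: all three images describe the same H x W scene.
        if not (grid_shape(rgb) == grid_shape(depth) == grid_shape(annotation)):
            raise ValueError("RGB, depth and annotation sizes differ")
        dataset.append(Sample(rgb, depth, annotation))
    return dataset
```

A caller would pass three equally long lists of images and receive one `Sample` per triple; a mismatch in count or size raises `ValueError` rather than silently producing misaligned training data.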

Embodiment 3

[0075] To better illustrate the application of the method of the present invention, this embodiment describes the combined operation of the detection method and system, with reference to Figure 6, as follows:

[0076] (1) The convolutional neural network includes an input layer, a hidden layer, and an output layer.

[0077] The input layer receives the RGB image of the original stereo image and the corresponding depth map, and outputs the R channel component, the G channel component, and the B channel component of the original input image; the output of the input layer is the input of the hidden layer. The depth map, after HHA encoding, has three channels like the RGB image, that is, it is also split into three components after passing through the input layer. The input original stereo image has a width of W and a height of H;
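The channel decomposition described above can be sketched as follows. This is only an illustrative example: the function name `split_channels` and the nested-list image layout are assumptions, and the HHA values are placeholders standing in for a depth map that has already been HHA-encoded (the encoding itself is not implemented here).

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image3 = List[List[Pixel]]   # H rows, each with W three-channel pixels
Plane = List[List[int]]      # one H x W channel component


def split_channels(image: Image3) -> Tuple[Plane, Plane, Plane]:
    """Split an H x W three-channel image into three H x W components."""
    first = [[px[0] for px in row] for row in image]
    second = [[px[1] for px in row] for row in image]
    third = [[px[2] for px in row] for row in image]
    return first, second, third


# Toy RGB image with height H = 2 and width W = 3.
rgb = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    [(10, 20, 30), (40, 50, 60), (70, 80, 90)],
]
# Placeholder values for an HHA-encoded depth map of the same size;
# real HHA channels would be computed from the depth map beforehand.
hha = [
    [(1, 2, 3), (4, 5, 6), (7, 8, 9)],
    [(9, 8, 7), (6, 5, 4), (3, 2, 1)],
]

r, g, b = split_channels(rgb)      # R, G and B channel components
h1, h2, h3 = split_channels(hha)   # three components of the encoded depth map
```

Because both inputs have three channels and the same W x H size, the same splitting routine serves both modalities, and each resulting component keeps the original height and width.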

[0078] The components of the hidden laye...
