Deep neural network-based method for locating and reconstructing targets through a scattering medium

The invention relates to deep neural networks and target positioning technology in the field of machine learning and image reconstruction. It addresses the problem that the spatial position of a target seen through a scattering medium cannot be measured, and it improves positioning accuracy and imaging quality, offering good performance and strong constraint ability.

Embodiment Construction

[0033] The present invention is now described in further detail with reference to the accompanying drawings. These drawings are simplified schematic diagrams that illustrate only the basic structure of the invention, and they therefore show only the configurations relevant to it.

[0034] A deep neural network-based method for locating and reconstructing a target through a scattering medium comprises the following steps:

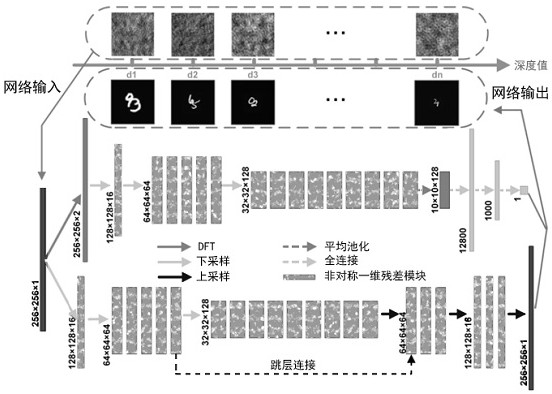

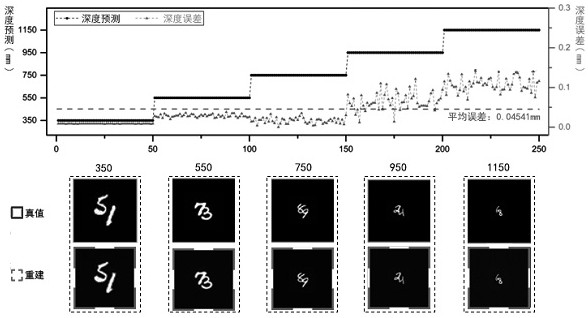

[0035] Step 1. Build DINet, a multi-task depth prediction and image reconstruction network, to learn the statistical model of the speckle patterns generated by objects at different positions;
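As a rough illustration of how a multi-task network such as DINet could be trained jointly on both tasks, the sketch below combines a depth-regression loss and an image-reconstruction loss into one scalar objective. The source does not state DINet's loss function: the weighted-sum form, the function names `mse` and `multitask_loss`, and the weights `w_depth` and `w_recon` are all hypothetical, and images are represented as flattened pixel lists so the example needs no third-party libraries.

```python
# Hypothetical sketch: the source does not specify DINet's loss. This assumes a
# weighted sum of a depth-regression term and an image-reconstruction term.
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length, non-empty sequences."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(
    depth_pred: float,
    depth_true: float,
    image_pred: Sequence[float],
    image_true: Sequence[float],
    w_depth: float = 1.0,  # hypothetical weight for the depth-prediction task
    w_recon: float = 1.0,  # hypothetical weight for the reconstruction task
) -> float:
    """Combine both task losses so one backward pass trains the shared network."""
    depth_loss = (depth_pred - depth_true) ** 2   # squared depth error
    recon_loss = mse(image_pred, image_true)      # per-pixel reconstruction error
    return w_depth * depth_loss + w_recon * recon_loss


if __name__ == "__main__":
    # Depth off by 0.5; second pixel off by 0.5; reconstruction weighted twice.
    print(multitask_loss(10.5, 10.0, [0.0, 1.0], [0.0, 0.5], w_depth=1.0, w_recon=2.0))
```

The weights control the trade-off between positioning accuracy and image quality; how (or whether) DINet balances its tasks is not described in this excerpt.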

[0036] The system configuration is shown in Figures 1-3. It is used to acquire the experimental image data and the distance between the object and the scattering medium. Figure 2 is an unfolded diagram of the optical path of the experimental system, showing the distances involved. The network structure ...
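Why the speckle pattern depends on object position can be illustrated with a common thin-scatterer, optical memory-effect model, which the source does not itself state. Within the memory-effect range, the camera records the object convolved with a speckle point-spread function, and the object appears scaled by the magnification M = v/u. Here u is the object-to-medium distance and v is the medium-to-camera distance in the unfolded optical path. The helper names below are hypothetical. The sketch shows only how a change in u rescales the recorded pattern, and how u could in principle be recovered when the object size is known.

```python
# Hypothetical sketch assuming a thin scattering layer and the optical memory
# effect; the source does not specify this physical model.


def speckle_magnification(u_mm: float, v_mm: float) -> float:
    """Magnification M = v / u of the object within the recorded speckle pattern.

    u_mm: distance from the object to the scattering medium (hypothetical name).
    v_mm: distance from the scattering medium to the camera (hypothetical name).
    """
    if u_mm <= 0 or v_mm <= 0:
        raise ValueError("distances must be positive")
    return v_mm / u_mm


def object_distance_from_scale(object_size_mm: float, imaged_size_mm: float,
                               v_mm: float) -> float:
    """Invert M = imaged / object = v / u to estimate the object distance u."""
    if object_size_mm <= 0 or imaged_size_mm <= 0 or v_mm <= 0:
        raise ValueError("sizes and distances must be positive")
    return v_mm * object_size_mm / imaged_size_mm


if __name__ == "__main__":
    # Doubling the object distance halves the object's scale on the camera.
    for u in (100.0, 200.0):
        print(u, speckle_magnification(u, 50.0))
```

The closed-form inversion requires the object size, which is generally unknown for a hidden target. A learned model that infers depth from the speckle statistics is one way around that requirement.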
