Neural network compression method and device, computer equipment and storage medium

A neural network compression technology, applied in the field of neural network compression methods, devices, computer equipment and storage media, which addresses the problem of the low accuracy of the student network.


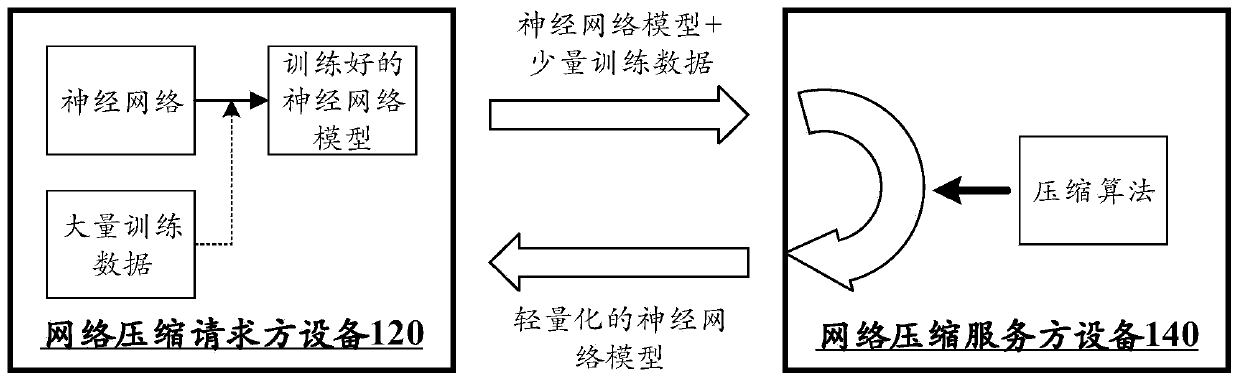


[0168] target sparsity r′;

[0169] Output:

[0170] lightweight student network F_S

[0171]

[0172]
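The listing above takes a target sparsity r′ as input and outputs a lightweight student network F_S, but the steps in between are not shown here. To make "target sparsity" concrete, the sketch below assumes plain magnitude pruning (zeroing the smallest-magnitude weights until a fraction r′ of them are zero). This is a swapped-in, commonly used technique chosen for illustration, not necessarily the pruning rule used in this application; `magnitude_prune` and `sparsity` are hypothetical helper names.

```python
import math
from typing import List


def magnitude_prune(weights: List[float], target_sparsity: float) -> List[float]:
    """Hypothetical helper: zero the smallest-magnitude weights so that a
    fraction `target_sparsity` (playing the role of r') of them become zero.

    Assumption: magnitude pruning is used only for illustration; the
    application's own pruning procedure is not reproduced here.
    """
    if not 0.0 <= target_sparsity <= 1.0:
        raise ValueError("target_sparsity must lie in [0, 1]")
    n_prune = math.floor(target_sparsity * len(weights))
    # Indices ordered by absolute weight value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def sparsity(weights: List[float]) -> float:
    """Fraction of weights that are exactly zero."""
    if not weights:
        return 0.0
    return sum(1 for w in weights if w == 0.0) / len(weights)


if __name__ == "__main__":
    w = [0.5, -0.1, 0.03, -0.8, 0.2]
    print(magnitude_prune(w, 0.4))  # the two smallest |w| are zeroed
```

With r′ = 0.4 and five weights, two weights (0.03 and -0.1) are zeroed, so the measured sparsity of the result equals the target.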

[0173] For network compression with very little data, in addition to the schemes provided in steps 404 to 406 above, another possible technical alternative is to replace the cross-distillation connection between the student network and the teacher network with data augmentation on the feature maps of their hidden layers. This augmentation includes adding Gaussian noise to the feature maps, linearly interpolating between the feature maps corresponding to different inputs to obtain more intermediate data, and rotating and scaling the feature maps to obtain diverse, generalized intermediate signals, which enhances the generalization ability of the model.
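The four feature-map augmentations named in [0173] can be sketched as follows. This is a minimal illustration under stated assumptions: a feature map is modeled as a single-channel 2-D list of floats, rotation is fixed at 90 degrees clockwise, scaling uses nearest-neighbour resampling, and the interpolation weight is drawn uniformly from [0, 1). All function names (`add_gaussian_noise`, `interpolate`, `rotate90`, `scale_nearest`, `augment`) are hypothetical and do not come from the application.

```python
import random
from typing import List

FeatureMap = List[List[float]]  # assumption: one channel, H x W


def add_gaussian_noise(fmap: FeatureMap, sigma: float, rng: random.Random) -> FeatureMap:
    # Add zero-mean Gaussian noise with standard deviation sigma to every element.
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in fmap]


def interpolate(fmap_a: FeatureMap, fmap_b: FeatureMap, lam: float) -> FeatureMap:
    # Linear interpolation between feature maps produced by two different inputs.
    return [[lam * a + (1.0 - lam) * b for a, b in zip(ra, rb)]
            for ra, rb in zip(fmap_a, fmap_b)]


def rotate90(fmap: FeatureMap) -> FeatureMap:
    # Rotate the feature map 90 degrees clockwise.
    return [list(row) for row in zip(*fmap[::-1])]


def scale_nearest(fmap: FeatureMap, factor: float) -> FeatureMap:
    # Resize by `factor` using nearest-neighbour sampling.
    h, w = len(fmap), len(fmap[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [[fmap[min(h - 1, int(i / factor))][min(w - 1, int(j / factor))]
             for j in range(new_w)] for i in range(new_h)]


def augment(fmap_a: FeatureMap, fmap_b: FeatureMap,
            sigma: float = 0.1, seed: int = 0) -> List[FeatureMap]:
    # Produce several augmented intermediate signals from two hidden-layer maps.
    rng = random.Random(seed)
    lam = rng.random()
    return [
        add_gaussian_noise(fmap_a, sigma, rng),
        interpolate(fmap_a, fmap_b, lam),
        rotate90(fmap_a),
        scale_nearest(fmap_a, 2.0),
    ]


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    for m in augment(a, b):
        print(m)
```

For a teacher and student trained on the same augmented signals, one plausible choice (an assumption, not stated in the source) is to apply the same transformation and the same random draws to the corresponding hidden-layer maps of both networks, so that their intermediate outputs stay comparable.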

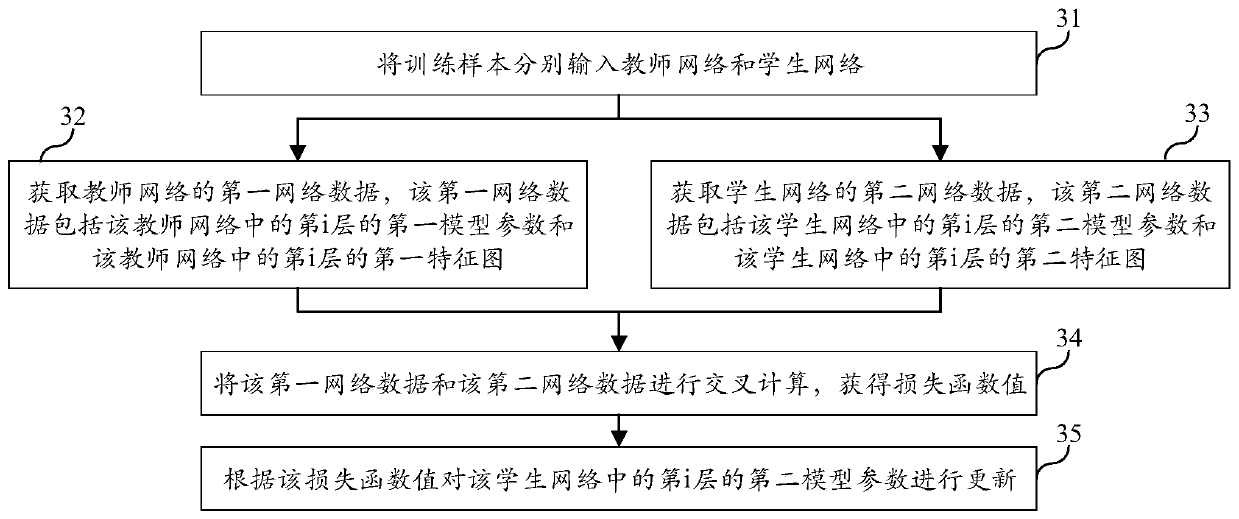

[0174] To sum up, in the scheme shown in the embodiments of this application, by inputting the training samples into the teacher network and the student network respectively, obtaining the first network da...
