Method for realizing neural network model splitting by using multi-core processor and related product

Technical field: neural network models and multi-core processor technology, applied in the field of deep learning.

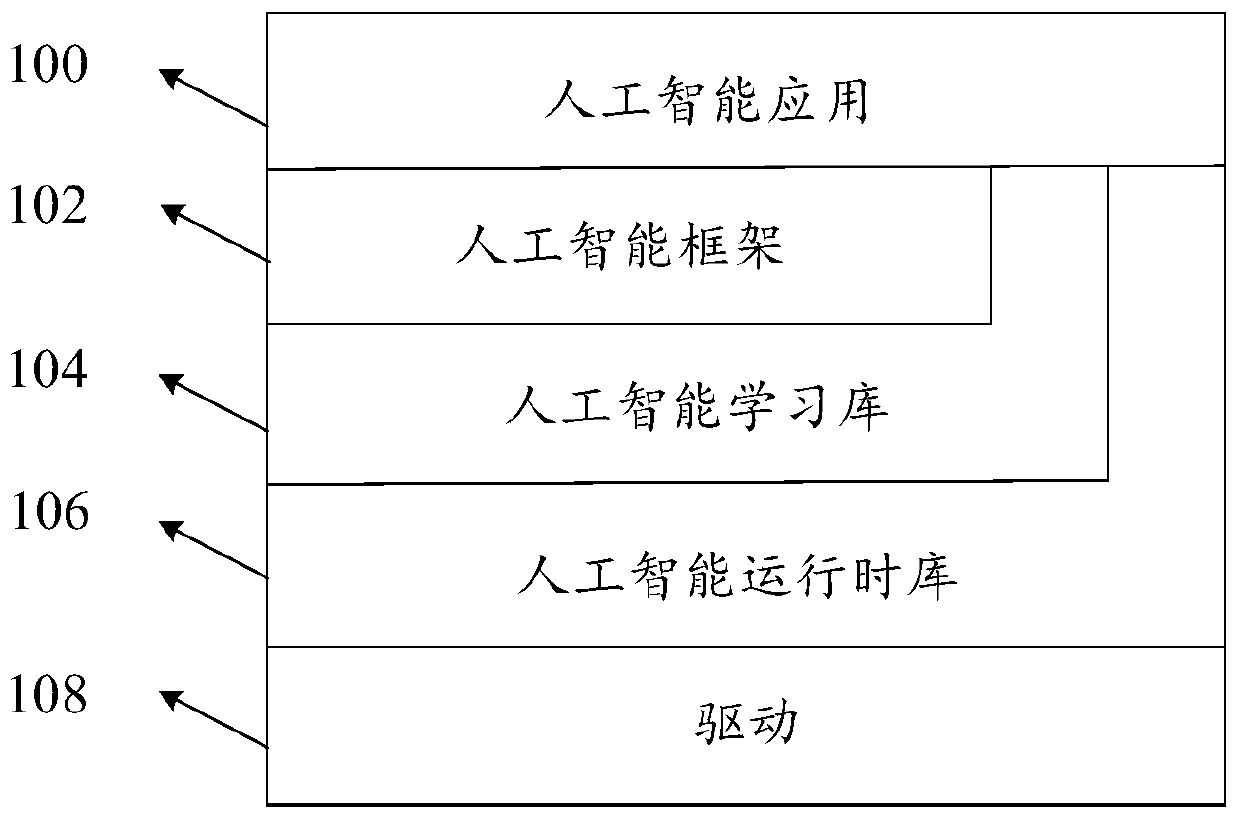

Embodiment Construction

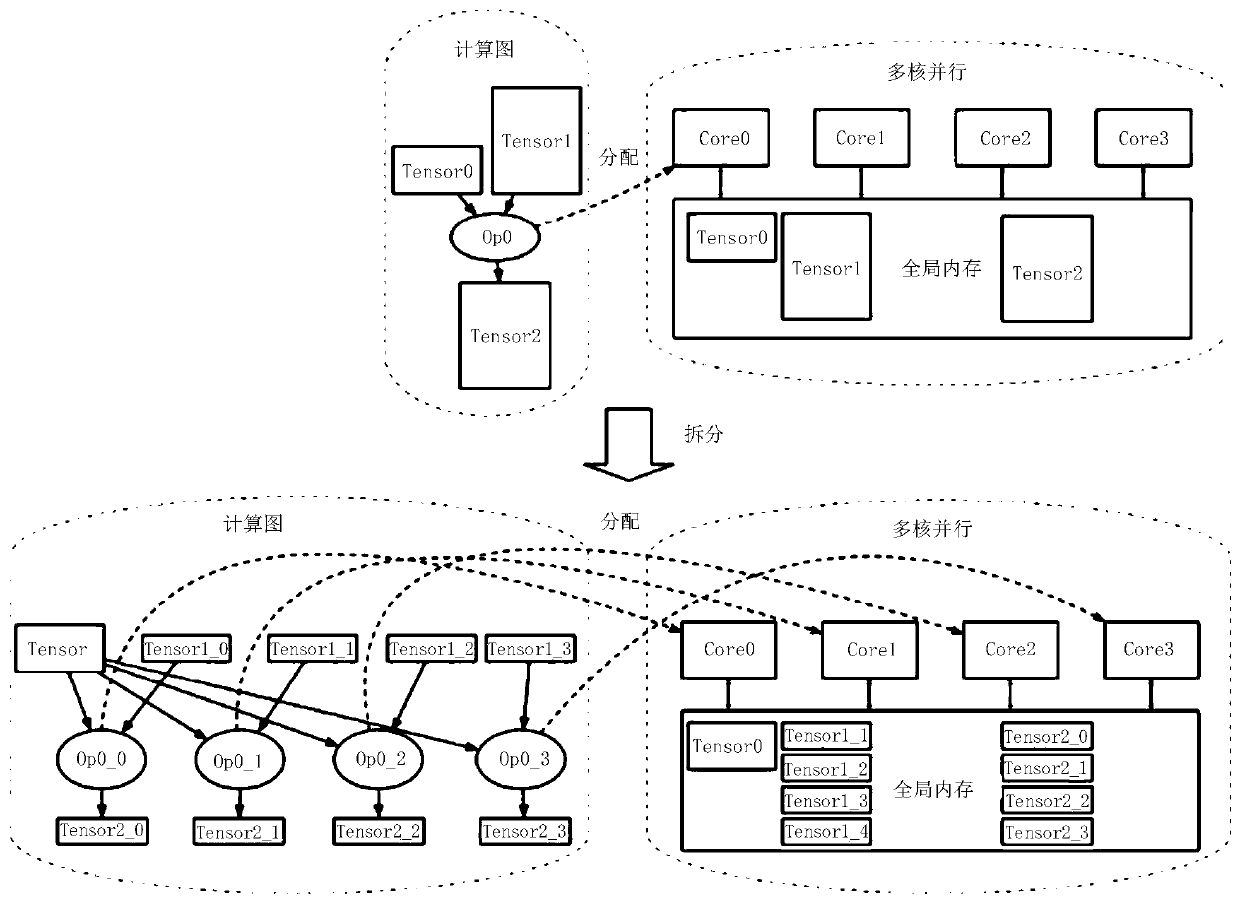

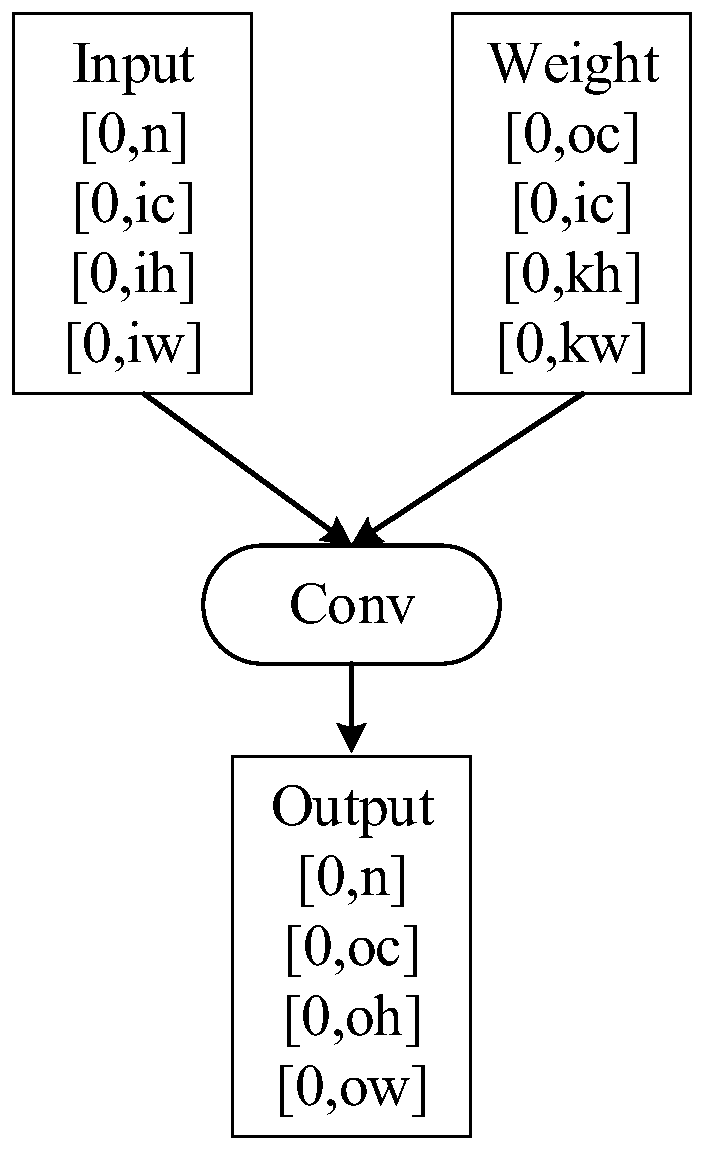

[0083] The technical solutions in the embodiments of the present application will be described below with reference to the drawings in the embodiments of the present application.

[0084] It should be understood that the terms "first", "second" and "third" in the claims, specification and drawings of the present disclosure are used to distinguish different objects, rather than to describe a specific order. The terms "comprising" and "comprises", as used in the specification and claims of this disclosure, indicate the presence of the described features, integers, steps, operations, elements and/or components, but do not exclude the presence or addition of one or more other features, integers, steps, operations, elements, components and/or collections thereof.

[0085] It should also be understood that the terminology used in the description of this disclosure is for the purpose of describing specific embodiments only, and is not intended to limit the present disclosure. As used in this d...