FPGA accelerator of LSTM neural network and acceleration method of FPGA accelerator

A neural network accelerator technology, applied to FPGA accelerators and their acceleration methods, that addresses problems such as load imbalance.

Embodiment Construction

[0015] The above solution will be further described below in conjunction with specific embodiments. It should be understood that these examples are used to illustrate the present invention and not to limit the scope of the present invention.

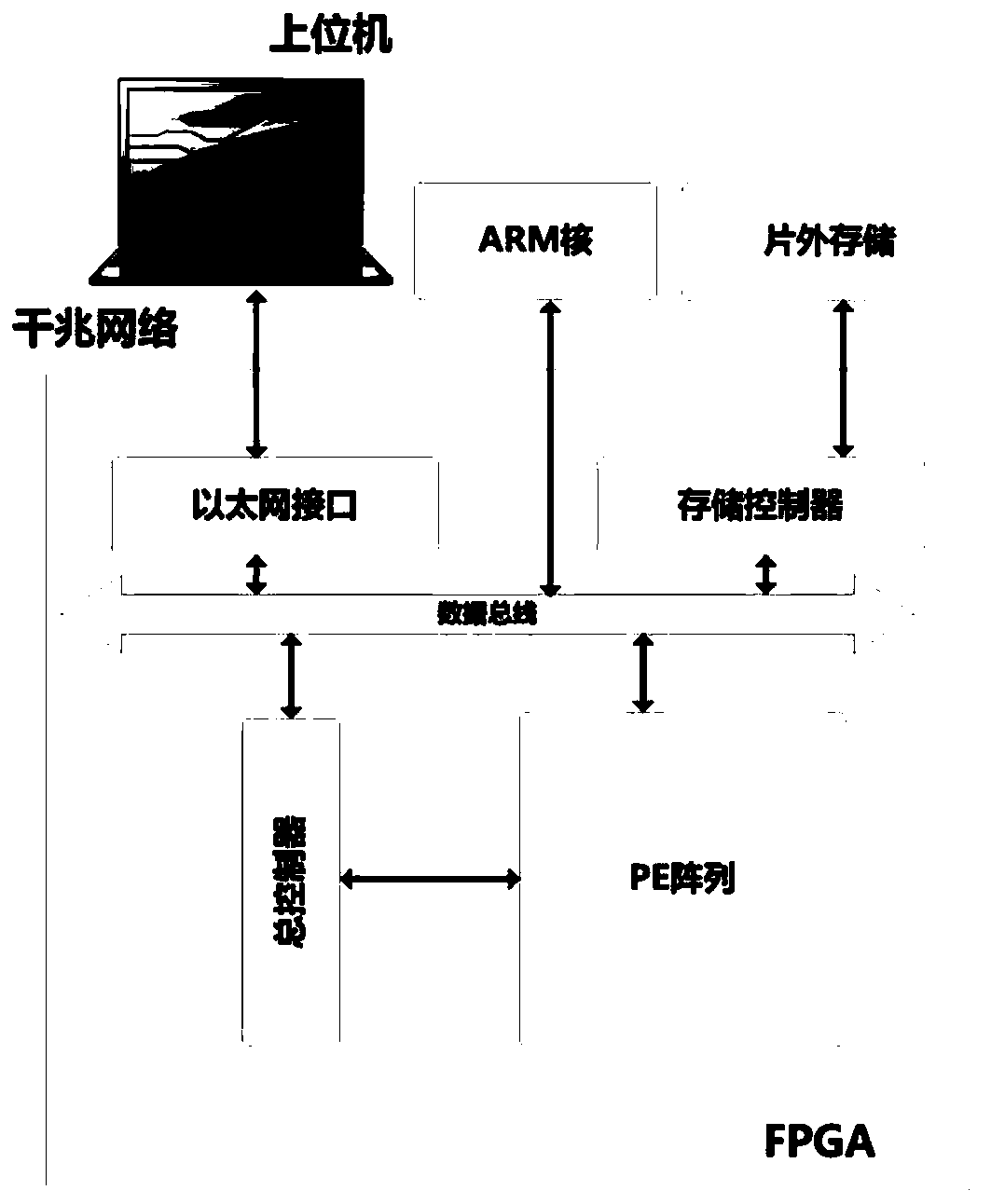

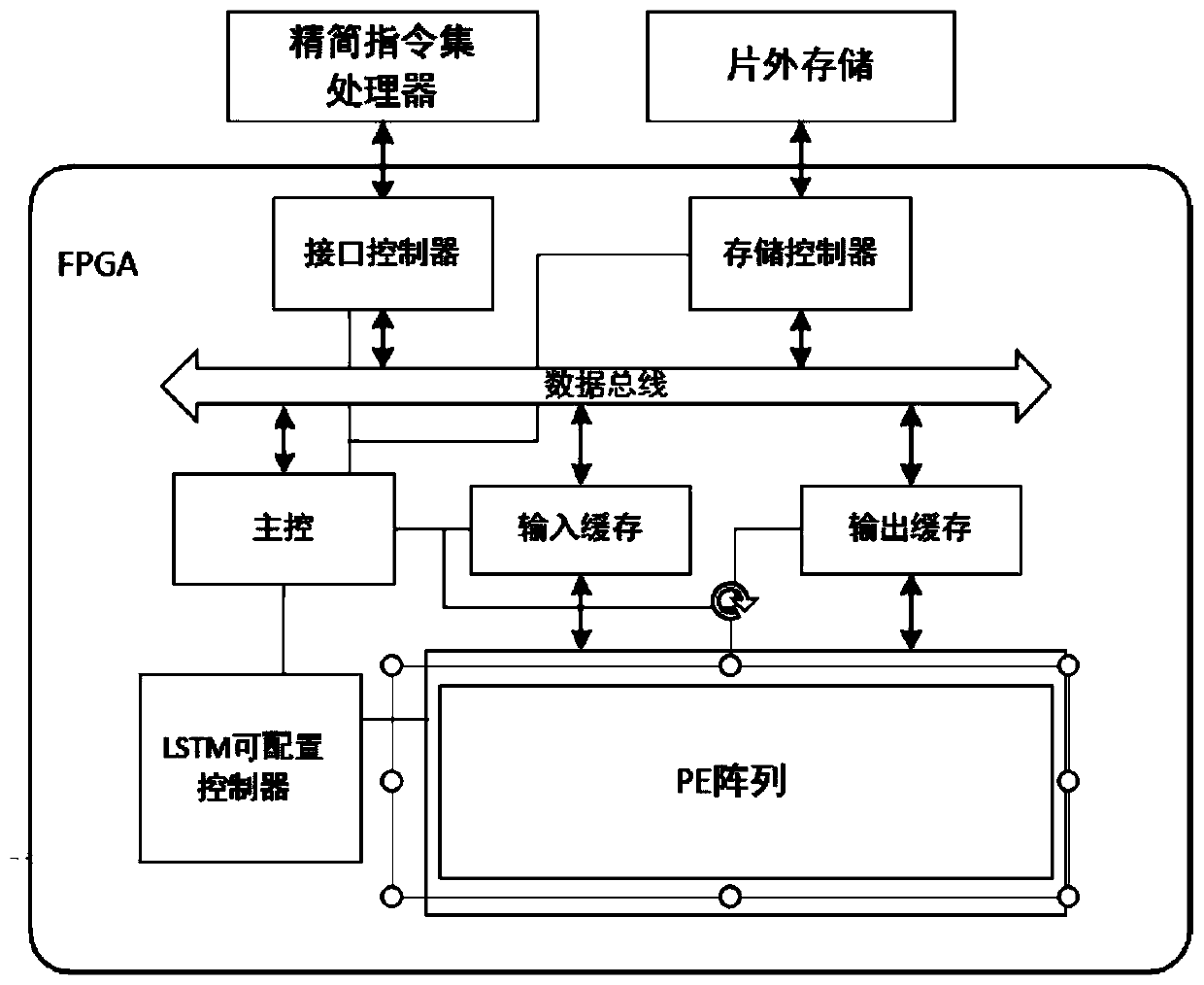

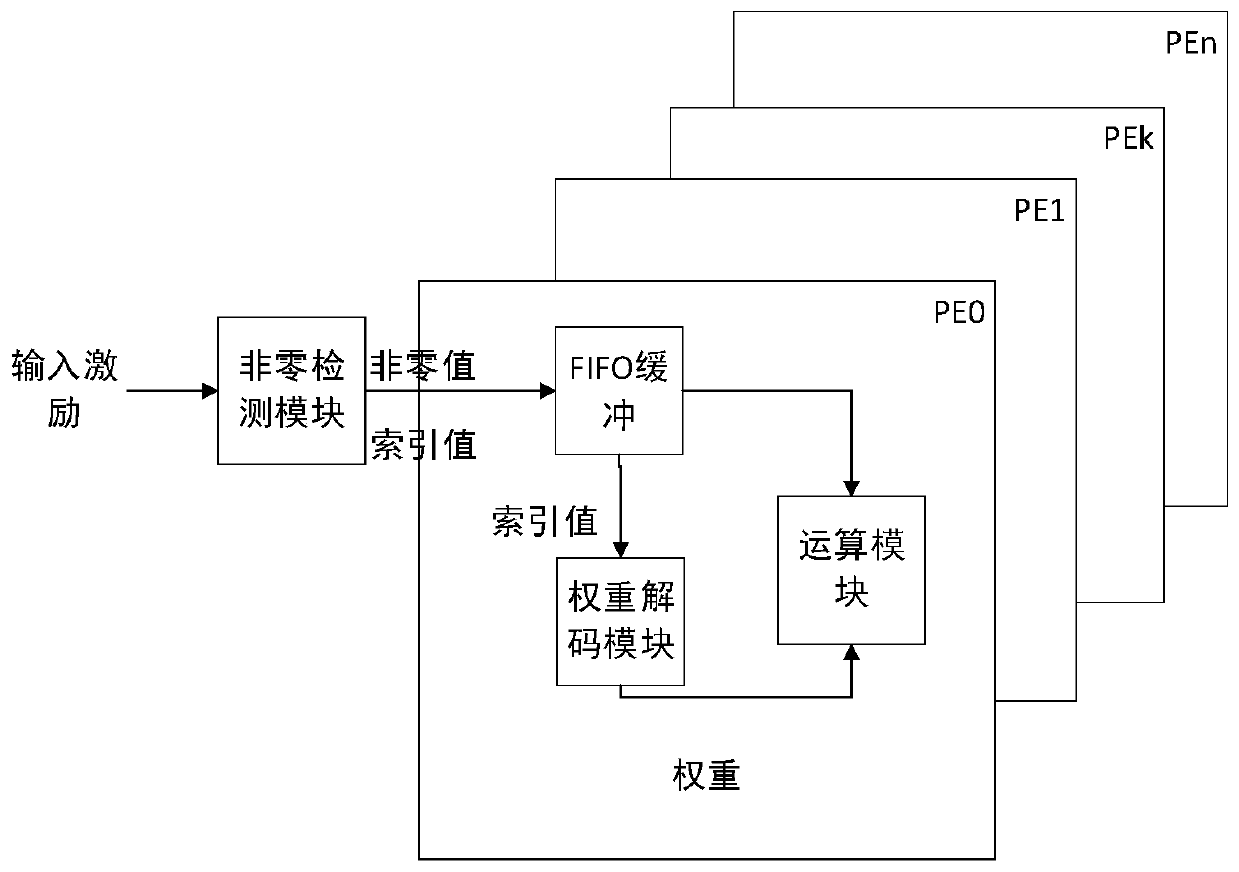

[0016] Figure 1 is a system architecture diagram of an FPGA-based LSTM neural network accelerator designed to optimize system computing performance. When the parameter scale of the original LSTM neural network exceeds the storage resource limit of the FPGA, the model parameters are compressed by pruning and quantization. The compressed neural network model is sparser, and its weight matrix becomes a sparse matrix. To improve parallelism and balance the load across computing units, the rows of the compressed sparse matrix are rearranged so that, from top to bottom, the number of non-zero weights per row gradually decreases. The non-zero weight values are then distributed evenly across the operation units to ensure t...
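The row rearrangement described in paragraph [0016] can be illustrated with a minimal software sketch. The source text is cut off before it specifies how rows are handed to the operation units, so the greedy "least-loaded unit first" assignment below is an assumption made for illustration, not the patented scheme. The function names (`rearrange_rows`, `assign_least_loaded`), the example matrix, and the choice of two units are all hypothetical.

```python
def count_nonzero(row):
    """Number of non-zero weights in one matrix row."""
    return sum(1 for w in row if w != 0)


def rearrange_rows(matrix):
    """Return original row indices ordered by descending non-zero count.

    Python's sort is stable, so rows with equal counts keep their
    original relative order.
    """
    return sorted(range(len(matrix)),
                  key=lambda i: count_nonzero(matrix[i]),
                  reverse=True)


def assign_least_loaded(matrix, order, num_units):
    """Assumed policy: give each row, in sorted order, to the unit
    that currently holds the fewest non-zero weights."""
    units = [[] for _ in range(num_units)]
    loads = [0] * num_units
    for row_idx in order:
        target = min(range(num_units), key=lambda u: loads[u])
        units[target].append(row_idx)
        loads[target] += count_nonzero(matrix[row_idx])
    return units, loads


# Hypothetical pruned weight matrix (zeros are pruned weights).
weights = [
    [0, 2, 0, 0],
    [1, 3, 5, 7],
    [0, 0, 0, 0],
    [4, 0, 6, 0],
    [0, 9, 8, 1],
    [2, 0, 0, 0],
]

order = rearrange_rows(weights)
units, loads = assign_least_loaded(weights, order, num_units=2)
print("row order:", order)
print("rows per unit:", units)
print("non-zero weights per unit:", loads)
```

Because the permutation `order` is kept, the outputs computed on the rearranged rows can be mapped back to their original positions after the matrix-vector product. A hardware design might instead interleave the sorted rows across units in a fixed pattern; the sketch only shows why sorting by non-zero count makes an even split easy to reach.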
