A multi-GPU task scheduling method under a virtualization technology

The method combines virtualization and task scheduling techniques for multi-GPU task scheduling. It addresses the problems of uneven load, high communication overhead, and low throughput and transmission rate, and achieves load balancing and improved scheduling efficiency.

Embodiment 1

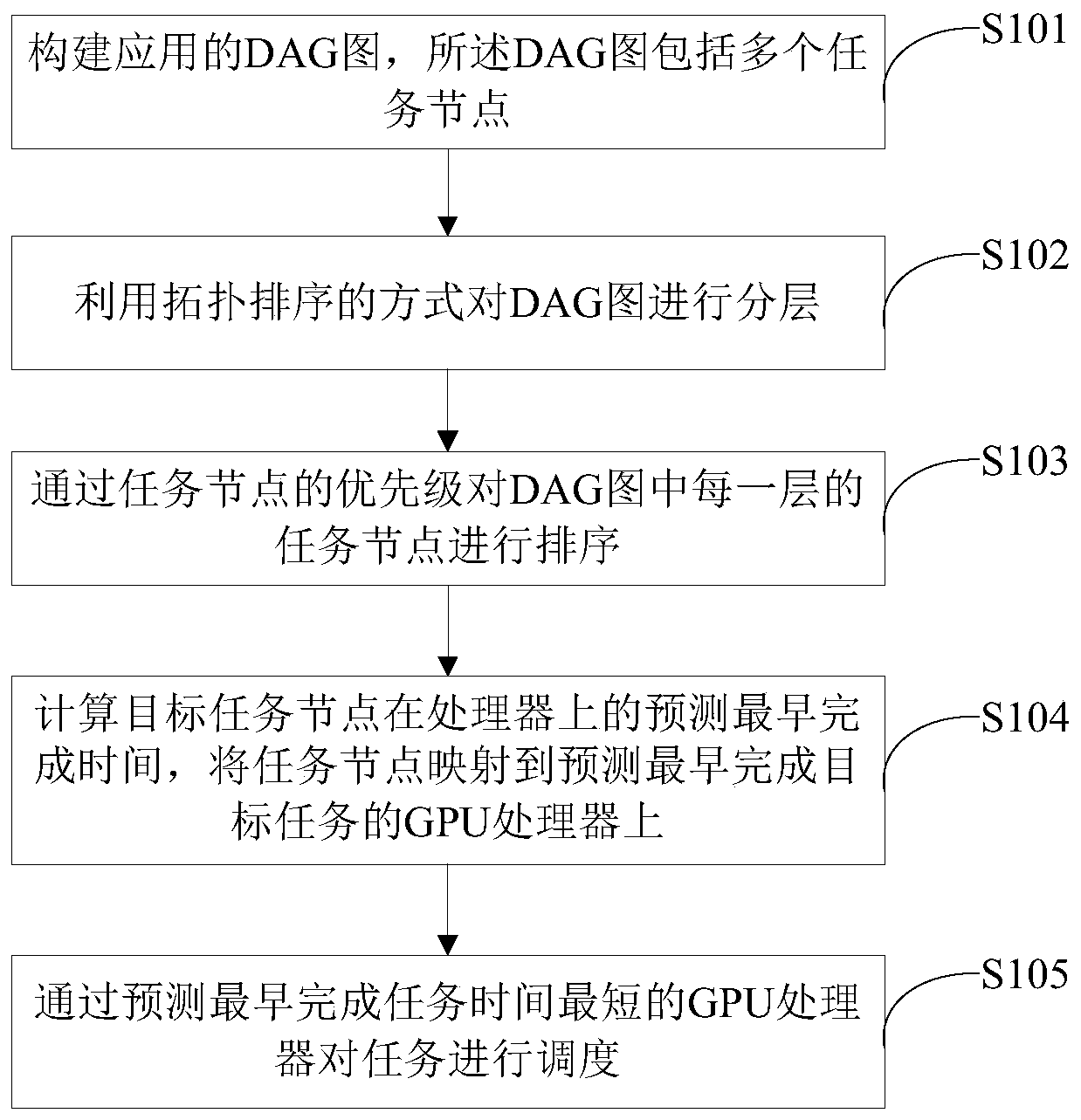

[0040] As shown in Figure 1, a multi-GPU task scheduling method under virtualization technology includes the following steps:

[0041] Step S101: Construct a directed acyclic graph (DAG) of the application; the DAG includes a plurality of task nodes;

[0042] Specifically, the DAG of the task is expressed as DAG = [V, E, C, TC, TP], where V represents the task nodes, E represents the directed edges, each connecting two task nodes, C represents the computation amount of each task node, TC represents the amount of data to be processed by each task node, and TP represents the amount of data each task node generates.
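The five-tuple in [0042] maps naturally onto a small record type. The following is a minimal sketch only: the class name `TaskDAG`, the helper methods, and the example node names and numbers are illustrative assumptions, not taken from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TaskDAG:
    """Hypothetical container mirroring DAG = [V, E, C, TC, TP]."""
    V: List[str]               # task nodes
    E: List[Tuple[str, str]]   # directed edges as (predecessor, successor)
    C: Dict[str, float]        # computation amount of each task node
    TC: Dict[str, float]       # amount of data each node has to process
    TP: Dict[str, float]       # amount of data each node generates

    def successors(self, node: str) -> List[str]:
        # Nodes that directly depend on `node`.
        return [dst for src, dst in self.E if src == node]

    def predecessors(self, node: str) -> List[str]:
        # Nodes that `node` directly depends on.
        return [src for src, dst in self.E if dst == node]


# Illustrative four-task diamond; all values are made up.
dag = TaskDAG(
    V=["t1", "t2", "t3", "t4"],
    E=[("t1", "t2"), ("t1", "t3"), ("t2", "t4"), ("t3", "t4")],
    C={"t1": 4.0, "t2": 2.0, "t3": 6.0, "t4": 3.0},
    TC={"t1": 10.0, "t2": 5.0, "t3": 5.0, "t4": 8.0},
    TP={"t1": 10.0, "t2": 4.0, "t3": 4.0, "t4": 1.0},
)
```

Storing edges as a plain list keeps the sketch close to the notation in the text; an adjacency map would be a reasonable alternative for larger graphs.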

[0043] Step S102: Layer the DAG by means of topological sorting;
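One common way to layer a DAG by topological sorting is a level-by-level variant of Kahn's algorithm, sketched below. The text does not spell out the exact layering rule, so this sketch assumes that each task node is placed in the earliest layer in which all of its predecessors have already been placed. The function name `layer_dag` and the example graph are hypothetical.

```python
from collections import defaultdict


def layer_dag(nodes, edges):
    """Group nodes into layers so every edge goes from an earlier layer to a later one."""
    indegree = {n: 0 for n in nodes}
    succ = defaultdict(list)
    for src, dst in edges:
        succ[src].append(dst)
        indegree[dst] += 1

    layers = []
    current = [n for n in nodes if indegree[n] == 0]  # nodes with no predecessors
    placed = 0
    while current:
        layers.append(current)
        placed += len(current)
        nxt = []
        for n in current:
            for m in succ[n]:
                indegree[m] -= 1
                if indegree[m] == 0:  # all predecessors of m are now placed
                    nxt.append(m)
        current = nxt

    if placed != len(nodes):
        raise ValueError("graph contains a cycle, so it is not a DAG")
    return layers


# Illustrative diamond-shaped graph (assumed, not from the patent).
layers = layer_dag(
    ["t1", "t2", "t3", "t4"],
    [("t1", "t2"), ("t1", "t3"), ("t2", "t4"), ("t3", "t4")],
)
```

Under this assumption, task nodes within one layer have no dependencies on each other, which is what makes them candidates for parallel execution on different GPUs.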

[0044] Step S103: sort the task nodes in each layer in the DAG graph according to the priority of the task nodes;

[0045] Specifically, the priority of the task node is obtained through the priority formula of the task node, and the priority formula of the task node is:

[0046] Priority=Density+AverDown (2)...
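Step S103 can be sketched as a per-layer sort. The definitions of Density and AverDown are cut off in the text above, so this sketch does not attempt to compute them: it takes both as precomputed per-node inputs. It also assumes that a larger Priority value means the task node is scheduled earlier, which the visible text does not confirm. The function name and all numbers are hypothetical.

```python
def sort_layers_by_priority(layers, density, aver_down):
    """Sort the nodes of each layer by Priority = Density + AverDown.

    `density` and `aver_down` are dicts of precomputed values; how they are
    derived is not shown here. Descending order is an assumption.
    """
    def priority(node):
        return density[node] + aver_down[node]

    return [sorted(layer, key=priority, reverse=True) for layer in layers]


# Illustrative inputs only.
layers = [["t1"], ["t2", "t3"], ["t4"]]
density = {"t1": 1.0, "t2": 0.5, "t3": 2.0, "t4": 1.0}
aver_down = {"t1": 0.0, "t2": 1.0, "t3": 0.5, "t4": 0.0}

ordered = sort_layers_by_priority(layers, density, aver_down)
```

Because Python's `sorted` is stable, task nodes with equal priority keep their original relative order within a layer.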

Embodiment 2

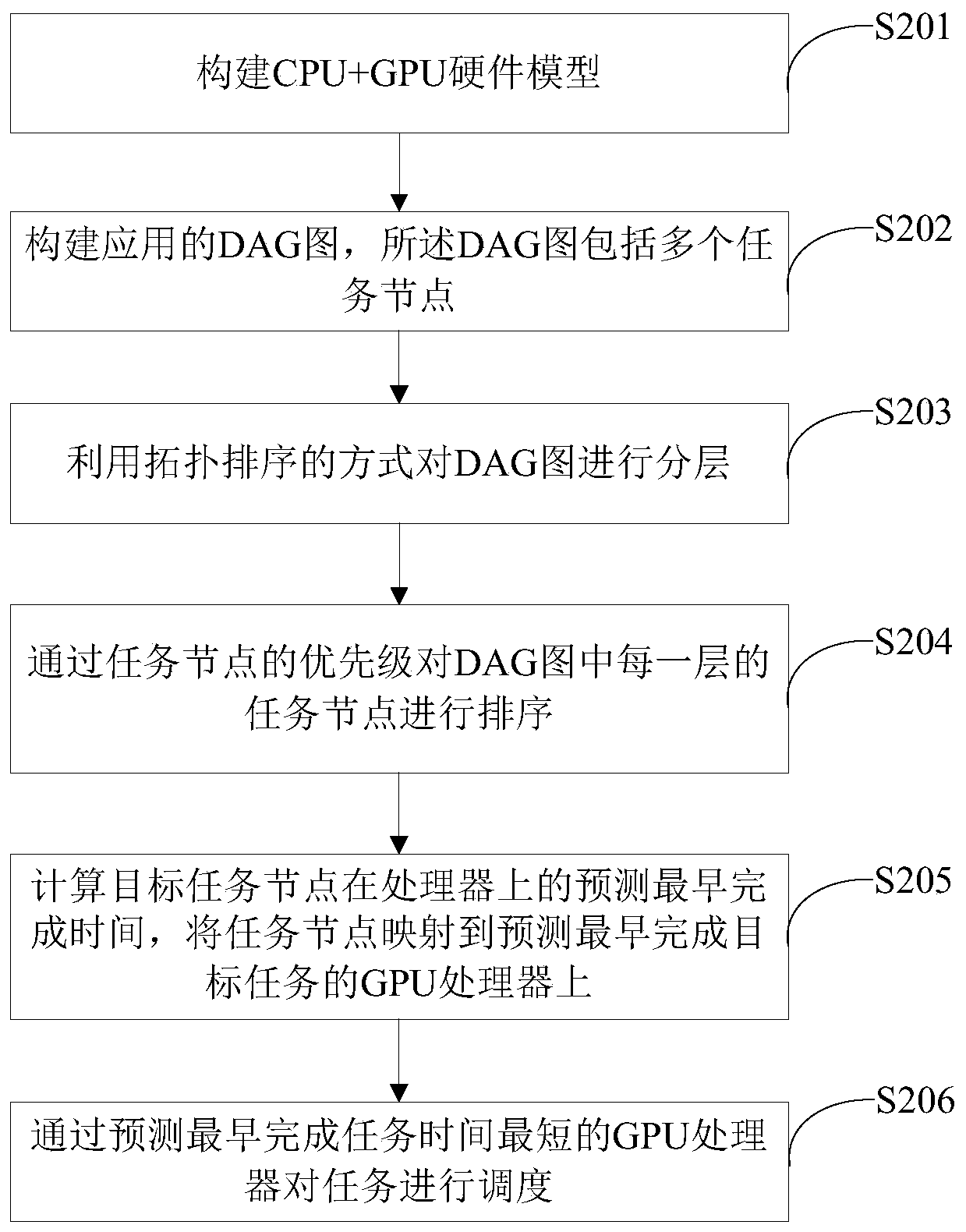

[0057] As shown in Figure 2, another multi-GPU task scheduling method under virtualization technology includes:

[0058] Step S201: building a CPU+GPU hardware model;

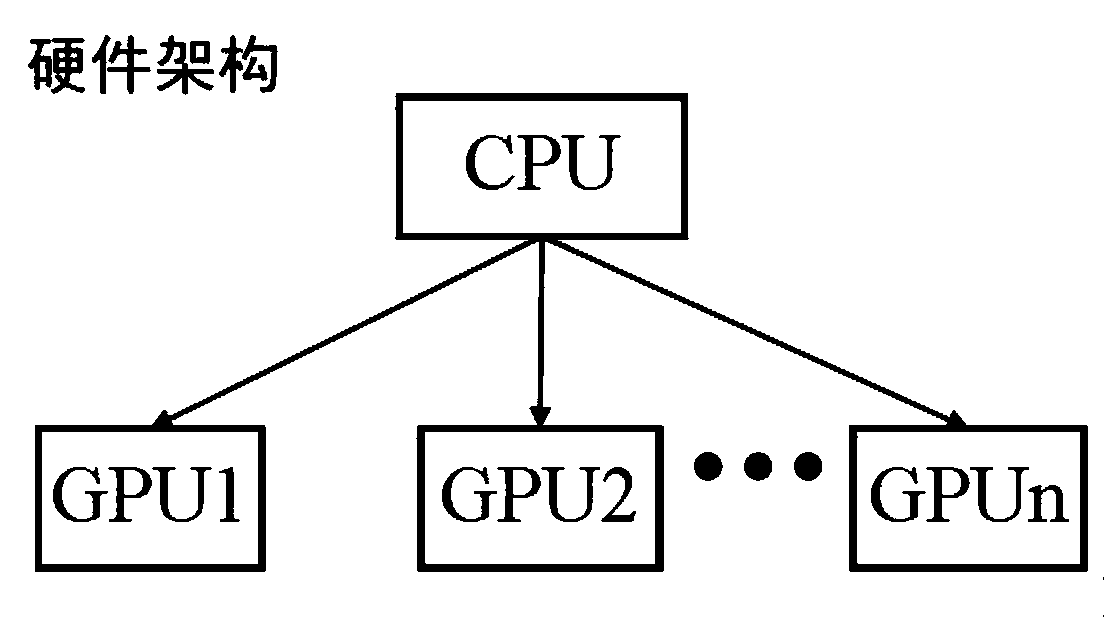

[0059] As the performance gains from improvements in the CPU manufacturing process approach a bottleneck, the high-throughput advantage of the GPU's lightweight multi-threaded computing has become increasingly prominent. Manufacturers combine the logic control capability of the CPU with the floating-point computing capability of the GPU to form a heterogeneous collaborative processing platform in which the CPU is responsible for control and the GPU for the main computation. The platform model is shown in Figure 3.

[0060] The CPU and GPU are connected through the PCIe bus, and there are two ways of connecting multiple GPUs. In the first, the GPUs are on the same PCIe bus and can transmit data to each other directly over that bus; in the second, GPU data transmission needs to be carrie...
